Setting Up a Spark Development Environment in IDEA

Posted by weiking


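- The six steps below are the same as those for creating a Java project with Maven.

- If you have just installed IDEA, click [Create New Project] directly.

- Select Maven on the left, check [Create from archetype] at the top, then choose [maven-archetype-quickstart] on the right.

- Fill in the GroupId and ArtifactId, then click Next.

- Configure the Maven home directory, the settings file, and the local repository location.

- Set the path where the project will be stored, then click Next.

- Once the project opens, click [Enable Auto-Import] in the lower-right corner so that changes to the configuration file (pom.xml) are imported automatically.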

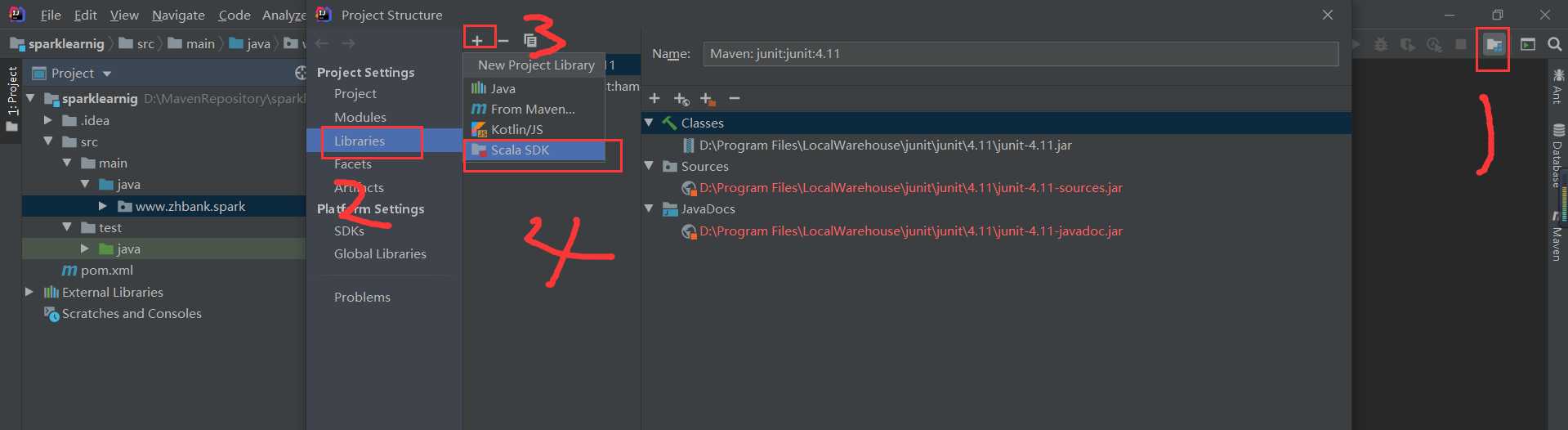

- Import the Scala SDK.

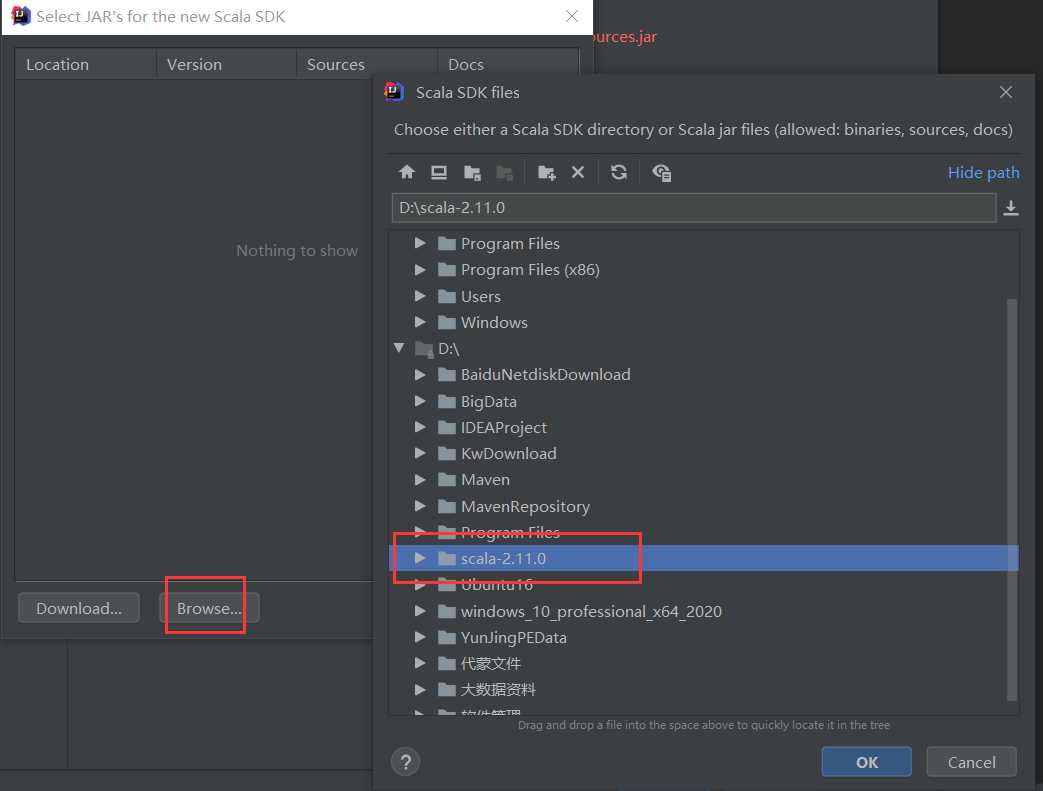

- Select the location of the Scala SDK to import.

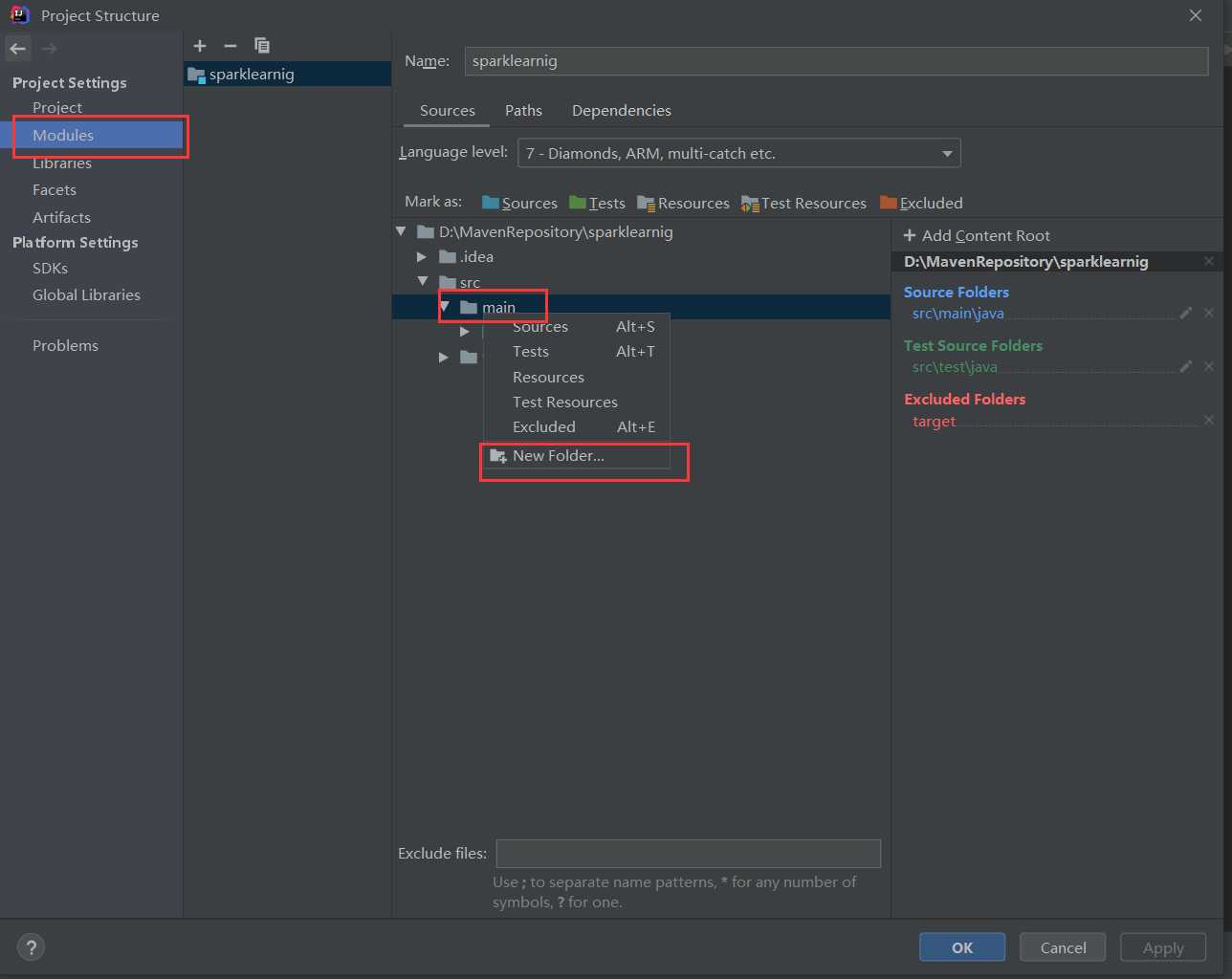

- Create the project directory structure you need (this makes the project easier to manage).

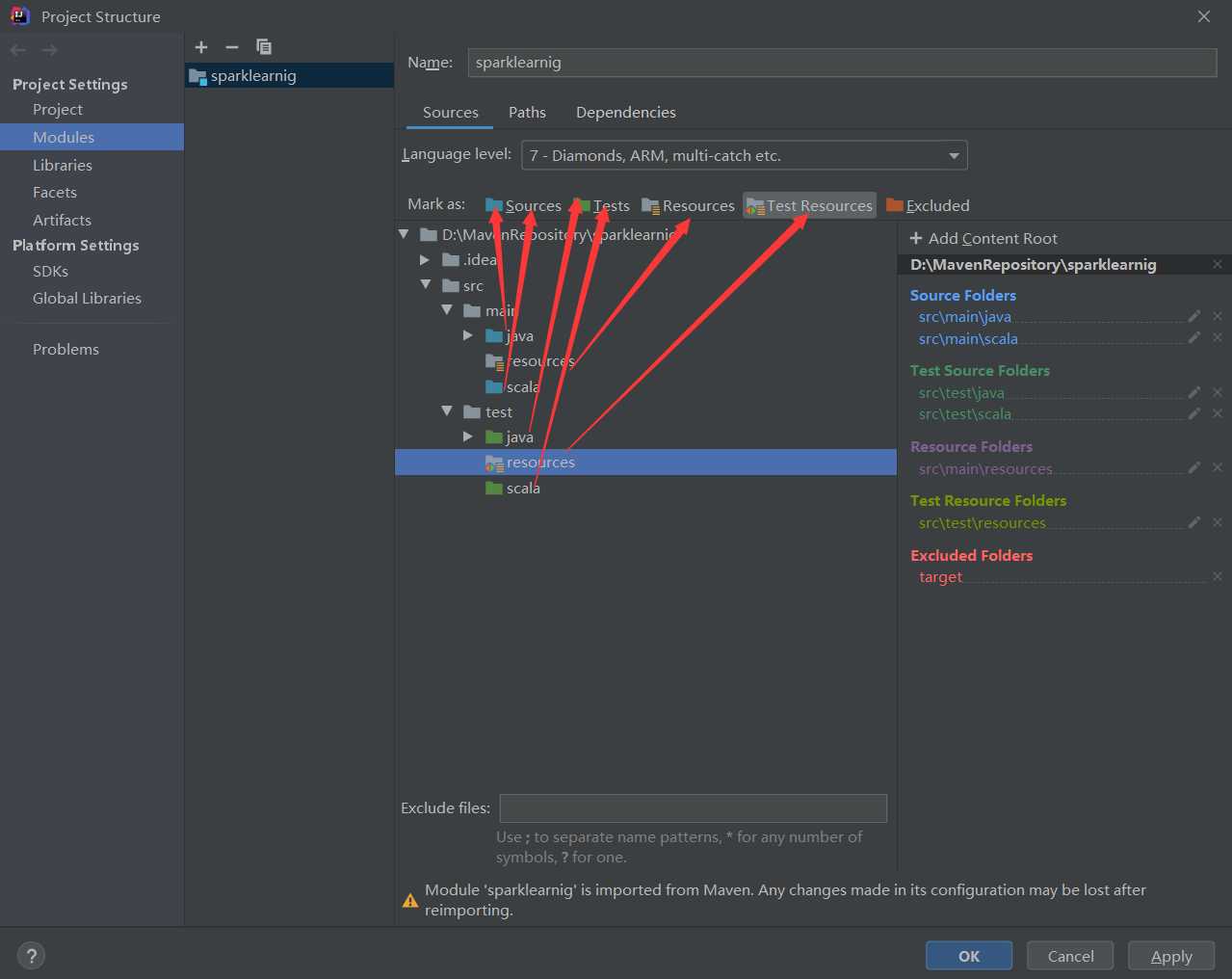

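- Mark each directory as the matching kind of source root, so that IDEA treats it as a source package.

- Add the dependencies needed for Spark development to pom.xml: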

```xml
<repositories>
  <repository>
    <id>central</id>
    <name>aliyun maven</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    <layout>default</layout>
    <!-- Whether to download release artifacts -->
    <releases>
      <enabled>true</enabled>
    </releases>
    <!-- Whether to download snapshot artifacts -->
    <snapshots>
      <enabled>false</enabled>
    </snapshots>
  </repository>
  <repository>
    <id>cloudera</id>
    <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
  </repository>
  <repository>
    <id>jboss</id>
    <url>http://repository.jboss.com/nexus/content/groups/public</url>
  </repository>
</repositories>

<properties>
  <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  <!-- Raised from 1.7: Spark 2.2.0 dropped support for Java 7 -->
  <maven.compiler.source>1.8</maven.compiler.source>
  <maven.compiler.target>1.8</maven.compiler.target>
  <hadoop.version>2.6.0-cdh5.7.6</hadoop.version>
  <spark.version>2.2.0</spark.version>
  <mysql.version>5.1.27</mysql.version>
  <hbase.version>1.2.0-cdh5.7.6</hbase.version>
  <uasparser.version>0.6.1</uasparser.version>
</properties>

<dependencies>
  <!-- Spark core, SQL, Streaming, Kafka integration, and Hive -->
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming-kafka-0-8_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>

  <!-- Hadoop client -->
  <dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>${hadoop.version}</version>
  </dependency>

  <!-- MySQL JDBC driver -->
  <dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>${mysql.version}</version>
  </dependency>

  <!-- HBase -->
  <dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-server</artifactId>
    <version>${hbase.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-hadoop2-compat</artifactId>
    <version>${hbase.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>${hbase.version}</version>
  </dependency>

  <dependency>
    <groupId>cz.mallat.uasparser</groupId>
    <artifactId>uasparser</artifactId>
    <version>${uasparser.version}</version>
  </dependency>

  <!-- Spark MLlib dependencies -->
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-mllib_2.11</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.scalanlp</groupId>
    <artifactId>breeze_2.11</artifactId>
    <version>0.13.1</version>
  </dependency>
  <!--
  <dependency>
    <groupId>com.github.fommil.netlib</groupId>
    <artifactId>all</artifactId>
    <version>1.1.2</version>
  </dependency>
  -->
  <dependency>
    <groupId>org.jblas</groupId>
    <artifactId>jblas</artifactId>
    <version>1.2.3</version>
  </dependency>

  <!-- MongoDB, Redis, Kafka, and JSON clients -->
  <dependency>
    <groupId>org.mongodb.spark</groupId>
    <artifactId>mongo-spark-connector_2.11</artifactId>
    <version>2.3.1</version>
  </dependency>
  <dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
    <version>2.8.0</version>
  </dependency>
  <dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>0.8.2.1</version>
  </dependency>
  <dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.31</version>
  </dependency>
</dependencies>
```
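
The steps above let IDEA compile Scala through the imported Scala SDK, but a command-line `mvn package` only compiles Java unless a Scala compiler plugin is configured. The fragment below is a minimal sketch of one way to do that with `scala-maven-plugin`; it is not part of the original setup. It assumes the Scala sources live under `src/main/scala` (the plugin's default location), and the plugin version `3.2.2` and Scala version `2.11.8` are illustrative choices meant to match the `_2.11` artifacts, so adjust them to your environment.

```xml
<build>
  <plugins>
    <plugin>
      <!-- Assumption: scala-maven-plugin is used to compile Scala from Maven -->
      <groupId>net.alchim31.maven</groupId>
      <artifactId>scala-maven-plugin</artifactId>
      <version>3.2.2</version>
      <executions>
        <execution>
          <goals>
            <!-- Compile main and test Scala sources as part of the build -->
            <goal>compile</goal>
            <goal>testCompile</goal>
          </goals>
        </execution>
      </executions>
      <configuration>
        <!-- Assumption: a 2.11.x Scala version, matching the _2.11 dependencies -->
        <scalaVersion>2.11.8</scalaVersion>
      </configuration>
    </plugin>
  </plugins>
</build>
```

This `<build>` element sits directly under `<project>` in pom.xml, alongside `<properties>` and `<dependencies>`.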
