Implementing univariate and multivariate linear regression with the normal equation in Python
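Both sections below solve least squares in closed form. With a design matrix X (one row per sample, plus a column of ones when an intercept is wanted), a target vector y and a parameter vector θ, minimizing the squared error and setting its gradient to zero gives the normal equation. The `normalEquation` functions compute its last line as `temp.I.dot(xmat.T).dot(ymat)` with `temp = XᵀX`:

```latex
J(\theta) = \tfrac{1}{2}\lVert X\theta - y\rVert^2,
\qquad
\nabla_\theta J = X^\top (X\theta - y) = 0
\;\Longrightarrow\;
X^\top X\,\theta = X^\top y
\;\Longrightarrow\;
\theta = (X^\top X)^{-1} X^\top y
```

The final step only works when XᵀX is invertible, which is why the code checks it before inverting.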

Univariate linear regression

Data

Code

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import SGDRegressor


# Gradient descent (sklearn's SGDRegressor)
def gradientDecent(xmat, ymat):
    sgd = SGDRegressor(max_iter=1000000, tol=1e-7)
    sgd.fit(xmat, ymat)
    return sgd.coef_, sgd.intercept_


# Load data: each line is "x0<TAB>x1<TAB>y"
def loadDataSet(filename):
    x = [[], []]
    y = []
    with open(filename, 'r') as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            values = [float(v) for v in line.split('\t')]
            x[0].append(values[0])
            x[1].append(values[1])
            y.append(values[2])
    return x, y


# Convert a list (or a list of columns) into a column-oriented np.matrix
def mat(x):
    return np.matrix(np.array(x)).T


# Visualization
def dataVisual(xmat, ymat, k, g, intercept):
    k1, k2 = k[0], k[1]
    g1, g2 = g[0], g[1]
    plt.title('Fit visualization')
    plt.scatter(xmat[:, 1].flatten().A[0], ymat[:, 0].flatten().A[0])
    x = np.linspace(0, 1, 50)
    y = x * k2 + k1               # normal equation line
    g = x * g2 + g1 + intercept   # SGD line: coef_[0] * x0 (x0 = 1) plus intercept_
    plt.plot(x, g, c='yellow')
    plt.plot(x, y, c='r')
    plt.show()


# Solve for the regression parameters with the normal equation
def normalEquation(xmat, ymat):
    temp = xmat.T.dot(xmat)
    # An exact det(temp) == 0.0 test is unreliable in floating point; check the rank instead
    if np.linalg.matrix_rank(temp) < temp.shape[0]:
        print('Matrix is not invertible')
        return None
    return temp.I.dot(xmat.T).dot(ymat)


# Main function
def main():
    xAll, y = loadDataSet('linearRegression/ex0.txt')
    # Row-wise samples [x0, x1], the layout SGDRegressor expects
    xlines = [[a, b] for a, b in zip(xAll[0], xAll[1])]
    gradPara, intercept = gradientDecent(xlines, y)
    print('Gradient descent coefficients')
    print(gradPara)
    print('Gradient descent intercept')
    print(intercept)
    xmat = mat(xAll)
    ymat = mat(y)
    print('Normal equation parameters:')
    res = normalEquation(xmat, ymat)
    print(res)
    if res is None:
        return
    k1, k2 = res[0, 0], res[1, 0]
    dataVisual(xmat, ymat, [k1, k2], gradPara, intercept)


if __name__ == "__main__":
    main()
```
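Testing invertibility with an exact `det(...) == 0.0` comparison is fragile in floating point: depending on rounding, a singular XᵀX can come out with a tiny nonzero determinant. A minimal sketch of two more robust NumPy tools, assuming a hypothetical design matrix whose third column is exactly twice the second (so XᵀX is singular): `np.linalg.matrix_rank` reports the deficiency, and `np.linalg.lstsq` still returns a least-squares solution without forming an explicit inverse.

```python
import numpy as np

# Hypothetical design matrix: a bias column, x, and a redundant column 2*x,
# so X has rank 2 and X^T X is singular.
x = np.linspace(0.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x, 2.0 * x])
y = 3.0 + 1.7 * x  # noise-free targets for illustration

# Depending on rounding, this may print 0.0 or a tiny nonzero value,
# which is why an exact "== 0.0" test is unreliable.
print("det(X^T X) =", np.linalg.det(X.T @ X))

# matrix_rank uses an SVD with a tolerance, so it reports the deficiency reliably.
rank = np.linalg.matrix_rank(X)
print("rank of X =", rank, "out of", X.shape[1], "columns")

# lstsq minimizes ||X theta - y|| without inverting X^T X; for a rank-deficient X
# it returns the minimum-norm solution among all least-squares solutions.
theta, _, lstsq_rank, _ = np.linalg.lstsq(X, y, rcond=None)
print("theta =", theta)
print("max |X theta - y| =", np.max(np.abs(X @ theta - y)))
```

Inside `normalEquation`, the same idea amounts to checking `np.linalg.matrix_rank(temp) < temp.shape[0]` before calling `.I`.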

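Results

Note a small pitfall I ran into: the SGDRegressor line was always shifted from the expected line by a constant, i.e. an intercept. The first column of ex0.txt is the constant bias feature x0 = 1 (which is why k1 serves as the intercept of the red line), but SGDRegressor also fits its own intercept by default (fit_intercept=True), so the bias ends up split between coef_[0] (g1) and intercept_. Changing the SGD line from g=x*g2+g1 to g=x*g2+g1+intercept, i.e. adding the intercept back in, fixed it.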
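To see why the bias gets split, here is a minimal NumPy sketch (it does not call sklearn): it mimics a design matrix that already contains the constant column x0 = 1 while the model adds its own intercept, by appending a second column of ones. The data are hypothetical, noise-free points on y = 3 + 1.7x. Only the sum of the two constant coefficients is determined by the data; the minimum-norm solution from `np.linalg.lstsq` happens to split it evenly, and SGD can settle on a different split, which is why `g1` on its own does not match the normal equation's intercept.

```python
import numpy as np

# Hypothetical noise-free data on the line y = 3 + 1.7 * x.
x = np.linspace(0.0, 1.0, 50)
y = 3.0 + 1.7 * x

# Columns: x0 = 1 (as in the data file), x1 = x, plus a second column of ones
# standing in for the intercept that SGDRegressor fits on its own.
X = np.column_stack([np.ones_like(x), x, np.ones_like(x)])

theta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
g1, g2, intercept = theta

print("rank of X:", rank)                    # two identical constant columns, so rank 2
print("g1 =", g1, "intercept =", intercept)  # one of infinitely many valid splits
print("g1 + intercept =", g1 + intercept)    # the identifiable total, close to 3.0
print("slope g2 =", g2)                      # close to 1.7
```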

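In the figure, the red line is the normal equation solution and the yellow line is gradient descent. Because I tuned the SGD parameters until the fit was very good, the two lines overlap almost completely and are hard to tell apart.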
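When the two lines overlap this closely, comparing them numerically is more reliable than eyeballing the plot. A minimal sketch, assuming the parameter layout used above (normal equation `k1 + k2*x`, SGD `g1 + intercept + g2*x`) and a hypothetical helper named `max_line_gap`: the difference of two straight lines is itself linear, so its largest absolute value on an interval is reached at one of the endpoints.

```python
def max_line_gap(k1, k2, g1, g2, intercept, lo=0.0, hi=1.0):
    """Largest |difference| between the normal-equation line and the SGD line on [lo, hi].

    Hypothetical helper: the difference of two straight lines is linear,
    so its maximum absolute value on an interval occurs at an endpoint.
    """
    def diff(x):
        return (k1 + k2 * x) - (g1 + intercept + g2 * x)
    return max(abs(diff(lo)), abs(diff(hi)))


# Made-up parameter values for illustration only (not results from ex0.txt).
print(max_line_gap(k1=3.0, k2=1.7, g1=1.5, g2=1.69, intercept=1.49))
```

With the real fits, the arguments would be `res[0, 0]`, `res[1, 0]`, `gradPara[0]`, `gradPara[1]` and `intercept[0]`, over the same [0, 1] range that `np.linspace(0, 1, 50)` plots.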

Multivariate linear regression

Data

Code

import numpy as np

import matplotlib.pyplot as plt

import re

from sklearn.linear_model import SGDRegressor

# 将数据转化成为矩阵

def matrix(x):

return np.matrix(np.array(x)).T

# 线性函数

def linerfunc(xList,thList,*intercept):

res=0.0

for i in range(len(xList)):

res+=xList[i]*thList[i]

if len(intercept)==0:

return res

else:

return res+intercept[0][0]

# 加载数据

def loadData(fileName):

x=[]

y=[]

regex = re.compile(\'\\s+\')

with open(fileName,\'r\') as f:

readlines=f.readlines()

for line in readlines:

dataLine=regex.split(line)

dataList=[float(x) for x in dataLine[0:-1]]

xList=dataList[0:8]

x.append(xList)

y.append(dataList[-1])

return x,y

# 求解回归的参数

def normalEquation(xmat,ymat):

temp=xmat.T.dot(xmat)

isInverse=np.linalg.det(xmat.T.dot(xmat))

if isInverse==0.0:

print(\'不可逆矩阵\')

return None

else:

inv=temp.I

return inv.dot(xmat.T).dot(ymat)

# 梯度下降求参数

def gradientDecent(xmat,ymat):

sgd=SGDRegressor(max_iter=1000000, tol=1e-7)

sgd.fit(xmat,ymat)

return sgd.coef_,sgd.intercept_

# 测试代码

def testTrainResult(normPara,gradPara,Interc,xTest,yTest):

nright=0

for i in range(len(xTest)):

if round(linerfunc(xTest[i],normPara)) ==yTest[i]:

nright+=1

print(\'关于normequation的预测方法正确率为 {}\'.format(nright/len(xTest)))

gright=0

for i in range(len(xTest)):

if round(linerfunc(xTest[i],gradPara,Interc))==yTest[i]:

gright+=1

print(\'关于梯度下降法预测的正确率为 {}\'.format(gright/len(xTest)))

# 运行程序

def main():

x,y=loadData(\'linearRegression/abalone1.txt\')

# 划分训练集合和测试集

lr=0.8

xTrain=x[:int(len(x)*lr)]

yTrain=y[:int(len(y)*lr)]

xTest=x[int(len(x)*lr):]

yTest=y[int(len(y)*lr):]

xmat=matrix(xTrain).T

ymat=matrix(yTrain)

# 通过equation来计算模型的参数

theta=normalEquation(xmat,ymat)

print(\'通过equation来计算模型的参数\')

theta=theta.reshape(1,len(theta)).tolist()[0]

print(theta)

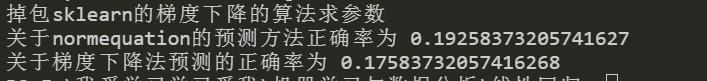

# 掉包sklearn的梯度下降的算法求参数

print(\'掉包sklearn的梯度下降的算法求参数\')

gtheta,Interc=gradientDecent(xTrain,yTrain)

print(\'参数\')

print(gtheta)

print(\'截距\')

print(Interc)

testTrainResult(theta,gtheta,Interc,xTest,yTest)

if __name__ == "__main__":

main()
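In the multivariate code, the normal equation receives the raw 8 feature columns with no column of ones, so unless the data file itself contains a constant column that model has no intercept, whereas SGDRegressor fits one by default; the two parameter sets are therefore not directly comparable. A minimal sketch of adding an intercept on the normal-equation side, assuming features arrive as a list of rows like `xTrain`. `fit_with_intercept` and `predict` are hypothetical helpers, the sketch uses `np.linalg.lstsq` instead of an explicit inverse, and the data are synthetic, not the abalone file.

```python
import numpy as np


def fit_with_intercept(x_rows, y):
    """Least-squares fit of y ~ b + X w.

    Hypothetical helper: prepends a column of ones so theta[0] is the intercept,
    then solves with lstsq rather than forming (X^T X)^-1 explicitly.
    """
    X = np.asarray(x_rows, dtype=float)
    Xb = np.column_stack([np.ones(len(X)), X])
    theta, _, _, _ = np.linalg.lstsq(Xb, np.asarray(y, dtype=float), rcond=None)
    return theta[0], theta[1:]


def predict(x_rows, intercept, coef):
    """Predictions b + X w for a list of feature rows."""
    return intercept + np.asarray(x_rows, dtype=float) @ coef


# Synthetic check: 3 features, true intercept 2.0, no noise.
rng = np.random.default_rng(0)
x_rows = rng.random((100, 3)).tolist()
true_coef = np.array([1.0, -2.0, 0.5])
y = (2.0 + np.asarray(x_rows) @ true_coef).tolist()

b, w = fit_with_intercept(x_rows, y)
print("intercept:", b)
print("coefficients:", w)
```

With the abalone data, `fit_with_intercept(xTrain, yTrain)` would give an intercept and 8 coefficients that line up with `Interc` and `gtheta` from SGDRegressor.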

Results

Directory structure

Data download

Link: https://pan.baidu.com/s/1JXrE4kbYsdVSSWjTUSDT3g

Extraction code: obxh

Link: https://pan.baidu.com/s/13wXq52wpKHbIlf3v21Qcgg

Extraction code: w4m5