Cloud Native Week 5: Kubernetes Hands-On Cases

Posted zhaoxiangyu-blog


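Preface: advantages of containerizing business applications:

- Higher resource utilization and lower IT deployment costs.
- Faster deployment: Kubernetes enables rapid deployment and delivery of microservices, batch scheduling of containers, and startup in seconds.
- Horizontal scaling, canary (gray) releases, rollbacks, distributed tracing, service governance, and more.
- Automatic elastic scaling based on business load.
- Containers package the environment and the code together in the image, which keeps test and production runtime environments consistent.
- Keeping pace with the cloud native community avoids leaving technical debt behind and lays a solid foundation for future upgrades.
- Building up skills in cutting-edge technology raises your own level.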

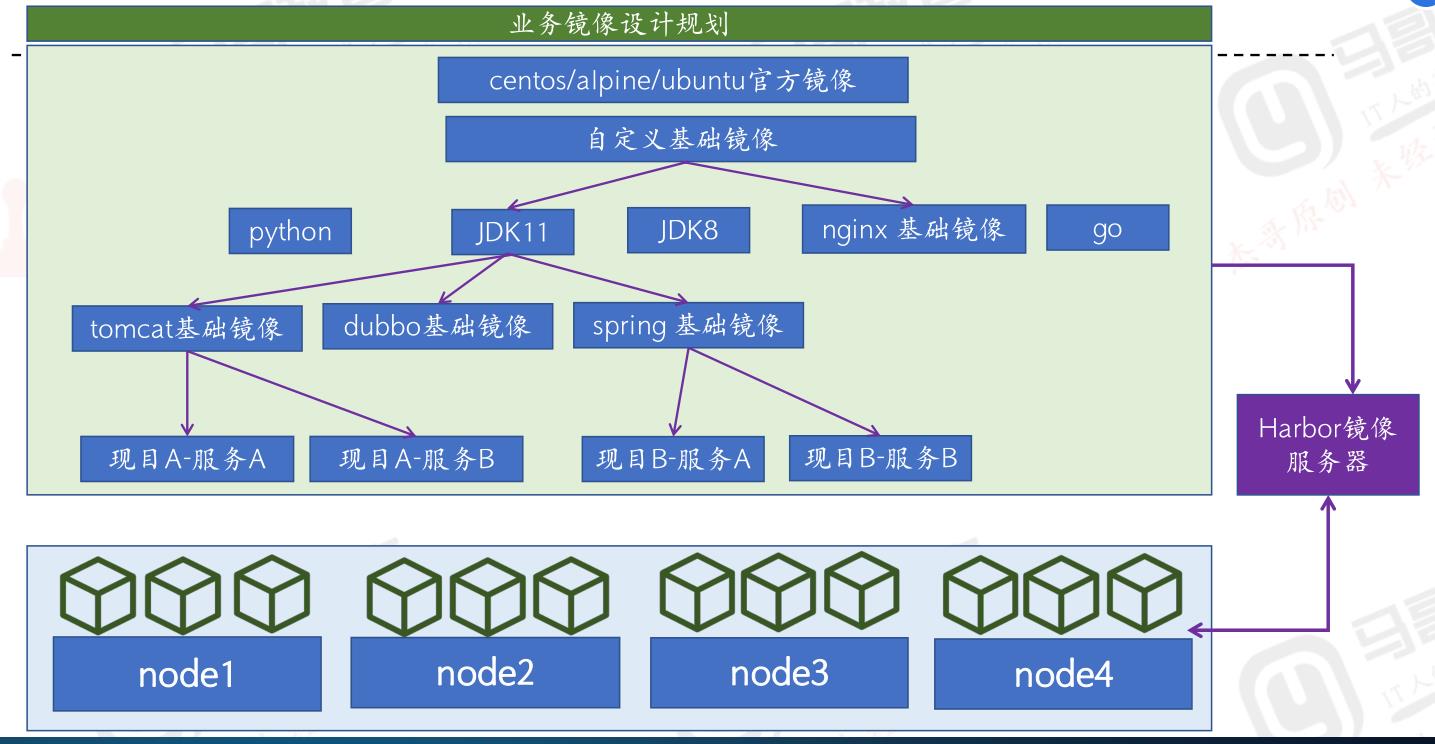

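Case 1: Business planning and layered image builds

Step 1: build a custom base system image from the official base OS image.

Step 2: add middleware on top of the custom base image to produce middleware base images.

Step 3: add business code on top of the middleware images to produce deployable business images.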

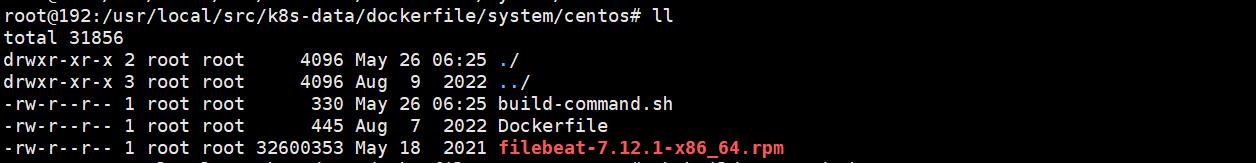
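The layering above implies a build order: a parent image must be built and pushed before any image that names it in `FROM`. As an illustrative sketch (not part of the author's scripts), the Python below records each image's parent, using shortened names for the images built in this article with registry prefixes and tags dropped, and derives a valid order with `graphlib.TopologicalSorter`. It assumes Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Child image -> set of parent images it is built FROM (shortened names for the
# images in this article; registry prefixes and tags are omitted).
IMAGE_PARENTS = {
    "centos:7.9.2009": set(),
    "magedu-centos-base": {"centos:7.9.2009"},
    "jdk-base": {"magedu-centos-base"},
    "tomcat-base": {"jdk-base"},
    "tomcat-app1": {"tomcat-base"},
    "nginx-base": {"magedu-centos-base"},
    "nginx-web1": {"nginx-base"},
}


def build_order(parents: dict[str, set[str]]) -> list[str]:
    """Return an order in which every image comes after the image it builds FROM."""
    return list(TopologicalSorter(parents).static_order())


if __name__ == "__main__":
    for image in build_order(IMAGE_PARENTS):
        print(image)
```

Images on independent branches (the JDK/Tomcat chain and the Nginx chain) may appear interleaved; only parent-before-child is guaranteed.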

Building the CentOS base image

Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/system/centos# cat Dockerfile

```dockerfile
# Custom CentOS base image
FROM centos:7.9.2009

MAINTAINER Jack.Zhang 2973707860@qq.com

ADD filebeat-7.12.1-x86_64.rpm /tmp

RUN yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && \
    ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
    useradd nginx -u 2088
```

Image build script:

root@192:/usr/local/src/k8s-data/dockerfile/system/centos# cat build-command.sh

#!/bin/bash

#docker build -t harbor.magedu.net/baseimages/magedu-centos-base:7.9.2009 .

#docker push harbor.magedu.net/baseimages/magedu-centos-base:7.9.2009

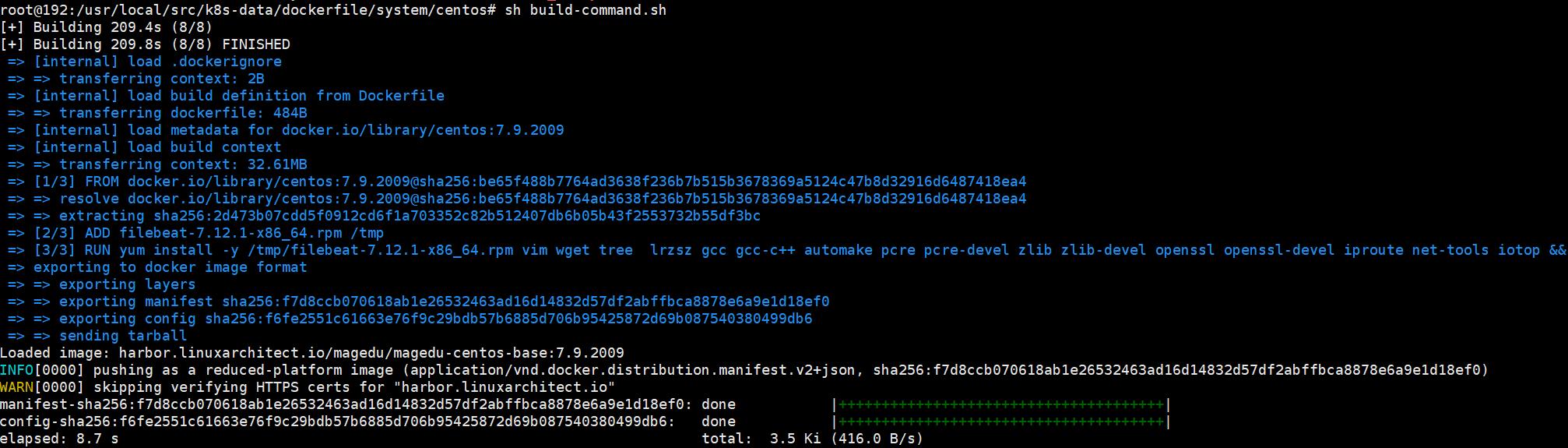

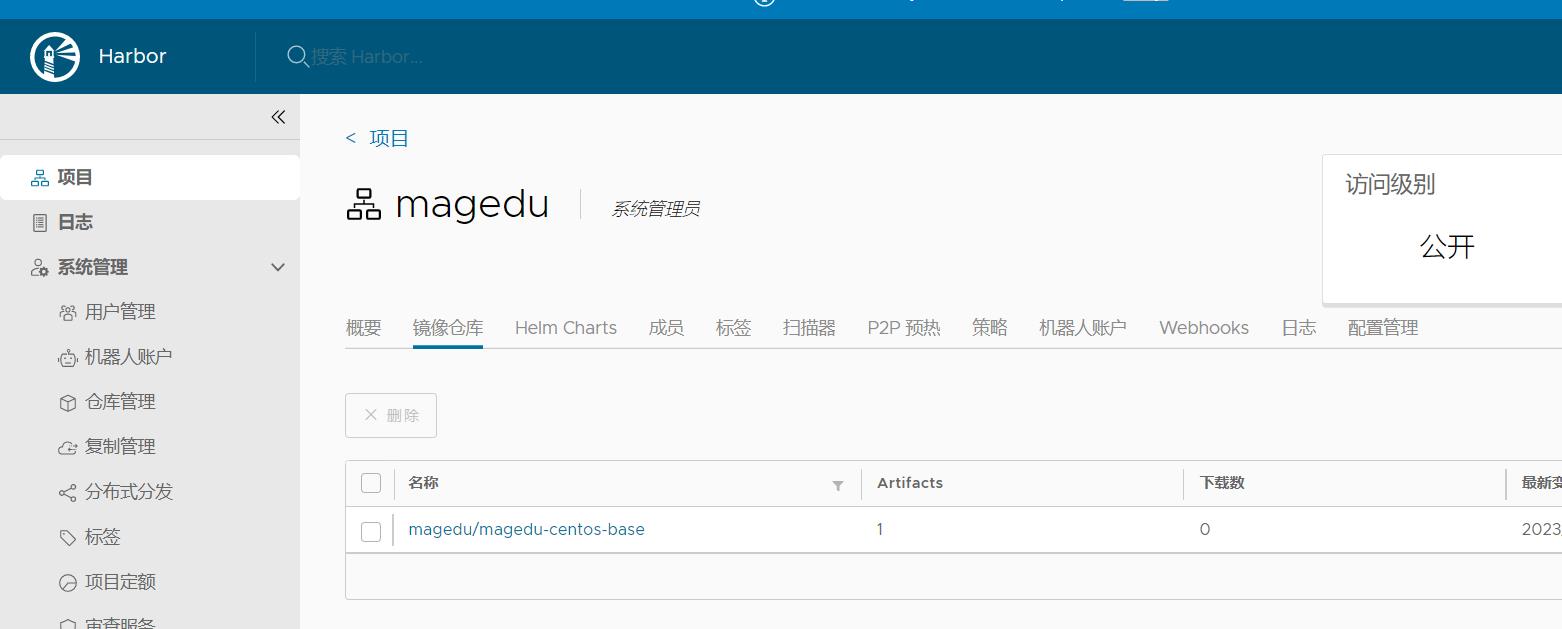

/usr/bin/nerdctl build -t harbor.linuxarchitect.io/magedu/magedu-centos-base:7.9.2009 .

/usr/bin/nerdctl push harbor.linuxarchitect.io/magedu/magedu-centos-base:7.9.2009

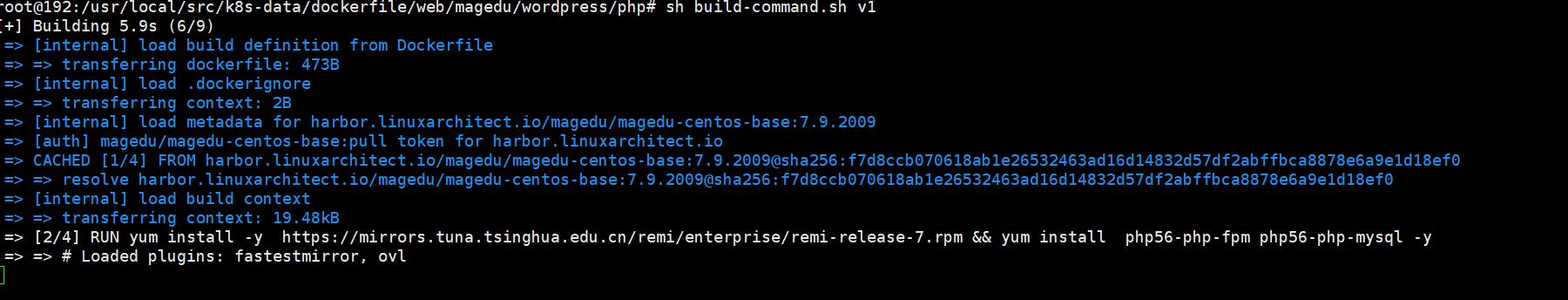

Build the image:

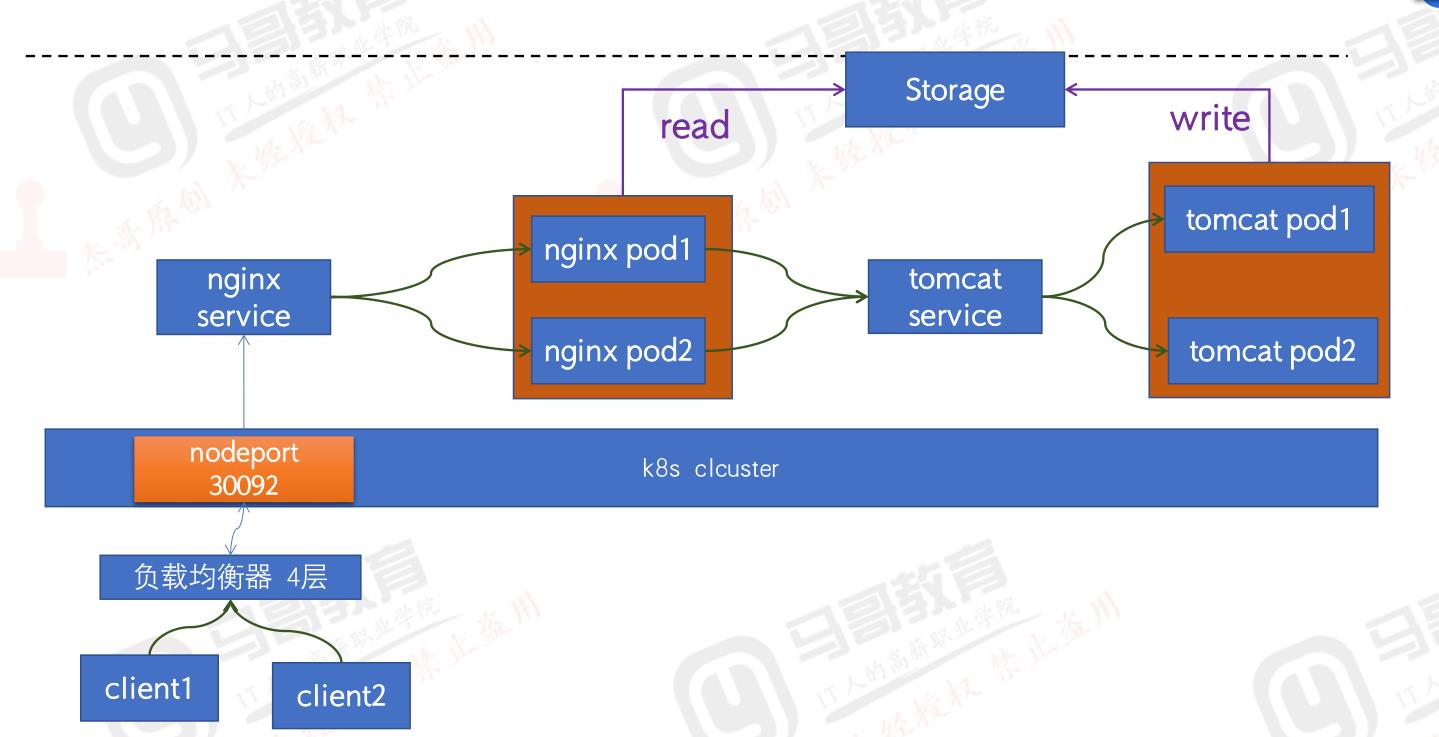

Case 2: Static/dynamic content separation with Nginx + Tomcat + NFS

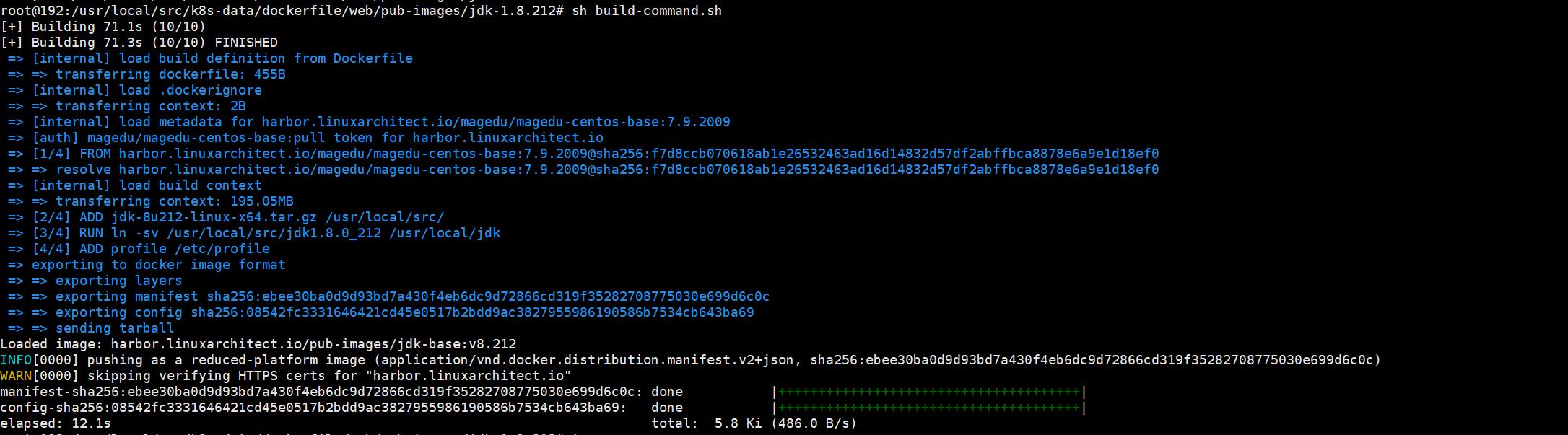

1. Build the JDK base image

View the Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# cat Dockerfile

```dockerfile
#JDK Base Image
FROM harbor.linuxarchitect.io/magedu/magedu-centos-base:7.9.2009
#FROM centos:7.9.2009

MAINTAINER zhangshijie "zhangshijie@magedu.net"

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/
RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk
ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk
ENV JRE_HOME $JAVA_HOME/jre
ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/
ENV PATH $PATH:$JAVA_HOME/bin
```

View the build script:

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# cat build-command.sh

```bash
#!/bin/bash
#docker build -t harbor.linuxarchitect.io/pub-images/jdk-base:v8.212 .
#sleep 1
#docker push harbor.linuxarchitect.io/pub-images/jdk-base:v8.212

nerdctl build -t harbor.linuxarchitect.io/pub-images/jdk-base:v8.212 .
nerdctl push harbor.linuxarchitect.io/pub-images/jdk-base:v8.212
```

Build the image:

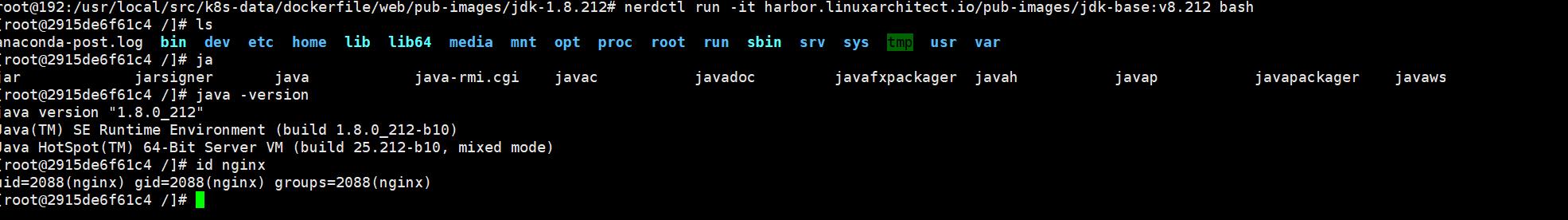

After the image is built, start a container from it to verify that it behaves as expected:

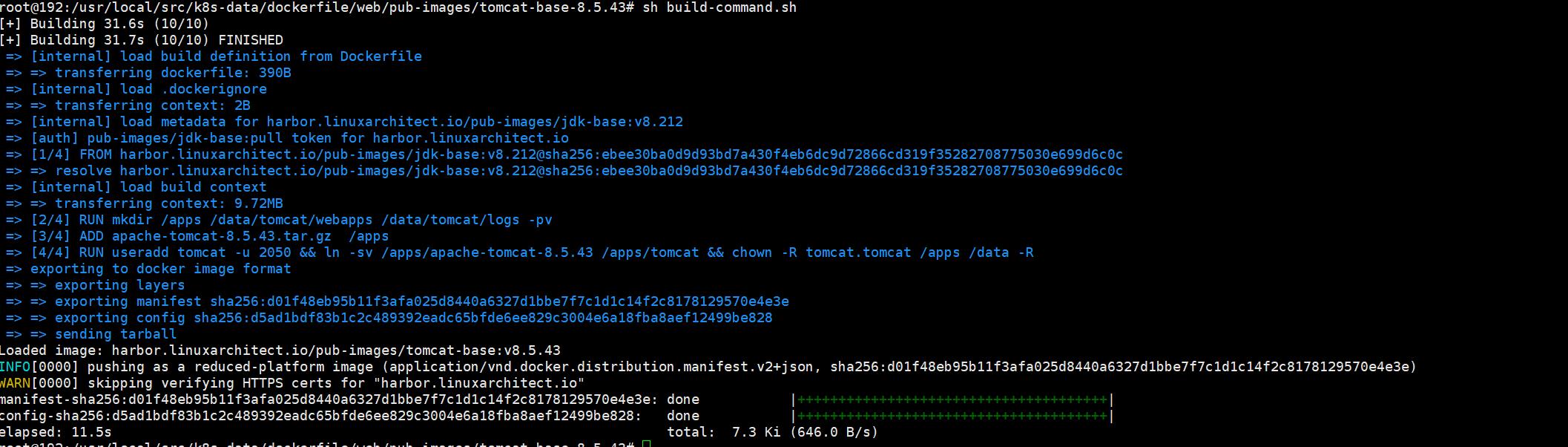

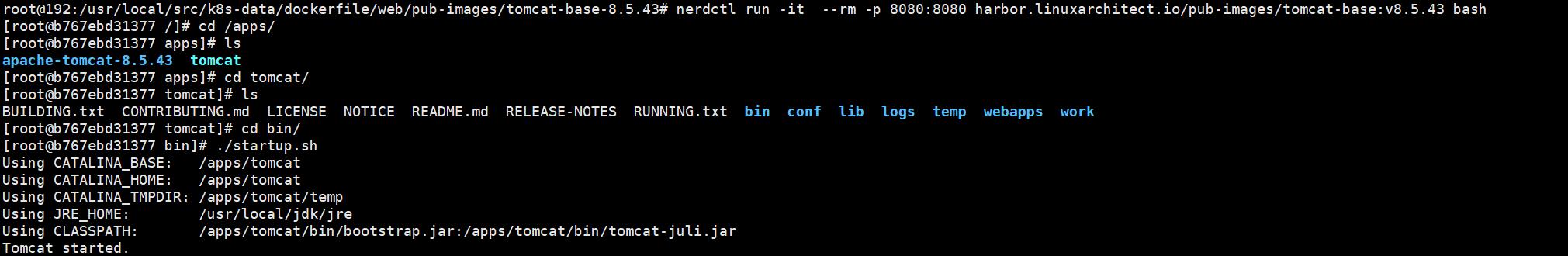

2. Build the Tomcat base image

Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# cat Dockerfile

```dockerfile
# Tomcat 8.5.43 base image
FROM harbor.linuxarchitect.io/pub-images/jdk-base:v8.212

MAINTAINER zhangshijie "zhangshijie@magedu.net"

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv
ADD apache-tomcat-8.5.43.tar.gz /apps
RUN useradd tomcat -u 2050 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat:tomcat /apps /data
```

build-command.sh

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# cat build-command.sh

```bash
#!/bin/bash
#docker build -t harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43 .
#sleep 3
#docker push harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43

nerdctl build -t harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43 .
nerdctl push harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43
```

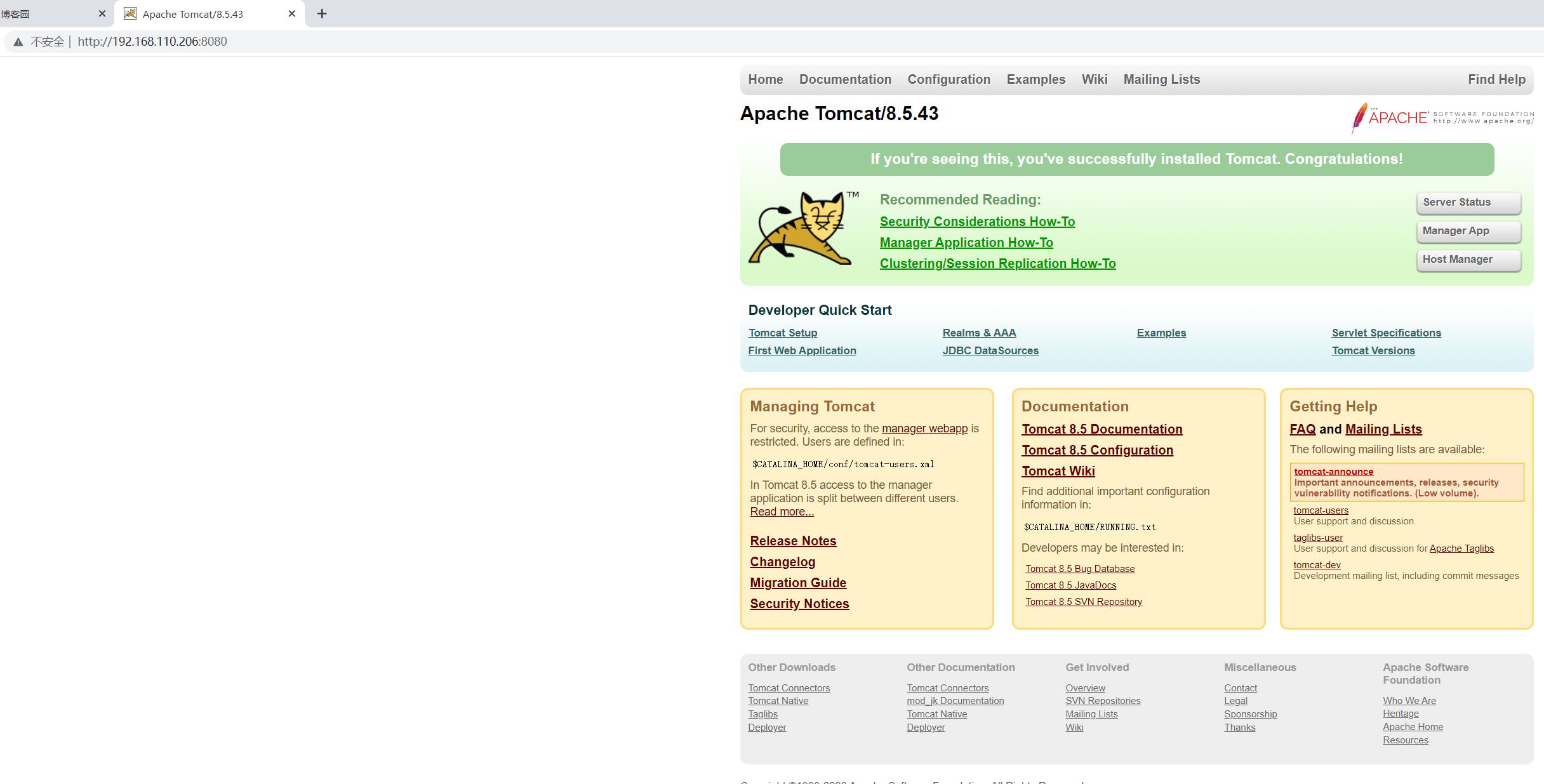

Build the image, then start a container to test it:

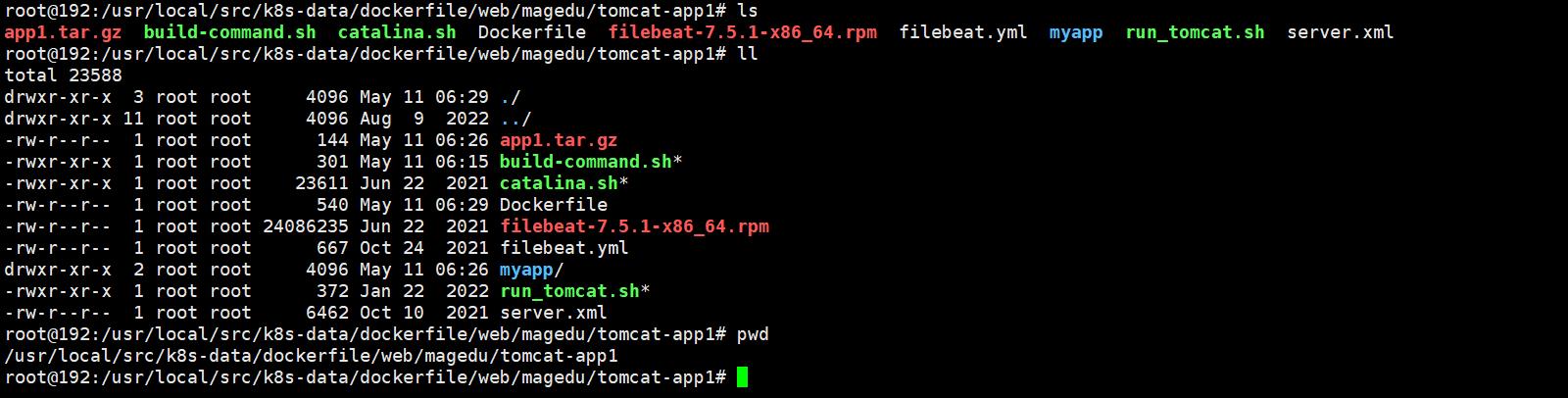

3. Build the Tomcat application image

The project directory is /usr/local/src/k8s-data/dockerfile/web/magedu/tomcat-app1.

Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/tomcat-app1# cat Dockerfile

```dockerfile
#tomcat web1
FROM harbor.linuxarchitect.io/pub-images/tomcat-base:v8.5.43

ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
#ADD myapp/* /data/tomcat/webapps/myapp/
ADD app1.tar.gz /data/tomcat/webapps/app1/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
#ADD filebeat.yml /etc/filebeat/filebeat.yml
# The nginx user (uid 2088) was created in the CentOS base image
RUN chown -R nginx:nginx /data/ /apps/
#ADD filebeat-7.5.1-x86_64.rpm /tmp/
#RUN cd /tmp && yum localinstall -y filebeat-7.5.1-x86_64.rpm

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]
```

build-command.sh:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/tomcat-app1# cat build-command.sh

```bash
#!/bin/bash
# The image tag (version) is passed as the first argument, e.g. "bash build-command.sh v1"
TAG=$1

#docker build -t harbor.linuxarchitect.io/magedu/tomcat-app1:$TAG .
#sleep 3
#docker push harbor.linuxarchitect.io/magedu/tomcat-app1:$TAG

nerdctl build -t harbor.linuxarchitect.io/magedu/tomcat-app1:$TAG .
nerdctl push harbor.linuxarchitect.io/magedu/tomcat-app1:$TAG
```

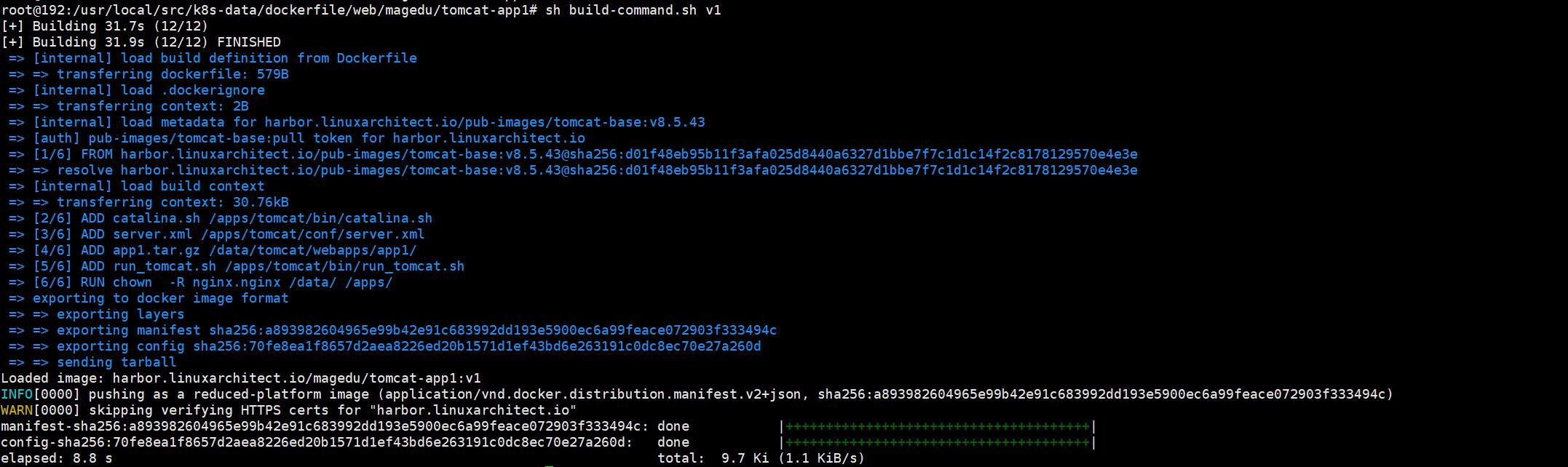

Build the image:

```bash
bash build-command.sh v1
```

Create a Pod from the resulting image.

tomcat-app1.yaml:

root@192:/usr/local/src/k8s-data/yaml/magedu/tomcat-app1# cat tomcat-app1.yaml

```yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-deployment-label
  name: magedu-tomcat-app1-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-selector
    spec:
      containers:
      - name: magedu-tomcat-app1-container
        image: harbor.linuxarchitect.io/magedu/tomcat-app1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        #resources:
        #  limits:
        #    cpu: 1
        #    memory: "512Mi"
        #  requests:
        #    cpu: 500m
        #    memory: "512Mi"
        volumeMounts:
        - name: magedu-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: magedu-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: magedu-images
        nfs:
          server: 192.168.110.184
          path: /data/k8sdata/magedu/images
      - name: magedu-static
        nfs:
          server: 192.168.110.184
          path: /data/k8sdata/magedu/static
      #nodeSelector:
      #  project: magedu
      #  app: tomcat
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-tomcat-app1-service-label
  name: magedu-tomcat-app1-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30092
  selector:
    app: magedu-tomcat-app1-selector
```

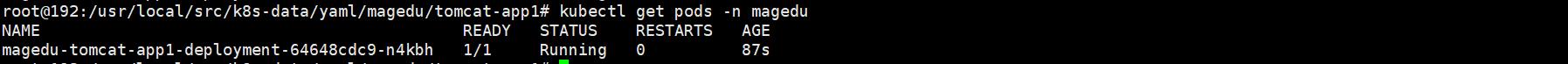

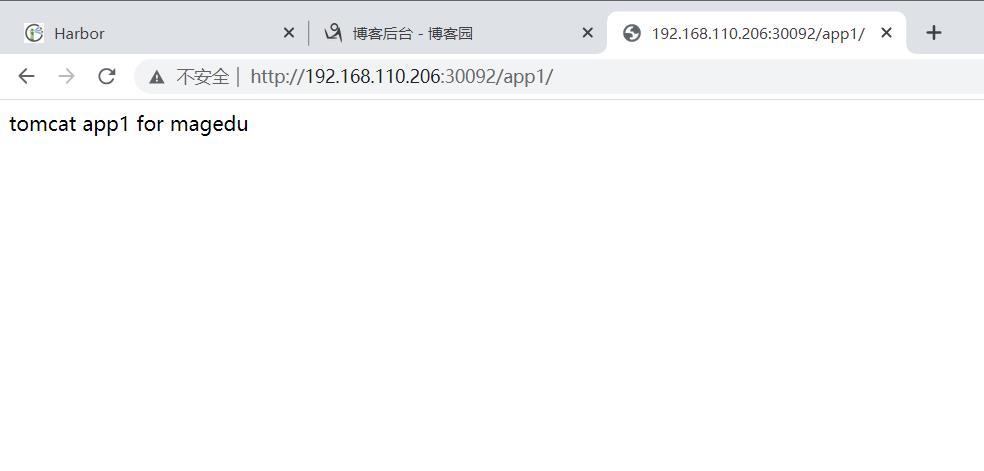

Create the Pod and test it:

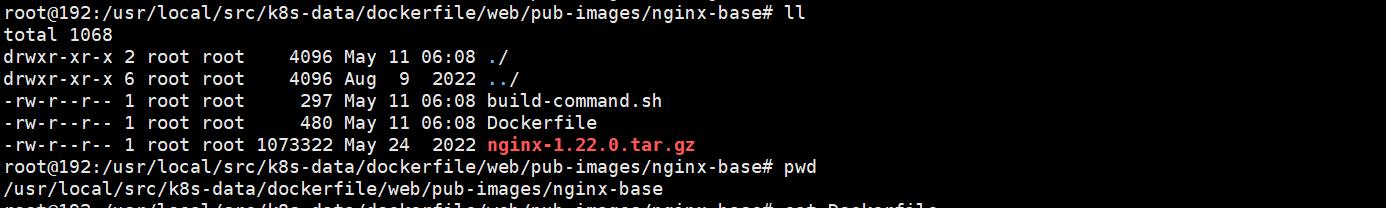

4. Build the Nginx base image

Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/nginx-base# cat Dockerfile

```dockerfile
#Nginx Base Image
FROM harbor.linuxarchitect.io/baseimages/magedu-centos-base:7.9.2009

MAINTAINER zhangshijie@magedu.net

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop
ADD nginx-1.22.0.tar.gz /usr/local/src/
RUN cd /usr/local/src/nginx-1.22.0 && ./configure && make && make install && \
    ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && \
    rm -rf /usr/local/src/nginx-1.22.0.tar.gz
```

build-command.sh

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/nginx-base# cat build-command.sh

```bash
#!/bin/bash
#docker build -t harbor.magedu.net/pub-images/nginx-base:v1.18.0 .
#sleep 1
#docker push harbor.magedu.net/pub-images/nginx-base:v1.18.0

nerdctl build -t harbor.linuxarchitect.io/pub-images/nginx-base:v1.22.0 .
nerdctl push harbor.linuxarchitect.io/pub-images/nginx-base:v1.22.0
```

5. Build the Nginx application image

Dockerfile and build-command.sh:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/nginx# cat Dockerfile

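```dockerfile
#Nginx 1.22.0
FROM harbor.linuxarchitect.io/pub-images/nginx-base:v1.22.0

ADD nginx.conf /usr/local/nginx/conf/nginx.conf
ADD app1.tar.gz /usr/local/nginx/html/webapp/
ADD index.html /usr/local/nginx/html/index.html

# Mount points for static resources (NFS volumes in the Pod)
RUN mkdir -p /usr/local/nginx/html/webapp/static /usr/local/nginx/html/webapp/images

EXPOSE 80 443

# nginx must stay in the foreground (e.g. "daemon off;" in nginx.conf), otherwise the container exits
CMD ["nginx"]
```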

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/nginx# cat build-command.sh

```bash
#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/magedu/nginx-web1:$TAG .
#echo "Image built, pushing it to Harbor"
#sleep 1
#docker push harbor.linuxarchitect.io/magedu/nginx-web1:$TAG
#echo "Image pushed to Harbor"

nerdctl build -t harbor.linuxarchitect.io/magedu/nginx-web1:$TAG .
nerdctl push harbor.linuxarchitect.io/magedu/nginx-web1:$TAG
```

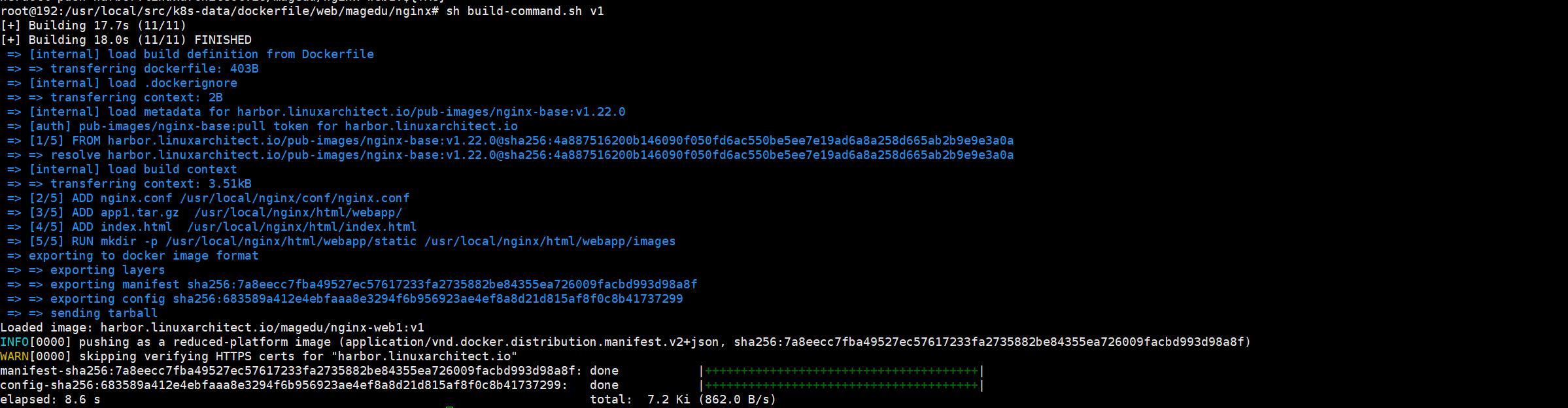

Build the image:

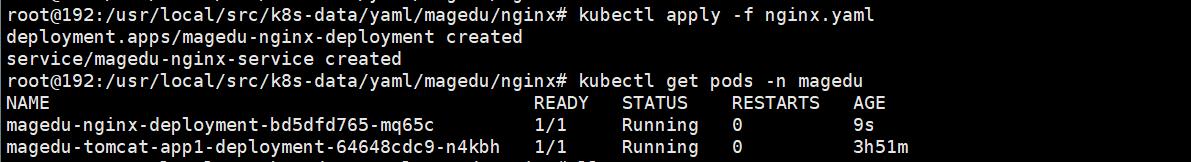

Create the Nginx Pod for testing:

root@192:/usr/local/src/k8s-data/yaml/magedu/nginx# cat nginx.yaml

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-nginx-deployment-label
  name: magedu-nginx-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-nginx-selector
  template:
    metadata:
      labels:
        app: magedu-nginx-selector
    spec:
      containers:
      - name: magedu-nginx-container
        image: harbor.linuxarchitect.io/magedu/nginx-web1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 256Mi
        volumeMounts:
        - name: magedu-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: magedu-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: magedu-images
        nfs:
          server: 192.168.110.184
          path: /data/k8sdata/magedu/images
      - name: magedu-static
        nfs:
          server: 192.168.110.184
          path: /data/k8sdata/magedu/static
      #nodeSelector:
      #  group: magedu
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-nginx-service-label
  name: magedu-nginx-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30090
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30091
  selector:
    app: magedu-nginx-selector
```
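The nginx Deployment sets requests (cpu 500m, memory 256Mi) that differ from its limits (cpu 500m, memory 512Mi), while the tomcat Deployment leaves `resources` commented out. Kubernetes derives a Pod QoS class from these fields, and the class influences eviction order under node memory pressure. The Python below is a simplified sketch of the documented rules, not the kubelet's code: it ignores init containers and compares quantities as strings, so it assumes equal values are written identically (`"500m"`, not `"0.5"`).

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers: list[dict]) -> str:
    """Classify a Pod as Guaranteed, Burstable or BestEffort (simplified).

    Each container is a dict like {"requests": {...}, "limits": {...}} holding
    "cpu"/"memory" quantity strings.
    """
    any_set = False
    guaranteed = True
    for c in containers:
        limits = {k: v for k, v in c.get("limits", {}).items() if k in RESOURCES}
        # A request that is omitted but has a limit defaults to that limit.
        requests = dict(limits)
        requests.update({k: v for k, v in c.get("requests", {}).items() if k in RESOURCES})
        if limits or requests:
            any_set = True
        # Guaranteed needs cpu and memory limits, with requests equal to limits.
        if any(r not in limits or requests.get(r) != limits[r] for r in RESOURCES):
            guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    nginx = [{"requests": {"cpu": "500m", "memory": "256Mi"},
              "limits": {"cpu": "500m", "memory": "512Mi"}}]
    tomcat = [{}]  # resources commented out in tomcat-app1.yaml
    print("nginx:", qos_class(nginx))
    print("tomcat:", qos_class(tomcat))
```

On a live cluster, `kubectl get pod <name> -n magedu -o jsonpath='{.status.qosClass}'` shows the class the API server actually assigned.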

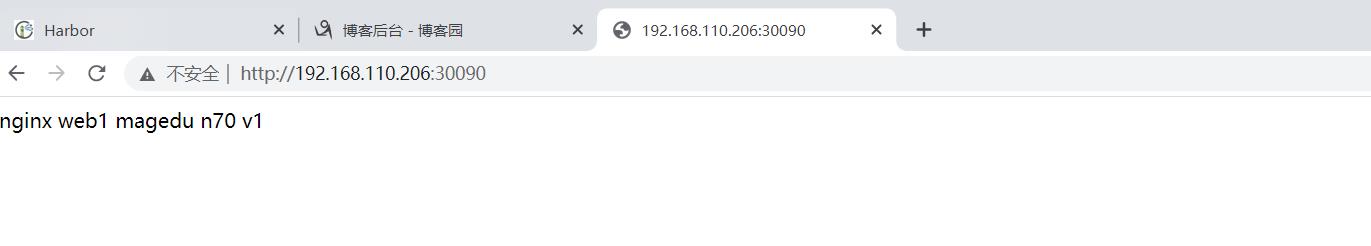

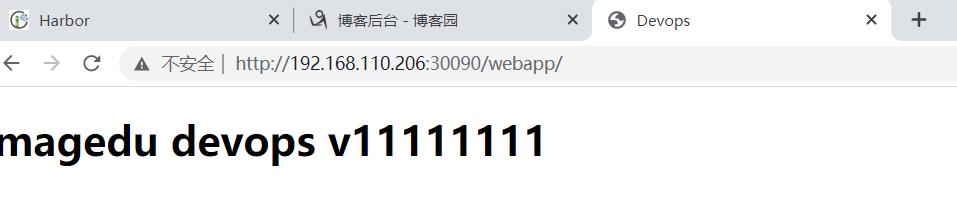

Create the Pod and test it:

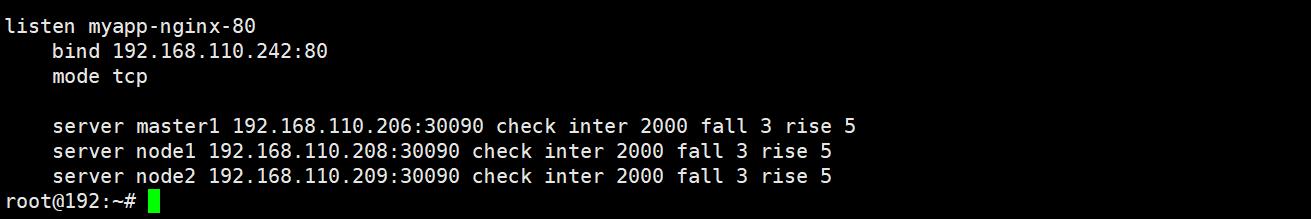

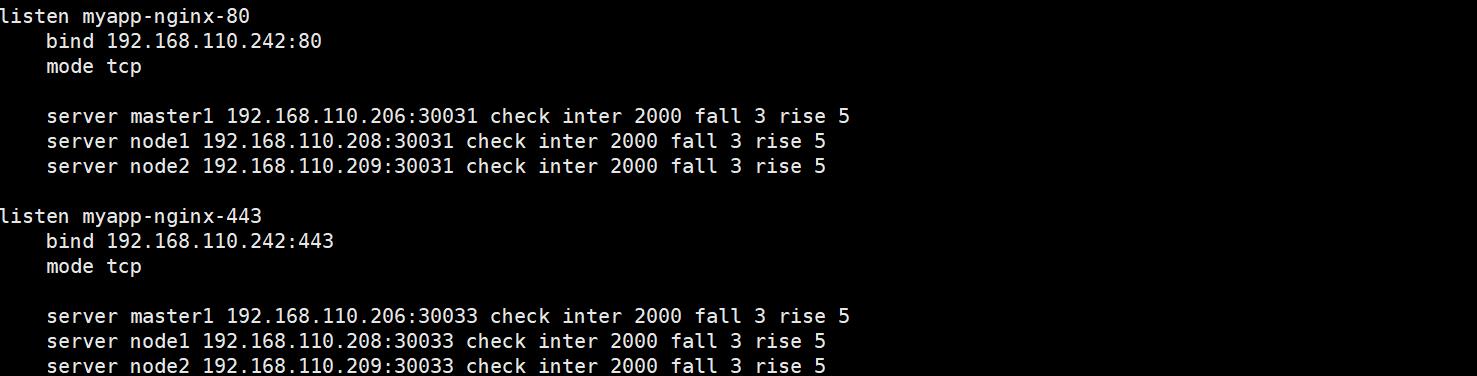

Update the HAProxy load balancer configuration, then test by accessing the load balancer address:

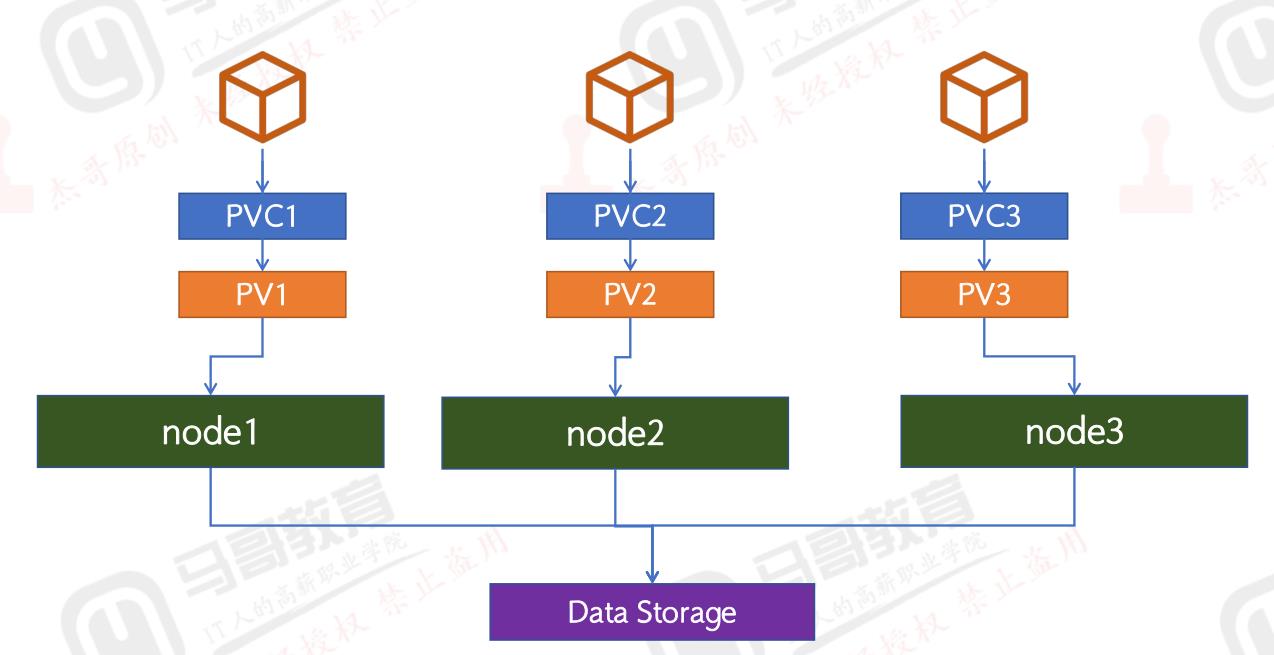

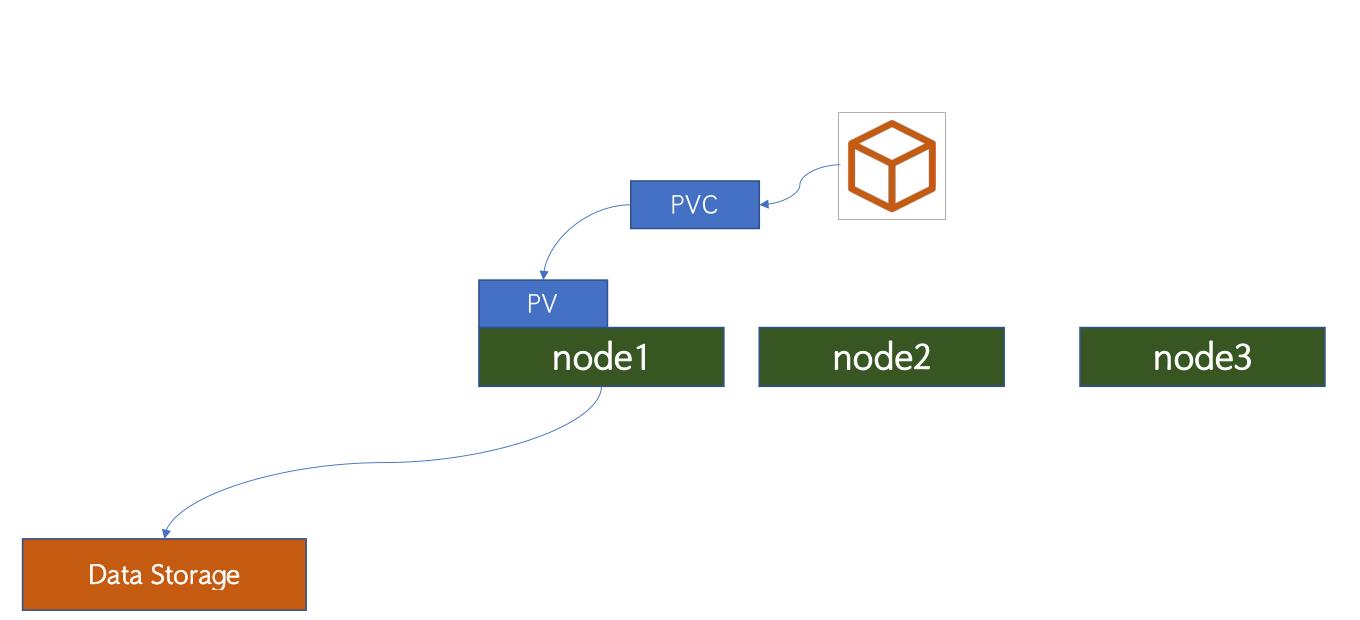

Case 3: PV/PVC and ZooKeeper

Steps:

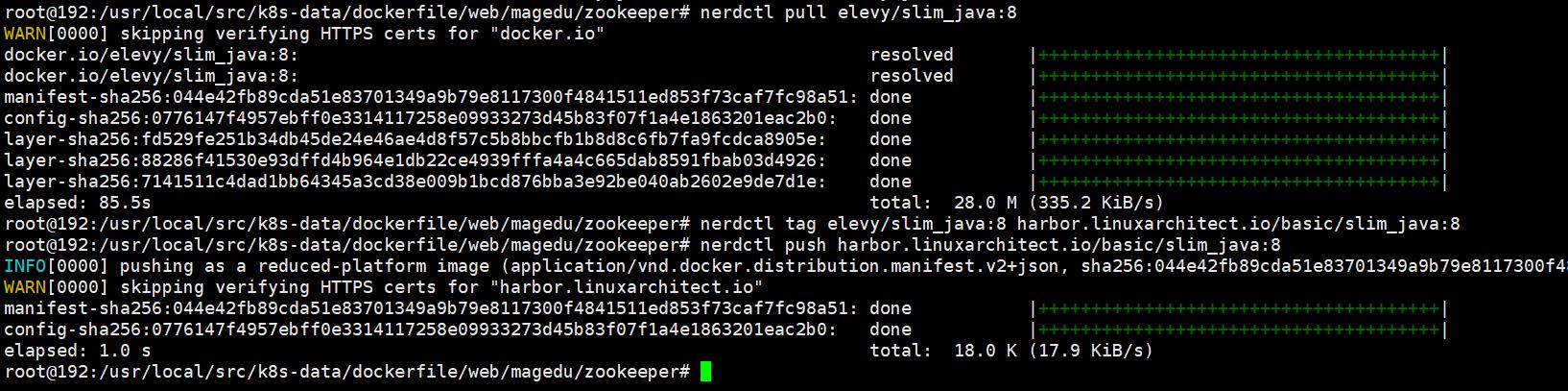

构建 zookeeper镜像

- nerdctl pull elevy/slim_java:8

- nerdctl tag elevy/slim_java:8 harbor.linuxarchitect.io/baseimages/slim_java:8

- nerdctl push harbor.linuxarchitect.io/baseimages/slim_java:8

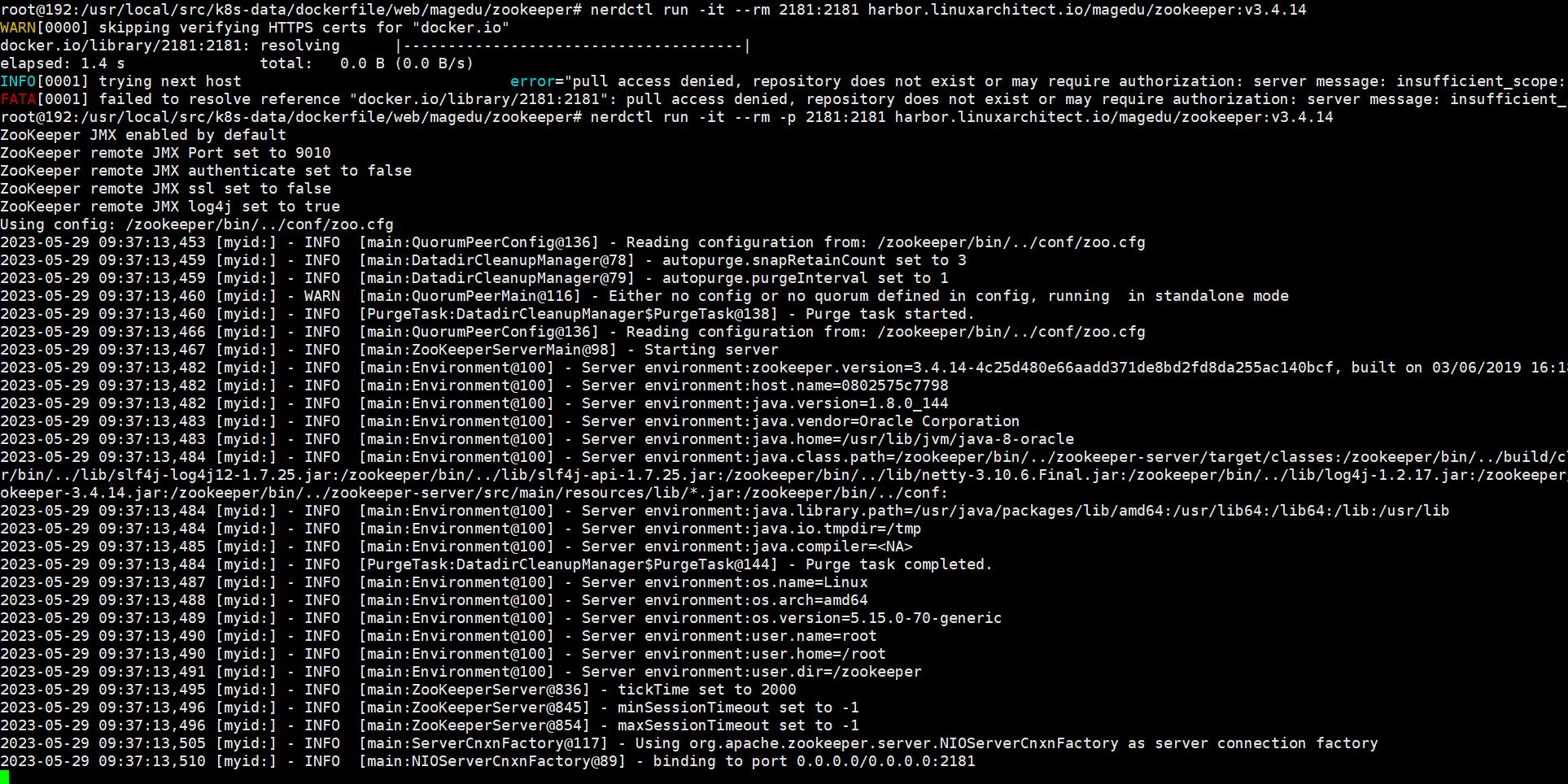

测试 zookeeper 镜像

创建PV/PVC

运行zookeeper集群

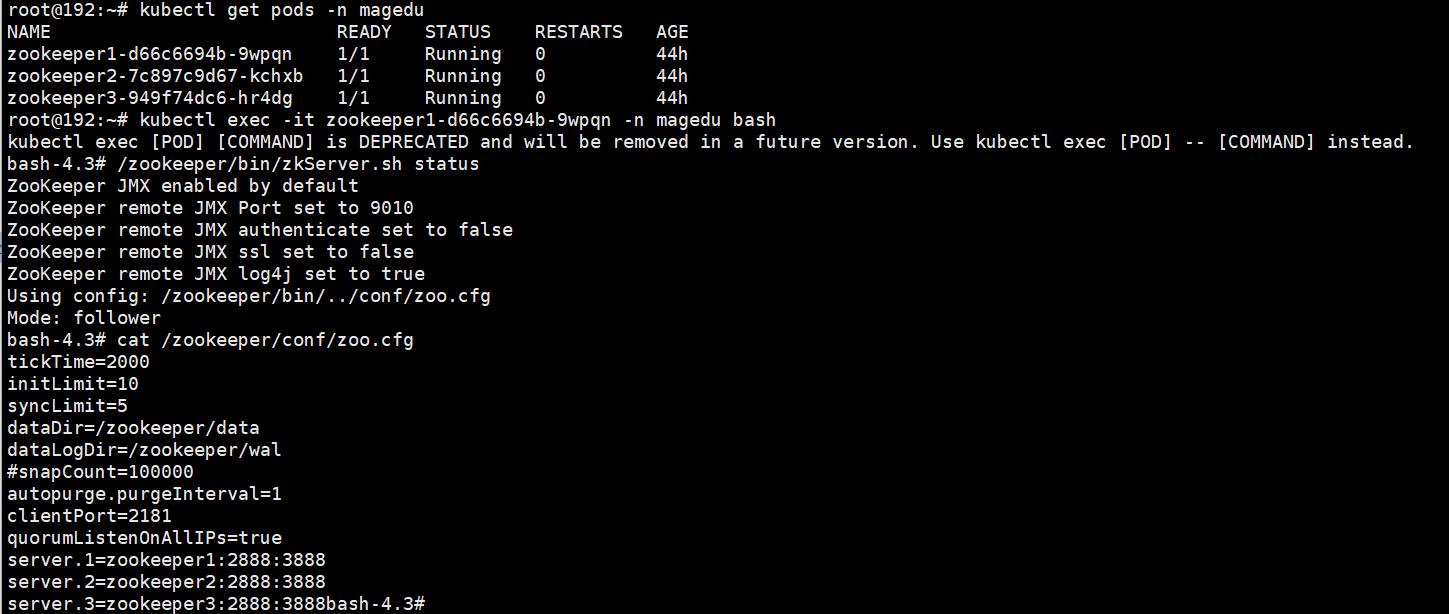

验证集群状态

拉取镜像,修改tag并上传harbor:

查看dockerfile文件

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/zookeeper# cat Dockerfile

```dockerfile
# Base image retagged and pushed in the previous step
FROM harbor.linuxarchitect.io/baseimages/slim_java:8

ENV ZK_VERSION 3.4.14
ADD repositories /etc/apk/repositories
# Download Zookeeper
COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz
COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc
COPY KEYS /tmp/KEYS
RUN apk add --no-cache --virtual .build-deps \
      ca-certificates \
      gnupg \
      tar \
      wget && \
    #
    # Install dependencies
    apk add --no-cache \
      bash && \
    #
    # Verify the signature
    export GNUPGHOME="$(mktemp -d)" && \
    gpg -q --batch --import /tmp/KEYS && \
    gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \
    #
    # Set up directories
    mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \
    #
    # Install
    tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \
    #
    # Slim down
    cd /zookeeper && \
    cp dist-maven/zookeeper-$ZK_VERSION.jar . && \
    rm -rf \
      *.txt \
      *.xml \
      bin/README.txt \
      bin/*.cmd \
      conf/* \
      contrib \
      dist-maven \
      docs \
      lib/*.txt \
      lib/cobertura \
      lib/jdiff \
      recipes \
      src \
      zookeeper-*.asc \
      zookeeper-*.md5 \
      zookeeper-*.sha1 && \
    #
    # Clean up
    apk del .build-deps && \
    rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/
COPY bin/zkReady.sh /zookeeper/bin/
COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:$PATH \
    ZOO_LOG_DIR=/zookeeper/log \
    ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \
    JMXPORT=9010

# entrypoint.sh generates the ZooKeeper configuration, then execs CMD
# (Dockerfile comments must be on their own line, not after an instruction)
ENTRYPOINT [ "/entrypoint.sh" ]
# Arguments passed to entrypoint.sh
CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010
```

entrypoint.sh startup script:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/zookeeper# cat entrypoint.sh

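```bash
#!/bin/bash

# Write this server's ID to the myid file; default to 1 if MYID is unset or empty.
# MYID is an environment variable set in the Pod spec.
echo ${MYID:-1} > /zookeeper/data/myid

# Only runs when SERVERS (also set in the Pod spec) is non-empty.
if [ -n "$SERVERS" ]; then
  # IFS is the bash field separator: split the comma-separated SERVERS into an array.
  IFS=\, read -a servers <<<"$SERVERS"
  # ${!servers[@]} expands to the array indices; index + 1 becomes each server's ID.
  for i in "${!servers[@]}"; do
    # Append "server.<id>=<host>:2888:3888" to zoo.cfg; %i and %s are filled
    # from "$((1 + $i))" and "${servers[$i]}" respectively.
    printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg
  done
fi

cd /zookeeper
# "$@" holds all arguments passed to the script, here the CMD
# [ "zkServer.sh", "start-foreground" ]. exec replaces the shell with that
# command while keeping the same PID, so ZooKeeper becomes the main process.
exec "$@"
```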
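To check what the entrypoint writes before baking it into an image, its logic can be mirrored in a few lines of Python. This is an illustrative model only, not a replacement for the script: the function names are hypothetical, and the host names are the per-server Services `zookeeper1` to `zookeeper3` from zookeeper.yaml.

```python
def zk_myid(env: dict[str, str]) -> str:
    """Mirror ${MYID:-1}: use MYID unless it is unset or empty, otherwise "1"."""
    return env.get("MYID") or "1"


def zk_server_lines(servers: str) -> list[str]:
    """Mirror the entrypoint loop: one server.N line per comma-separated host.

    The 1-based position in SERVERS becomes the server ID, so every Pod must get
    the same SERVERS order and a MYID matching its own position in that list.
    """
    if not servers:
        return []  # no SERVERS: standalone mode, zoo.cfg is left untouched
    hosts = servers.split(",")
    return [f"server.{i}={host}:2888:3888" for i, host in enumerate(hosts, start=1)]


if __name__ == "__main__":
    # Values from the zookeeper3 Deployment in zookeeper.yaml
    env = {"MYID": "3", "SERVERS": "zookeeper1,zookeeper2,zookeeper3"}
    print("myid:", zk_myid(env))
    for line in zk_server_lines(env["SERVERS"]):
        print(line)
```

Port 2888 carries follower-to-leader traffic and 3888 is used for leader election, which is why the Services expose them as `followers` and `election`.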

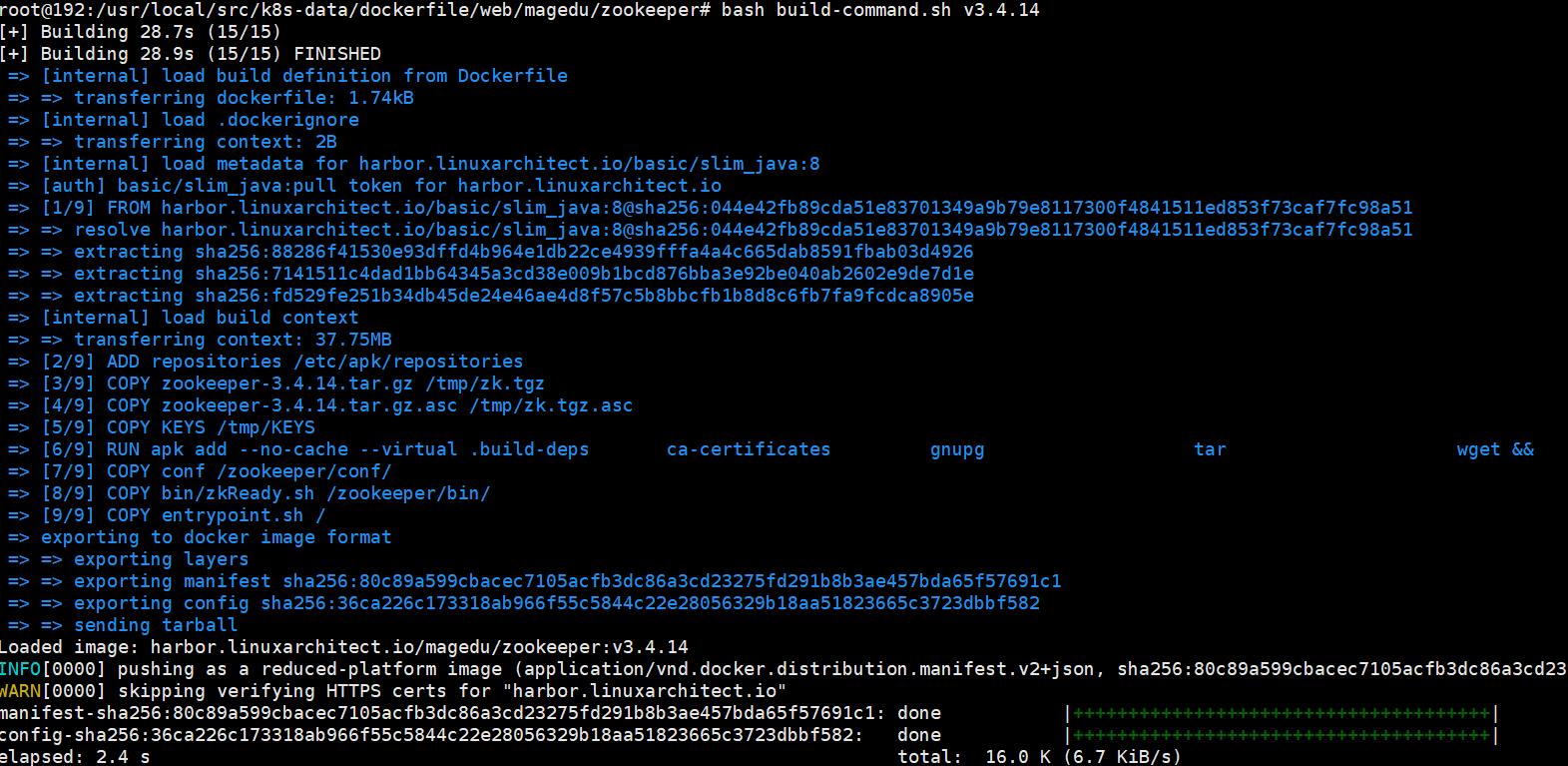

Build the image.

Test the ZooKeeper image: without the SERVERS variable, the container starts a standalone ZooKeeper by default.

zookeeper.yaml:

root@192:/usr/local/src/k8s-data/yaml/magedu/zookeeper# cat zookeeper.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: magedu
spec:
  ports:
  - name: client
    port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32181
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32182
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32183
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "3"
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      # One volumes list per Pod: YAML mapping keys must be unique, so a second
      # "volumes" key would override the first. data and wal are not mounted.
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-1
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-1
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "1"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-1
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-2
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-2
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "2"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-2
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-3
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-3
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "3"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-3
```

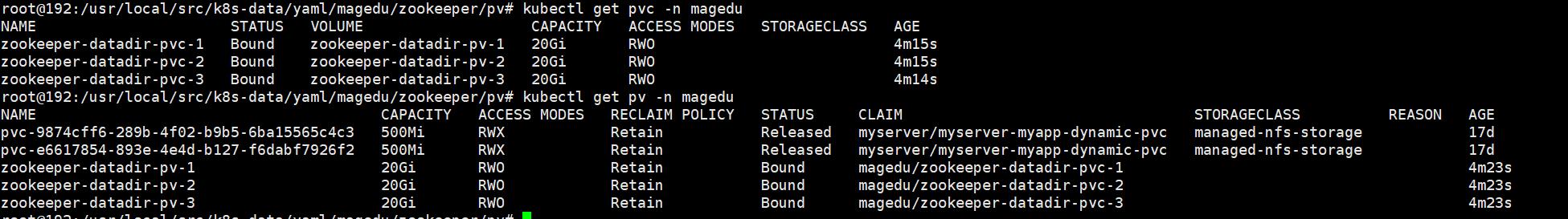

PV and PVC files, one pair each for server1, server2, and server3:

root@192:/usr/local/src/k8s-data/yaml/magedu/zookeeper/pv# cat zookeeper-persistentvolume.yaml

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/zookeeper-datadir-1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/zookeeper-datadir-2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-3
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/zookeeper-datadir-3
```

root@192:/usr/local/src/k8s-data/yaml/magedu/zookeeper/pv# cat zookeeper-persistentvolumeclaim.yaml

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-1
  namespace: magedu
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-1
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-2
  namespace: magedu
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-2
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-3
  namespace: magedu
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-3
  resources:
    requests:
      storage: 10Gi
```
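Each PVC above pins its PV with `volumeName` and requests 10Gi from a 20Gi PV. A pre-bound claim still has to be compatible with the volume; the Python below is a deliberately simplified model of the main checks (pinned name, access modes, capacity). It is not the controller's code: the real check also compares `storageClassName`, `volumeMode` and selectors, and this quantity parser only handles `Ki`/`Mi`/`Gi`/`Ti` suffixes.

```python
import re

_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse a binary-suffixed quantity such as "20Gi" (other formats unsupported)."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(m.group(1)) * _UNITS[m.group(2)]


def pv_satisfies_pvc(pv: dict, pvc: dict) -> bool:
    """Simplified check of whether a PV can back a PVC."""
    # A claim with volumeName may only bind to that exact PV.
    if pvc.get("volumeName") and pvc["volumeName"] != pv["name"]:
        return False
    # Every access mode the claim asks for must be offered by the PV.
    if not set(pvc["accessModes"]) <= set(pv["accessModes"]):
        return False
    # The PV must be at least as large as the request.
    return to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])


if __name__ == "__main__":
    pv1 = {"name": "zookeeper-datadir-pv-1", "capacity": "20Gi", "accessModes": ["ReadWriteOnce"]}
    pvc1 = {"volumeName": "zookeeper-datadir-pv-1", "accessModes": ["ReadWriteOnce"], "request": "10Gi"}
    print(pv_satisfies_pvc(pv1, pvc1))
```

Because the whole PV is bound to the claim, `kubectl get pvc -n magedu` should report the PV's capacity (20Gi) for these claims rather than the 10Gi request.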

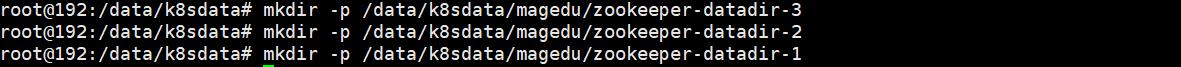

Create the directories on the storage (NFS) server:

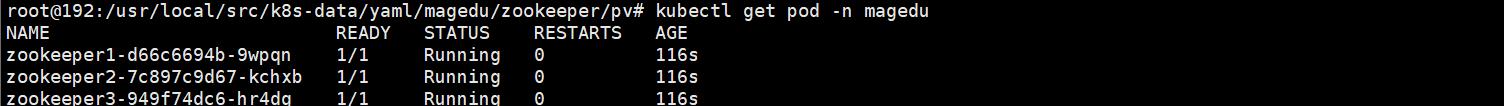

Create the PVs, PVCs, and Pods.

Exec into a Pod to test:

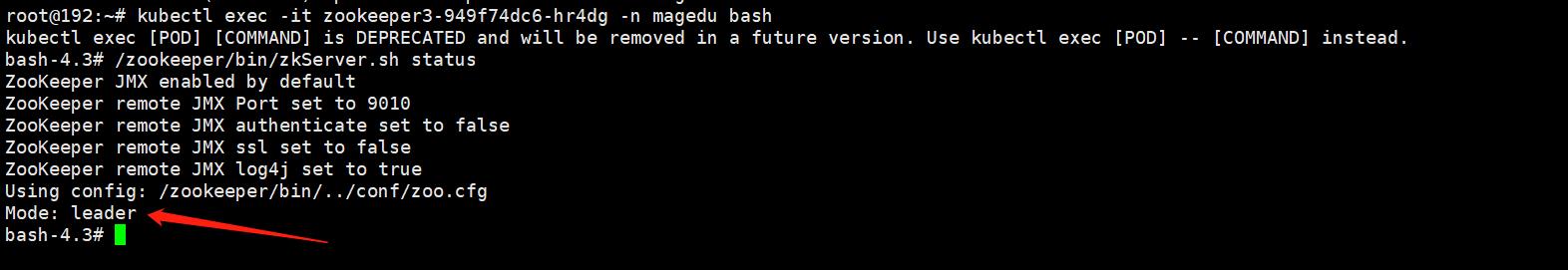

Check whether ZooKeeper elected a leader: exec into pod 3, which reports itself as the leader.

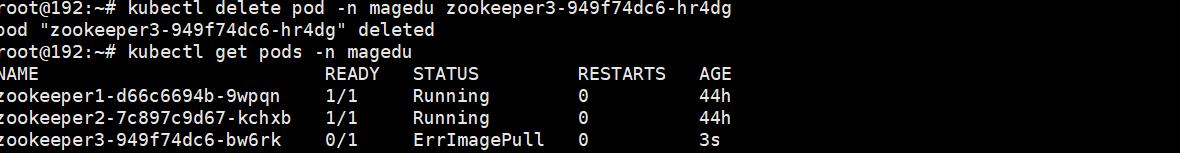

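Stop Harbor, then delete pod 3 and check whether a new election is triggered. Because the Deployments use `imagePullPolicy: Always`, the replacement Pod cannot pull its image while Harbor is down, so that server stays offline during the test.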

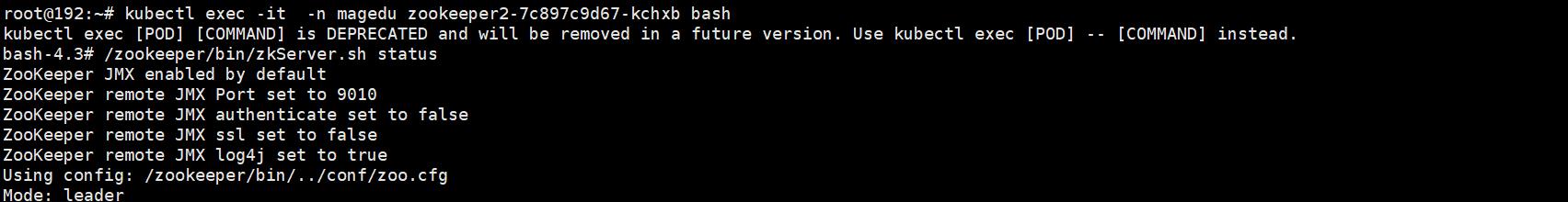

Exec into pod 2: it has become the leader, so leader election works.

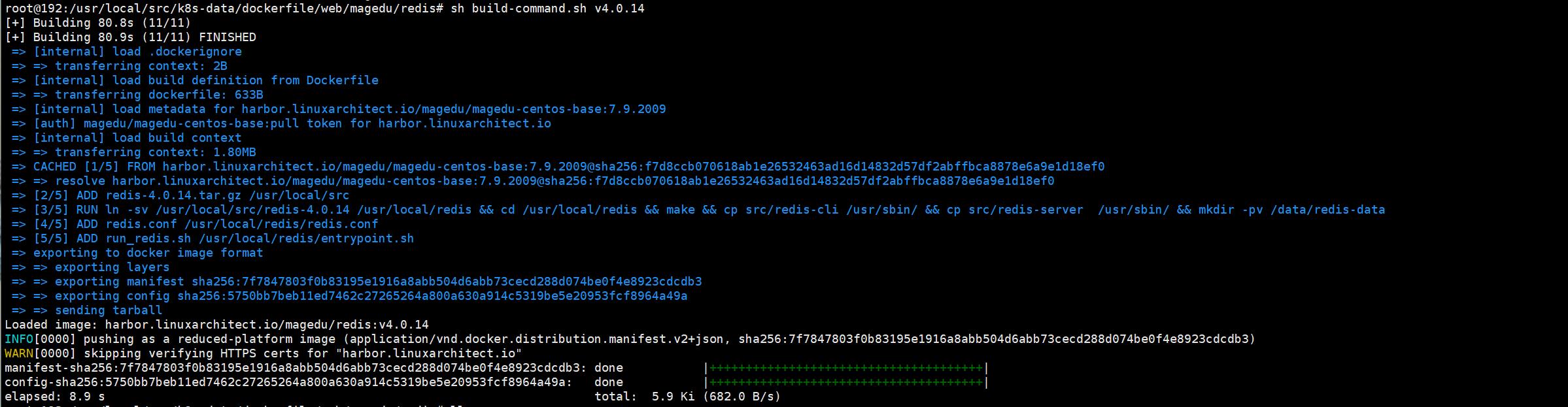
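The failover test works because ZooKeeper only needs a strict majority (a quorum) of its configured servers to elect a leader. With three servers, any two form a quorum, so losing zookeeper3 still lets zookeeper1 and zookeeper2 elect a new leader; losing a second server would stop the ensemble. The short sketch below computes this; it also shows why ensembles are usually sized with an odd number of servers.

```python
def quorum(ensemble_size: int) -> int:
    """Smallest strict majority of voting servers needed to elect a leader."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many voting servers can be down while a quorum is still reachable."""
    return ensemble_size - quorum(ensemble_size)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} servers: quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

A four-server ensemble tolerates no more failures than a three-server one, so the extra server adds load without adding availability.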

Case 4: PV/PVC and standalone Redis

Redis Dockerfile:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis# cat Dockerfile

```dockerfile
#Redis Image
FROM harbor.linuxarchitect.io/baseimages/magedu-centos-base:7.9.2009

MAINTAINER zhangshijie "zhangshijie@magedu.net"

ADD redis-4.0.14.tar.gz /usr/local/src
RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && cd /usr/local/redis && make && \
    cp src/redis-cli /usr/sbin/ && cp src/redis-server /usr/sbin/ && mkdir -pv /data/redis-data
ADD redis.conf /usr/local/redis/redis.conf

EXPOSE 6379

#ADD run_redis.sh /usr/local/redis/run_redis.sh
#CMD ["/usr/local/redis/run_redis.sh"]

ADD run_redis.sh /usr/local/redis/entrypoint.sh
ENTRYPOINT ["/usr/local/redis/entrypoint.sh"]
```

build-command.sh:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis# cat build-command.sh

```bash
#!/bin/bash
TAG=$1
#docker build -t harbor.linuxarchitect.io/magedu/redis:$TAG .
#sleep 3
#docker push harbor.linuxarchitect.io/magedu/redis:$TAG

nerdctl build -t harbor.linuxarchitect.io/magedu/redis:$TAG .
nerdctl push harbor.linuxarchitect.io/magedu/redis:$TAG
```

redis.conf (comments and blank lines filtered out):

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis# cat redis.conf |grep -v "^#" |grep -v "^$"

```conf
bind 0.0.0.0
protected-mode yes
port 6379
tcp-backlog 511
timeout 0
tcp-keepalive 300
daemonize yes
supervised no
pidfile /var/run/redis_6379.pid
loglevel notice
logfile ""
databases 16
always-show-logo yes
save 900 1
save 5 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error no
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
dir /data/redis-data
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
slave-priority 100
requirepass 123456
lazyfree-lazy-eviction no
lazyfree-lazy-expire no
lazyfree-lazy-server-del no
slave-lazy-flush no
appendonly no
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
aof-use-rdb-preamble no
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
aof-rewrite-incremental-fsync yes
```
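With `appendonly no`, durability in this setup comes from RDB snapshots written to `dir /data/redis-data`, the PVC mount. Each `save <seconds> <changes>` line triggers a background snapshot once at least `<changes>` writes have happened and more than `<seconds>` have passed since the last save. `save 5 1` is not one of Redis's stock save points; it makes a snapshot follow any write within a few seconds, which helps the data survive the Pod deletion test below. The Python below is a simplified model of that rule (it ignores the retry delay after a failed BGSAVE).

```python
SavePoint = tuple[int, int]  # (seconds, changes)


def parse_save_points(conf_lines: list[str]) -> list[SavePoint]:
    """Collect the `save <seconds> <changes>` directives from redis.conf lines."""
    points = []
    for line in conf_lines:
        parts = line.split()
        if len(parts) == 3 and parts[0] == "save":
            points.append((int(parts[1]), int(parts[2])))
    return points


def snapshot_due(points: list[SavePoint], seconds_since_save: int, changes: int) -> bool:
    """True if any save point is met: enough writes and more than N seconds elapsed."""
    return any(changes >= c and seconds_since_save > s for s, c in points)


if __name__ == "__main__":
    conf = ["save 900 1", "save 5 1", "save 300 10", "save 60 10000"]
    points = parse_save_points(conf)
    print(points)
    print("1 write, 6 s later:", snapshot_due(points, 6, 1))
```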

Build the image:

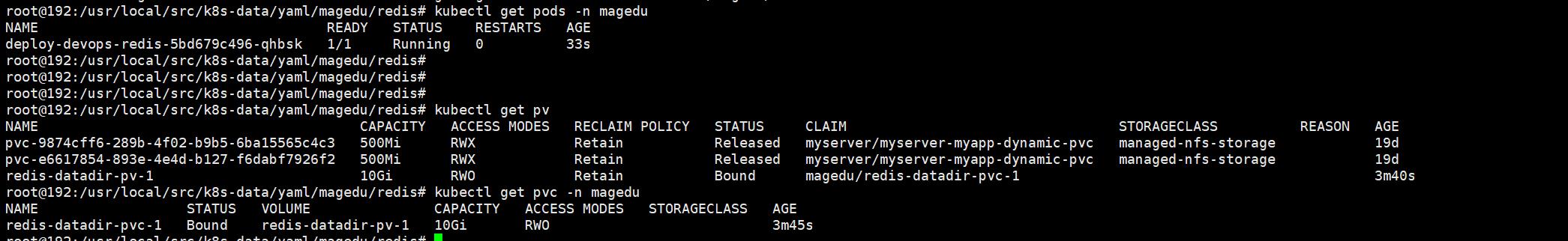

Create a Pod from the image.

redis.yaml:

root@192:/usr/local/src/k8s-data/yaml/magedu/redis# cat redis.yaml

```yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: devops-redis
  name: deploy-devops-redis
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devops-redis
  template:
    metadata:
      labels:
        app: devops-redis
    spec:
      containers:
      - name: redis-container
        image: harbor.linuxarchitect.io/magedu/redis:v4.0.14
        imagePullPolicy: Always
        volumeMounts:
        - mountPath: "/data/redis-data/"
          name: redis-datadir
      volumes:
      - name: redis-datadir
        persistentVolumeClaim:
          claimName: redis-datadir-pvc-1
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: devops-redis
  name: srv-devops-redis
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 6379
    targetPort: 6379
    nodePort: 31379
  selector:
    app: devops-redis
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
```

PV and PVC files:

root@192:/usr/local/src/k8s-data/yaml/magedu/redis/pv# cat redis-persistentvolume.yaml

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-datadir-pv-1
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /data/k8sdata/magedu/redis-datadir-1
    server: 192.168.110.184
```

root@192:/usr/local/src/k8s-data/yaml/magedu/redis/pv# cat redis-persistentvolumeclaim.yaml

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-datadir-pvc-1
  namespace: magedu
spec:
  volumeName: redis-datadir-pv-1
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```

Create the PV, PVC, and Pod:

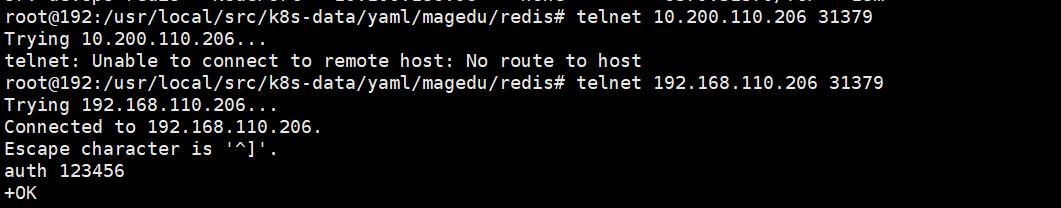

Test:

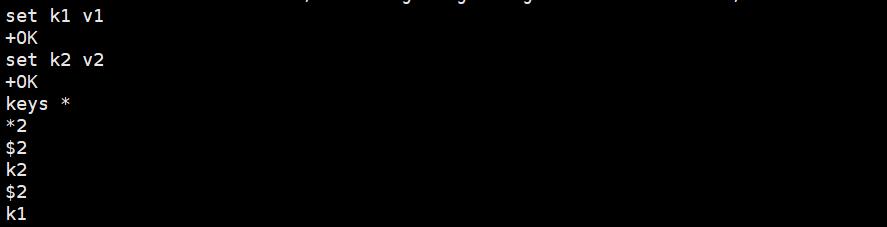

Insert some data:

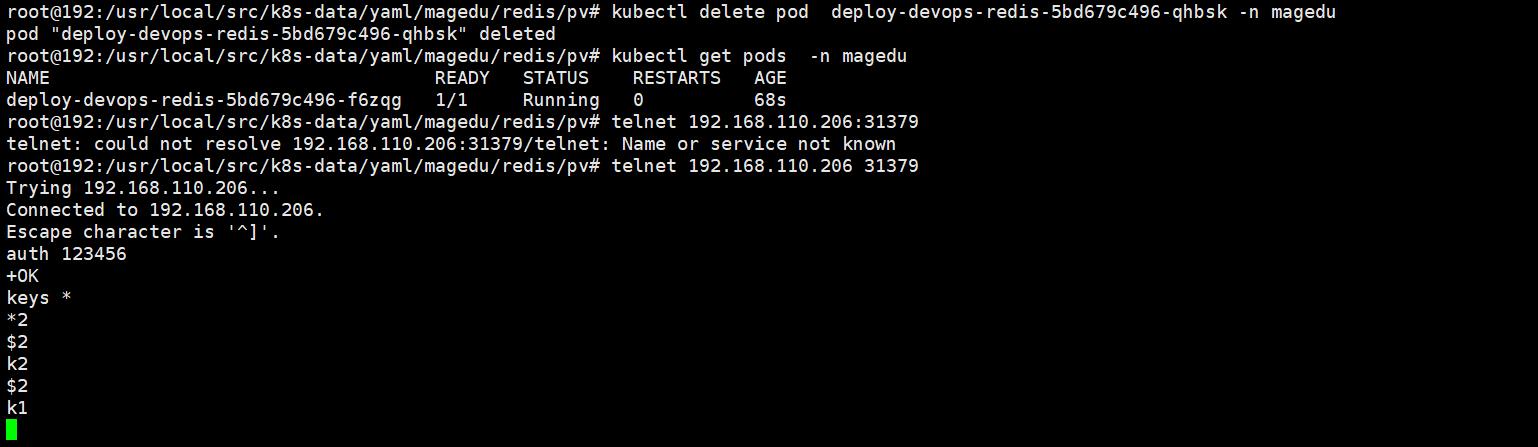

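Delete the Pod so it is recreated, then check whether the Redis data was lost.

The data is still there: after the Redis Pod is deleted, its data remains on the storage, and the recreated Pod sees it again by mounting the same volume.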

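Case 5: PV/PVC and a Redis cluster with a StatefulSet

Steps:

- Create the PVs and PVCs
- Pod name: <StatefulSet name>-<ordinal>
- PVC name: <volumeClaimTemplates name>-<StatefulSet name>-<ordinal>
- Deploy the Redis cluster
- Initialize the Redis cluster
- Verify the Redis cluster status
- Verify Redis cluster high availability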
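The two naming rules in the list can be written out directly. The sketch below uses the values from the redis.yaml later in this case (StatefulSet `redis`, 6 replicas, a volumeClaimTemplates entry named `data`); the function names are illustrative. Which pre-created `redis-cluster-pvN` ends up bound to which claim is not fixed by these names, since the templates do not set `volumeName`.

```python
def statefulset_pod_names(sts_name: str, replicas: int) -> list[str]:
    """Pods of a StatefulSet are named <statefulset>-<ordinal>, ordinals from 0."""
    return [f"{sts_name}-{i}" for i in range(replicas)]


def statefulset_pvc_names(template_name: str, sts_name: str, replicas: int) -> list[str]:
    """PVCs from volumeClaimTemplates are named <template>-<statefulset>-<ordinal>."""
    return [f"{template_name}-{pod}" for pod in statefulset_pod_names(sts_name, replicas)]


if __name__ == "__main__":
    print(statefulset_pod_names("redis", 6))
    print(statefulset_pvc_names("data", "redis", 6))
```

Because the names are stable, a recreated `redis-2` reattaches to the same claim `data-redis-2` and therefore to the same data.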

PV file:

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis-cluster/pv# cat redis-cluster-pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv0
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis0
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv1
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv2
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv3
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis3
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv4
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv5
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/redis5
```

Create the PVs:

```bash
root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis-cluster/pv# kubectl apply -f redis-cluster-pv.yaml
persistentvolume/redis-cluster-pv0 created
persistentvolume/redis-cluster-pv1 created
persistentvolume/redis-cluster-pv2 created
persistentvolume/redis-cluster-pv3 created
persistentvolume/redis-cluster-pv4 created
persistentvolume/redis-cluster-pv5 created
```

redis.conf

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis-cluster# cat redis.conf

```conf
appendonly yes
cluster-enabled yes
cluster-config-file /var/lib/redis/nodes.conf
cluster-node-timeout 5000
dir /var/lib/redis
port 6379
```

Create a ConfigMap from the configuration file:

```bash
kubectl create configmap redis-conf --from-file=redis.conf -n magedu
```

redis.yaml

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/redis-cluster# cat redis.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: magedu
  labels:
    app: redis
spec:
  selector:
    app: redis
    appCluster: redis-cluster
  ports:
  - name: redis
    port: 6379
  clusterIP: None
---
apiVersion: v1
kind: Service
metadata:
  name: redis-access
  namespace: magedu
  labels:
    app: redis
spec:
  selector:
    app: redis
    appCluster: redis-cluster
  ports:
  - name: redis-access
    protocol: TCP
    port: 6379
    targetPort: 6379
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: magedu
spec:
  serviceName: redis
  replicas: 6
  selector:
    matchLabels:
      app: redis
      appCluster: redis-cluster
  template:
    metadata:
      labels:
        app: redis
        appCluster: redis-cluster
    spec:
      terminationGracePeriodSeconds: 20
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - redis
              topologyKey: kubernetes.io/hostname
      containers:
      - name: redis
        image: redis:4.0.14
        command:
        - "redis-server"
        args:
        - "/etc/redis/redis.conf"
        - "--protected-mode"
        - "no"
        resources:
          requests:
            cpu: "500m"
            memory: "500Mi"
        ports:
        - containerPort: 6379
          name: redis
          protocol: TCP
        - containerPort: 16379
          name: cluster
          protocol: TCP
        volumeMounts:
        - name: conf
          mountPath: /etc/redis
        - name: data
          mountPath: /var/lib/redis
      volumes:
      - name: conf
        configMap:
          name: redis-conf
          items:
          - key: redis.conf
            path: redis.conf
  volumeClaimTemplates:
  - metadata:
      name: data
      namespace: magedu
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi
```

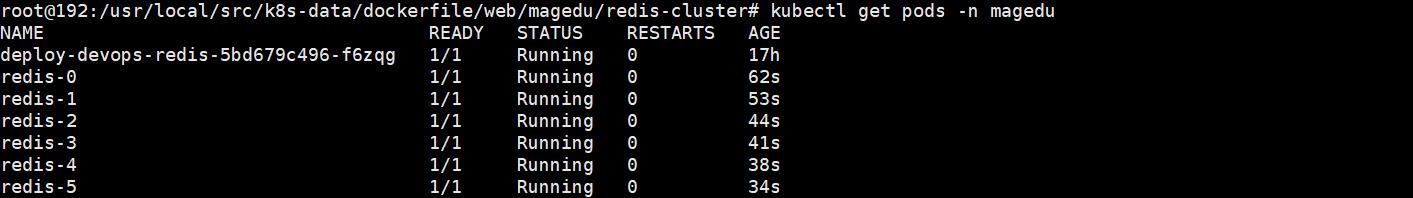

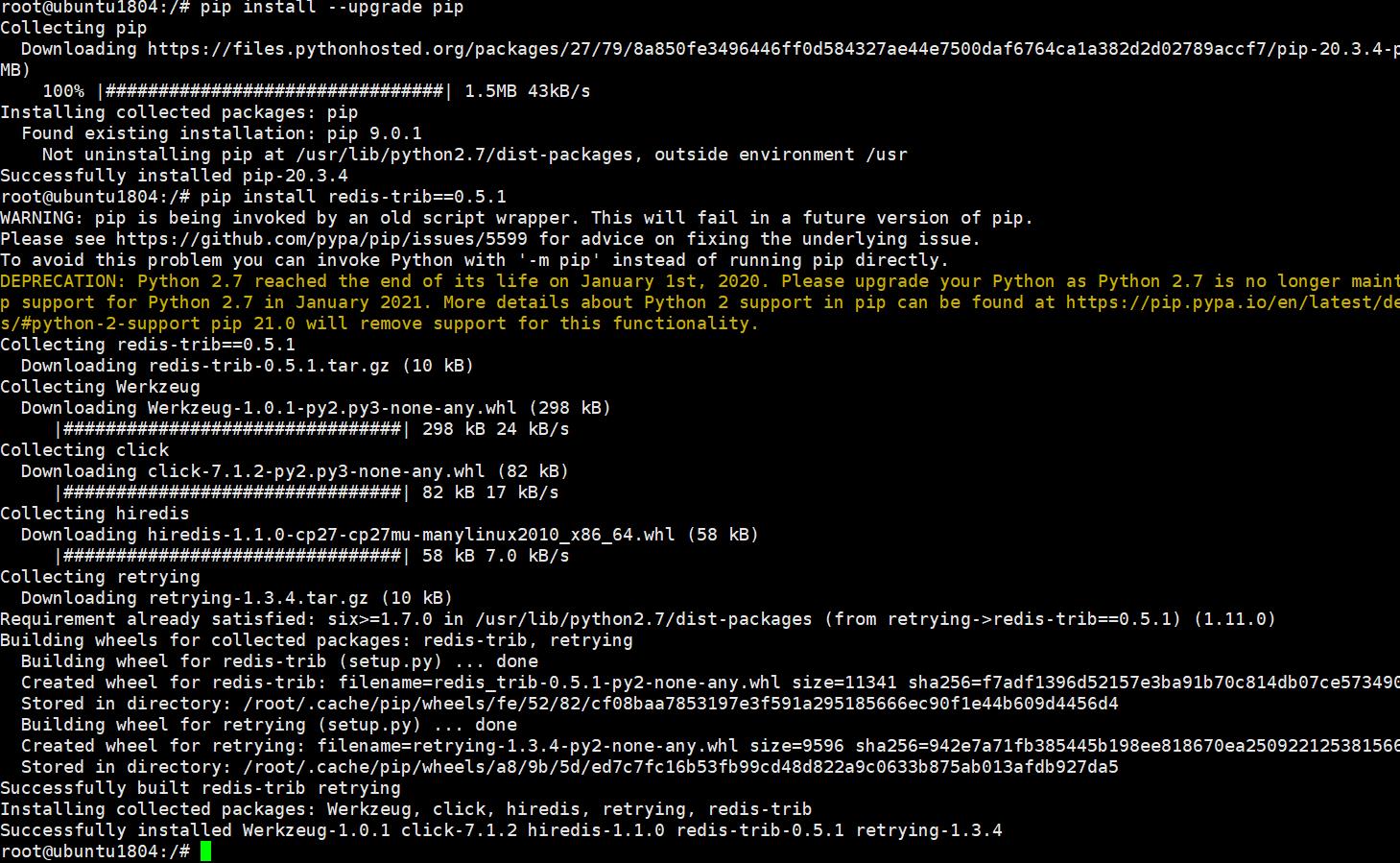

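Create the Pods.

Initialize the cluster

The cluster only needs to be initialized once. Redis 4 and earlier use the redis-trib tool for initialization; starting with Redis 5, redis-cli does this instead.

Create a temporary container in the magedu namespace to initialize the Redis cluster: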

```bash
kubectl run -it ubuntu1804 --image=ubuntu:18.04 --restart=Never -n magedu -- bash

root@ubuntu1804:/# apt update
root@ubuntu1804:/# apt install python2.7 python-pip redis-tools dnsutils iputils-ping net-tools
root@ubuntu1804:/# pip install --upgrade pip
root@ubuntu1804:/# pip install redis-trib==0.5.1
```

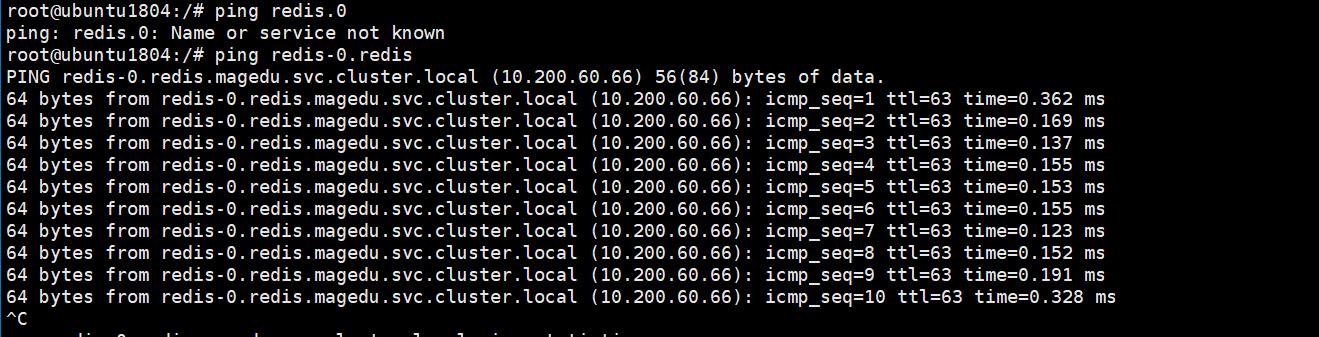

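Pods created by a StatefulSet have fixed names, so inside the cluster each Pod's IP can be resolved from its name through the headless Service `redis`: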

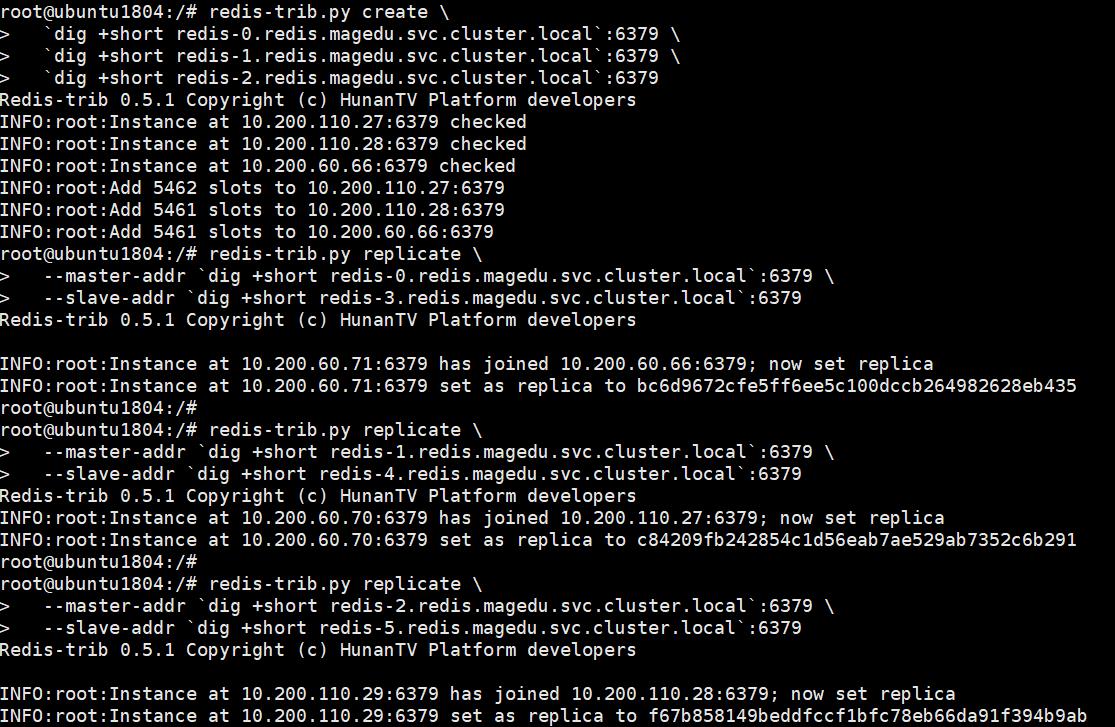

Create the cluster from the first three Pods (the masters):

```bash
redis-trib.py create \
  `dig +short redis-0.redis.magedu.svc.cluster.local`:6379 \
  `dig +short redis-1.redis.magedu.svc.cluster.local`:6379 \
  `dig +short redis-2.redis.magedu.svc.cluster.local`:6379
```

Add redis-3 as a slave of redis-0; likewise redis-4 joins redis-1 and redis-5 joins redis-2:

```bash
redis-trib.py replicate \
  --master-addr `dig +short redis-0.redis.magedu.svc.cluster.local`:6379 \
  --slave-addr `dig +short redis-3.redis.magedu.svc.cluster.local`:6379

redis-trib.py replicate \
  --master-addr `dig +short redis-1.redis.magedu.svc.cluster.local`:6379 \
  --slave-addr `dig +short redis-4.redis.magedu.svc.cluster.local`:6379

redis-trib.py replicate \
  --master-addr `dig +short redis-2.redis.magedu.svc.cluster.local`:6379 \
  --slave-addr `dig +short redis-5.redis.magedu.svc.cluster.local`:6379
```
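The replicate commands follow two regular patterns: the Pod DNS name `<pod>.<service>.<namespace>.svc.cluster.local` and the pairing "replica i + 3 follows master i". As a convenience sketch (hypothetical helper names; it assumes the default `cluster.local` cluster domain), the commands can be generated rather than typed by hand, which also scales if the number of masters changes. The output keeps the original's `dig +short` substitution, so it is meant to be pasted into the temporary Ubuntu container.

```python
def pod_fqdn(pod: str, service: str, namespace: str, domain: str = "cluster.local") -> str:
    """Stable DNS name of a StatefulSet Pod behind a headless Service."""
    return f"{pod}.{service}.{namespace}.svc.{domain}"


def replicate_pairs(sts_name: str, masters: int) -> list[tuple[str, str]]:
    """Pair master i with replica i + masters (redis-0 <- redis-3, and so on)."""
    return [(f"{sts_name}-{i}", f"{sts_name}-{i + masters}") for i in range(masters)]


def replicate_commands(sts_name: str, service: str, namespace: str, masters: int) -> list[str]:
    """Build one redis-trib.py replicate command per master/replica pair."""
    cmds = []
    for master, slave in replicate_pairs(sts_name, masters):
        cmds.append(
            "redis-trib.py replicate"
            f" --master-addr `dig +short {pod_fqdn(master, service, namespace)}`:6379"
            f" --slave-addr `dig +short {pod_fqdn(slave, service, namespace)}`:6379"
        )
    return cmds


if __name__ == "__main__":
    print("\n".join(replicate_commands("redis", "redis", "magedu", masters=3)))
```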

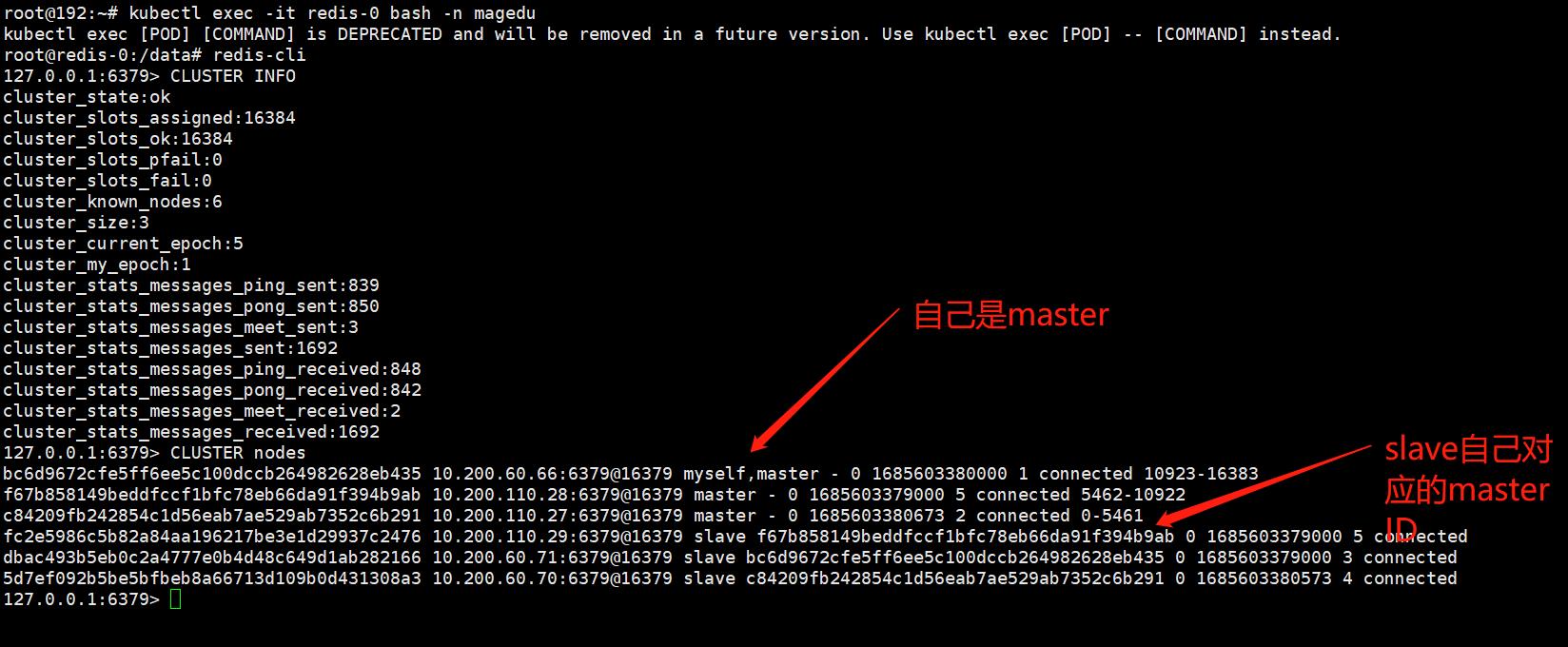

Exec into redis-0 to check the cluster state:

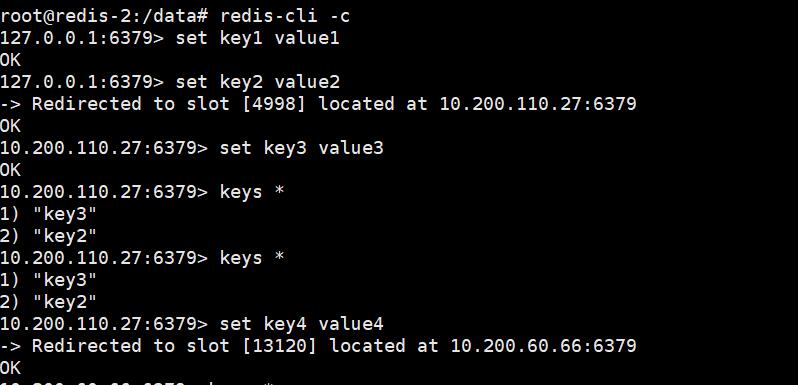

Create key-value pairs to test the cluster.
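When testing with key-value pairs, each key is stored on the master that owns its hash slot. Per the Redis Cluster specification, a key maps to one of 16384 slots as CRC16 (XMODEM variant) of the key modulo 16384, and when the key contains a non-empty `{...}` hash tag only the tag is hashed, which keeps related keys on the same node. The Python below is a sketch of that documented algorithm, not Redis's own code.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Redis Cluster slot for a key: CRC16 of the key (or its hash tag) mod 16384."""
    start = key.find("{")
    if start != -1:
        end = key.find("}", start + 1)
        if end != -1 and end != start + 1:  # non-empty {tag}: hash only the tag
            key = key[start + 1:end]
    return crc16_xmodem(key.encode()) % 16384


if __name__ == "__main__":
    for k in ("foo", "hello", "{user1000}.following", "{user1000}.followers"):
        print(k, key_hash_slot(k))
```

Inside any of the Redis Pods, `redis-cli cluster keyslot <key>` returns the server's own answer for comparison, and `redis-cli -c` follows the resulting redirects automatically.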

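Case 6: MySQL with one master and multiple slaves

The one-master, multiple-slave MySQL architecture enables read/write splitting: writes go to the master and reads go to the slaves, which reduces the load on the master.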

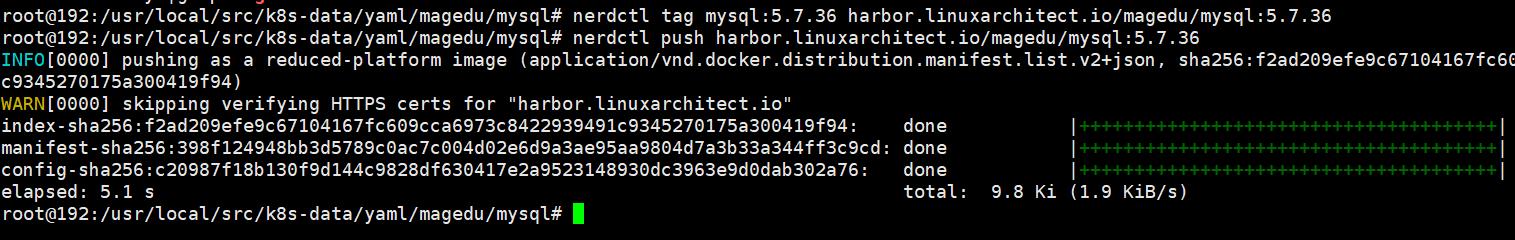
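Read/write splitting is done by the client side or by a proxy (for example ProxySQL), not by the StatefulSet itself. As a hypothetical illustration of the idea only, the Python class below sends statements to the master `mysql-0.mysql` unless they start with a read keyword, in which case it round-robins over the slaves. The classification is deliberately naive (first keyword only), and reads from slaves can lag slightly behind the master, so read-after-write paths usually need to go to the master.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the master and spread reads over the slaves (round robin)."""

    READ_KEYWORDS = {"SELECT", "SHOW", "DESCRIBE", "DESC", "EXPLAIN"}

    def __init__(self, master: str, replicas: list[str]):
        self.master = master
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        words = sql.strip().split(None, 1)
        keyword = words[0].upper() if words else ""
        # Only plain reads may go to a slave; everything else hits the master.
        if keyword in self.READ_KEYWORDS and self._replicas is not None:
            return next(self._replicas)
        return self.master


if __name__ == "__main__":
    router = ReadWriteRouter("mysql-0.mysql", ["mysql-1.mysql", "mysql-2.mysql"])
    for stmt in ("INSERT INTO t VALUES (1)", "SELECT * FROM t", "SELECT 1"):
        print(f"{stmt!r} -> {router.route(stmt)}")
```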

Pull the mysql and xtrabackup images, retag them, and push them to Harbor.

YAML file:

root@192:/usr/local/src/k8s-data/yaml/magedu/mysql# cat mysql-statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: mysql

namespace: magedu

spec:

selector:

matchLabels:

app: mysql

serviceName: mysql

replicas: 2

template:

metadata:

labels:

app: mysql

spec:

initContainers:

- name: init-mysql #初始化容器1、基于当前pod name匹配角色是master还是slave,并动态生成相对应的配置文件

image: harbor.linuxarchitect.io/magedu/mysql:5.7.36

command:

- bash

- "-c"

- |

set -ex

# Generate mysql server-id from pod ordinal index.

[[ `hostname` =~ -([0-9]+)$ ]] || exit 1 #匹配hostname的最后一位、最后是一个顺序叠加的整数

ordinal=$BASH_REMATCH[1]

echo [mysqld] > /mnt/conf.d/server-id.cnf

# Add an offset to avoid reserved server-id=0 value.

echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf

# Copy appropriate conf.d files from config-map to emptyDir.

if [[ $ordinal -eq 0 ]]; then #如果是master、则cpmaster配置文件

cp /mnt/config-map/master.cnf /mnt/conf.d/

else #否则cp slave配置文件

cp /mnt/config-map/slave.cnf /mnt/conf.d/

fi

volumeMounts:

- name: conf #临时卷、emptyDir

mountPath: /mnt/conf.d

- name: config-map

mountPath: /mnt/config-map

- name: clone-mysql #初始化容器2、用于生成mysql配置文件、并从上一个pod完成首次的全量数据clone(slave 3从slave2 clone,而不是每个slave都从master clone实现首次全量同步,但是后期都是与master实现增量同步)

image: harbor.linuxarchitect.io/magedu/xtrabackup:1.0

command:

- bash

- "-c"

- |

set -ex

# Skip the clone if data already exists.

          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on master (ordinal index 0).
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0  # ordinal 0 is the master, so skip the clone step
          # Clone data from previous peer.
          ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql  # stream a full backup from the previous pod; xbstream unpacks it
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql  # apply the logs so the cloned data files are consistent
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      containers:
      - name: mysql  # app container 1: the main MySQL container
        image: harbor.linuxarchitect.io/magedu/mysql:5.7.36
        env:
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data  # mount the data directory at /var/lib/mysql
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf  # config files under /etc/mysql/conf.d
          mountPath: /etc/mysql/conf.d
        resources:  # resource requests
          requests:
            cpu: 500m
            memory: 1Gi
        livenessProbe:  # liveness probe
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe:  # readiness probe
          exec:
            # Check we can execute queries over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1
      - name: xtrabackup  # app container 2: starts replication from the master's binlog position and serves full backups to peers
        image: harbor.linuxarchitect.io/magedu/xtrabackup:1.0
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql
          # Determine binlog position of cloned data, if any.
          if [[ -f xtrabackup_slave_info ]]; then
            # XtraBackup already generated a partial "CHANGE MASTER TO" query
            # because we're cloning from an existing slave.
            mv xtrabackup_slave_info change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it's useless).
            rm -f xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We're cloning directly from master. Parse binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm xtrabackup_binlog_info
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in  # generate the CHANGE MASTER statement
          fi
          # Check if we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
            echo "Initializing replication from clone position"
            # In case of container restart, attempt this at-most-once.
            mv change_master_to.sql.in change_master_to.sql.orig
            # Run CHANGE MASTER and start the slave.
            mysql -h 127.0.0.1 <<EOF
          $(<change_master_to.sql.orig),
            MASTER_HOST='mysql-0.mysql',
            MASTER_USER='root',
            MASTER_PASSWORD='',
            MASTER_CONNECT_RETRY=10;
          START SLAVE;
          EOF
          fi
          # Start a server to send backups when requested by peers
          # (listens on port 3307 so the next pod can clone a full copy of the data).
          exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
            "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
      volumes:
      - name: conf
        emptyDir: {}
      - name: config-map
        configMap:
          name: mysql
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 10Gi
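To make the init and sidecar scripts above easier to follow, here is a minimal Python re-expression of two of their steps: extracting the pod ordinal from the hostname (so `mysql-0` is treated as the master and `mysql-N` clones from `mysql-(N-1)` on port 3307), and turning the first two whitespace-separated fields of `xtrabackup_binlog_info` into a `CHANGE MASTER TO` statement. The function names are hypothetical and the sample binlog file name is invented; the StatefulSet itself runs the bash version, this is only a reading aid.

```python
import re
from typing import Optional


def pod_ordinal(hostname: str) -> int:
    """Return the trailing ordinal of a StatefulSet pod name, e.g. 'mysql-2' -> 2.

    Mirrors the bash test:  [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
    """
    match = re.search(r"-([0-9]+)$", hostname)
    if match is None:
        raise ValueError(f"hostname {hostname!r} has no ordinal suffix")
    return int(match.group(1))


def clone_source(hostname: str, service: str = "mysql") -> Optional[str]:
    """Return the peer to clone from, or None for ordinal 0 (the master)."""
    ordinal = pod_ordinal(hostname)
    if ordinal == 0:
        return None  # the master skips the clone step
    return f"{service}-{ordinal - 1}.{service}:3307"


def change_master_from_binlog_info(contents: str) -> str:
    """Build the partial CHANGE MASTER TO statement from xtrabackup_binlog_info.

    The file starts with the binlog file name and position separated by whitespace.
    """
    fields = contents.split()
    if len(fields) < 2:
        raise ValueError("unexpected xtrabackup_binlog_info format")
    log_file, log_pos = fields[0], fields[1]
    return f"CHANGE MASTER TO MASTER_LOG_FILE='{log_file}', MASTER_LOG_POS={log_pos}"


if __name__ == "__main__":
    print(clone_source("mysql-0"))  # None
    print(clone_source("mysql-2"))  # mysql-1.mysql:3307
    print(change_master_from_binlog_info("mysql-0-bin.000003\t154\n"))
```

Each pod only ever clones from its immediate predecessor, which is why the pods must start in order (the default StatefulSet behavior).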

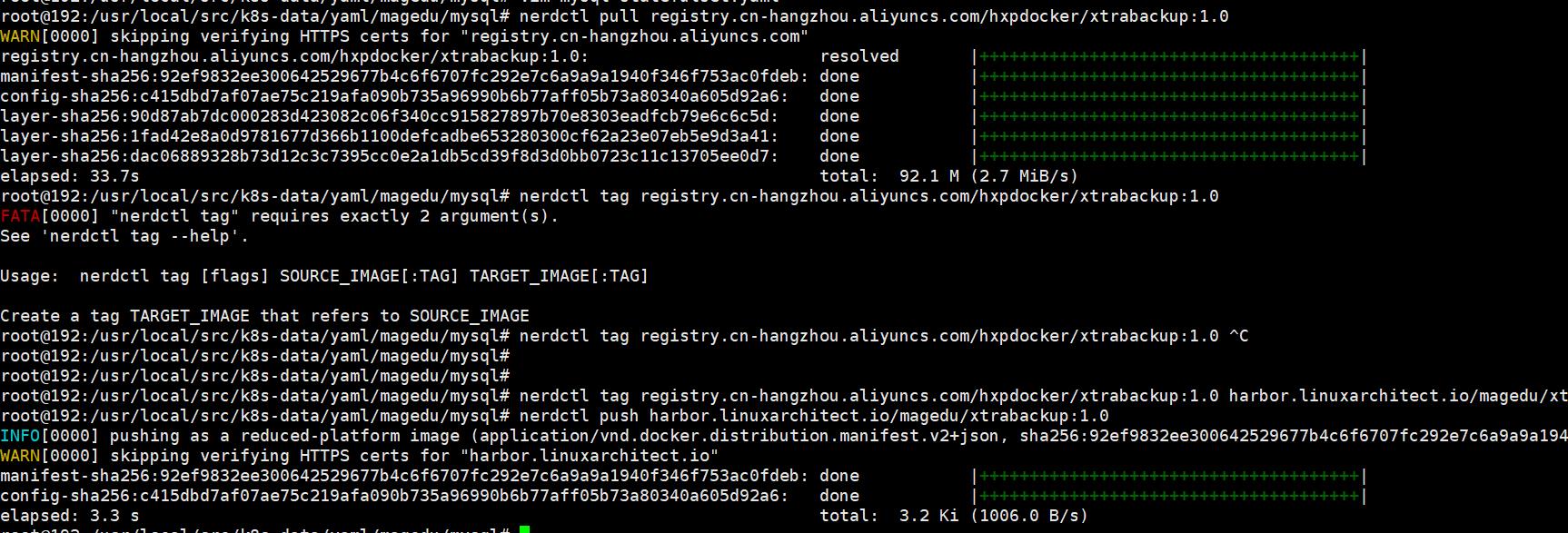

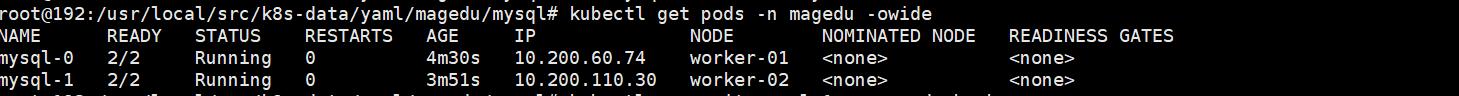

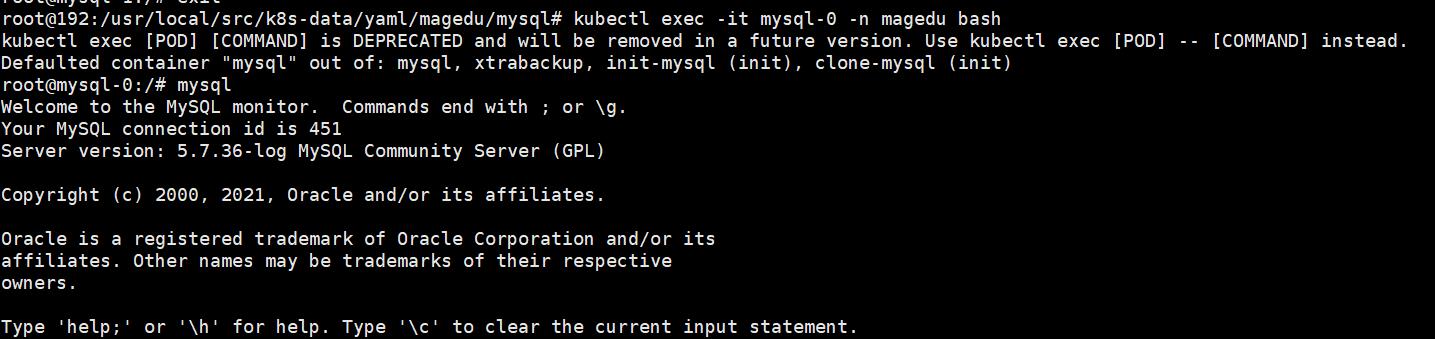

Create the MySQL pods

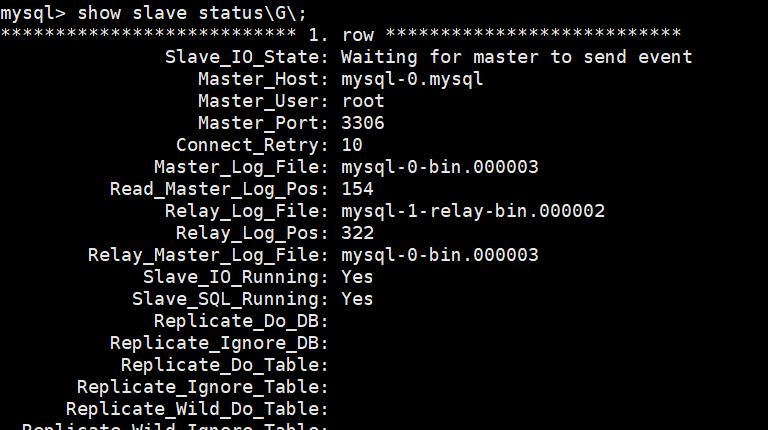

Exec into mysql-1, which should be the slave:

root@192:/usr/local/src/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-1 -n magedu bash

root@mysql-1:/# mysql

mysql> show slave status\G

The output shows the slave's replication status and the hostname of its master.

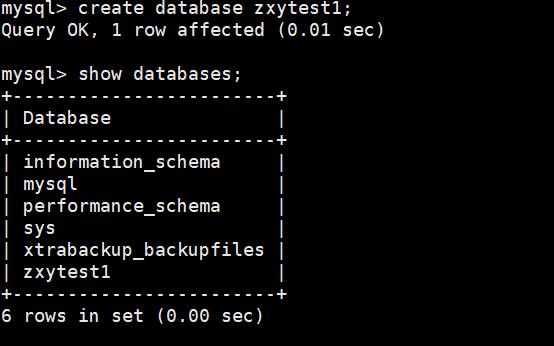

Exec into mysql-0 and create a new database:

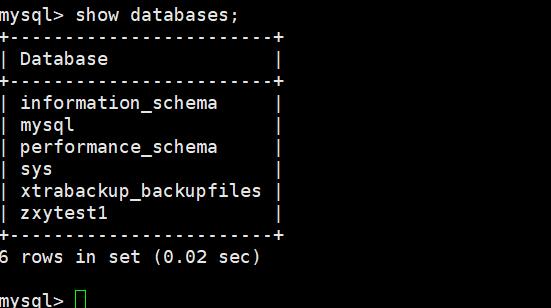

The new database zxytest1 then appears in mysql-1, confirming that master-slave replication works.

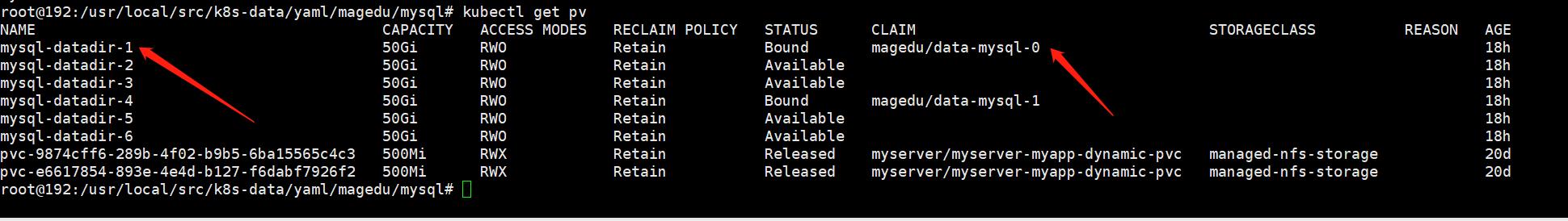

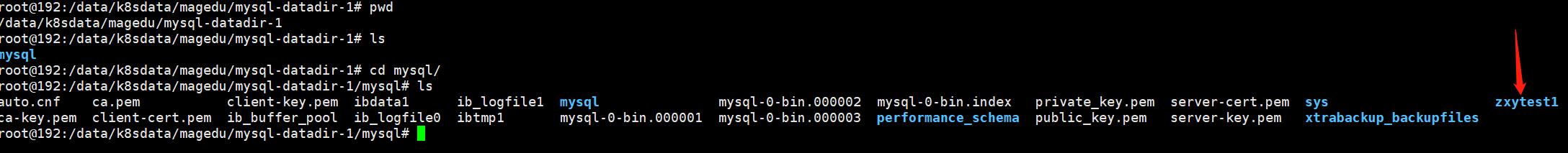

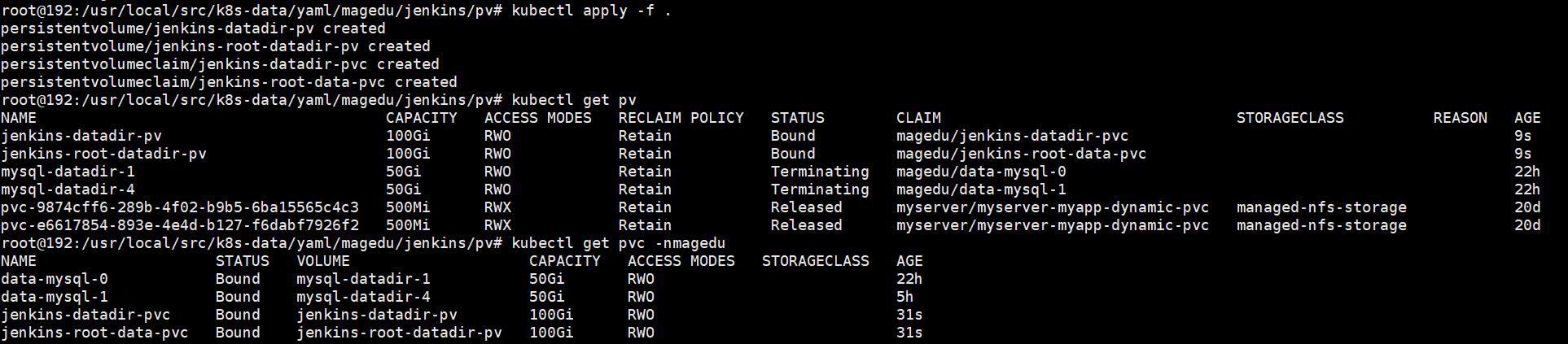

Check the PVs: mysql-datadir-1 and mysql-datadir-4 are bound.

The data files are visible in the corresponding directories on the storage server.

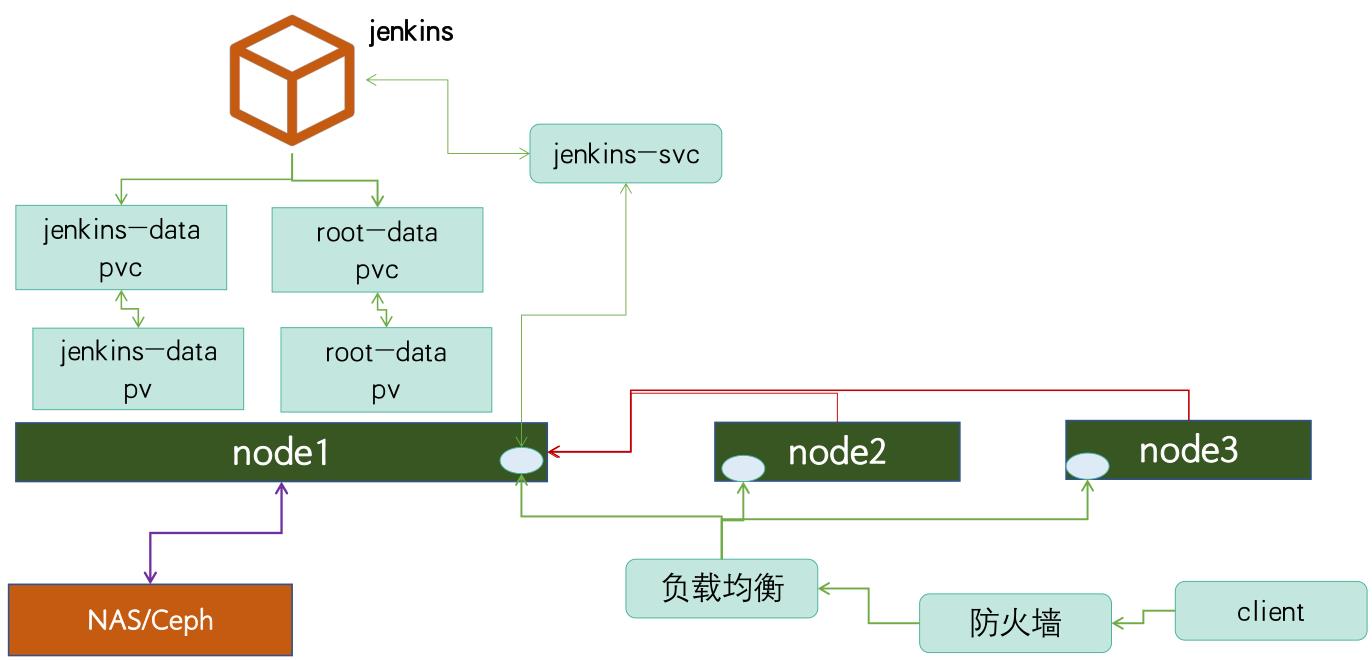

Case 7: Java Application (Jenkins)

Dockerfile

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/jenkins# cat Dockerfile

#Jenkins Version 2.319.2
FROM harbor.linuxarchitect.io/pub-images/jdk-base:v8.212
MAINTAINER zhangshijie zhangshijie@magedu.net
ADD jenkins-2.319.2.war /apps/jenkins/jenkins.war
ADD run_jenkins.sh /usr/bin/
EXPOSE 8080
CMD ["/usr/bin/run_jenkins.sh"]

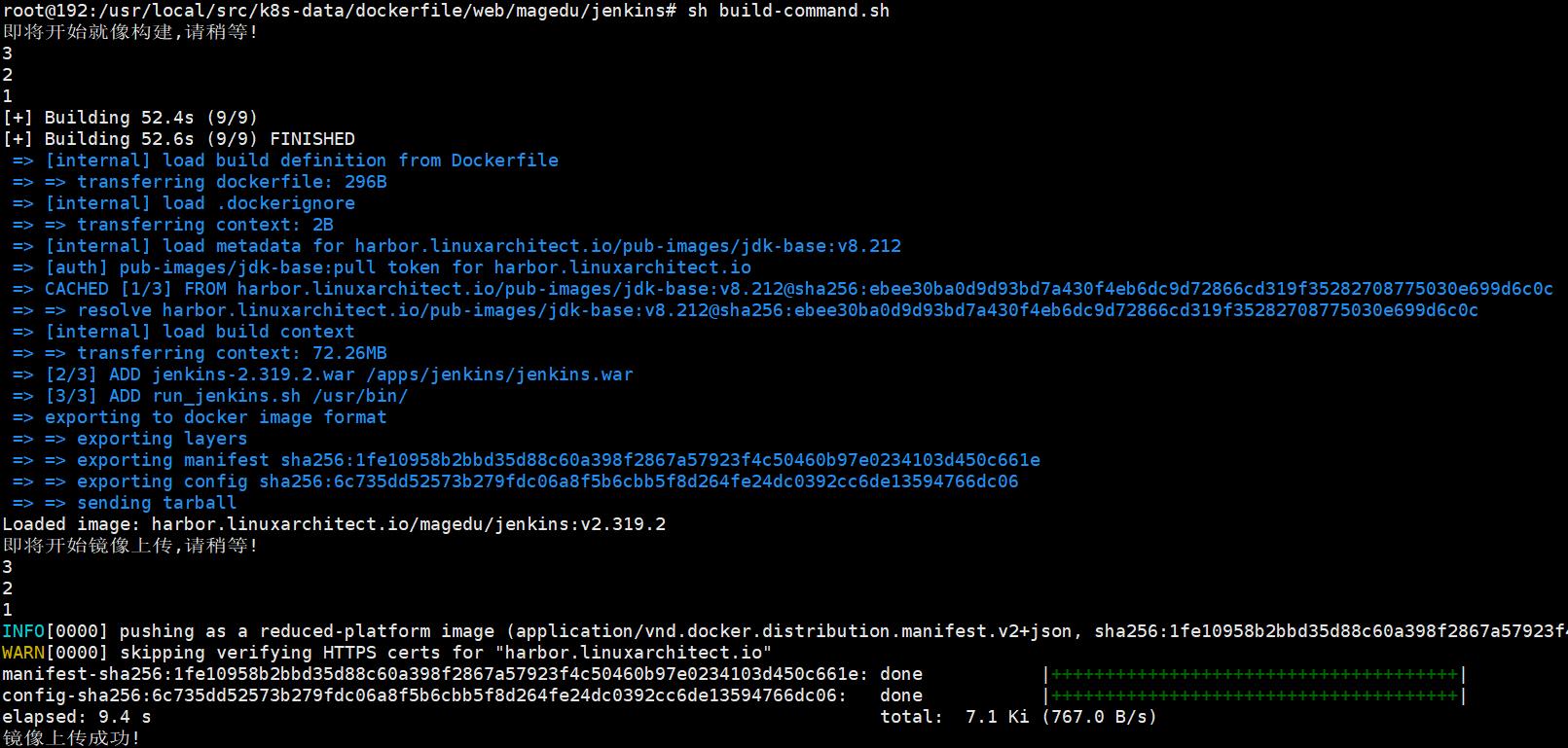

build-command.sh

root@192:/usr/local/src/k8s-data/dockerfile/web/magedu/jenkins# cat build-command.sh

#!/bin/bash
#docker build -t harbor.linuxarchitect.io/magedu/jenkins:v2.319.2 .
#echo "Image built, uploading to the Harbor server"
#sleep 1
#docker push harbor.linuxarchitect.io/magedu/jenkins:v2.319.2
#echo "Image uploaded"
echo "Starting the image build, please wait..." && echo 3 && sleep 1 && echo 2 && sleep 1 && echo 1
nerdctl build -t harbor.linuxarchitect.io/magedu/jenkins:v2.319.2 .
if [ $? -eq 0 ];then
  echo "Starting the image upload, please wait..." && echo 3 && sleep 1 && echo 2 && sleep 1 && echo 1
  nerdctl push harbor.linuxarchitect.io/magedu/jenkins:v2.319.2
  if [ $? -eq 0 ];then
    echo "Image uploaded successfully!"
  else
    echo "Image upload failed"
  fi
else
  echo "Image build failed, please check the build output!"
fi

Build the image

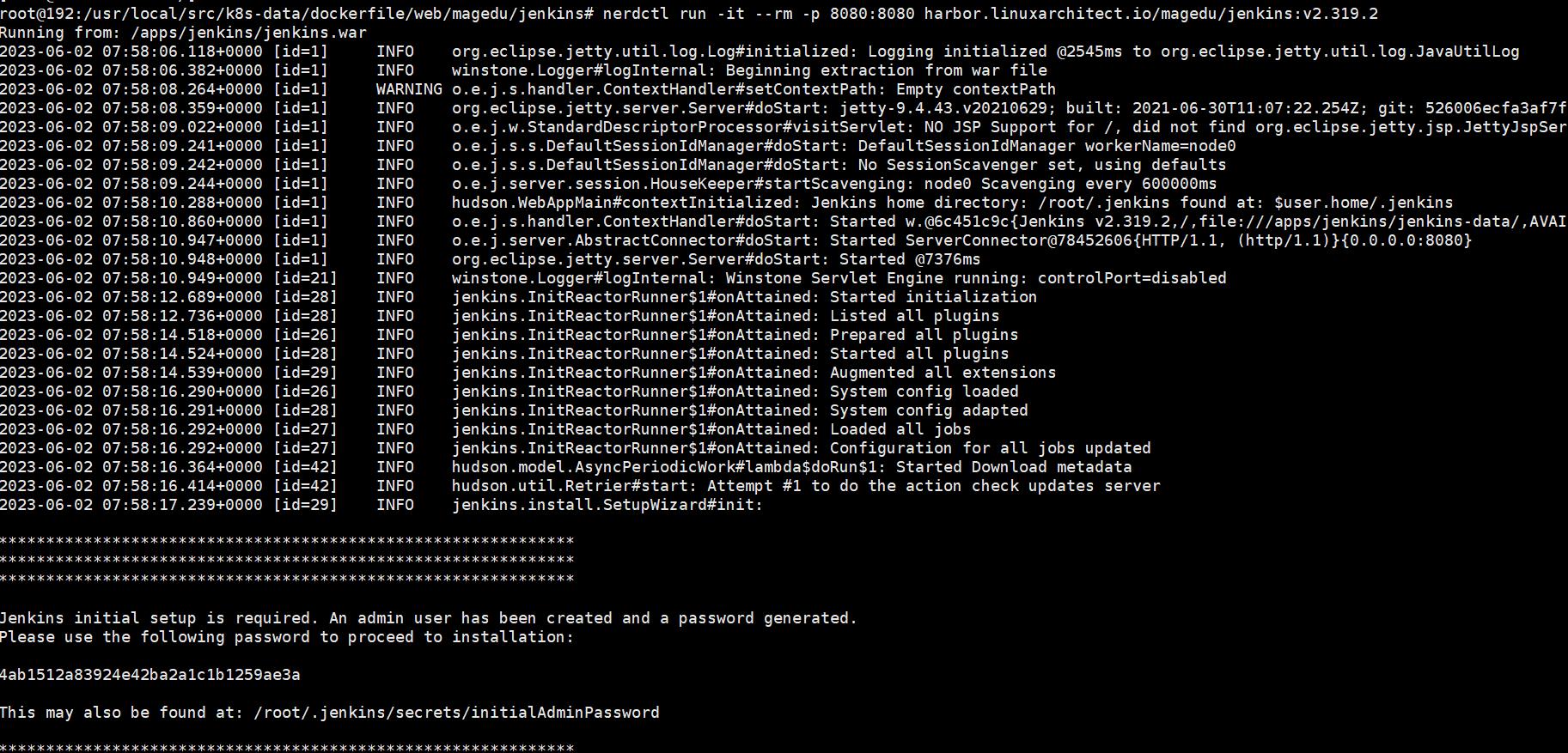

Create a container to test it

jenkins.yaml

root@192:/usr/local/src/k8s-data/yaml/magedu/jenkins# cat jenkins.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-jenkins
  name: magedu-jenkins-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-jenkins
  template:
    metadata:
      labels:
        app: magedu-jenkins
    spec:
      containers:
      - name: magedu-jenkins-container
        image: harbor.linuxarchitect.io/magedu/jenkins:v2.319.2
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/apps/jenkins/jenkins-data/"
          name: jenkins-datadir-magedu
        - mountPath: "/root/.jenkins"
          name: jenkins-root-datadir
      volumes:
      - name: jenkins-datadir-magedu
        persistentVolumeClaim:
          claimName: jenkins-datadir-pvc
      - name: jenkins-root-datadir
        persistentVolumeClaim:
          claimName: jenkins-root-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-jenkins
  name: magedu-jenkins-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30080
  selector:
    app: magedu-jenkins

PV and PVC manifests

root@192:/usr/local/src/k8s-data/yaml/magedu/jenkins/pv# cat jenkins-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-datadir-pv
  namespace: magedu
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/jenkins-data
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-root-datadir-pv
  namespace: magedu
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.110.184
    path: /data/k8sdata/magedu/jenkins-root-data

root@192:/usr/local/src/k8s-data/yaml/magedu/jenkins/pv# cat jenkins-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-datadir-pvc
  namespace: magedu
spec:
  volumeName: jenkins-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-root-data-pvc
  namespace: magedu
spec:
  volumeName: jenkins-root-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi
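The PVCs above pin themselves to specific PVs through `volumeName`, but the PV must still satisfy the claim: among other checks, its capacity must be at least the requested size (100Gi vs 80Gi here) and it must offer every requested access mode. The sketch below checks only those two conditions, with hypothetical helper names, and parses only the binary suffixes Ki/Mi/Gi/Ti; the real binder also compares storage class and volume mode. Note also that PersistentVolumes are cluster-scoped, so the `namespace: magedu` field in the PV metadata has no effect.

```python
from typing import Iterable

# Binary (power-of-two) suffixes only: a simplification of Kubernetes quantity parsing.
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '80Gi' into bytes."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


def pv_satisfies_pvc(pv_capacity: str, pv_modes: Iterable[str],
                     pvc_request: str, pvc_modes: Iterable[str]) -> bool:
    """True if the PV is large enough and offers every requested access mode."""
    big_enough = to_bytes(pv_capacity) >= to_bytes(pvc_request)
    modes_ok = set(pvc_modes) <= set(pv_modes)
    return big_enough and modes_ok


if __name__ == "__main__":
    # jenkins-datadir-pv (100Gi, RWO) vs jenkins-datadir-pvc (80Gi, RWO)
    print(pv_satisfies_pvc("100Gi", ["ReadWriteOnce"], "80Gi", ["ReadWriteOnce"]))  # True
```

If either check fails, the PVC simply stays `Pending`, which is usually the first thing to look at when a pod using it will not start.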

Create the PVs, PVCs, and the pod.

Log in to test:

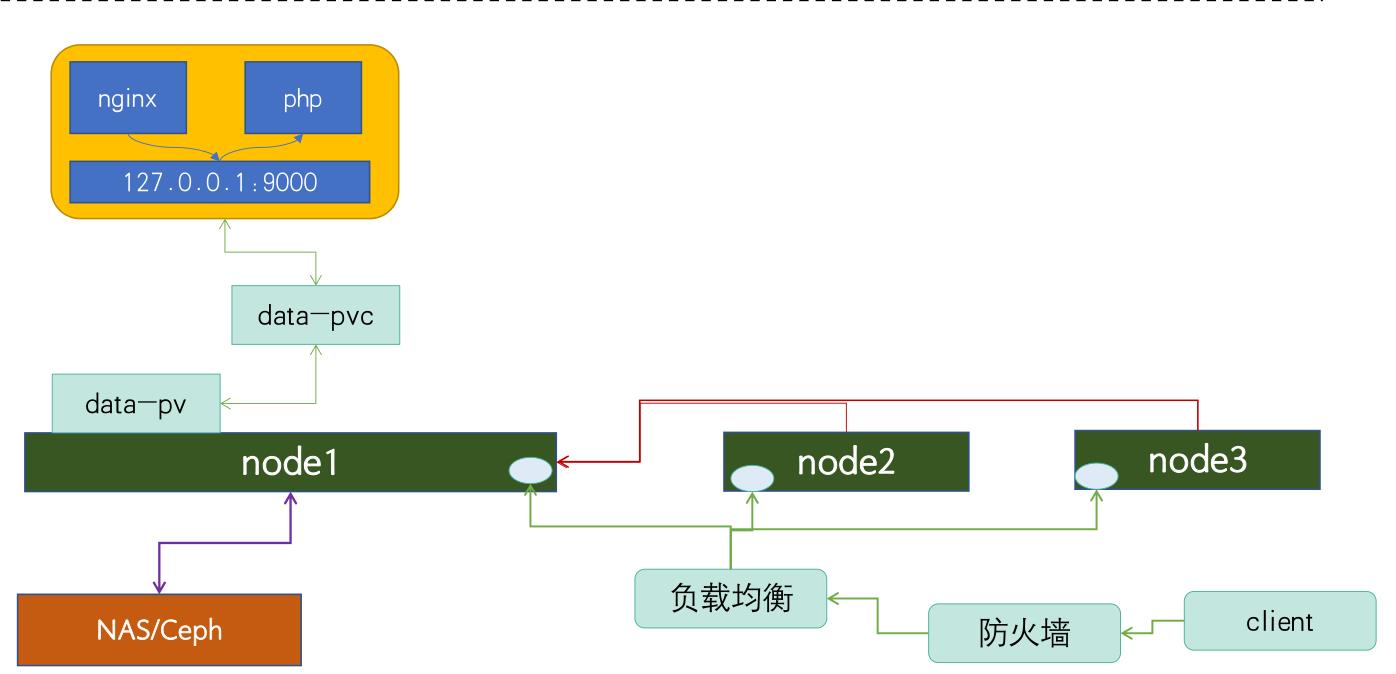

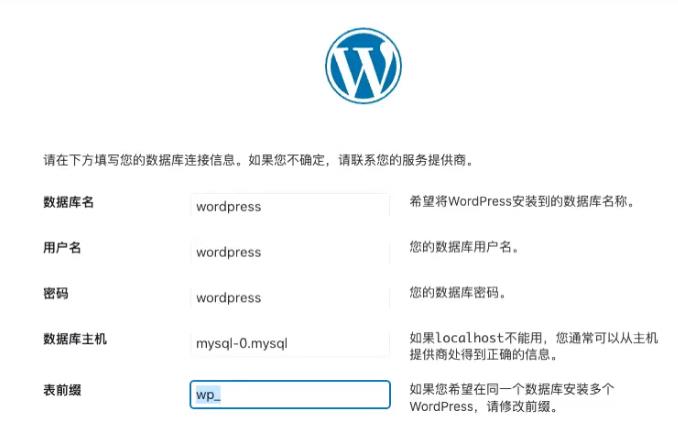

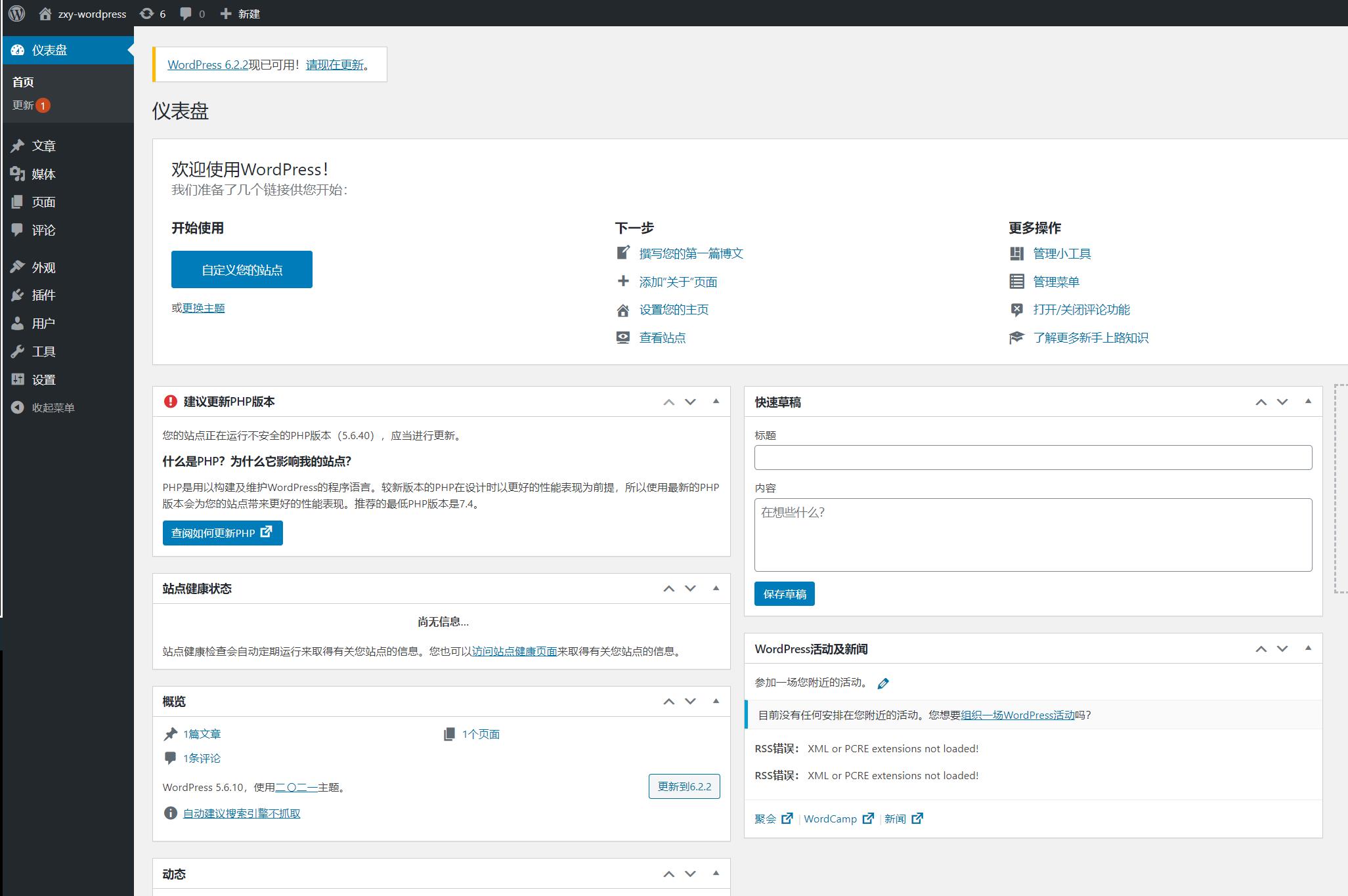

Case 8: WordPress

About WordPress:

WordPress is a blogging platform written in PHP; users can run their own website on any server that supports PHP and a MySQL database. It started as a personal blogging system and gradually evolved into a content management system (CMS). Many free third-party themes are available and installation is simple, but building your own theme takes some expertise: at a minimum, HTML, CSS, and PHP.

Build the images

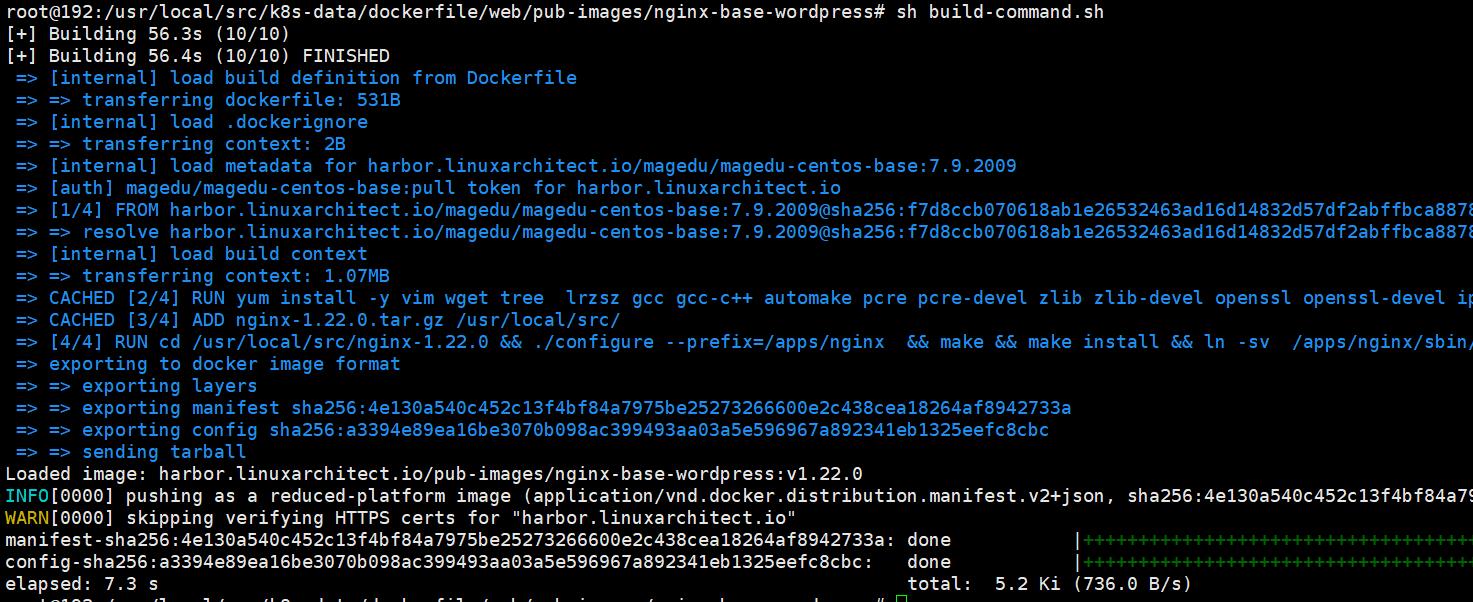

First, build the nginx-base-wordpress image:

Dockerfile

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/nginx-base-wordpress# cat Dockerfile

#Nginx Base Image
FROM harbor.linuxarchitect.io/baseimages/magedu-centos-base:7.9.2009
MAINTAINER zhangshijie@magedu.net
RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop
ADD nginx-1.22.0.tar.gz /usr/local/src/
RUN cd /usr/local/src/nginx-1.22.0 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /apps/nginx/sbin/nginx /usr/sbin/nginx && rm -rf /usr/local/src/nginx-1.22.0.tar.gz

build-command.sh

root@192:/usr/local/src/k8s-data/dockerfile/web/pub-images/nginx-base-wordpress# cat build-command.sh

#!/bin/bash
#docker build -t harbor.linuxarchitect.io/pub-images/nginx-base-wordpress:v1.20.2 .
#sleep 1
#docker push harbor.linuxarchitect.io/pub-images/nginx-base-wordpress:v1.20.2
nerdctl build -t harbor.linuxarchitect.io/pub-images/nginx-base-wordpress:v1.22.0 .
nerdctl push harbor.linuxarchitect.io/pub-images/nginx-base-wordpress:v1.22.0

Build the image

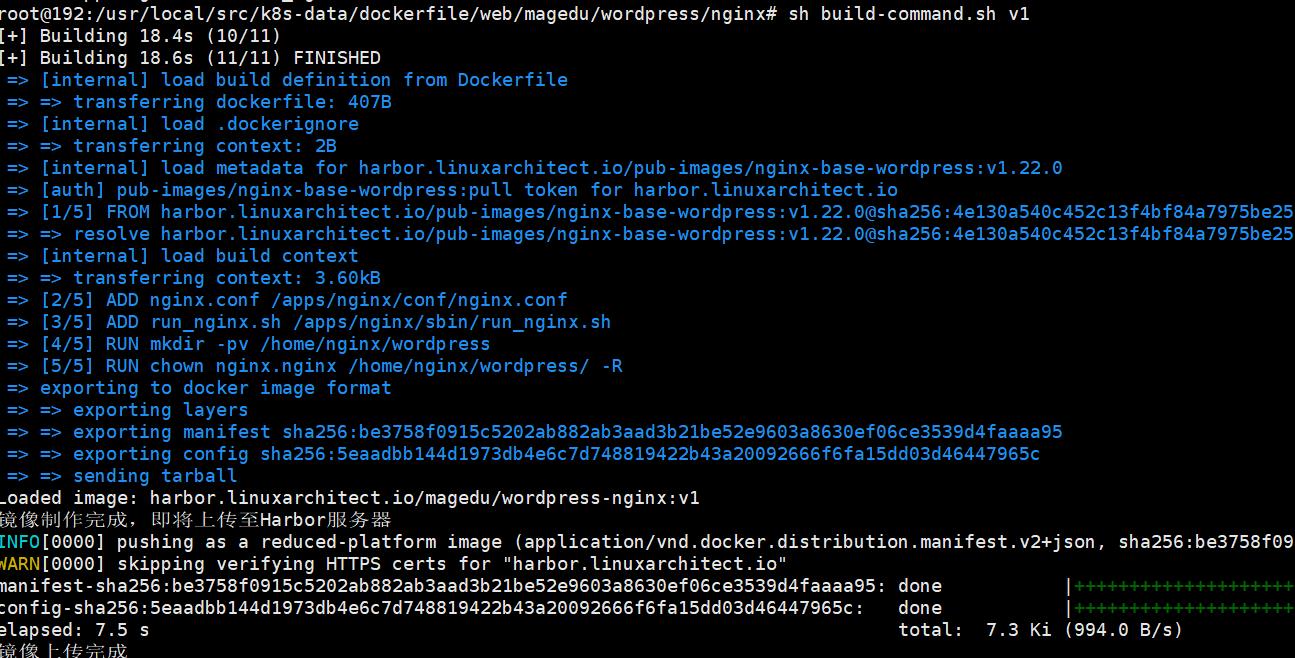

Build the nginx image (code omitted).

Build the PHP image.

wordpress.yaml

root@192:/usr/local/src/k8s-data/yaml/magedu/wordpress# cat wordpress.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-app
  template:
    metadata:
      labels:
        app: wordpress-app
    spec:
      containers:
      - name: wordpress-app-nginx
        image: harbor.linuxarchitect.io/magedu/wordpress-nginx:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        volumeMounts:
        - name: wordpress
          mountPath: /home/nginx/wordpress
          readOnly: false
      - name: wordpress-app-php
        image: harbor.linuxarchitect.io/magedu/wordpress-php-5.6:v1
        #image: harbor.linuxarchitect.io/magedu/php:5.6.40-fpm
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 9000
          protocol: TCP
          name: http
        volumeMounts:
        - name: wordpress
          mountPath: /home/nginx/wordpress
          readOnly: false
      volumes:
      - name: wordpress
        nfs:
          server: 192.168.110.184
          path: /data/k8sdata/magedu/wordpress
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-spec
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30031
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30033
  selector:
    app: wordpress-app

Configure the corresponding IP and ports on the load balancer.

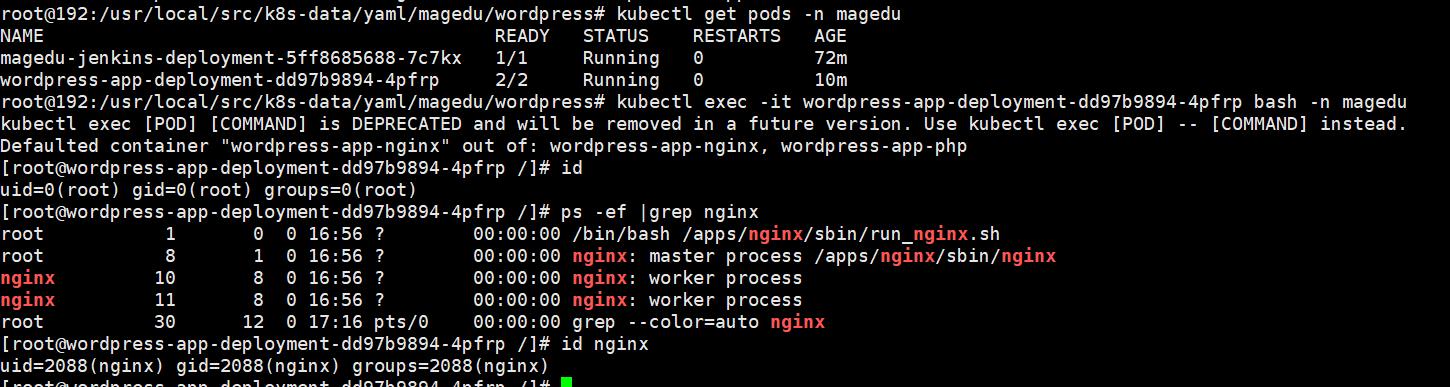

Create the pod, then exec into it and check the UID of the nginx user.

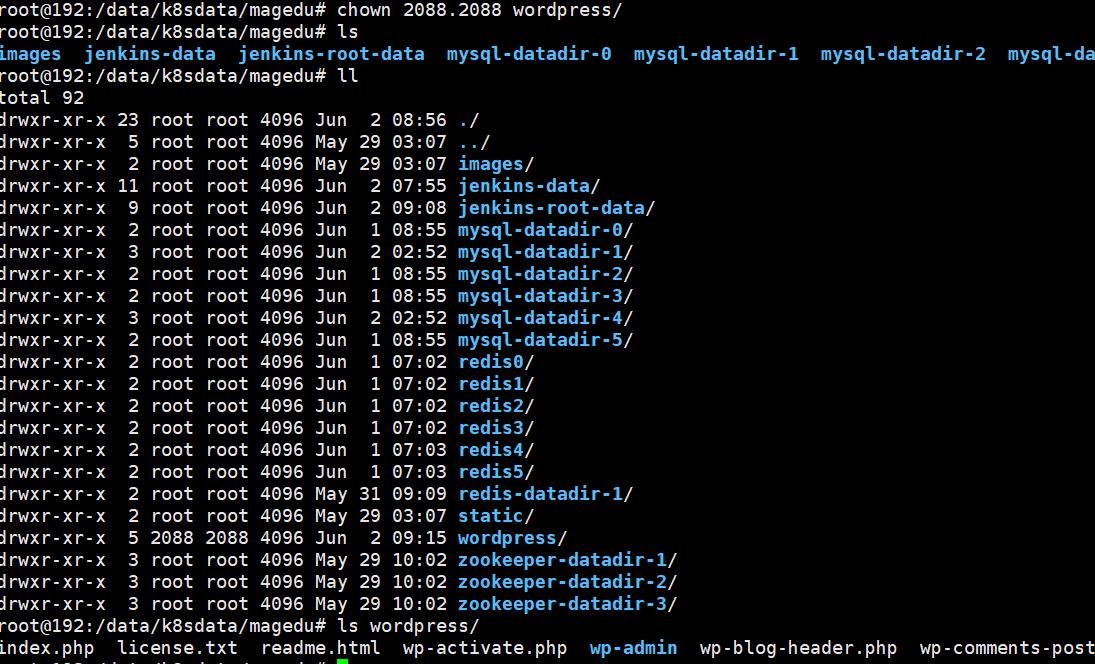

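Copy the WordPress package to the storage server, and change the ownership of the storage directory to the same UID as the nginx user inside the pod.

Exec into the previously created mysql-0 and use it as the WordPress database: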

root@192:/usr/local/src/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-0 -n magedu bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)
root@mysql-0:/# mysql
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 40
Server version: 5.7.36-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database wordpress;
Query OK, 1 row affected (0.01 sec)

mysql> show databases;
+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| wordpress              |
| xtrabackup_backupfiles |
+------------------------+
6 rows in set (0.01 sec)

mysql> GRANT ALL PRIVILEGES ON wordpress.* TO "wordpress"@"%" IDENTIFIED BY "wordpress";
Query OK, 0 rows affected, 1 warning (0.02 sec)

Test the login:

root@mysql-0:/# mysql -uwordpress -hmysql-0.mysql -pwordpress
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 162
Server version: 5.7.36-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

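Log in and start using WordPress.

12. Kong in Practice (a cloud-native microservice gateway based on Nginx and OpenResty): Advanced Case Studies

Chapter 12: Advanced Case Studies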

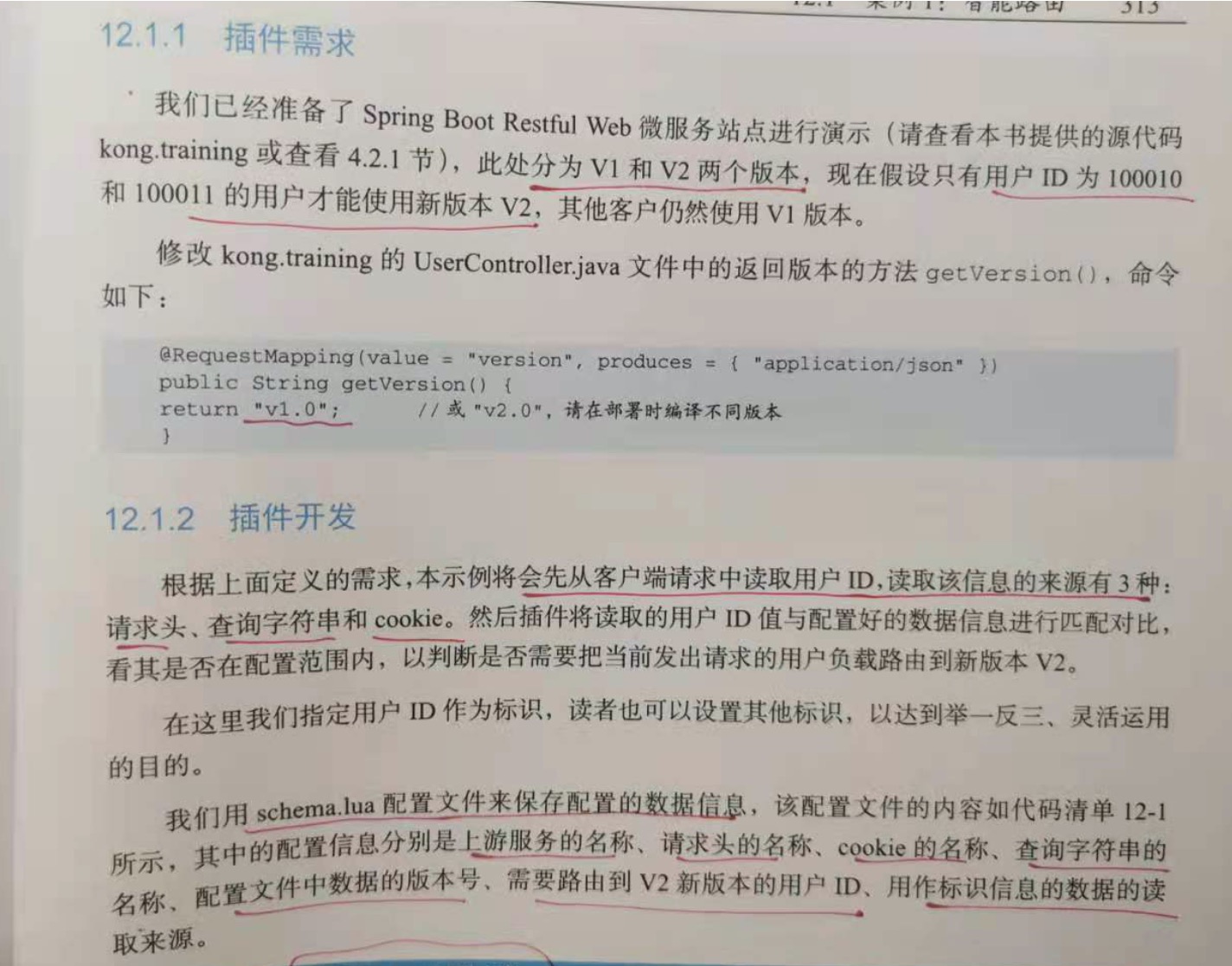

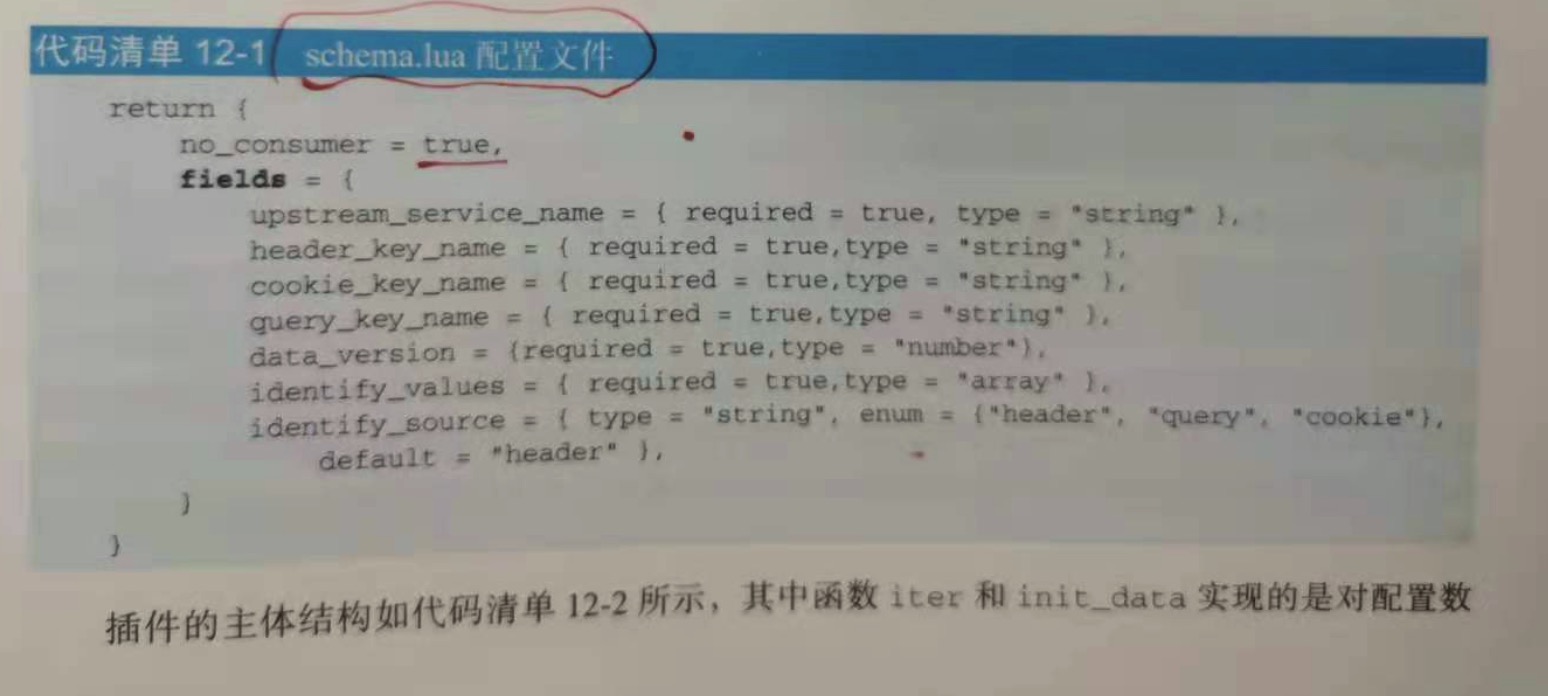

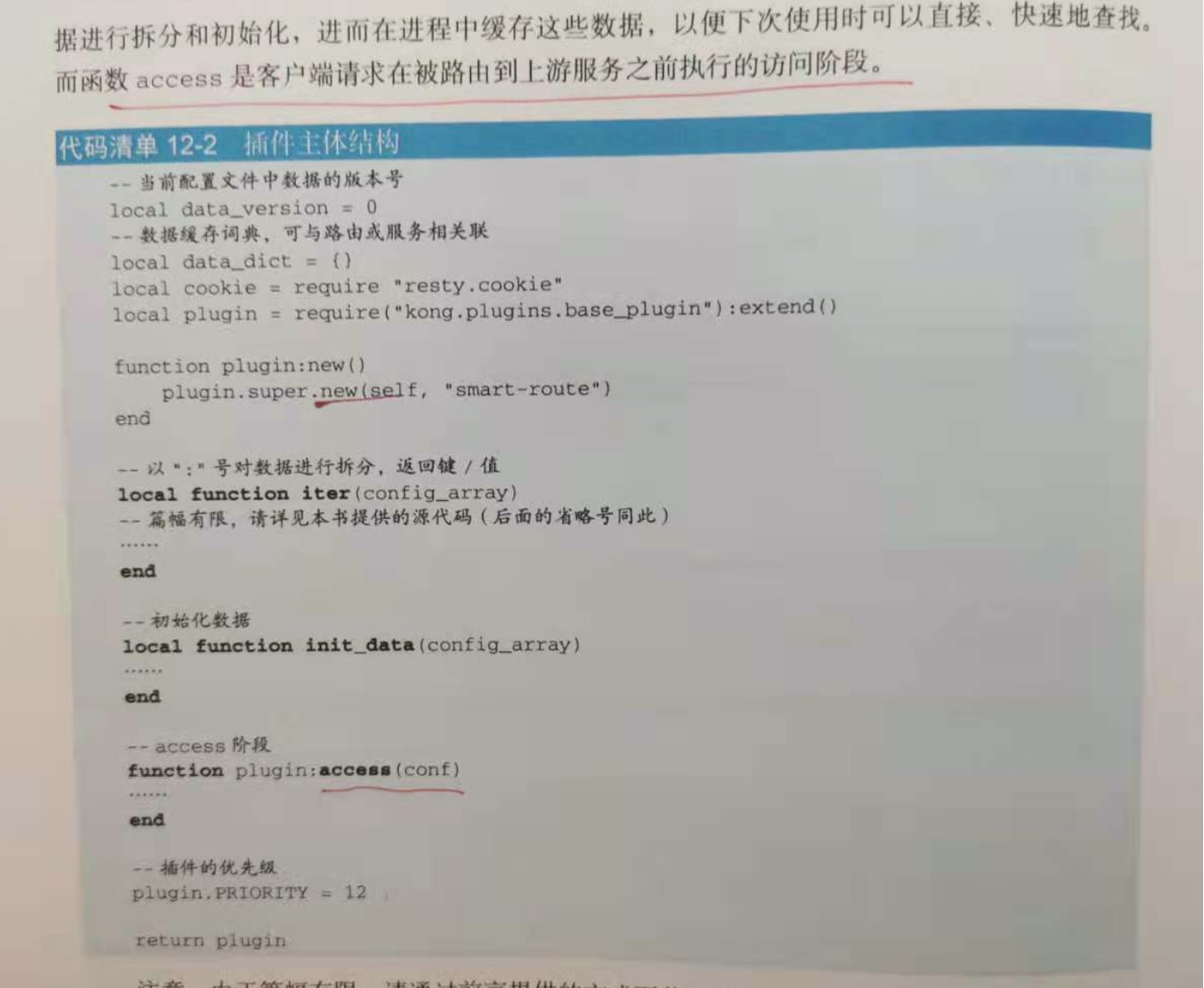

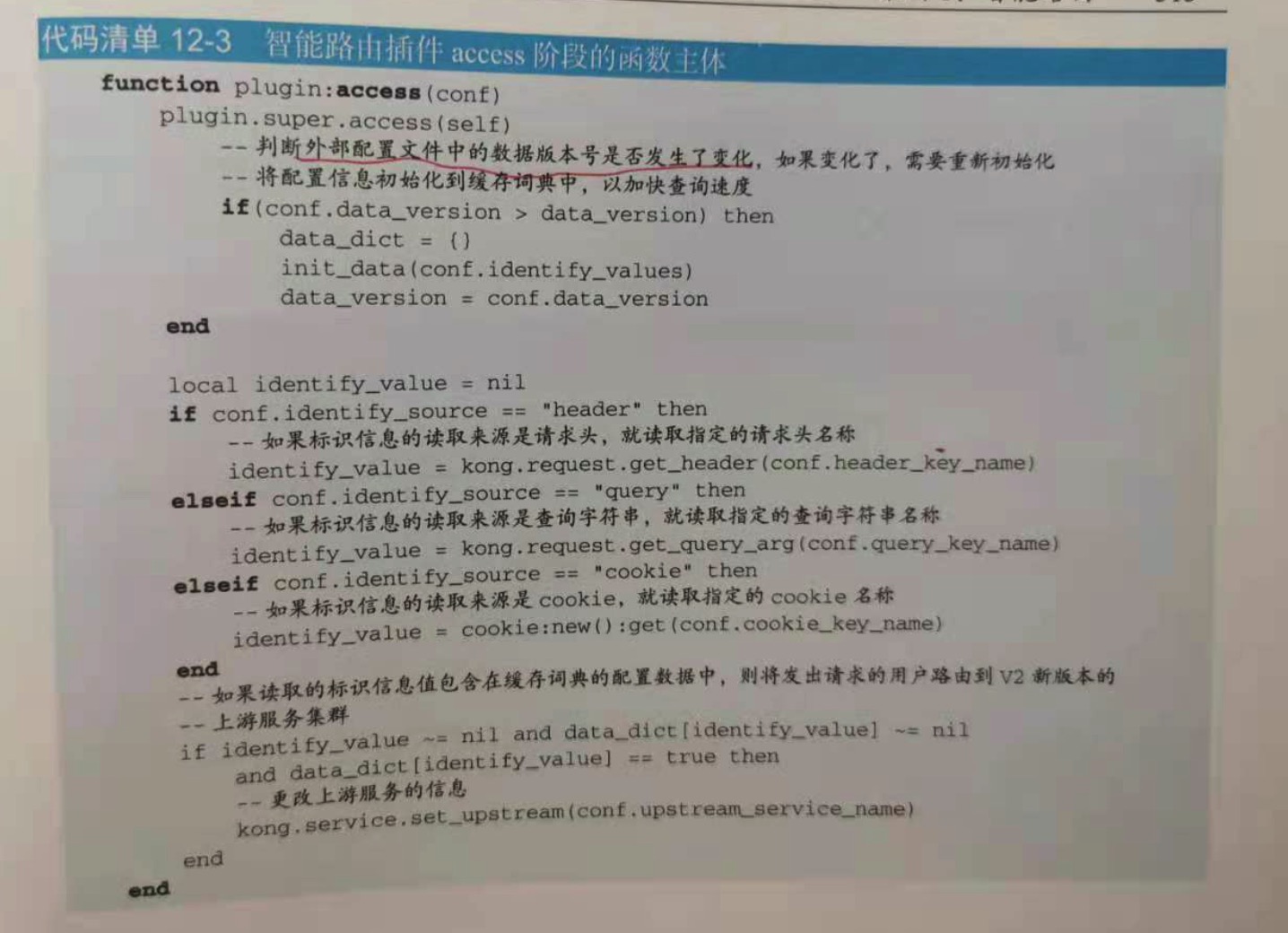

12.1 Case 1: Intelligent Routing

Intelligent routing sends a subset of users to a new version so that the new version can be validated.
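One common way to send only some users to a new version is to hash a stable user identifier into buckets and route a fixed percentage of buckets to the new upstream. The sketch below assumes a user ID is available on every request and uses hypothetical upstream names `service-v1` and `service-v2`; it illustrates the idea and is not the book's Kong plugin, which is only shown in the screenshots.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_upstream(user_id: str, canary_percent: int,
                    stable: str = "service-v1", canary: str = "service-v2") -> str:
    """Route canary_percent% of users to the new version, consistently per user."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary if bucket(user_id) < canary_percent else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    on_canary = sum(choose_upstream(u, 10) == "service-v2" for u in users)
    print(f"{on_canary} of {len(users)} users routed to the new version")
```

Hashing instead of picking randomly per request keeps each user on the same version across requests, so a user does not bounce between old and new behavior while the new version is being validated.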

12.1.1 Plugin Requirements

12.1.2 Plugin Development

12.1.3 Plugin Deployment

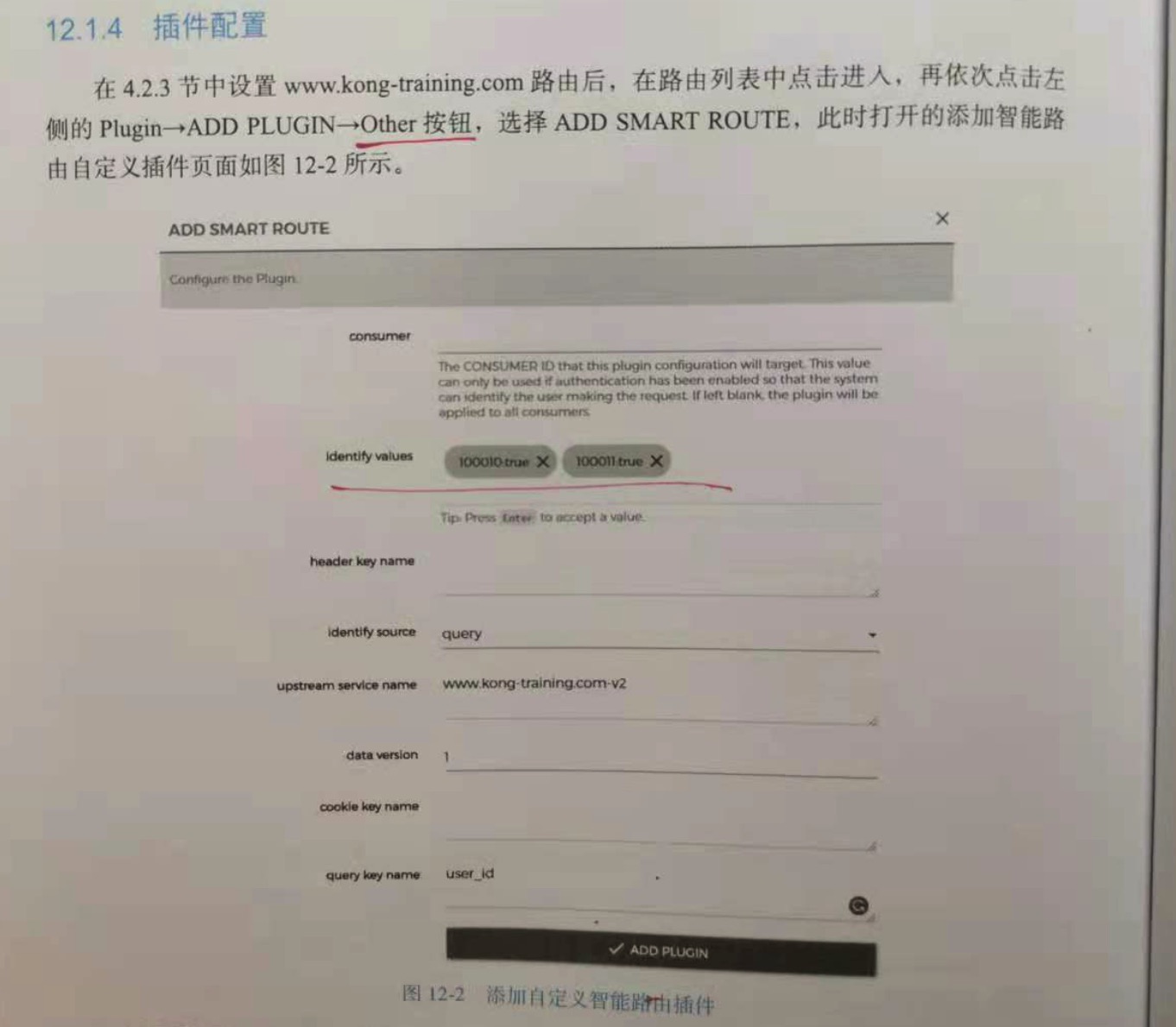

12.1.4 Plugin Configuration

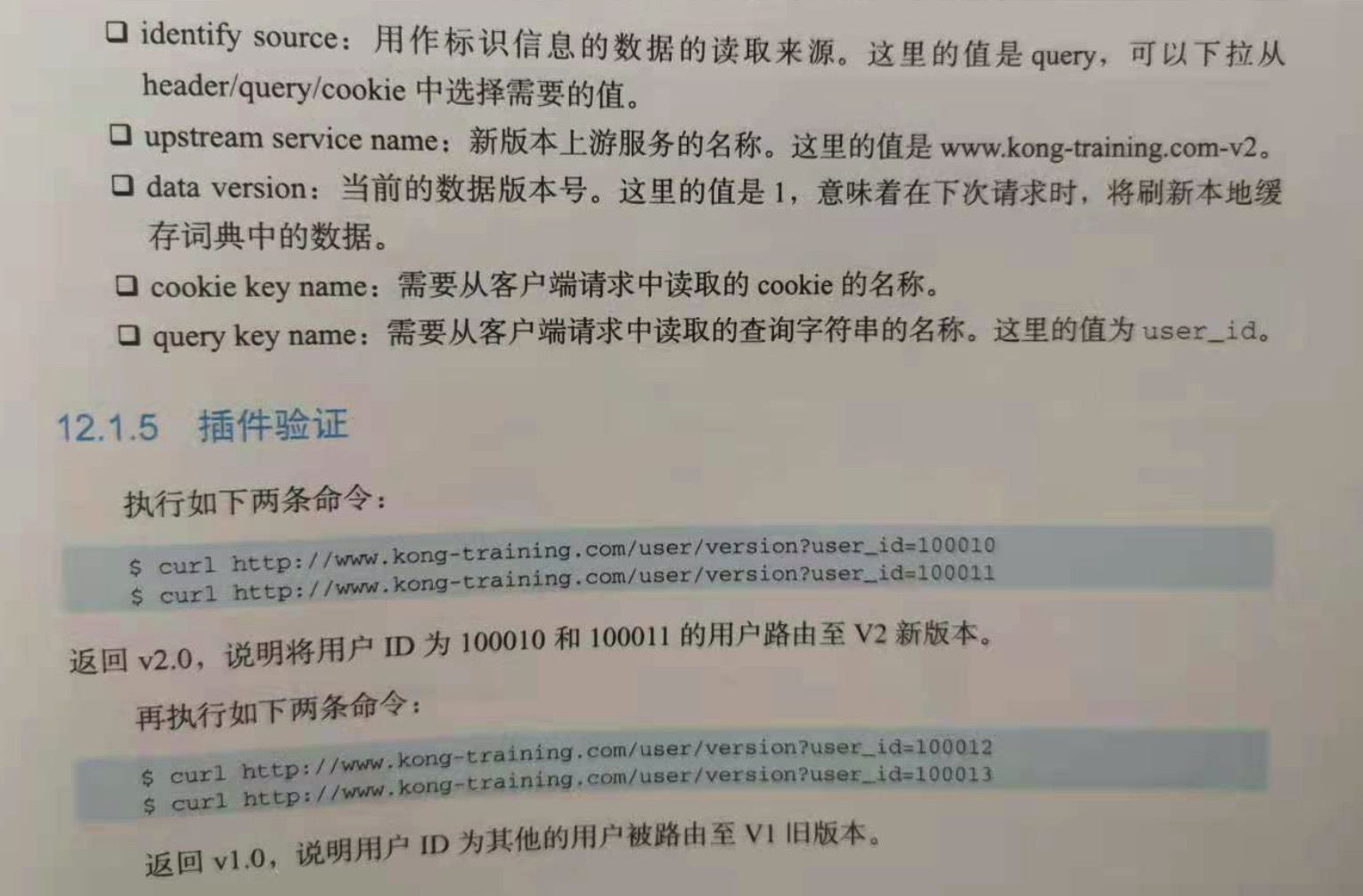

12.1.5 Plugin Verification

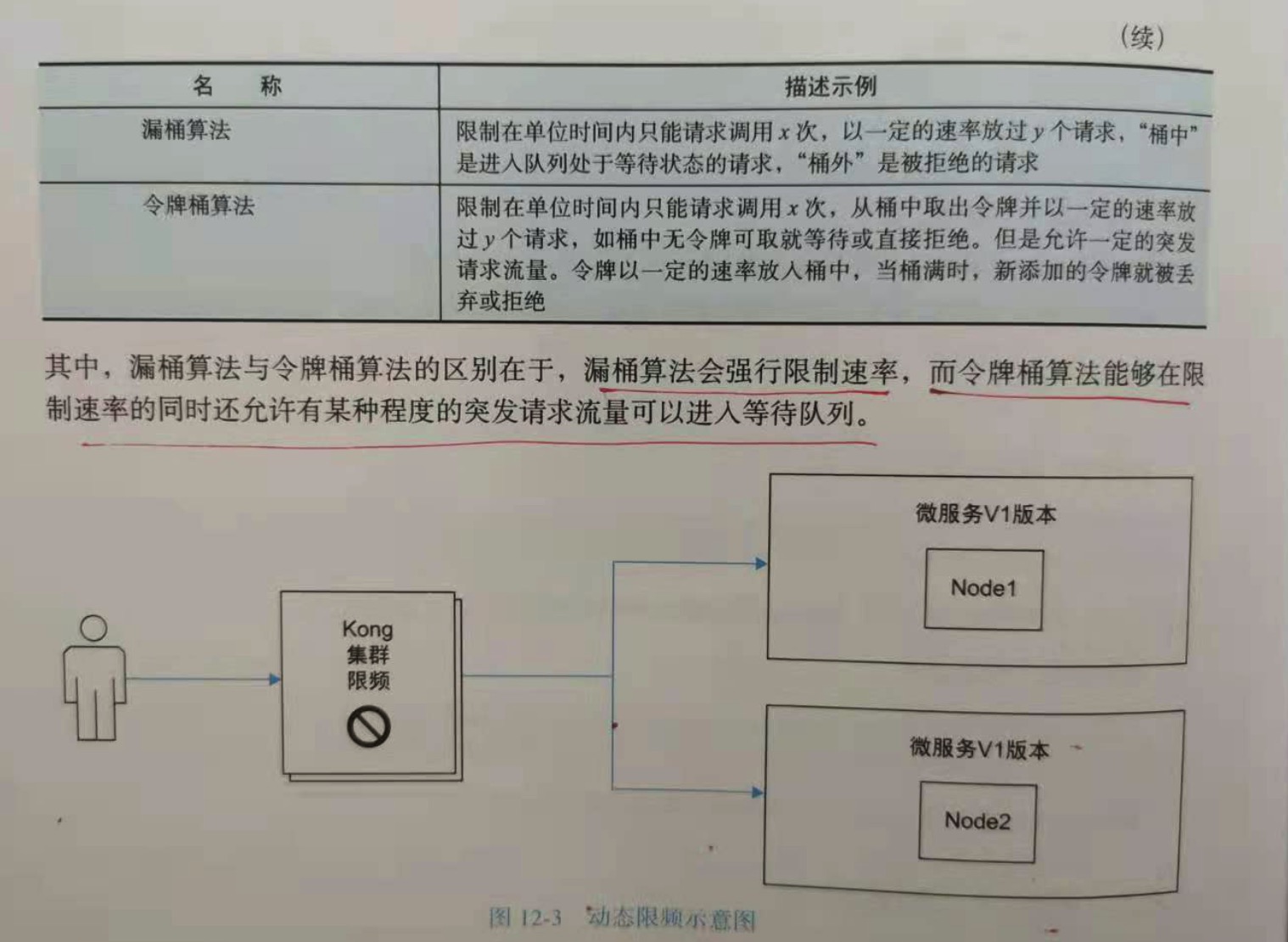

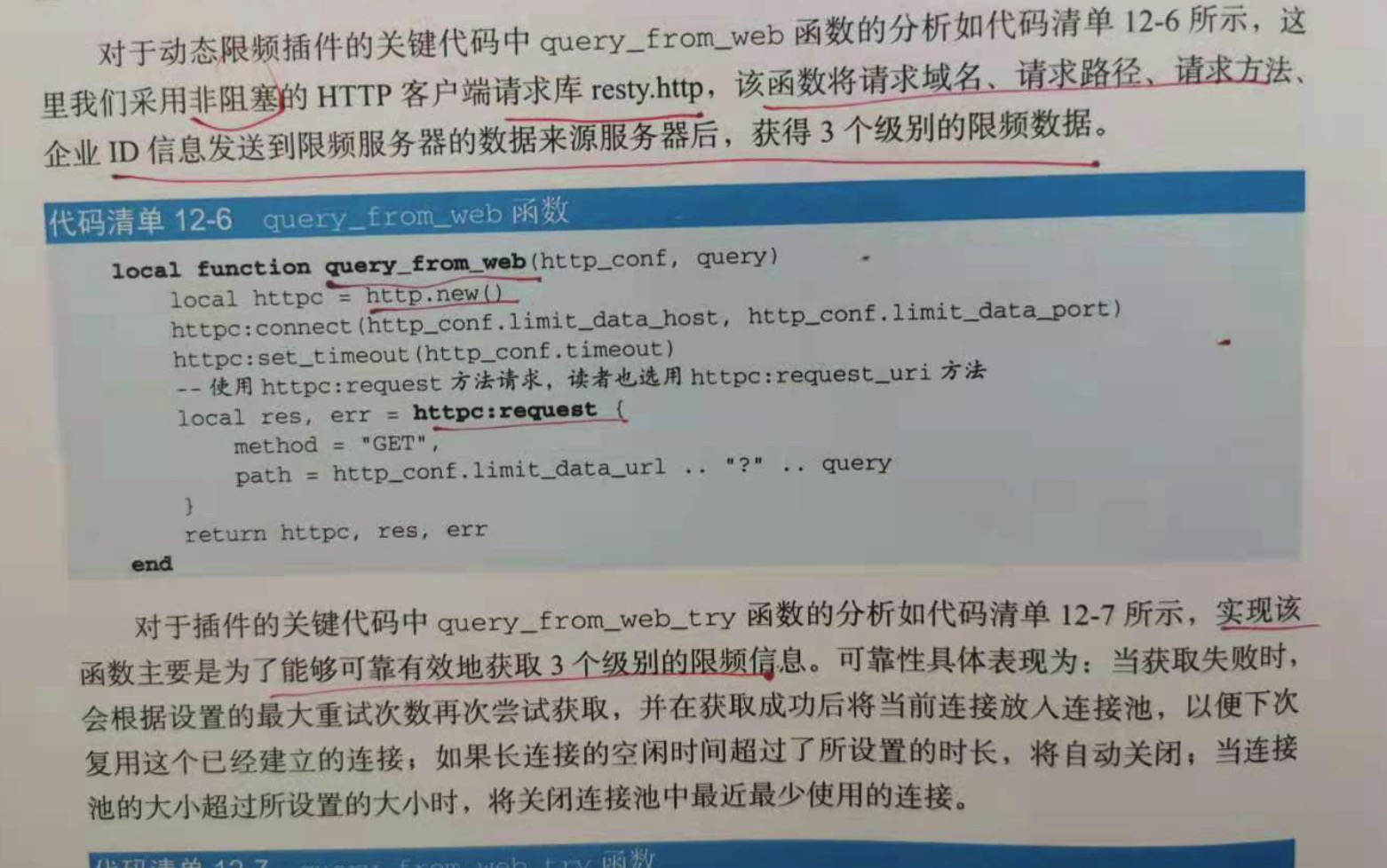

12.2 Case 2: Dynamic Rate Limiting

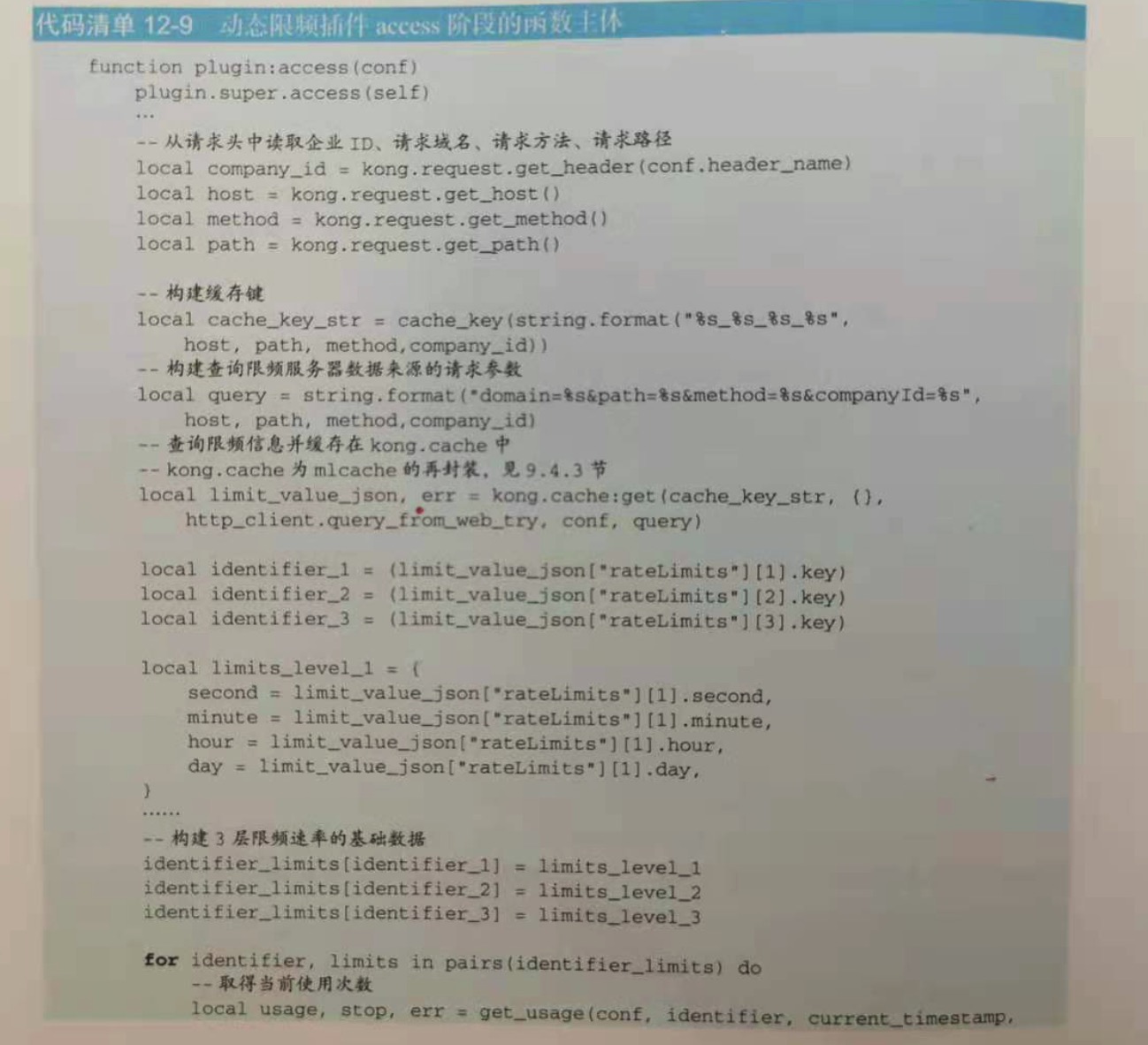

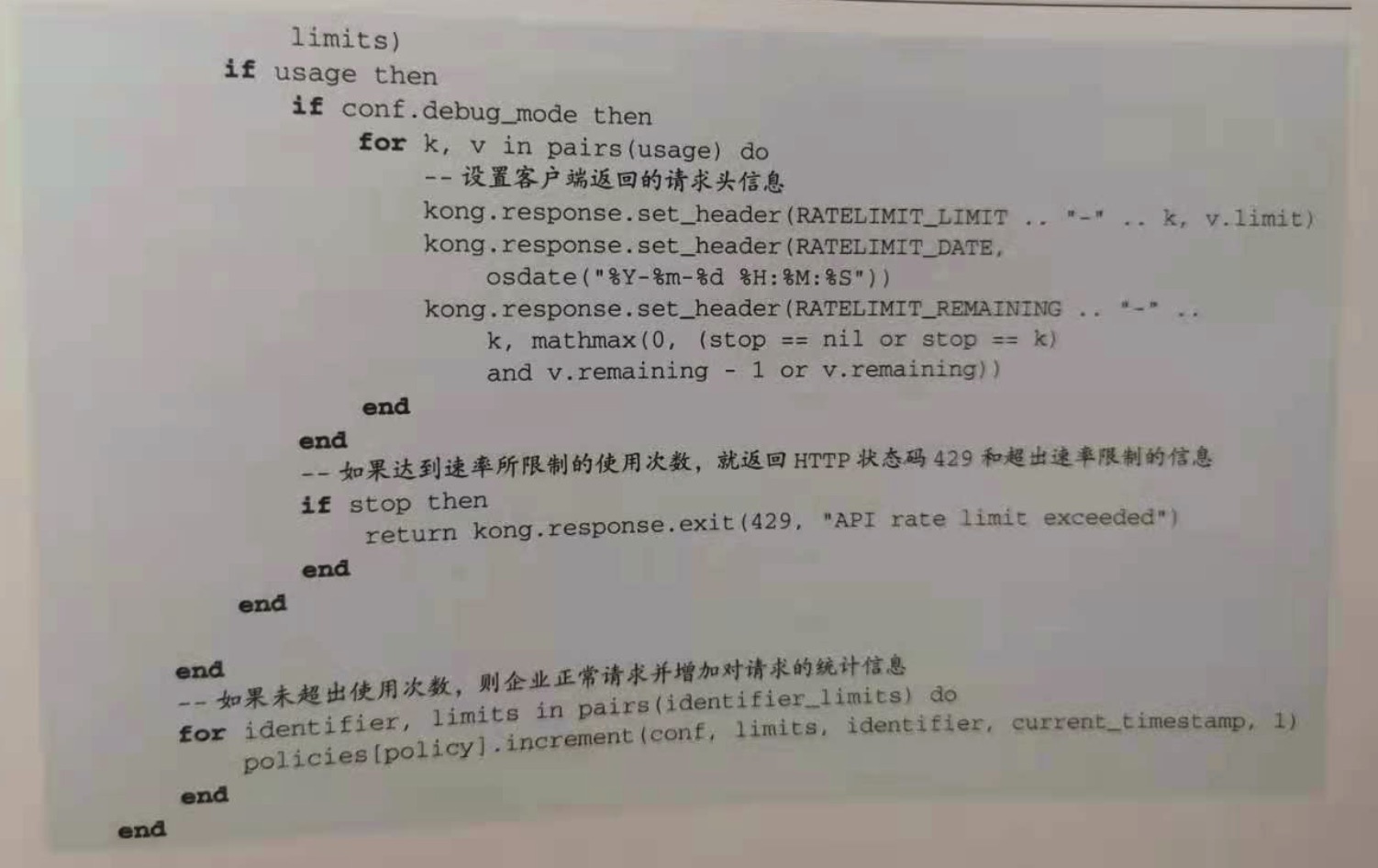

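The dynamic rate-limiting plugin controls the request traffic reaching microservice sites. When a site faces heavy traffic, the plugin applies concurrency control to requests at the gateway, preventing the surge from damaging core backend services and bringing the whole system down. In other words, it caps the system's maximum request rate by adjusting a traffic threshold, which keeps the service available as much as possible.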

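Common rate-limiting approaches:

1. Total concurrent connection limit

2. Fixed time window limit

3. Sliding time window limit

4. Leaky bucket algorithm

5. Token bucket algorithm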
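As an illustration of the last approach in the list, here is a minimal single-process token bucket. The rate and capacity values are illustrative assumptions, and the clock is injectable so the behavior can be tested deterministically; a gateway running on several nodes would need shared state (for example in Redis), which this sketch does not attempt.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity      # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill tokens for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    now = [0.0]
    limiter = TokenBucket(rate=2, capacity=2, clock=lambda: now[0])
    print([limiter.allow() for _ in range(3)])  # [True, True, False]
    now[0] = 0.5                                # half a second later: one token refilled
    print(limiter.allow())                      # True
```

Unlike a leaky bucket, which drains at a constant rate, the token bucket lets a short burst through (up to `capacity`) as long as the long-run average stays under `rate`.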

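Rate limiting can also be applied along multiple dimensions:

1. Limit by the requested target URL

2. Limit by client IP

3. Limit by specific user or enterprise

4. Combine several dimensions for fine-grained limits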
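Multi-dimensional limiting mostly comes down to which key the counter is kept under. The sketch below uses a fixed time window (approach 2 in the earlier list) and builds the key from whichever dimensions are configured, such as URL, client IP, or a user or tenant ID. The dimension names, limits, and in-memory dict are assumptions for illustration; old windows are never evicted here.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple


class FixedWindowLimiter:
    """Count requests per (dimension values, window) key and reject above `limit`."""

    def __init__(self, limit: int, window_seconds: int, dimensions: Sequence[str]) -> None:
        self.limit = limit
        self.window = window_seconds
        self.dimensions = tuple(dimensions)       # e.g. ("ip",) or ("user", "url")
        self.counts: Dict[Tuple, int] = defaultdict(int)

    def allow(self, request: Dict[str, str], now: float) -> bool:
        # Only the configured dimensions form the key: ("ip", "url") limits each
        # client per URL, while ("user",) limits a user across all URLs.
        values = tuple(request.get(d, "") for d in self.dimensions)
        key = (values, int(now // self.window))
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True


if __name__ == "__main__":
    limiter = FixedWindowLimiter(limit=2, window_seconds=60, dimensions=("ip", "url"))
    req = {"ip": "10.0.0.1", "url": "/api/orders"}
    print([limiter.allow(req, now=t) for t in (0, 1, 2)])  # [True, True, False]
```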

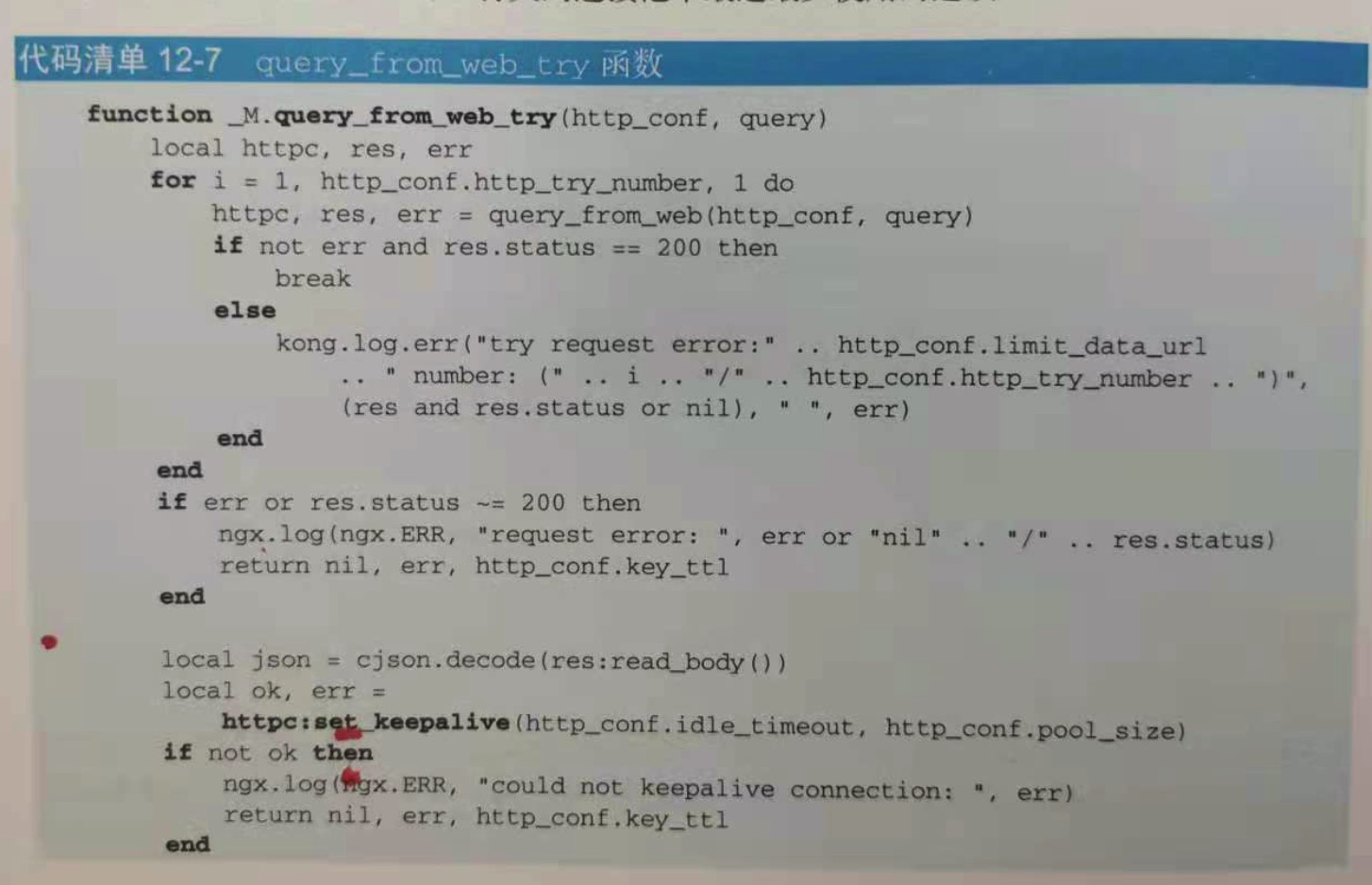

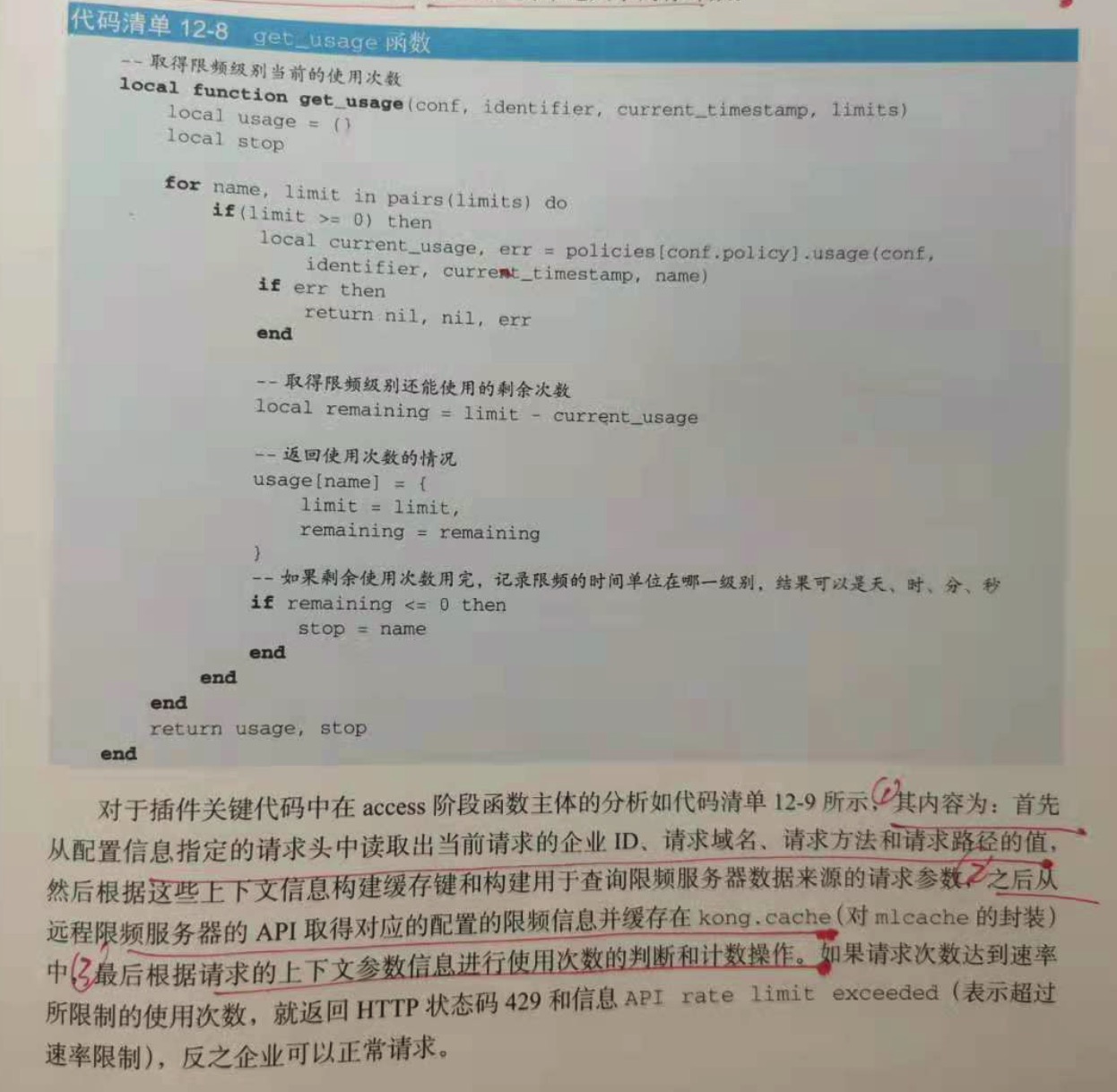

12.2.1 Plugin Requirements

12.2.2 Plugin Development

12.2.3 Plugin Deployment

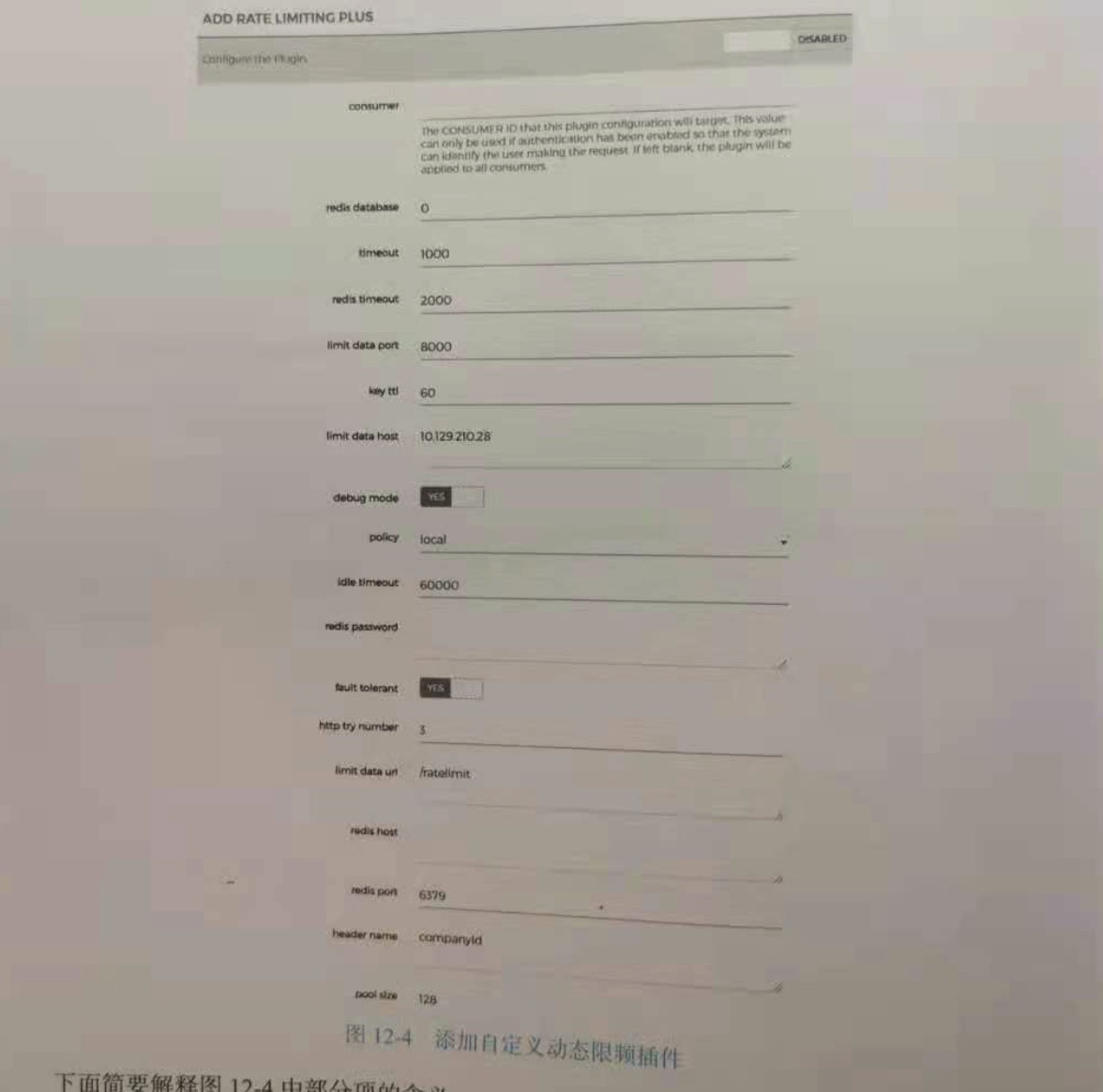

12.2.4 Plugin Configuration

12.2.5 Plugin Verification

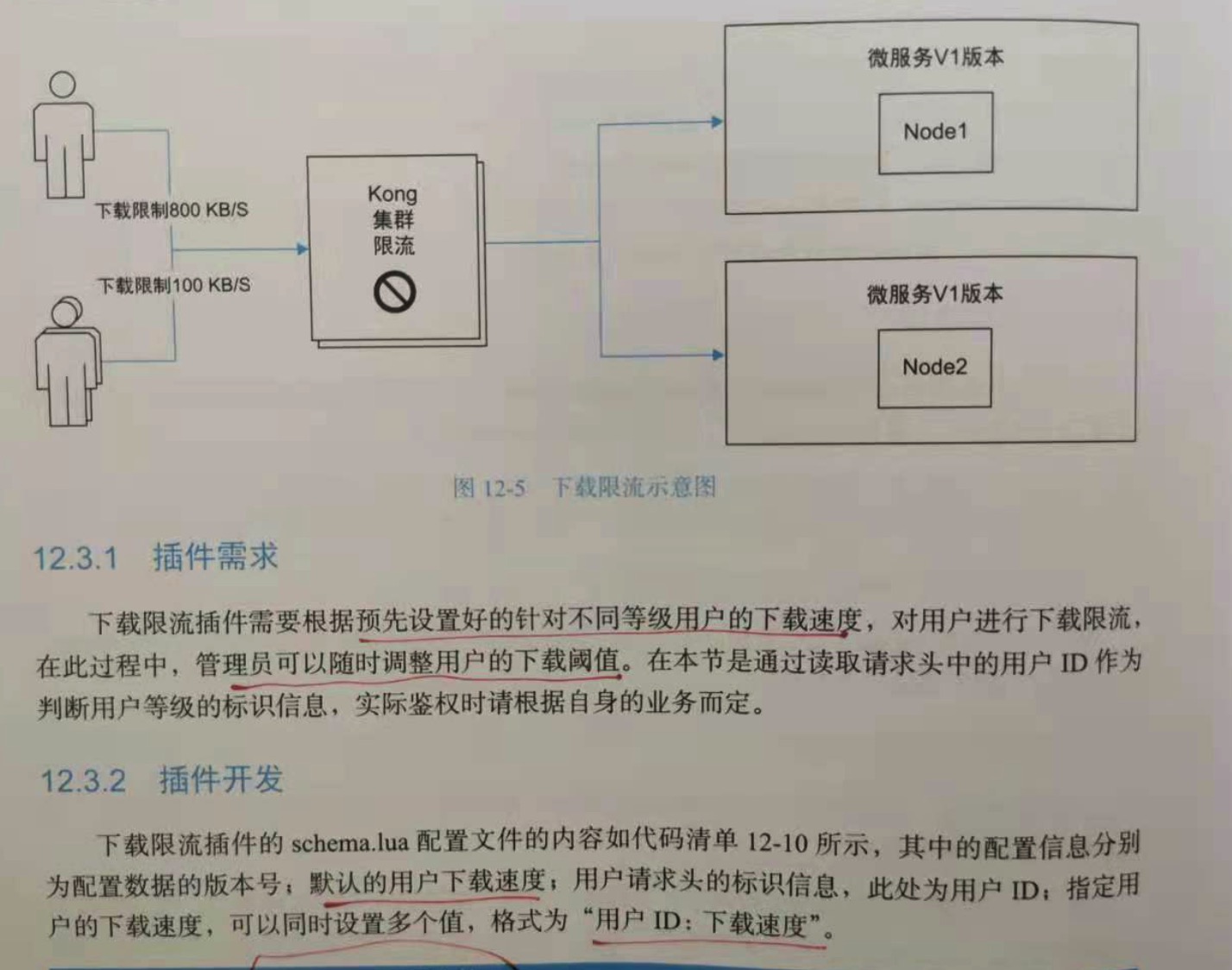

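12.3 Case 3: Download Throttling

How does a platform throttle downloads? Usually the gateway enforces the limit globally, with a different download speed configured for each user tier.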
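Per-tier download throttling can be sketched as sending the response in chunks and pausing after each one so the average throughput stays under that tier's cap. The tier names and byte rates below are invented for the example, the pacing ignores the time spent actually sending, and `sleep` is injectable so the schedule can be checked without waiting.

```python
import time
from typing import Callable, Iterable, Iterator

# Hypothetical per-tier limits in bytes per second.
TIER_RATES = {"free": 100 * 1024, "vip": 1024 * 1024}


def throttled(chunks: Iterable[bytes], rate: float,
              sleep: Callable[[float], None] = time.sleep) -> Iterator[bytes]:
    """Yield chunks, sleeping after each so throughput does not exceed `rate` bytes/s."""
    for chunk in chunks:
        yield chunk
        sleep(len(chunk) / rate)  # time this chunk "should" take at the capped rate


def serve_download(data: bytes, tier: str, chunk_size: int = 16 * 1024,
                   sleep: Callable[[float], None] = time.sleep) -> Iterator[bytes]:
    rate = TIER_RATES.get(tier, TIER_RATES["free"])  # unknown tiers get the lowest speed
    chunks = (data[i:i + chunk_size] for i in range(0, len(data), chunk_size))
    return throttled(chunks, rate, sleep)


if __name__ == "__main__":
    waits = []
    body = b"".join(serve_download(b"x" * 204800, "free", sleep=waits.append))
    print(len(body), round(sum(waits), 2))  # 204800 2.0
```

200 KiB at the "free" tier's 100 KiB/s adds up to two seconds of pauses, while the same file for "vip" would take about a fifth of a second.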

12.3.1 Plugin Requirements

12.3.2 Plugin Development

12.3.3 Plugin Deployment

12.3.4 Plugin Configuration

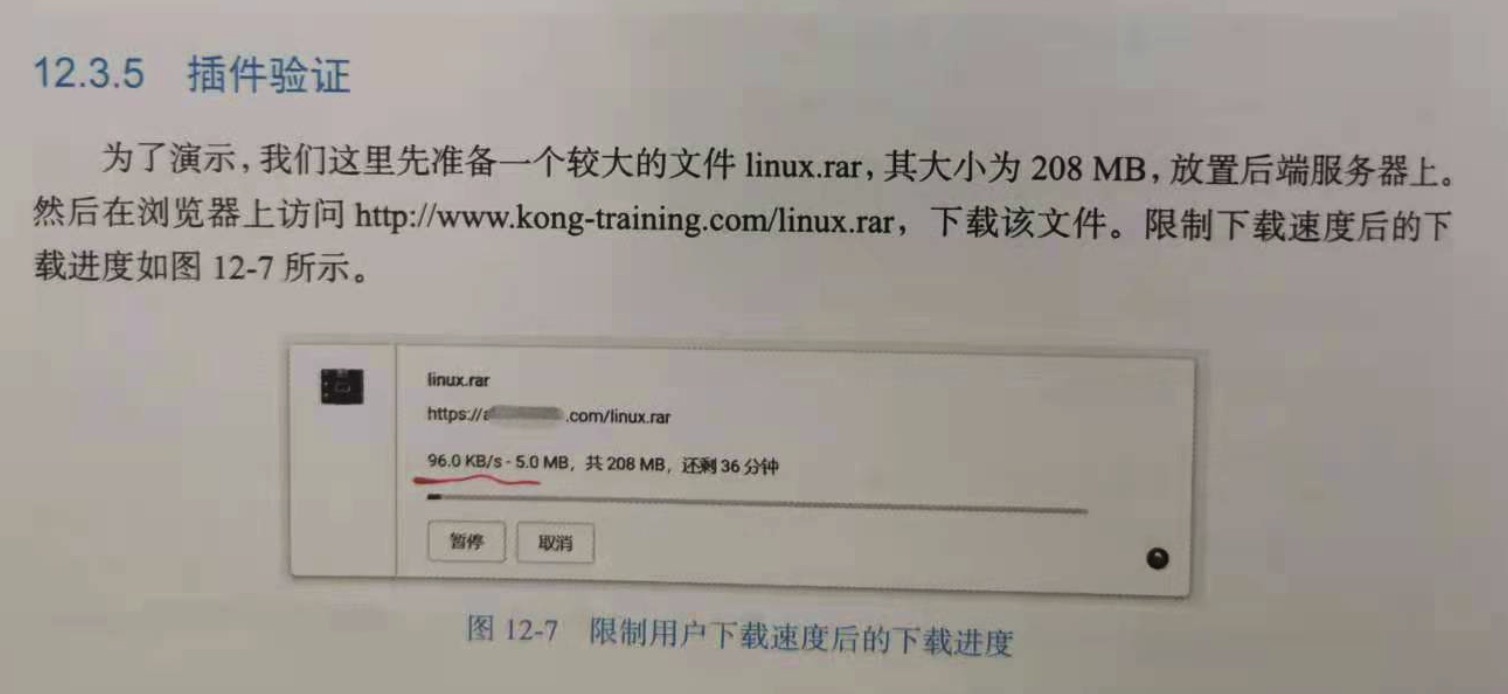

12.3.5 Plugin Verification

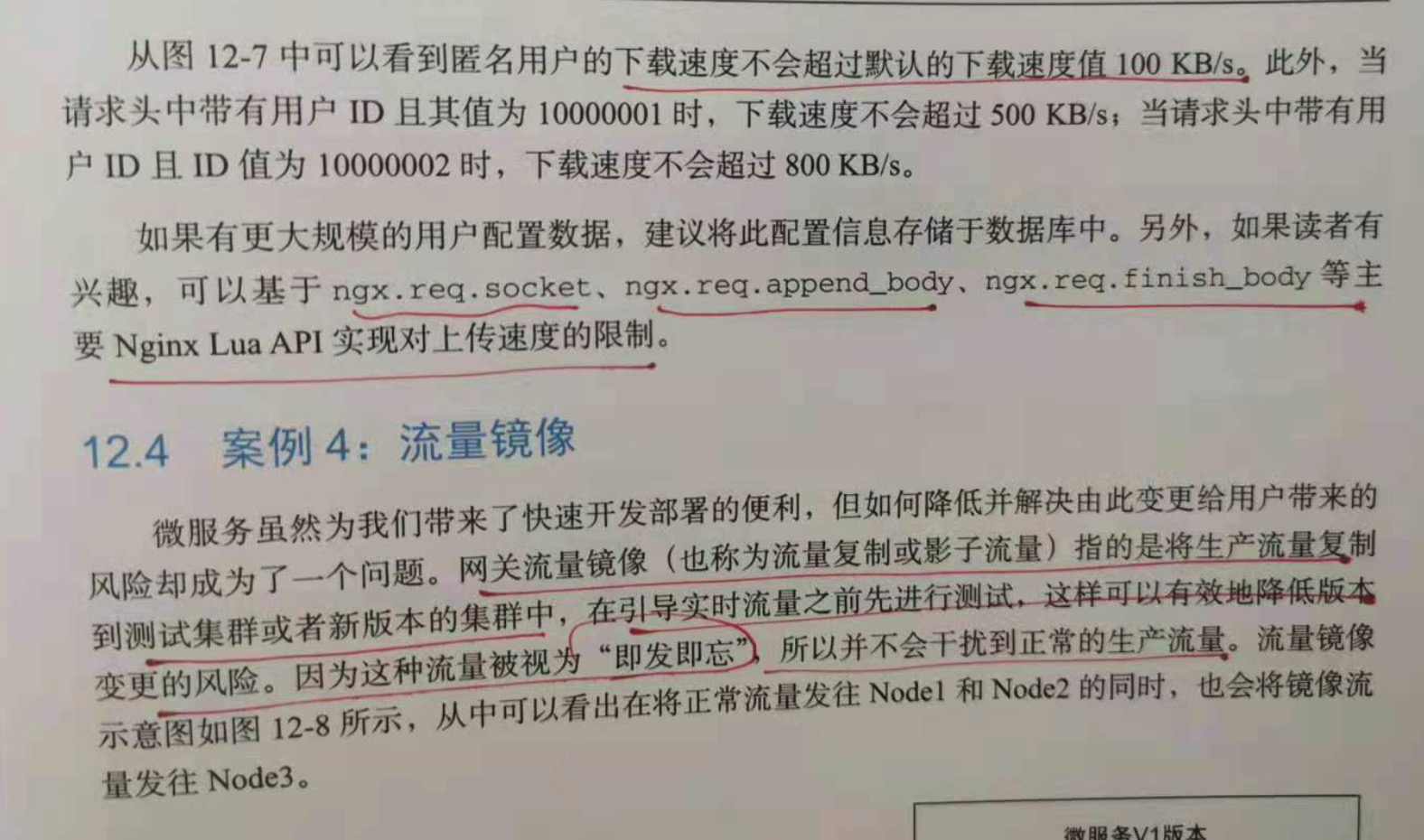

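12.4 Case 4: Traffic Mirroring

Gateway traffic mirroring (also called traffic copying or shadow traffic) copies production traffic to a test cluster or a cluster running a new version, so the new version is tested before live traffic is routed to it; this effectively lowers the risk of version changes. Because mirrored traffic is "fire and forget", it does not interfere with normal production traffic.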
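The "fire and forget" property can be illustrated with a sketch in which the client is answered from the production backend while a copy of the request goes to a background thread pool whose result and errors are discarded. The backend callables are hypothetical stand-ins for HTTP calls to the production and mirror clusters.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

Request = Dict[str, str]
Backend = Callable[[Request], str]

_mirror_pool = ThreadPoolExecutor(max_workers=4)


def _mirror_safely(mirror: Backend, request: Request) -> None:
    try:
        mirror(request)          # the mirror's response is discarded
    except Exception:
        pass                     # mirror failures must never affect production


def handle(request: Request, production: Backend, mirror: Backend) -> str:
    """Serve from production; copy the request to the mirror without waiting for it."""
    _mirror_pool.submit(_mirror_safely, mirror, dict(request))  # send a copy
    return production(request)
```

Copying the request (`dict(request)`) keeps the mirror from seeing any later changes the production path makes, and because `handle` never waits on the future, a slow or failing mirror cluster adds no latency to real users.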

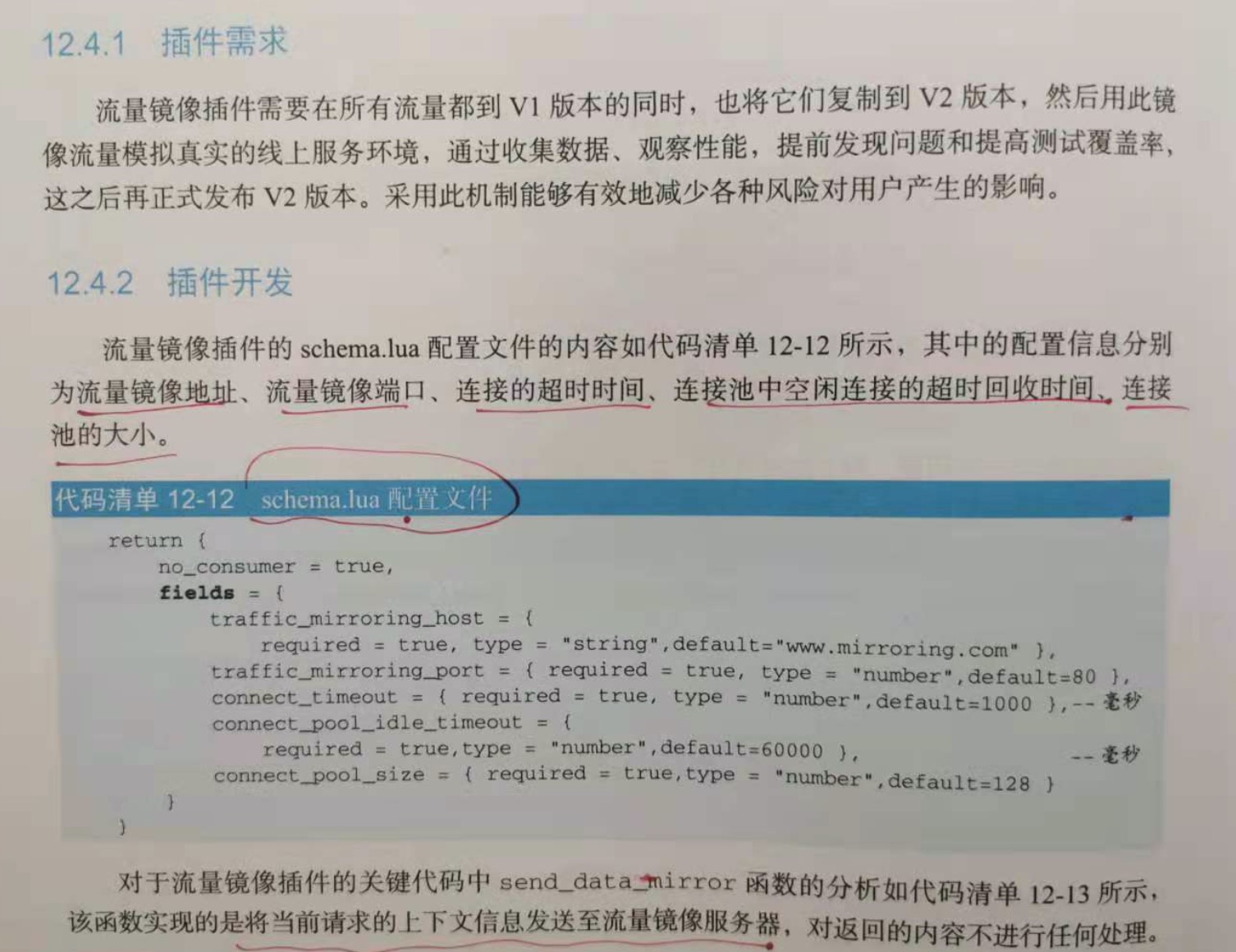

12.4.1 Plugin Requirements

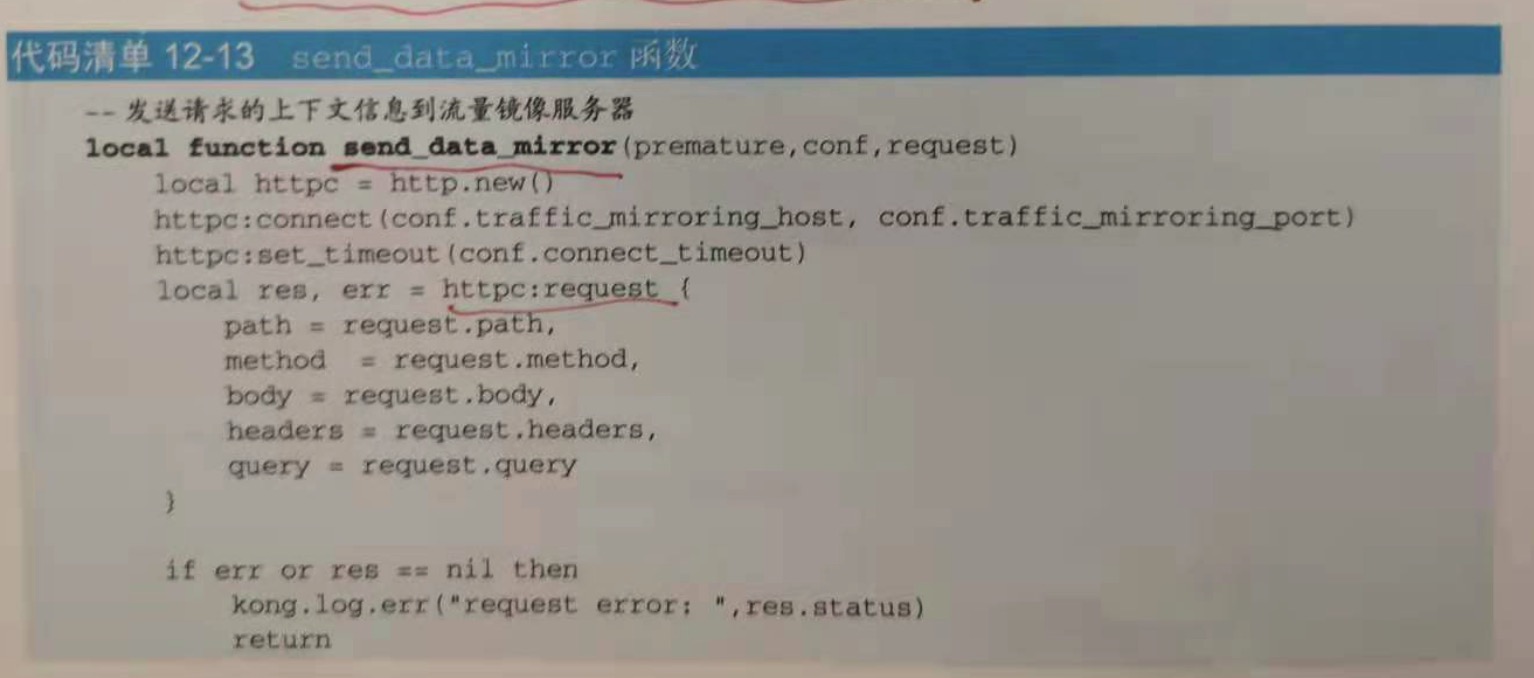

12.4.2 Plugin Development

12.4.3 Plugin Deployment

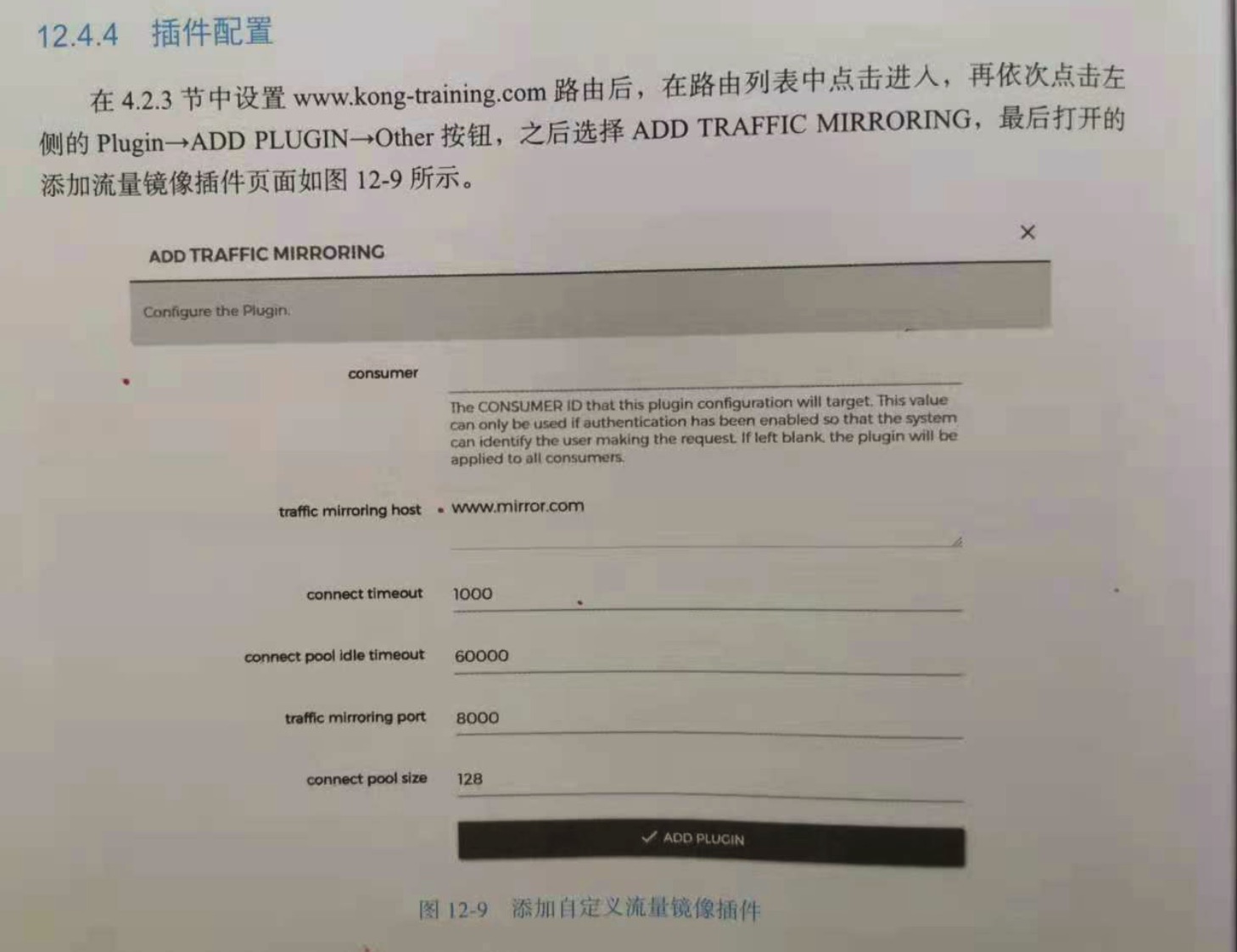

12.4.4 Plugin Configuration

12.4.5 Plugin Verification

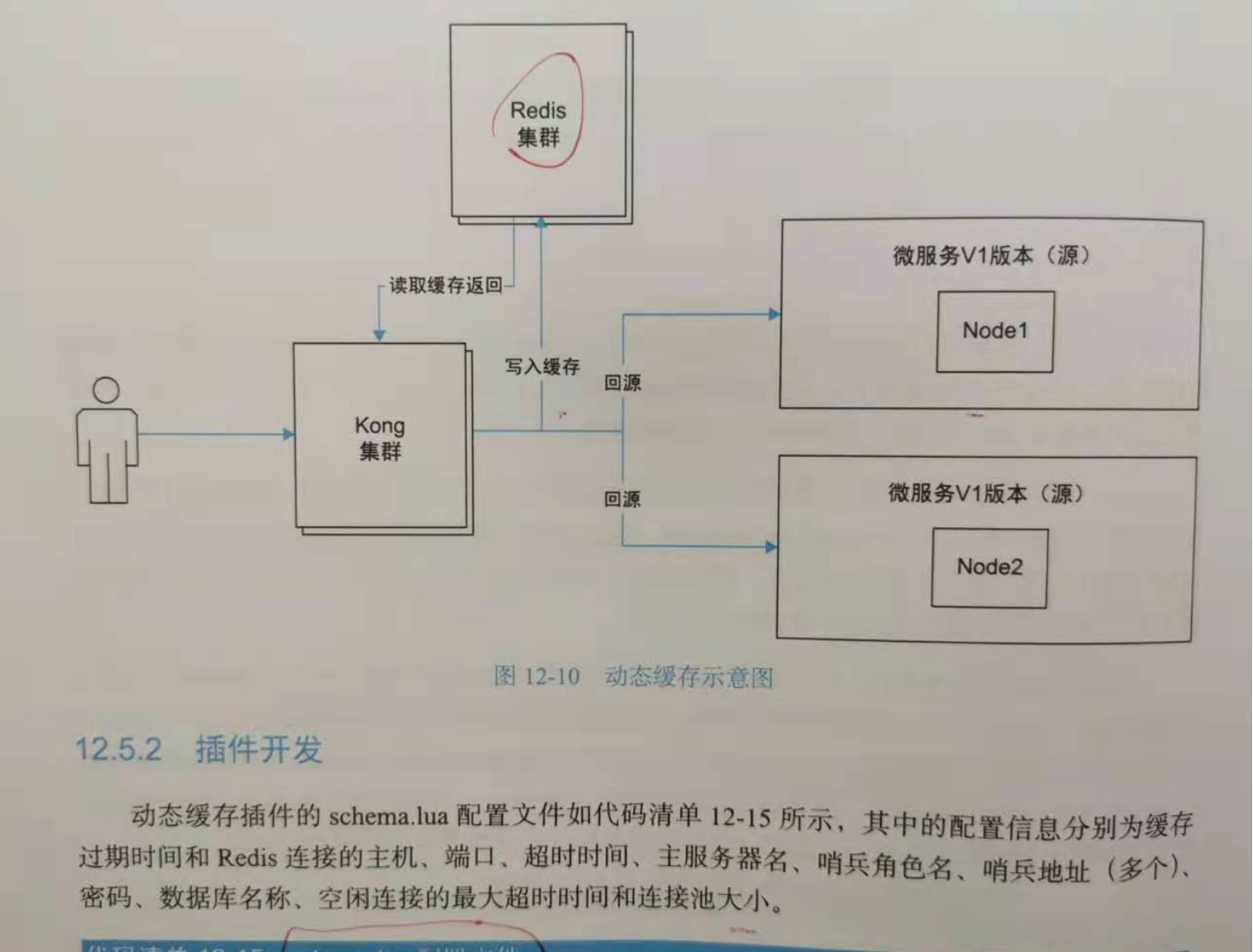

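12.5 Case 5: Dynamic Caching

Between client and server sit many layers of caching that are invisible to the user: browser cache, DNS cache, CDN cache, dedicated caching services such as Redis, Memcached, and Varnish, reverse-proxy cache, request content cache, MAC cache, ARP cache, routing table cache, disk cache, SSD cache, memory cache, page cache, CPU registers and caches, and so on.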
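A gateway-side response cache of the kind this case builds can be sketched as a dictionary keyed by method and URL with a per-entry expiry time. The TTL, the choice to cache only GET requests, and the `fetch` callable standing in for the upstream call are assumptions for illustration, not the plugin's actual rules.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Cache GET responses for `ttl` seconds, keyed by (method, url)."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self.entries: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def get(self, method: str, url: str, fetch: Callable[[str], str]) -> str:
        if method != "GET":
            return fetch(url)                    # never cache non-idempotent requests
        key = (method, url)
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                        # fresh entry: serve from cache
        body = fetch(url)                        # miss or expired: go upstream
        self.entries[key] = (now + self.ttl, body)
        return body
```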

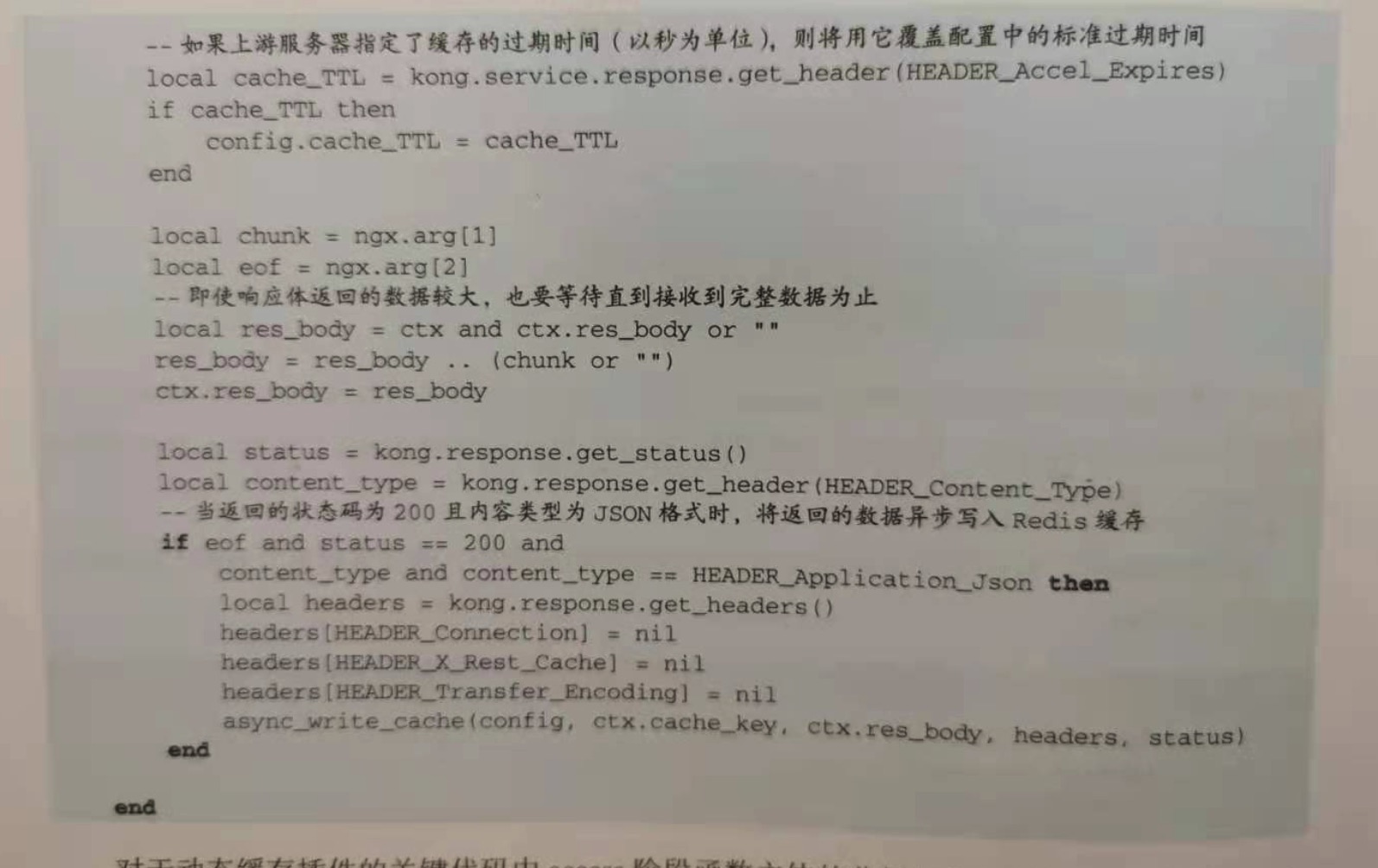

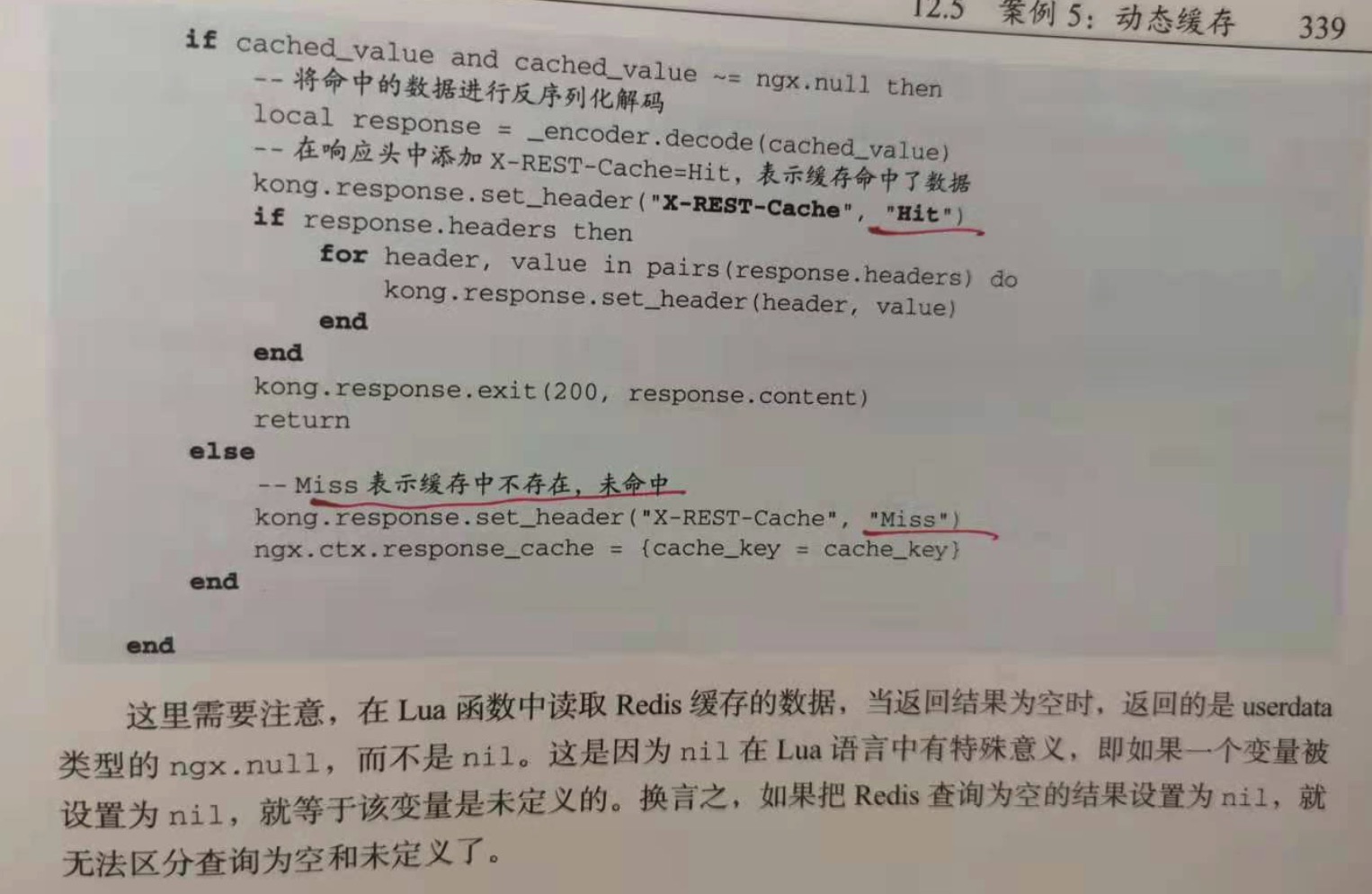

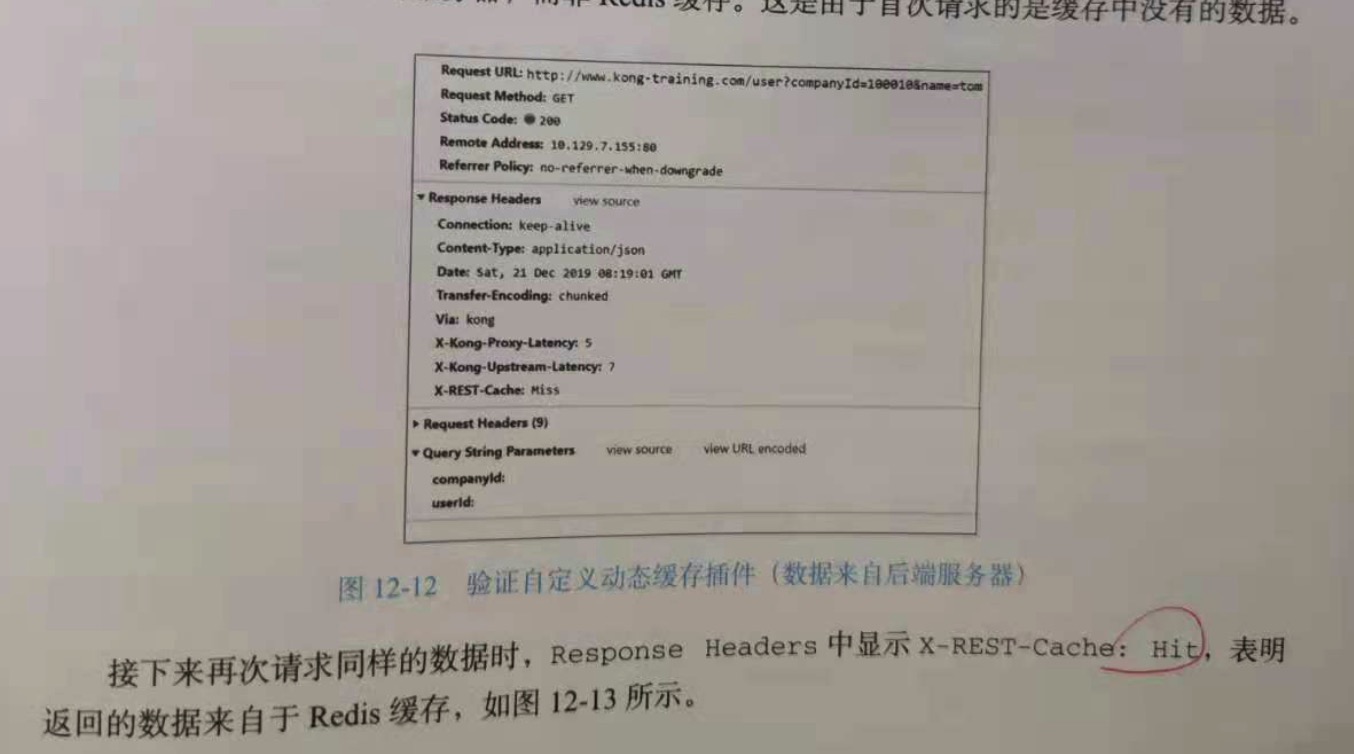

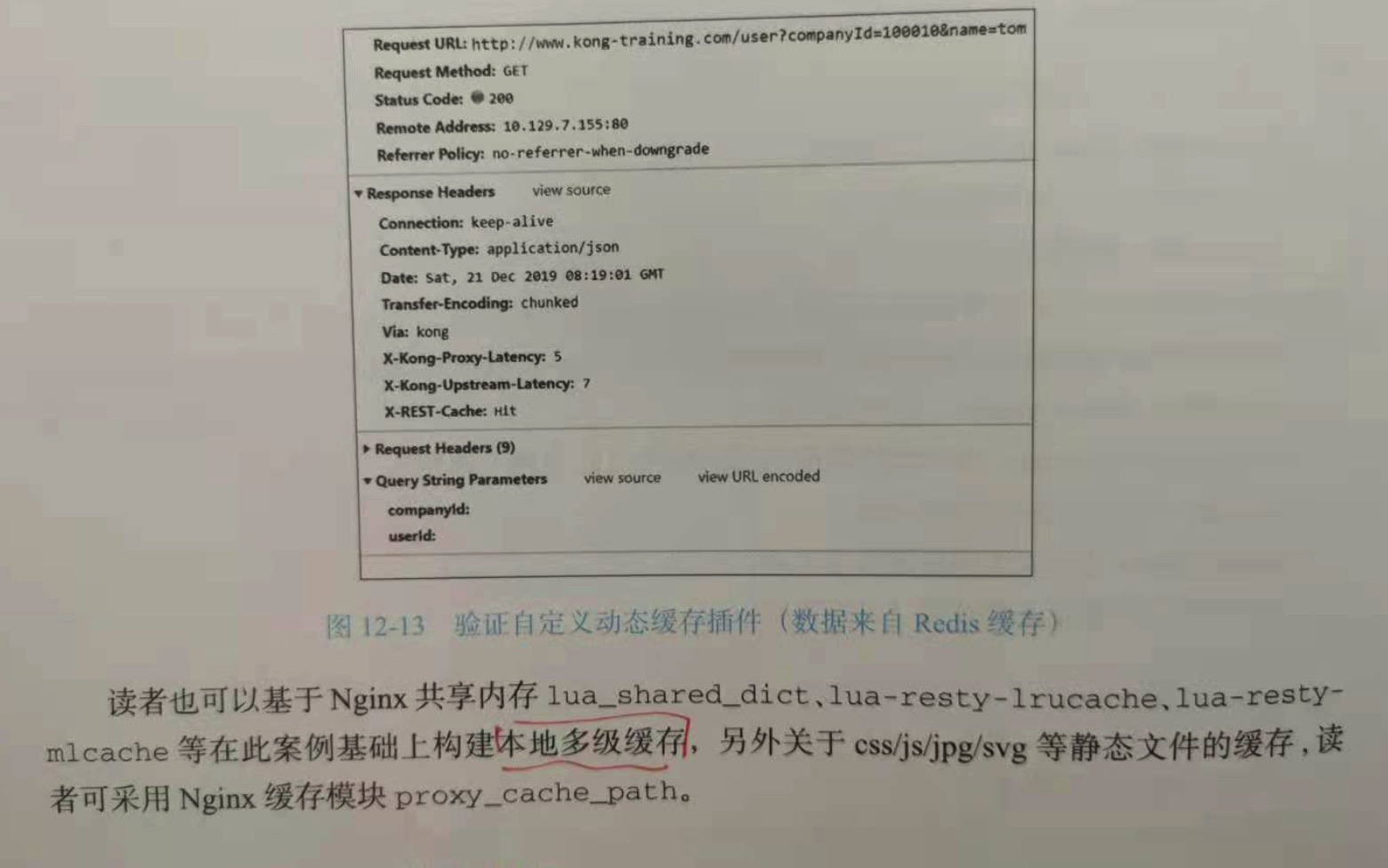

12.5.1 Plugin Requirements

12.5.2 Plugin Development

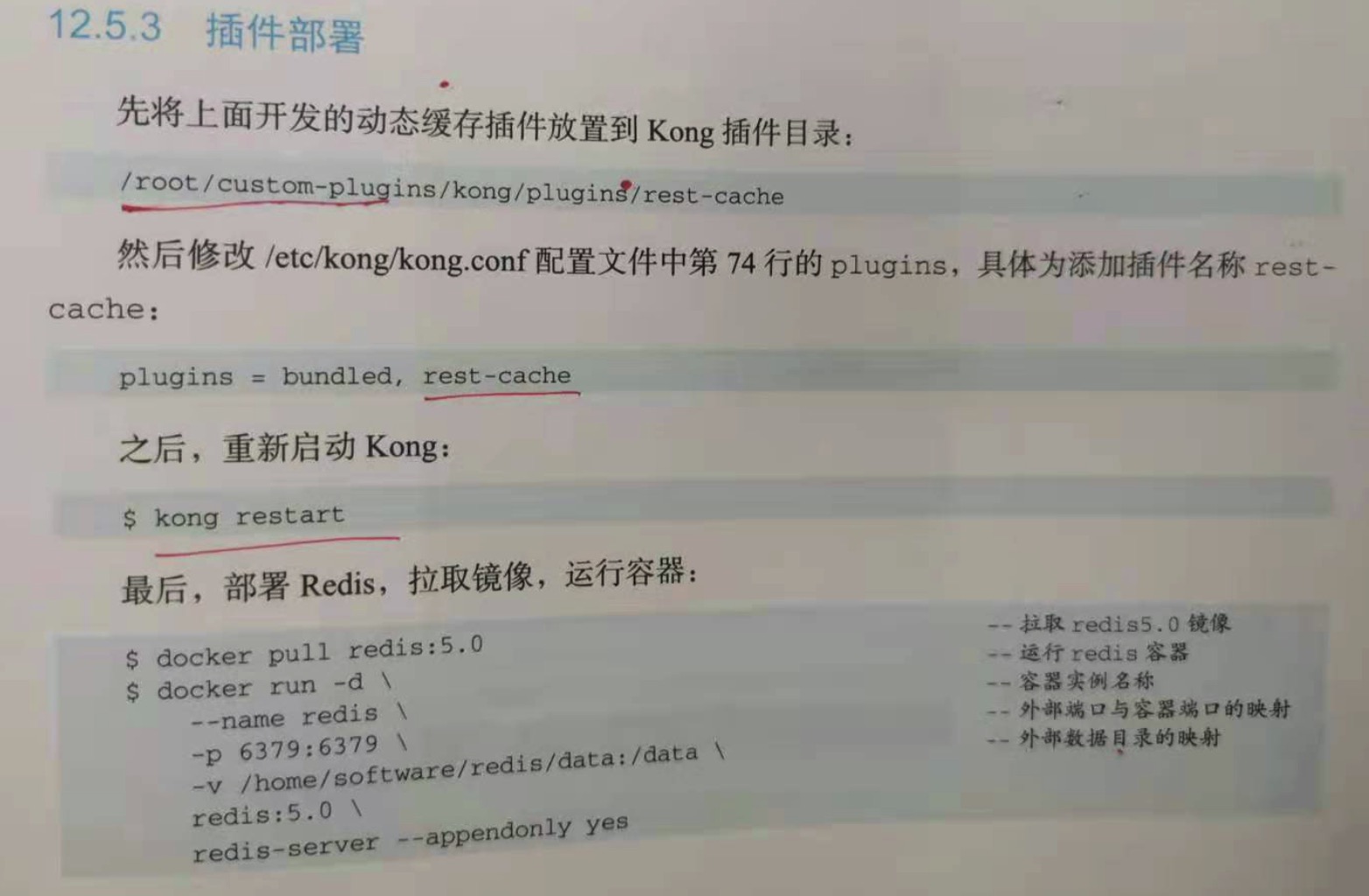

12.5.3 Plugin Deployment

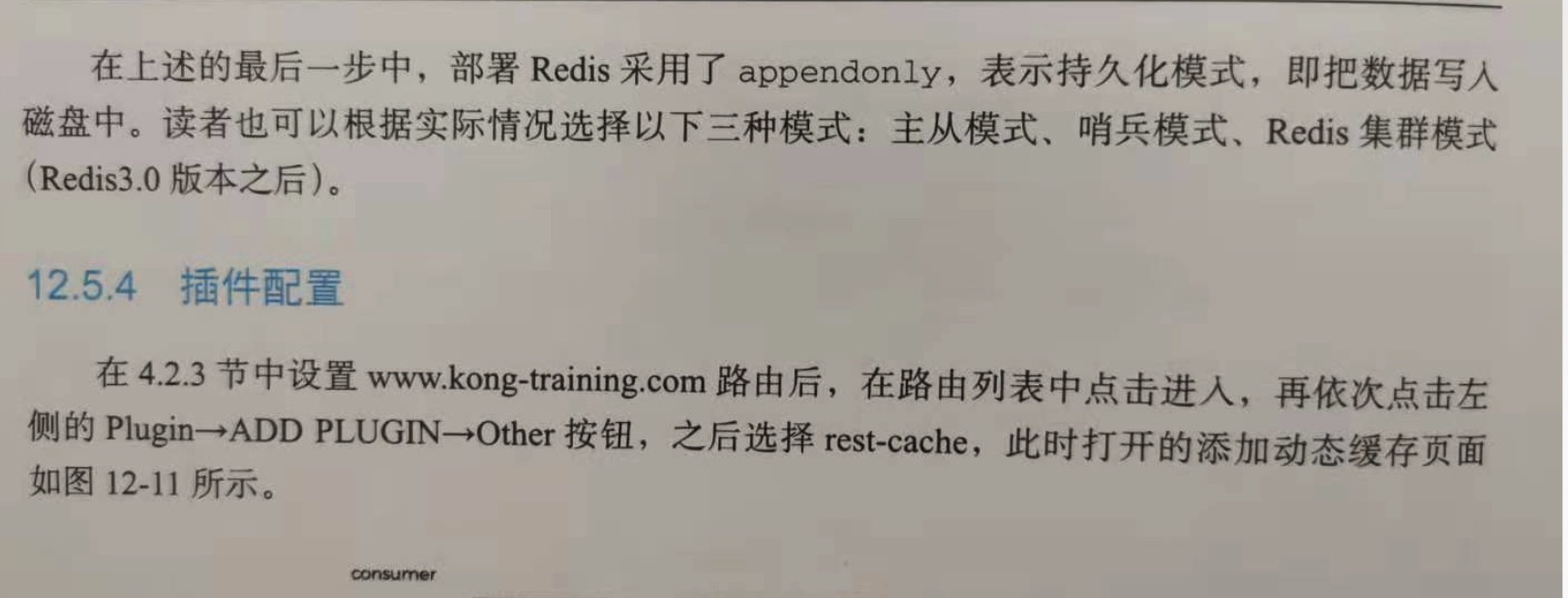

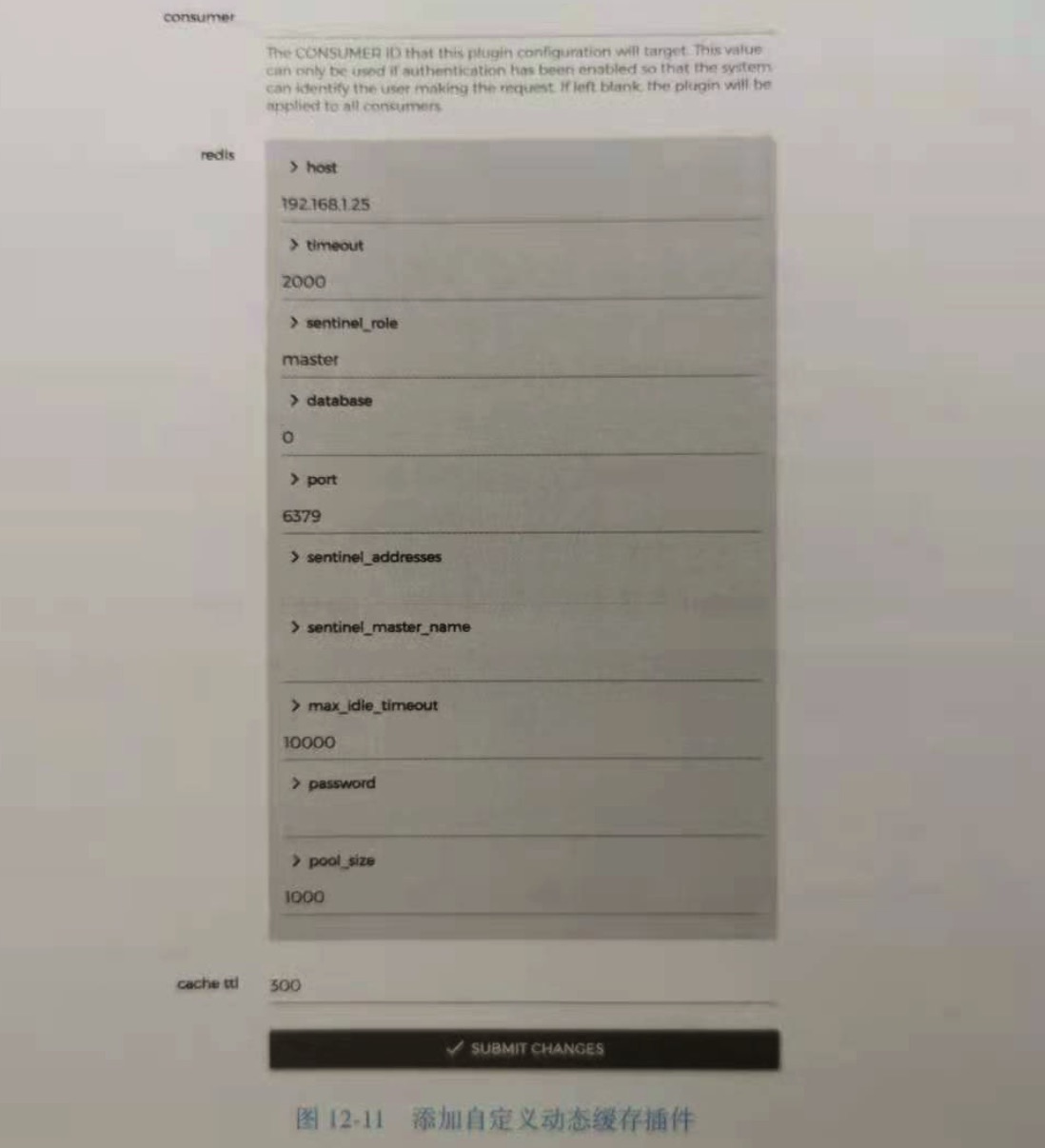

12.5.4 Plugin Configuration

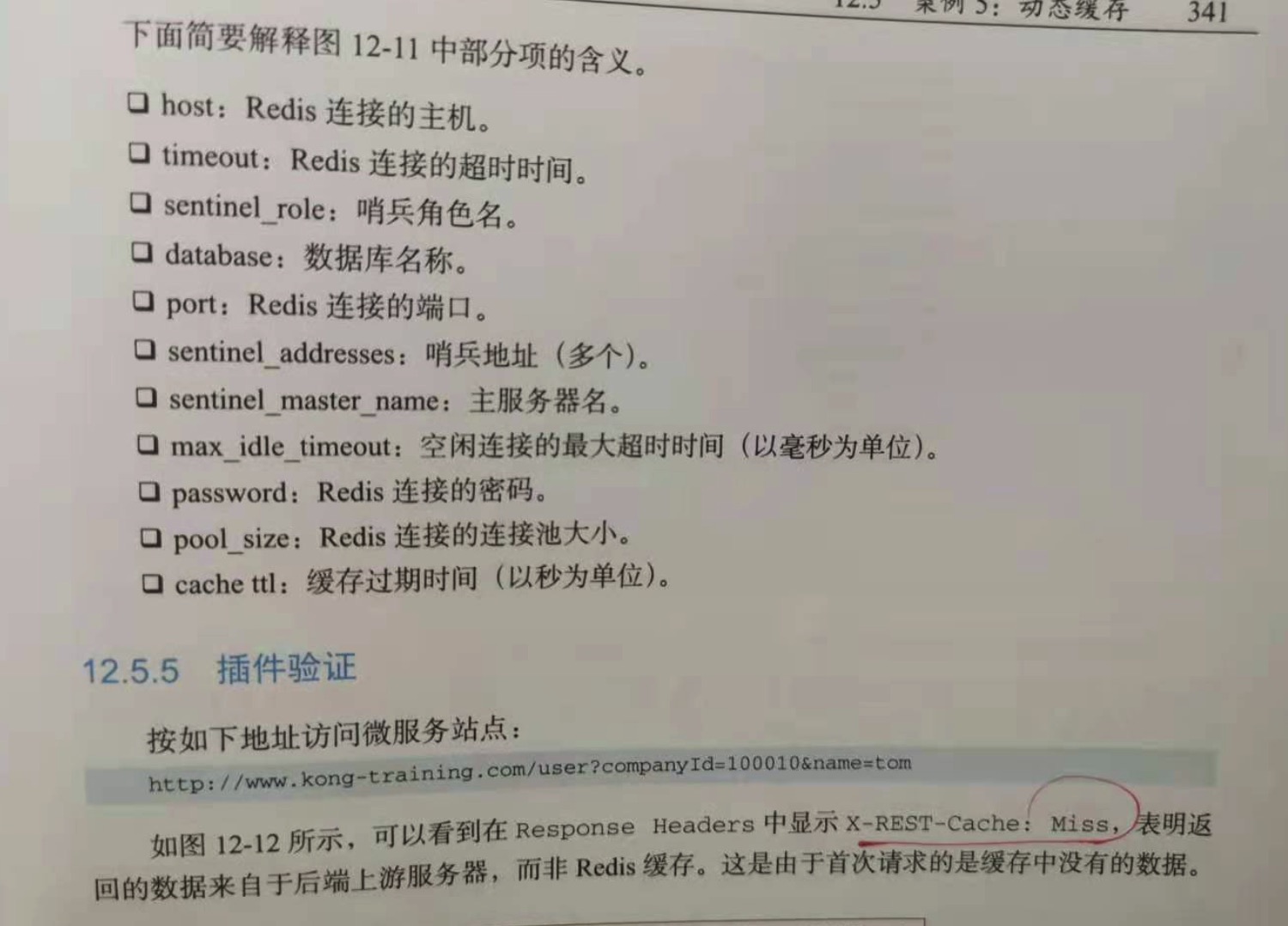

12.5.5 Plugin Verification

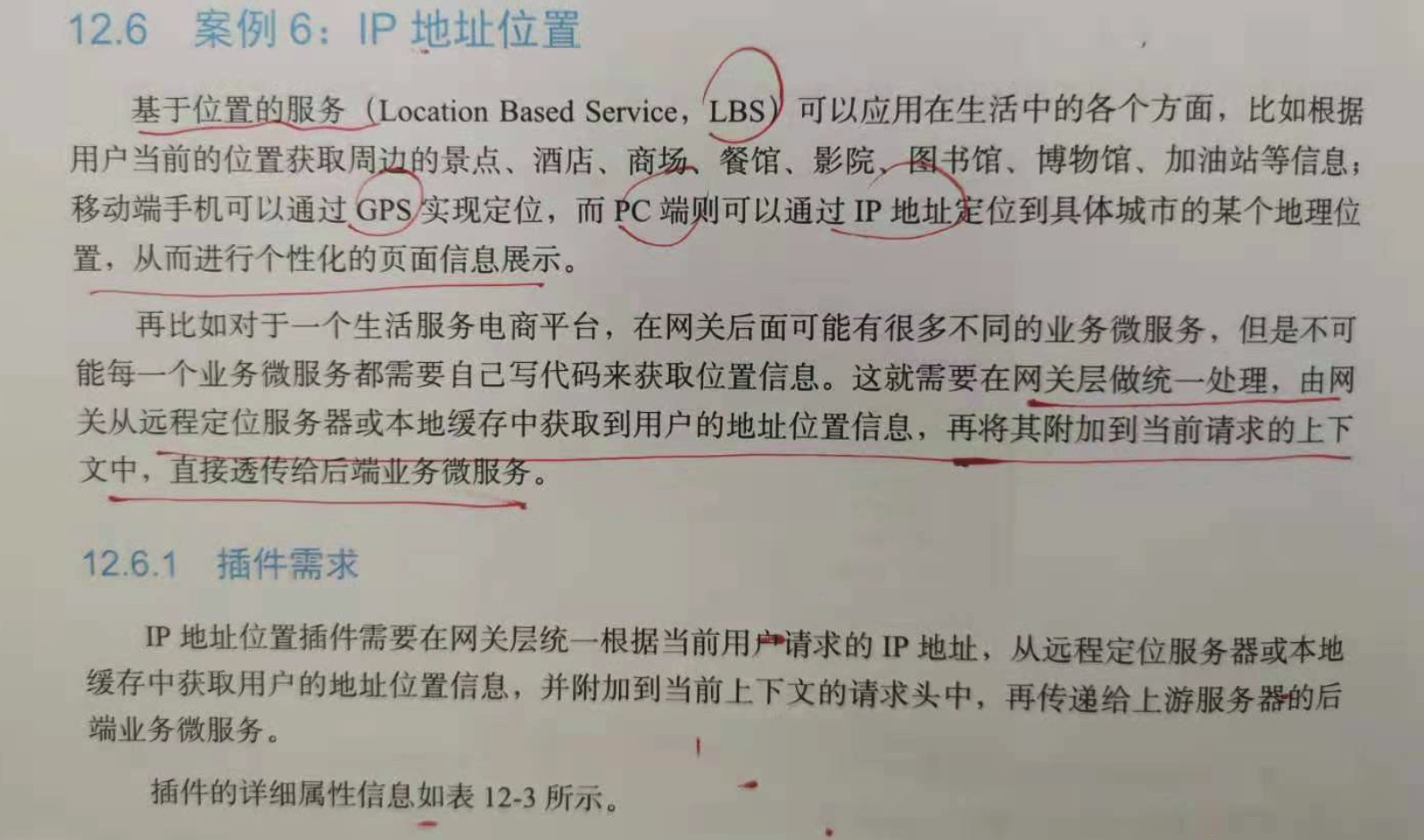

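12.6 Case 6: IP Geolocation

Location-based services (LBS) have many applications. Mobile phones can locate themselves via GPS, while on a PC the IP address can be mapped to a geographic location within a specific city.

This can be handled uniformly at the gateway: the gateway looks up the user's location from a remote geolocation server or a local cache, attaches it to the current request context, and passes it through to the backend service.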

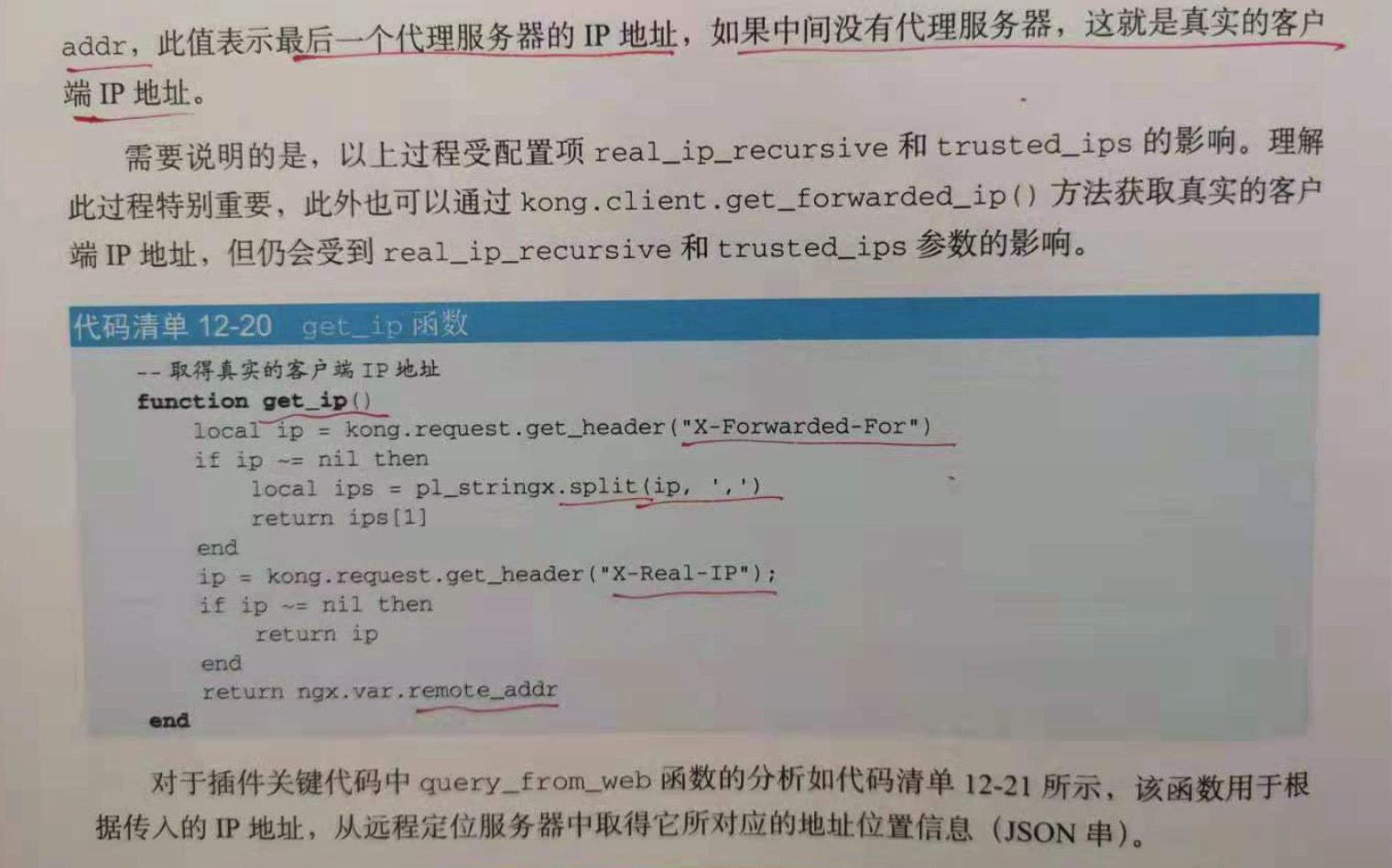

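Getting the real client IP:

First read the X-Forwarded-For header, which records the IP addresses of the proxies the request passed through. If it is present, take its first value: each proxy appends the address it received the request from to X-Forwarded-For, so the first entry is the real client IP. If the header is absent, use X-Real-IP (this requires the realip module), which records the client's real IP. If neither header is present, fall back to the nginx variable remote_addr, which holds the IP of the last proxy, or the real client IP when there is no proxy in between.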
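The lookup order described above translates directly into code. In this sketch, headers are a plain dict with lower-cased names and `remote_addr` stands in for nginx's `$remote_addr`; like the text, it trusts the headers as sent, which is only safe when every hop in front of the gateway is a proxy you control, since clients can forge X-Forwarded-For.

```python
from typing import Mapping


def real_client_ip(headers: Mapping[str, str], remote_addr: str) -> str:
    """Return the client IP using X-Forwarded-For, then X-Real-IP, then remote_addr."""
    xff = headers.get("x-forwarded-for", "")
    if xff.strip():
        # "client, proxy1, proxy2": each proxy appends, so the first entry is the client.
        return xff.split(",")[0].strip()
    real_ip = headers.get("x-real-ip", "").strip()
    if real_ip:
        return real_ip
    # No proxy headers: remote_addr is the peer that connected to the gateway.
    return remote_addr


if __name__ == "__main__":
    print(real_client_ip({"x-forwarded-for": "203.0.113.7, 10.0.0.2"}, "10.0.0.3"))  # 203.0.113.7
```

The resulting address is what the gateway would then look up in the geolocation service or local cache.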

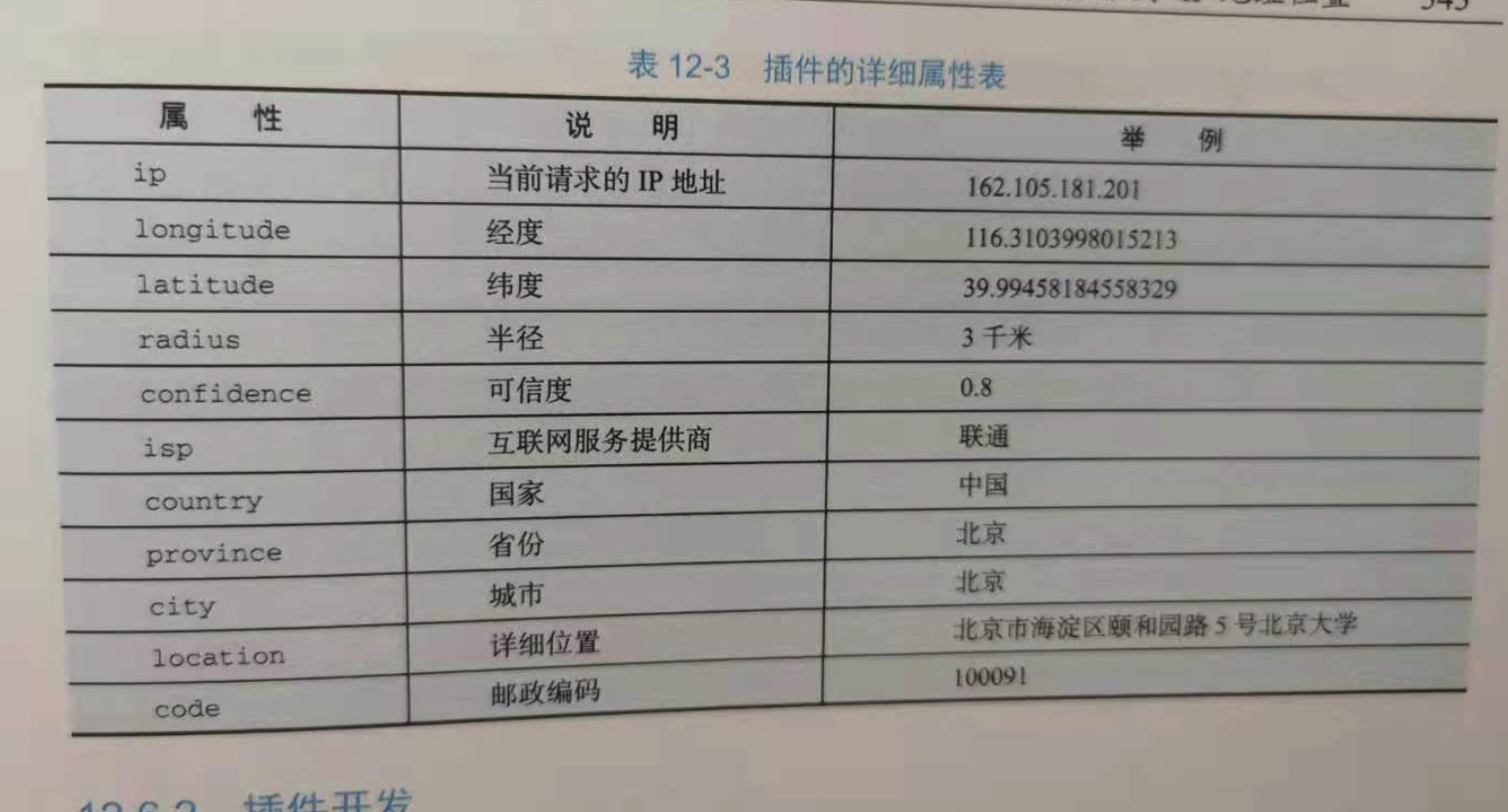

12.6.1 Plugin Requirements

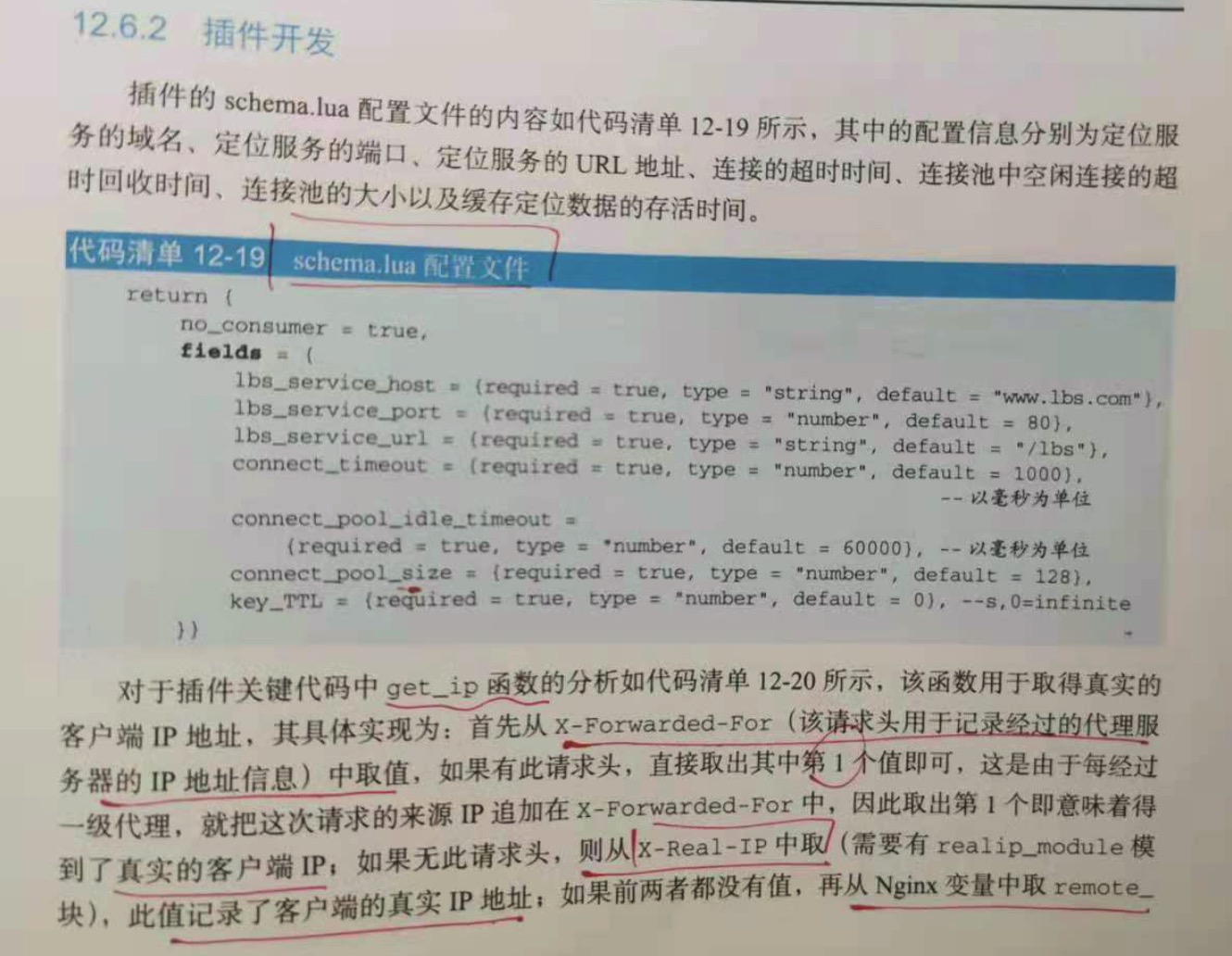

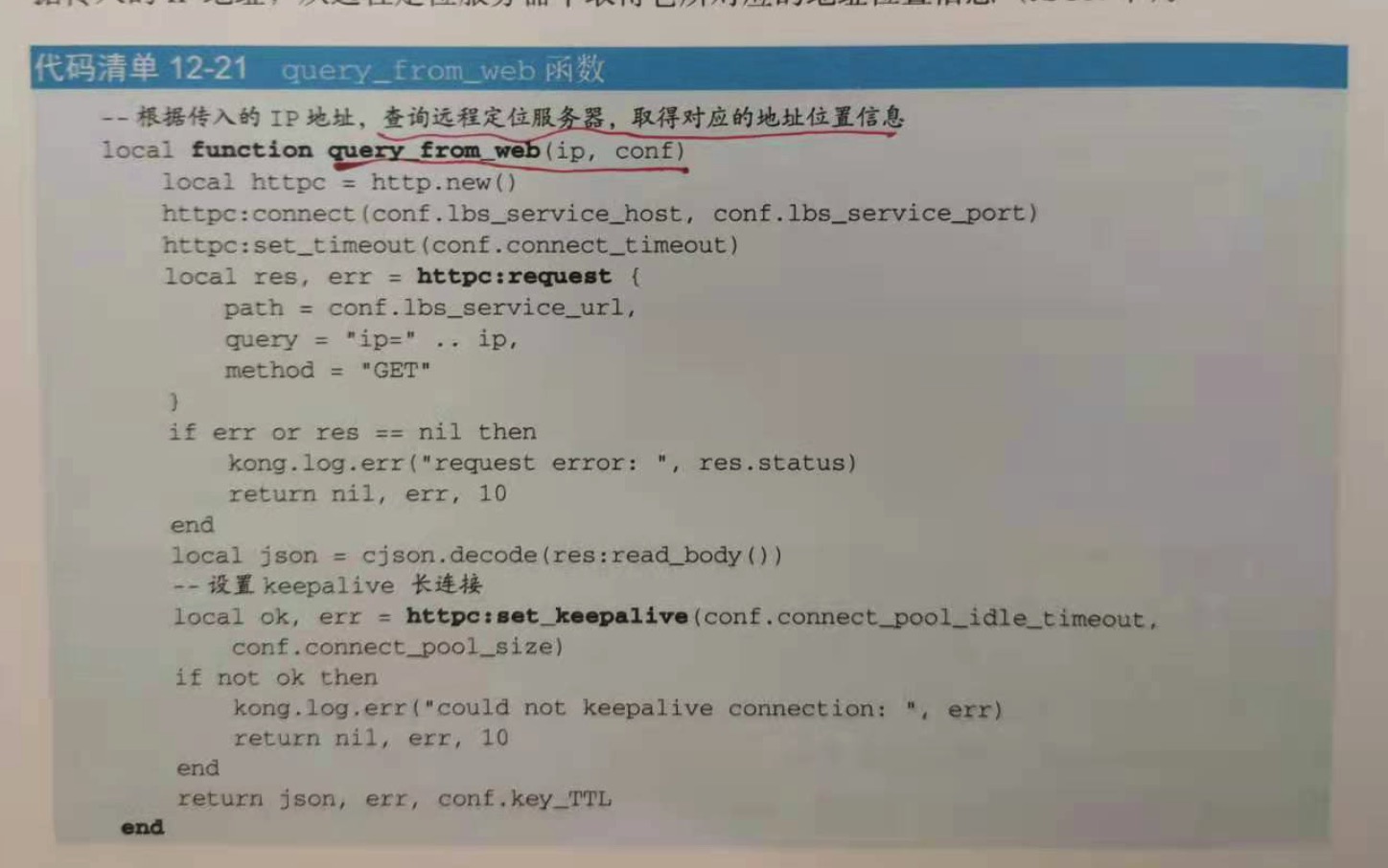

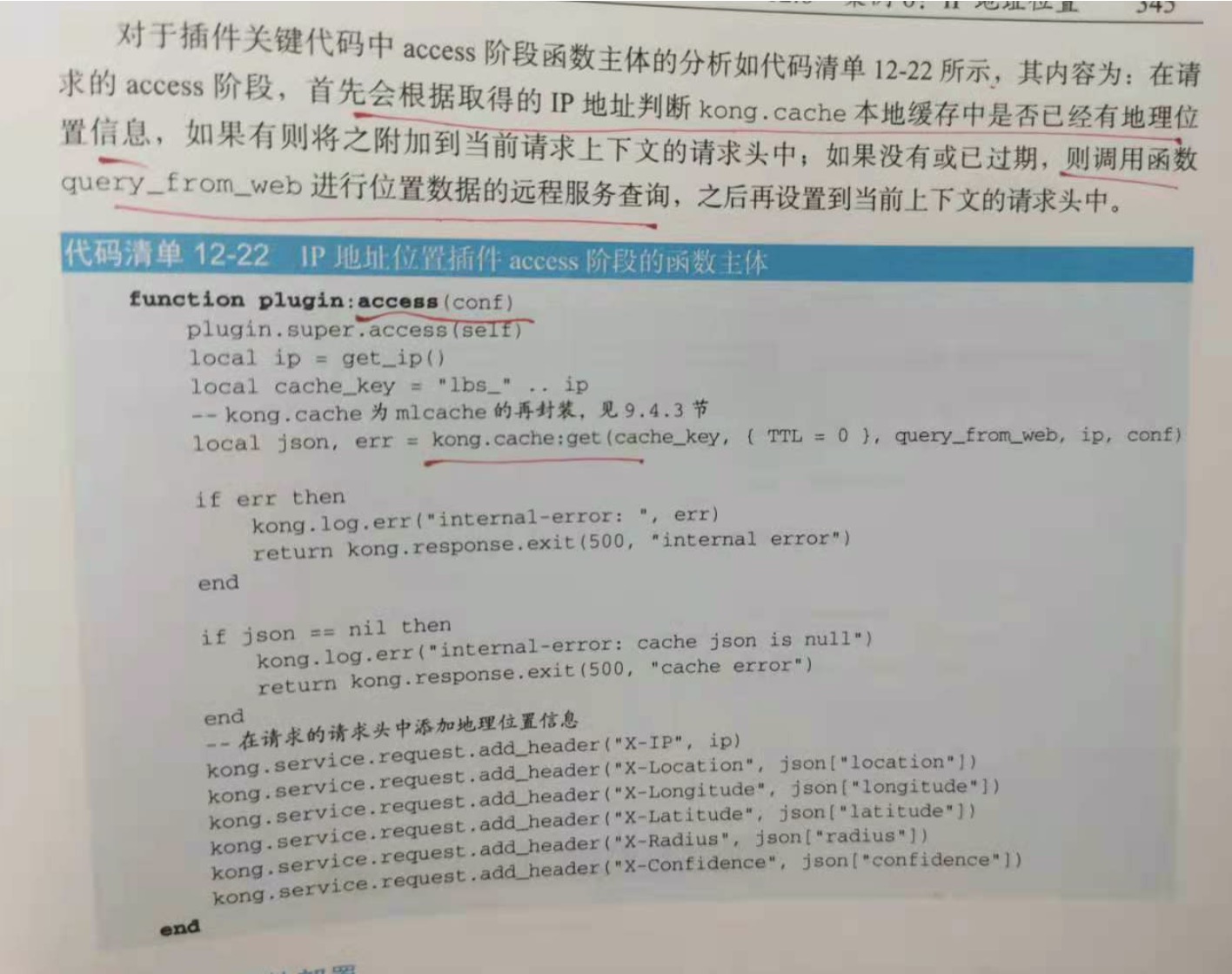

12.6.2 Plugin Development

12.6.3 Plugin Deployment

12.6.4 Plugin Configuration

12.6.5 Plugin Verification

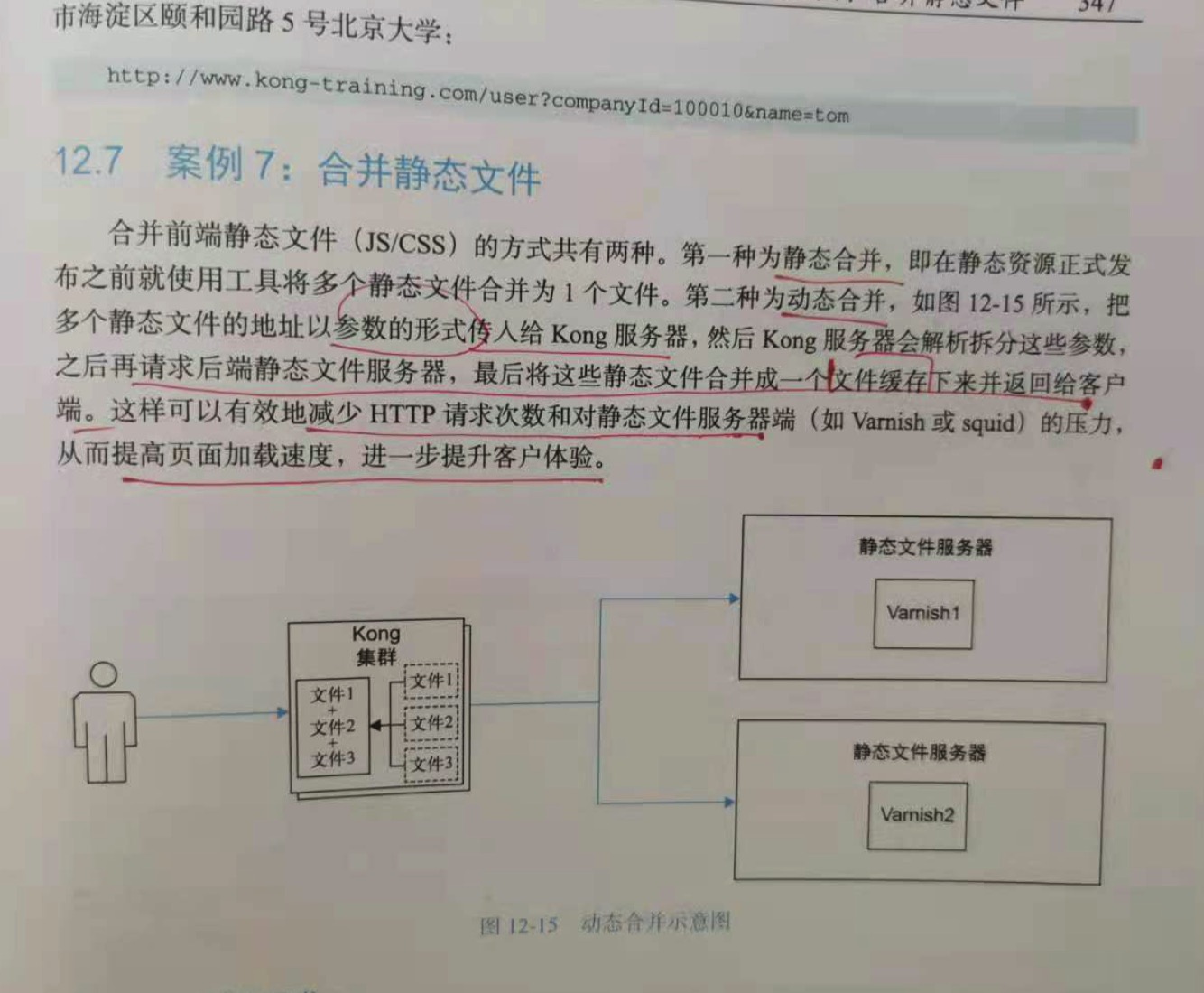

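12.7 Case 7: Combining Static Files

There are two ways to combine front-end static files (JS/CSS). The first is static combining: a tool merges several static files into one before the resources are released. The second is dynamic combining: the addresses of several static files are passed to the Kong server as parameters; Kong parses and splits them, requests each file from the backend static file server, combines them into one file, caches it, and returns it to the client. This reduces the number of HTTP requests and the load on the static file server, which speeds up page loading and improves the user experience.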
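The dynamic approach can be sketched as follows. The query parameter name `files`, the comma separator, the allowed extensions, and the `fetch` callable standing in for requests to the static file server are all hypothetical; the book's plugin defines its own URL format. Combined results are cached under the normalized file list (in an unbounded dict, for simplicity) so repeat requests skip the backend.

```python
from typing import Callable, Dict, List
from urllib.parse import parse_qs, urlsplit

ALLOWED_SUFFIXES = (".js", ".css")
_combined_cache: Dict[str, str] = {}


def parse_file_list(url: str) -> List[str]:
    """Extract ['a.js', 'b.js'] from a URL like '/combo?files=a.js,b.js'."""
    raw = parse_qs(urlsplit(url).query).get("files", [""])[0]
    files = [f.strip() for f in raw.split(",") if f.strip()]
    if not files:
        raise ValueError("no files requested")
    for name in files:
        if not name.endswith(ALLOWED_SUFFIXES) or ".." in name:
            raise ValueError(f"refusing to combine {name!r}")
    # All files in one response must share a type (all JS or all CSS).
    if len({name.rsplit(".", 1)[1] for name in files}) != 1:
        raise ValueError("cannot mix file types")
    return files


def combine(url: str, fetch: Callable[[str], str]) -> str:
    files = parse_file_list(url)
    key = ",".join(files)
    if key not in _combined_cache:
        # One backend request per file, concatenated in the requested order.
        _combined_cache[key] = "\n".join(fetch(name) for name in files)
    return _combined_cache[key]
```

The browser makes one request instead of N, and after the first hit the backend is not contacted at all for that combination.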

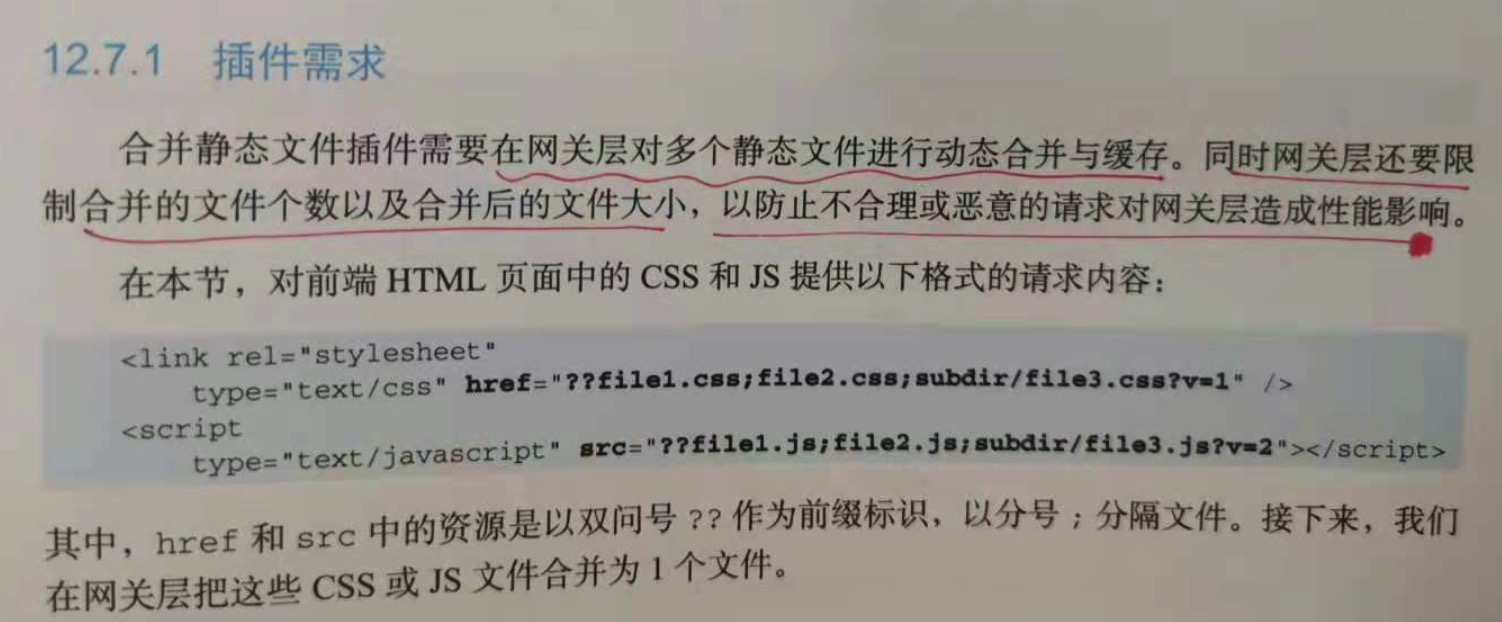

12.7.1 Plugin Requirements

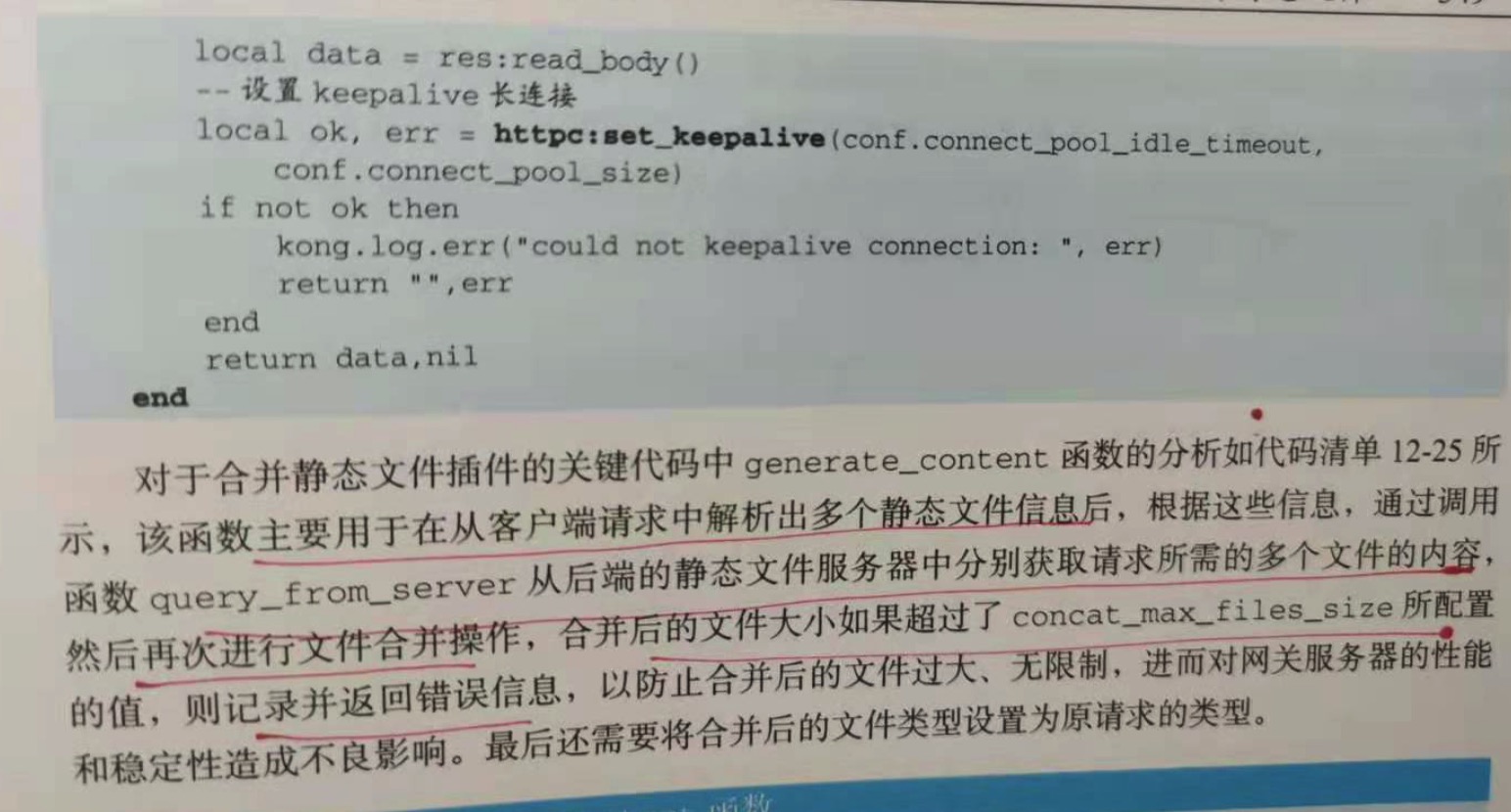

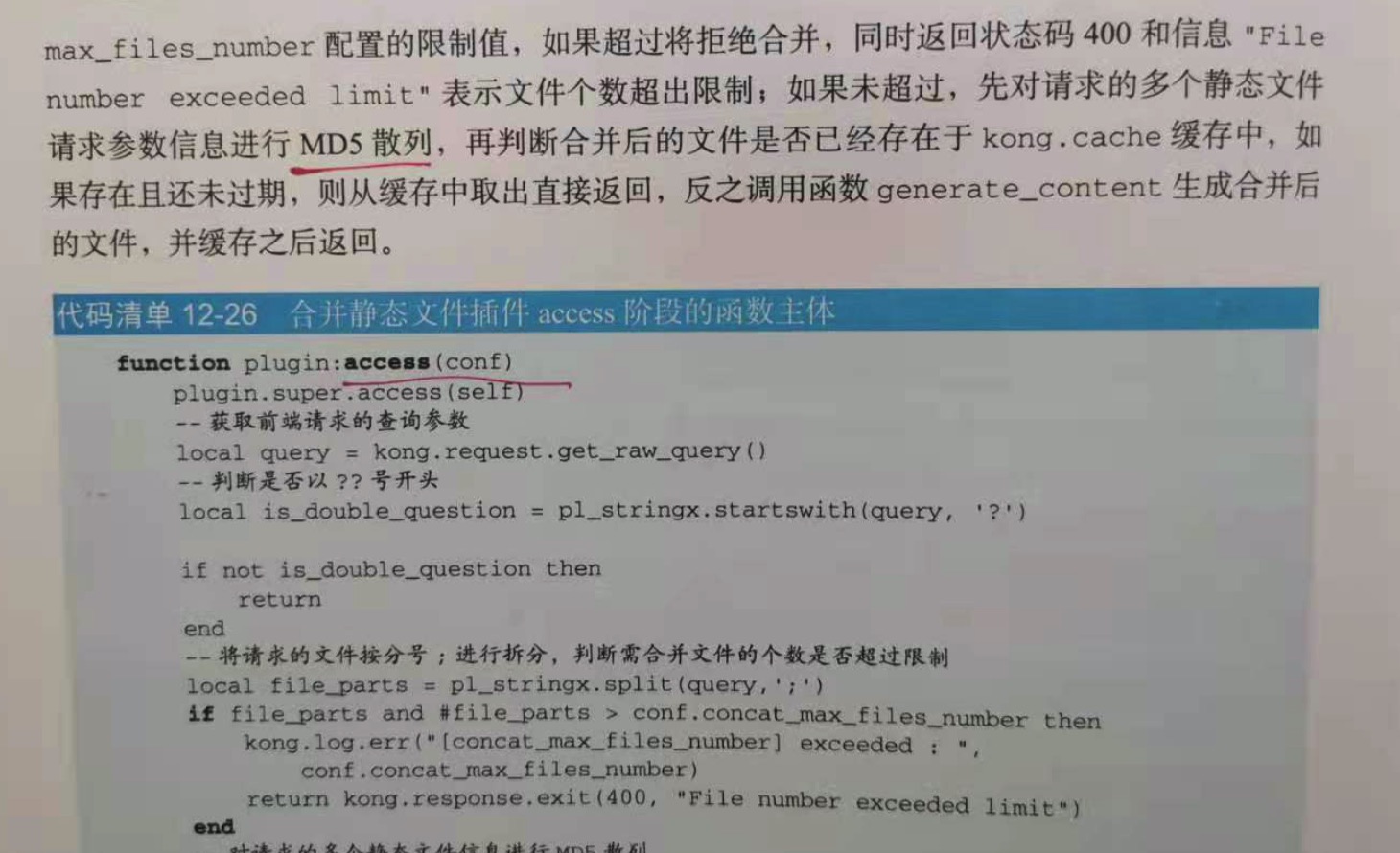

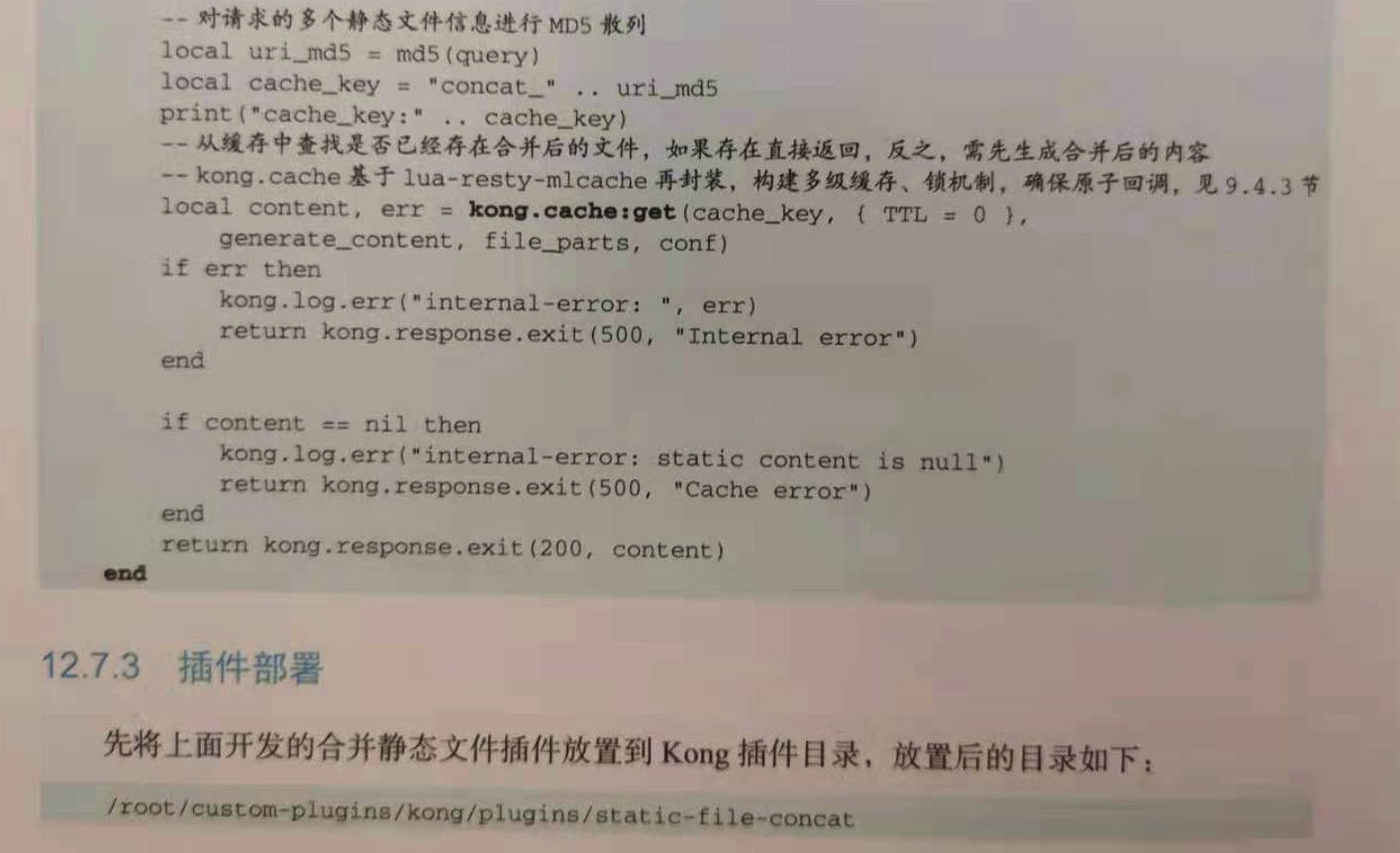

12.7.2 Plugin Development

12.7.3 Plugin Deployment

12.7.4 Plugin Configuration

12.7.5 Plugin Verification

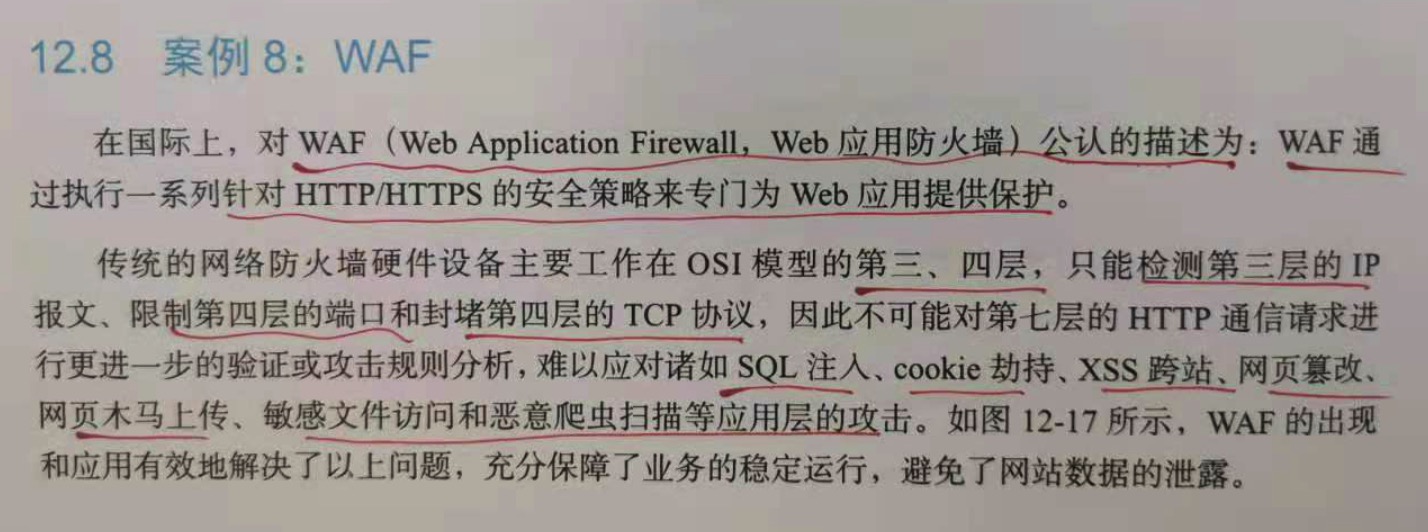

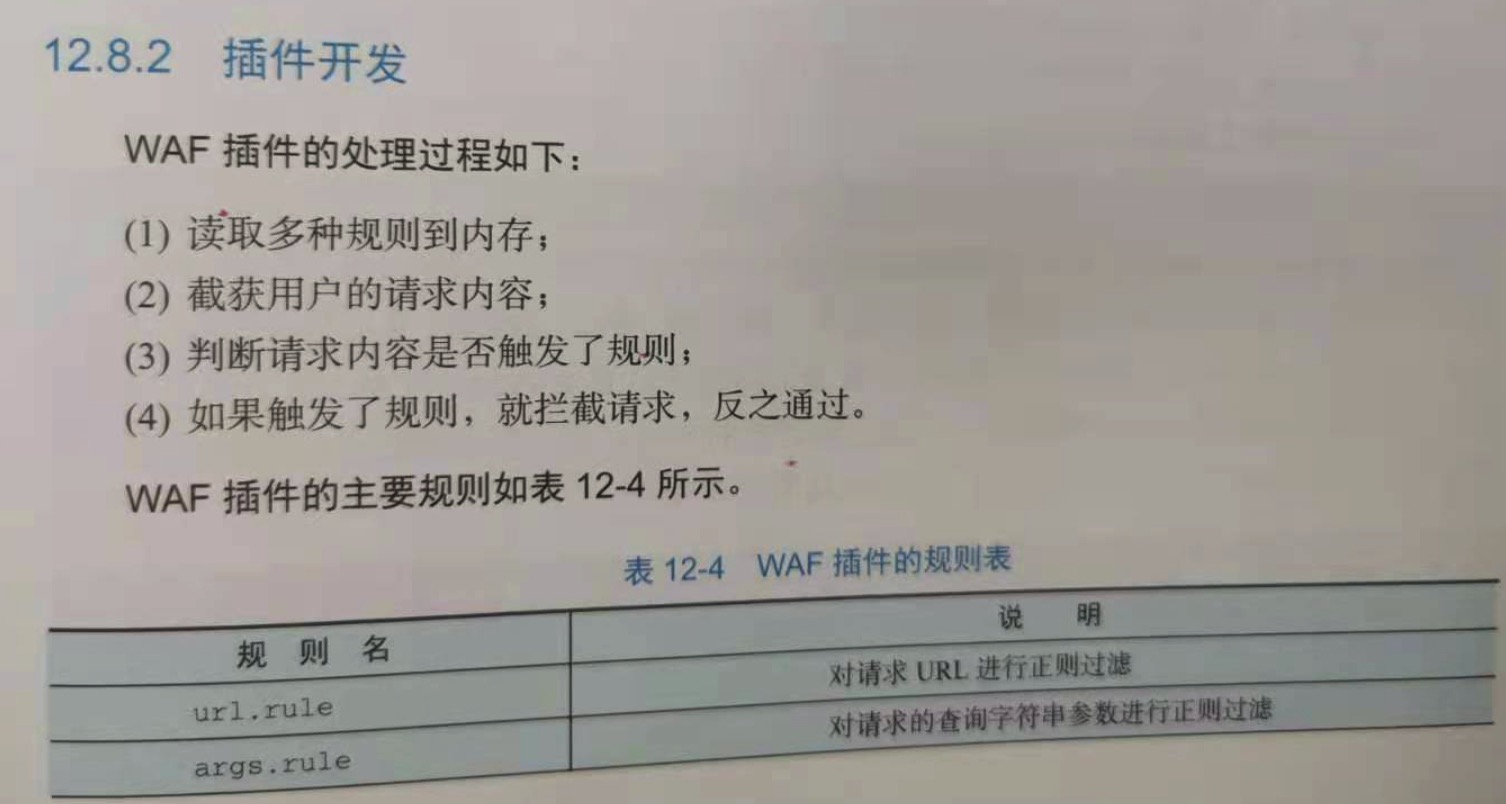

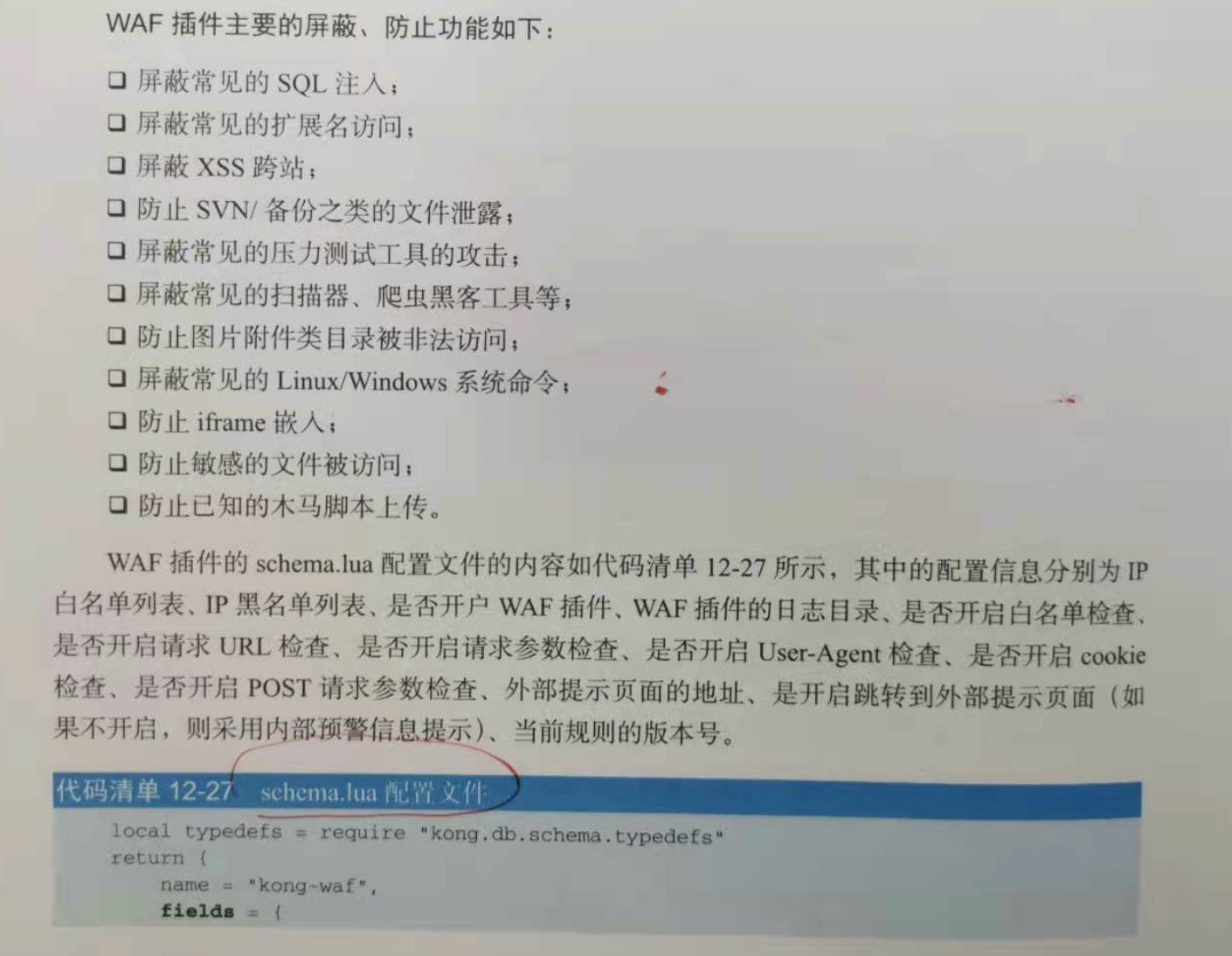

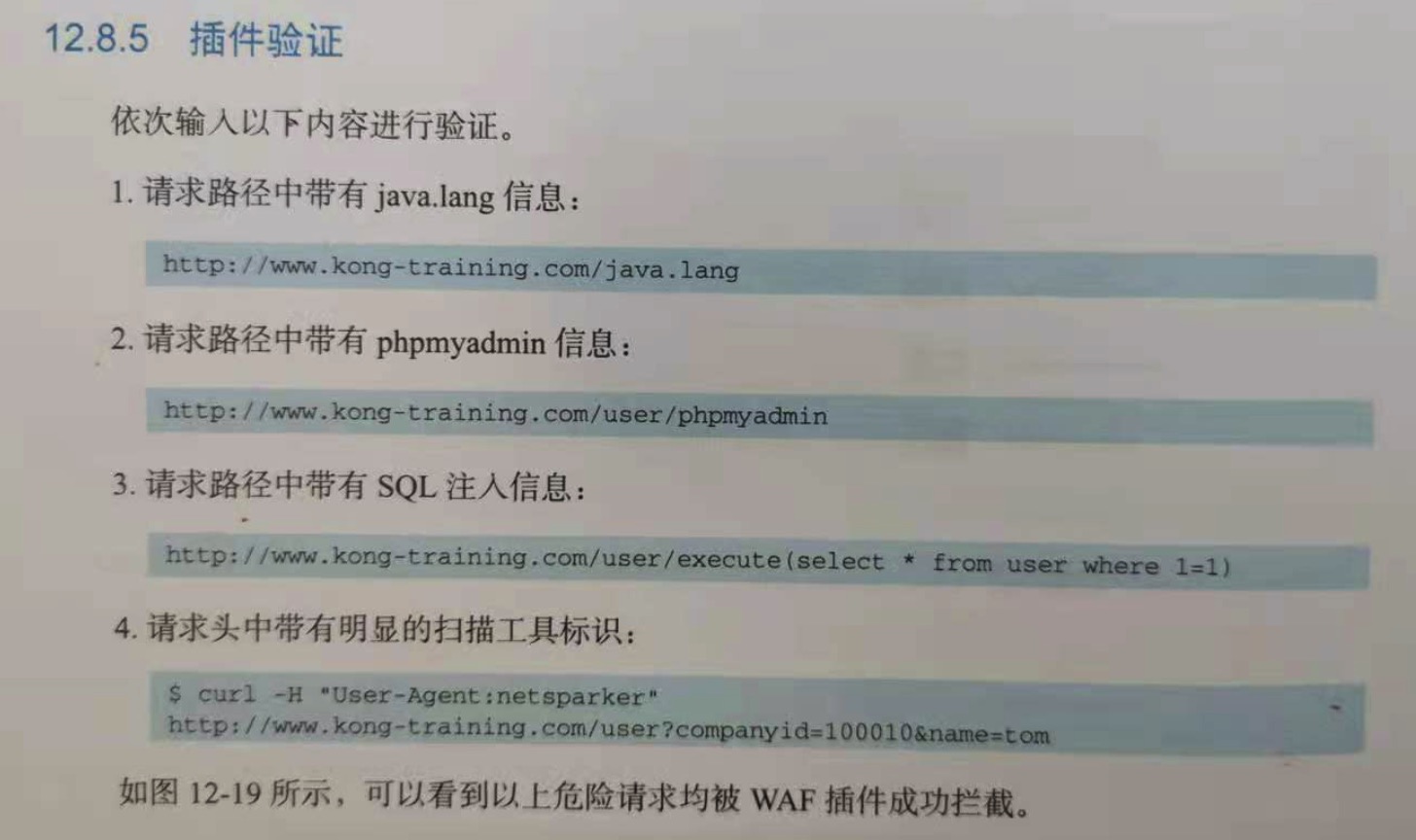

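12.8 Case 8: WAF

A WAF (web application firewall) protects web applications with a set of security policies dedicated to HTTP/HTTPS.

Traditional network firewall appliances work mainly at layers 3 and 4 of the OSI model: they inspect layer-3 IP packets and restrict layer-4 ports and TCP traffic. They therefore cannot further validate or analyze attack patterns in layer-7 HTTP requests, and struggle against application-layer attacks such as SQL injection, cookie hijacking, cross-site scripting (XSS), web page defacement, web shell uploads, sensitive file access, and malicious crawler scanning. A WAF addresses these problems.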
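At its core, a WAF plugin of this kind matches request fields against attack signatures and blocks the request on a hit. The three regular expressions below are deliberately simplistic, hypothetical examples (production rule sets such as the OWASP Core Rule Set are far more thorough and tuned against false positives), and the rule names are made up for the sketch.

```python
import re
from typing import Dict, Optional
from urllib.parse import parse_qsl, unquote, urlsplit

# Hypothetical, intentionally minimal signatures for illustration only.
RULES = {
    "sql_injection": re.compile(r"(\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1|--|;\s*drop\b)", re.I),
    "xss": re.compile(r"(<\s*script\b|javascript:|\bon\w+\s*=)", re.I),
    "sensitive_file": re.compile(r"(\.\./|/etc/passwd|\.git/|\.env\b)", re.I),
}


def inspect(url: str, headers: Dict[str, str]) -> Optional[str]:
    """Return the name of the first rule the request violates, or None if it looks clean."""
    parts = urlsplit(url)
    candidates = [unquote(parts.path)]
    # parse_qsl percent-decodes values, so encoded payloads are matched too.
    candidates += [value for _, value in parse_qsl(parts.query, keep_blank_values=True)]
    candidates += [headers.get("cookie", ""), headers.get("user-agent", "")]
    for name, pattern in RULES.items():
        if any(pattern.search(text) for text in candidates):
            return name
    return None


if __name__ == "__main__":
    print(inspect("/search?q=1%27+OR+1%3D1", {}))  # sql_injection
```

Decoding before matching matters: an attacker will usually percent-encode the payload, and matching the raw URL would miss it.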

12.8.1 Plugin Requirements

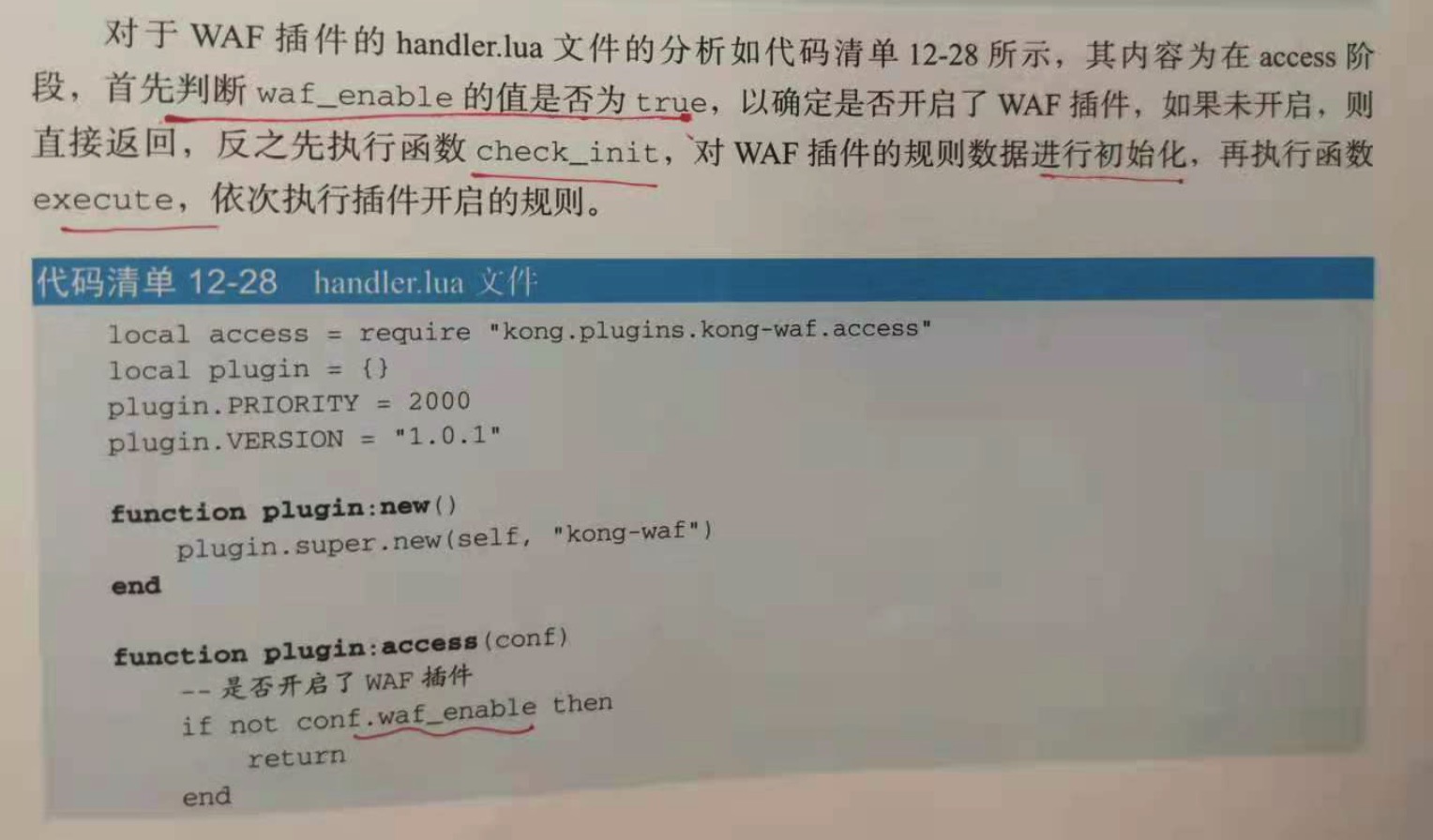

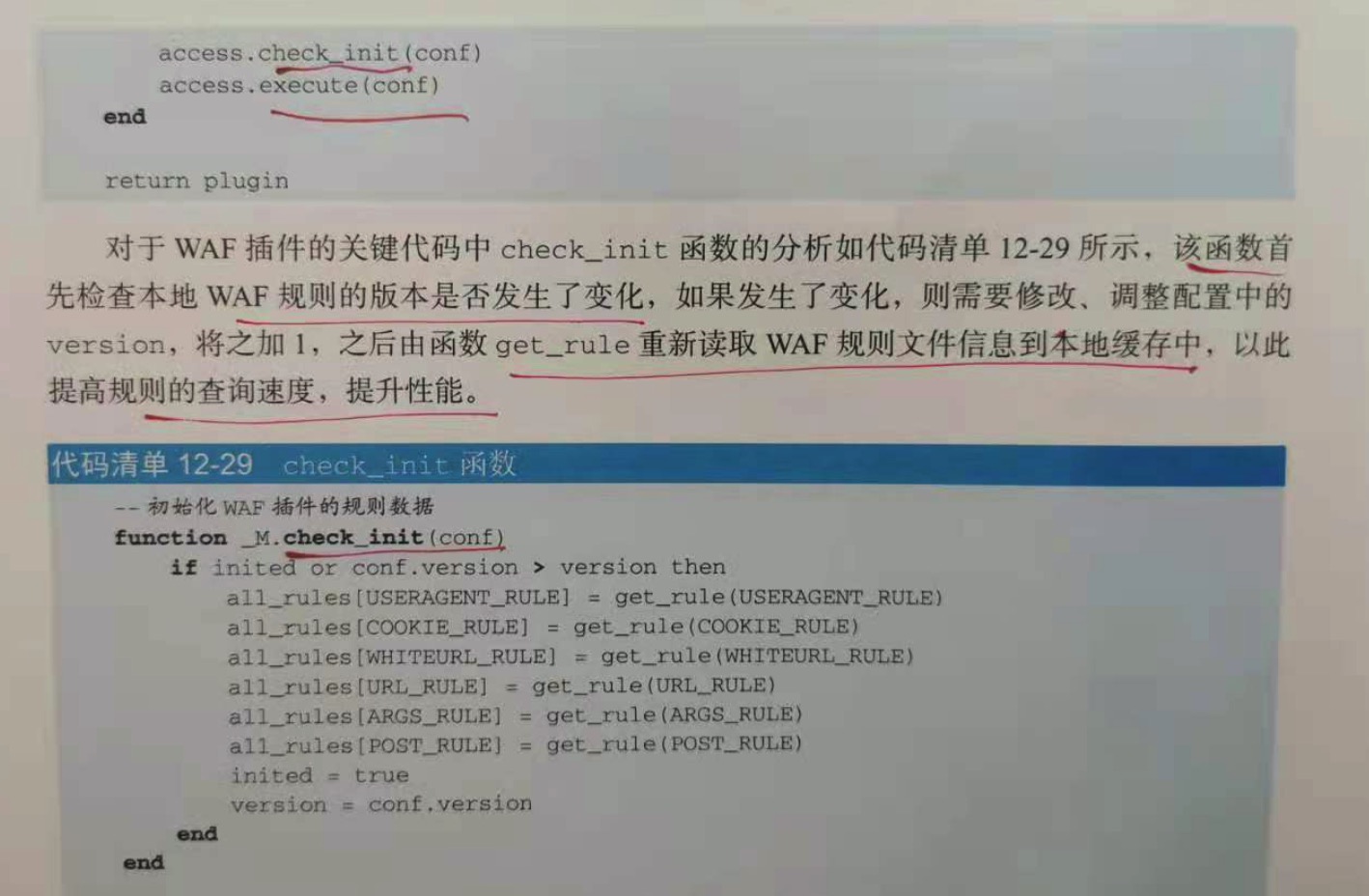

12.8.2 Plugin Development

12.8.3 Plugin Deployment

12.8.4 Plugin Configuration

12.8.5 Plugin Verification

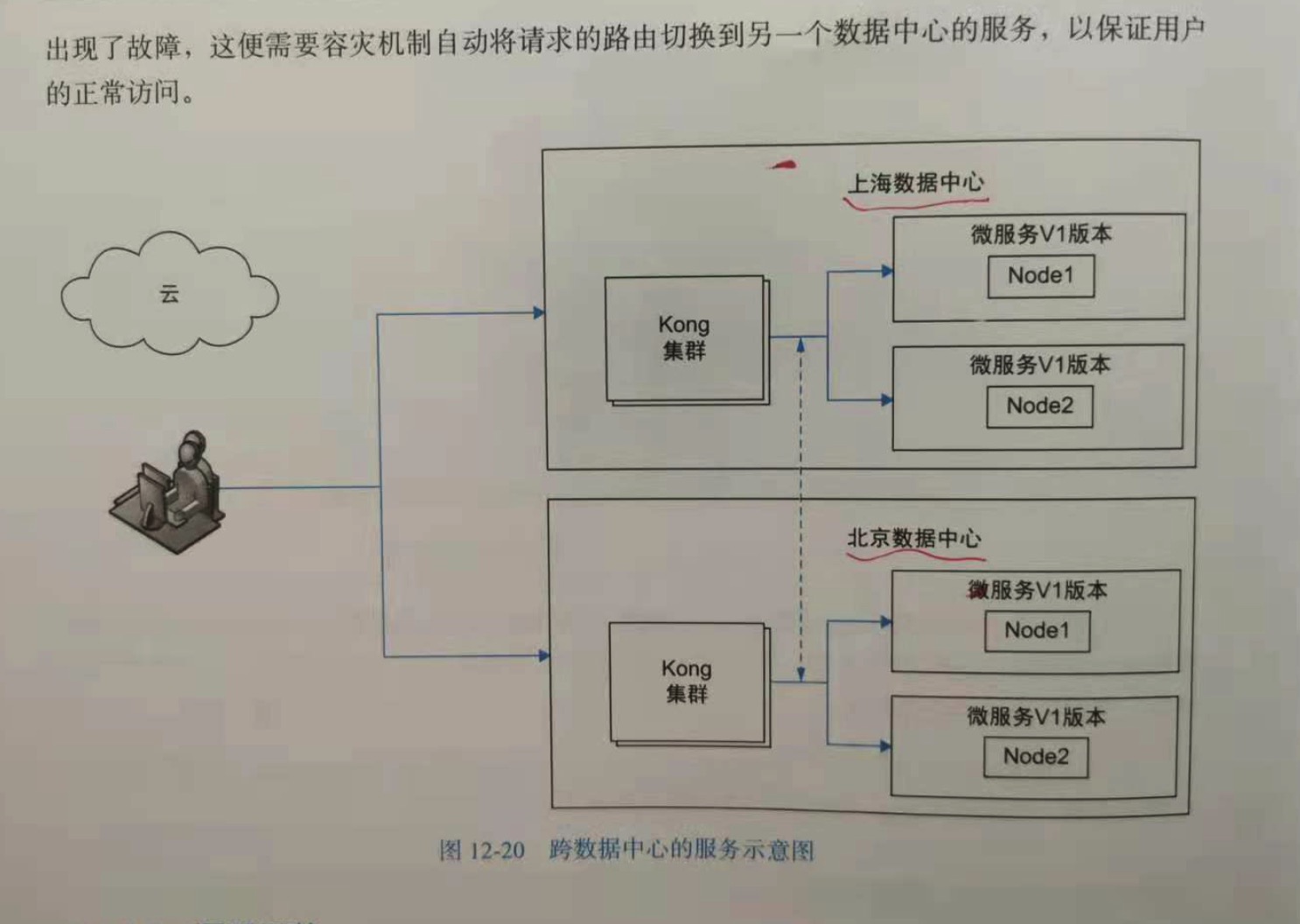

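12.9 Case 9: Cross-Data-Center

Many companies run multiple data centers, mainly to ensure high service availability, improve the user experience, and provide disaster recovery for data: when services in any one data center fail, traffic is automatically rescheduled to another data center without manual intervention. Note that when services in a data center fail, availability, not performance, is the first concern.

If an entire data center suffers a catastrophic failure, GSLB (global server load balancing) or GTM (global traffic management) is needed to make global, intelligent data-center scheduling decisions based on resource health and traffic load.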
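The gateway-level rule described above (stay local while possible, fail over automatically otherwise, and put availability ahead of latency) can be sketched as choosing a data center by health first and by preference second. The data center names, the healthy-node counts, and the `min_healthy` threshold are hypothetical; in practice the counts would come from active or passive health checks.

```python
from typing import Dict, List, Optional


def pick_datacenter(preferred: List[str], healthy_nodes: Dict[str, int],
                    min_healthy: int = 1) -> Optional[str]:
    """Return the first data center in preference order with enough healthy nodes.

    `preferred` lists the local data center first, then fallbacks ordered by
    distance; a farther but healthy data center always beats a nearer failed one
    (availability over performance). Returns None if every data center is down.
    """
    for dc in preferred:
        if healthy_nodes.get(dc, 0) >= min_healthy:  # unknown health counts as down
            return dc
    return None


if __name__ == "__main__":
    order = ["dc-beijing", "dc-shanghai"]
    print(pick_datacenter(order, {"dc-beijing": 0, "dc-shanghai": 2}))  # dc-shanghai
```

A `None` result corresponds to the whole-site disaster case, which the text hands off to GSLB or GTM rather than to the gateway.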

12.9.1 Plugin Requirements

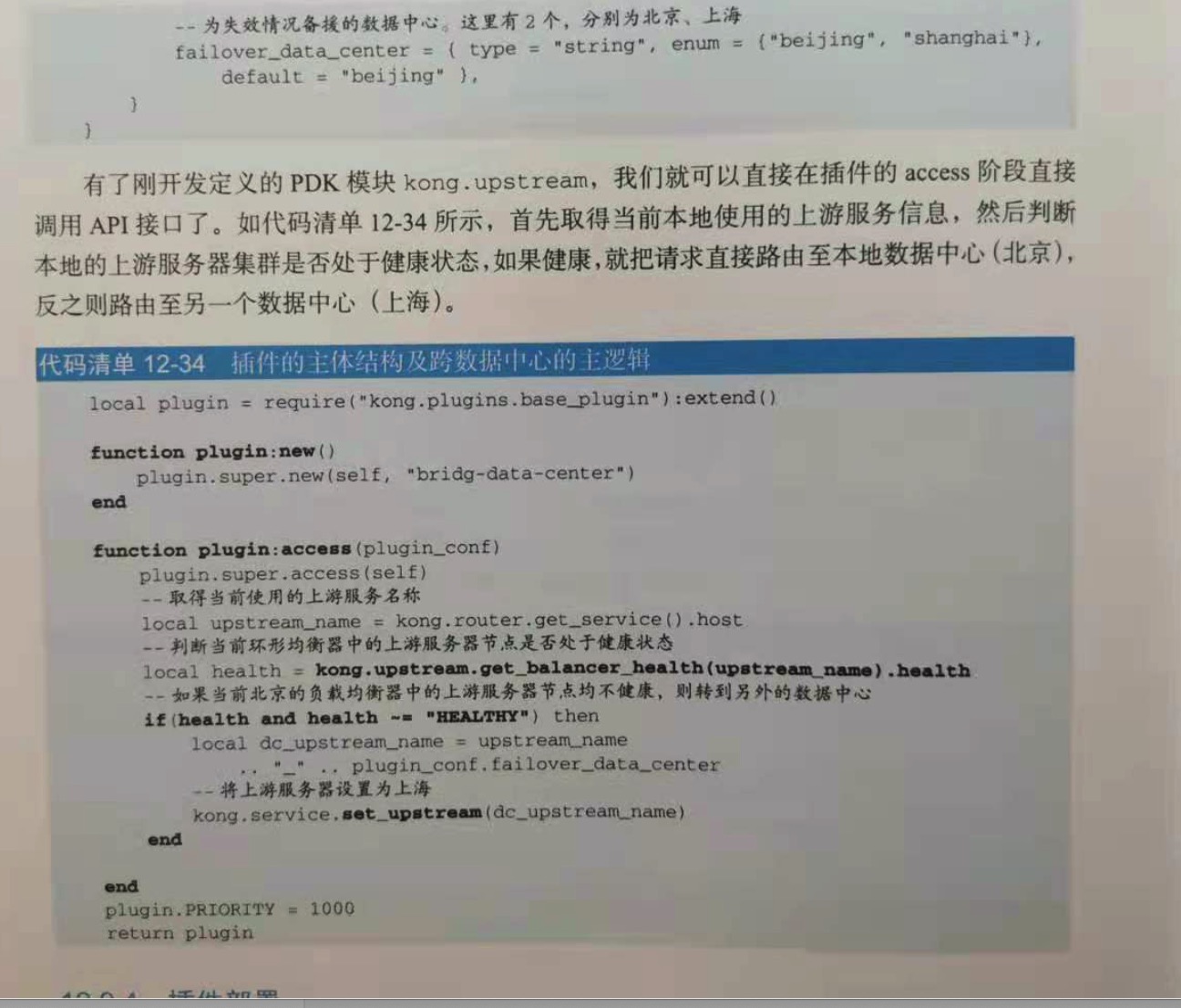

12.9.2 Source Code Adjustments

12.9.3 Plugin Development

12.9.4 Plugin Deployment

12.9.5 Plugin Configuration

12.9.6 Plugin Verification
