[LeetCode] 1042. Flower Planting With No Adjacent

Posted by CNoodle


You have n gardens, labeled from 1 to n, and an array paths where paths[i] = [x_i, y_i] describes a bidirectional path between garden x_i and garden y_i. In each garden, you want to plant one of 4 types of flowers.

Every garden has at most 3 paths coming into or leaving it.

Your task is to choose a flower type for each garden such that, for any two gardens connected by a path, they have different types of flowers.

Return any such choice as an array answer, where answer[i] is the type of flower planted in the (i+1)th garden. The flower types are denoted 1, 2, 3, or 4. It is guaranteed that an answer exists.

Example 1:

Input: n = 3, paths = [[1,2],[2,3],[3,1]]

Output: [1,2,3]

Explanation: Gardens 1 and 2 have different types. Gardens 2 and 3 have different types. Gardens 3 and 1 have different types. Hence, [1,2,3] is a valid answer. Other valid answers include [1,2,4], [1,4,2], and [3,2,1].

Example 2:

Input: n = 4, paths = [[1,2],[3,4]]

Output: [1,2,1,2]

Example 3:

Input: n = 4, paths = [[1,2],[2,3],[3,4],[4,1],[1,3],[2,4]]

Output: [1,2,3,4]

Constraints:

- 1 <= n <= 10^4
- 0 <= paths.length <= 2 * 10^4
- paths[i].length == 2
- 1 <= x_i, y_i <= n
- x_i != y_i
- Every garden has at most 3 paths coming into or leaving it.


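Source: LeetCode (力扣)

Link: https://leetcode.cn/problems/flower-planting-with-no-adjacent

Copyright belongs to LeetCode (领扣网络). For commercial reprints, please contact them for official authorization; for non-commercial reprints, please cite the source.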

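The idea is graph coloring (DFS + coloring). Every garden must get a flower, so the whole graph has to be covered; in practice one pass over the gardens in index order is enough, without any recursion. I first build the graph with a HashMap from each garden to the set of its neighbors. Then, for each garden, a boolean array of length 5 records which flower types its neighbors already use. The length is 5 because the types are 1 to 4, and index 0 conveniently absorbs neighbors that have not been planted yet (res[j] == 0). Since every garden has at most 3 neighbors, at most 3 of the 4 types can be blocked, so a free type always exists and this greedy pass never gets stuck. Note that the garden numbers in paths are 1-based and must be decremented by 1 when building the graph. See the code comments for the rest.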

Time: O(V + E)

Space: O(n) (each garden has at most 3 neighbors, so the adjacency sets hold O(n) entries)

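Java implementation

```java
import java.util.*;

class Solution {
    public int[] gardenNoAdj(int n, int[][] paths) {
        // Build the graph: garden index -> set of neighboring gardens (0-based)
        HashMap<Integer, Set<Integer>> g = new HashMap<>();
        for (int i = 0; i < n; i++) {
            g.put(i, new HashSet<>());
        }
        for (int[] p : paths) {
            int from = p[0] - 1;
            int to = p[1] - 1;
            g.get(from).add(to);
            g.get(to).add(from);
        }
        // res[i] is the flower type planted in garden i (0 = not planted yet)
        int[] res = new int[n];
        for (int i = 0; i < n; i++) {
            // Mark the flower types already used by neighboring gardens
            boolean[] used = new boolean[5];
            for (int j : g.get(i)) {
                used[res[j]] = true;
            }
            // Plant the first flower type that no neighbor uses
            for (int c = 1; c <= 4; c++) {
                if (!used[c]) {
                    res[i] = c;
                    break;
                }
            }
        }
        return res;
    }
}
```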
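To sanity-check the greedy argument, here is a minimal Python sketch of the same first-free-type idea, plus a small validity checker. The names garden_no_adj and is_valid are hypothetical helpers, not part of the LeetCode template.

```python
from typing import List


def garden_no_adj(n: int, paths: List[List[int]]) -> List[int]:
    """Greedy coloring: give each garden the first flower type (1-4) no neighbor uses."""
    # Adjacency list, converting 1-based garden labels to 0-based indices
    graph = [[] for _ in range(n)]
    for x, y in paths:
        graph[x - 1].append(y - 1)
        graph[y - 1].append(x - 1)

    res = [0] * n  # 0 means "not planted yet"
    for i in range(n):
        used = {res[j] for j in graph[i]}  # types taken by neighbors (may include 0)
        # At most 3 neighbors, so at least one of the 4 types is always free
        res[i] = next(c for c in range(1, 5) if c not in used)
    return res


def is_valid(n: int, paths: List[List[int]], answer: List[int]) -> bool:
    """Check every garden has a type in 1..4 and no path joins two equal types."""
    return (len(answer) == n
            and all(1 <= a <= 4 for a in answer)
            and all(answer[x - 1] != answer[y - 1] for x, y in paths))


if __name__ == "__main__":
    examples = [
        (3, [[1, 2], [2, 3], [3, 1]]),
        (4, [[1, 2], [3, 4]]),
        (4, [[1, 2], [2, 3], [3, 4], [4, 1], [1, 3], [2, 4]]),
    ]
    for n, paths in examples:
        ans = garden_no_adj(n, paths)
        print(ans, is_valid(n, paths, ans))
```

On the three examples above this returns [1, 2, 3], [1, 2, 1, 2] and [1, 2, 3, 4], matching the expected outputs.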

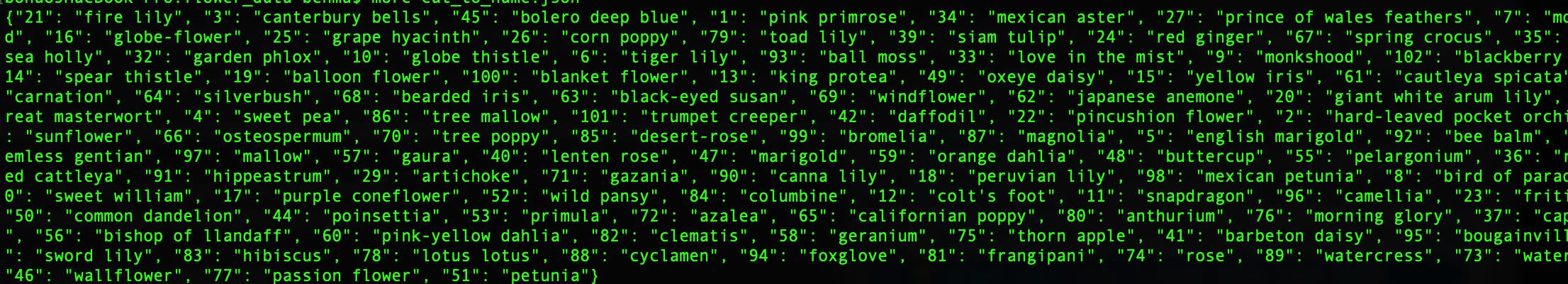

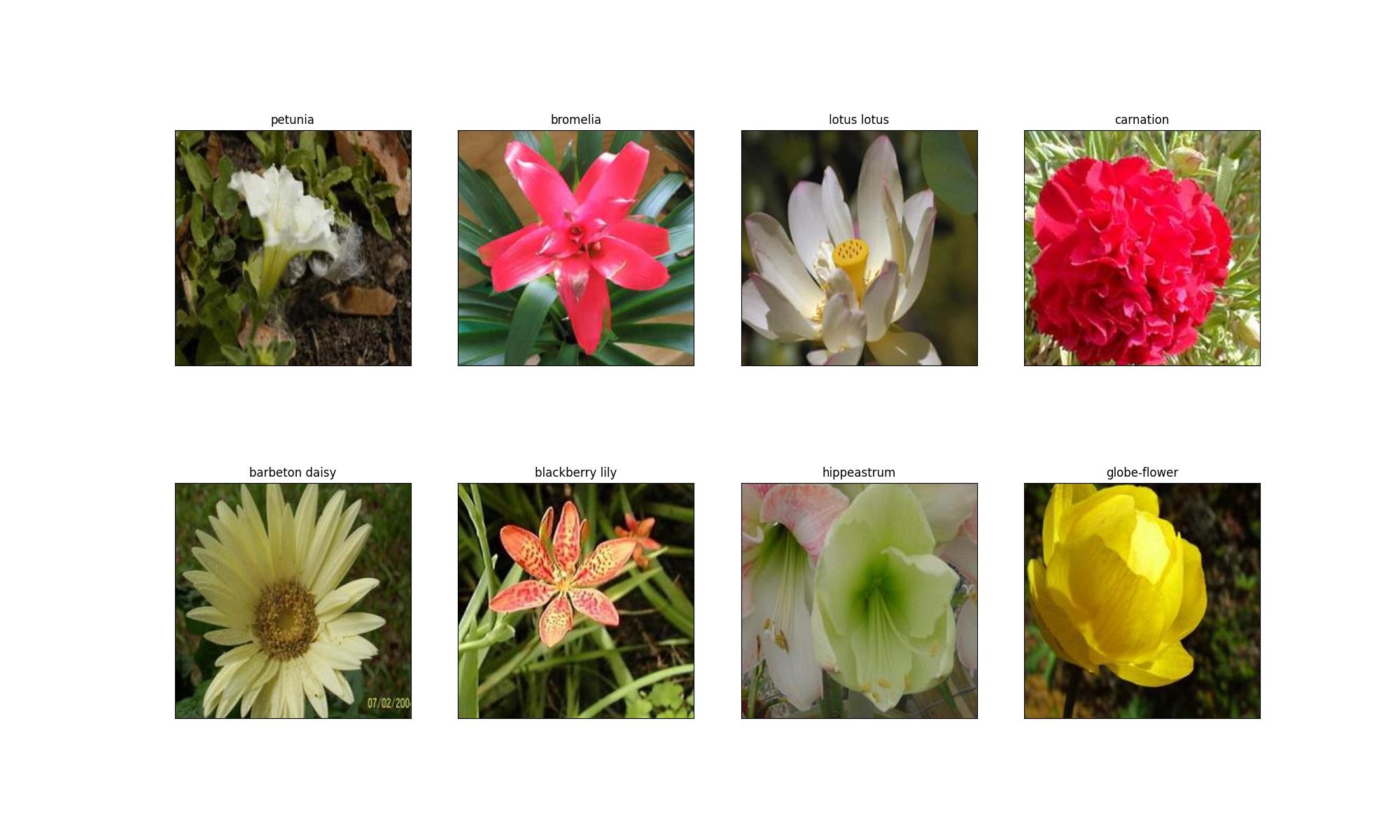

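Training a flower image classification model based on ResNet (p31-p37)

Overview

This article is part of my notes series on learning PyTorch from Bilibili videos. There are many articles online by experts on image classification with ResNet, but they usually gloss over the image data and just give a download link. So I followed a Zhihu article and prepared the dataset from scratch.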

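1. Dataset preparation

The dataset consists of 102 categories of flowers common in the United Kingdom. Each category contains between 40 and 258 images.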

Visual Geometry Group - University of Oxford

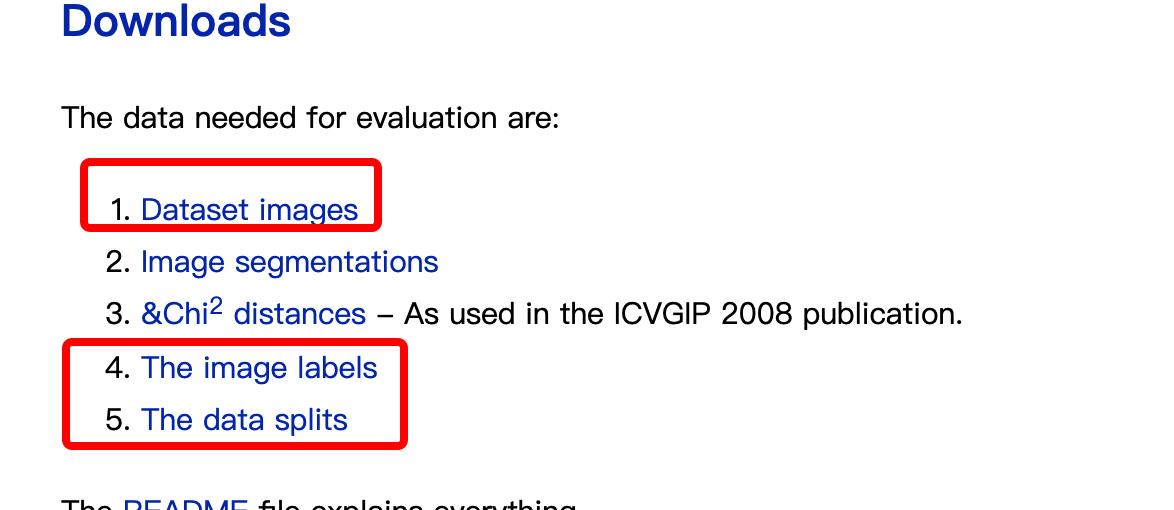

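These are items 1, 4 and 5 circled in red. Item 1 is the archive of the 8,000+ images; items 4 and 5 (the two .mat files below) can be downloaded with wget and copied into the project folder.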

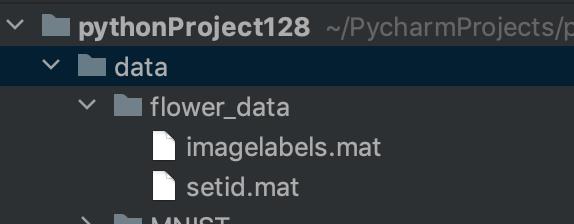

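imagelabels.mat

8189 columns in total; the number in each column is the class label of the corresponding image.

setid.mat

- trnid field: 1020 columns in total (10 images for each of the 102 classes); each number is an image ID.

- valid field: 1020 columns in total (10 images for each of the 102 classes); each number is an image ID.

- tstid field: 6149 columns in total; the number of images per class varies; each number is an image ID.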
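Since 1020 + 1020 + 6149 = 8189, the three splits should together cover every image exactly once. A minimal NumPy sketch of that sanity check, which also performs the same 1-based to 0-based shift as the script below. check_splits is a hypothetical helper, and the toy IDs are made up; they stand in for setid['trnid'][0], setid['valid'][0] and setid['tstid'][0].

```python
import numpy as np


def check_splits(trnid, valid, tstid, num_images):
    """Shift 1-based image IDs to 0-based and verify the three splits
    are disjoint and together cover every image exactly once."""
    splits = [np.asarray(s).ravel() - 1 for s in (trnid, valid, tstid)]
    all_ids = np.concatenate(splits)
    if len(np.unique(all_ids)) != len(all_ids):
        raise ValueError("splits overlap")
    if not np.array_equal(np.sort(all_ids), np.arange(num_images)):
        raise ValueError("some images are missing from the splits")
    return splits


# Toy IDs for illustration only; the real arrays come from setid.mat
toy_train, toy_val, toy_test = check_splits([1, 4], [2, 5], [3, 6, 7], num_images=7)
print(toy_train, toy_val, toy_test)
```

With the real file this would be called as check_splits(setid['trnid'][0], setid['valid'][0], setid['tstid'][0], num_images=8189).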

```python
import os

import numpy as np
import scipy.io  # used to load .mat files
from PIL import Image

######## class label of every image, shifted to 0-based ########
labels = scipy.io.loadmat('./data/flower_data/imagelabels.mat')
labels = np.array(labels['labels'][0]) - 1
print("labels:", labels)

######## flower dataset: train / test / valid image IDs (0-based) ########
setid = scipy.io.loadmat('./data/flower_data/setid.mat')
validation = np.array(setid['valid'][0]) - 1
np.random.shuffle(validation)
train = np.array(setid['trnid'][0]) - 1
np.random.shuffle(train)
test = np.array(setid['tstid'][0]) - 1
np.random.shuffle(test)

######## absolute paths of all images, sorted ascending so index i is image i+1 ########
src_dir = "/Users/benmu/Downloads/jpg"
# Keep only .jpg files: a stray file such as .DS_Store would shift every index by one
flower_dir = sorted(os.path.join(src_dir, img) for img in os.listdir(src_dir) if img.endswith(".jpg"))


def export_split(ids, des_folder):
    """Resize the images in `ids` to 256x256 and save them under des_folder/<class number>/."""
    for tid in ids:
        path = flower_dir[tid]
        img = Image.open(path)
        # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter
        img = img.resize((256, 256), Image.LANCZOS)
        label = labels[tid] + 1  # back to the 1-based class number used as the folder name
        class_path = os.path.join(des_folder, str(label))
        os.makedirs(class_path, exist_ok=True)  # create the class folder if it does not exist
        despath = os.path.join(class_path, os.path.basename(path))
        print("despath:", despath)
        img.save(despath)


des_root = "/Users/benmu/PycharmProjects/pythonProject128/data/flower_data"
export_split(train, os.path.join(des_root, "train"))
export_split(validation, os.path.join(des_root, "validation"))
export_split(test, os.path.join(des_root, "test"))
```

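Result after sorting the images into class folders:

Here all images are resized to 256x256. Common models expect this size or 224x224. You could also skip the resize, since the data-augmentation step later takes care of it.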
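For the Resize(256) + CenterCrop(224) pair used in the augmentation step, the crop window on a 256x256 image can be worked out by hand. A small sketch, assuming the offsets are rounded with int(round(...)) as torchvision's center_crop does; center_crop_box is a hypothetical helper, not a torchvision function.

```python
def center_crop_box(width, height, crop_w, crop_h):
    """Return (left, top, right, bottom) of a centered crop_w x crop_h window.
    Offset rounding is assumed to match torchvision's center_crop."""
    left = int(round((width - crop_w) / 2.0))
    top = int(round((height - crop_h) / 2.0))
    return left, top, left + crop_w, top + crop_h


# A 256x256 image cropped to 224x224
print(center_crop_box(256, 256, 224, 224))
```

So a 256x256 image loses 16 pixels on each side, keeping the central 224x224 region.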

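train and validation each contain 1020 images and test contains 6149; you can adjust the split yourself. I simply renamed test to train, so the 6149 test images are used for training. The loader below reads from folders named train and valid, so the validation folder has to be named to match.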

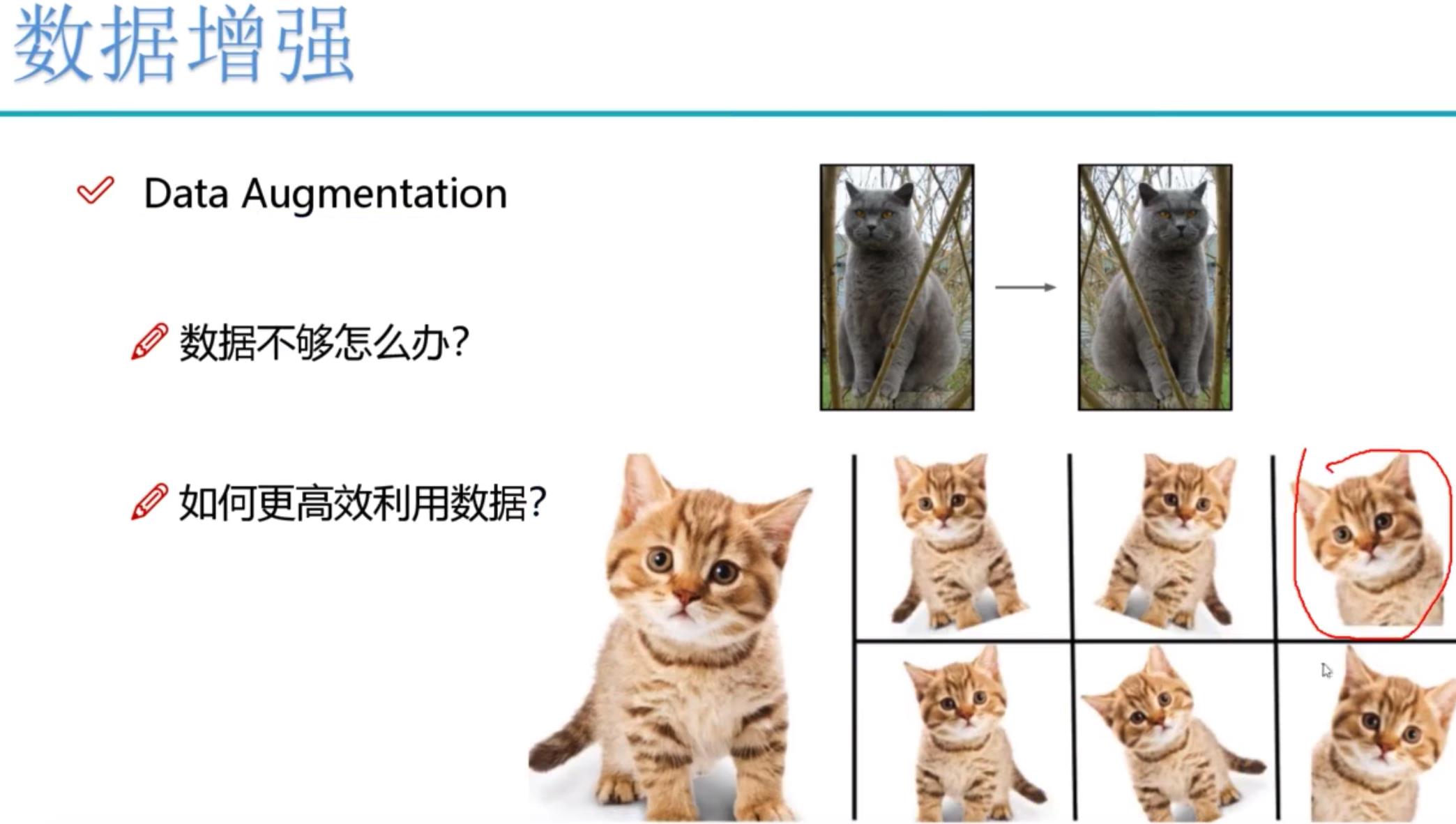

Data augmentation:

The framework already implements this, so there is no need to process the images separately with OpenCV.

```python
import os

import torch
from torchvision import datasets, transforms

data_dir = './data/flower_data/'
train_dir = data_dir + '/train'
valid_dir = data_dir + '/valid'

# Data augmentation
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomRotation(45),  # random rotation between -45 and 45 degrees
        transforms.CenterCrop(224),  # crop the central 224x224 region
        transforms.RandomHorizontalFlip(p=0.5),  # horizontal flip with probability p
        transforms.RandomVerticalFlip(p=0.5),  # vertical flip with probability p
        transforms.ColorJitter(brightness=0.2, contrast=0.1, saturation=0.1, hue=0.1),  # brightness, contrast, saturation, hue
        transforms.RandomGrayscale(p=0.025),  # convert to grayscale with probability 0.025 (2.5%)
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),  # per-channel mean, std
    ]),
    'valid': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ]),
}
```

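Augmentation is applied only to the training set: random rotation, cropping, flipping and color jitter, followed by normalization. The validation set is not rotated or otherwise augmented; it is only resized, center-cropped and normalized.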

```python
batch_size = 8

# Arguments: the folder path and the transform to apply
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x]) for x in ['train', 'valid']}
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=batch_size, shuffle=True)
               for x in ['train', 'valid']}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'valid']}
class_names = image_datasets['train'].classes

print(image_datasets)
```

Printing image_datasets shows:

```
{'train': Dataset ImageFolder
    Number of datapoints: 6149
    Root location: ./data/flower_data/train
    StandardTransform
Transform: Compose(
               RandomRotation(degrees=[-45.0, 45.0], interpolation=nearest, expand=False, fill=0)
               CenterCrop(size=(224, 224))
               RandomHorizontalFlip(p=0.5)
               RandomVerticalFlip(p=0.5)
               ColorJitter(brightness=[0.8, 1.2], contrast=[0.9, 1.1], saturation=[0.9, 1.1], hue=[-0.1, 0.1])
               RandomGrayscale(p=0.025)
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           ), 'valid': Dataset ImageFolder
    Number of datapoints: 1020
    Root location: ./data/flower_data/valid
    StandardTransform
Transform: Compose(
               Resize(size=256, interpolation=bilinear, max_size=None, antialias=None)
               CenterCrop(size=(224, 224))
               ToTensor()
               Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
           )}
```

The mapping from labels to actual flower names is stored in cat_to_name.json.
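One detail worth checking when mapping labels to names: ImageFolder assigns class indices by sorting the folder names as plain strings, so with folders 1 to 102 the folder "10" gets index 1, not 9. That is why the display code goes through class_names before looking up cat_to_name. A minimal sketch; folder_classes and label_to_name are hypothetical helpers, and the placeholder names stand in for the real contents of cat_to_name.json.

```python
def folder_classes(folder_names):
    """Mimic ImageFolder's class ordering: folder names sorted as strings."""
    return sorted(folder_names)


def label_to_name(label_idx, class_names, cat_to_name):
    """DataLoader label index -> folder name -> flower name."""
    return cat_to_name[str(int(class_names[label_idx]))]


demo_class_names = folder_classes(str(i) for i in range(1, 103))
print(demo_class_names[:4])

# Placeholder mapping for illustration; the real one is loaded from cat_to_name.json
demo_cat_to_name = {str(i): "flower-{}".format(i) for i in range(1, 103)}
print(label_to_name(1, demo_class_names, demo_cat_to_name))
```

Here label index 1 maps to folder "10", so the lookup returns the placeholder "flower-10".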

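Displaying the data

Note that the tensor data has to be converted to NumPy format, and the normalization has to be undone.

```python
import json

import matplotlib.pyplot as plt
import numpy as np

# Label -> flower-name mapping; the file location is assumed, adjust it to where cat_to_name.json is stored
with open('cat_to_name.json') as f:
    cat_to_name = json.load(f)


def im_convert(tensor):
    image = tensor.to("cpu").clone().detach()
    image = image.numpy().squeeze()
    # The tensor is C*H*W; matplotlib needs H*W*C
    image = image.transpose(1, 2, 0)
    # Undo Normalize: multiply by std, then add the mean
    image = image * np.array((0.229, 0.224, 0.225)) + np.array((0.485, 0.456, 0.406))
    # clip: values below 0 become 0, values above 1 become 1
    image = image.clip(0, 1)
    return image


fig = plt.figure(figsize=(20, 12))
columns = 4
rows = 2

dataiter = iter(dataloaders['valid'])
inputs, classes = next(dataiter)  # DataLoader iterators no longer provide .next()

for idx in range(columns * rows):
    ax = fig.add_subplot(rows, columns, idx + 1, xticks=[], yticks=[])
    ax.set_title(cat_to_name[str(int(class_names[classes[idx]]))])
    plt.imshow(im_convert(inputs[idx]))
plt.show()
```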
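The de-normalization in im_convert is just the inverse of transforms.Normalize, which computes (x - mean) / std per channel. A NumPy sketch of the round trip on a random fake image; NumPy arrays stand in for tensors here, and normalize_chw and denormalize_to_hwc are hypothetical names.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])


def normalize_chw(img_chw):
    """What Normalize does: (x - mean) / std per channel on a C x H x W array."""
    return (img_chw - MEAN[:, None, None]) / STD[:, None, None]


def denormalize_to_hwc(img_chw):
    """The im_convert inverse: C x H x W -> H x W x C, then x * std + mean, clipped to [0, 1]."""
    img = img_chw.transpose(1, 2, 0)
    return np.clip(img * STD + MEAN, 0, 1)


rng = np.random.default_rng(0)
original = rng.random((3, 4, 5))  # fake 3-channel 4x5 image with values in [0, 1)
restored = denormalize_to_hwc(normalize_chw(original))
print(np.allclose(restored, original.transpose(1, 2, 0)))
```

Because the fake pixel values already lie in [0, 1), the clip changes nothing and the round trip recovers the original image.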

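2. Loading a model provided by torchvision.models

The goal of transfer learning is to use the weights and biases of an existing model as the initial parameters for our own, so that our model starts out as close as possible to the existing one.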


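What gets trained? There are usually two strategies: A, keep training the whole network starting from the pretrained weights; B, freeze the pretrained layers and only replace (and train) the fully connected layer.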
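To make the difference between strategies A and B concrete, here is a sketch with a hypothetical toy network whose layer sizes are made up and stand in for a pretrained backbone. It counts how many parameters the optimizer would update in each case.

```python
import torch.nn as nn


def count_trainable(model):
    """Number of parameters with requires_grad=True, i.e. the ones that get updated."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


def build_toy(num_classes, freeze_backbone):
    """Hypothetical tiny stand-in for a pretrained network: a 'backbone' plus a new head."""
    backbone = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 8))
    if freeze_backbone:  # strategy B: keep the pretrained weights fixed
        for p in backbone.parameters():
            p.requires_grad = False
    head = nn.Linear(8, num_classes)  # newly created layers have requires_grad=True by default
    return nn.Sequential(backbone, head)


full = build_toy(102, freeze_backbone=False)   # strategy A: fine-tune everything
frozen = build_toy(102, freeze_backbone=True)  # strategy B: train only the head
print(count_trainable(full), count_trainable(frozen))
```

With a real ResNet the same count_trainable helper can be applied to model_ft.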

2.1 Whether to train on the GPU

```python
import torch

# Whether to train on the GPU
train_on_gpu = torch.cuda.is_available()
if not train_on_gpu:
    print('CUDA is not available. Training on CPU ...')
else:
    print('CUDA is available! Training on GPU ...')

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
```

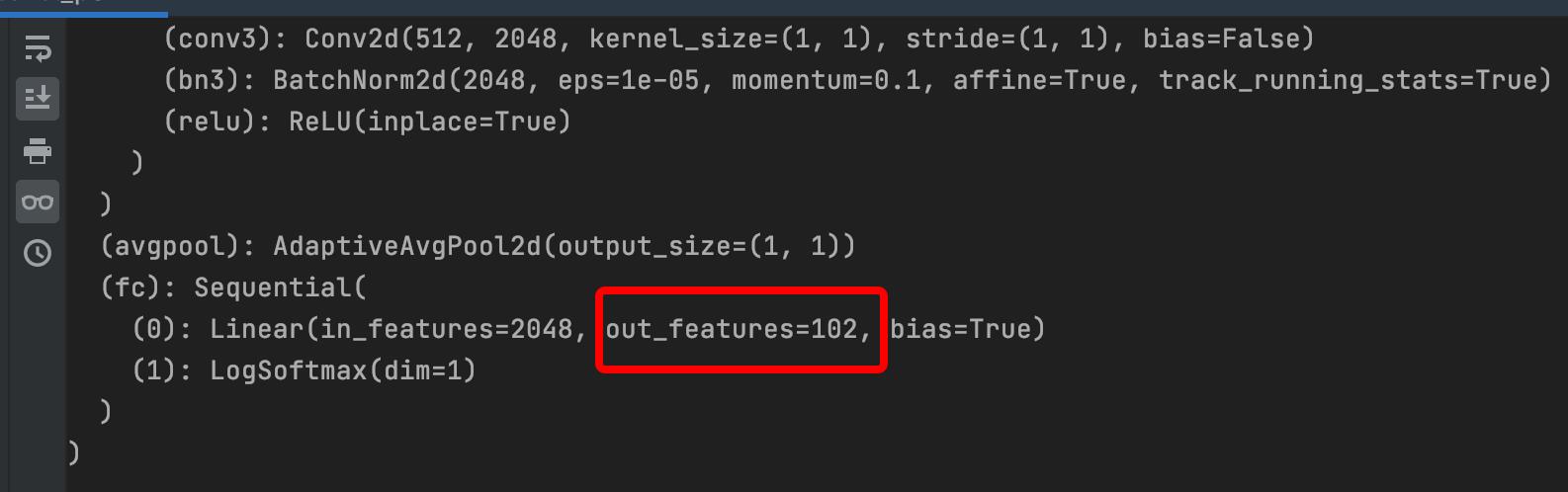

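2.2 Using a pretrained ResNet

```python
model_ft = models.resnet152()
```

My Mac has no CUDA, so it cannot use a GPU, and with 224x224x3 input images resnet152 is probably too heavy to train on it; the code below loads resnet50 instead.

The fully connected layer has out_features=1000 and needs to be changed to our own 102 outputs.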

2.3 Defining the image classification model, following the official PyTorch example

Only resnet and vgg are listed here; the other models are omitted.

```python
import torch.nn as nn
from torchvision import models

model_name = 'resnet'  # many options: ['resnet', 'alexnet', 'vgg', 'squeezenet', 'densenet', 'inception']

# Whether to reuse the features learned by the pretrained model as-is (feature extraction)
feature_extract = True


def set_parameter_requires_grad(model, feature_extracting):
    # Keep the pretrained weights fixed; they will not be trained
    if feature_extracting:
        for param in model.parameters():
            param.requires_grad = False


def initialize_model(model_name, num_classes, feature_extract, use_pretrained=True):
    # Pick the model; each architecture is initialized slightly differently
    model_ft = None
    input_size = 0

    if model_name == "resnet":
        # ResNet-50
        model_ft = models.resnet50(pretrained=use_pretrained)  # downloads the weights locally
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.fc.in_features
        model_ft.fc = nn.Sequential(nn.Linear(num_ftrs, num_classes),  # output = our 102 classes
                                    nn.LogSoftmax(dim=1))  # take the log of the softmax output
        input_size = 224

    elif model_name == "vgg":
        # VGG16
        model_ft = models.vgg16(pretrained=use_pretrained)
        set_parameter_requires_grad(model_ft, feature_extract)
        num_ftrs = model_ft.classifier[6].in_features
        # No LogSoftmax here, so this branch would pair with nn.CrossEntropyLoss rather than nn.NLLLoss
        model_ft.classifier[6] = nn.Linear(num_ftrs, num_classes)
        input_size = 224

    else:
        raise ValueError("Invalid model name: {}".format(model_name))

    return model_ft, input_size
```

2.4 Choosing which layers to train

```python
model_ft, input_size = initialize_model(model_name, 102, feature_extract, use_pretrained=True)

# Move the model to the GPU (or CPU)
model_ft = model_ft.to(device)

# File used to save the model checkpoint
filename = 'checkpoint.pth'

# Whether to train all layers
params_to_update = model_ft.parameters()
print("Params to learn:")
if feature_extract:
    params_to_update = []
    for name, param in model_ft.named_parameters():
        if param.requires_grad:
            params_to_update.append(param)
            print("\t", name)
else:
    for name, param in model_ft.named_parameters():
        if param.requires_grad:
            print("\t", name)
```

Output:

```
Params to learn:
	 fc.0.weight
	 fc.0.bias
```
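Under feature extraction only the new fully connected layer is trained, which is why only fc.0.weight and fc.0.bias are listed. The arithmetic, assuming the ResNet-50 loaded by initialize_model (its fc.in_features is 2048); linear_param_count is a hypothetical helper.

```python
def linear_param_count(in_features, out_features, bias=True):
    """Parameters in nn.Linear(in_features, out_features): weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


# The replaced ResNet-50 head: 2048 input features -> 102 classes
trainable = linear_param_count(2048, 102)
print(trainable)
```

This gives 208,998 trainable parameters, versus 2,049,000 in the original 1000-class head. In PyTorch the same number can be read off with sum(p.numel() for p in params_to_update).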

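Printing the model shows that the output of the final fully connected layer has been changed to 102.

Note: models.resnet152(pretrained=use_pretrained) downloads the ResNet weights to the local machine.

The file is over 200 MB, so the download may be slow; the weights are cached locally under ~/.cache/torch/hub/checkpoints.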

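2.5 Optimizer setup

```python
import torch.optim as optim

# Optimizer setup: only the parameters selected in 2.4 are updated
optimizer_ft = optim.Adam(params_to_update, lr=1e-2)
# Learning-rate schedule: every 7 epochs the learning rate decays to 1/10 of its previous value
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
```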
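StepLR multiplies the learning rate by gamma once every step_size epochs, i.e. lr = base_lr * gamma ** (epoch // step_size). A plain-Python sketch of that formula; step_lr is a hypothetical helper. It also suggests a likely reason the training log below reports 0.0010000 after the very first epoch: if scheduler.step() is handed the validation loss, as in scheduler.step(epoch_loss), StepLR treats that value (about 10.01) as the epoch number, and 10.01 // 7 is 1.

```python
def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Closed-form StepLR learning rate: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 6, 7, 13, 14):
    print(epoch, step_lr(1e-2, epoch))

# A loss value passed where an epoch is expected
print(step_lr(1e-2, 10.0126))
```

Calling scheduler.step() with no argument once per epoch lets StepLR count the epochs itself.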

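```python
import torch.nn as nn

# The last layer already applies LogSoftmax(), so the loss here is nn.NLLLoss() rather than
# nn.CrossEntropyLoss(), which itself is LogSoftmax() combined with nn.NLLLoss()
criterion = nn.NLLLoss()
```

Here the instructor explained why the loss function is NLLLoss rather than cross-entropy.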
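A quick numerical check of the equivalence stated in the comment: CrossEntropyLoss on raw scores matches NLLLoss on log_softmax outputs. Random logits and labels are used here purely for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 102)           # fake raw scores: batch of 8, 102 classes
targets = torch.randint(0, 102, (8,))  # fake class indices

ce = nn.CrossEntropyLoss()(logits, targets)
nll = nn.NLLLoss()(F.log_softmax(logits, dim=1), targets)
print(torch.allclose(ce, nll))
```

So a model ending in LogSoftmax paired with NLLLoss computes the same loss as a model with a plain Linear output paired with CrossEntropyLoss.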

2.6 Training function

```python
import copy
import time

import torch


def train_model(model, dataloaders, criterion, optimizer, num_epochs=25, is_inception=False, filename=filename):
    # is_inception: whether the network is Inception, which has an auxiliary output
    since = time.time()
    best_acc = 0

    # To resume from a saved checkpoint, uncomment:
    # checkpoint = torch.load(filename)
    # best_acc = checkpoint['best_acc']
    # model.load_state_dict(checkpoint['state_dict'])
    # optimizer.load_state_dict(checkpoint['optimizer'])

    model.to(device)

    val_acc_history = []
    train_acc_history = []
    train_losses = []
    valid_losses = []
    LRs = [optimizer.param_groups[0]['lr']]

    best_model_wts = copy.deepcopy(model.state_dict())

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Training and validation
        for phase in ['train', 'valid']:
            if phase == 'train':
                model.train()  # training mode
            else:
                model.eval()  # evaluation mode

            running_loss = 0.0
            running_corrects = 0

            # Go through all the data
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Reset the gradients
                optimizer.zero_grad()
                # Compute and update gradients only during training
                with torch.set_grad_enabled(phase == 'train'):
                    if is_inception and phase == 'train':
                        outputs, aux_outputs = model(inputs)
                        loss1 = criterion(outputs, labels)
                        loss2 = criterion(aux_outputs, labels)
                        loss = loss1 + 0.4 * loss2
                    else:  # ResNet takes this branch
                        outputs = model(inputs)
                        loss = criterion(outputs, labels)

                    _, preds = torch.max(outputs, 1)

                    # Update the weights in the training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Accumulate the loss and the number of correct predictions
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            epoch_loss = running_loss / len(dataloaders[phase].dataset)
            epoch_acc = running_corrects.double() / len(dataloaders[phase].dataset)

            time_elapsed = time.time() - since
            print('Time elapsed {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep the best model so far
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())
                state = {
                    'state_dict': model.state_dict(),
                    'best_acc': best_acc,
                    'optimizer': optimizer.state_dict(),
                }
                torch.save(state, filename)
            if phase == 'valid':
                val_acc_history.append(epoch_acc)
                valid_losses.append(epoch_loss)
                # StepLR counts epochs itself; passing epoch_loss would be read as an epoch number
                scheduler.step()
            if phase == 'train':
                train_acc_history.append(epoch_acc)
                train_losses.append(epoch_loss)

        print('Optimizer learning rate : {:.7f}'.format(optimizer.param_groups[0]['lr']))
        LRs.append(optimizer.param_groups[0]['lr'])
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:4f}'.format(best_acc))

    # After training, use the best epoch's weights as the final model
    model.load_state_dict(best_model_wts)
    return model, val_acc_history, train_acc_history, valid_losses, train_losses, LRs
```
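The commented-out lines at the top of train_model hint at resuming from checkpoint.pth. A sketch of that step, assuming the checkpoint layout train_model saves ('state_dict', 'best_acc', 'optimizer'); it deliberately does not read a 'mapping' key, because train_model never stores one. load_checkpoint is a hypothetical helper, and a toy nn.Linear stands in for the ResNet.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.optim as optim


def load_checkpoint(model, optimizer, filename):
    """Restore the model and optimizer state saved by train_model; return best_acc."""
    checkpoint = torch.load(filename, map_location="cpu")
    model.load_state_dict(checkpoint['state_dict'])
    optimizer.load_state_dict(checkpoint['optimizer'])
    return checkpoint['best_acc']


# Round trip with a hypothetical toy model
toy_model = nn.Linear(4, 3)
toy_opt = optim.Adam(toy_model.parameters(), lr=1e-2)
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pth")
torch.save({'state_dict': toy_model.state_dict(),
            'best_acc': 0.5,
            'optimizer': toy_opt.state_dict()}, path)

restored_model = nn.Linear(4, 3)  # fresh, differently initialized weights
restored_opt = optim.Adam(restored_model.parameters(), lr=1e-2)
best_acc = load_checkpoint(restored_model, restored_opt, path)
print(best_acc, torch.equal(toy_model.weight, restored_model.weight))
```

With the real model, the same call would be load_checkpoint(model_ft, optimizer_ft, 'checkpoint.pth').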

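Start training:

```python
model_ft, val_acc_history, train_acc_history, valid_losses, train_losses, LRs = train_model(
    model_ft, dataloaders, criterion, optimizer_ft, num_epochs=20, is_inception=(model_name == "inception"))
```

Then my poor computer started whirring: a single epoch took about 19 minutes. You need at least 20 epochs, and the instructor recommends 50. With data at this scale, a low-end computer without a GPU basically doesn't stand a chance.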

```
Epoch 0/19
----------
Time elapsed 16m 29s
train Loss: 9.5165 Acc: 0.3410
Time elapsed 18m 49s
valid Loss: 10.0126 Acc: 0.5216
Optimizer learning rate : 0.0010000
```

Tomorrow I'll find another Windows laptop, set up the environment again, and give it another try.

References:

Flower102 classification by tuning a tensorflow_slim model (基于tensorflow_slim模型调参的flower102鲜花分类过程) - Zhihu
