DNN的BP算法Python简单实现

Posted

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了DNN的BP算法Python简单实现相关的知识,希望对你有一定的参考价值。

BP算法是神经网络的基础,也是最重要的部分。由于误差反向传播的过程中,可能会出现梯度消失或者爆炸,所以需要调整损失函数。在LSTM中,通过sigmoid来实现三个门来解决记忆问题,用tensorflow实现的过程中,需要进行梯度修剪操作,以防止梯度爆炸。RNN的BPTT算法同样存在着这样的问题,所以步数超过5步以后,记忆效果大大下降。LSTM的效果能够支持到30多步数,太长了也不行。如果要求更长的记忆,或者考虑更多的上下文,可以把多个句子的LSTM输出组合起来作为另一个LSTM的输入。下面上传用Python实现的普通DNN的BP算法,激活为sigmoid.

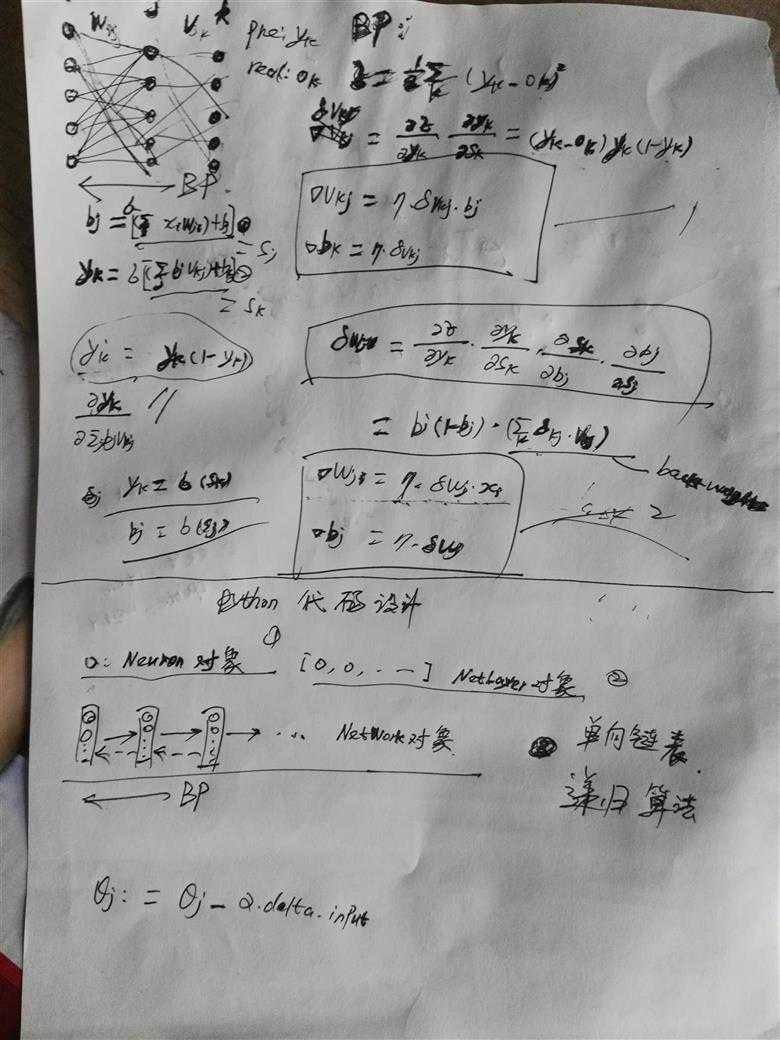

字迹有些潦草,凑合用吧,习惯了手动绘图,个人习惯。后面的代码实现思路是最重要的:每个层有多个节点,层与层之间单向链接(前馈网络),因此数据结构可以设计为单向链表。实现的过程属于典型的递归,递归调用到最后一层后把每一层的back_weights反馈给上一层,直到推导结束。上传代码(未经过优化的代码):

测试代码:

import numpy as np

import NeuralNetWork as nw

if __name__ == ‘__main__‘:

print("test neural network")

data = np.array([[1, 0, 0, 0, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0, 0, 0],

[0, 0, 1, 0, 0, 0, 0, 0],

[0, 0, 0, 1, 0, 0, 0, 0],

[0, 0, 0, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 1, 0, 0],

[0, 0, 0, 0, 0, 0, 1, 0],

[0, 0, 0, 0, 0, 0, 0, 1]])

np.set_printoptions(precision=3, suppress=True)

for i in range(10):

network = nw.NeuralNetWork([8, 20, 8])

# 让输入数据与输出数据相等

network.fit(data, data, learning_rate=0.1, epochs=150)

print("\\n\\n", i, "result")

for item in data:

print(item, network.predict(item))

#NeuralNetWork.py

# encoding: utf-8

#NeuralNetWork.py

import numpy as np;

def logistic(inX):

return 1 / (1+np.exp(-inX))

def logistic_derivative(x):

return logistic(x) * (1 - logistic(x))

class Neuron:

‘‘‘

构建神经元单元,每个单元都有如下属性:1.input;2.output;3.back_weight;4.deltas_item;5.weights.

每个神经元单元更新自己的weights,多个神经元构成layer,形成weights矩阵

‘‘‘

def __init__(self,len_input):

#输入的初始参数,随机取很小的值(<0.1)

self.weights = np.random.random(len_input) * 0.1

#当前实例的输入

self.input = np.ones(len_input)

#对下一层的输出值

self.output = 1.0

#误差项

self.deltas_item = 0.0

# 上一次权重增加的量,记录起来方便后面扩展时可考虑增加冲量

self.last_weight_add = 0

def calculate_output(self,x):

#计算输出值

self.input = x;

self.output = logistic(np.dot(self.weights,self.input))

return self.output

def get_back_weight(self):

#获取反馈差值

return self.weights * self.deltas_item

def update_weight(self,target = 0,back_weight = 0,learning_rate=0.1,layer="OUTPUT"):

#更新权重

if layer == "OUTPUT":

self.deltas_item = (target - self.output) * logistic_derivative(self.input)

elif layer == "HIDDEN":

self.deltas_item = back_weight * logistic_derivative(self.input)

delta_weight = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add #添加冲量

self.weights += delta_weight

self.last_weight_add = delta_weight

class NetLayer:

‘‘‘

网络层封装,管理当前网络层的神经元列表

‘‘‘

def __init__(self,len_node,in_count):

‘‘‘

:param len_node: 当前层的神经元数

:param in_count: 当前层的输入数

‘‘‘

# 当前层的神经元列表

self.neurons = [Neuron(in_count) for _ in range(len_node)];

# 记录下一层的引用,方便递归操作

self.next_layer = None

def calculate_output(self,inX):

output = np.array([node.calculate_output(inX) for node in self.neurons])

if self.next_layer is not None:

return self.next_layer.calculate_output(output)

return output

def get_back_weight(self):

return sum([node.get_back_weight() for node in self.neurons])

def update_weight(self,learning_rate,target):

layer = "OUTPUT"

back_weight = np.zeros(len(self.neurons))

if self.next_layer is not None:

back_weight = self.next_layer.update_weight(learning_rate,target)

layer = "HIDDEN"

for i,node in enumerate(self.neurons):

target_item = 0 if len(target) <= i else target[i]

node.update_weight(target = target_item,back_weight = back_weight[i],learning_rate=learning_rate,layer=layer)

return self.get_back_weight()

class NeuralNetWork:

def __init__(self, layers):

self.layers = []

self.construct_network(layers)

pass

def construct_network(self, layers):

last_layer = None

for i, layer in enumerate(layers):

if i == 0:

continue

cur_layer = NetLayer(layer, layers[i - 1])

self.layers.append(cur_layer)

if last_layer is not None:

last_layer.next_layer = cur_layer

last_layer = cur_layer

def fit(self, x_train, y_train, learning_rate=0.1, epochs=100000, shuffle=False):

‘‘‘‘‘

训练网络, 默认按顺序来训练

方法 1:按训练数据顺序来训练

方法 2: 随机选择测试

:param x_train: 输入数据

:param y_train: 输出数据

:param learning_rate: 学习率

:param epochs:权重更新次数

:param shuffle:随机取数据训练

‘‘‘

indices = np.arange(len(x_train))

for _ in range(epochs):

if shuffle:

np.random.shuffle(indices)

for i in indices:

self.layers[0].calculate_output(x_train[i])

self.layers[0].update_weight(learning_rate, y_train[i])

pass

def predict(self, x):

return self.layers[0].calculate_output(x)

以上是关于DNN的BP算法Python简单实现的主要内容,如果未能解决你的问题,请参考以下文章