Yolov5--从模块解析到网络结构修改(添加注意力机制)

Posted 幼儿园总园长

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Yolov5--从模块解析到网络结构修改(添加注意力机制)相关的知识,希望对你有一定的参考价值。

文章目录

最近在进行yolov5的二次开发,软件开发完毕后才想着对框架进行一些整理和进一步学习,以下将记录一些我的学习记录。

1.模块解析(common.py)

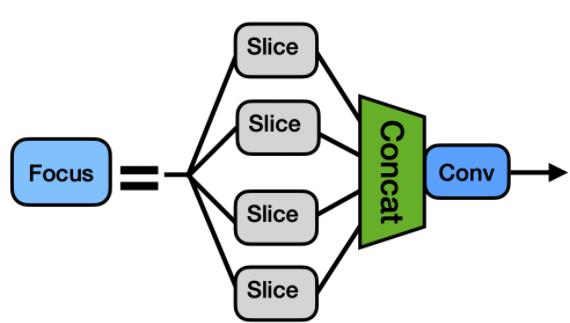

01. Focus模块

作用:下采样

输入:data( 3×640×640 彩色图片)

Focus模块的作用是对图片进行切片,类似于下采样,先将图片变为320×320×12的特征图,再经过3×3的卷积操作,输出通道32,最终变为320×320×32的特征图,是一般卷积计算量的4倍,如此做下采样将无信息丢失。

输出:32×320×320特征图

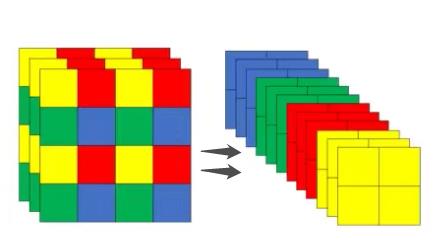

结构图片描述:

图示切分过程,channels变为4倍

代码实现:

class Focus(nn.Module):

# Focus wh information into c-space

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groups

# c1输入,c2输出,s为步长,k为卷积核大小

super(Focus, self).__init__()

self.conv = Conv(c1 * 4, c2, k, s, p, g, act) # 输入channel数量变为4倍

def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)

# 进行切分,再进行concat

return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))

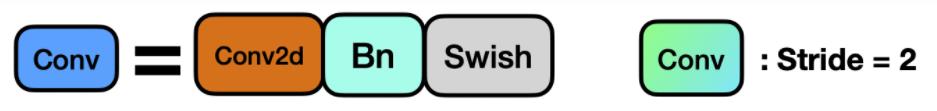

02. CONV模块

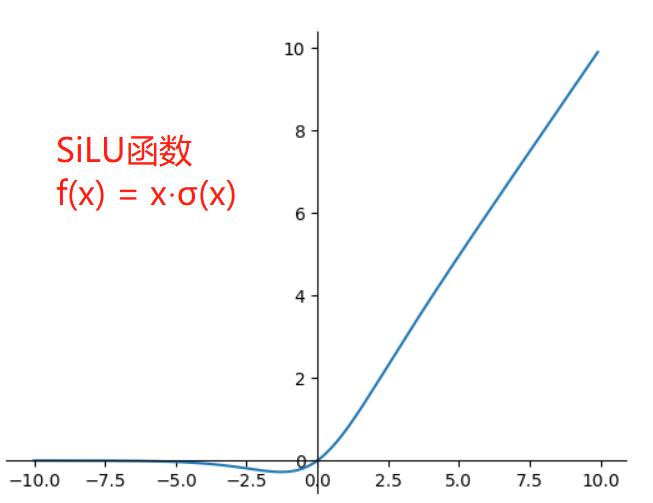

- 作者在这个基本卷积模块中封装了三个功能,包括卷积(Conv2d)、BN以及Activate函数(在新版yolov5中,作者采用了SiLU函数作为激活函数),同时autopad(k, p)实现了自动计算padding的效果。

- 总的来说Conv实现了将输入特征经过卷积层,激活函数,归一化层,得到输出层。

输出:输入大小的一半

结构图片描述:

代码实现:

class Conv(nn.Module):

# Standard convolution

# ch_in, ch_out, kernel, stride, padding, groups

def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):

# k为卷积核大小,s为步长

# g即group,当g=1时,相当于普通卷积,当g>1时,进行分组卷积。

# 分组卷积相对与普通卷积减少了参数量,提高训练效率

super(Conv, self).__init__()

self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = nn.Hardswish() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())

def forward(self, x):

return self.act(self.bn(self.conv(x)))

def fuseforward(self, x):

return self.act(self.conv(x))

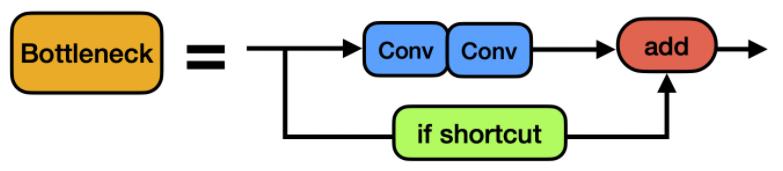

03.Bottleneck模块:

- 先将channel 数减小再扩大(默认减小到一半),具体做法是先进行1×1卷积将channel减小一半,再通过3×3卷积将通道数加倍,并获取特征(共使用两个标准卷积模块),其输入与输出的通道数是不发生改变的。

- shortcut参数控制是否进行残差连接(使用ResNet)。

- 在yolov5的backbone中的Bottleneck都默认使shortcut为True,在head中的Bottleneck都不使用shortcut。

- 与ResNet对应的,使用add而非concat进行特征融合,使得融合后的特征数不变。

结构图片描述:

代码实现:

class Bottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5): # ch_in, ch_out, shortcut, groups, expansion

# 特别参数

# shortcut:是否给bottleneck结构部添加shortcut连接,添加后即为ResNet模块;

# e,即expansion。bottleneck结构中的瓶颈部分的通道膨胀率,默认使用0.5即变为输入的1/2

super(Bottleneck, self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

def forward(self, x):

return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))

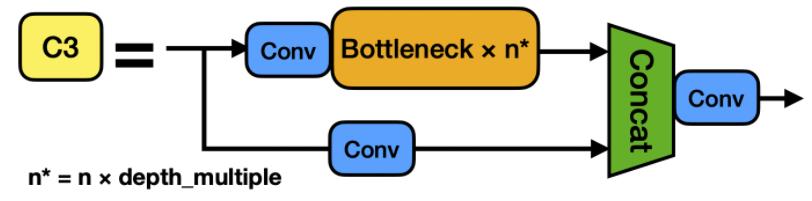

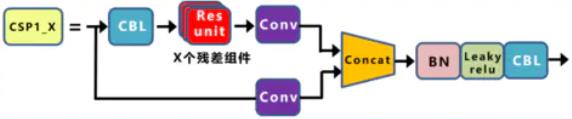

04.C3模块

- 在新版yolov5中,作者将BottleneckCSP(瓶颈层)模块转变为了C3模块,其结构作用基本相同均为CSP架构,只是在修正单元的选择上有所不同,其包含了3个标准卷积层以及多个Bottleneck模块(数量由配置文件.yaml的n和depth_multiple参数乘积决定)

- 从下图可以看出,C3相对于BottleneckCSP模块不同的是,经历过残差输出后的Conv模块被去掉了,concat后的标准卷积模块中的激活函数也由LeakyRelu变为了SiLU(同上)。

- 该模块是对残差特征进行学习的主要模块,其结构分为两支,一支使用了上述指定多个Bottleneck堆叠和3个标准卷积层,另一支仅经过一个基本卷积模块,最后将两支进行concat操作。

结构图片描述:

C3模块:

BottleNeckCSP模块:

代码实现:

class C3(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super(C3, self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

def forward(self, x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))

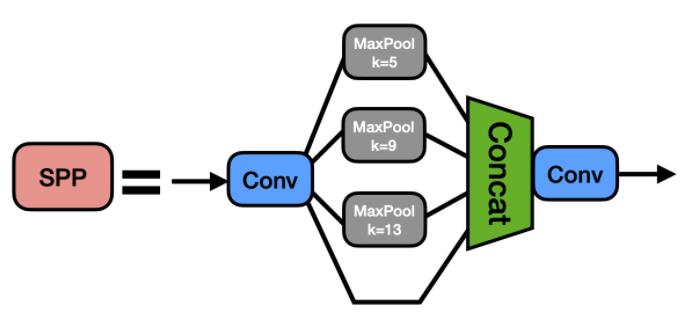

05.SPP模块

- SPP是空间金字塔池化的简称,其先通过一个标准卷积模块将输入通道减半,然后分别做kernel-size为5,9,13的maxpooling(对于不同的核大小,padding是自适应的)。

- 对三次最大池化的结果与未进行池化操作的数据进行concat,最终合并后channel数是原来的2倍。

结构图片描述:

代码实现:

class SPP(nn.Module):

# Spatial pyramid pooling layer used in YOLOv3-SPP

def __init__(self, c1, c2, k=(5, 9, 13)):

super(SPP, self).__init__()

c_ = c1 // 2 # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)

self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

def forward(self, x):

x = self.cv1(x)

return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))

2.为yolov5添加CBAM注意力机制

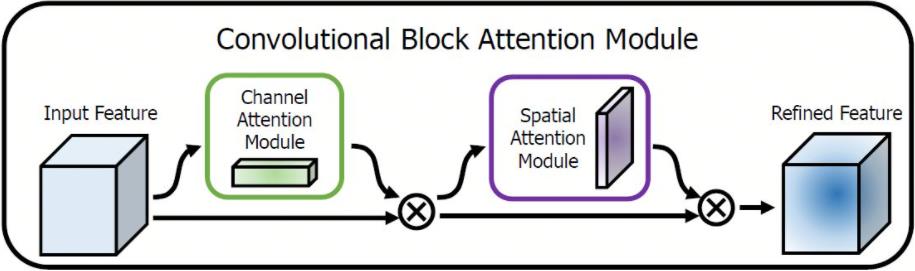

01.CBAM机制

采用CBAM混合域注意力机制,同时对通道注意力和空间注意力进行评价打分。CBAM 包含2个子模块,Channel Attention Module(CAM)和Spartial Attention Module (SAM) 分别实现通道和空间的Attention。

此处参考1. 注意力机制参考链接

2. CBAM参考链接

02.具体步骤

①.以yolov5l结构为例(其实只是深度和宽度因子不同),修改yolov5l.yaml,将C3模块修改为添加注意力机制后的模块CBAMC3,参数不变即可。

②.在common.py中添加CBAMC3模块

class ChannelAttention(nn.Module):

def __init__(self, in_planes, ratio=16):

super(ChannelAttention, self).__init__()

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.max_pool = nn.AdaptiveMaxPool2d(1)

self.f1 = nn.Conv2d(in_planes, in_planes // ratio, 1, bias=False)

self.relu = nn.ReLU()

self.f2 = nn.Conv2d(in_planes // ratio, in_planes, 1, bias=False)

# 写法二,亦可使用顺序容器

# self.sharedMLP = nn.Sequential(

# nn.Conv2d(in_planes, in_planes // ratio, 1, bias=False), nn.ReLU(),

# nn.Conv2d(in_planes // rotio, in_planes, 1, bias=False))

self.sigmoid = nn.Sigmoid()

def forward(self, x):

avg_out = self.f2(self.relu(self.f1(self.avg_pool(x))))

max_out = self.f2(self.relu(self.f1(self.max_pool(x))))

out = self.sigmoid(avg_out + max_out)

return torch.mul(x, out)

class SpatialAttention(nn.Module):

def __init__(self, kernel_size=7):

super(SpatialAttention, self).__init__()

assert kernel_size in (3, 7), 'kernel size must be 3 or 7'

padding = 3 if kernel_size == 7 else 1

self.conv = nn.Conv2d(2, 1, kernel_size, padding=padding, bias=False)

self.sigmoid = nn.Sigmoid()

def forward(self, x):

avg_out = torch.mean(x, dim=1, keepdim=True)

max_out, _ = torch.max(x, dim=1, keepdim=True)

out = torch.cat([avg_out, max_out], dim=1)

out = self.sigmoid(self.conv(out))

return torch.mul(x, out)

class CBAMC3(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super(CBAMC3, self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1)

self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

self.channel_attention = ChannelAttention(c2, 16)

self.spatial_attention = SpatialAttention(7)

# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

def forward(self, x):

# 将最后的标准卷积模块改为了注意力机制提取特征

return self.spatial_attention(

self.channel_attention(self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))))

③.修改yolo.py,添加额外的判断语句

if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, DWConv, MixConv2d, Focus, CrossConv, BottleneckCSP,

C3, C3TR, CBAMC3]:

c1, c2 = ch[f], args[0]

if c2 != no: # if not output

c2 = make_divisible(c2 * gw, 8)

args = [c1, c2, *args[1:]]

if m in [BottleneckCSP, C3, C3TR, CBAMC3]:

args.insert(2, n) # number of repeats

n = 1

elif m is nn.BatchNorm2d:

args = [ch[f]]

elif m is Concat:

c2 = sum([ch[x] for x in f])

elif m is Detect:

args.append([ch[x] for x in f])

if isinstance(args[1], int): # number of anchors

args[1] = [list(range(args[1] * 2))] * len(f)

elif m is Contract:

c2 = ch[f] * args[0] ** 2

elif m is Expand:

c2 = ch[f] // args[0] ** 2

else:

c2 = ch[f]

至此,在训练模型时调用我们修改后的yolov5l.yaml,即可在验证注意力机制在yolov5模型上的有效性。

手把手带你Yolov5 (v6.1)添加注意力机制(二)(在C3模块中加入注意力机制)

之前在《手把手带你Yolov5 (v6.1)添加注意力机制(并附上30多种顶会Attention原理图)》文章中已经介绍过了如何在主干网络里添加单独的注意力层,今天这篇将会介绍如何在C3模块里面加入注意力层。

文章目录

1.添加方式介绍

1.1 C3SE

第一步;要把注意力结构代码放到common.py文件中,以C3SE举例,将这段代码粘贴到common.py文件中

class SEBottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5, ratio=16): # ch_in, ch_out, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

# self.se=SE(c1,c2,ratio)

self.avgpool = nn.AdaptiveAvgPool2d(1)

self.l1 = nn.Linear(c1, c1 // ratio, bias=False)

self.relu = nn.ReLU(inplace=True)

self.l2 = nn.Linear(c1 // ratio, c1, bias=False)

self.sig = nn.Sigmoid()

def forward(self, x):

x1 = self.cv2(self.cv1(x))

b, c, _, _ = x.size()

y = self.avgpool(x1).view(b, c)

y = self.l1(y)

y = self.relu(y)

y = self.l2(y)

y = self.sig(y)

y = y.view(b, c, 1, 1)

out = x1 * y.expand_as(x1)

# out=self.se(x1)*x1

return x + out if self.add else out

class C3SE(C3):

# C3 module with SEBottleneck()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(SEBottleneck(c_, c_, shortcut) for _ in range(n)))

第二步;找到yolo.py文件里的parse_model函数,将类名加入进去

第三步;修改配置文件(我这里拿yolov5s.yaml举例子),将C3层替换为我们新引入的C3SE层

yolov5s_C3SE.yaml

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3SE, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3SE, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3SE, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3SE, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

其它注意力机制同理

1.2 C3CA

class CABottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5, ratio=32): # ch_in, ch_out, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

# self.ca=CoordAtt(c1,c2,ratio)

self.pool_h = nn.AdaptiveAvgPool2d((None, 1))

self.pool_w = nn.AdaptiveAvgPool2d((1, None))

mip = max(8, c1 // ratio)

self.conv1 = nn.Conv2d(c1, mip, kernel_size=1, stride=1, padding=0)

self.bn1 = nn.BatchNorm2d(mip)

self.act = h_swish()

self.conv_h = nn.Conv2d(mip, c2, kernel_size=1, stride=1, padding=0)

self.conv_w = nn.Conv2d(mip, c2, kernel_size=1, stride=1, padding=0)

def forward(self, x):

x1=self.cv2(self.cv1(x))

n, c, h, w = x.size()

# c*1*W

x_h = self.pool_h(x1)

# c*H*1

# C*1*h

x_w = self.pool_w(x1).permute(0, 1, 3, 2)

y = torch.cat([x_h, x_w], dim=2)

# C*1*(h+w)

y = self.conv1(y)

y = self.bn1(y)

y = self.act(y)

x_h, x_w = torch.split(y, [h, w], dim=2)

x_w = x_w.permute(0, 1, 3, 2)

a_h = self.conv_h(x_h).sigmoid()

a_w = self.conv_w(x_w).sigmoid()

out = x1 * a_w * a_h

# out=self.ca(x1)*x1

return x + out if self.add else out

class C3CA(C3):

# C3 module with CABottleneck()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(CABottleneck(c_, c_,shortcut) for _ in range(n)))

1.3 C3CBAM

class CBAMBottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5,ratio=16,kernel_size=7): # ch_in, ch_out, shortcut, groups, expansion

super(CBAMBottleneck,self).__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

self.channel_attention = ChannelAttention(c2, ratio)

self.spatial_attention = SpatialAttention(kernel_size)

#self.cbam=CBAM(c1,c2,ratio,kernel_size)

def forward(self, x):

x1 = self.cv2(self.cv1(x))

out = self.channel_attention(x1) * x1

# print('outchannels:'.format(out.shape))

out = self.spatial_attention(out) * out

return x + out if self.add else out

class C3CBAM(C3):

# C3 module with CBAMBottleneck()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(CBAMBottleneck(c_, c_, shortcut) for _ in range(n)))

1.4 C3ECA

class ECABottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, e=0.5, ratio=16, k_size=3): # ch_in, ch_out, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c_, c2, 3, 1, g=g)

self.add = shortcut and c1 == c2

# self.eca=ECA(c1,c2)

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.conv = nn.Conv1d(1, 1, kernel_size=k_size, padding=(k_size - 1) // 2, bias=False)

self.sigmoid = nn.Sigmoid()

def forward(self, x):

x1 = self.cv2(self.cv1(x))

# out=self.eca(x1)*x1

y = self.avg_pool(x1)

y = self.conv(y.squeeze(-1).transpose(-1, -2)).transpose(-1, -2).unsqueeze(-1)

y = self.sigmoid(y)

out = x1 * y.expand_as(x1)

return x + out if self.add else out

class C3ECA(C3):

# C3 module with ECABottleneck()

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(ECABottleneck(c_, c_, shortcut) for _ in range(n)))

本人更多Yolov5(v6.1)实战内容导航🍀

3.手把手带你Yolov5 (v6.1)添加注意力机制(并附上30多种顶会Attention原理图)

6.连夜看了30多篇改进YOLO的中文核心期刊 我似乎发现了一个能发论文的规律

以上是关于Yolov5--从模块解析到网络结构修改(添加注意力机制)的主要内容,如果未能解决你的问题,请参考以下文章

改进YOLOv5系列:8.增加ACmix结构的修改,自注意力和卷积集成

yolov5改进之加入CBAM,SE,ECA,CA,SimAM,ShuffleAttention,Criss-CrossAttention,CrissCrossAttention多种注意力机制