Implementing the SGBM Stereo Matching Algorithm with OpenCV in Python

Posted by 小张Tt

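Preface

At its core, SGBM is the SGM (semi-global matching) algorithm. An open-source implementation has shipped with OpenCV since at least version 2.4.6, which makes it convenient to use, and it is widely adopted.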

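I. How do SGBM and SGM differ?

This section draws on two articles: "立体匹配算法推理笔记 - SGBM算法(一)" (Notes on stereo matching algorithms: SGBM, part 1) and "【算法】OpenCV-SGBM算法及源码的简明分析" (A concise analysis of the OpenCV SGBM algorithm and its source code).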

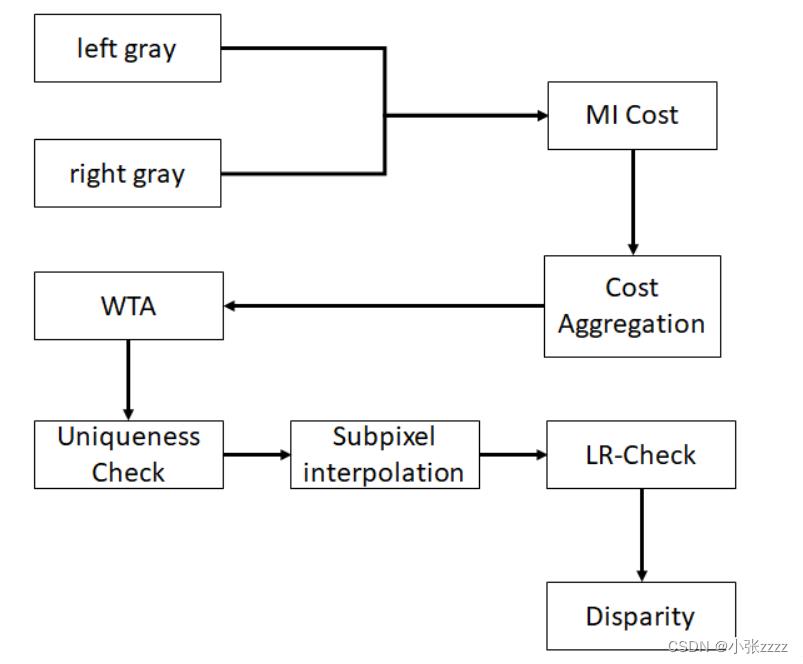

The original SGM pipeline is as follows:

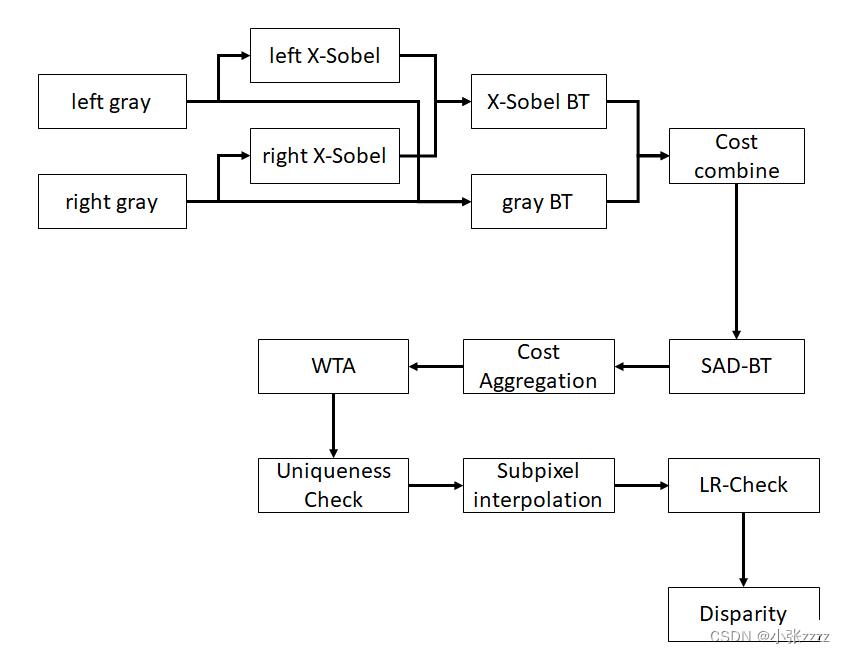

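The SGBM pipeline is as follows:

Comparing the two shows that they differ in how the matching cost is computed: SGBM uses a block-based Birchfield-Tomasi cost (SAD-BT), whereas the original SGM uses mutual information (MI).

1. Preprocessing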

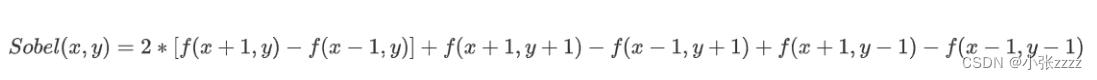

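SGBM preprocesses the images with a horizontal (x-direction) Sobel operator.

The x-Sobel response is then clipped and mapped into the range [0, preFilterCap*2], where preFilterCap is a constant parameter.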
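The clipping step can be illustrated with a small sketch. It assumes the standard 3x3 horizontal Sobel kernel and a clip-then-shift mapping (the response is limited to [-preFilterCap, preFilterCap] and then offset by preFilterCap). The function name x_sobel_prefilter and the list-of-rows image layout are illustrative only and are not OpenCV's API.

```python
def x_sobel_prefilter(img, pre_filter_cap):
    """Horizontal Sobel response, clipped to [-cap, cap] and shifted to [0, 2*cap].

    img is a list of rows of integer gray values. Border pixels are left at
    the neutral value pre_filter_cap in this sketch.
    """
    h, w = len(img), len(img[0])
    out = [[pre_filter_cap] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # 3x3 horizontal Sobel: the three rows are weighted 1, 2, 1.
            g = ((img[y - 1][x + 1] - img[y - 1][x - 1])
                 + 2 * (img[y][x + 1] - img[y][x - 1])
                 + (img[y + 1][x + 1] - img[y + 1][x - 1]))
            g = max(-pre_filter_cap, min(pre_filter_cap, g))  # clip
            out[y][x] = g + pre_filter_cap                    # shift to >= 0
    return out


# A vertical step edge: dark on the left, bright on the right.
image = [[10, 10, 200, 200] for _ in range(4)]
filtered = x_sobel_prefilter(image, pre_filter_cap=63)
```

A strong edge saturates at 2*preFilterCap, while a flat region stays at preFilterCap, so the cap limits how much a single high-contrast edge can dominate the matching cost.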

2.代价计算

代价有两部分组成:

1、经过预处理得到的图像的梯度信息

2、经过基于采样的方法得到的梯度代价。

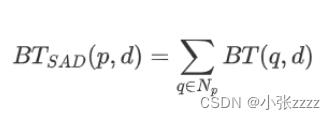

3、原图像经过基于采样的方法得到的SAD代价,因为BT代价是一维匹配,所以通常要结合SAD的思路,采用邻域求和的方法,计算SAD-BT,这样计算出来的代价就是局部块代价,每个像素点的匹配代价会包含周围局部区域的信息。

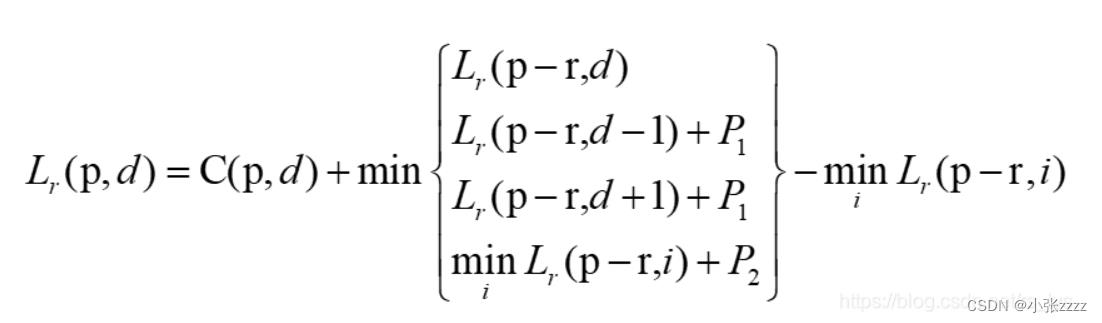
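The SAD-BT idea described above can be sketched as follows. This is a minimal illustration of the Birchfield-Tomasi dissimilarity on one scanline plus a window sum, not OpenCV's implementation. The function names bt_cost and block_cost are hypothetical, and the caller is assumed to keep the window and x - d inside the image.

```python
def bt_cost(left, right, x, d):
    """Birchfield-Tomasi dissimilarity between left[x] and right[x - d]."""
    def one_sided(a, ia, b, ib):
        # Distance from a[ia] to the intensity interval that b covers
        # within half a pixel of ib (linear interpolation).
        lo = b[max(ib - 1, 0)]
        hi = b[min(ib + 1, len(b) - 1)]
        minus = (b[ib] + lo) / 2.0
        plus = (b[ib] + hi) / 2.0
        vmin = min(minus, plus, b[ib])
        vmax = max(minus, plus, b[ib])
        return max(0.0, a[ia] - vmax, vmin - a[ia])

    xr = x - d
    return min(one_sided(left, x, right, xr), one_sided(right, xr, left, x))


def block_cost(left_rows, right_rows, x, y, d, radius):
    """Sum of BT costs over a (2*radius+1)^2 window: the SAD-BT block cost."""
    total = 0.0
    for yy in range(y - radius, y + radius + 1):
        for xx in range(x - radius, x + radius + 1):
            total += bt_cost(left_rows[yy], right_rows[yy], xx, d)
    return total


# The right view is the left view shifted by 2 pixels.
left_row = [0, 0, 10, 50, 90, 100, 100, 100]
right_row = [10, 50, 90, 100, 100, 100, 100, 100]
left_rows = [left_row] * 3
right_rows = [right_row] * 3
cost_true = block_cost(left_rows, right_rows, x=4, y=1, d=2, radius=1)
cost_wrong = block_cost(left_rows, right_rows, x=4, y=1, d=0, radius=1)
```

The half-pixel interpolation is what makes the BT cost insensitive to image sampling: a pixel that falls between two samples of the other image still gets a cost of zero.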

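3. Dynamic programming

Dynamic programming on its own suffers from a streaking effect: wrong matches are likely at disparity discontinuities, and accumulating energy along a single one-dimensional path propagates those wrong disparities to the pixels that follow on the path. The semi-global approach draws on information from multiple directions to suppress such errors, which noticeably reduces the streaking.

The semi-global algorithm approximates a global Markov energy function by imposing one-dimensional path constraints from multiple directions across the image. The final matching cost of each pixel is the sum of the costs from all paths, and the disparity of each pixel is then chosen simply by WTA (Winner Takes All).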
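A one-dimensional toy version of this aggregation is sketched below. It uses the standard SGM path recurrence with a small penalty P1 for disparity changes of one level and a larger penalty P2 for bigger jumps, sums two opposite paths, and then applies WTA. The function names and the tiny cost table are made up for illustration; a real implementation aggregates along 5 or 8 image directions.

```python
def aggregate_path(cost, p1, p2):
    """Aggregate cost[i][d] along one path that visits pixels in list order."""
    n_disp = len(cost[0])
    inf = float("inf")
    agg = [list(cost[0])]
    for i in range(1, len(cost)):
        prev = agg[-1]
        prev_min = min(prev)
        row = []
        for d in range(n_disp):
            best = min(
                prev[d],                                   # same disparity
                prev[d - 1] + p1 if d > 0 else inf,        # change by one level
                prev[d + 1] + p1 if d + 1 < n_disp else inf,
                prev_min + p2,                             # larger jump
            )
            # Subtracting prev_min keeps the values from growing along the path.
            row.append(cost[i][d] + best - prev_min)
        agg.append(row)
    return agg


def semi_global_wta(cost, p1, p2):
    """Sum a forward and a backward path, then pick the cheapest disparity."""
    fwd = aggregate_path(cost, p1, p2)
    bwd = aggregate_path(cost[::-1], p1, p2)[::-1]
    total = [[f + b for f, b in zip(fr, br)] for fr, br in zip(fwd, bwd)]
    return [row.index(min(row)) for row in total]


# Five pixels, three disparities. The middle pixel is noisy and on its own
# slightly prefers disparity 2, although its neighbors clearly sit at 1.
cost = [[9, 0, 9], [9, 0, 9], [9, 5, 4], [9, 0, 9], [9, 0, 9]]
raw_wta = [row.index(min(row)) for row in cost]
smoothed = semi_global_wta(cost, p1=3, p2=10)
```

Per-pixel WTA on the raw costs gives the noisy pixel a different disparity from its neighbors, while the aggregated costs pull it back to the consistent value.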

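Two important dynamic-programming parameters, P1 and P2, are set as follows:

P1 = 8*cn*sgbm.SADWindowSize*sgbm.SADWindowSize;

P2 = 32*cn*sgbm.SADWindowSize*sgbm.SADWindowSize;

Here cn is the number of image channels and SADWindowSize is the size of the SAD window, which must be odd.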
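As a quick worked example of these two formulas, the helper below (a hypothetical name, not part of OpenCV) computes both penalties for a given channel count and window size:

```python
def sgbm_penalties(channels, block_size):
    """Return (P1, P2) from the formulas above for an odd SAD window size."""
    if block_size % 2 == 0:
        raise ValueError("the SAD window size must be odd")
    p1 = 8 * channels * block_size * block_size
    p2 = 32 * channels * block_size * block_size
    return p1, p2


gray_3 = sgbm_penalties(channels=1, block_size=3)    # grayscale, 3x3 window
color_5 = sgbm_penalties(channels=3, block_size=5)   # 3-channel, 5x5 window
```

With these formulas P2 is always four times P1, so large disparity jumps are penalized more heavily than changes of a single level.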

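4. Post-processing

Post-processing in OpenCV's SGBM consists of the following steps:

Uniqueness check

Sub-pixel interpolation

Left-right consistency check

Connected-region (speckle) check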
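The first three steps can be sketched on plain Python lists. This is a simplified illustration under stated assumptions, not OpenCV's code: the uniqueness test uses a (1 + ratio/100) margin, sub-pixel refinement fits a parabola through the three costs around the minimum, -1 is used here as the invalid marker, and all function names are hypothetical. The speckle check is omitted.

```python
INVALID = -1  # marker for rejected pixels in this sketch


def is_unique(costs, best, ratio_percent):
    """Accept `best` only if every non-adjacent disparity is clearly worse."""
    threshold = costs[best] * (1 + ratio_percent / 100.0)
    return all(c > threshold
               for d, c in enumerate(costs) if abs(d - best) > 1)


def subpixel(costs, best):
    """Refine `best` with a parabola through its two neighboring costs."""
    if best == 0 or best == len(costs) - 1:
        return float(best)
    left, mid, right = costs[best - 1], costs[best], costs[best + 1]
    denom = left - 2 * mid + right
    if denom <= 0:
        return float(best)
    return best + (left - right) / (2.0 * denom)


def left_right_check(disp_left, disp_right, max_diff):
    """Invalidate left disparities that the right disparity row contradicts."""
    out = []
    for x, d in enumerate(disp_left):
        xr = x - d  # matching column in the right image
        ok = (d != INVALID and 0 <= xr < len(disp_right)
              and abs(disp_right[xr] - d) <= max_diff)
        out.append(d if ok else INVALID)
    return out


costs = [50, 20, 10, 14, 60]
unique = is_unique(costs, best=2, ratio_percent=15)
refined = subpixel(costs, best=2)
checked = left_right_check([2, 2, 2, 0], [2, 2, 0, 0], max_diff=1)
```

In the example the right-hand neighbor is cheaper than the left-hand one, so the refined disparity moves slightly above 2.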

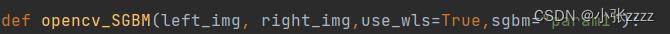

II. Implementing SGBM with python-opencv

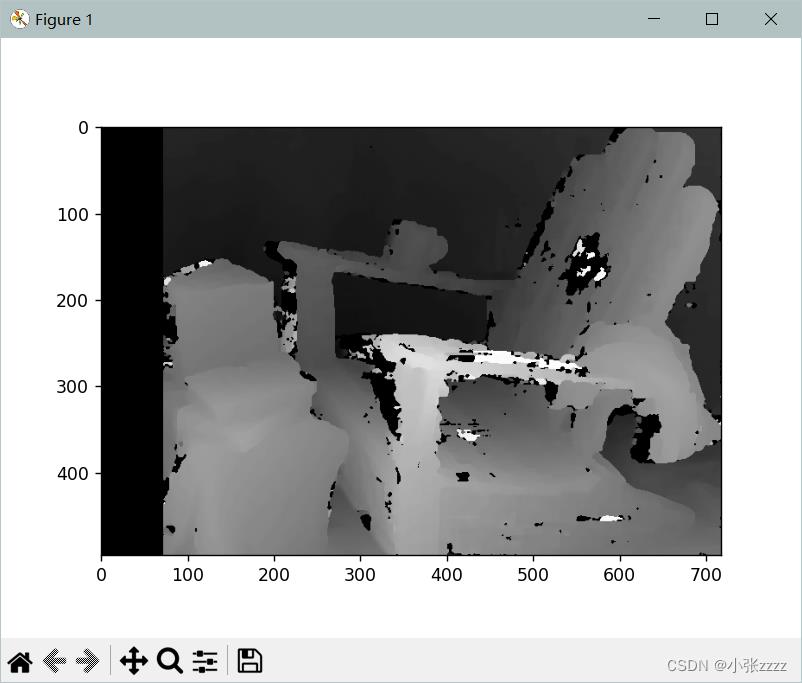

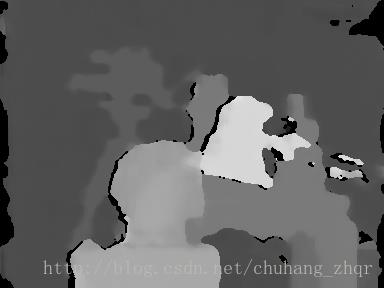

The results obtained with python-opencv are shown below. The code is already written and can be downloaded directly.

SGBM implemented with python-opencv

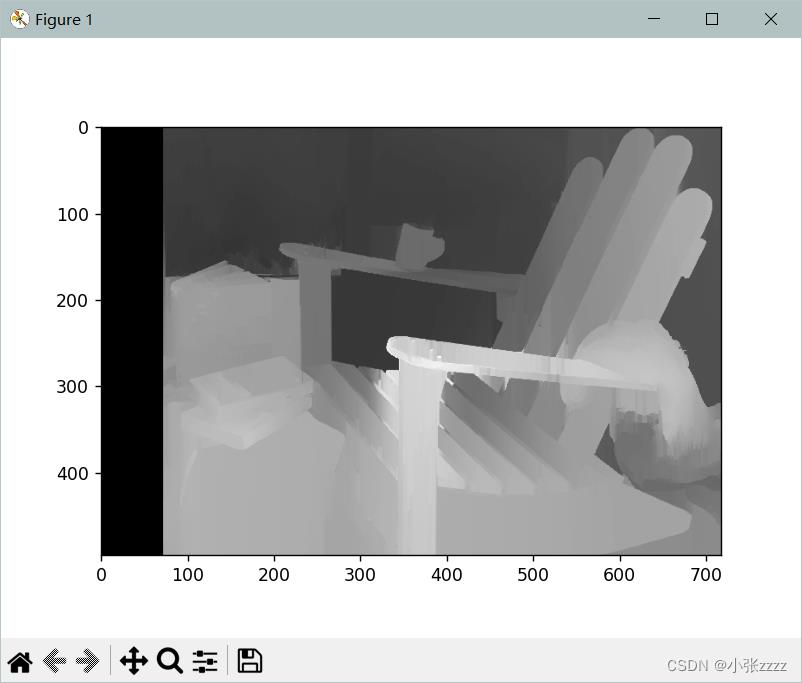

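In addition, WLS filtering has been added to improve the continuity of the disparity map.

To run the script, just change the image paths, as follows:

To enable WLS filtering, set use_wls = True.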

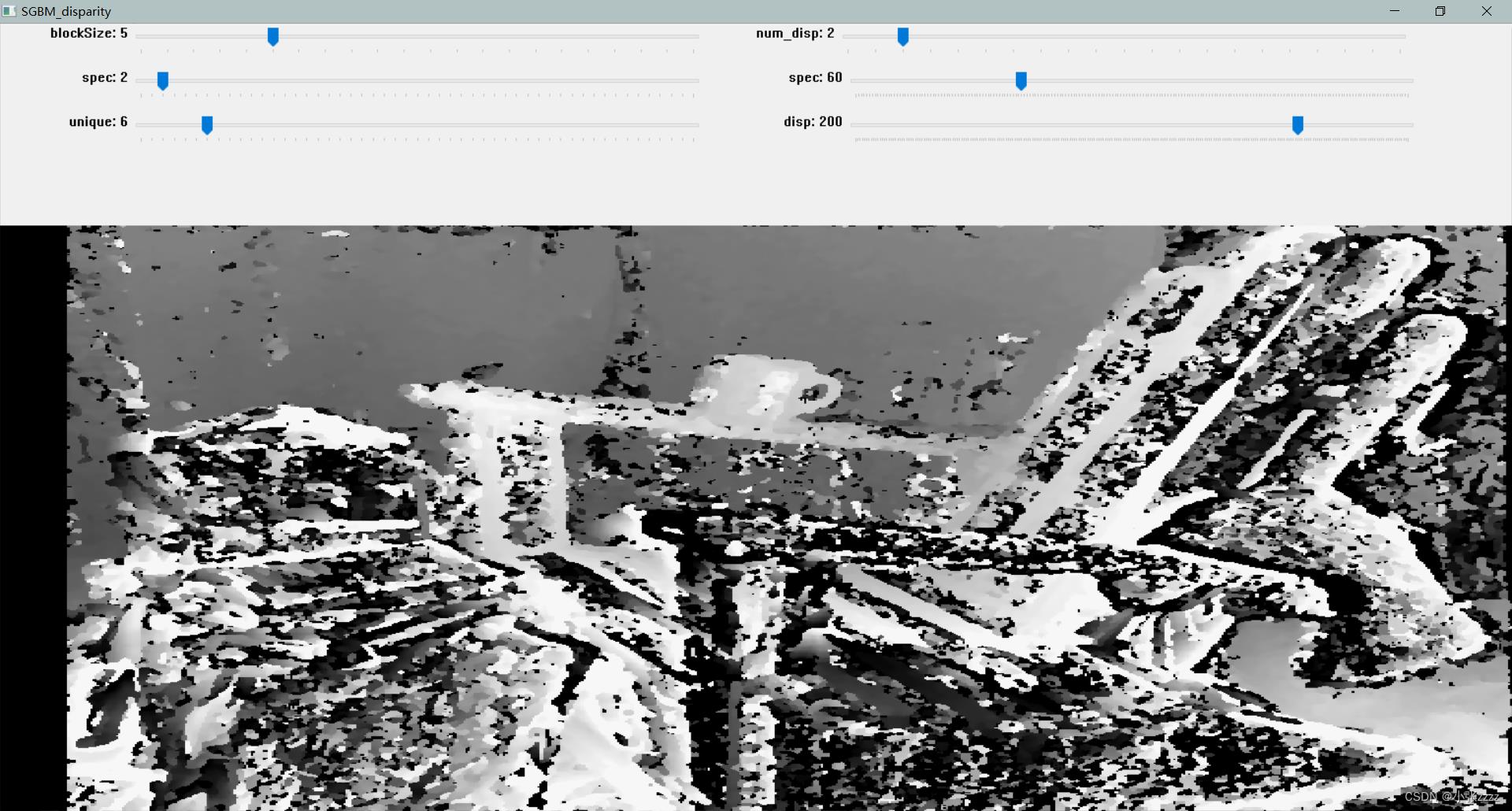

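For convenience, SGBM_slider.py has also been added. Replace the image files with your own, then download and run it to observe the effect of each parameter. The result looks like this:

SGBM implemented with python-opencv, with sliders

SGBM parameter selection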

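References

The following articles were consulted and are well worth reading:

opencv中的SGBM原理+参数解释 (SGBM in OpenCV: principles and parameter explanation)

【算法】OpenCV-SGBM算法及源码的简明分析 (A concise analysis of the OpenCV SGBM algorithm and its source code)

双目立体匹配算法SGBM (The SGBM binocular stereo matching algorithm)

Python SGBM

基于Opencv的几种立体匹配算法+ELAS (Several OpenCV-based stereo matching algorithms, plus ELAS)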

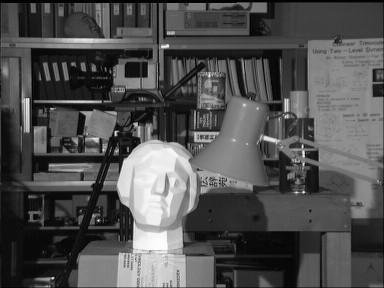

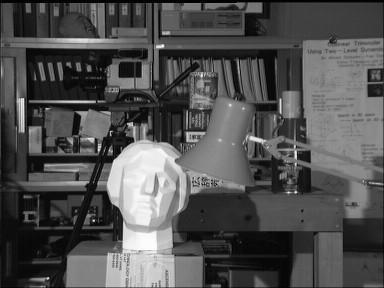

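As in http://blog.csdn.net/chuhang_zhqr/article/details/51179881, the two classic images above are used for testing.

For the parameter settings of BM, SGBM, and VAR, see the blog 晨宇思远.

The code in this article is based on OpenCV 2.4.9.

The source code is available from my CSDN code resources: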

http://download.csdn.net/detail/chuhang_zhqr/9703763

0:ELAS

This requires downloading the open-source ELAS library (LIBELAS).

int StereoMatch::ElasMatch()
{
    cv::Mat disp_l, disp_r, disp8u_l, disp8u_r;
    double minVal, maxVal;  // extreme values of the disparity map
    cv::Mat leftImage = cv::imread("../test_images/leftr31.png", 0);
    cv::Mat rightImage = cv::imread("../test_images/rightr31.png", 0);
    if (leftImage.empty() || rightImage.empty())
        return -1;  // an input image could not be read

    // Compute the disparity: generate disparity images using LIBELAS
    int bd = 0;
    const int32_t dims[3] = {leftImage.cols, leftImage.rows, leftImage.cols};
    cv::Mat leftdpf = cv::Mat::zeros(cv::Size(leftImage.cols, leftImage.rows), CV_32F);
    cv::Mat rightdpf = cv::Mat::zeros(cv::Size(leftImage.cols, leftImage.rows), CV_32F);
    Elas::parameters param;
    param.postprocess_only_left = false;
    Elas elas(param);
    elas.process(leftImage.data, rightImage.data, leftdpf.ptr<float>(0), rightdpf.ptr<float>(0), dims);
    cv::Mat(leftdpf(cv::Rect(bd, 0, leftImage.cols, leftImage.rows))).copyTo(disp_l);
    cv::Mat(rightdpf(cv::Rect(bd, 0, rightImage.cols, rightImage.rows))).copyTo(disp_r);

    //-- Check the extreme values of the left disparity map
    cv::minMaxLoc(disp_l, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)
    //-- Display it as a CV_8UC1 image
    disp_l.convertTo(disp8u_l, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)

    //-- Same for the right disparity map
    cv::minMaxLoc(disp_r, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)
    disp_r.convertTo(disp8u_r, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)

    cv::normalize(disp8u_l, disp8u_l, 0, 255, CV_MINMAX, CV_8UC1);  // obtain normalized image
    cv::normalize(disp8u_r, disp8u_r, 0, 255, CV_MINMAX, CV_8UC1);  // obtain normalized image

    cv::imshow("Left", leftImage);
    cv::imshow("Right", rightImage);
    cv::imshow("Elas_left", disp8u_l);
    cv::imshow("Elas_right", disp8u_r);
    cv::imwrite("Elas_left.png", disp8u_l);
    cv::imwrite("Elas_right.png", disp8u_r);
    cout << endl << "Over" << endl;
    cv::waitKey(0);
    return 0;
}

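1:BM

int StereoMatch::BMMatching()
{
    cv::Mat disp, disp8u;
    double minVal, maxVal;  // extreme values of the disparity map
    cv::Mat leftImage = cv::imread("../test_images/leftr.png", 0);
    cv::Mat rightImage = cv::imread("../test_images/rightr.png", 0);
    if (leftImage.empty() || rightImage.empty())
        return -1;  // an input image could not be read

    int SADWindowSize = 19;
    int numberOfDisparities = 16 * 3;  /**< Range of disparity */
    numberOfDisparities = numberOfDisparities > 0 ? numberOfDisparities : ((leftImage.cols / 8) + 15) & -16;

    // roi1 / roi2: valid pixel regions of the left and right views, usually passed on from
    // cvStereoRectify during rectification; they can also be set manually. Once both are set,
    // OpenCV uses cvGetValidDisparityROI to compute the valid region of the disparity map,
    // and disparities outside it are cleared.
    //bm.state->roi1 = remapMat.Calib_Roi_L;
    //bm.state->roi2 = remapMat.Calib_Roi_R;
    //bm.state->preFilterSize = 41;  // prefilter window size, 5-21, odd

    // 63, 1-31. Prefilter clipping value: the prefilter output keeps only values
    // within [-preFilterCap, preFilterCap].
    bm.state->preFilterCap = 31;
    bm.state->SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 9;  // SAD window size, 5-21
    bm.state->minDisparity = 0;  // 64. Minimum disparity, default 0
    // 128. Disparity search range, i.e. maximum minus minimum disparity; must be a multiple of 16.
    bm.state->numberOfDisparities = numberOfDisparities;
    // Low-texture threshold: if the sum of absolute x-derivatives over all neighbors in the
    // current SAD window is below this value, the pixel's disparity is invalidated.
    bm.state->textureThreshold = 10;
    // 5-15. Uniqueness ratio in percent: the best disparity is accepted only if the second-lowest
    // cost in the search range is at least (1 + uniquenessRatio/100) times the lowest cost;
    // otherwise the pixel's disparity is invalidated.
    bm.state->uniquenessRatio = 15;
    // Window size for checking the variation of connected disparity regions; 0 disables the speckle check.
    bm.state->speckleWindowSize = 100;
    // Disparity variation threshold: if the variation inside the window exceeds it,
    // the disparities in that window are cleared.
    bm.state->speckleRange = 32;
    // Maximum allowed difference between the left disparity map (computed directly) and the right
    // one (computed via cvValidateDisparity); disparities exceeding it are cleared. The default
    // is -1, i.e. no left-right check. While debugging it is best to keep this at -1 so that the
    // disparity produced with different disparity windows can be inspected.
    bm.state->disp12MaxDiff = 1;

    // Compute the disparity
    bm(leftImage, rightImage, disp);

    //-- Check its extreme values
    cv::minMaxLoc(disp, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)

    //-- Display it as a CV_8UC1 image
    disp.convertTo(disp8u, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)
    cv::normalize(disp8u, disp8u, 0, 255, CV_MINMAX, CV_8UC1);  // obtain normalized image

    cv::imshow("left", leftImage);
    cv::imshow("right", rightImage);  // the original showed leftImage here by mistake
    cv::imshow("Disp", disp8u);
    cv::imwrite("bm.png", disp8u);
    cv::waitKey(0);
    return 0;
}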
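The fallback expression ((leftImage.cols/8) + 15) & -16 used in the listings is easy to misread, so here is a small sketch of what it computes: one eighth of the image width, rounded up to the next multiple of 16. The function name is hypothetical. In the listings the fallback is never actually taken, because numberOfDisparities is set to a positive value first.

```python
def default_num_disparities(image_width, requested=0):
    """Mirror of the C++ expression: use `requested` if positive,
    otherwise width/8 rounded up to a multiple of 16."""
    if requested > 0:
        return requested
    # Adding 15 and clearing the low 4 bits rounds up to a multiple of 16.
    return ((image_width // 8) + 15) & -16


vga = default_num_disparities(640)                    # 80 + 15 = 95 -> 80
small = default_num_disparities(450)                  # 56 + 15 = 71 -> 64
explicit = default_num_disparities(640, requested=48)
```

The rounding matters because the matchers require the disparity range to be a multiple of 16.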

2:SGBM

int StereoMatch::SGBMMatching()
{
    cv::Mat disp, disp8u;
    double minVal, maxVal;  // extreme values of the disparity map
    cv::Mat leftImage = cv::imread("../test_images/leftr.png", 0);
    cv::Mat rightImage = cv::imread("../test_images/rightr.png", 0);
    if (leftImage.empty() || rightImage.empty())
        return -1;  // an input image could not be read

    int numberOfDisparities = 16 * 2;  /**< Range of disparity */
    numberOfDisparities = numberOfDisparities > 0 ? numberOfDisparities : ((leftImage.cols / 8) + 15) & -16;
    int SADWindowSize = 11;

    sgbm.preFilterCap = 63;
    sgbm.SADWindowSize = SADWindowSize > 0 ? SADWindowSize : 3;  // 3-11
    int cn = leftImage.channels();
    // The larger P1 and P2 are, the smoother the disparity. P2 > P1; e.g. (50, 800) or (40, 2500).
    sgbm.P1 = 8 * cn * sgbm.SADWindowSize * sgbm.SADWindowSize;
    sgbm.P2 = 32 * cn * sgbm.SADWindowSize * sgbm.SADWindowSize;
    sgbm.minDisparity = 0;
    sgbm.numberOfDisparities = numberOfDisparities;  // 128, 256
    sgbm.uniquenessRatio = 10;  // 10, 0
    sgbm.speckleWindowSize = 100;  // 200
    sgbm.speckleRange = 32;
    sgbm.disp12MaxDiff = 1;
    sgbm.fullDP = true;

    // Compute the disparity
    sgbm(leftImage, rightImage, disp);

    //-- Check its extreme values
    cv::minMaxLoc(disp, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)

    //-- Display it as a CV_8UC1 image
    disp.convertTo(disp8u, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)
    cv::normalize(disp8u, disp8u, 0, 255, CV_MINMAX, CV_8UC1);  // obtain normalized image

    cv::imshow("left", leftImage);
    cv::imshow("right", rightImage);  // the original showed leftImage here by mistake
    cv::imshow("Disp", disp8u);
    cv::imwrite("sgbm.png", disp8u);
    cv::waitKey(0);
    return 0;
}
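The display step at the end of the BM and SGBM listings works on the raw matcher output, which is 16-bit fixed point with 4 fractional bits (each value is 16 times the real disparity). A plain-Python sketch of the two conversions is given below. It assumes minDisparity = 0, so that negative values mark invalid pixels, and it normalizes over valid pixels only, which is a slightly stricter variant of the convertTo plus normalize pair in the listings. The function names are hypothetical.

```python
def to_pixels(raw):
    """Convert raw fixed-point disparities to pixel units (divide by 16)."""
    return [[v / 16.0 for v in row] for row in raw]


def to_gray(raw):
    """Min-max normalize valid (non-negative) disparities to 0..255."""
    valid = [v for row in raw for v in row if v >= 0]
    if not valid:
        return [[0] * len(row) for row in raw]
    lo, hi = min(valid), max(valid)
    span = max(hi - lo, 1)  # avoid dividing by zero on a constant map
    return [[round(255 * (v - lo) / span) if v >= 0 else 0 for v in row]
            for row in raw]


raw = [[-16, 160, 320],
       [480, 512, 160]]   # -16 marks an invalid pixel when minDisparity is 0
pixels = to_pixels(raw)
gray = to_gray(raw)
```

Keeping the pixel-unit map separate from the 8-bit image is useful because the normalized image is only meant for viewing and loses the absolute disparity values.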

3:VAR

int StereoMatch::VARMatching()
{
    cv::Mat disp, disp8u;
    double minVal, maxVal;  // extreme values of the disparity map
    cv::Mat leftImage = cv::imread("../test_images/leftr.png", 0);
    cv::Mat rightImage = cv::imread("../test_images/rightr.png", 0);
    if (leftImage.empty() || rightImage.empty())
        return -1;  // an input image could not be read

    int numberOfDisparities = 16 * 2;  /**< Range of disparity */
    numberOfDisparities = numberOfDisparities > 0 ? numberOfDisparities : ((leftImage.cols / 8) + 15) & -16;

    var.levels = 3;      // ignored with USE_AUTO_PARAMS
    var.pyrScale = 0.5;  // ignored with USE_AUTO_PARAMS
    var.nIt = 25;
    var.minDisp = -numberOfDisparities;
    var.maxDisp = 0;
    var.poly_n = 3;
    var.poly_sigma = 0.0;
    var.fi = 15.0f;
    var.lambda = 0.03f;
    var.penalization = var.PENALIZATION_TICHONOV;  // ignored with USE_AUTO_PARAMS
    var.cycle = var.CYCLE_V;                       // ignored with USE_AUTO_PARAMS
    var.flags = var.USE_SMART_ID | var.USE_AUTO_PARAMS | var.USE_INITIAL_DISPARITY | var.USE_MEDIAN_FILTERING;

    // Compute the disparity
    var(leftImage, rightImage, disp);

    //-- Check its extreme values
    cv::minMaxLoc(disp, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)

    //-- Display it as a CV_8UC1 image
    disp.convertTo(disp8u, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)
    cv::normalize(disp8u, disp8u, 0, 255, CV_MINMAX, CV_8UC1);  // obtain normalized image

    cv::imshow("left", leftImage);
    cv::imshow("right", rightImage);  // the original showed leftImage here by mistake
    cv::imshow("Disp", disp8u);
    cv::imwrite("var.png", disp8u);
    cv::waitKey(0);
    return 0;
}

4:GC

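GC appears to be available only through the C API, so two versions are given here: one in C, and one with the interface adapted to C++ (cv::Mat).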

int StereoMatch::GCMatching()
{
    IplImage* leftImage = cvLoadImage("../test_images/leftr31.png", 0);
    IplImage* rightImage = cvLoadImage("../test_images/rightr31.png", 0);
    if (!leftImage || !rightImage)
        return -1;  // an input image could not be read

    CvStereoGCState* state = cvCreateStereoGCState(16, 4);
    IplImage* left_disp_ = cvCreateImage(cvGetSize(leftImage), leftImage->depth, 1);
    IplImage* right_disp_ = cvCreateImage(cvGetSize(leftImage), leftImage->depth, 1);
    cvFindStereoCorrespondenceGC(leftImage, rightImage, left_disp_, right_disp_, state, 0);
    cvReleaseStereoGCState(&state);

    cvNamedWindow("Left", 1);
    cvNamedWindow("Right", 1);
    cvNamedWindow("GC_left", 1);
    cvNamedWindow("GC_right", 1);
    cvShowImage("Left", leftImage);
    cvShowImage("Right", rightImage);

    cvNormalize(left_disp_, left_disp_, 0, 255, CV_MINMAX, CV_8UC1);
    cvNormalize(right_disp_, right_disp_, 0, 255, CV_MINMAX, CV_8UC1);
    cvShowImage("GC_left", left_disp_);
    cvShowImage("GC_right", right_disp_);
    cvSaveImage("GC_left.png", left_disp_);
    cvSaveImage("GC_right.png", right_disp_);
    cout << endl << "Over" << endl;
    cvWaitKey(0);

    cvDestroyAllWindows();
    cvReleaseImage(&leftImage);
    cvReleaseImage(&rightImage);
    cvReleaseImage(&left_disp_);   // also free the disparity images
    cvReleaseImage(&right_disp_);
    return 0;
}

int StereoMatch::GCMatching_Mat()
{
    double minVal, maxVal;  // extreme values of the disparity map
    cv::Mat disp8u_l, disp8u_r;
    cv::Mat leftImage = cv::imread("../test_images/leftr31.png", 0);
    cv::Mat rightImage = cv::imread("../test_images/rightr31.png", 0);
    if (leftImage.empty() || rightImage.empty())
        return -1;  // an input image could not be read

    CvStereoGCState* state = cvCreateStereoGCState(16, 5);
    cv::Mat left_disp_ = leftImage.clone();
    cv::Mat right_disp_ = rightImage.clone();

    // Wrap the cv::Mat objects as IplImage headers for the C API (no data is copied).
    IplImage temp = (IplImage)leftImage;
    IplImage* leftimg = &temp;
    IplImage temp1 = (IplImage)rightImage;
    IplImage* rightimg = &temp1;
    IplImage temp2 = (IplImage)left_disp_;
    IplImage* leftdisp = &temp2;
    IplImage temp3 = (IplImage)right_disp_;
    IplImage* rightdisp = &temp3;

    cvFindStereoCorrespondenceGC(leftimg, rightimg, leftdisp, rightdisp, state, 0);
    cvReleaseStereoGCState(&state);

    cv::namedWindow("Left", 1);
    cv::namedWindow("Right", 1);
    cv::namedWindow("GC_left", 1);
    cv::namedWindow("GC_right", 1);
    cv::imshow("Left", leftImage);
    cv::imshow("Right", rightImage);

    /* //-- Check its extreme values
    cv::minMaxLoc(right_disp_, &minVal, &maxVal);
    cout << "Min disp: " << minVal << " Max disp: " << maxVal << endl;  // numberOfDisparities = (maxVal - minVal)
    //-- Display it as a CV_8UC1 image
    right_disp_.convertTo(disp8u_r, CV_8U, 255 / (maxVal - minVal));  // (numberOfDisparities*16.)
    cv::normalize(left_disp_, left_disp_, 0, 255, CV_MINMAX, CV_8UC1);
    cv::normalize(disp8u_r, disp8u_r, 0, 255, CV_MINMAX, CV_8UC1);
    */

    cv::normalize(left_disp_, left_disp_, 0, 255, CV_MINMAX, CV_8UC1);
    cv::normalize(right_disp_, right_disp_, 0, 255, CV_MINMAX, CV_8UC1);
    cv::imshow("GC_left", left_disp_);
    cv::imshow("GC_right", right_disp_);
    cv::imwrite("GC_left.png", left_disp_);
    cv::imwrite("GC_right.png", right_disp_);
    cout << endl << "Over" << endl;
    cv::waitKey(0);
    return 0;
}
