Monitoring Daily HDFS Data Growth (Python 2 Implementation)

Posted by 小基基o_O



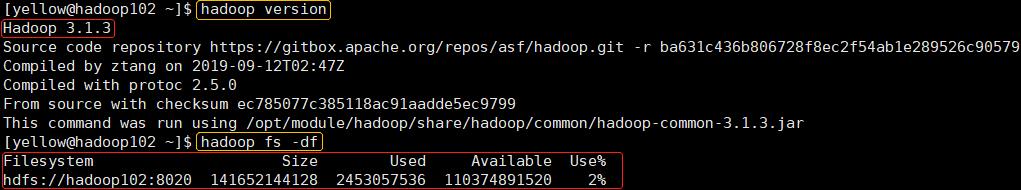

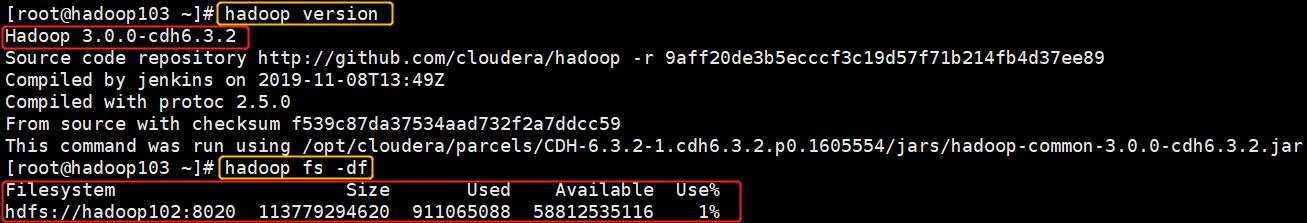

Checking the HDFS data volume

hadoop fs -df

Screenshot of the command output

Screenshot of the command output (CDH)

Monitoring the data volume

1. Python script
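The script later in this post relies on the two-line layout of the `hadoop fs -df` output: a header row followed by a row of values. The following is a minimal sketch of that parsing step, run against a made-up sample string instead of a live cluster. The host name and byte counts in `SAMPLE` are assumptions for illustration, and `parse_df` is a hypothetical helper name, not part of the original script.

```python
import re

# Hypothetical sample of `hadoop fs -df` output; the host and numbers are made up.
SAMPLE = """Filesystem                    Size         Used    Available  Use%
hdfs://namenode:8020  100000000000  25000000000  70000000000   25%
"""


def parse_df(output):
    """Map each header column to the value printed below it."""
    lines = output.strip().split('\n')
    header = re.split(r'\s+', lines[0].strip())
    values = re.split(r'\s+', lines[1].strip())
    return dict(zip(header, values))


parsed = parse_df(SAMPLE)
print(parsed['Used'])
```

The values stay strings here, which matches how the script writes them to its data file; they are only converted to integers when the file is read back.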

touch /root/monitor_hdfs_df.py

chmod 777 /root/monitor_hdfs_df.py

vim /root/monitor_hdfs_df.py

#!/usr/bin/python2
# coding=utf-8
from time import strftime
from os import path
from subprocess import check_output
from re import split
from sys import argv, exit

SEP = ','
SCALING = 70  # scale factor for the bar length
FILE_NAME = 'fs-df.txt'  # name of the file that stores the data
# Full path of the data file, placed next to this script
file_name = path.join(path.dirname(path.abspath(__file__)), FILE_NAME)

def fs():
    """Query the HDFS data volume"""
    result = check_output('hadoop fs -df', shell=True)
    now = strftime('%Y-%m-%d %H:%M:%S')
    # Pair each header column with the value printed below it
    dt = {k: v for k, v in zip(*(split(r'\s+', line) for line in result.strip().split('\n')))}
    return now, dt['Used']

def write():
    """Append the current HDFS data volume to the file"""
    with open(file_name, 'a') as f:
        f.write(SEP.join(fs()) + '\n')

def read():
    """Read the file"""
    with open(file_name, 'r') as f:
        for line in f.read().strip().split('\n'):
            if not line:
                continue  # skip blank lines, e.g. when the file is empty
            now, used = line.split(SEP)
            yield now, int(used)

def used_bars():
    """Show the data volume used on HDFS"""
    values = []
    labels = []
    for now, used in read():
        values.append(used)
        labels.append(now)
    if not values:
        print('No data recorded yet')
        return
    # Bar chart
    maximum = max(values) or 1  # avoid dividing by zero when nothing is used
    label_max_length = max(len(label) for label in labels)
    for value, label in zip(values, labels):
        length = int((SCALING * 1.5 * value) / maximum)
        print('{} {} {}'.format('%{}s'.format(label_max_length) % label, '#' * length, value))

def increase_bars():
    """Show the HDFS data increments"""
    lines = list(read())
    values = []
    labels = []
    for (today, used_today), (_, used_yesterday) in zip(lines[1:], lines[:-1]):
        increase = used_today - used_yesterday
        values.append(increase)
        labels.append(today)
    if not values:
        print('At least two records are needed to compute an increment')
        return
    # https://yellow520.blog.csdn.net/article/details/121498190
    get_bar_length = lambda _value, _max_abs: int((abs(_value) * SCALING) / _max_abs)
    labels = labels or [str(i + 1) for i in range(len(values))]
    label_max_length = max(len(label) for label in labels)
    maximum = max(values)
    minimum = min(values)
    max_abs = max(abs(maximum), abs(minimum))
    if max_abs == 0:
        # Every increment is zero, so there is nothing to draw
        for value, label in zip(values, labels):
            print('{} {}'.format(label, value))
    else:
        if minimum < 0:
            # Reserve room on the left for the longest negative bar and its value
            value_length = len(str(minimum))
            bar_length_left = get_bar_length(minimum, max_abs)
            length_left = value_length + bar_length_left + 1
        else:
            length_left = 0
        for value, label in zip(values, labels):
            mid = ' %{}s '.format(label_max_length) % label
            bar_length = get_bar_length(value, max_abs)
            if value > 0:
                left = ' ' * length_left
                right = '{} {}'.format('#' * bar_length, value)
            elif value < 0:
                left = '{} {}'.format(value, '#' * bar_length).rjust(length_left, ' ')
                right = ''
            else:
                left = ' ' * length_left
                right = '0'
            print(left + mid + right)

def usage():
    print('Usage:')
    print(__file__ + ' <option: 1, 2 or 3>')
    print('1: ' + write.__doc__)
    print('2: ' + increase_bars.__doc__)
    print('3: ' + used_bars.__doc__)
    exit()

if len(argv) != 2:
    usage()
a = argv[1]
if a == '1':
    write()
elif a == '2':
    increase_bars()
elif a == '3':
    used_bars()
else:
    usage()
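The increment chart in the script scales every bar relative to the largest absolute increment, so the biggest change always gets a bar of `SCALING` characters. The sketch below isolates that scaling step. The sample increments are made up, and `bar_length` is a hypothetical stand-alone version of the script's `get_bar_length` lambda, written with an explicit float division so it behaves the same under Python 2 and Python 3.

```python
SCALING = 70  # same constant as in the script


def bar_length(value, max_abs, scaling=SCALING):
    """Length of the bar for `value`, relative to the largest absolute value."""
    return int(abs(value) * scaling / float(max_abs))


# Hypothetical daily increments; one of them is negative.
increments = [50, -100, 25]
max_abs = max(abs(v) for v in increments)
lengths = [bar_length(v, max_abs) for v in increments]

for v, n in zip(increments, lengths):
    print('{:>5} {}'.format(v, '#' * n))
```

Because the length is truncated with `int`, small increments can round down to an empty bar; the printed value next to the bar still shows the exact number.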

2. Scheduled job, run every day at midnight

Edit the crontab to add or remove scheduled jobs

crontab -e

Enter the following in the vim editor and save

# Run once a day at 00:00 to record the HDFS data volume

0 0 * * * /root/monitor_hdfs_df.py 1

Check that the scheduled job is registered

crontab -l

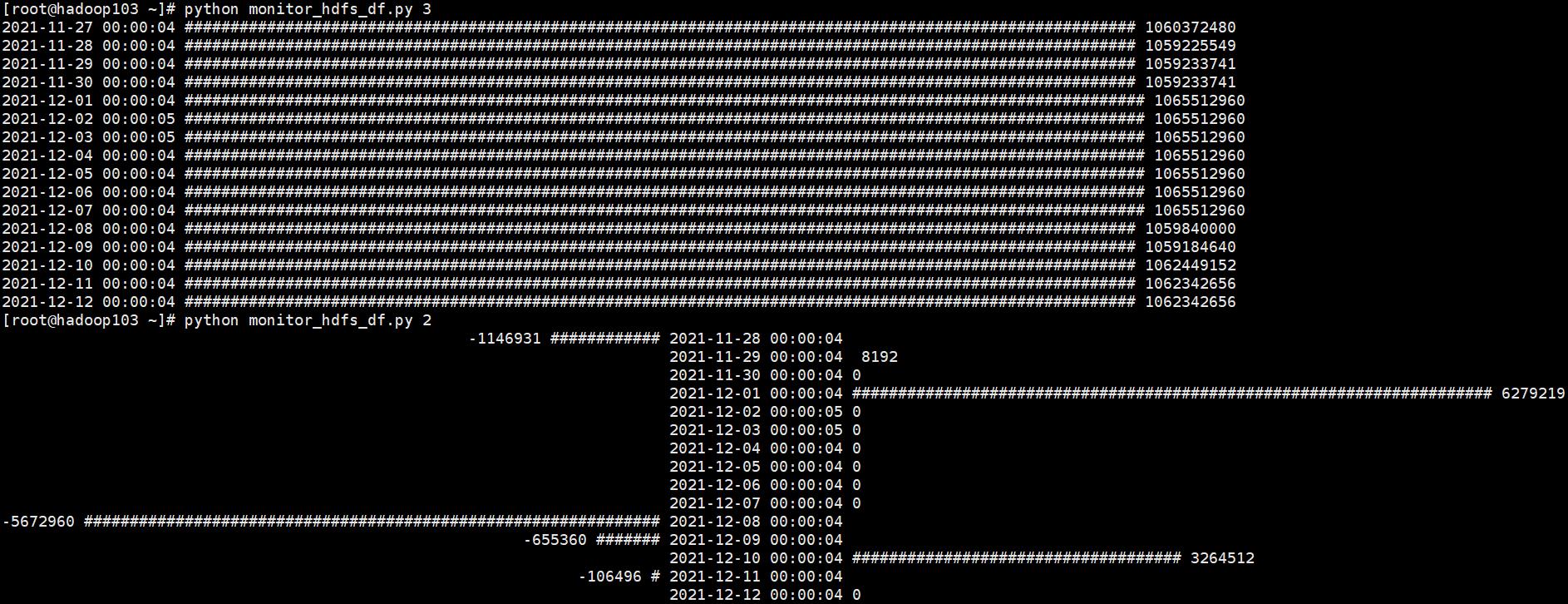

3. Viewing the data

View the data volume

python monitor_hdfs_df.py 3

View the data increments

python monitor_hdfs_df.py 2

Screenshot

Some of the increments are negative…
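A negative increment simply means that the recorded `Used` value was lower than on the previous run, for example after data was deleted. As a rough sketch of how those days could be picked out, the snippet below computes the day-over-day differences the same way the script does and filters the negative ones. The records are made-up sample data, and `daily_increments` is a hypothetical helper name.

```python
def daily_increments(records):
    """Return (timestamp, delta) pairs for each pair of consecutive records."""
    return [
        (today, used_today - used_yesterday)
        for (today, used_today), (_, used_yesterday)
        in zip(records[1:], records[:-1])
    ]


# Hypothetical records in the same (timestamp, used bytes) shape that read() yields.
records = [
    ('2021-11-20 00:00:00', 1000),
    ('2021-11-21 00:00:00', 1500),
    ('2021-11-22 00:00:00', 1200),
]

increments = daily_increments(records)
negatives = [(ts, delta) for ts, delta in increments if delta < 0]

for ts, delta in negatives:
    print('{} shrank by {} bytes'.format(ts, -delta))
```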
