大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败

Posted 明哥的IT随笔

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败相关的知识,希望对你有一定的参考价值。

大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败

1 前言

大家好,我是明哥!

本文是大数据问题排查系列 的 kerberos问题排查子序列博文之一,讲述大数据集群开启 kerberos 安全认证后,hive作业执行失败的根本原因,解决方法与背后的原理和机制。

以下是正文。

2 问题现象

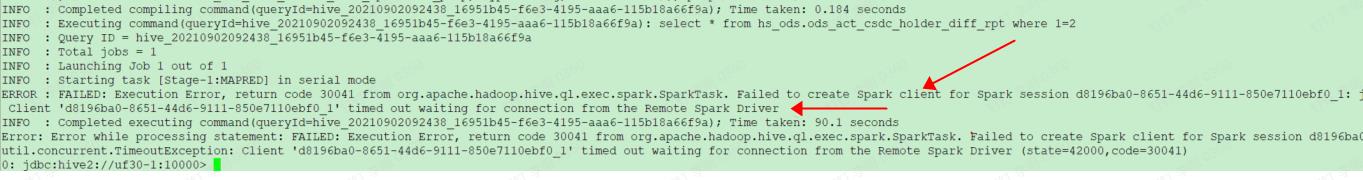

大数据集群开启 kerberos 安全认证后,HIVE ON SPARK 作业执行失败。通过客户端 beeline 提交作业,报错 spark client 创建失败,其报错信息是:

Failed to create spark client for spark session xxx: java.util.concurrent.TimeoutException: client xxx timedout waiting for connection from the remote spark driver

或者是:

Failed to create spark client for spark session xxx: java.lang.RuntimeException: spark-submit

客户端 beeline 的报错信息截图如下图所示:

3 问题分析

按照问题排查的常规思路,我们首先查看 hiveserver2 的日志,能发现核心报错信息 “Error while waiting for Remote Spark Driver to connect back to HiveServer2”,hiveserver2 的完整相关日志如下所示:

2021-09-02 11:01:29,496 ERROR org.apache.hive.spark.client.SparkClientImpl: [HiveServer2-Background-Pool: Thread-135]: Error while waiting for Remote Spark Driver to connect back to HiveServer2.

java.util.concurrent.ExecutionException: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41) ~[netty-common-4.1.17.Final.jar:4.1.17.Final]

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:103) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:90) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:104) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:100) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:77) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:131) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.access$600(SQLOperation.java:92) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:345) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_201]

at javax.security.auth.Subject.doAs(Subject.java:422) [?:1.8.0_201]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875) [hadoop-common-3.0.0-cdh6.3.2.jar:?]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:357) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_201]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_201]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_201]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_201]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_201]

Caused by: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at org.apache.hive.spark.client.SparkClientImpl$2.run(SparkClientImpl.java:495) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

... 1 more

2021-09-02 11:01:29,505 ERROR org.apache.hadoop.hive.ql.exec.spark.SparkTask: [HiveServer2-Background-Pool: Thread-135]: Failed to execute Spark task "Stage-1"

org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session f43a158c-168a-4117-8993-8f1780913715_0: java.lang.RuntimeException: spark-submit process failed with exit code 1 and error ?

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.getHiveException(SparkSessionImpl.java:286) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:135) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:132) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:131) ~[hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:122) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2200) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1843) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1563) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1339) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1334) [hive-exec-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:256) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation.access$600(SQLOperation.java:92) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:345) [hive-service-2.1.1-cdh6.3.2.jar:2.1.1-cdh6.3.2]

at java.security.AccessController.doPrivileged(Native Method) ~[?以上是关于大数据问题排查系列-大数据集群开启 kerberos 认证后 HIVE 作业执行失败的主要内容,如果未能解决你的问题,请参考以下文章

开启 Kerberos 安全的大数据环境中,Yarn Container 启动失败导致作业失败