Introduction to the Open-Source Keras Faster RCNN Model

Posted by ZSYL


Repository of the open-source Keras Faster RCNN model: https://github.com/jinfagang/keras_frcnn

1. Requirements

- 1. The code is written against the standalone Keras library, so Keras must be installed, and it must be version 2.0.3:

pip install keras==2.0.3

- 2. The code uses OpenCV to read, process, and annotate images, so OpenCV must also be installed:

pip install opencv-python

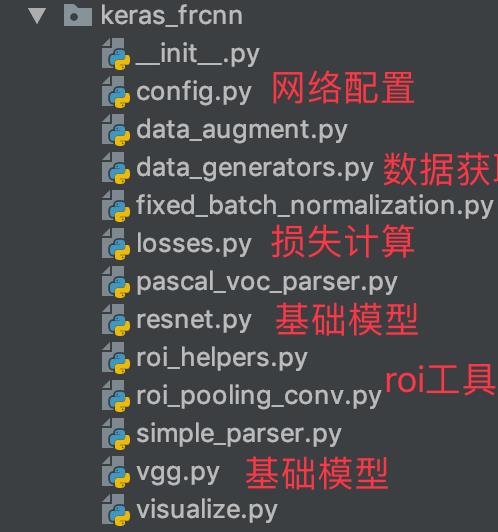

2. Keras Faster RCNN code structure

Layout of the source code:

3. Faster RCNN source code walkthrough

- detector: code for the FasterRCNNDetector object detector

```python
from keras.layers import Input
from keras.models import Model
from keras.optimizers import Adam

# nn, cfg, losses_fn, classes_count and input_shape_img come from the rest of the repository
img_input = Input(shape=input_shape_img)
roi_input = Input(shape=(None, 4))

# define the base network (resnet here, can be VGG, Inception, etc)
shared_layers = nn.nn_base(img_input, trainable=True)

# define the RPN, built on the base layers
num_anchors = len(cfg.anchor_box_scales) * len(cfg.anchor_box_ratios)
rpn = nn.rpn(shared_layers, num_anchors)

classifier = nn.classifier(shared_layers, roi_input, cfg.num_rois, nb_classes=len(classes_count), trainable=True)

model_rpn = Model(img_input, rpn[:2])
model_classifier = Model([img_input, roi_input], classifier)

# this is a model that holds both the RPN and the classifier, used to load/save weights for the models
model_all = Model([img_input, roi_input], rpn[:2] + classifier)

try:
    # report the path that is actually loaded below
    print('loading weights from {}'.format(cfg.model_path))
    model_rpn.load_weights(cfg.model_path, by_name=True)
    model_classifier.load_weights(cfg.model_path, by_name=True)
except Exception as e:
    print(e)
    print('Could not load pretrained model weights. Weights can be found in the keras application folder '
          'https://github.com/fchollet/keras/tree/master/keras/applications')

optimizer = Adam(lr=1e-5)
optimizer_classifier = Adam(lr=1e-5)
model_rpn.compile(optimizer=optimizer,
                  loss=[losses_fn.rpn_loss_cls(num_anchors), losses_fn.rpn_loss_regr(num_anchors)])
model_classifier.compile(optimizer=optimizer_classifier,
                         loss=[losses_fn.class_loss_cls, losses_fn.class_loss_regr(len(classes_count) - 1)],
                         metrics={'dense_class_{}'.format(len(classes_count)): 'accuracy'})
# model_all only exists to save/load weights, so its optimizer and loss are placeholders
model_all.compile(optimizer='sgd', loss='mae')
```
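The detector sizes the RPN heads with `num_anchors = len(cfg.anchor_box_scales) * len(cfg.anchor_box_ratios)`, meaning one anchor per (scale, ratio) pair at every feature-map position. A minimal sketch of how those anchors could be laid out around one position, assuming three scales of 128, 256 and 512 pixels and three area-preserving aspect ratios (values resembling, but not guaranteed to match, the repository's config); `make_anchors` is a hypothetical helper, not part of keras_frcnn.

```python
import math

# Assumed values; check the repository's config for the real ones.
ANCHOR_SCALES = [128, 256, 512]
# (width factor, height factor); each pair multiplies to 1, so the anchor area stays scale**2
ANCHOR_RATIOS = [(1.0, 1.0),
                 (1.0 / math.sqrt(2), 2.0 / math.sqrt(2)),
                 (2.0 / math.sqrt(2), 1.0 / math.sqrt(2))]


def make_anchors(cx, cy, scales=ANCHOR_SCALES, ratios=ANCHOR_RATIOS):
    """Hypothetical helper: return (x1, y1, x2, y2) anchors centred on (cx, cy)."""
    anchors = []
    for scale in scales:
        for rw, rh in ratios:
            w = scale * rw
            h = scale * rh
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(300, 300)
num_anchors = len(ANCHOR_SCALES) * len(ANCHOR_RATIOS)
print(num_anchors, len(anchors))  # 9 9
print(anchors[0])                 # (236.0, 236.0, 364.0, 364.0)
```

Because the RPN predicts one score and four deltas per anchor, this count is exactly the channel multiplier used by the two 1x1 convolutions in `rpn`.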

4. RPN and classifier definitions

- RPN structure

```python
from keras.layers import Convolution2D


def rpn(base_layers, num_anchors):
    # 3x3 convolution shared by both RPN heads
    x = Convolution2D(512, (3, 3), padding='same', activation='relu', kernel_initializer='normal', name='rpn_conv1')(
        base_layers)
    # one objectness score (sigmoid) per anchor at every position
    x_class = Convolution2D(num_anchors, (1, 1), activation='sigmoid', kernel_initializer='uniform',
                            name='rpn_out_class')(x)
    # four box-regression deltas per anchor at every position
    x_regr = Convolution2D(num_anchors * 4, (1, 1), activation='linear', kernel_initializer='zero',
                           name='rpn_out_regress')(x)
    return [x_class, x_regr, base_layers]
```
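`x_regr` outputs four numbers per anchor that move and resize the anchor into a proposal. A minimal sketch of that mapping, assuming the standard Faster R-CNN parametrisation (centre offsets scaled by anchor size, width and height in log space); the repository may additionally rescale the deltas by a constant before this step, which is not modelled here, and `decode_box`/`encode_box` are hypothetical names.

```python
import math


def decode_box(anchor, deltas):
    """Apply (tx, ty, tw, th) deltas to an (x1, y1, x2, y2) anchor."""
    x1, y1, x2, y2 = anchor
    wa, ha = x2 - x1, y2 - y1
    cxa, cya = x1 + wa / 2, y1 + ha / 2
    tx, ty, tw, th = deltas
    cx = cxa + tx * wa           # centre shift proportional to anchor size
    cy = cya + ty * ha
    w = wa * math.exp(tw)        # width/height scaled in log space
    h = ha * math.exp(th)
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def encode_box(anchor, box):
    """Inverse of decode_box: the regression target that maps anchor onto box."""
    x1, y1, x2, y2 = anchor
    wa, ha = x2 - x1, y2 - y1
    cxa, cya = x1 + wa / 2, y1 + ha / 2
    bx1, by1, bx2, by2 = box
    w, h = bx2 - bx1, by2 - by1
    cx, cy = bx1 + w / 2, by1 + h / 2
    return ((cx - cxa) / wa, (cy - cya) / ha, math.log(w / wa), math.log(h / ha))


anchor = (236.0, 236.0, 364.0, 364.0)
print(decode_box(anchor, (0.5, 0.0, 0.0, 0.0)))  # (300.0, 236.0, 428.0, 364.0)
```

Zero deltas reproduce the anchor, which fits the `kernel_initializer='zero'` choice for `rpn_out_regress`: at initialisation every proposal starts as its anchor.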

- Classifier structure

```python
from keras import backend as K
from keras.layers import Dense, Flatten, TimeDistributed

# RoiPoolingConv and classifier_layers are defined elsewhere in the repository


def classifier(base_layers, input_rois, num_rois, nb_classes=21, trainable=False):
    # compile times on theano tend to be very high, so we use smaller ROI pooling regions to workaround
    if K.backend() == 'tensorflow':
        pooling_regions = 14
        input_shape = (num_rois, 14, 14, 1024)
    elif K.backend() == 'theano':
        pooling_regions = 7
        input_shape = (num_rois, 1024, 7, 7)

    # ROI pooling: turn each ROI into a fixed pooling_regions x pooling_regions feature grid
    out_roi_pool = RoiPoolingConv(pooling_regions, num_rois)([base_layers, input_rois])
    out = classifier_layers(out_roi_pool, input_shape=input_shape, trainable=True)
    out = TimeDistributed(Flatten())(out)

    # classification: softmax over nb_classes (background included)
    out_class = TimeDistributed(Dense(nb_classes, activation='softmax', kernel_initializer='zero'),
                                name='dense_class_{}'.format(nb_classes))(out)
    # regression: 4 deltas per class
    # note: no regression target for bg class
    out_regr = TimeDistributed(Dense(4 * (nb_classes - 1), activation='linear', kernel_initializer='zero'),
                               name='dense_regress_{}'.format(nb_classes))(out)
    return [out_class, out_regr]
```
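The classifier relies on ROI pooling to give every ROI the same spatial size (14x14 on the TensorFlow backend, 7x7 on Theano) before the per-ROI layers. As an illustration only, here is classic max-based ROI pooling in the style of Fast R-CNN for a single ROI; the repository's `RoiPoolingConv` layer may resample differently (for example crop-and-resize), so check its source. The `(x, y, w, h)` ROI format in feature-map cells and the name `roi_max_pool` are assumptions.

```python
import numpy as np


def roi_max_pool(feature_map, roi, pool_size):
    """Max-pool one ROI of an (H, W, C) feature map into (pool_size, pool_size, C).

    roi is (x, y, w, h) in feature-map cells; w and h are assumed >= pool_size.
    """
    x, y, w, h = roi
    region = feature_map[y:y + h, x:x + w, :]
    out = np.zeros((pool_size, pool_size, feature_map.shape[2]), dtype=feature_map.dtype)
    # integer bin edges that split the region as evenly as possible
    ys = np.linspace(0, h, pool_size + 1).astype(int)
    xs = np.linspace(0, w, pool_size + 1).astype(int)
    for i in range(pool_size):
        for j in range(pool_size):
            # guard against empty bins by taking at least one row/column
            cell = region[ys[i]:max(ys[i + 1], ys[i] + 1), xs[j]:max(xs[j + 1], xs[j] + 1), :]
            out[i, j, :] = cell.max(axis=(0, 1))
    return out


fm = np.arange(16, dtype=np.float32).reshape(4, 4, 1)
print(roi_max_pool(fm, (0, 0, 4, 4), 2)[:, :, 0])  # [[ 5.  7.] [13. 15.]]
```

Whatever the ROI size, the output grid is fixed, which is what lets `TimeDistributed(Flatten())` and the dense heads work on every ROI.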

5. data_generators.py: takes the image data, the augmentation configuration, and a flag for whether to apply image augmentation

- IoU computation:

```python
from __future__ import absolute_import
import numpy as np
import cv2
import random
import copy
from . import data_augment
import threading
import itertools


# union: area(a) + area(b) - area(a ∩ b)
def union(au, bu, area_intersection):
    area_a = (au[2] - au[0]) * (au[3] - au[1])
    area_b = (bu[2] - bu[0]) * (bu[3] - bu[1])
    area_union = area_a + area_b - area_intersection
    return area_union


# intersection: area of the overlapping rectangle, 0 if the boxes do not overlap
def intersection(ai, bi):
    x = max(ai[0], bi[0])
    y = max(ai[1], bi[1])
    w = min(ai[2], bi[2]) - x
    h = min(ai[3], bi[3]) - y
    if w < 0 or h < 0:
        return 0
    return w*h


# intersection over union
def iou(a, b):
    # a and b should be (x1,y1,x2,y2)
    if a[0] >= a[2] or a[1] >= a[3] or b[0] >= b[2] or b[1] >= b[3]:
        return 0.0

    area_i = intersection(a, b)
    area_u = union(a, b, area_i)

    return float(area_i) / float(area_u + 1e-6)
```
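The data generator uses IoU to decide which anchors count as objects. A self-contained sketch with a worked value, plus a simplified labelling rule assuming the commonly used 0.7/0.3 thresholds (the repository reads its own thresholds from the config). `label_anchor` is hypothetical, and Faster R-CNN's extra rule that forces each ground-truth box's best anchor to be positive is omitted.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes, same logic as data_generators.iou."""
    if a[0] >= a[2] or a[1] >= a[3] or b[0] >= b[2] or b[1] >= b[3]:
        return 0.0
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    inter = w * h if w >= 0 and h >= 0 else 0
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return float(inter) / float(union + 1e-6)


# Assumed thresholds for this sketch only.
POS_IOU = 0.7
NEG_IOU = 0.3


def label_anchor(anchor, gt_boxes):
    """Hypothetical helper: 'pos', 'neg' or 'neutral' from the best IoU with any ground truth."""
    best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
    if best > POS_IOU:
        return 'pos'
    if best < NEG_IOU:
        return 'neg'
    return 'neutral'   # ignored by the RPN loss


# 10x10 boxes overlapping in a 5x5 corner: 25 / (100 + 100 - 25)
print(round(iou((0, 0, 10, 10), (5, 5, 15, 15)), 4))  # 0.1429
```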

6. Loss computation: losses.py

- RPN regression and classification losses

```python
from keras import backend as K

# lambda_rpn_regr, lambda_rpn_class and epsilon are module-level constants defined elsewhere in losses.py


def rpn_loss_regr(num_anchors):
    def rpn_loss_regr_fixed_num(y_true, y_pred):
        # y_true holds a 4*num_anchors mask (1 for positive anchors) followed by the 4*num_anchors targets;
        # the loss is smooth L1, counted only where the mask is 1
        if K.image_dim_ordering() == 'th':
            x = y_true[:, 4 * num_anchors:, :, :] - y_pred
            x_abs = K.abs(x)
            x_bool = K.cast(K.less_equal(x_abs, 1.0), 'float32')
            return lambda_rpn_regr * K.sum(
                y_true[:, :4 * num_anchors, :, :] * (x_bool * (0.5 * x * x) + (1 - x_bool) * (x_abs - 0.5))) / K.sum(epsilon + y_true[:, :4 * num_anchors, :, :])
        else:
            x = y_true[:, :, :, 4 * num_anchors:] - y_pred
            x_abs = K.abs(x)
            x_bool = K.cast(K.less_equal(x_abs, 1.0), 'float32')
            return lambda_rpn_regr * K.sum(
                y_true[:, :, :, :4 * num_anchors] * (x_bool * (0.5 * x * x) + (1 - x_bool) * (x_abs - 0.5))) / K.sum(epsilon + y_true[:, :, :, :4 * num_anchors])

    return rpn_loss_regr_fixed_num


def rpn_loss_cls(num_anchors):
    def rpn_loss_cls_fixed_num(y_true, y_pred):
        # y_true holds a num_anchors validity mask followed by the num_anchors objectness labels
        if K.image_dim_ordering() == 'tf':
            return lambda_rpn_class * K.sum(y_true[:, :, :, :num_anchors] * K.binary_crossentropy(y_pred[:, :, :, :], y_true[:, :, :, num_anchors:])) / K.sum(epsilon + y_true[:, :, :, :num_anchors])
        else:
            return lambda_rpn_class * K.sum(y_true[:, :num_anchors, :, :] * K.binary_crossentropy(y_pred[:, :, :, :], y_true[:, num_anchors:, :, :])) / K.sum(epsilon + y_true[:, :num_anchors, :, :])

    return rpn_loss_cls_fixed_num
```
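To make the masking concrete, here is a NumPy re-statement of the channels-last branches of both RPN losses, with a worked value. The weights of 1.0 and `EPSILON = 1e-4` are assumptions standing in for the module-level constants. Note that `K.sum(epsilon + mask)` adds epsilon once per element, so the denominator is the mask sum plus epsilon times the mask size.

```python
import numpy as np

EPSILON = 1e-4           # assumed value of losses.epsilon
LAMBDA_RPN_REGR = 1.0    # assumed loss weights
LAMBDA_RPN_CLASS = 1.0


def smooth_l1(x):
    abs_x = np.abs(x)
    return np.where(abs_x <= 1.0, 0.5 * x * x, abs_x - 0.5)


def rpn_regr_loss_np(y_true, y_pred, num_anchors):
    mask = y_true[..., :4 * num_anchors]      # 1 only for positive anchors
    target = y_true[..., 4 * num_anchors:]    # regression targets
    return LAMBDA_RPN_REGR * np.sum(mask * smooth_l1(target - y_pred)) / np.sum(EPSILON + mask)


def rpn_cls_loss_np(y_true, y_pred, num_anchors):
    valid = y_true[..., :num_anchors]         # 1 for anchors that take part in the loss
    label = y_true[..., num_anchors:]         # 1 = object, 0 = background
    p = np.clip(y_pred, 1e-7, 1 - 1e-7)
    bce = -(label * np.log(p) + (1 - label) * np.log(1 - p))
    return LAMBDA_RPN_CLASS * np.sum(valid * bce) / np.sum(EPSILON + valid)


# one anchor on a 1x1 feature map: residuals 0.5 and 2.0 give 0.125 + 1.5 = 1.625
y_true = np.array([1, 1, 1, 1, 0.5, 2.0, 0.0, 0.0]).reshape(1, 1, 1, 8)
y_pred = np.zeros((1, 1, 1, 4))
print(round(rpn_regr_loss_np(y_true, y_pred, num_anchors=1), 4))  # 0.4062
```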

- Fast R-CNN classification and regression losses

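```python
from keras import backend as K
from keras.losses import categorical_crossentropy

# lambda_cls_regr, lambda_cls_class and epsilon are module-level constants defined elsewhere in losses.py


def class_loss_regr(num_classes):
    def class_loss_regr_fixed_num(y_true, y_pred):
        # y_true holds a 4*num_classes mask followed by 4*num_classes targets (background excluded)
        x = y_true[:, :, 4*num_classes:] - y_pred
        x_abs = K.abs(x)
        x_bool = K.cast(K.less_equal(x_abs, 1.0), 'float32')
        return lambda_cls_regr * K.sum(y_true[:, :, :4*num_classes] * (x_bool * (0.5 * x * x) + (1 - x_bool) * (x_abs - 0.5))) / K.sum(epsilon + y_true[:, :, :4*num_classes])
    return class_loss_regr_fixed_num


def class_loss_cls(y_true, y_pred):
    # mean categorical cross-entropy over the ROIs of the first image in the batch
    return lambda_cls_class * K.mean(categorical_crossentropy(y_true[0, :, :], y_pred[0, :, :]))
```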
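`class_loss_regr` receives `num_classes = len(classes_count) - 1`, so its mask only has slots for foreground classes, and a ROI trains just the four deltas of its own class. A sketch of how one ROI's target row could be built and how the classification loss averages over ROIs, assuming background uses the last class index and loss weights of 1.0; `make_regr_target` and `class_cls_loss_np` are hypothetical names.

```python
import numpy as np

LAMBDA_CLS_CLASS = 1.0  # assumed loss weight


def make_regr_target(class_idx, deltas, num_classes):
    """Hypothetical helper: one ROI's y_true row for class_loss_regr.

    num_classes counts foreground classes only; background is assumed to use
    index num_classes (the last one) and gets an all-zero mask.
    """
    mask = np.zeros(4 * num_classes)
    target = np.zeros(4 * num_classes)
    if class_idx < num_classes:  # foreground: enable only this class's 4 slots
        mask[4 * class_idx:4 * class_idx + 4] = 1.0
        target[4 * class_idx:4 * class_idx + 4] = deltas
    return np.concatenate([mask, target])


def class_cls_loss_np(y_true, y_pred):
    """Mean categorical cross-entropy over the ROIs of image 0 (rows of y_pred sum to 1)."""
    p = np.clip(y_pred[0], 1e-7, 1.0)
    return LAMBDA_CLS_CLASS * float(np.mean(-np.sum(y_true[0] * np.log(p), axis=-1)))


row = make_regr_target(1, [0.1, 0.2, 0.3, 0.4], num_classes=2)
print(row[:8])  # mask: only slots 4..7 are 1
```

A background ROI contributes nothing to the regression loss, which is what the "no regression target for bg class" comment in `classifier` refers to.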
