「深度学习一遍过」必修7:模型训练验证与可视化

Posted 荣仔!最靓的仔!

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了「深度学习一遍过」必修7:模型训练验证与可视化相关的知识,希望对你有一定的参考价值。

本专栏用于记录关于深度学习的笔记,不光方便自己复习与查阅,同时也希望能给您解决一些关于深度学习的相关问题,并提供一些微不足道的人工神经网络模型设计思路。

专栏地址:「深度学习一遍过」必修篇

目录

1 Create Dataset

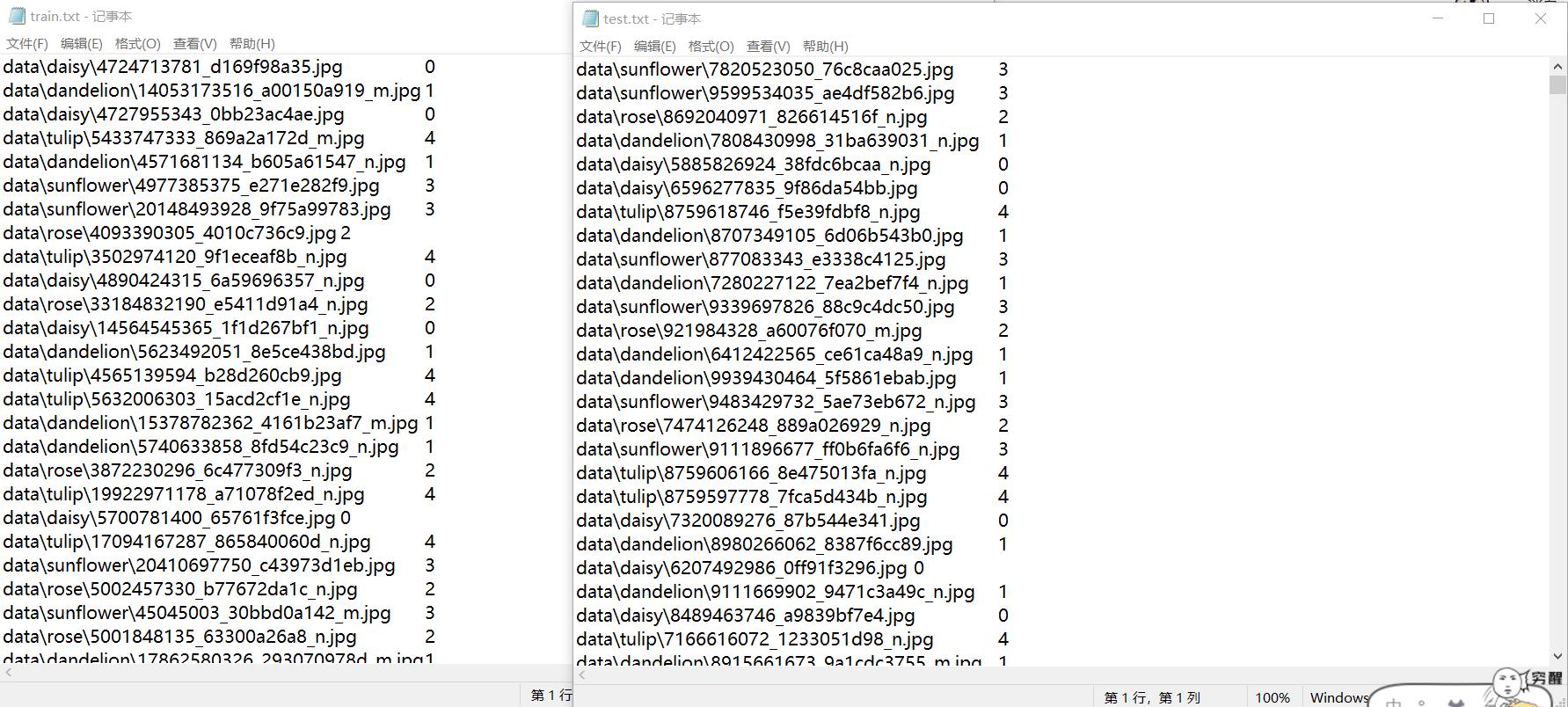

1.1 生成训练集和测试集

import os

import random # 打乱数据用的

def CreateTrainingSet():

# 百分之80用来当训练集

train_ratio = 0.8

# 用来当测试集

test_ratio = 1-train_ratio

rootdata = r"data" # 数据的根目录

train_list, test_list = [], [] # 读取里面每一类的类别

data_list = []

# 生产train.txt和test.txt

class_flag = -1

for a,b,c in os.walk(rootdata):

print(a)

for i in range(len(c)):

data_list.append(os.path.join(a,c[i]))

for i in range(0,int(len(c)*train_ratio)):

train_data = os.path.join(a, c[i])+'\\t'+str(class_flag)+'\\n'

train_list.append(train_data)

for i in range(int(len(c) * train_ratio),len(c)):

test_data = os.path.join(a, c[i]) + '\\t' + str(class_flag)+'\\n'

test_list.append(test_data)

class_flag += 1

print(train_list)

random.shuffle(train_list) # 打乱次序

random.shuffle(test_list)

with open('train.txt','w',encoding='UTF-8') as f:

for train_img in train_list:

f.write(str(train_img))

with open('test.txt','w',encoding='UTF-8') as f:

for test_img in test_list:

f.write(test_img)

if __name__ == "__main__":

CreateTrainingSet()

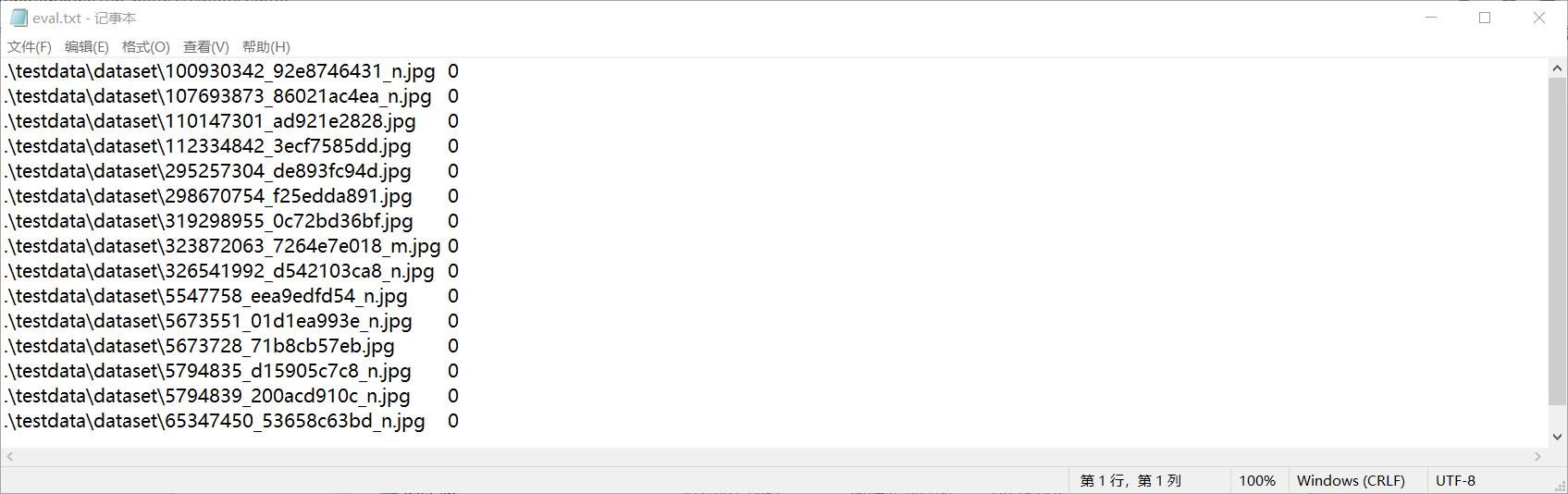

1.2 生成验证集

import os

import random#打乱数据用的

def CreateEvalData():

data_list = []

test_root = r".\\testdata"

for a, b, c in os.walk(test_root):

for i in range(len(c)):

data_list.append(os.path.join(a, c[i]))

print(data_list)

with open('eval.txt', 'w', encoding='UTF-8') as f:

for test_img in data_list:

f.write(test_img + '\\t' + "0" + '\\n')

if __name__ == "__main__":

CreateEvalData()

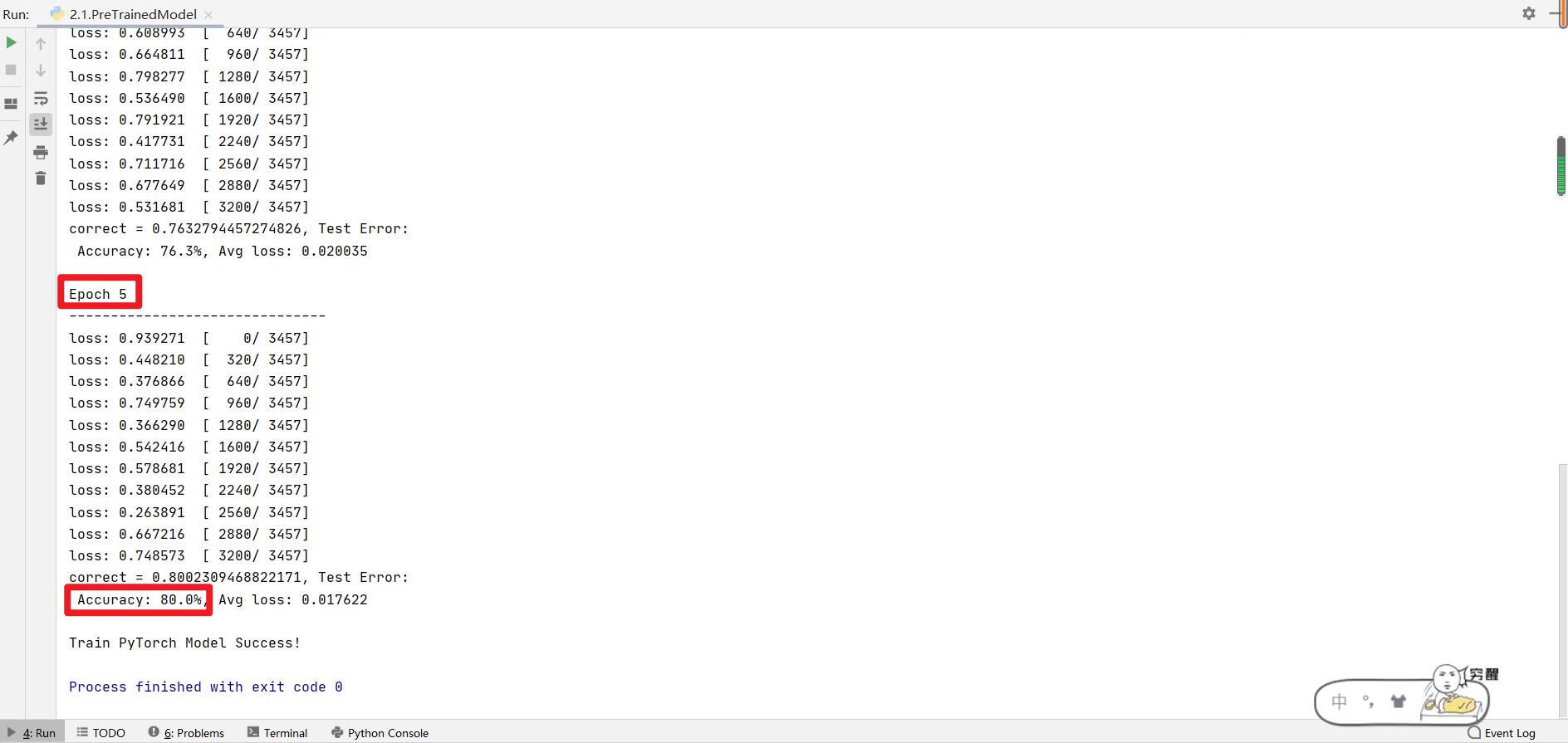

2 模型训练

2.1 都进行微调

'''

纪录训练信息,包括:

1. train loss

2. test loss

3. test accuracy

'''

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision.models import resnet18

from utils import LoadData, write_result

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

avg_total = 0.0

# 从数据加载器中读取batch(一次读取多少张,即批次数),X(图片数据),y(图片真实标签)。

for batch, (X, y) in enumerate(dataloader):

# 将数据存到显卡

X, y = X.cuda(), y.cuda()

# 得到预测的结果pred

pred = model(X)

# 计算预测的误差

loss = loss_fn(pred, y)

avg_total = avg_total+loss.item()

# 反向传播,更新模型参数

optimizer.zero_grad()

loss.backward()

optimizer.step()

# 每训练10次,输出一次当前信息

if batch % 10 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>5f} [{current:>5d}/{size:>5d}]")

avg_loss = f"{(avg_total % batch_size):>5f}"

return avg_loss

def test(dataloader, model):

size = len(dataloader.dataset)

# 将模型转为验证模式

model.eval()

# 初始化test_loss 和 correct, 用来统计每次的误差

test_loss, correct = 0, 0

# 测试时模型参数不用更新,所以no_gard()

# 非训练, 推理期用到

with torch.no_grad():

# 加载数据加载器,得到里面的X(图片数据)和y(真实标签)

for X, y in dataloader:

# 将数据转到GPU

X, y = X.cuda(), y.cuda()

# 将图片传入到模型当中就,得到预测的值pred

pred = model(X)

# 计算预测值pred和真实值y的差距

test_loss += loss_fn(pred, y).item()

# 统计预测正确的个数

correct += (pred.argmax(1) == y).type(torch.float).sum().item()

test_loss /= size

correct /= size

accuracy = f"{(100*correct):>0.1f}"

avg_loss = f"{test_loss:>8f}"

print(f"correct = {correct}, Test Error: \\n Accuracy: {accuracy}%, Avg loss: {avg_loss} \\n")

# 增加数据写入功能

return accuracy, avg_loss

if __name__ == '__main__':

batch_size = 32

# # 给训练集和测试集分别创建一个数据集加载器

train_data = LoadData("train.txt", True)

valid_data = LoadData("test.txt", False)

train_dataloader = DataLoader(dataset=train_data, num_workers=4, pin_memory=True, batch_size=batch_size, shuffle=True)

test_dataloader = DataLoader(dataset=valid_data, num_workers=4, pin_memory=True, batch_size=batch_size)

# 如果显卡可用,则用显卡进行训练

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using {device} device")

'''

修改ResNet18模型的最后一层

'''

pretrain_model = resnet18(pretrained=False)

num_ftrs = pretrain_model.fc.in_features # 获取全连接层的输入

pretrain_model.fc = nn.Linear(num_ftrs, 5) # 全连接层改为不同的输出

pretrained_dict = torch.load('./resnet18_pretrain.pth')

# # 弹出fc层的参数

pretrained_dict.pop('fc.weight')

pretrained_dict.pop('fc.bias')

# # 自己的模型参数变量,在开始时里面参数处于初始状态,所以很多0和1

model_dict = pretrain_model.state_dict()

# # 去除一些不需要的参数

pretrained_dict = {k: v for k, v in pretrained_dict.items() if k in model_dict}

# # 模型参数列表进行参数更新,加载参数

model_dict.update(pretrained_dict)

# 改进过的预训练模型结构,加载刚刚的模型参数列表

pretrain_model.load_state_dict(model_dict)

'''

冻结部分层

'''

# 将满足条件的参数的 requires_grad 属性设置为False

for name, value in pretrain_model.named_parameters():

if (name != 'fc.weight') and (name != 'fc.bias'):

value.requires_grad = False

#

# filter 函数将模型中属性 requires_grad = True 的参数选出来

params_conv = filter(lambda p: p.requires_grad, pretrain_model.parameters()) # 要更新的参数在parms_conv当中

model = pretrain_model.to(device)

# 定义损失函数,计算相差多少,交叉熵,

loss_fn = nn.CrossEntropyLoss()

''' 控制优化器只更新需要更新的层 '''

optimizer = torch.optim.SGD(params_conv, lr=1e-3) # 初始学习率

#

# 一共训练5次

epochs = 5

best = 0.0

for t in range(epochs):

print(f"Epoch {t + 1}\\n-------------------------------")

train_loss = train(train_dataloader, model, loss_fn, optimizer)

accuracy, avg_loss = test(test_dataloader, model)

write_result("mobilenet_36_traindata.txt", t+1, train_loss, avg_loss, accuracy)

if (t+1) % 10 == 0:

torch.save(model.state_dict(), "resnet_epoch_"+str(t+1)+"_acc_"+str(accuracy)+".pth")

if float(accuracy) > best:

best = float(accuracy)

torch.save(model.state_dict(), "BEST_resnet_epoch_" + str(t+1) + "_acc_" + str(accuracy) + ".pth")

print("Train PyTorch Model Success!")

2.2 只微调最后

'''

纪录训练信息,包括:

1. train loss

2. test loss

3. test accuracy

'''

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision.models import resnet18

from utils import LoadData, write_result

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

avg_total = 0.0

# 从数据加载器中读取batch(一次读取多少张,即批次数),X(图片数据),y(图片真实标签)。

for batch, (X, y) in enumerate(dataloader):

# 将数据存到显卡

# X, y = X.cuda(), y.cuda()

# 得到预测的结果pred

pred = model(X)

# 计算预测的误差

loss = loss_fn(pred, y)

avg_total = avg_total+loss.item()

# 反向传播,更新模型参数

optimizer.zero_grad()

loss.backward()

optimizer.step()

# 每训练10次,输出一次当前信息

if batch % 10 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>5f} [{current:>5d}/{size:>5d}]")

avg_loss = f"{(avg_total % batch_size):>5f}"

return avg_loss

def test(dataloader, model):

size = len(dataloader.dataset)

# 将模型转为验证模式

model.eval()

# 初始化test_loss 和 correct, 用来统计每次的误差

test_loss, correct = 0, 0

# 测试时模型参数不用更新,所以no_gard()

# 非训练, 推理期用到

with torch.no_grad():

# 加载数据加载器,得到里面的X(图片数据)和y(真实标签)

for X, y in dataloader:

# 将数据转到GPU

# X, y = X.cuda(), y.cuda()

# 将图片传入到模型当中就,得到预测的值pred

pred = model(X)

# 计算预测值pred和真实值y的差距

test_loss += loss_fn(pred, y).item()

# 统计预测正确的个数

correct += (pred.argmax(1) == y).type(torch.float).sum().item()

test_loss /= size

correct /= size

accuracy = f"{(100*correct):>0.1f}"

avg_loss = f"{test_loss:>8f}"

print(f"correct = {correct}, Test Error: \\n Accuracy: {accuracy}%, Avg loss: {avg_loss} \\n")

# 增加数据写入功能

return accuracy, avg_loss

if __name__ == '__main__':

batch_size = 32

# # 给训练集和测试集分别创建一个数据集加载器

train_data = LoadData("train.txt", True)

valid_data = LoadData("test.txt", False)

train_dataloader = DataLoader(dataset=train_data, num_workers=4, pin_memory=True, batch_size=batch_size, shuffle=True)

test_dataloader = DataLoader(dataset=valid_data, num_workers=4, pin_memory=True, batch_size=batch_size)

# 如果显卡可用,则用显卡进行训练

device = "cpu"

print(f"Using {device} device")

'''

修改ResNet18模型的最后一层

'''

finetune_net = resnet18(pretrained=True)

finetune_net.fc = nn.Linear(finetune_net.fc.in_features, 5)

nn.init.xavier_normal_(finetune_net.fc.weight)

'''

冻结部分层

'''

parms_1x = [value for name, value in finetune_net.named_parameters() if name not in ["fc.weight", "fc.bias"]]

# 最后一层10倍学习率

parms_10x = [value for name, value in finetune_net.named_parameters() if name in ["fc.weight", "fc.bias"]]

model = finetune_net.to(device)

# 定义损失函数,计算相差多少,交叉熵,

loss_fn = nn.CrossEntropyLoss()

''' 控制优化器只更新需要更新的层 '''

learning_rate = 1e-4

optimizer = torch.optim.SGD([

{

'params': parms_1x

},

{

'params':parms_10x,

'lr':learning_rate * 10

}],lr=learning_rate)

#

# 一共训练5次

epochs = 5

best = 0.0

for t in range(epochs):

print(f"Epoch {t + 1}\\n-------------------------------")

train_loss = train(train_dataloader, model, loss_fn, optimizer)

accuracy, avg_loss = test(test_dataloader, model)

write_result("model_traindata.txt", t+1, train_loss, avg_loss, accuracy)

if (t+1) % 10 == 0:

torch.save(model.state_dict(), "resnet_epoch_"+str(t+1)+"_acc_"+str(accuracy)+".pth")

if float(accuracy) > best:

best = float(accuracy)

torch.save(model.state_dict(), "BEST_resnet_epoch_" + str(t+1) + "_acc_" + str(accuracy) + ".pth")

print("Train PyTorch Model Success!")

2.3 从头开始训练不微调

'''

纪录训练信息,包括:

1. train loss

2. test loss

3. test accuracy

'''

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision.models import resnet18

from utils import LoadData, write_result

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

avg_total = 0.0

# 从数据加载器中读取batch(一次读取多少张,即批次数),X(图片数据),y(图片真实标签)。

for batch, (X, y) in enumerate(dataloader):

# 将数据存到显卡

# X, y = X.cuda(), y.cuda()

# 得到预测的结果pred

pred = model(X)

# 计算预测的误差

loss = loss_fn(pred, y)

avg_total = avg_total+loss.item()

# 反向传播,更新模型参数

optimizer.zero_grad()

loss.backward()

optimizer.step()

# 每训练10次,输出一次当前信息

if batch % 10 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>5f} [{current:>5d}/{size:>5d}]")

avg_loss = f"{(avg_total % batch_size):>5f}"

return avg_loss

def test(dataloader, model):

size = len(dataloader.dataset)

# 将模型转为验证模式

model.eval()

# 初始化test_loss 和 correct, 用来统计每次的误差

test_loss, correct = 0, 0

# 测试时模型参数不用更新,所以no_gard()

# 非训练, 推理期用到

with torch.no_grad():

# 加载数据加载器,得到里面的X(图片数据)和y(真实标签)

for X, y in dataloader:

# 将数据转到GPU

# X, y = X.cuda(), y.cuda()

# 将图片传入到模型当中就,得到预测的值pred

pred = model(X)

# 计算预测值pred和真实值y的差距

test_loss += loss_fn(pred, y).item()

# 统计预测正确的个数

correct += (pred.argmax(1) == y).type(torch.float).sum().item()

test_loss /= size

correct /= size

accuracy = f"{(100*correct):>0.1f}"

avg_loss = f"{test_loss:>8f}"

print(f"correct = {correct}, Test Error: \\n Accuracy: {accuracy}%, Avg loss: {avg_loss} \\n")

# 增加数据写入功能

return accuracy, avg_loss

if __name__ == '__main__':

batch_size = 32

# # 给训练集和测试集分别创建一个数据集加载器

train_data = LoadData("train.txt", True)

valid_data = LoadData("test.txt", False)

train_dataloader = DataLoader(dataset=train_data, num_workers=4, pin_memory=True, batch_size=batch_size, shuffle=True)

test_dataloader = DataLoader(dataset=valid_data, num_workers=4, pin_memory=True, batch_size=batch_size)

# 如果显卡可用,则用显卡进行训练

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f"Using {device} device")

'''

修改ResNet18模型的最后一层

'''

model = resnet18(pretrained=False, num_classes=5)

# 定义损失函数,计算相差多少,交叉熵,

loss_fn = nn.CrossEntropyLoss()

''' 控制优化器只更新需要更新的层 '''

optimizer = torch.optim.SGD(model.parameters(), lr=1e-3) # 初始学习率

#

# 一共训练5次

epochs = 5

best = 0.0

for t in range(epochs):

print(f"Epoch {t + 1}\\n-------------------------------")

train_loss = train(train_dataloader, model, loss_fn, optimizer)

accuracy, avg_loss = test(test_dataloader, model)

write_result("model_traindata.txt", t+1, train_loss, avg_loss, accuracy)

if (t+1) % 10 == 0:

torch.save(model.state_dict(), "resnet_epoch_"+str(t+1)+"_acc_"+str(accuracy)+".pth")

if float(accuracy) > best:

best = float(accuracy)

torch.save(model.state_dict(), "BEST_resnet_epoch_" + str(t+1) + "_acc_" + str(accuracy) + ".pth")

print("Train PyTorch Model Success!")

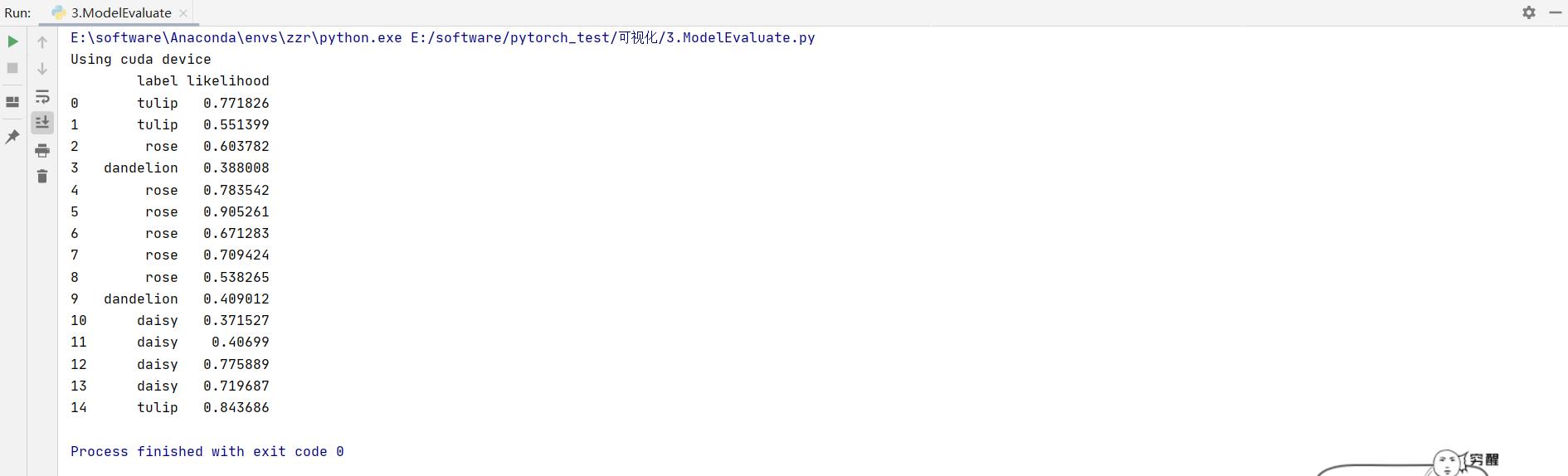

3 模型验证与可视化

3.1 模型验证

import torch

from torch import nn

from torch.utils.data import DataLoader

from torchvision.models import resnet18

from utils import LoadData, write_result

import pandas as pd

def eval(dataloader, model):

label_list = []

likelihood_list = []

model.eval()

with torch.no_grad():

# 加载数据加载器,得到里面的X(图片数据)和y(真实标签)

for X, y in dataloader:

# 将数据转到GPU

X = X.cuda()

# 将图片传入到模型当中就,得到预测的值pred

pred = model(X)

# 获取可能性最大的标签

label = torch.softmax(pred,1).cpu().numpy().argmax()

label_list.append(label)

# 获取可能性最大的值(即概率)

likelihood = torch.softmax(pred,1).cpu().numpy().max()

likelihood_list.append(likelihood)

return label_list,likelihood_list

if __name__ == "__main__":

'''

1. 导入模型结构

'''

model = resnet18(pretrained=False)

num_ftrs = model.fc.in_features # 获取全连接层的输入

model.fc = nn.Linear(num_ftrs, 5) # 全连接层改为不同的输出

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Using {device} device")

'''

2. 加载模型参数

'''

model_loc = "./BEST_resnet_epoch_10_acc_80.9.pth"

model_dict = torch.load(model_loc)

model.load_state_dict(model_dict)

model = model.to(device)

'''

3. 加载图片

'''

valid_data = LoadData("eval.txt", train_flag=False)

test_dataloader = DataLoader(dataset=valid_data, num_workers=2, pin_memory=True, batch_size=1)

'''

4. 获取结果

'''

label_list, likelihood_list = eval(test_dataloader, model)

label_names = ["daisy", "dandelion","rose","sunflower","tulip"]

result_names = [label_names[i] for i in label_list]

list = [result_names, likelihood_list]

df = pd.DataFrame(data=list)

df2 = pd.DataFrame(df.values.T, columns=["label", "likelihood"])

print(df2)

df2.to_csv('testdata.csv', encoding='gbk')

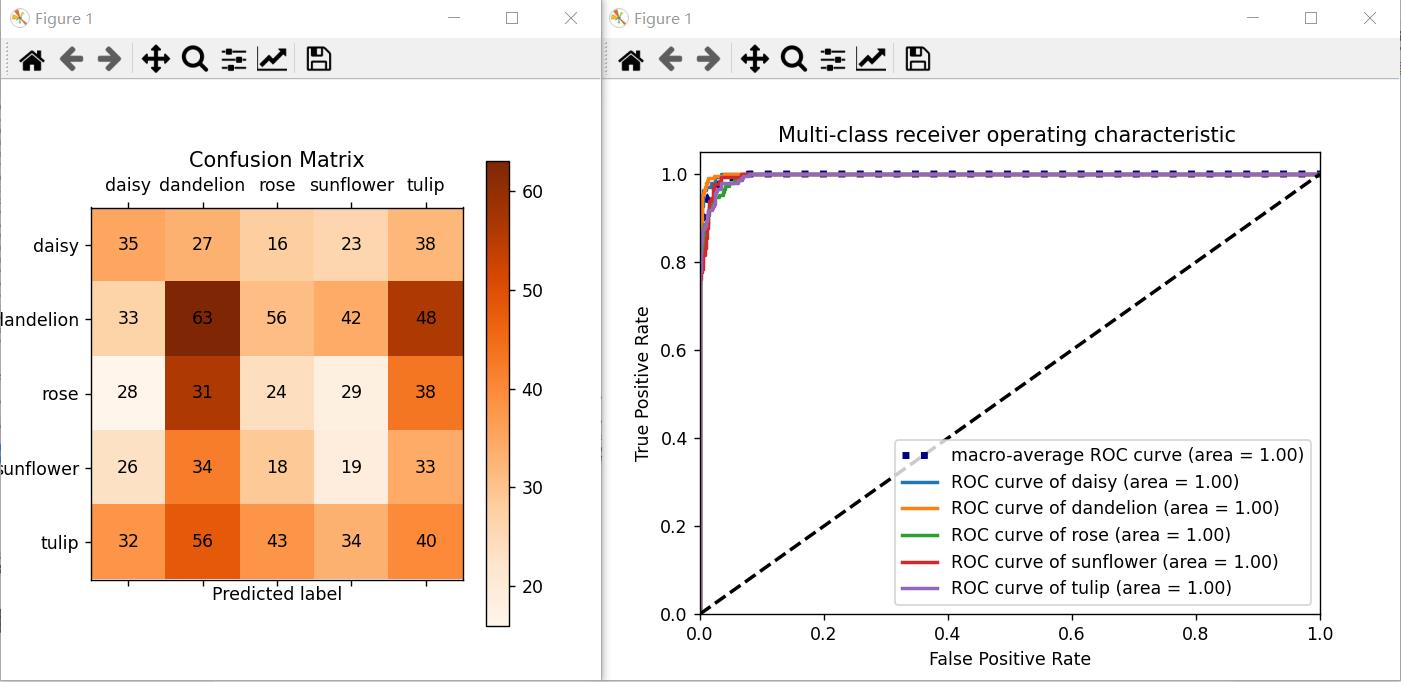

3.2 混淆矩阵、召回率、精准率、ROC曲线可视化

'''

模型性能度量

'''

from sklearn.metrics import * # pip install scikit-learn

import matplotlib.pyplot as plt # pip install matplotlib

import numpy as np # pip install numpy

from numpy import interp

from sklearn.preprocessing import label_binarize

import pandas as pd # pip install pandas

'''

读取数据

需要读取模型输出的标签(predict_label)以及原本的标签(true_label)

'''

target_loc = "test.txt"

target_data = pd.read_csv(target_loc, sep="\\t", names=["loc","type"])

true_label = [i for i in target_data["type"]]

predict_loc = "testdata.csv"

predict_data = pd.read_csv(predict_loc)#,index_col=0)

predict_label = predict_data.to_numpy().argmax(axis=1)

predict_score = predict_data.to_numpy().max(axis=1)

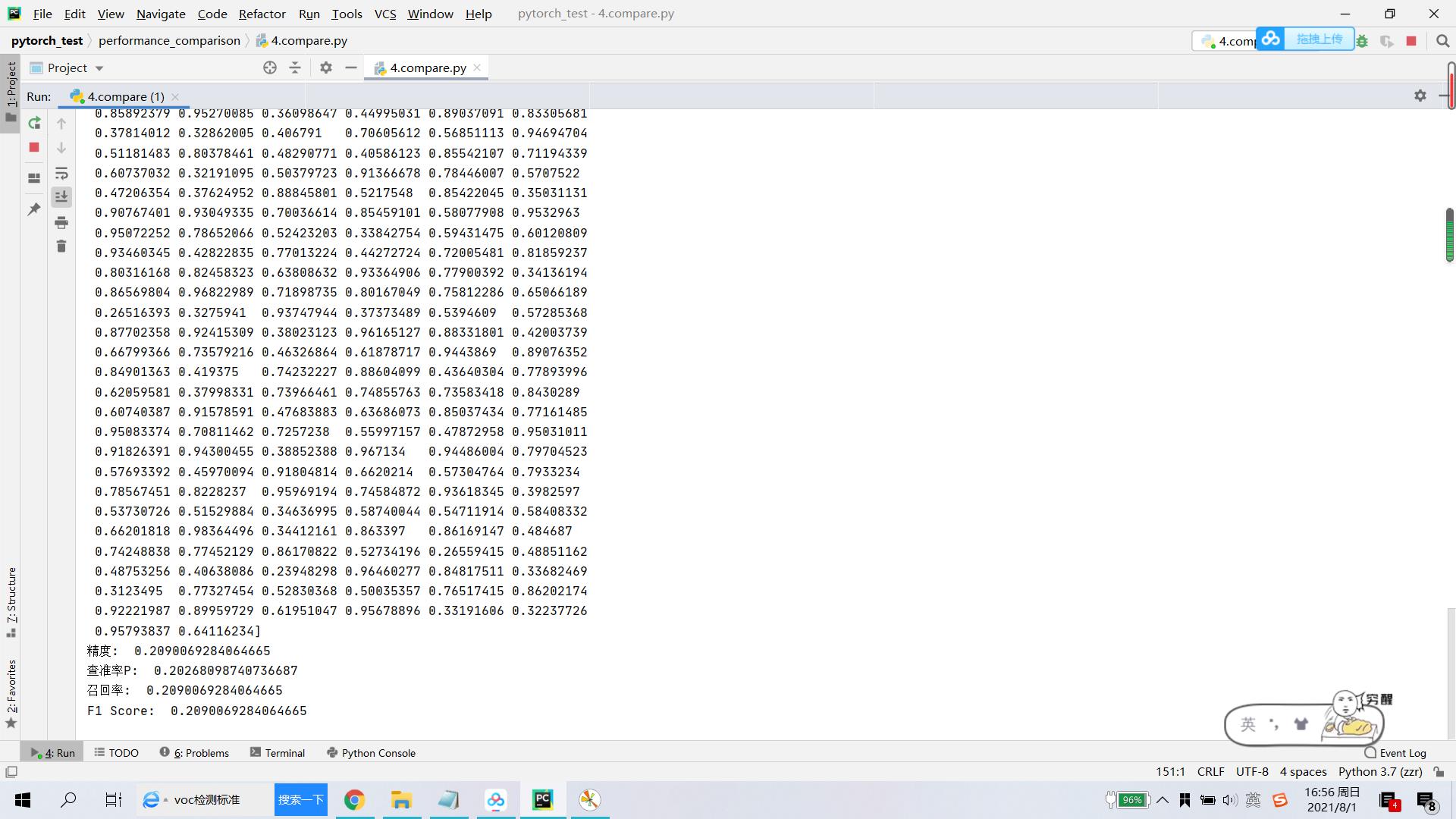

print("predict_score = ",predict_score )

'''

常用指标:精度,查准率,召回率,F1-Score

'''

# 精度,准确率, 预测正确的占所有样本种的比例

accuracy = accuracy_score(true_label, predict_label)

print("精度: ",accuracy)

# 查准率P(准确率),precision(查准率)=TP/(TP+FP)

precision = precision_score(true_label, predict_label, labels=None, pos_label=1, average='macro') # 'micro', 'macro', 'weighted'

print("查准率P: ",precision)

# 查全率R(召回率),原本为对的,预测正确的比例;recall(查全率)=TP/(TP+FN)

recall = recall_score(true_label, predict_label, average='micro') # 'micro', 'macro', 'weighted'

print("召回率: ",recall)

# F1-Score

f1 = f1_score(true_label, predict_label, average='micro') # 'micro', 'macro', 'weighted'

print("F1 Score: ",f1)

'''

混淆矩阵

'''

label_names = ["daisy", "dandelion","rose","sunflower","tulip"]

confusion = confusion_matrix(true_label, predict_label, labels=[i for i in range(len(label_names))])

# print("混淆矩阵: \\n",confusion)

plt.matshow(confusion, cmap=plt.cm.Oranges) # Greens, Blues, Oranges, Reds

plt.colorbar()

for i in range(len(confusion)):

for j in range(len(confusion)):

plt.annotate(confusion[i,j], xy=(i, j), horizontalalignment='center', verticalalignment='center')

plt.ylabel('True label')

plt.xlabel('Predicted label')

plt.xticks(range(len(label_names)), label_names)

plt.yticks(range(len(label_names)), label_names)

plt.title("Confusion Matrix")

plt.show()

'''

ROC曲线(多分类)

'''

n_classes = len(label_names)

binarize_predict = label_binarize(predict_label, classes=[i for i in range(n_classes)])

# 读取预测结果

predict_score = predict_data.to_numpy()

# 计算每一类的ROC

fpr = dict()

tpr = dict()

roc_auc = dict()

for i in range(n_classes):

fpr[i], tpr[i], _ = roc_curve(binarize_predict[:,i], [socre_i[i] for socre_i in predict_score])

roc_auc[i] = auc(fpr[i], tpr[i])

all_fpr = np.unique(np.concatenate([fpr[i] for i in range(n_classes)]))

# Then interpolate all ROC curves at this points

mean_tpr = np.zeros_like(all_fpr)

for i in range(n_classes):

mean_tpr += interp(all_fpr, fpr[i], tpr[i])

# Finally average it and compute AUC

mean_tpr /= n_classes

fpr["macro"] = all_fpr

tpr["macro"] = mean_tpr

roc_auc["macro"] = auc(fpr["macro"], tpr["macro"])

# Plot all ROC curves

lw = 2

plt.figure()

plt.plot(fpr["macro"], tpr["macro"],

label='macro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["macro"]),

color='navy', linestyle=':', linewidth=4)

for i in range(n_classes):

plt.plot(fpr[i], tpr[i], lw=lw, label='ROC curve of {0} (area = {1:0.2f})'.format(label_names[i], roc_auc[i]))

plt.plot([0, 1], [0, 1], 'k--', lw=lw)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Multi-class receiver operating characteristic ')

plt.legend(loc="lower right")

plt.show()

欢迎大家交流评论,一起学习

希望本文能帮助您解决您在这方面遇到的问题

感谢阅读

END

以上是关于「深度学习一遍过」必修7:模型训练验证与可视化的主要内容,如果未能解决你的问题,请参考以下文章

「深度学习一遍过」必修28:基于C3D预训练模型训练自己的视频分类数据集的设计与实现

「深度学习一遍过」必修28:基于C3D预训练模型训练自己的视频分类数据集的设计与实现