Hadoop 2.7.3 Fully Distributed Cluster Maintenance: Adding a DataNode Dynamically

Posted by 一泽涟漪



Existing environment:

http://www.cnblogs.com/ilifeilong/p/7406944.html

| IP | Host | JDK | Linux | Hadoop | Role |
|----|------|-----|-------|--------|------|
| 172.16.101.55 | sht-sgmhadoopnn-01 | 1.8.0_111 | CentOS release 6.5 | hadoop-2.7.3 | NameNode, SecondaryNameNode, ResourceManager |
| 172.16.101.58 | sht-sgmhadoopdn-01 | 1.8.0_111 | CentOS release 6.5 | hadoop-2.7.3 | DataNode, NodeManager |
| 172.16.101.59 | sht-sgmhadoopdn-02 | 1.8.0_111 | CentOS release 6.5 | hadoop-2.7.3 | DataNode, NodeManager |
| 172.16.101.60 | sht-sgmhadoopdn-03 | 1.8.0_111 | CentOS release 6.5 | hadoop-2.7.3 | DataNode, NodeManager |
| 172.16.101.66 | sht-sgmhadoopdn-04 | 1.8.0_111 | CentOS release 6.5 | hadoop-2.7.3 | DataNode, NodeManager |

The plan is to add one new DataNode to the cluster: sht-sgmhadoopdn-04 (172.16.101.66), the last row of the table.

1. Configure the system environment

Set up the hostname, passwordless SSH trust, environment variables, and so on.
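Getting the hostnames right usually means every node can resolve every other node by name. A minimal sketch, assuming resolution is done through `/etc/hosts` (the original does not say which mechanism is used); `add_host_entry` is a hypothetical helper, not part of Hadoop:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): append "IP<TAB>HOST" to a hosts file
# unless HOST is already mapped there. Assumes /etc/hosts-based name resolution.
add_host_entry() {
  local hosts_file=$1 ip=$2 host=$3
  # Look for HOST as a whole field after the IP column, skipping comment lines
  if [ -f "$hosts_file" ] && awk -v h="$host" '
      /^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$hosts_file"; then
    echo "$host already present in $hosts_file"
  else
    printf '%s\t%s\n' "$ip" "$host" >> "$hosts_file"
    echo "added $ip $host to $hosts_file"
  fi
}
```

Run it as root on every node, e.g. `add_host_entry /etc/hosts 172.16.101.66 sht-sgmhadoopdn-04`; calling it twice is harmless. For the SSH trust part, running `ssh-copy-id hduser@sht-sgmhadoopdn-04` from the NameNode is one common way to install the key.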

2. On the NameNode, edit the slaves file and add the new node

    $ cat slaves
    sht-sgmhadoopdn-01
    sht-sgmhadoopdn-02
    sht-sgmhadoopdn-03
    sht-sgmhadoopdn-04
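The `slaves` file is read by the cluster start/stop scripts such as `start-dfs.sh` and `start-yarn.sh` to decide which hosts to SSH into. As far as we know, a running NameNode does not consult it (unless an include file is configured through `dfs.hosts`), but updating it keeps future full restarts consistent. A sketch of an idempotent append, assuming the Hadoop 2.x location `$HADOOP_HOME/etc/hadoop/slaves`; `add_slave` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add HOST to a Hadoop slaves file (one hostname per line)
# only if it is not listed yet. Assumed location: $HADOOP_HOME/etc/hadoop/slaves
add_slave() {
  local slaves_file=$1 host=$2
  # -q quiet, -x whole-line match, -F fixed string (hostnames contain dots/dashes)
  if grep -qxF "$host" "$slaves_file" 2>/dev/null; then
    return 0
  fi
  # If the last line lacks a trailing newline, add one so HOST lands on its own line
  if [ -s "$slaves_file" ] && [ -n "$(tail -c 1 "$slaves_file")" ]; then
    echo >> "$slaves_file"
  fi
  echo "$host" >> "$slaves_file"
}
```

Usage: `add_slave "$HADOOP_HOME/etc/hadoop/slaves" sht-sgmhadoopdn-04`.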

3. On the NameNode, copy hadoop-2.7.3 to the new node, then delete the files in the data and logs directories on the new node

    $ hostname
    sht-sgmhadoopnn-01
    $ rsync -az --progress /usr/local/hadoop-2.7.3/* hduser@sht-sgmhadoopdn-04:/usr/local/hadoop-2.7.3/

    $ hostname
    sht-sgmhadoopdn-04
    $ rm -rf /usr/local/hadoop-2.7.3/logs/*
    $ rm -rf /usr/local/hadoop-2.7.3/data/*
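Step 3 copies the whole installation, including whatever the NameNode has under `data/` and `logs/`; emptying those on the new node lets the DataNode initialise fresh storage instead of starting from copied files. A slightly safer sketch of the cleanup, assuming the `/usr/local/hadoop-2.7.3` layout used here; `reset_node_dirs` is a hypothetical helper. Unlike `rm -rf dir/*`, it aborts when called without an argument (so it can never expand to `/data/*`) and also removes dotfiles:

```shell
#!/usr/bin/env bash
# Hypothetical helper: empty the data/ and logs/ directories of a Hadoop install,
# keeping the directories themselves. Run it on the NEW node only.
reset_node_dirs() {
  # Abort if no path was given, instead of silently operating on "/"
  local hadoop_home=${1:?usage: reset_node_dirs HADOOP_HOME}
  local d
  for d in data logs; do
    # Only touch directories that actually exist under the given home
    if [ -d "$hadoop_home/$d" ]; then
      # -mindepth 1 keeps the directory itself; unlike a "*" glob this also hits dotfiles
      find "$hadoop_home/$d" -mindepth 1 -delete
    fi
  done
}
```

On sht-sgmhadoopdn-04 only: `reset_node_dirs /usr/local/hadoop-2.7.3`.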

4. Start the DataNode daemon on the new node

    $ hadoop-daemon.sh start datanode
    starting datanode, logging to /usr/local/hadoop-2.7.3/logs/hadoop-hduser-datanode-sht-sgmhadoopdn-04.out
    $ jps
    31875 Jps
    31821 DataNode
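Step 4 confirms the daemon by reading the `jps` listing. The same check can be scripted; a minimal sketch, where `daemon_running` is a hypothetical helper that matches the second column of `jps` output exactly (so `DataNode` does not match, say, a `DataNodeFoo` class):

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a JVM whose name equals $1 appears in
# jps-style output ("<pid> <name>" per line) read from stdin.
daemon_running() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

Usage on the new node: `jps | daemon_running DataNode && echo "DataNode is up"`.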

5. On the NameNode, check the current cluster status and confirm that the new node has joined successfully

5.1 From the command line

    $ hdfs dfsadmin -report
    Configured Capacity: 303324561408 (282.49 GB)
    Present Capacity: 83729309696 (77.98 GB)
    DFS Remaining: 83081265152 (77.38 GB)
    DFS Used: 648044544 (618.02 MB)
    DFS Used%: 0.77%
    Under replicated blocks: 0
    Blocks with corrupt replicas: 0
    Missing blocks: 0
    Missing blocks (with replication factor 1): 0

    -------------------------------------------------
    Live datanodes (4):

    Name: 172.16.101.66:50010 (sht-sgmhadoopdn-04)
    Hostname: sht-sgmhadoopdn-04
    Decommission Status : Normal
    Configured Capacity: 75831140352 (70.62 GB)
    DFS Used: 24576 (24 KB)
    Non DFS Used: 35573932032 (33.13 GB)
    DFS Remaining: 40257183744 (37.49 GB)
    DFS Used%: 0.00%
    DFS Remaining%: 53.09%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Fri Sep 01 22:50:16 CST 2017

    Name: 172.16.101.60:50010 (sht-sgmhadoopdn-03)
    Hostname: sht-sgmhadoopdn-03
    Decommission Status : Normal
    Configured Capacity: 75831140352 (70.62 GB)
    DFS Used: 216006656 (206 MB)
    Non DFS Used: 61714608128 (57.48 GB)
    DFS Remaining: 13900525568 (12.95 GB)
    DFS Used%: 0.28%
    DFS Remaining%: 18.33%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Fri Sep 01 22:50:15 CST 2017

    Name: 172.16.101.59:50010 (sht-sgmhadoopdn-02)
    Hostname: sht-sgmhadoopdn-02
    Decommission Status : Normal
    Configured Capacity: 75831140352 (70.62 GB)
    DFS Used: 216006656 (206 MB)
    Non DFS Used: 62057410560 (57.80 GB)
    DFS Remaining: 13557723136 (12.63 GB)
    DFS Used%: 0.28%
    DFS Remaining%: 17.88%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Fri Sep 01 22:50:14 CST 2017

    Name: 172.16.101.58:50010 (sht-sgmhadoopdn-01)
    Hostname: sht-sgmhadoopdn-01
    Decommission Status : Normal
    Configured Capacity: 75831140352 (70.62 GB)
    DFS Used: 216006656 (206 MB)
    Non DFS Used: 60249300992 (56.11 GB)
    DFS Remaining: 15365832704 (14.31 GB)
    DFS Used%: 0.28%
    DFS Remaining%: 20.26%
    Configured Cache Capacity: 0 (0 B)
    Cache Used: 0 (0 B)
    Cache Remaining: 0 (0 B)
    Cache Used%: 100.00%
    Cache Remaining%: 0.00%
    Xceivers: 1
    Last contact: Fri Sep 01 22:50:15 CST 2017

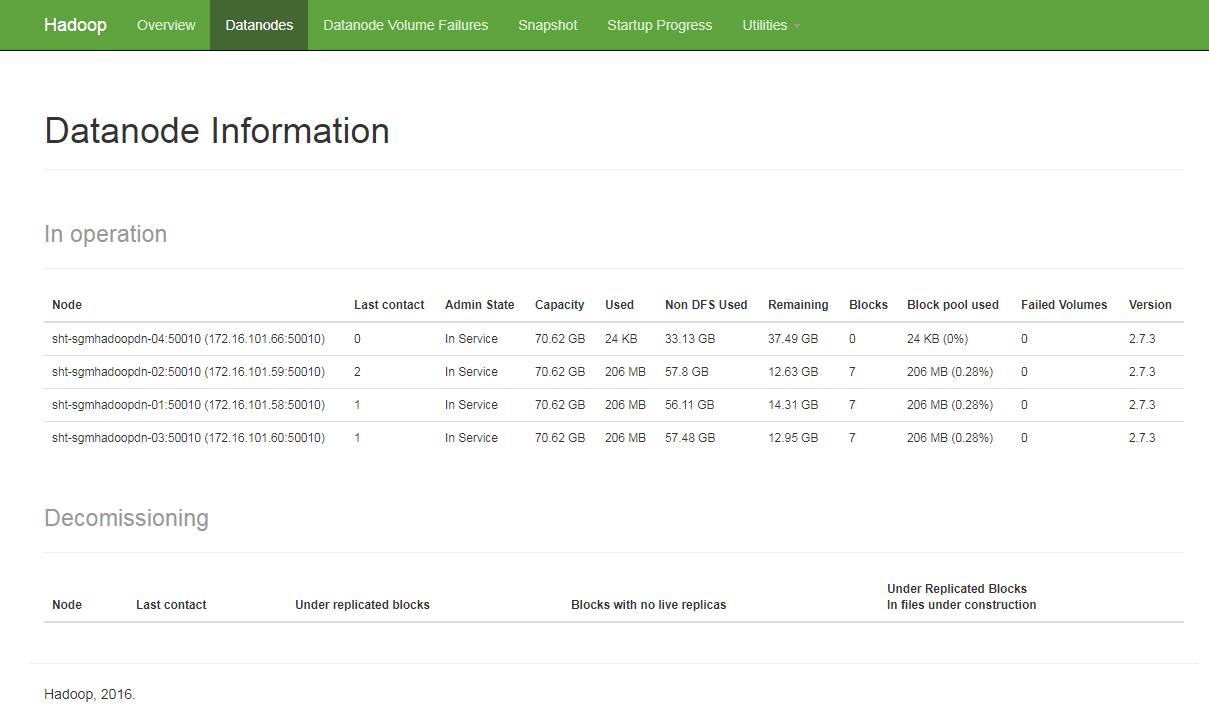
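The full report in step 5.1 is long; when all you need is "did the node count go up, and how full is each node", a small filter helps. A sketch that assumes the Hadoop 2.7 report layout seen in step 5.1 (a `Live datanodes (N):` line followed by per-node `Hostname:` and `DFS Used%:` lines); `summarize_report` is a hypothetical helper and other Hadoop versions may format the report differently:

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarise `hdfs dfsadmin -report` text read from stdin.
# Prints "live=N", then one "<hostname> <DFS Used%>" line per live DataNode.
summarize_report() {
  awk '
    /^Live datanodes [(]/ {
      n = $0
      gsub(/[^0-9]/, "", n)        # keep only the digits, e.g. "4"
      print "live=" n
      in_live = 1                  # cluster-wide lines before this point are ignored
      next
    }
    /^Dead datanodes/ { in_live = 0 }   # stop before any dead-node section
    in_live && /^Hostname:/  { host = $2 }
    in_live && /^DFS Used%:/ { print host, $3 }
  '
}
```

Usage on the NameNode: `hdfs dfsadmin -report | summarize_report`.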

5.2 Via the web UI
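Besides the HTML pages, the NameNode's HTTP server also exposes metrics as JSON under `/jmx`, which is handy for scripted checks. A sketch that extracts the live-DataNode count, assuming the default Hadoop 2.x HTTP port 50070 and the `NumLiveDataNodes` attribute of the `FSNamesystemState` bean; verify both against your own NameNode before relying on them. `live_datanodes_from_jmx` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the NumLiveDataNodes value found in NameNode JMX JSON
# read from stdin (prints nothing if the attribute is absent).
live_datanodes_from_jmx() {
  # Join the JSON onto one line, then capture the integer after "NumLiveDataNodes" :
  tr -d '\n' \
    | sed -n 's/.*"NumLiveDataNodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Usage (the URL is an assumption based on Hadoop 2.x defaults):
`curl -s 'http://sht-sgmhadoopnn-01:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState' | live_datanodes_from_jmx`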

6. On the NameNode, configure and start the HDFS balancer

    $ hdfs dfsadmin -setBalancerBandwidth 67108864
    Balancer bandwidth is set to 67108864
    $ start-balancer.sh -threshold 5
    starting balancer, logging to /usr/local/hadoop-2.7.3/logs/hadoop-hduser-balancer-sht-sgmhadoopnn-01.out

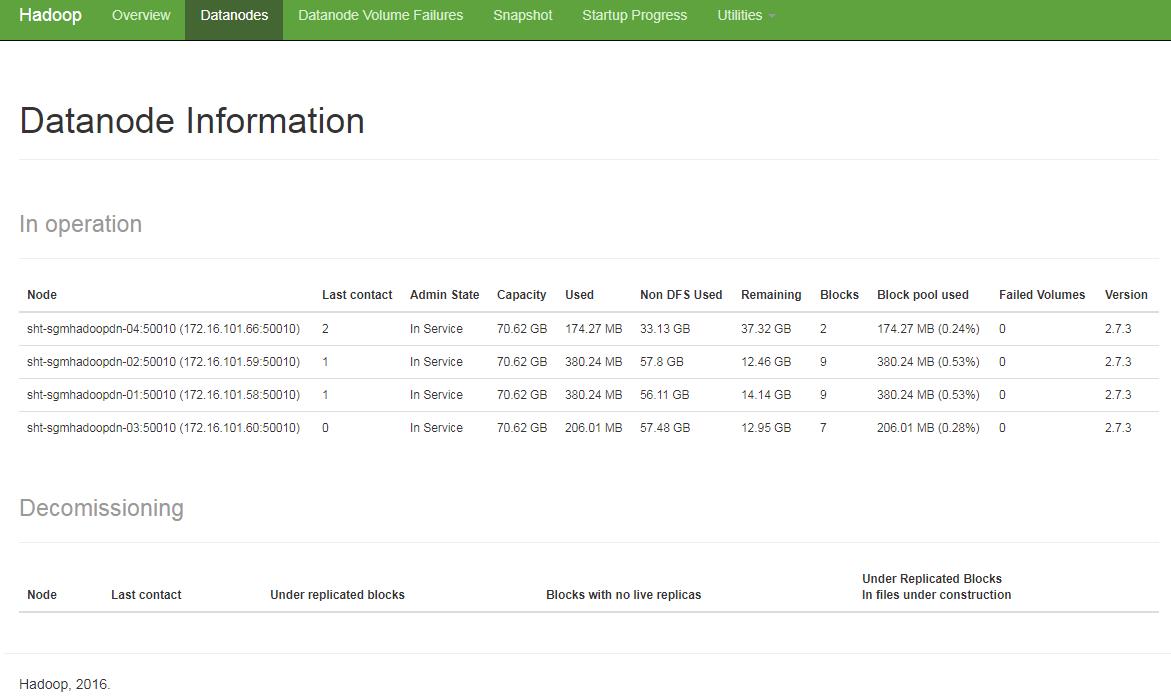
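`-setBalancerBandwidth` takes bytes per second per DataNode, so `67108864` is 64 × 1024 × 1024, i.e. 64 MB/s; the value is not persisted across DataNode restarts (the configured `dfs.datanode.balance.bandwidthPerSec` applies again). `-threshold 5` means a DataNode counts as balanced when its utilisation (DFS Used / Configured Capacity) is within 5 percentage points of the cluster average. The sketch below reproduces that over/under test so you can predict whether the balancer will move anything. It follows our reading of the default DataNode-level policy and is not the balancer's actual code; `nodes_outside_threshold` and its input format are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch of the balancer's "is this node out of balance?" test (our reading of the
# default DataNode-level policy, not the balancer's real code):
#   node utilisation    = DFS Used / Configured Capacity * 100
#   cluster utilisation = sum(DFS Used) / sum(Configured Capacity) * 100
# A node is printed when |node - cluster| > THRESHOLD percentage points.
# Input (hypothetical format): "hostname dfs_used_bytes configured_capacity_bytes" per line.
nodes_outside_threshold() {
  awk -v t="$1" '
    BEGIN { t += 0 }                       # force numeric threshold
    { host[NR] = $1; used[NR] = $2; cap[NR] = $3; sum_used += $2; sum_cap += $3 }
    END {
      if (sum_cap == 0) exit               # nothing to compare
      avg = sum_used * 100 / sum_cap
      for (i = 1; i <= NR; i++) {
        if (cap[i] == 0) continue          # skip nodes reporting no capacity
        u = used[i] * 100 / cap[i]
        d = u - avg
        if (d > t || -d > t)
          printf "%s %.4f%% (cluster average %.4f%%)\n", host[i], u, avg
      }
    }'
}
```

With the step 5.1 figures (24576 bytes used on sht-sgmhadoopdn-04, 216006656 on each of the other three, 75831140352 bytes capacity each), the cluster average is roughly 0.21% and every node is within 5 points, so the balancer should find nothing to move. That is consistent with the remark in step 7 that a large upload may be needed before any change shows up.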

7. Check the HDFS load distribution (when the nodes hold little data, the change may not be visible; uploading a large file helps for testing)

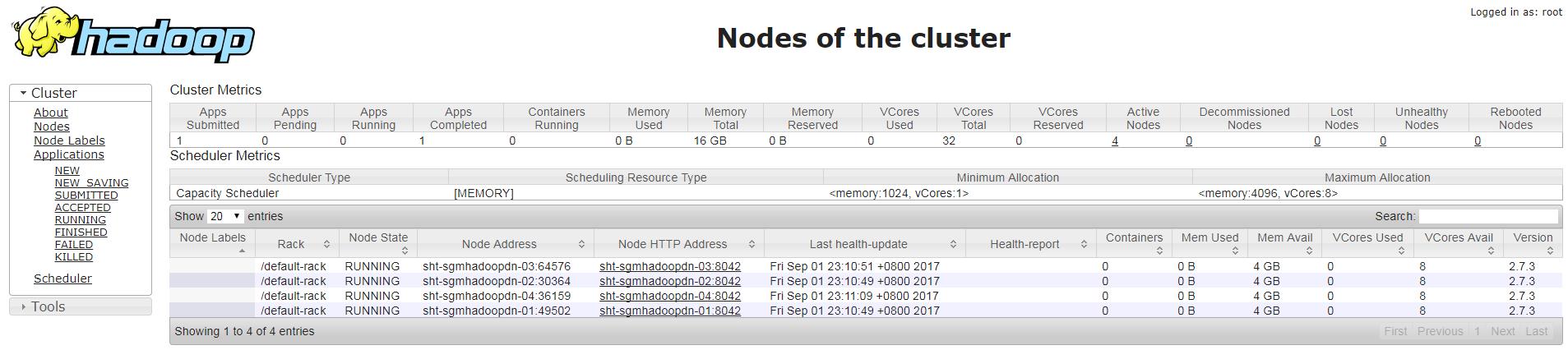
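For the "upload a large file" test in step 7, a file of a known size makes the change in the per-node DFS Used figures easy to recognise. A minimal sketch; `make_test_file`, the local path and the HDFS target directory are hypothetical placeholders:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a local file of SIZE_MB mebibytes filled with zeros,
# to be uploaded to HDFS as balancer test data.
make_test_file() {
  local path=$1 size_mb=$2
  # bs=1048576 (1 MiB) is spelled out because "1M" vs "1m" differs between GNU and BSD dd
  dd if=/dev/zero of="$path" bs=1048576 count="$size_mb" 2>/dev/null
}
```

Usage (placeholders): `make_test_file /tmp/balancer-test.dat 1024`, then `hdfs dfs -put /tmp/balancer-test.dat /tmp/` and rerun `hdfs dfsadmin -report`.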

8. Start the NodeManager daemon on the new node

    $ yarn-daemon.sh start nodemanager
    starting nodemanager, logging to /usr/local/hadoop-2.7.3/logs/yarn-hduser-nodemanager-sht-sgmhadoopdn-04.out
    $ jps
    32562 NodeManager
    32599 Jps
    31821 DataNode
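After this step the new node should run both a DataNode and a NodeManager. A sketch that checks the `jps` listing for every expected daemon at once and names the ones that are missing; `missing_daemons` is a hypothetical helper. From the ResourceManager side, `yarn node -list` should also show sht-sgmhadoopdn-04 once the NodeManager has registered.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print each expected daemon
# name (given as arguments) that is NOT running; returns 0 only if none is missing.
missing_daemons() {
  local running name rc=0
  running=$(awk '{ print $2 }')          # keep only the class-name column
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on the new node: `jps | missing_daemons DataNode NodeManager || echo "some daemons are not running"`.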
