Leaving Hadoop Safe Mode


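When I started Hive, I found that HDFS was already in safe mode (safe mode itself is explained in plenty of places online), so no operation could go through: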

    Logging initialized using configuration in jar:file:/apps/hive/lib/hive-common-1.2.1.jar!/hive-log4j.properties
    Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/root/a6844814-495e-45f6-a665-ff006311cdc1. Name node is in safe mode.
    The reported blocks 0 needs additional 22 blocks to reach the threshold 0.9990 of total blocks 22.
    The number of live datanodes 0 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1366)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4258)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4233)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:853)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:600)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)
        at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:677)
        at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:621)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
    Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/root/a6844814-495e-45f6-a665-ff006311cdc1. Name node is in safe mode.
    The reported blocks 0 needs additional 22 blocks to reach the threshold 0.9990 of total blocks 22.
    The number of live datanodes 0 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1366)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4258)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4233)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:853)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:600)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

        at org.apache.hadoop.ipc.Client.call(Client.java:1469)
        at org.apache.hadoop.ipc.Client.call(Client.java:1400)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
        at com.sun.proxy.$Proxy19.mkdirs(Unknown Source)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:539)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
        at com.sun.proxy.$Proxy20.mkdirs(Unknown Source)
        at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2742)
        at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2713)
        at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:870)
        at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:866)
        at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
        at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:866)
        at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:859)
        at org.apache.hadoop.hive.ql.session.SessionState.createPath(SessionState.java:639)
        at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:574)
        at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:508)
        ... 8 more
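The safe-mode message buried in that trace already carries the numbers that matter: reported blocks, the threshold, total blocks, and live DataNodes. As a rough sketch (the function names `parse_safe_mode_message` and `diagnose` are made up for illustration, and the pattern is only matched against the wording seen in this particular trace, which may differ in other Hadoop versions), the figures can be pulled out like this:

```python
import re

# Message text copied from the stack trace above.
MESSAGE = (
    "Name node is in safe mode. "
    "The reported blocks 0 needs additional 22 blocks to reach "
    "the threshold 0.9990 of total blocks 22. "
    "The number of live datanodes 0 has reached the minimum number 0. "
    "Safe mode will be turned off automatically once the thresholds "
    "have been reached."
)

# Assumption: the wording matches the message above exactly.
PATTERN = re.compile(
    r"reported blocks (?P<reported>\d+) needs additional (?P<additional>\d+) "
    r"blocks to reach the threshold (?P<threshold>[\d.]+) "
    r"of total blocks (?P<total>\d+)\."
    r".*?live datanodes (?P<live>\d+)",
    re.DOTALL,
)


def parse_safe_mode_message(text):
    """Return the counters from a safe-mode message, or None if no match."""
    match = PATTERN.search(text)
    if match is None:
        return None
    return {
        "reported": int(match.group("reported")),
        "additional": int(match.group("additional")),
        "threshold": float(match.group("threshold")),
        "total": int(match.group("total")),
        "live_datanodes": int(match.group("live")),
    }


def diagnose(info):
    """Turn the parsed counters into a short, human-readable hint."""
    if info["live_datanodes"] == 0 and info["total"] > 0:
        return ("No live DataNode has reported any blocks; check that the "
                "DataNodes are running before forcing safe mode off.")
    if info["additional"] > 0:
        return "Waiting for %d more blocks to be reported." % info["additional"]
    return "Block threshold appears to be met."


info = parse_safe_mode_message(MESSAGE)
print(info)
print(diagnose(info))
```

In this trace, zero live DataNodes and zero reported blocks out of 22 suggest the NameNode is up but no DataNode has registered yet, so it is worth checking that before leaving safe mode by hand.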

I then used the following command to leave safe mode:

hadoop dfsadmin -safemode leave

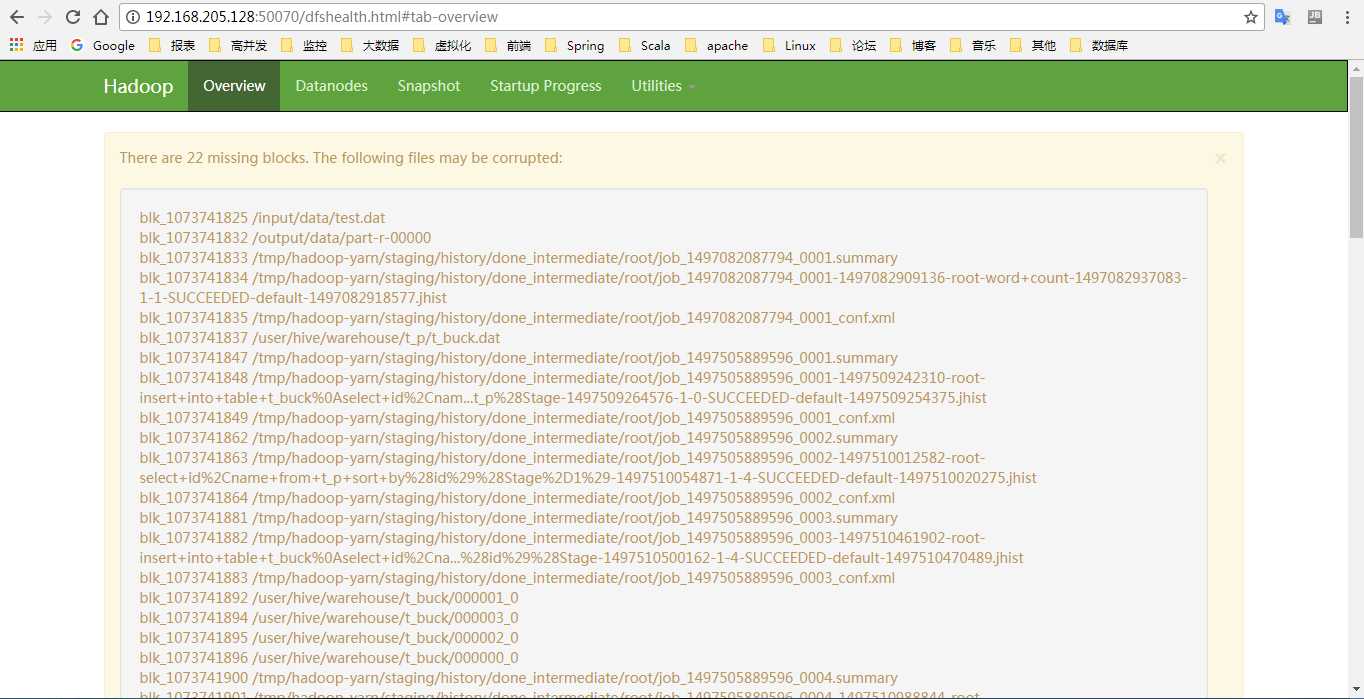

But then the problem shown in the screenshot above appeared, and I was not sure what to do next.
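One way to make the leave step less blind is to query the safe-mode state before and after issuing the command. The sketch below assumes the `hdfs` command is on the `PATH` and that `hdfs dfsadmin -safemode get` prints a line containing `Safe mode is ON` or `Safe mode is OFF`; the helper names `run_hdfs`, `safe_mode_is_on`, and `leave_safe_mode` are hypothetical, and this is not a fix for the follow-up problem in the screenshot.

```python
import subprocess


def run_hdfs(args):
    """Run `hdfs <args>` and return its standard output as text."""
    result = subprocess.run(
        ["hdfs", *args], capture_output=True, text=True, check=True
    )
    return result.stdout


def safe_mode_is_on(run=run_hdfs):
    """Ask the NameNode whether safe mode is currently on."""
    return "Safe mode is ON" in run(["dfsadmin", "-safemode", "get"])


def leave_safe_mode(run=run_hdfs):
    """Leave safe mode only if it is on, then re-check the state.

    Returns "already off", "left", or "still on".
    """
    if not safe_mode_is_on(run):
        return "already off"
    run(["dfsadmin", "-safemode", "leave"])
    return "still on" if safe_mode_is_on(run) else "left"


if __name__ == "__main__":
    # Requires a reachable HDFS cluster and sufficient privileges.
    print(leave_safe_mode())
```

Passing the runner as a parameter keeps the logic testable without a cluster. If the result is `still on`, the cause is more likely on the DataNode side than in the command itself, and `hdfs dfsadmin -report` is one place to look.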
