DenseNet——CNN经典网络模型详解(pytorch实现)

Posted 浩波的笔记

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了DenseNet——CNN经典网络模型详解(pytorch实现)相关的知识,希望对你有一定的参考价值。

一个CV小白,写文章目的为了让和我意义的小白轻松如何,让大佬巩固基础(手动狗头),大家有任何问题可以一起在评论区留言讨论~

一、概述

论文:Densely Connected Convolutional Networks 论文链接:https://arxiv.org/pdf/1608.06993.pdf

作为CVPR2017年的Best Paper, DenseNet脱离了加深网络层数(ResNet)和加宽网络结构(Inception)来提升网络性能的定式思维,从特征的角度考虑,通过特征重用和旁路(Bypass)设置,既大幅度减少了网络的参数量,又在一定程度上缓解了gradient vanishing问题的产生.结合信息流和特征复用的假设,DenseNet当之无愧成为2017年计算机视觉顶会的年度最佳论文.

卷积神经网络在沉睡了近20年后,如今成为了深度学习方向最主要的网络结构之一.从一开始的只有五层结构的LeNet, 到后来拥有19层结构的VGG, 再到首次跨越100层网络的Highway Networks与ResNet, 网络层数的加深成为CNN发展的主要方向之一.

随着CNN网络层数的不断增加,gradient vanishing和model degradation问题出现在了人们面前,BatchNormalization的广泛使用在一定程度上缓解了gradient vanishing的问题,而ResNet和Highway Networks通过构造恒等映射设置旁路,进一步减少了gradient vanishing和model degradation的产生.Fractal Nets通过将不同深度的网络并行化,在获得了深度的同时保证了梯度的传播,随机深度网络通过对网络中一些层进行失活,既证明了ResNet深度的冗余性,又缓解了上述问题的产生. 虽然这些不同的网络框架通过不同的实现加深的网络层数,但是他们都包含了相同的核心思想,既将feature map进行跨网络层的连接.

DenseNet作为另一种拥有较深层数的卷积神经网络,具有如下优点:

-

(1) 相比ResNet拥有更少的参数数量. -

(2) 旁路加强了特征的重用. -

(3) 网络更易于训练,并具有一定的正则效果. -

(4) 缓解了gradient vanishing和model degradation的问题.

何恺明先生在提出ResNet时做出了这样的假设:若某一较深的网络多出另一较浅网络的若干层有能力学习到恒等映射,那么这一较深网络训练得到的模型性能一定不会弱于该浅层网络.

通俗的说就是如果对某一网络中增添一些可以学到恒等映射的层组成新的网路,那么最差的结果也是新网络中的这些层在训练后成为恒等映射而不会影响原网络的性能.同样DenseNet在提出时也做过假设:与其多次学习冗余的特征,特征复用是一种更好的特征提取方式.

二、DenseNet

在深度学习网络中,随着网络深度的加深,梯度消失问题会愈加明显,目前很多论文都针对这个问题提出了解决方案,比如ResNet,Highway Networks,Stochastic depth,FractalNets等,尽管这些算法的网络结构有差别,但是核心都在于:create short paths from early layers to later layers。那么作者是怎么做呢?延续这个思路,那就是在保证网络中层与层之间最大程度的信息传输的前提下,直接将所有层连接起来!

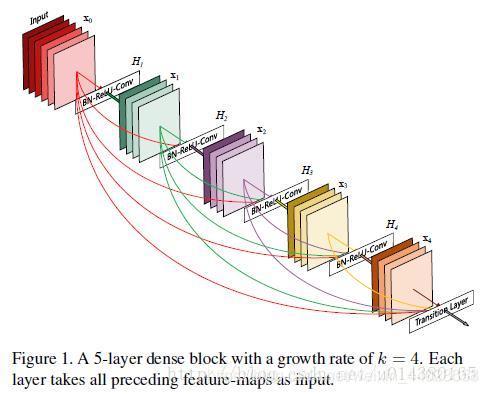

先放一个dense block的结构图。在传统的卷积神经网络中,如果你有L层,那么就会有L个连接,但是在DenseNet中,会有L(L+1)/2个连接。简单讲,就是每一层的输入来自前面所有层的输出。如下图:x0是input,H1的输入是x0(input),H2的输入是x0和x1(x1是H1的输出)……

DenseNet的一个优点是网络更窄,参数更少,很大一部分原因得益于这种dense block的设计,后面有提到在dense block中每个卷积层的输出feature map的数量都很小(小于100),而不是像其他网络一样动不动就几百上千的宽度。同时这种连接方式使得特征和梯度的传递更加有效,网络也就更加容易训练。

DenseNet的一个优点是网络更窄,参数更少,很大一部分原因得益于这种dense block的设计,后面有提到在dense block中每个卷积层的输出feature map的数量都很小(小于100),而不是像其他网络一样动不动就几百上千的宽度。同时这种连接方式使得特征和梯度的传递更加有效,网络也就更加容易训练。

原文的一句话非常喜欢:Each layer has direct access to the gradients from the loss function and the original input signal, leading to an implicit deep supervision.直接解释了为什么这个网络的效果会很好。前面提到过梯度消失问题在网络深度越深的时候越容易出现,原因就是输入信息和梯度信息在很多层之间传递导致的,而现在这种dense connection相当于每一层都直接连接input和loss,因此就可以减轻梯度消失现象,这样更深网络不是问题。另外作者还观察到这种dense connection有正则化的效果,因此对于过拟合有一定的抑制作用,博主认为是因为参数减少了(后面会介绍为什么参数会减少),所以过拟合现象减轻。

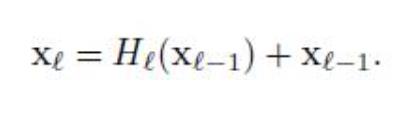

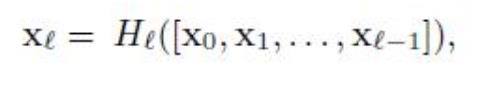

这篇文章的一个优点就是基本上没有公式,不像灌水文章一样堆复杂公式把人看得一愣一愣的。文章中只有两个公式,是用来阐述DenseNet和ResNet的关系,对于从原理上理解这两个网络还是非常重要的。

-

第一个公式是ResNet的。这里的l表示层,xl表示l层的输出,Hl表示一个非线性变换。所以对于ResNet而言,l层的输出是l-1层的输出加上对l-1层输出的非线性变换。

-

第二个公式是DenseNet的。[x0,x1,…,xl-1]表示将0到l-1层的输出feature map做concatenation。concatenation是做通道的合并,就像Inception那样。而前面resnet是做值的相加,通道数是不变的。Hl包括BN,ReLU和3*3的卷积。  所以从这两个公式就能看出DenseNet和ResNet在本质上的区别,太精辟了。

所以从这两个公式就能看出DenseNet和ResNet在本质上的区别,太精辟了。

接着说下论文中一直提到的Identity function:很简单 就是输出等于输入 传统的前馈网络结构可以看成处理网络状态(特征图?)的算法,状态从层之间传递,每个层从之前层读入状态,然后写入之后层,可能会改变状态,也会保持传递不变的信息。ResNet是通过Identity transformations来明确传递这种不变信息。

传统的前馈网络结构可以看成处理网络状态(特征图?)的算法,状态从层之间传递,每个层从之前层读入状态,然后写入之后层,可能会改变状态,也会保持传递不变的信息。ResNet是通过Identity transformations来明确传递这种不变信息。

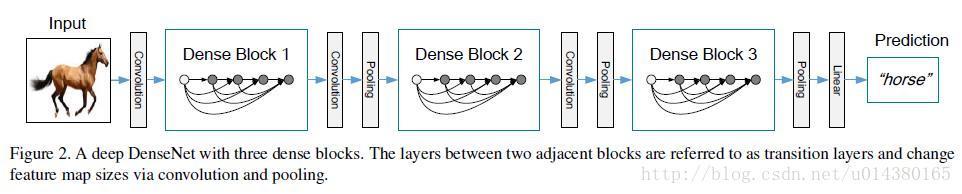

再看下面,前面的Figure 1表示的是dense block,而下面的Figure 2表示的则是一个DenseNet的结构图,在这个结构图中包含了3个dense block。作者将DenseNet分成多个dense block,原因是希望各个dense block内的feature map的size统一,这样在做concatenation就不会有size的问题。

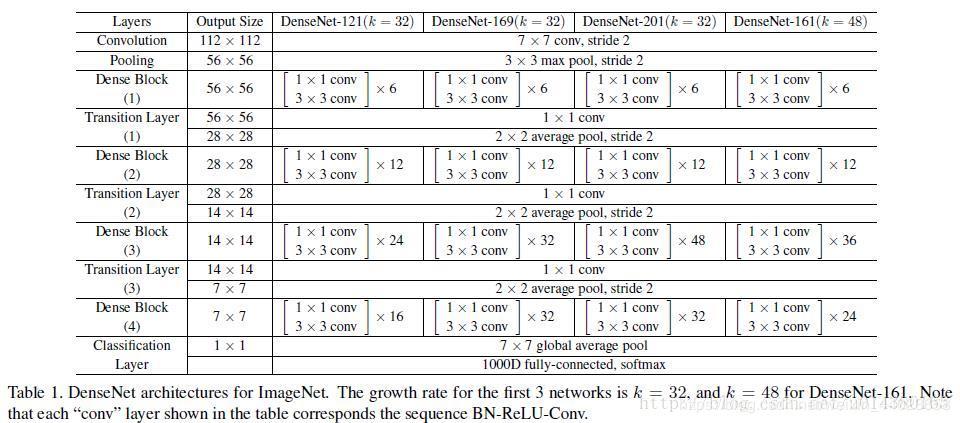

这个Table1(下图)就是整个网络的结构图。

这个Table1(下图)就是整个网络的结构图。

这个表中的k=32,k=48中的k是growth rate,表示每个dense block中每层输出的feature map个数。为了避免网络变得很宽,作者都是采用较小的k,比如32这样,

作者的实验也表明小的k可以有更好的效果。根据dense block的设计,后面几层可以得到前面所有层的输入,因此concat后的输入channel还是比较大的。另外这里每个dense block的33卷积前面都包含了一个11的卷积操作,就是所谓的bottleneck layer,目的是减少输入的feature map数量,既能降维减少计算量,又能融合各个通道的特征,何乐而不为。

另外作者为了进一步压缩参数,在每两个dense block之间又增加了11的卷积操作。因此在后面的实验对比中,如果你看到DenseNet-C这个网络,表示增加了这个Translation layer,该层的11卷积的输出channel默认是输入channel到一半。如果你看到DenseNet-BC这个网络,表示既有bottleneck layer,又有Translation layer。

再详细说下bottleneck和transition layer操作

在每个Dense Block中都包含很多个子结构,以DenseNet-169的Dense Block(3)为例,包含32个11和33的卷积操作,也就是第32个子结构的输入是前面31层的输出结果,每层输出的channel是32(growth rate),那么如果不做bottleneck操作,第32层的33卷积操作的输入就是3132+(上一个Dense Block的输出channel),近1000了。而加上11的卷积,代码中的11卷积的channel是growth rate4,也就是128,然后再作为33卷积的输入。这就大大减少了计算量,这就是bottleneck。 至于transition layer,放在两个Dense Block中间,是因为每个Dense Block结束后的输出channel个数很多,需要用11的卷积核来降维。还是以DenseNet-169的Dense Block(3)为例,虽然第32层的33卷积输出channel只有32个(growth rate),但是紧接着还会像前面几层一样有通道的concat操作,即将第32层的输出和第32层的输入做concat,前面说过第32层的输入是1000左右的channel,所以最后每个Dense Block的输出也是1000多的channel。因此这个transition layer有个参数reduction(范围是0到1),表示将这些输出缩小到原来的多少倍,默认是0.5,这样传给下一个Dense Block的时候channel数量就会减少一半,这就是transition layer的作用。文中还用到dropout操作来随机减少分支,避免过拟合,毕竟这篇文章的连接确实多。

至于transition layer,放在两个Dense Block中间,是因为每个Dense Block结束后的输出channel个数很多,需要用11的卷积核来降维。还是以DenseNet-169的Dense Block(3)为例,虽然第32层的33卷积输出channel只有32个(growth rate),但是紧接着还会像前面几层一样有通道的concat操作,即将第32层的输出和第32层的输入做concat,前面说过第32层的输入是1000左右的channel,所以最后每个Dense Block的输出也是1000多的channel。因此这个transition layer有个参数reduction(范围是0到1),表示将这些输出缩小到原来的多少倍,默认是0.5,这样传给下一个Dense Block的时候channel数量就会减少一半,这就是transition layer的作用。文中还用到dropout操作来随机减少分支,避免过拟合,毕竟这篇文章的连接确实多。

实验结果:

作者在不同数据集上采用的DenseNet网络会有一点不一样,比如在Imagenet数据集上,DenseNet-BC有4个dense block,但是在别的数据集上只用3个dense block。其他更多细节可以看论文3部分的Implementation Details。训练的细节和超参数的设置可以看论文4.2部分,在ImageNet数据集上测试的时候有做224*224的center crop。

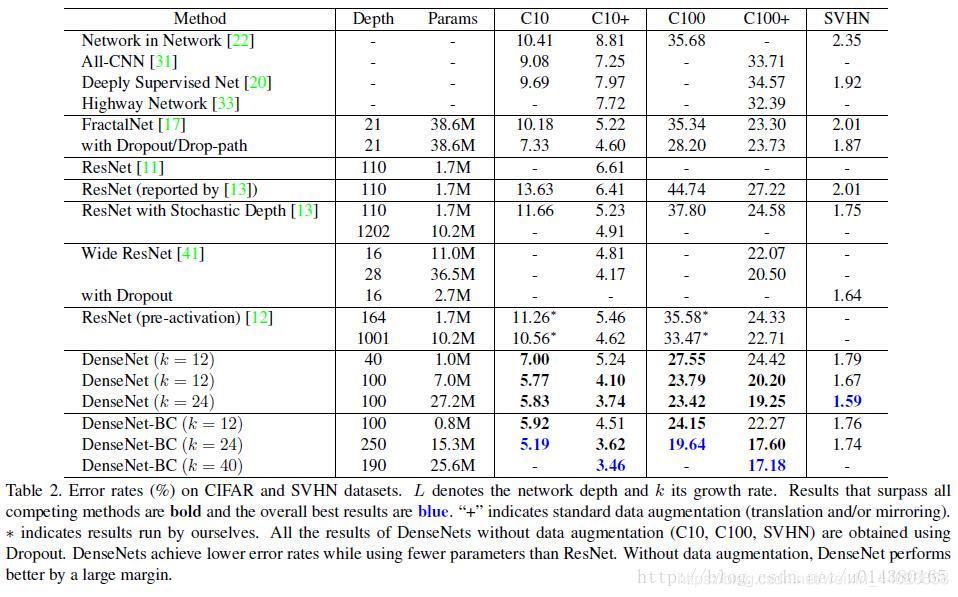

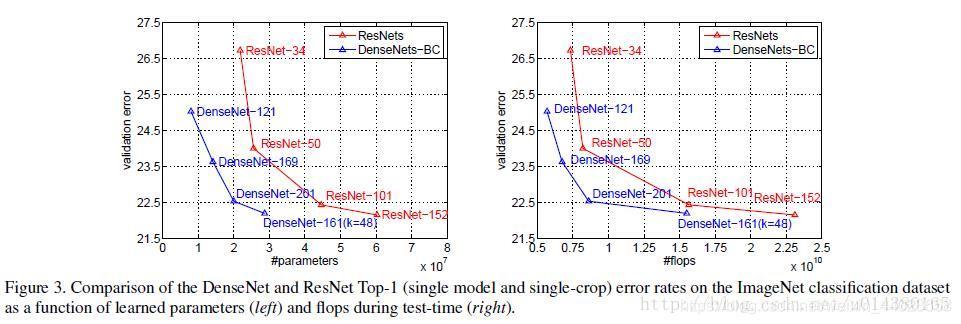

Table2是在三个数据集(C10,C100,SVHN)上和其他算法的对比结果。ResNet[11]就是kaiming He的论文,对比结果一目了然。DenseNet-BC的网络参数和相同深度的DenseNet相比确实减少了很多!参数减少除了可以节省内存,还能减少过拟合这里对于SVHN数据集,DenseNet-BC的结果并没有DenseNet(k=24)的效果好,作者认为原因主要是SVHN这个数据集相对简单,更深的模型容易过拟合。在表格的倒数第二个区域的三个不同深度L和k的DenseNet的对比可以看出随着L和k的增加,模型的效果是更好的。 Figure3是DenseNet-BC和ResNet在Imagenet数据集上的对比,左边那个图是参数复杂度和错误率的对比,你可以在相同错误率下看参数复杂度,也可以在相同参数复杂度下看错误率,提升还是很明显的!右边是flops(可以理解为计算复杂度)和错误率的对比,同样有效果。

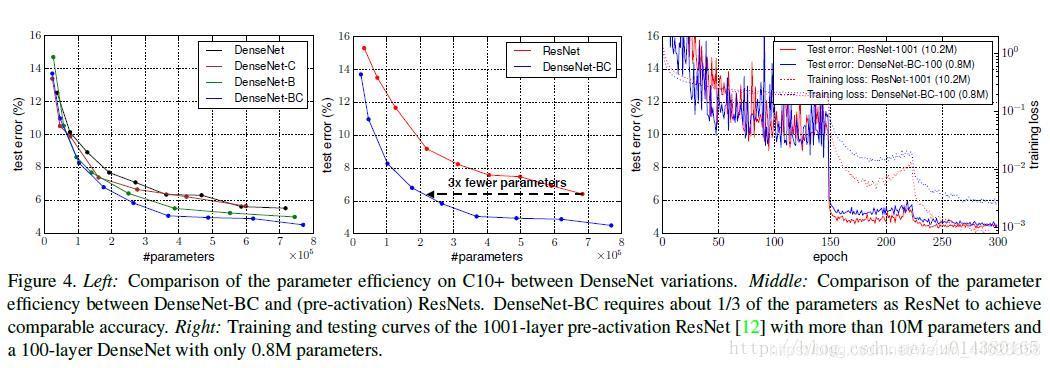

Figure3是DenseNet-BC和ResNet在Imagenet数据集上的对比,左边那个图是参数复杂度和错误率的对比,你可以在相同错误率下看参数复杂度,也可以在相同参数复杂度下看错误率,提升还是很明显的!右边是flops(可以理解为计算复杂度)和错误率的对比,同样有效果。 Figure4也很重要。左边的图表示不同类型DenseNet的参数和error对比。中间的图表示DenseNet-BC和ResNet在参数和error的对比,相同error下,DenseNet-BC的参数复杂度要小很多。右边的图也是表达DenseNet-BC-100只需要很少的参数就能达到和ResNet-1001相同的结果。

Figure4也很重要。左边的图表示不同类型DenseNet的参数和error对比。中间的图表示DenseNet-BC和ResNet在参数和error的对比,相同error下,DenseNet-BC的参数复杂度要小很多。右边的图也是表达DenseNet-BC-100只需要很少的参数就能达到和ResNet-1001相同的结果。

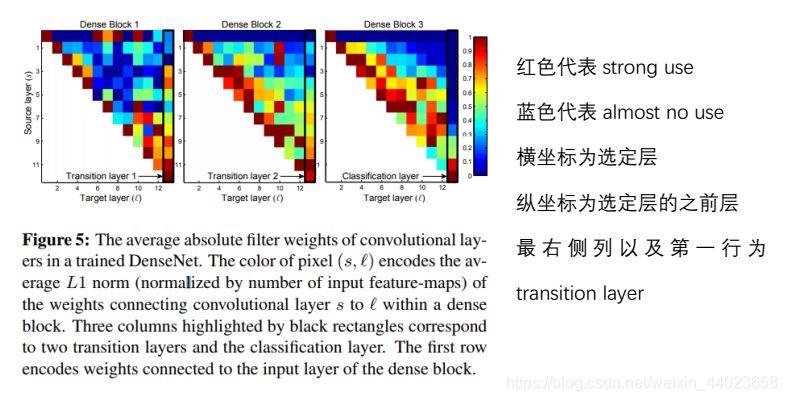

在设计初,DenseNet便被设计成让一层网络可以使用所有之前层网络feature map的网络结构,为了探索feature的复用情况,作者进行了相关实验.作者训练的L=40,K=12的DenseNet,对于任意Denseblock中的所有卷积层,计算之前某层feature map在该层权重的绝对值平均数.这一平均数表明了这一层对于之前某一层feature的利用率,下图为由该平均数绘制出的热力图:

在设计初,DenseNet便被设计成让一层网络可以使用所有之前层网络feature map的网络结构,为了探索feature的复用情况,作者进行了相关实验.作者训练的L=40,K=12的DenseNet,对于任意Denseblock中的所有卷积层,计算之前某层feature map在该层权重的绝对值平均数.这一平均数表明了这一层对于之前某一层feature的利用率,下图为由该平均数绘制出的热力图: 从图中我们可以得出以下结论:

从图中我们可以得出以下结论:

-

a一些较早层提取出的特征仍可能被较深层直接使用 -

b 即使是Transition layer也会使用到之前Denseblock中所有层的特征 -

c 第2-3个Denseblock中的层对之前Transition layer利用率很低,说明transition layer输出大量冗余特征.这也为DenseNet-BC提供了证据支持,既Compression的必要性. -

d 最后的分类层虽然使用了之前Denseblock中的多层信息,但更偏向于使用最后几个feature map的特征,说明在网络的最后几层,某些high-level的特征可能被产生.

另外提一下DenseNet和stochastic depth的关系,在stochastic depth中,residual中的layers在训练过程中会被随机drop掉,其实这就会使得相邻层之间直接连接,这和DenseNet是很像的。

总结:

该文章提出的DenseNet核心思想在于建立了不同层之间的连接关系,充分利用了feature,进一步减轻了梯度消失问题,加深网络不是问题,而且训练效果非常好。另外,利用bottleneck layer,Translation layer以及较小的growth rate使得网络变窄,参数减少,有效抑制了过拟合,同时计算量也减少了。DenseNet优点很多,而且在和ResNet的对比中优势还是非常明显的。

pytorch实现(参考自官网densenet121)

model.py

整体为

1.输入:图片

2.经过feature block(图中的第一个convolution层,后面可以加一个pooling层,这里没有画出来)

3.经过第一个dense block, 该Block中有n个dense layer,灰色圆圈表示,每个dense layer都是dense connection,即每一层的输入都是前面所有层的输出的拼接

4.经过第一个transition block,由convolution和poolling组成

5.经过第二个dense block

6.经过第二个transition block

7.经过第三个dense block

8.经过classification block,由pooling,linear层组成,输出softmax的score

9.经过prediction层,softmax分类

10.输出:分类概率

Dense Layer最开始输出(56 * 56 * 64)或者是上一层dense layer的输出 1.Batch Normalization, 输出(56 * 56 * 64) 2.ReLU ,输出(56 * 56 * 64) 3. -1x1 Convolution, kernel_size=1, channel = bn_size *growth_rate, 则输出为(56 * 56 * 128) -Batch Normalization(56 * 56 * 128) -ReLU(56 * 56 * 128) 4.Convolution, kernel_size=3, channel = growth_rate (56 * 56 * 32) 5.Dropout,可选的,用于防止过拟合(56 * 56 * 32)

class _DenseLayer(nn.Sequential):

def __init__(self, num_input_features, growth_rate, bn_size, drop_rate, memory_efficient=False):#num_input_features特征层数

super(_DenseLayer, self).__init__()#growth_rate=32增长率 bn_size=4

#(56 * 56 * 64)

self.add_module('norm1', nn.BatchNorm2d(num_input_features)),

self.add_module('relu1', nn.ReLU(inplace=True)),

self.add_module('conv1', nn.Conv2d(num_input_features, bn_size *

growth_rate, kernel_size=1, stride=1,

bias=False)),

self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate)),

self.add_module('relu2', nn.ReLU(inplace=True)),

self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,

kernel_size=3, stride=1, padding=1,

bias=False)),

#(56 * 56 * 32)

self.drop_rate = drop_rate

self.memory_efficient = memory_efficient

def forward(self, *prev_features):

bn_function = _bn_function_factory(self.norm1, self.relu1, self.conv1)#(56 * 56 * 64*3)

if self.memory_efficient and any(prev_feature.requires_grad for prev_feature in prev_features):

bottleneck_output = cp.checkpoint(bn_function, *prev_features)

else:

bottleneck_output = bn_function(*prev_features)

# bn1 + relu1 + conv1

new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))

if self.drop_rate > 0:

new_features = F.dropout(new_features, p=self.drop_rate,

training=self.training)

return new_features

def _bn_function_factory(norm, relu, conv):

def bn_function(*inputs):

# type(List[Tensor]) -> Tensor

concated_features = torch.cat(inputs, 1)#按通道合并

# bn1 + relu1 + conv1

bottleneck_output = conv(relu(norm(concated_features)))

return bottleneck_output

return bn_function

Dense Block

Dense Block有L层dense layer组成

layer 0:输入(56 * 56 * 64)->输出(56 * 56 * 32)

layer 1:输入(56 * 56 (32 * 1))->输出(56 * 56 * 32)

layer 2:输入(56 * 56 (32 * 2))->输出(56 * 56 * 32)

…

layer L:输入(56 * 56 * (32 * L))->输出(56 * 56 * 32)

注意,L层dense layer的输出都是不变的,而每层的输入channel数是增加的,因为如上所述,每层的输入是前面所有层的拼接。

class _DenseBlock(nn.Module):

def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate, memory_efficient=False):

super(_DenseBlock, self).__init__()#num_layers层重复次数

for i in range(num_layers):

layer = _DenseLayer(

num_input_features + i * growth_rate, #for一次层数增加32

growth_rate=growth_rate,

bn_size=bn_size,

drop_rate=drop_rate,

memory_efficient=memory_efficient,

)

self.add_module('denselayer%d' % (i + 1), layer) # 追加denselayer层到字典里面

def forward(self, init_features):

features = [init_features] #原来的特征,64

for name, layer in self.named_children(): # 依次遍历添加的6个layer层,

new_features = layer(*features) #计算特征

features.append(new_features) # 追加特征

return torch.cat(features, 1) # 按通道数合并特征 64 + 6*32=256

Transition Block

Transition Block是在两个Dense Block之间的,由一个卷积+一个pooling组成(下面的数据维度以第一个transition block为例):

输入:Dense Block的输出(56 * 56 * 32)

1.Batch Normalization 输出(56 * 56 * 32)

2.ReLU 输出(56 * 56 * 32)

3.1x1 Convolution,kernel_size=1,此处可以根据预先设定的压缩系数(0-1之间)来压缩原来的channel数,以减小参数,输出(56 * 56 *(32 * compression))

4.2x2 Average Pooling 输出(28 * 28 * (32 * compression))

class DenseNet(nn.Module):

r"""Densenet-BC model class, based on

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

growth_rate (int) - how many filters to add each layer (`k` in paper)

block_config (list of 4 ints) - how many layers in each pooling block

num_init_features (int) - the number of filters to learn in the first convolution layer

bn_size (int) - multiplicative factor for number of bottle neck layers

(i.e. bn_size * k features in the bottleneck layer)

drop_rate (float) - dropout rate after each dense layer

num_classes (int) - number of classification classes

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16),

num_init_features=64, bn_size=4, drop_rate=0, num_classes=1000, memory_efficient=False):

super(DenseNet, self).__init__()

# First convolution

self.features = nn.Sequential(OrderedDict([

('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,

padding=3, bias=False)),

('norm0', nn.BatchNorm2d(num_init_features)),

('relu0', nn.ReLU(inplace=True)),

('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),

]))

# Each denseblock

num_features = num_init_features

for i, num_layers in enumerate(block_config):

block = _DenseBlock(

num_layers=num_layers, # 层数重复次数

num_input_features=num_features, # 特征层数64

bn_size=bn_size,

growth_rate=growth_rate,

drop_rate=drop_rate, # dropout值 0

memory_efficient=memory_efficient

)

self.features.add_module('denseblock%d' % (i + 1), block) # 追加denseblock

num_features = num_features + num_layers * growth_rate # 更新num_features=64+6*32 = 256

if i != len(block_config) - 1:#每两个dense block之间增加一个过渡层,i != (4-1),即 i != 3 非最后一个denseblock,后面跟_Transition层

trans = _Transition(num_input_features=num_features,

num_output_features=num_features // 2) # 输出通道数减半

self.features.add_module('transition%d' % (i + 1), trans)

num_features = num_features // 2 # 更新num_features= num_features//2 取整数部分

# Final batch norm

self.features.add_module('norm5', nn.BatchNorm2d(num_features))

# Linear layer

self.classifier = nn.Linear(num_features, num_classes)

# Official init from torch repo.

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.constant_(m.bias, 0)

def forward(self, x):

features = self.features(x) # 特征提取层

out = F.relu(features, inplace=True)

out = F.adaptive_avg_pool2d(out, (1, 1)) # 自适应均值池化,输出大小为(1,1)

out = torch.flatten(out, 1)

out = self.classifier(out) # 分类器

return out

# def _load_state_dict(model, model_url, progress):

# # '.'s are no longer allowed in module names, but previous _DenseLayer

# # has keys 'norm.1', 'relu.1', 'conv.1', 'norm.2', 'relu.2', 'conv.2'.

# # They are also in the checkpoints in model_urls. This pattern is used

# # to find such keys.

# pattern = re.compile(

# r'^(.*denselayerd+.(?:norm|relu|conv)).((?:[12]).(?:weight|bias|running_mean|running_var))$')

#

# state_dict = load_state_dict_from_url(model_url, progress=progress)

# for key in list(state_dict.keys()):

# res = pattern.match(key)

# if res:

# new_key = res.group(1) + res.group(2)

# state_dict[new_key] = state_dict[key]

# del state_dict[key]

# model.load_state_dict(state_dict)

def _densenet(arch, growth_rate, block_config, num_init_features, pretrained, progress,

**kwargs):

model = DenseNet(growth_rate, block_config, num_init_features, **kwargs)

if pretrained:

_load_state_dict(model, model_urls[arch], progress)

return model

def densenet121(pretrained=False, progress=True, **kwargs):

r"""Densenet-121 model from

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

return _densenet('densenet121', 32, (6, 12, 24, 16), 64, pretrained, progress,

**kwargs)

整合以上过程

#model.py

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.checkpoint as cp

from collections import OrderedDict

#from .utils import load_state_dict_from_url

__all__ = ['DenseNet', 'densenet121', 'densenet169', 'densenet201', 'densenet161']

class _DenseLayer(nn.Sequential):

def __init__(self, num_input_features, growth_rate, bn_size, drop_rate, memory_efficient=False):#num_input_features特征层数

super(_DenseLayer, self).__init__()#growth_rate=32增长率 bn_size=4

#(56 * 56 * 64)

self.add_module('norm1', nn.BatchNorm2d(num_input_features)),

self.add_module('relu1', nn.ReLU(inplace=True)),

self.add_module('conv1', nn.Conv2d(num_input_features, bn_size *

growth_rate, kernel_size=1, stride=1,

bias=False)),

self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate)),

self.add_module('relu2', nn.ReLU(inplace=True)),

self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,

kernel_size=3, stride=1, padding=1,

bias=False)),

#(56 * 56 * 32)

self.drop_rate = drop_rate

self.memory_efficient = memory_efficient

def forward(self, *prev_features):

bn_function = _bn_function_factory(self.norm1, self.relu1, self.conv1)#(56 * 56 * 64*3)

if self.memory_efficient and any(prev_feature.requires_grad for prev_feature in prev_features):

bottleneck_output = cp.checkpoint(bn_function, *prev_features)

else:

bottleneck_output = bn_function(*prev_features)

# bn1 + relu1 + conv1

new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))

if self.drop_rate > 0:

new_features = F.dropout(new_features, p=self.drop_rate,

training=self.training)

return new_features

def _bn_function_factory(norm, relu, conv):

def bn_function(*inputs):

# type(List[Tensor]) -> Tensor

concated_features = torch.cat(inputs, 1)#按通道合并

# bn1 + relu1 + conv1

bottleneck_output = conv(relu(norm(concated_features)))

return bottleneck_output

return bn_function

class _DenseBlock(nn.Module):

def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate, memory_efficient=False):

super(_DenseBlock, self).__init__()#num_layers层重复次数

for i in range(num_layers):

layer = _DenseLayer(

num_input_features + i * growth_rate, #for一次层数增加32

growth_rate=growth_rate,

bn_size=bn_size,

drop_rate=drop_rate,

memory_efficient=memory_efficient,

)

self.add_module('denselayer%d' % (i + 1), layer) # 追加denselayer层到字典里面

def forward(self, init_features):

features = [init_features] #原来的特征,64

for name, layer in self.named_children(): # 依次遍历添加的6个layer层,

new_features = layer(*features) #计算特征

features.append(new_features) # 追加特征

return torch.cat(features, 1) # 按通道数合并特征

class _Transition(nn.Sequential):

def __init__(self, num_input_features, num_output_features):

super(_Transition, self).__init__()

self.add_module('norm', nn.BatchNorm2d(num_input_features))

self.add_module('relu', nn.ReLU(inplace=True))

self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,

kernel_size=1, stride=1, bias=False))

self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))

class DenseNet(nn.Module):

r"""Densenet-BC model class, based on

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

growth_rate (int) - how many filters to add each layer (`k` in paper)

block_config (list of 4 ints) - how many layers in each pooling block

num_init_features (int) - the number of filters to learn in the first convolution layer

bn_size (int) - multiplicative factor for number of bottle neck layers

(i.e. bn_size * k features in the bottleneck layer)

drop_rate (float) - dropout rate after each dense layer

num_classes (int) - number of classification classes

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

def __init__(self, growth_rate=32, block_config=(6, 12, 24, 16),

num_init_features=64, bn_size=4, drop_rate=0, num_classes=1000, memory_efficient=False):

super(DenseNet, self).__init__()

# First convolution

self.features = nn.Sequential(OrderedDict([

('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,

padding=3, bias=False)),

('norm0', nn.BatchNorm2d(num_init_features)),

('relu0', nn.ReLU(inplace=True)),

('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),

]))

# Each denseblock

num_features = num_init_features

for i, num_layers in enumerate(block_config):

block = _DenseBlock(

num_layers=num_layers, # 层数重复次数

num_input_features=num_features, # 特征层数64

bn_size=bn_size,

growth_rate=growth_rate,

drop_rate=drop_rate, # dropout值 0

memory_efficient=memory_efficient

)

self.features.add_module('denseblock%d' % (i + 1), block) # 追加denseblock

num_features = num_features + num_layers * growth_rate # 更新num_features=64+6*32 = 256

if i != len(block_config) - 1:#每两个dense block之间增加一个过渡层,i != (4-1),即 i != 3 非最后一个denseblock,后面跟_Transition层

trans = _Transition(num_input_features=num_features,

num_output_features=num_features // 2) # 输出通道数减半

self.features.add_module('transition%d' % (i + 1), trans)

num_features = num_features // 2 # 更新num_features= num_features//2 取整数部分

# Final batch norm

self.features.add_module('norm5', nn.BatchNorm2d(num_features))

# Linear layer

self.classifier = nn.Linear(num_features, num_classes)

# Official init from torch repo.

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.constant_(m.bias, 0)

def forward(self, x):

features = self.features(x) # 特征提取层

out = F.relu(features, inplace=True)

out = F.adaptive_avg_pool2d(out, (1, 1)) # 自适应均值池化,输出大小为(1,1)

out = torch.flatten(out, 1)

out = self.classifier(out) # 分类器

return out

# def _load_state_dict(model, model_url, progress):

# # '.'s are no longer allowed in module names, but previous _DenseLayer

# # has keys 'norm.1', 'relu.1', 'conv.1', 'norm.2', 'relu.2', 'conv.2'.

# # They are also in the checkpoints in model_urls. This pattern is used

# # to find such keys.

# pattern = re.compile(

# r'^(.*denselayerd+.(?:norm|relu|conv)).((?:[12]).(?:weight|bias|running_mean|running_var))$')

#

# state_dict = load_state_dict_from_url(model_url, progress=progress)

# for key in list(state_dict.keys()):

# res = pattern.match(key)

# if res:

# new_key = res.group(1) + res.group(2)

# state_dict[new_key] = state_dict[key]

# del state_dict[key]

# model.load_state_dict(state_dict)

def _densenet(arch, growth_rate, block_config, num_init_features, pretrained, progress,

**kwargs):

model = DenseNet(growth_rate, block_config, num_init_features, **kwargs)

if pretrained:

_load_state_dict(model, model_urls[arch], progress)

return model

def densenet121(pretrained=False, progress=True, **kwargs):

r"""Densenet-121 model from

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

return _densenet('densenet121', 32, (6, 12, 24, 16), 64, pretrained, progress,

**kwargs)

def densenet161(pretrained=False, progress=True, **kwargs):

r"""Densenet-161 model from

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

return _densenet('densenet161', 48, (6, 12, 36, 24), 96, pretrained, progress,

**kwargs)

def densenet169(pretrained=False, progress=True, **kwargs):

r"""Densenet-169 model from

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

return _densenet('densenet169', 32, (6, 12, 32, 32), 64, pretrained, progress,

**kwargs)

def densenet201(pretrained=False, progress=True, **kwargs):

r"""Densenet-201 model from

`"Densely Connected Convolutional Networks" <https://arxiv.org/pdf/1608.06993.pdf>`_

Args:

pretrained (bool): If True, returns a model pre-trained on ImageNet

progress (bool): If True, displays a progress bar of the download to stderr

memory_efficient (bool) - If True, uses checkpointing. Much more memory efficient,

but slower. Default: *False*. See `"paper" <https://arxiv.org/pdf/1707.06990.pdf>`_

"""

return _densenet('densenet201', 32, (6, 12, 48, 32), 64, pretrained, progress,

**kwargs)

注:本次训练集下载在AlexNet博客有详细解说:https://blog.csdn.net/weixin_44023658/article/details/105798326

#train.py

import torch

import torch.nn as nn

from torchvision import transforms, datasets

import json

import matplotlib.pyplot as plt

from model import densenet121

import os

import torch.optim as optim

import torchvision.models.densenet

import torchvision.models as models

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),#来自官网参数

"val": transforms.Compose([transforms.Resize(256),#将最小边长缩放到256

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

data_root = os.path.abspath(os.path.join(os.getcwd(), "../../..")) # get data root path

image_path = data_root + "/data_set/flower_data/" # flower data set path

train_dataset = datasets.ImageFolder(root=image_path + "train",

transform=data_transform["train"])

train_num = len(train_dataset)

# {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx

cla_dict = dict((val, key) for key, val in flower_list.items())

# write dict into json file

json_str = json.dumps(cla_dict, indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

batch_size = 16

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=0)

validate_dataset = datasets.ImageFolder(root=image_path + "/val",

transform=data_transform["val"])

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=batch_size, shuffle=False,

num_workers=0)

#迁移学习

net = models.densenet121(pretrained=False)

model_weight_path="./densenet121-a.pth"

missing_keys, unexpected_keys = net.load_state_dict(torch.load(model_weight_path), strict= False)

inchannel = net.classifier.in_features

net.classifier = nn.Linear(inchannel, 5)

net.to(device)

loss_function = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.0001)

#普通

# net = densenet121(pretrained=False)

# net.to(device)

# inchannel = net.classifier.in_features

# net.classifier = nn.Linear(inchannel, 5)

#

# loss_function = nn.CrossEntropyLoss()

# optimizer = optim.Adam(net.parameters(), lr=0.0001)

best_acc = 0.0

save_path = './densenet121.pth'

for epoch in range(10):

# train

net.train()

running_loss = 0.0

for step, data in enumerate(train_loader, start=0):

images, labels = data

optimizer.zero_grad()

logits = net(images.to(device))

loss = loss_function(logits, labels.to(device))

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

# print train process

rate = (step+1)/len(train_loader)

a = "*" * int(rate * 50)

b = "." * int((1 - rate) * 50)

print("

train loss: {:^3.0f}%[{}->{}]{:.4f}".format(int(rate*100), a, b, loss), end="")

print()

# validate

net.eval()

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad():

for val_data in validate_loader:

val_images, val_labels = val_data

outputs = net(val_images.to(device)) # eval model only have last output layer

# loss = loss_function(outputs, test_labels)

predict_y = torch.max(outputs, dim=1)[1]

acc += (predict_y == val_labels.to(device)).sum().item()

val_accurate = acc / val_num

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path)

print('[epoch %d] train_loss: %.3f test_accuracy: %.3f' %

(epoch + 1, running_loss / step, val_accurate))

print('Finished Training')

#predict.py

import torch

from model import densenet121

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

import json

data_transform = transforms.Compose(

[transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# load image

img = Image.open("./roses.jpg")

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

try:

json_file = open('./class_indices.json', 'r')

class_indict = json.load(json_file)

except Exception as e:

print(e)

exit(-1)

# create model

model = densenet121(num_classes=5)

# load model weights

model_weight_path = "./densenet121.pth"

model.load_state_dict(torch.load(model_weight_path))

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img))

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print(class_indict[str(predict_cla)], predict[predict_cla].numpy())

plt.show()

以上是关于DenseNet——CNN经典网络模型详解(pytorch实现)的主要内容,如果未能解决你的问题,请参考以下文章

AlexNet--CNN经典网络模型详解(pytorch实现)

ResNet——CNN经典网络模型详解(pytorch实现)