Redis Cache High-Availability Cluster

Posted on Jerry's Blog

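The requirements are as follows:

Build a Redis 5.0 cluster with three masters and three replicas

Scale out by one master and one replica

Write data to and read data from Redis Cluster through JedisCluster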

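1. Setting up master-replica replication

Introduction

Redis supports master-replica replication. You can enable it by running the slaveof command (renamed replicaof as of Redis 5) or by setting slaveof (replicaof as of Redis 5) in the configuration file.

How replication works

Before 2.8:

If you configure a slave for a master, the slave sends a SYNC command to the master to request the data, regardless of whether this is its first connection to that master. On receiving SYNC, the master persists its data in the background, using bgsave to generate an up-to-date RDB snapshot. While the snapshot is being written, the master keeps serving client requests and buffers in memory any commands that may modify the dataset. Once the snapshot is complete, the master sends the RDB file to the slave, which saves it to disk as its own RDB file and then loads it into memory. Finally, the master sends the slave the commands it buffered in the meantime.

When the connection between master and slave breaks for some reason, the slave reconnects to the master automatically. If the master receives connection requests from several slaves at the same time, it takes the snapshot only once, rather than once per connection, and sends that single snapshot to all of the concurrently connecting slaves.

From 2.8 onward: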

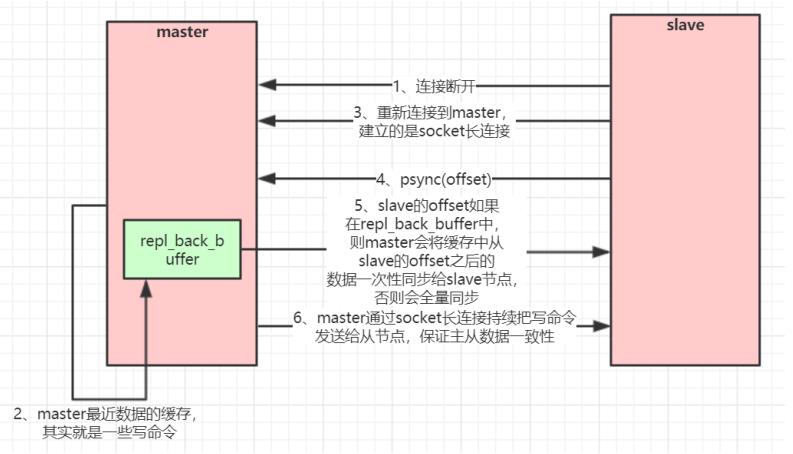

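Starting with version 2.8, a slave that reconnects after a network interruption can resynchronize with only the data it missed. The master keeps a replication backlog in memory, a queue that buffers the most recent part of the replication stream. The master and all of its slaves track the replication offset and the master's process ID (its run ID). After a disconnection, the slave asks the master to continue the unfinished replication from the offset it recorded. If the master's run ID has changed, or the slave's offset is too old and no longer present in the master's backlog, a full resynchronization takes place instead. From 2.8 on, Redis uses the PSYNC command, which supports partial resynchronization, to sync data from the master.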
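The choice between partial and full resynchronization described above can be sketched in a few lines of Java. This is only an illustration of the rule in the text, not Redis's actual implementation; the class name `ResyncDecision`, the `Mode` enum and all parameter names are hypothetical.

```java
/** Sketch of the master-side choice between partial and full resynchronization. */
final class ResyncDecision {
    enum Mode { PARTIAL, FULL }

    /**
     * All names here are illustrative assumptions, not Redis internals.
     *
     * @param masterRunId   run ID of the master that receives the request
     * @param replicaRunId  run ID the slave remembered before the disconnect
     * @param replicaOffset offset from which the slave wants to continue
     * @param backlogStart  oldest offset still held in the master's backlog
     * @param backlogEnd    offset just past the newest data in the backlog
     */
    static Mode decide(String masterRunId, String replicaRunId,
                       long replicaOffset, long backlogStart, long backlogEnd) {
        // A different run ID means the slave was following another master process.
        if (!masterRunId.equals(replicaRunId)) {
            return Mode.FULL;
        }
        // The requested offset must still be covered by the backlog.
        if (replicaOffset < backlogStart || replicaOffset > backlogEnd) {
            return Mode.FULL;
        }
        return Mode.PARTIAL;
    }
}
```

For example, a slave that asks for offset 150 while the backlog covers 100 to 200 gets a partial resync, whereas one asking for offset 50 falls back to a full copy.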

Full resynchronization flow:

Incremental replication flow:

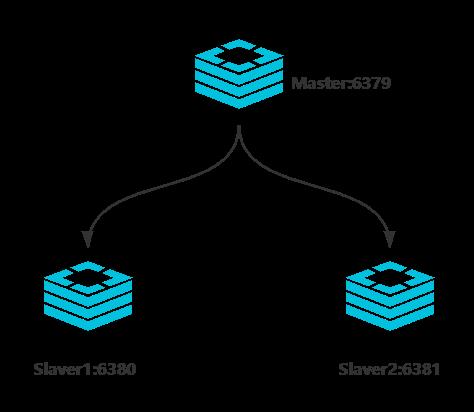

Master-replica architecture diagram

Install gcc

yum install gcc

Download

Download URL: http://redis.io/download

Extract

tar xzf redis-5.0.4.tar.gz

Install the master

mkdir -p /usr/local/redis-ms/redis-master
cd /usr/local/redis-5.0.4/src/
make install PREFIX=/usr/local/redis-ms/redis-master
cp /usr/local/redis-5.0.4/redis.conf /usr/local/redis-ms/redis-master/bin

Edit redis.conf

cd /usr/local/redis-ms/redis-master/bin
vim redis.conf
# Change `daemonize` to `yes`
daemonize yes
# Comment out bind 127.0.0.1
# bind 127.0.0.1
# Disable protected mode
protected-mode no
# Enable authentication
requirepass qwe1!2@3

Install slaver1

mkdir -p /usr/local/redis-ms/redis-slaver1
cp -r /usr/local/redis-ms/redis-master/* /usr/local/redis-ms/redis-slaver1
# Edit the configuration file
vim /usr/local/redis-ms/redis-slaver1/bin/redis.conf
port 6380
replicaof 127.0.0.1 6379
# Enable authentication
requirepass qwe1!2@3
# Password used to authenticate against the master
masterauth qwe1!2@3

Install slaver2

mkdir -p /usr/local/redis-ms/redis-slaver2
cp -r /usr/local/redis-ms/redis-master/* /usr/local/redis-ms/redis-slaver2
# Edit the configuration file
vim /usr/local/redis-ms/redis-slaver2/bin/redis.conf
port 6381
replicaof 127.0.0.1 6379
# Enable authentication
requirepass qwe1!2@3
# Password used to authenticate against the master
masterauth qwe1!2@3

Startup script

Write the startup script

vi master.sh
# Start the master
cd /usr/local/redis-ms/redis-master/bin
./redis-server redis.conf
# Start slaver1
cd /usr/local/redis-ms/redis-slaver1/bin
./redis-server redis.conf
# Start slaver2
cd /usr/local/redis-ms/redis-slaver2/bin
./redis-server redis.conf

Grant execute permission

chmod u+x master.sh

Run master.sh to start all three instances in one step

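2. Setting up Sentinel mode

Introduction

Sentinel is Redis's high-availability solution: a Sentinel cluster made up of one or more Sentinel instances can monitor one or more masters together with their replicas. When a master goes offline, Sentinel promotes one of that master's replicas to master so that service continues, which keeps Redis highly available.

Sentinel architecture diagram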

Installation

mkdir -p /usr/local/redis-ms/redis-sentinel
cp -r /usr/local/redis-ms/redis-master/* /usr/local/redis-ms/redis-sentinel

sentinel-26379.conf

# Copy the sentinel.conf file
cd /usr/local/redis-5.0.4/
cp sentinel.conf /usr/local/redis-ms/redis-sentinel/bin/sentinel-26379.conf
cd /usr/local/redis-ms/redis-sentinel/bin
vim sentinel-26379.conf

# Change the relevant settings
port 26379
daemonize yes
sentinel monitor mymaster 127.0.0.1 6379 2
sentinel auth-pass mymaster qwe1!2@3

sentinel-26380.conf

cp sentinel-26379.conf sentinel-26380.conf
vim sentinel-26380.conf
# Change the port number
port 26380

sentinel-26381.conf

cp sentinel-26379.conf sentinel-26381.conf
vim sentinel-26381.conf
# Change the port number
port 26381

Write the startup script start.sh

cd /usr/local/redis-ms/redis-sentinel/bin
./redis-sentinel sentinel-26379.conf
./redis-sentinel sentinel-26380.conf
./redis-sentinel sentinel-26381.conf

Grant execute permission

chmod u+x start.sh

Run start.sh to start all three sentinels in one step

Check the running processes

bin]# ps -ef |grep redis
root 13989 1 0 12:55 ? 00:00:00 ./redis-server *:6379
root 13994 1 0 12:55 ? 00:00:00 ./redis-server *:6380
root 13999 1 0 12:55 ? 00:00:00 ./redis-server *:6381
root 14009 1 0 12:55 ? 00:00:00 ./redis-sentinel *:26379 [sentinel]
root 14014 1 0 12:55 ? 00:00:00 ./redis-sentinel *:26380 [sentinel]
root 14016 1 0 12:55 ? 00:00:00 ./redis-sentinel *:26381 [sentinel]
root 14024 13502 0 12:55 pts/0 00:00:00 grep --color=auto redis

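3. Setting up cluster mode

Introduction

A Redis cluster is a distributed group of servers made up of several master-replica groups. It provides replication, high availability and sharding. A Redis cluster can remove nodes and fail over without Sentinel. Every node has to be configured in cluster mode. This mode has no central node and scales horizontally; the official specification targets linear scalability up to 1000 nodes. A cluster generally offers better performance and availability than the Sentinel mode described above, and its configuration is simple.

How the cluster works

Redis Cluster divides all data into 16384 slots, and each node is responsible for a portion of them. Slot information is stored on every node.

When a Redis Cluster client connects to the cluster, it also obtains a copy of the cluster's slot configuration and caches it locally. When the client needs to look up a key, it can therefore go straight to the target node. Because the client's slot information may become inconsistent with the server's, a correction mechanism is also needed to validate and adjust it.

Slot lookup algorithm

By default, the cluster hashes the key with the CRC16 algorithm to obtain an integer, then takes that integer modulo 16384 to get the slot.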

HASH_SLOT = CRC16(key) mod 16384
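As an illustration of the formula, here is a minimal Java sketch that computes a slot from a key. It assumes the CRC16 variant with polynomial 0x1021 and initial value 0 (CRC-16/XMODEM), which is the one the Redis Cluster specification describes, and it hashes the whole key without any special handling of key contents. The class and method names (`SlotCalculator`, `crc16`, `slotFor`) are made up for this example.

```java
import java.nio.charset.StandardCharsets;

/** Sketch: HASH_SLOT = CRC16(key) mod 16384, hashing the whole key. */
final class SlotCalculator {
    static final int SLOT_COUNT = 16384;

    /** Bitwise CRC16 with polynomial 0x1021 and initial value 0 (assumed variant). */
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;           // feed the next byte into the high bits
            for (int i = 0; i < 8; i++) {
                if ((crc & 0x8000) != 0) {
                    crc = (crc << 1) ^ 0x1021; // shift and apply the polynomial
                } else {
                    crc = crc << 1;
                }
                crc &= 0xFFFF;                 // keep the register at 16 bits
            }
        }
        return crc;
    }

    /** Maps a key to one of the 16384 slots. */
    static int slotFor(String key) {
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % SLOT_COUNT;
    }

    public static void main(String[] args) {
        // Prints the slot for each key passed on the command line.
        for (String key : args) {
            System.out.println(key + " -> slot " + slotFor(key));
        }
    }
}
```

Running it with a few keys gives a quick way to predict which master will own a key before sending the command.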

Redirection

127.0.0.1:7001> set name abc
-> Redirected to slot [5798] located at 127.0.0.1:7007
OK
127.0.0.1:7007> set address guizhou
-> Redirected to slot [3680] located at 127.0.0.1:7001
OK
127.0.0.1:7001>
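Behind the "Redirected to slot" lines that redis-cli prints, the node replies with an error of the form `MOVED <slot> <host>:<port>`, and the client retries on the node named there. A client that caches slot information can use the same reply to correct its local table. The sketch below shows that idea only; `SlotCache` and `applyMoved` are hypothetical names, and a real client such as JedisCluster does considerably more (connection handling, refreshing the whole slot map, and so on).

```java
import java.util.HashMap;
import java.util.Map;

/** Sketch of a client-side slot table that is corrected by MOVED replies. */
final class SlotCache {
    // slot number -> "host:port" of the node believed to own it
    private final Map<Integer, String> slotToNode = new HashMap<>();

    /** Returns the cached node for a slot, or null if nothing is cached yet. */
    String nodeFor(int slot) {
        return slotToNode.get(slot);
    }

    /**
     * Applies a redirection of the form "MOVED <slot> <host>:<port>" and
     * returns the node on which the command should be retried.
     */
    String applyMoved(String error) {
        String[] parts = error.trim().split("\\s+");
        if (parts.length != 3 || !parts[0].equals("MOVED")) {
            throw new IllegalArgumentException("not a MOVED reply: " + error);
        }
        int slot = Integer.parseInt(parts[1]);
        slotToNode.put(slot, parts[2]); // remember the new owner for later requests
        return parts[2];
    }
}
```

With the session above, feeding `MOVED 5798 127.0.0.1:7007` into `applyMoved` would make later lookups for slot 5798 go straight to port 7007.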

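Communication between Redis cluster nodes

Redis Cluster nodes communicate with each other using the gossip protocol.

There are two ways to maintain cluster metadata:

Centralized: the advantage is that metadata updates and reads are very timely. As soon as the metadata changes it is written to the central store, and other nodes see the change the next time they read it. The drawback is that all metadata update traffic is concentrated in one place, which can put pressure on the metadata store.

Gossip: the gossip protocol includes several message types, among them ping, pong, meet and fail.

The advantage of gossip is that metadata updates are spread out rather than concentrated in one place. Update requests reach all nodes gradually, with some delay, which reduces the load. The drawback is that this delay can make some cluster operations lag behind.

ping: every node frequently sends ping to other nodes. The message contains the sender's state and the cluster metadata it maintains, so nodes exchange metadata through ping.

pong: sent in reply to ping and meet. It contains the sender's state and other information, and can also be used to broadcast and update information.

fail: once a node decides that another node has failed, it sends fail to the other nodes to tell them that the node in question is down.

meet: a node sends meet to a newly added node so that it joins the cluster, after which the new node starts communicating with the other nodes. It is not necessary to send every CLUSTER MEET command that a full mesh would need. It is enough to send CLUSTER MEET messages so that each node can reach every other node through a chain of known nodes. Because gossip information is exchanged in the heartbeat packets, the missing links between nodes are then created.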

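How cluster election works

When a slave finds that its master has entered the FAIL state, it attempts a failover in order to become the new master. Because the failed master may have several slaves, those slaves compete to become the master. The process is as follows:

The slave finds that its master has entered the FAIL state

It increments the cluster currentEpoch it has recorded and broadcasts a FAILOVER_AUTH_REQUEST message

The other nodes receive the message, but only masters respond. Each master checks that the requester is legitimate and replies with FAILOVER_AUTH_ACK, sending at most one ack per epoch

The slave attempting the failover collects the FAILOVER_AUTH_ACK replies from the masters

Once the slave has received acks from more than half of the masters, it becomes the new master (this explains why a cluster needs at least three masters: with only two, if one fails, the single remaining master cannot complete an election)

It broadcasts a pong message to notify the other cluster nodes

A slave does not start an election the moment its master enters the FAIL state. It waits for a certain delay, which gives the FAIL state time to propagate through the cluster. If the slave tried immediately, the other masters might not yet be aware of the FAIL state and might refuse to vote.

Delay formula: DELAY = 500ms + random(0 ~ 500ms) + SLAVE_RANK * 1000ms

SLAVE_RANK is the rank of this slave by the total amount of data it has replicated from the master. The lower the rank, the more up to date the replicated data. This way the slave holding the newest data starts its election first (in theory).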
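The majority rule and the delay formula above can be worked through with a short Java sketch. It is a simplified model for illustration only, and `ElectionSketch` and its methods are hypothetical names rather than anything taken from the Redis source.

```java
import java.util.Random;

/** Sketch of the vote threshold and election delay described in the text. */
final class ElectionSketch {

    /** Votes a slave needs: more than half of all masters in the cluster. */
    static int requiredVotes(int totalMasters) {
        return totalMasters / 2 + 1;
    }

    /** True if the masters that are still alive can supply enough votes. */
    static boolean canElect(int totalMasters, int failedMasters) {
        return totalMasters - failedMasters >= requiredVotes(totalMasters);
    }

    /** DELAY = 500ms + random(0 ~ 500ms) + SLAVE_RANK * 1000ms, in milliseconds. */
    static long delayMillis(int slaveRank, Random random) {
        // nextInt(501) yields 0..500 inclusive
        return 500L + random.nextInt(501) + slaveRank * 1000L;
    }

    public static void main(String[] args) {
        System.out.println("3 masters, 1 failed: " + canElect(3, 1));
        System.out.println("2 masters, 1 failed: " + canElect(2, 1));
        System.out.println("delay for rank 0 (ms): " + delayMillis(0, new Random()));
    }
}
```

With three masters, two votes are required and two masters survive a single failure, so the election can succeed. With two masters, two votes are still required but only one master is left. A slave with rank 0 waits between 500 and 1000 ms, while one with rank 2 waits between 2500 and 3000 ms, so the slave with the freshest data usually asks first.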

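Cluster architecture diagram

Installation

Redis Cluster needs at least three masters. This setup uses three masters and three replicas, on ports 7001 to 7006.

mkdir -p /usr/local/redis-cluster/7001
cd /usr/local/redis-5.0.4/src
make install PREFIX=/usr/local/redis-cluster/7001
cp /usr/local/redis-5.0.4/redis.conf /usr/local/redis-cluster/7001/bin
vim /usr/local/redis-cluster/7001/bin/redis.conf

redis.conf

# Listen on the port used for this instance
port 7001
# Change `daemonize` to `yes`
daemonize yes
# Comment out bind 127.0.0.1
# bind 127.0.0.1
# Disable protected mode
protected-mode no
# Enable authentication
requirepass qwet123321
# Password replicas use to authenticate against their master (needed because requirepass is set)
masterauth qwet123321
# Enable cluster mode
cluster-enabled yes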

Copy 7001 to create instances 7002 to 7006, changing the port number in each

mkdir -p /usr/local/redis-cluster/7002
mkdir -p /usr/local/redis-cluster/7003
mkdir -p /usr/local/redis-cluster/7004
mkdir -p /usr/local/redis-cluster/7005
mkdir -p /usr/local/redis-cluster/7006
cp -r /usr/local/redis-cluster/7001/* /usr/local/redis-cluster/7002/
cp -r /usr/local/redis-cluster/7001/* /usr/local/redis-cluster/7003/
cp -r /usr/local/redis-cluster/7001/* /usr/local/redis-cluster/7004/
cp -r /usr/local/redis-cluster/7001/* /usr/local/redis-cluster/7005/
cp -r /usr/local/redis-cluster/7001/* /usr/local/redis-cluster/7006/

vim /usr/local/redis-cluster/7002/bin/redis.conf
port 7002

vim /usr/local/redis-cluster/7003/bin/redis.conf
port 7003

vim /usr/local/redis-cluster/7004/bin/redis.conf
port 7004

vim /usr/local/redis-cluster/7005/bin/redis.conf
port 7005

vim /usr/local/redis-cluster/7006/bin/redis.conf
port 7006

Startup script

Write the startup script cluster.sh

cd /usr/local/redis-cluster/7001/bin
./redis-server redis.conf
cd /usr/local/redis-cluster/7002/bin
./redis-server redis.conf
cd /usr/local/redis-cluster/7003/bin
./redis-server redis.conf
cd /usr/local/redis-cluster/7004/bin
./redis-server redis.conf
cd /usr/local/redis-cluster/7005/bin
./redis-server redis.conf
cd /usr/local/redis-cluster/7006/bin
./redis-server redis.conf

Grant execute permission

chmod u+x cluster.sh

Run the startup script to start all cluster instances in one step

./cluster.sh

Create the cluster

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli -a qwet123321 --cluster create 127.0.0.1:7001 127.0.0.1:7002 127.0.0.1:7003 127.0.0.1:7004 127.0.0.1:7005 127.0.0.1:7006 --cluster-replicas 1
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 127.0.0.1:7005 to 127.0.0.1:7001
Adding replica 127.0.0.1:7006 to 127.0.0.1:7002
Adding replica 127.0.0.1:7004 to 127.0.0.1:7003
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001
   slots:[0-5460] (5461 slots) master
M: 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002
   slots:[5461-10922] (5462 slots) master
M: b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003
   slots:[10923-16383] (5461 slots) master
S: 8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004
   replicates f426d1403a4594472e6abfe474657fbda60b8089
S: ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005
   replicates 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e
S: 4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006
   replicates b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
.....
>>> Performing Cluster Check (using node 127.0.0.1:7001)
M: f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: 8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004
   slots: (0 slots) slave
   replicates f426d1403a4594472e6abfe474657fbda60b8089
M: b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006
   slots: (0 slots) slave
   replicates b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c
S: ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005
   slots: (0 slots) slave
   replicates 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
[root@iZ2zed97t0sgaryr3csas3Z bin]#
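The three ranges in the output above (0-5460, 5461-10922, 10923-16383) are what you get when 16384 slots are divided as evenly as possible among three masters. The Java sketch below is one way to reproduce those boundaries. It is a guess at the arithmetic that happens to match this output, not a copy of what redis-cli does internally, and `SlotSplit` is a hypothetical name.

```java
/** Sketch: split 16384 slots into contiguous, nearly equal ranges. */
final class SlotSplit {
    static final int SLOT_COUNT = 16384;

    /** Returns one {first, last} pair (both inclusive) per master. */
    static int[][] split(int masters) {
        double perNode = (double) SLOT_COUNT / masters; // e.g. 5461.33 for 3 masters
        int[][] ranges = new int[masters][2];
        int first = 0;
        double cursor = 0.0;
        for (int i = 0; i < masters; i++) {
            int last = (int) Math.round(cursor + perNode - 1);
            // The final master always takes whatever remains up to slot 16383.
            if (i == masters - 1 || last > SLOT_COUNT - 1) {
                last = SLOT_COUNT - 1;
            }
            ranges[i][0] = first;
            ranges[i][1] = last;
            first = last + 1;
            cursor += perNode;
        }
        return ranges;
    }

    public static void main(String[] args) {
        for (int[] r : split(3)) {
            System.out.println(r[0] + " - " + r[1]);
        }
    }
}
```

Because 16384 is not divisible by 3, one master ends up with one slot more than the others, which matches the 5461, 5462 and 5461 slot counts reported by the cluster check.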

Connect to Redis Cluster

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli -p 7001 -c
127.0.0.1:7001> auth qwet123321
OK
127.0.0.1:7001> cluster info
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:6
cluster_my_epoch:1
cluster_stats_messages_ping_sent:164
cluster_stats_messages_pong_sent:168
cluster_stats_messages_sent:332
cluster_stats_messages_ping_received:163
cluster_stats_messages_pong_received:164
cluster_stats_messages_meet_received:5
cluster_stats_messages_received:332
127.0.0.1:7001>

View the cluster nodes

127.0.0.1:7001> cluster nodes
4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002@17002 master - 0 1614064343289 2 connected 5461-10922
8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004@17004 slave f426d1403a4594472e6abfe474657fbda60b8089 0 1614064341000 4 connected
b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003@17003 master - 0 1614064344000 3 connected 10923-16383
f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001@17001 myself,master - 0 1614064343000 1 connected 0-5460
4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006@17006 slave b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 0 1614064344290 6 connected
ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005@17005 slave 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 0 1614064345291 5 connected

Scaling out

Add a master node

mkdir -p /usr/local/redis-cluster/7007
cd /usr/local/redis-5.0.4/src
make install PREFIX=/usr/local/redis-cluster/7007
cp -r /usr/local/redis-cluster/7001/bin/redis.conf /usr/local/redis-cluster/7007/bin
cd /usr/local/redis-cluster/7007/bin

Edit the redis.conf configuration file

vim redis.conf
port 7007

Start the 7007 instance

./redis-server redis.conf

Add the node to the cluster

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli --cluster add-node 127.0.0.1:7007 127.0.0.1:7001 -a qwet123321
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
>>> Adding node 127.0.0.1:7007 to cluster 127.0.0.1:7001
>>> Performing Cluster Check (using node 127.0.0.1:7001)
M: f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: 8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004
   slots: (0 slots) slave
   replicates f426d1403a4594472e6abfe474657fbda60b8089
M: b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006
   slots: (0 slots) slave
   replicates b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c
S: ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005
   slots: (0 slots) slave
   replicates 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 127.0.0.1:7007 to make it join the cluster.
[OK] New node added correctly.
[root@iZ2zed97t0sgaryr3csas3Z bin]#

View the nodes

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli -h 127.0.0.1 -p 7001 -c -a qwet123321

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:7001> cluster nodes

4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002@17002 master - 0 1614066382089 2 connected 5461-10922

8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004@17004 slave f426d1403a4594472e6abfe474657fbda60b8089 0 1614066383092 4 connected

b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003@17003 master - 0 1614066381088 3 connected 10923-16383

f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001@17001 myself,master - 0 1614066382000 1 connected 0-5460

0a4d581c67211573c1d634b53f0d55305e98fd33 127.0.0.1:7007@17007 master - 0 1614066382000 0 connected

4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006@17006 slave b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 0 1614066383000 6 connected

ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005@17005 slave 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 0 1614066381000 5 connected

127.0.0.1:7001>

Assign slots to the 7007 instance

./redis-cli --cluster reshard 127.0.0.1:7007 -a qwet123321

Enter the number of slots to move

How many slots do you want to move (from 1 to 16384)? 3000

Enter the ID of the node that will receive the slots

0a4d581c67211573c1d634b53f0d55305e98fd33

Enter the source node IDs

all

Type yes to start moving the slots to the target node

yes

Check the result

127.0.0.1:7001> cluster nodes
4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002@17002 master - 0 1614066813000 2 connected 6462-10922
8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004@17004 slave f426d1403a4594472e6abfe474657fbda60b8089 0 1614066811892 4 connected
b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003@17003 master - 0 1614066813896 3 connected 11922-16383
f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001@17001 myself,master - 0 1614066810000 1 connected 999-5460
0a4d581c67211573c1d634b53f0d55305e98fd33 127.0.0.1:7007@17007 master - 0 1614066812000 7 connected 0-998 5461-6461 10923-11921
4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006@17006 slave b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 0 1614066812000 6 connected
ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005@17005 slave 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 0 1614066812893 5 connected
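To check a reshard, it can help to add up the slot ranges listed at the end of each master's line in the `cluster nodes` output. The small Java helper below does that for a space-separated list of ranges. It is only a convenience sketch, and `SlotRanges.count` is a hypothetical name.

```java
/** Sketch: count the slots in a range list taken from `cluster nodes`. */
final class SlotRanges {

    /** Accepts text such as "0-998 5461-6461" or a single slot such as "42". */
    static int count(String ranges) {
        int total = 0;
        for (String token : ranges.trim().split("\\s+")) {
            if (token.isEmpty()) {
                continue; // an empty input yields no slots
            }
            String[] bounds = token.split("-");
            int first = Integer.parseInt(bounds[0]);
            int last = bounds.length > 1 ? Integer.parseInt(bounds[1]) : first;
            total += last - first + 1; // both ends are inclusive
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(count("0-998 5461-6461 10923-11921"));
    }
}
```

Applied to the ranges above, 7007 holds 2999 slots (slightly fewer than the 3000 requested), and the four masters together still cover all 16384 slots.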

Add a replica node

mkdir -p /usr/local/redis-cluster/7008
cd /usr/local/redis-5.0.4/src
make install PREFIX=/usr/local/redis-cluster/7008
cp -r /usr/local/redis-cluster/7007/bin/redis.conf /usr/local/redis-cluster/7008/bin
cd /usr/local/redis-cluster/7008/bin

Edit the redis.conf configuration file

vim redis.conf
port 7008

Start the 7008 instance

./redis-server redis.conf

Add 7008 as a replica of 7007

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli --cluster add-node 127.0.0.1:7008 127.0.0.1:7007 --cluster-slave --cluster-master-id 0a4d581c67211573c1d634b53f0d55305e98fd33 -a qwet123321
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
>>> Adding node 127.0.0.1:7008 to cluster 127.0.0.1:7007
>>> Performing Cluster Check (using node 127.0.0.1:7007)
M: 0a4d581c67211573c1d634b53f0d55305e98fd33 127.0.0.1:7007
   slots:[0-998],[5461-6461],[10923-11921] (2999 slots) master
S: 8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004
   slots: (0 slots) slave
   replicates f426d1403a4594472e6abfe474657fbda60b8089
M: f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001
   slots:[999-5460] (4462 slots) master
   1 additional replica(s)
M: b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003
   slots:[11922-16383] (4462 slots) master
   1 additional replica(s)
M: 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002
   slots:[6462-10922] (4461 slots) master
   1 additional replica(s)
S: 4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006
   slots: (0 slots) slave
   replicates b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c
S: ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005
   slots: (0 slots) slave
   replicates 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 127.0.0.1:7008 to make it join the cluster.
Waiting for the cluster to join
>>> Configure node as replica of 127.0.0.1:7007.
[OK] New node added correctly.
[root@iZ2zed97t0sgaryr3csas3Z bin]#

View the node information

[root@iZ2zed97t0sgaryr3csas3Z bin]# ./redis-cli -h 127.0.0.1 -p 7001 -c -a qwet123321
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
127.0.0.1:7001> cluster nodes
4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 127.0.0.1:7002@17002 master - 0 1614067735599 2 connected 6462-10922
8438294b460bc3a4e7aa89780ca485333f83b3df 127.0.0.1:7004@17004 slave f426d1403a4594472e6abfe474657fbda60b8089 0 1614067732000 4 connected
d0d9e80d4fe561b48473dbbe52b4dd8275262c6b 127.0.0.1:7008@17008 slave 0a4d581c67211573c1d634b53f0d55305e98fd33 0 1614067735000 7 connected
b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 127.0.0.1:7003@17003 master - 0 1614067733595 3 connected 11922-16383
f426d1403a4594472e6abfe474657fbda60b8089 127.0.0.1:7001@17001 myself,master - 0 1614067734000 1 connected 999-5460
0a4d581c67211573c1d634b53f0d55305e98fd33 127.0.0.1:7007@17007 master - 0 1614067734597 7 connected 0-998 5461-6461 10923-11921
4c40374f3aed1478cefdb8b47a22731129b79b46 127.0.0.1:7006@17006 slave b0b8adcb4a088837b27b2cda1ad6c87c06fb8a8c 0 1614067735599 6 connected
ee9b3d76a08d1529dc28a4285829bfa305bca0fe 127.0.0.1:7005@17005 slave 4acc09aae40e9f78fbe573f2881bf8d9d4f42a0e 0 1614067733000 5 connected

4. Connecting to Redis Cluster from a Java client

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.lagou</groupId>
    <artifactId>redis-cluster-work</artifactId>
    <version>1.0-SNAPSHOT</version>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.5.RELEASE</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-redis</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
            <exclusions>
                <exclusion>
                    <groupId>org.junit.vintage</groupId>
                    <artifactId>junit-vintage-engine</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

application.yml

spring:
  redis:
    database: 0
    timeout: 3000
    password: qwet123321
    cluster:
      nodes:
        - 192.168.124.136:7001
        - 192.168.124.136:7002
        - 192.168.124.136:7003
        - 192.168.124.136:7007

RedisClusterApplication.java

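import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class RedisClusterApplication {
    public static void main(String[] args) {
        SpringApplication.run(RedisClusterApplication.class, args);
    }
}

IndexController.java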

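import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.web.bind.annotation.*;

// Mapping paths follow the test URL /test/cluster used in the Test section
@RestController
@RequestMapping("/test")
public class IndexController {
    private static final Logger logger = LoggerFactory.getLogger(IndexController.class);

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    // Writes the key to the cluster, then reads it back and logs the value
    @GetMapping("/cluster")
    public void testCluster(@RequestParam("key") String key, @RequestParam("value") String value) {
        logger.info("[IndexController.testCluster] key:{}, value:{}", key, value);
        stringRedisTemplate.opsForValue().set(key, value);
        String cacheValue = stringRedisTemplate.opsForValue().get(key);
        logger.info("[IndexController.testCluster] get:{}", cacheValue);
    }
}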

Test

Open this URL: http://localhost:8080/test/cluster?key=name&value=zhangfei
