UA Pools and Proxy IP Pools

Posted by J哥.


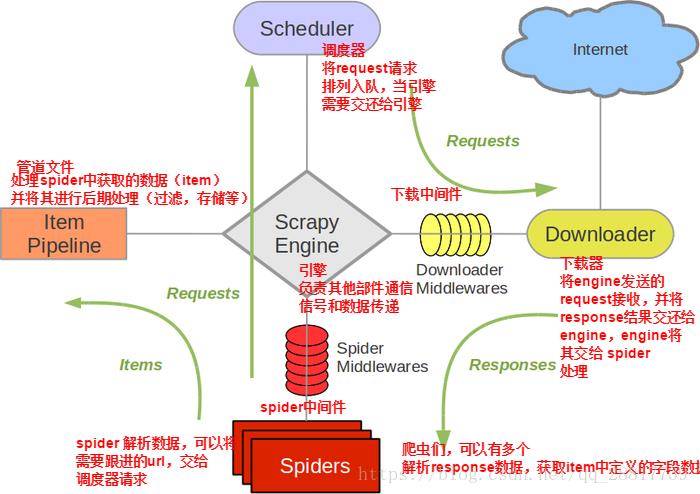

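In Scrapy, downloader middleware is a layer of components that sits between the Scrapy engine and the downloader.

What it does:

(1) While the engine passes a request to the downloader, downloader middleware can process the request, for example by setting the request's User-Agent or a proxy.

(2) While the downloader passes the response back to the engine, downloader middleware can process the response, for example by decompressing gzip content.

The middleware lives in middlewares.py (the project's middleware file).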
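To make the two hooks concrete, here is a minimal sketch that does not depend on Scrapy. `ToyRequest`, `ToyResponse`, `fake_download` and `fetch` are hypothetical stand-ins invented for this illustration; only the hook names `process_request` and `process_response` mirror the real ones.

```python
import gzip
from dataclasses import dataclass, field


@dataclass
class ToyRequest:
    # Hypothetical stand-in for a request object (not scrapy.Request).
    url: str
    headers: dict = field(default_factory=dict)


@dataclass
class ToyResponse:
    # Hypothetical stand-in for a response object (not a Scrapy response).
    body: bytes
    headers: dict = field(default_factory=dict)


class ToyDownloaderMiddleware:
    def process_request(self, request):
        # Runs on the way from the engine to the downloader.
        request.headers["User-Agent"] = "Mozilla/5.0 (toy example)"

    def process_response(self, request, response):
        # Runs on the way back from the downloader to the engine.
        if response.headers.get("Content-Encoding") == "gzip":
            headers = {k: v for k, v in response.headers.items()
                       if k != "Content-Encoding"}
            return ToyResponse(body=gzip.decompress(response.body), headers=headers)
        return response


def fake_download(request):
    # Pretend server: always answers with a gzip-compressed body.
    return ToyResponse(body=gzip.compress(b"<html>ok</html>"),
                       headers={"Content-Encoding": "gzip"})


def fetch(request, middleware):
    # Request hook first, then the download, then the response hook.
    middleware.process_request(request)
    raw = fake_download(request)
    return middleware.process_response(request, raw)
```

Calling `fetch(ToyRequest("http://example.com"), ToyDownloaderMiddleware())` sets the header on the request before the download and returns a response whose body has already been decompressed.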

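spider: everything starts here. Its job is to produce one URL or a batch of URLs, and to parse the data in parse().

URL --> wrapped into a request object --> engine (receives the request object from the spider; no data has been fetched yet)

engine --> (request object) --> scheduler (schedules requests): a filter class deduplicates them (identical request objects can occur) --> queue (a data structure that stores the request objects)

The scheduler then takes a request object off the queue --> engine --> downloader --> request object --> download from the internet

internet --> response object --> downloader --> engine (response object) --> the callback method in the spider, which parses it

The parsed data is wrapped in an item object --> engine --> pipeline, for persistent storage

The scheduler then takes the next request off the queue, and the cycle repeats.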
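The scheduler step (deduplicate, then queue) can be pictured with a small toy model. This is a simplified sketch, not Scrapy's scheduler: `ToyScheduler` is a hypothetical class, the "fingerprint" is just the URL string, and first-in-first-out order is chosen here only for simplicity.

```python
from collections import deque


class ToyScheduler:
    """Simplified model: a duplicate filter in front of a FIFO queue."""

    def __init__(self):
        self._seen = set()     # fingerprints of requests already accepted
        self._queue = deque()  # pending requests, oldest first

    def enqueue(self, url):
        # The fingerprint here is only the URL string (an assumption made
        # for brevity; a real crawler fingerprints more than the URL).
        if url in self._seen:
            return False       # duplicate: rejected by the filter
        self._seen.add(url)
        self._queue.append(url)
        return True

    def next_request(self):
        # Hand the oldest pending request back to the engine, or None if empty.
        return self._queue.popleft() if self._queue else None


scheduler = ToyScheduler()
accepted = [scheduler.enqueue(u) for u in ("http://a/1", "http://a/2", "http://a/1")]
```

The third call is rejected because the same URL was already accepted, so only two requests ever reach the queue.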

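Core question: the engine is what triggers events, but how does it know when, and at which point, to trigger which event?

Answer: during a crawl every data flow passes through the engine, so the engine decides which event to trigger based on the data it receives.

For example, when the spider hands the engine an item, the engine sees that the object is an item and knows at once that the next step is to instantiate the pipeline and call its process_item method.

All instantiation and all event triggering are performed by the engine.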
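The idea of dispatching on the type of the received object can be sketched as follows. This is a toy illustration of the principle only; `ToyEngine`, `ToyRequest` and `ToyItem` are hypothetical names and do not reflect how Scrapy's engine is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class ToyRequest:
    url: str


@dataclass
class ToyItem:
    data: dict


class ToyEngine:
    def __init__(self):
        self.scheduled = []  # stands in for the scheduler
        self.stored = []     # stands in for the item pipeline's storage

    def process_item(self, item):
        # Plays the role of the pipeline's process_item method.
        self.stored.append(item.data)

    def handle(self, obj):
        # The engine decides what to trigger from the type of what it receives.
        if isinstance(obj, ToyRequest):
            self.scheduled.append(obj.url)
            return "scheduler"
        if isinstance(obj, ToyItem):
            self.process_item(obj)
            return "pipeline"
        raise TypeError(f"unexpected object from spider: {obj!r}")
```

Passing a `ToyRequest` to `handle` routes it to the scheduler list, while a `ToyItem` goes to the pipeline.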

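When handling requests in a downloader middleware, we usually set a random User-Agent and a random proxy on each request, to avoid triggering the target site's anti-crawling mechanisms.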

Approach 1:

UA pool: a pool of User-Agent strings

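# Imports
from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware
import random

# UA pool: a dedicated downloader middleware class for the UA pool
class RandomUserAgent(UserAgentMiddleware):

    def process_request(self, request, spider):
        # Pick one UA value at random from the list
        ua = random.choice(user_agent_list)
        # Write the UA into the intercepted request. Plain assignment is used
        # instead of setdefault(), which leaves an already-set header unchanged.
        request.headers['User-Agent'] = ua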

user_agent_list = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
    "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
    "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
    "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
    "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
    "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
    "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
    "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
    "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
    "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
    "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
]

Proxy pool:

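Purpose: make the requests in a Scrapy project go out from as many different IP addresses as possible.

Procedure:

- Intercept the request in a downloader middleware

- Change the IP of the intercepted request to a proxy IP

- Enable the downloader middleware in the settings file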
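The second step hinges on matching the proxy to the scheme of the request URL. As a standalone sketch of that check, the helper below uses `urllib.parse.urlsplit` rather than splitting the string by hand. `pick_proxy` and its `rng` parameter are hypothetical names introduced for this illustration, and the addresses in the usage note are placeholders from the documentation range, not working proxies.

```python
import random
from urllib.parse import urlsplit


def pick_proxy(url, http_pool, https_pool, rng=random):
    """Return a proxy URL whose scheme matches the scheme of `url`."""
    scheme = urlsplit(url).scheme.lower()
    if scheme == "https":
        return "https://" + rng.choice(https_pool)
    if scheme == "http":
        return "http://" + rng.choice(http_pool)
    # Anything else (ftp, file, missing scheme) is rejected explicitly.
    raise ValueError(f"unsupported scheme: {scheme!r}")
```

For example, `pick_proxy("https://www.xxx.com", ["192.0.2.10:8080"], ["192.0.2.20:8443"])` picks from the HTTPS pool. Raising on unknown schemes is a design choice of this sketch; the middleware code in this post instead treats everything that is not `https` as `http`.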

# Replace the IP of every intercepted request
# A dedicated downloader middleware class
class Proxy(object):

    def process_request(self, request, spider):
        # Check the scheme of the intercepted request's URL (http or https)
        # request.url looks like: http://www.xxx.com
        h = request.url.split(':')[0]  # scheme of the request
        if h == 'https':
            ip = random.choice(PROXY_https)
            request.meta['proxy'] = 'https://' + ip
        else:
            ip = random.choice(PROXY_http)
            request.meta['proxy'] = 'http://' + ip

# Proxy IPs available for selection
PROXY_http = [
    '153.180.102.104:80',
    '195.208.131.189:56055',
]

PROXY_https = [
    '120.83.49.90:9000',
    '95.189.112.214:35508',
]

Approach 2: based on Scrapy

middlewares.py

# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

# useful for handling different item types with a single interface
from itemadapter import is_item, ItemAdapter

a = []

'''
Note from J哥: the commented-out parts are not needed
'''

# class SpidersSpiderMiddleware:
#     # Not all methods need to be defined. If a method is not defined,
#     # scrapy acts as if the spider middleware does not modify the
#     # passed objects.
#
#     @classmethod
#     def from_crawler(cls, crawler):
#         # This method is used by Scrapy to create your spiders.
#         s = cls()
#         crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
#         return s
#
#     def process_spider_input(self, response, spider):
#         # Called for each response that goes through the spider
#         # middleware and into the spider.
#
#         # Should return None or raise an exception.
#         return None
#
#     def process_spider_output(self, response, result, spider):
#         # Called with the results returned from the Spider, after
#         # it has processed the response.
#
#         # Must return an iterable of Request, or item objects.
#         for i in result:
#             yield i
#
#     def process_spider_exception(self, response, exception, spider):
#         # Called when a spider or process_spider_input() method
#         # (from other spider middleware) raises an exception.
#
#         # Should return either None or an iterable of Request or item objects.
#         pass
#
#     def process_start_requests(self, start_requests, spider):
#         # Called with the start requests of the spider, and works
#         # similarly to the process_spider_output() method, except
#         # that it doesn’t have a response associated.
#
#         # Must return only requests (not items).
#         for r in start_requests:
#             yield r
#
#     def spider_opened(self, spider):
#         spider.logger.info('Spider opened: %s' % spider.name)

import random

class SpidersDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    # UA pool:
    user_agents = [
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',
        'Opera/8.0 (Windows NT 5.1; U; en)',
        'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
        'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
        'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)',
        'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',
        'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)',
    ]

    # Proxy IPs available for selection: use an http proxy for http requests
    # and an https proxy for https requests
    PROXY_http = [
        '153.180.102.104:80',
        '195.208.131.189:56055',
    ]

    PROXY_https = [
        '120.83.49.90:9000',
        '95.189.112.214:35508',
    ]

    # @classmethod
    # def from_crawler(cls, crawler):  # instantiates the downloader middleware (not needed here)
    #     # This method is used by Scrapy to create your spiders.
    #     s = cls()
    #     crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
    #     return s

    # Intercept requests
    def process_request(self, request, spider):  # key method
        # UA spoofing
        request.headers['User-Agent'] = random.choice(self.user_agents)

        # Verification only: a fixed proxy, to check whether the proxy IP takes effect
        request.meta['proxy'] = 'http://110.18.152.229'

        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    # Intercept all responses
    def process_response(self, request, response, spider):  # key method
        # Called with the response returned from the downloader.

        # Must either;
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    # Intercept requests that raised an exception
    def process_exception(self, request, exception, spider):  # key method
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain

        # Setting the proxy here is more efficient: a proxy is only used
        # for requests that have already failed
        if request.url.split(':')[0] == 'http':
            request.meta['proxy'] = 'http://' + random.choice(self.PROXY_http)
        else:
            request.meta['proxy'] = 'https://' + random.choice(self.PROXY_https)
        return request  # resend the corrected request object

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)

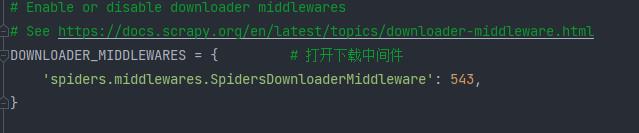

Then enable the middleware in the settings file:
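As a sketch, enabling a downloader middleware in `settings.py` typically looks like the fragment below. The package name `spiders` is an assumption inferred from the class name `SpidersDownloaderMiddleware`; replace it with your own project package. The value `543` is the priority that the Scrapy project template suggests for this entry.

```python
# settings.py (fragment)
DOWNLOADER_MIDDLEWARES = {
    # "spiders" is an assumed project package name; adjust it to your project.
    "spiders.middlewares.SpidersDownloaderMiddleware": 543,
}
```

The number controls where the middleware sits relative to the built-in ones, so a middleware that has to override a header set by a built-in middleware may need a different value.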

Spider .py file:

import scrapy

# middlewares (the middleware .py file)
# 60.27.158.241 (IP of the local machine)
class MiddieSpider(scrapy.Spider):
    # Crawl Baidu
    name = 'middie'
    # allowed_domains = ['www.xxx.com']
    start_urls = ['http://www.baidu.com/s?wd=ip']

    def parse(self, response):
        page_text = response.text
        with open('ip.html', 'w', encoding='utf-8') as f:
            f.write(page_text)

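That completes the proxy IP setup. Note, though, that crawling through proxy IPs is not very efficient.

To sum up:

- UA spoofing (overriding the value from the settings file): put it in process_request
- Proxy IP assignment: put it in process_exception, and return request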
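One thing to watch with the `return request` pattern is that a request whose proxies all fail would be resent indefinitely. The sketch below caps the number of proxy retries. It is a variation, not the code from this post: the class name, the `MAX_PROXY_RETRIES` limit and the `proxy_retry_times` meta key are hypothetical, and the proxy addresses are placeholders from the documentation range. Setting `dont_filter = True` follows what Scrapy's built-in retry middleware does for retried requests, so that the resent request is not dropped as a duplicate.

```python
import random
from urllib.parse import urlsplit


class BoundedProxyRetryMiddleware:
    # Placeholder proxy addresses; not real proxies.
    PROXY_http = ["192.0.2.10:8080"]
    PROXY_https = ["192.0.2.20:8443"]
    MAX_PROXY_RETRIES = 3  # hypothetical limit chosen for this sketch

    def process_exception(self, request, exception, spider):
        retries = request.meta.get("proxy_retry_times", 0)  # hypothetical meta key
        if retries >= self.MAX_PROXY_RETRIES:
            # Give up: returning None lets the exception be handled as usual.
            return None
        request.meta["proxy_retry_times"] = retries + 1

        if urlsplit(request.url).scheme == "https":
            request.meta["proxy"] = "https://" + random.choice(self.PROXY_https)
        else:
            request.meta["proxy"] = "http://" + random.choice(self.PROXY_http)

        # Ask the scheduler not to drop the resent request as a duplicate.
        request.dont_filter = True
        return request
```

The class only touches `request.url`, `request.meta` and `request.dont_filter`, so it can be exercised with any object that has those attributes before being wired into a project.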
