Day 140 study check-in (Docker --link, custom networks, connecting networks, deploying a Redis cluster, publishing a SpringBoot image)

Posted doudoutj

--link

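Consider a scenario: we wrote a microservice configured with database url=ip. If the database's IP changes while the project keeps running, the connection breaks. Can we handle this by reaching the container by name instead of by IP?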

[root@kuangshen /]# docker exec -it tomcat02 ping tomcat01

ping: tomcat01: Name or service not known

[root@kuangshen /]#

# How can we solve this?

# --link solves it

[root@kuangshen /]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

7b5505b465a4b5bc8163fb84d67027cbfe7cd79ce2d5730555d1b413c15c0515

[root@kuangshen /]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.094 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.067 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.073 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=4 ttl=64 time=0.080 ms

# Does ping work in the reverse direction? No.

[root@kuangshen /]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

tomcat03 simply carries an entry for tomcat02 in its own local configuration.

# Look at the hosts file; this is where the mechanism shows up.

[root@kuangshen /]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 a3aa888739e1

172.17.0.4 7b5505b465a4

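--link simply adds the line 172.17.0.3 tomcat02 a3aa888739e1 to the container's hosts file. Because only tomcat03's file gets the entry, tomcat02 still cannot resolve tomcat03.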
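To make the hosts-file mechanism concrete, here is a minimal sketch that mimics a name lookup against a hosts-style file. The file content is copied from the tomcat03 output above; `lookup_host` is a hypothetical helper written for this illustration, not a Docker command, and real resolvers do more than this.

```shell
#!/bin/sh
# Illustrative only: resolve a name from a hosts-style file, the way the
# entry added by --link makes tomcat02 resolvable inside tomcat03.
hosts_file=$(mktemp)
cat > "$hosts_file" << 'EOF'
127.0.0.1 localhost
172.17.0.3 tomcat02 a3aa888739e1
172.17.0.4 7b5505b465a4
EOF

# Print the address of the first non-comment line that lists the given name.
lookup_host() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$hosts_file"
}

lookup_host tomcat02       # prints 172.17.0.3
lookup_host a3aa888739e1   # prints 172.17.0.3 (the container ID is an alias)
lookup_host tomcat03       # prints nothing: there is no entry for that name
```

The last call shows why the link is one-directional: a name resolves only if the local file happens to contain it.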

Docker no longer recommends using --link.

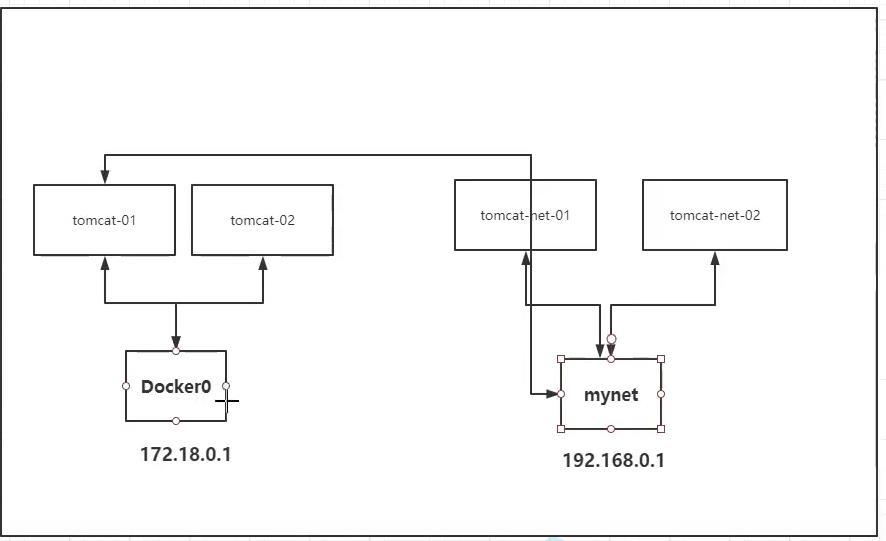

Use a custom network instead of docker0.

The problem with docker0: it does not support reaching containers by name.

Custom networks

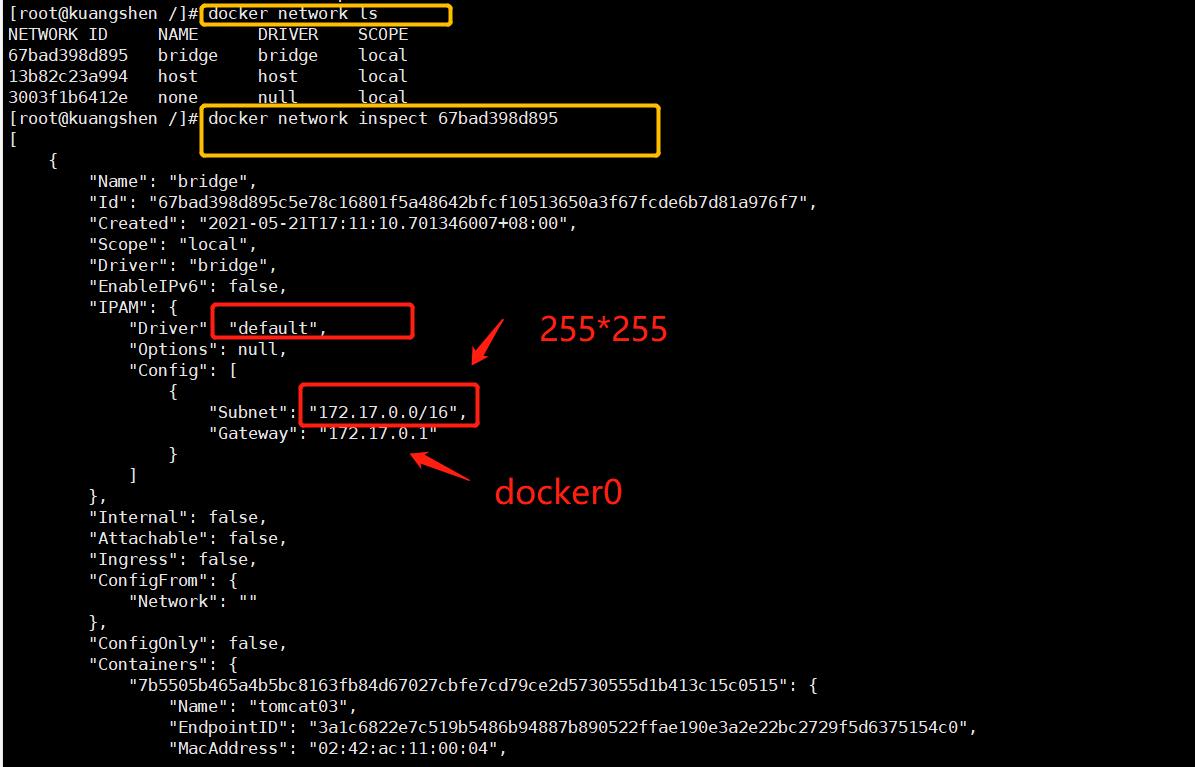

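List all Docker networks (docker network ls).

Network modes

bridge: bridged networking, with Docker providing the bridge (the default; networks you create yourself also use bridge mode)

none: no networking configured

host: share the host's network

container: join another container's network (rarely used, quite limited)

Test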

# First remove the existing containers

[root@kuangshen /]# docker rm -f $(docker ps -aq)

7b5505b465a4

a983ec358d46

a3aa888739e1

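# A plain docker run implicitly uses --net bridge, which is docker0. The two commands below are equivalent; run only one of them, since both use the name tomcat01.

[root@kuangshen /]# docker run -d -P --name tomcat01 --net bridge tomcat

[root@kuangshen /]# docker run -d -P --name tomcat01 tomcat # the way we started it before

# docker0 characteristics: it is the default network, container names cannot be resolved on it, and --link can wire two containers together directly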

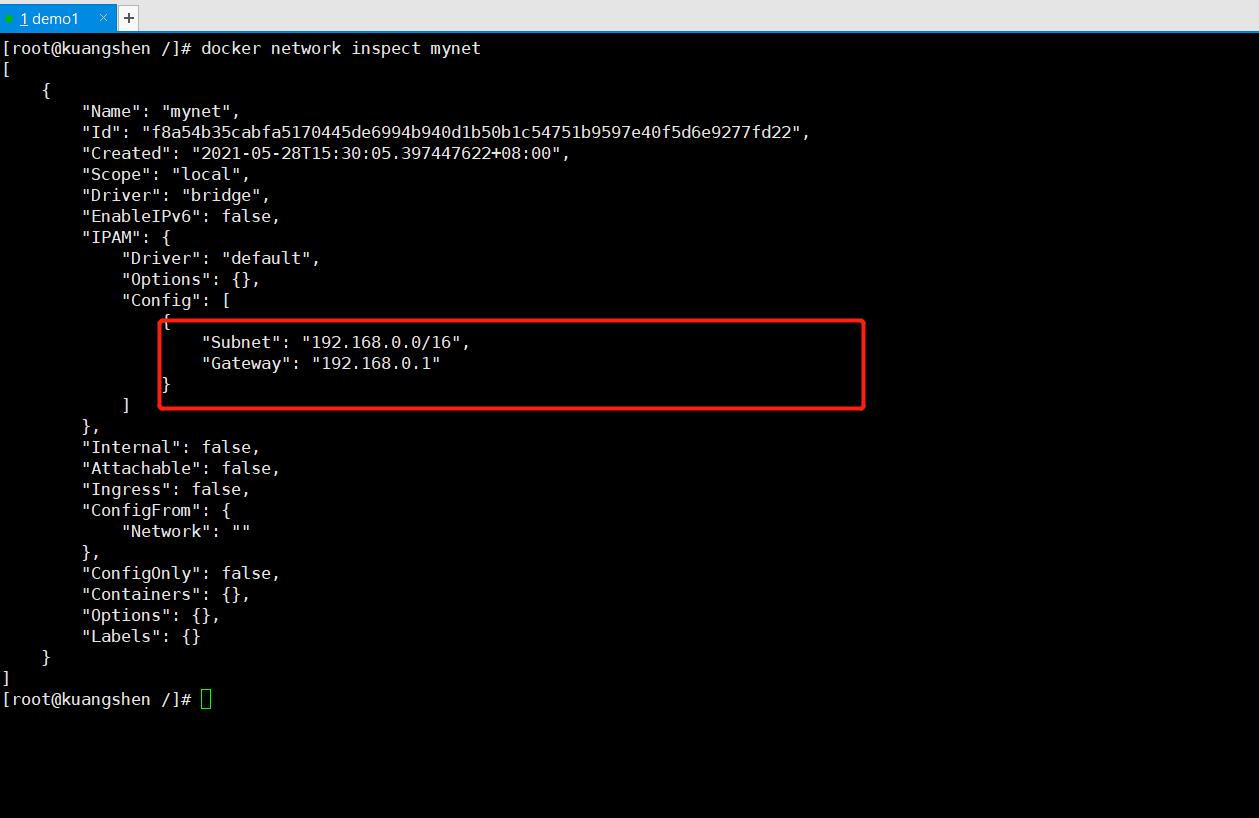

# We can define our own network

# --driver bridge

# --subnet 192.168.0.0/16 the subnet

# --gateway 192.168.0.1 the gateway

[root@kuangshen /]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

f8a54b35cabfa5170445de6994b940d1b50b1c54751b9597e40f5d6e9277fd22

[root@kuangshen /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

67bad398d895 bridge bridge local

13b82c23a994 host host local

f8a54b35cabf mynet bridge local

3003f1b6412e none null local
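Running `docker network create` a second time with the same name fails, so scripts often guard the call. The following is a minimal sketch of that guard; `ensure_network` and the `DRY_RUN` switch are hypothetical names chosen for this example. With `DRY_RUN=1` (the default here) it only prints the command it would run.

```shell
#!/bin/sh
# Sketch: create a user-defined bridge network only if it does not exist yet.
# ensure_network and DRY_RUN are illustrative names, not Docker features.
DRY_RUN=${DRY_RUN:-1}

ensure_network() {
    name=$1 subnet=$2 gateway=$3
    if [ "$DRY_RUN" = 1 ]; then
        echo "docker network create --driver bridge --subnet $subnet --gateway $gateway $name"
        return 0
    fi
    # docker network inspect exits non-zero when the network is missing
    docker network inspect "$name" > /dev/null 2>&1 ||
        docker network create --driver bridge --subnet "$subnet" --gateway "$gateway" "$name"
}

ensure_network mynet 192.168.0.0/16 192.168.0.1
```

Set `DRY_RUN=0` on a host with Docker to actually create the network.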

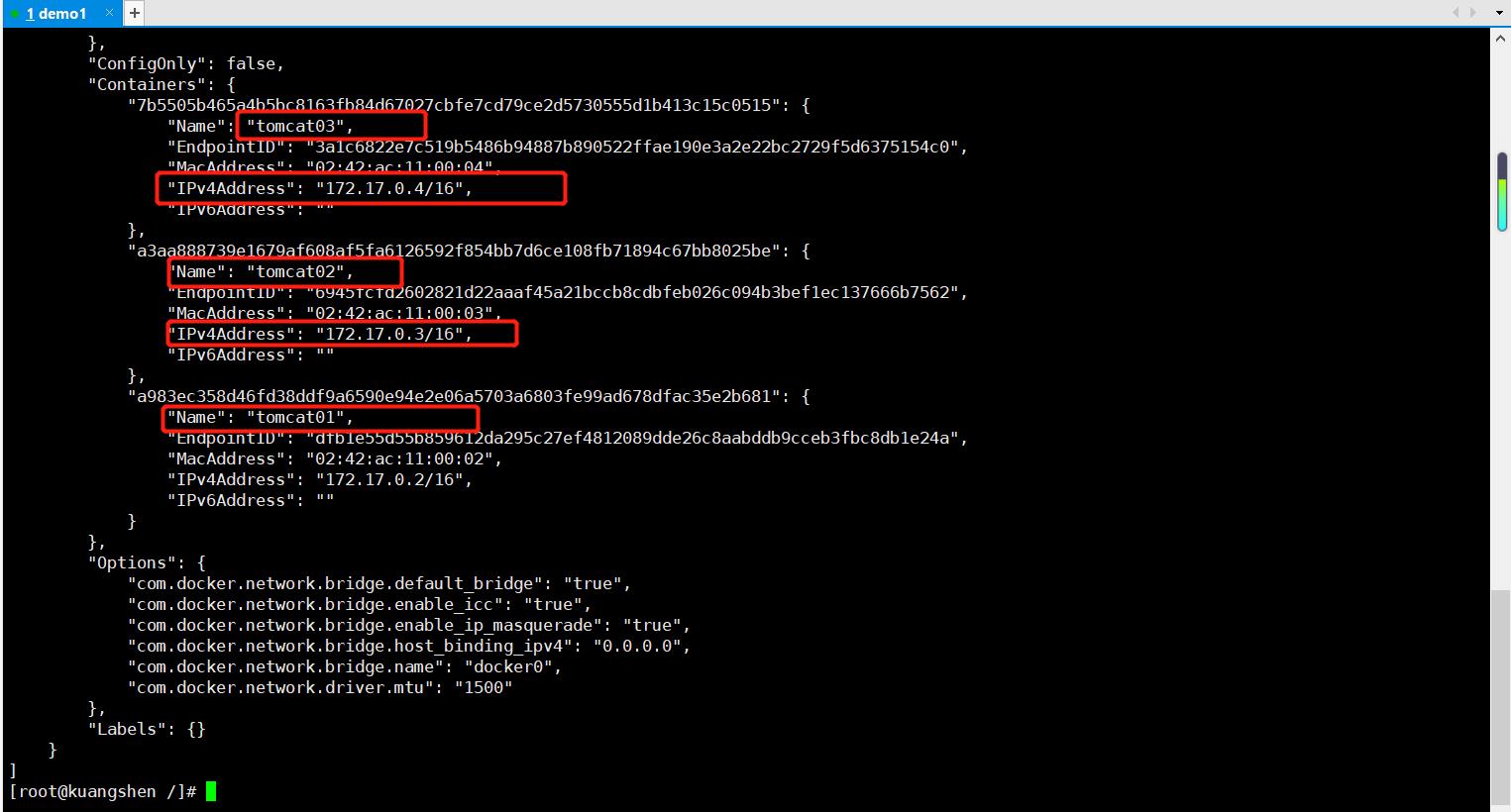

Our own network is now created. Start two containers on it:

[root@kuangshen /]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

ed92a0d162c910dea054fabd95dbfefb2b198e627461c0e3c0b82ffbb1d40608

[root@kuangshen /]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

e2acac20abb933833c05a36ffc1cedd2239beb7f9954b27f7ec4de53451c9a88

[root@kuangshen /]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "f8a54b35cabfa5170445de6994b940d1b50b1c54751b9597e40f5d6e9277fd22",

"Created": "2021-05-28T15:30:05.397447622+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"e2acac20abb933833c05a36ffc1cedd2239beb7f9954b27f7ec4de53451c9a88": {

"Name": "tomcat-net-02",

"EndpointID": "12dc69619de088580fd6704726be53704656f5f9c9a0efe4ef654702edc19f22",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"ed92a0d162c910dea054fabd95dbfefb2b198e627461c0e3c0b82ffbb1d40608": {

"Name": "tomcat-net-01",

"EndpointID": "4519625dfd9545f0c909e0f4bb7cfbfb4ea02fb1716a85dd6e2338f032984add",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@kuangshen /]#

# Test connectivity again

[root@kuangshen /]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.100 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.060 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.071 ms

64 bytes from 192.168.0.3: icmp_seq=4 ttl=64 time=0.068 ms

^C

--- 192.168.0.3 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.060/0.074/0.100/0.018 ms

# Pinging by name now works without --link

[root@kuangshen /]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.058 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.066 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.068 ms

^C

--- tomcat-net-02 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2ms

rtt min/avg/max/mdev = 0.058/0.064/0.068/0.004 ms

[root@kuangshen /]#

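On a custom network, Docker maintains the name-to-address mapping for us, so custom networks are the recommended choice for everyday use.

Benefit:

Different clusters can use different networks, which keeps each cluster isolated and healthy.

Connecting networks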

[root@kuangshen /]# docker run -d -P --name tomcat01 tomcat

88085710eb2cfc01cc49288e508a578816527963033e621def1e58951643c463

[root@kuangshen /]# docker run -d -P --name tomcat02 tomcat

db121f1e61ee454f04947f2fefa7e3bf1a3c40d7fc7ca54b7dcfae7ad29a4e89

[root@kuangshen /]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

db121f1e61ee tomcat "catalina.sh run" 12 seconds ago Up 11 seconds 0.0.0.0:49162->8080/tcp, :::49162->8080/tcp tomcat02

88085710eb2c tomcat "catalina.sh run" 43 seconds ago Up 42 seconds 0.0.0.0:49161->8080/tcp, :::49161->8080/tcp tomcat01

e2acac20abb9 tomcat "catalina.sh run" 18 minutes ago Up 18 minutes 0.0.0.0:49160->8080/tcp, :::49160->8080/tcp tomcat-net-02

ed92a0d162c9 tomcat "catalina.sh run" 19 minutes ago Up 19 minutes 0.0.0.0:49159->8080/tcp, :::49159->8080/tcp tomcat-net-01

[root@kuangshen /]# docker exec -it tomcat01 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

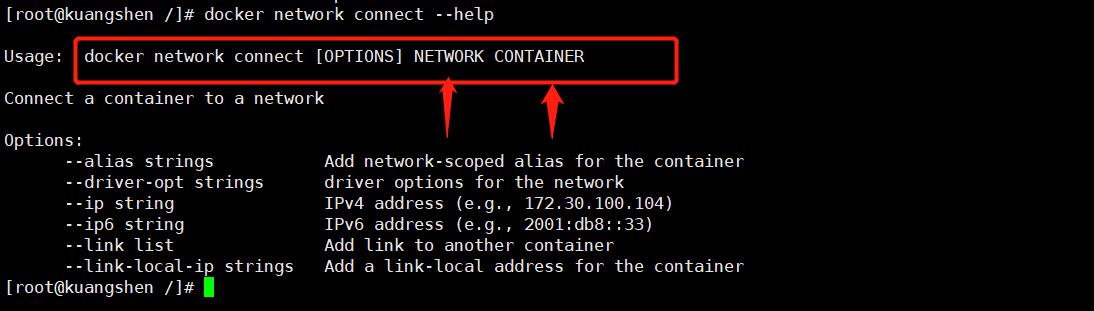

# Test connecting tomcat01 to mynet

# Connecting places tomcat01 on the mynet network as well

# In effect, one container with two IP addresses

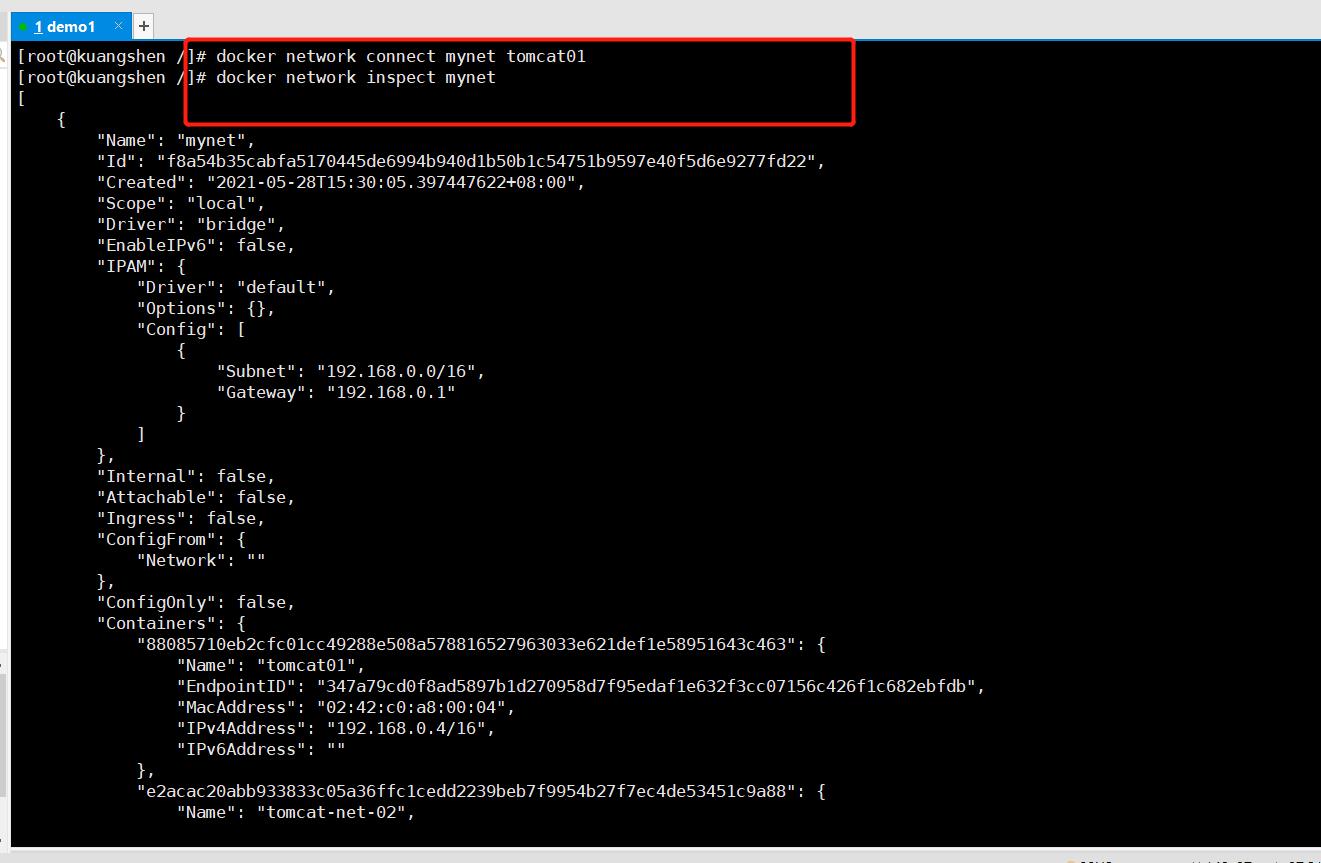

[root@kuangshen /]# docker network connect mynet tomcat01

[root@kuangshen /]# docker network inspect mynet

# tomcat01 is now connected

[root@kuangshen /]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.072 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.064 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.060 ms

^C

--- tomcat-net-01 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.060/0.065/0.072/0.008 ms

# tomcat02 still cannot get through

[root@kuangshen /]# docker exec -it tomcat02 ping tomcat-net-01

ping: tomcat-net-01: Name or service not known

[root@kuangshen /]#

Conclusion: to reach containers across networks, attach the container to the other network with docker network connect.
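When more than one container needs access, the same command can be repeated in a loop. A minimal sketch, assuming the containers and the mynet network from this section already exist; `connect_all` is a hypothetical helper name, not part of the Docker CLI.

```shell
#!/bin/sh
# Sketch: attach several existing containers to one user-defined network.
connect_all() {
    network=$1
    shift
    for container in "$@"; do
        # Stop at the first failure (for example an unknown container or network)
        docker network connect "$network" "$container" || return 1
    done
}

# Usage, assuming tomcat01, tomcat02 and mynet exist as in the text:
#   connect_all mynet tomcat01 tomcat02
#   docker exec -it tomcat02 ping tomcat-net-01
```

After this, tomcat02 would also get an address on mynet and should resolve tomcat-net-01 by name.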

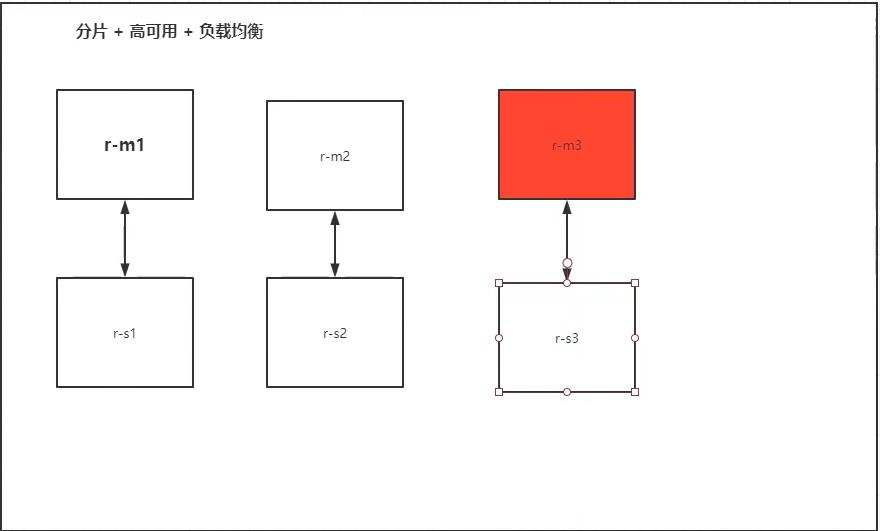

Hands-on: deploying a Redis cluster

Shell script:

# First remove the other containers

[root@kuangshen /]# docker rm -f $(docker ps -aq)

# Create the network

[root@kuangshen /]# docker network create redis --subnet 172.38.0.0/16

dbfc21f8ec481c26a5c621fd444bc8fbff878a89b9625dfd79a1a73fbbbe63a8

[root@kuangshen /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

67bad398d895 bridge bridge local

13b82c23a994 host host local

f8a54b35cabf mynet bridge local

3003f1b6412e none null local

dbfc21f8ec48 redis bridge local

[root@kuangshen /]# docker network inspect redis

[

{

"Name": "redis",

"Id": "dbfc21f8ec481c26a5c621fd444bc8fbff878a89b9625dfd79a1a73fbbbe63a8",

"Created": "2021-05-28T16:18:33.026029065+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.38.0.0/16"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

# Generate six Redis configurations with a script

for port in $(seq 1 6); do

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

# General form of the start command for one node; substitute ${port} with 1 through 6 (the six expanded commands follow)

docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \
-v /mydata/redis/node-${port}/data:/data \
-v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.1${port} redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6371:6379 -p 16371:16379 --name redis-1 \
-v /mydata/redis/node-1/data:/data \
-v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6372:6379 -p 16372:16379 --name redis-2 \
-v /mydata/redis/node-2/data:/data \
-v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6373:6379 -p 16373:16379 --name redis-3 \
-v /mydata/redis/node-3/data:/data \
-v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6374:6379 -p 16374:16379 --name redis-4 \
-v /mydata/redis/node-4/data:/data \
-v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6375:6379 -p 16375:16379 --name redis-5 \
-v /mydata/redis/node-5/data:/data \
-v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6376:6379 -p 16376:16379 --name redis-6 \
-v /mydata/redis/node-6/data:/data \
-v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
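The config generation and the six start commands follow the same pattern per node, so they can be folded into one loop. The sketch below is one way to do that under stated assumptions: `REDIS_BASE` and `DRY_RUN` are hypothetical knobs added for this example (the text above writes to /mydata/redis), and with `DRY_RUN=1` the docker commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: write the six node configs and print (or run) the matching
# docker run command for each node. REDIS_BASE and DRY_RUN are illustrative.
base=${REDIS_BASE:-/tmp/redis-demo}
DRY_RUN=${DRY_RUN:-1}

for port in $(seq 1 6); do
    mkdir -p "$base/node-$port/conf"
    # Unquoted EOF so that ${port} is expanded inside the heredoc
    cat > "$base/node-$port/conf/redis.conf" << EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1${port}
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
    # Build the command as positional parameters so it can be echoed or executed
    set -- docker run -d --name "redis-$port" \
        -p "637$port:6379" -p "1637$port:16379" \
        -v "$base/node-$port/data:/data" \
        -v "$base/node-$port/conf/redis.conf:/etc/redis/redis.conf" \
        --net redis --ip "172.38.0.1$port" \
        redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
done
```

Running it with `REDIS_BASE=/mydata/redis DRY_RUN=0` on a host where the redis network exists would roughly reproduce the manual steps above.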

# Create the cluster (run inside one of the Redis containers)

redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

[root@kuangshen /]# for port in $(seq 1 6); \

> do \

> mkdir -p /mydata/redis/node-${port}/conf

> touch /mydata/redis/node-${port}/conf/redis.conf

> cat << EOF >>/mydata/redis/node-${port}/conf/redis.conf

> port 6379

> bind 0.0.0.0

> cluster-enabled yes

> cluster-config-file nodes.conf

> cluster-node-timeout 5000

> cluster-announce-ip 172.38.0.1${port}

> cluster-announce-port 6379

> cluster-announce-bus-port 16379

> appendonly yes

> EOF

> done

[root@kuangshen /]# cd /mydata/

[root@kuangshen mydata]# ls

redis

[root@kuangshen mydata]# cd redis/

[root@kuangshen redis]# ls

node-1 node-2 node-3 node-4 node-5 node-6

[root@kuangshen redis]# cd node-1

[root@kuangshen node-1]# ls

conf

[root@kuangshen node-1]# cd conf/

[root@kuangshen conf]# ls

redis.conf

[root@kuangshen conf]# cat redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

[root@kuangshen conf]# docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

> -v /mydata/redis/node-1/data:/data \

> -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

Unable to find image 'redis:5.0.9-alpine3.11' locally

5.0.9-alpine3.11: Pulling from library/redis

cbdbe7a5bc2a: Pull complete

dc0373118a0d: Pull complete

cfd369fe6256: Pull complete

3e45770272d9: Pull complete

558de8ea3153: Pull complete

a2c652551612: Pull complete

Digest: sha256:83a3af36d5e57f2901b4783c313720e5fa3ecf0424ba86ad9775e06a9a5e35d0

Status: Downloaded newer image for redis:5.0.9-alpine3.11

f7f3baa2566bb226b4c779f705f443d17e5137d0c49479310d8d54251e14306d

[root@kuangshen conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f7f3baa2566b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 10 seconds ago Up 8 seconds 0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16371->16379/tcp, :::16371->16379/tcp redis-1

[root@kuangshen conf]# docker run -p 6372:6379 -p 16372:16379 --name redis-2 \

> -v /mydata/redis/node-2/data:/data \

> -v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

cacfb306e48b40cf0b610ca012cf5c0b648abb334603fff6a11f810e63956328

[root@kuangshen conf]# docker run -p 6373:6379 -p 16373:16379 --name redis-3 \

> -v /mydata/redis/node-3/data:/data \

> -v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

2037c662f612e498032a03c77d9dced5568c2c91e9718f5eeefddfb4890bda48

[root@kuangshen conf]#

[root@kuangshen conf]# docker run -p 6374:6379 -p 16374:16379 --name redis-4 \

> -v /mydata/redis/node-4/data:/data \

> -v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

f5e62a081f1b99668ee18758b4530cc24edb41a03fb75b384872d51d5432e6ba

[root@kuangshen conf]# docker run -p 6375:6379 -p 16375:16379 --name redis-5 \

> -v /mydata/redis/node-5/data:/data \

> -v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

ebaa22e54a506a3078c6170c84d748101f33180f72ed8b79fb4a380a7938c7c4

[root@kuangshen conf]# docker run -p 6376:6379 -p 16376:16379 --name redis-6 \

> -v /mydata/redis/node-6/data:/data \

> -v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

adbf7ed3ebaae4b3d31f8777a5274d4409fd425053e85e4c43daa65db1912bbc

[root@kuangshen conf]# clear

[root@kuangshen conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

adbf7ed3ebaa redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 21 seconds ago Up 20 seconds 0.0.0.0:6376->6379/tcp, :::6376->6379/tcp, 0.0.0.0:16376->16379/tcp, :::16376->16379/tcp redis-6

ebaa22e54a50 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" About a minute ago Up About a minute 0.0.0.0:6375->6379/tcp, :::6375->6379/tcp, 0.0.0.0:16375->16379/tcp, :::16375->16379/tcp redis-5

f5e62a081f1b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 3 minutes ago Up 2 minutes 0.0.0.0:6374->6379/tcp, :::6374->6379/tcp, 0.0.0.0:16374->16379/tcp, :::16374->16379/tcp redis-4

2037c662f612 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 4 minutes ago Up 4 minutes 0.0.0.0:6373->6379/tcp, :::6373->6379/tcp, 0.0.0.0:16373->16379/tcp, :::16373->16379/tcp redis-3

cacfb306e48b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 5 minutes ago Up 5 minutes 0.0.0.0:6372->6379/tcp, :::6372->6379/tcp, 0.0.0.0:16372->16379/tcp, :::16372->16379/tcp redis-2

f7f3baa2566b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 7 minutes ago Up 7 minutes 0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16371->16379/tcp, :::16371->16379/tcp redis-1

[root@kuangshen conf]# docker exec -it redis-1 /bin/sh

/data # ls

appendonly.aof nodes.conf

/data # clear

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 13b3f0c9c5d0fe341dbd52e772b894bde94742b3 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: 70065d2863d927ac8ffc5388d57614f595bc7f4e 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 12d7ad1452441e98f6484bc14497701e709141c4 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 2bd10fb25f2d8bbddaf64445ddb645f7c35e6abd 172.38.0.14:6379

replicates 12d7ad1452441e98f6484bc14497701e709141c4

S: 72517d5f4a5f3b50992bb3870b941d63ae2fd434 172.38.0.15:6379

replicates 13b3f0c9c5d0fe341dbd52e772b894bde94742b3

S: 43adde12fb7d69a98d97f2559028236bc5bd89ed 172.38.0.16:6379

replicates 70065d2863d927ac8ffc5388d57614f595bc7f4e

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 13b3f0c9c5d0fe341dbd52e772b894bde94742b3 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 2bd10fb25f2d8bbddaf64445ddb645f7c35e6abd 172.38.0.14:6379

slots: (0 slots) slave

replicates 12d7ad1452441e98f6484bc14497701e709141c4

S: 43adde12fb7d69a98d97f2559028236bc5bd89ed 172.38.0.16:6379

slots: (0 slots) slave

replicates 70065d2863d927ac8ffc5388d57614f595bc7f4e

S: 72517d5f4a5f3b50992bb3870b941d63ae2fd434 172.38.0.15:6379

slots: (0 slots) slave

replicates 13b3f0c9c5d0fe341dbd52e772b894bde94742b3

M: 70065d2863d927ac8ffc5388d57614f595bc7f4e 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 12d7ad1452441e98f6484bc14497701e709141c4 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

/data #

/data # clear

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:355

cluster_stats_messages_pong_sent:383

cluster_stats_messages_sent:738

cluster_stats_messages_ping_received:378

cluster_stats_messages_pong_received:355

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:738

127.0.0.1:6379> cluster nodes

2bd10fb25f2d8bbddaf64445ddb645f7c35e6abd 172.38.0.14:6379@16379 slave 12d7ad1452441e98f6484bc14497701e709141c4 0 1622193388768 4 connected

43adde12fb7d69a98d97f2559028236bc5bd89ed 172.38.0.16:6379@16379 slave 70065d2863d927ac8ffc5388d57614f595bc7f4e 0 1622193389577 6 connected

13b3f0c9c5d0fe341dbd52e772b894bde94742b3 172.38.0.11:6379@16379 myself,master - 0 1622193388000 1 connected 0-5460

72517d5f4a5f3b50992bb3870b941d63ae2fd434 172.38.0.15:6379@16379 slave 13b3f0c9c5d0fe341dbd52e772b894bde94742b3 0 1622193389776 5 connected

70065d2863d927ac8ffc5388d57614f595bc7f4e 172.38.0.12:6379@16379 master - 0 1622193389576 2 connected 5461-10922

12d7ad1452441e98f6484bc14497701e709141c4 172.38.0.13:6379@16379 master - 0 1622193389000 3 connected 10923-16383

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
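The redirect for key a follows from the slot ranges listed by `cluster nodes`: slot 15495 falls inside 10923-16383, which belongs to 172.38.0.13. A minimal sketch of that lookup, using the `cluster nodes` output from this session as input; `owner_of_slot` is a hypothetical helper, and it assumes plain `start-end` ranges with no slots being migrated.

```shell
#!/bin/sh
# Sketch: find which master owns a hash slot, from saved `cluster nodes` output.
nodes_file=$(mktemp)
cat > "$nodes_file" << 'EOF'
2bd10fb25f2d8bbddaf64445ddb645f7c35e6abd 172.38.0.14:6379@16379 slave 12d7ad1452441e98f6484bc14497701e709141c4 0 1622193388768 4 connected
43adde12fb7d69a98d97f2559028236bc5bd89ed 172.38.0.16:6379@16379 slave 70065d2863d927ac8ffc5388d57614f595bc7f4e 0 1622193389577 6 connected
13b3f0c9c5d0fe341dbd52e772b894bde94742b3 172.38.0.11:6379@16379 myself,master - 0 1622193388000 1 connected 0-5460
72517d5f4a5f3b50992bb3870b941d63ae2fd434 172.38.0.15:6379@16379 slave 13b3f0c9c5d0fe341dbd52e772b894bde94742b3 0 1622193389776 5 connected
70065d2863d927ac8ffc5388d57614f595bc7f4e 172.38.0.12:6379@16379 master - 0 1622193389576 2 connected 5461-10922
12d7ad1452441e98f6484bc14497701e709141c4 172.38.0.13:6379@16379 master - 0 1622193389000 3 connected 10923-16383
EOF

# Fields 9 and later on a master line are its slot ranges (start-end or a single slot).
owner_of_slot() {
    awk -v slot="$1" '
        $3 ~ /master/ {
            for (i = 9; i <= NF; i++) {
                split($i, r, "-")
                lo = r[1] + 0
                hi = (r[2] == "" ? lo : r[2] + 0)
                if (slot + 0 >= lo && slot + 0 <= hi) {
                    sub(/@.*/, "", $2)   # drop the @bus-port suffix
                    print $2
                    exit
                }
            }
        }' "$nodes_file"
}

owner_of_slot 15495   # prints 172.38.0.13:6379
owner_of_slot 0       # prints 172.38.0.11:6379
```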

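# In another window, stop the redis-3 container (172.38.0.13, the master holding key a), then check from the original window whether a can still be read; its replica 172.38.0.14 takes over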

/data # redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

172.38.0.14:6379>