[TensorFlow] Convolution and Deconvolution with TensorFlow on Mac

Posted by Taily老段


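Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers convolution and deconvolution (transposed convolution) with TensorFlow on Mac; we hope you find it useful.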

Convolution

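tf.nn.conv2d is the TensorFlow function that implements convolution. The reference documentation does not describe it in much detail, yet it is one of the core building blocks of a convolutional neural network, so it is worth understanding well.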

```python
tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, name=None)
```

Apart from `name`, which sets the name of the op, the function takes five parameters:

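First parameter, `input`: the input image(s) to convolve. It must be a 4-D Tensor with shape [batch, in_height, in_width, in_channels], i.e. [number of images in a training batch, image height, image width, number of image channels], and its type must be a floating-point type such as float32 or float64.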

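Second parameter, `filter`: the convolution kernel of the CNN. It must be a Tensor with shape [filter_height, filter_width, in_channels, out_channels], i.e. [kernel height, kernel width, number of image channels, number of kernels], with the same type as `input`. Note that the third dimension, in_channels, must equal the fourth dimension of `input`.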

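Third parameter, `strides`: the step size of the window in each dimension of the input, given as a 1-D vector of length 4 (for NHWC input usually [1, stride, stride, 1]).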

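Fourth parameter, `padding`: a string that must be either "SAME" or "VALID". It determines how the borders are handled and therefore the size of the output (see the article linked below for details).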

Fifth parameter, `use_cudnn_on_gpu`: a bool that controls whether cuDNN acceleration is used; it defaults to true.

The function returns a Tensor; this output is what is usually called a feature map.
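To make the shape conventions above concrete, here is a minimal pure-Python sketch of what `tf.nn.conv2d` computes for a single image. It is an illustration under stated assumptions, not the real op: the function name `conv2d_nhwc` is hypothetical, the batch dimension is dropped, one stride is used for both height and width, and the SAME/VALID output-size and padding rules follow TensorFlow's documented formulas. Like TensorFlow, it computes a cross-correlation (the kernel is not flipped).

```python
def conv2d_nhwc(image, kernel, stride, padding):
    """Naive single-image 2-D convolution (cross-correlation, no kernel flip).

    image:   [in_height][in_width][in_channels]
    kernel:  [filter_height][filter_width][in_channels][out_channels]
    stride:  one step size used for both height and width
    padding: 'SAME' or 'VALID'
    Returns the feature map as [out_height][out_width][out_channels].
    """
    in_h, in_w, in_c = len(image), len(image[0]), len(image[0][0])
    k_h, k_w = len(kernel), len(kernel[0])
    # the filter's third dimension must match the input's channel dimension
    assert len(kernel[0][0]) == in_c, "filter in_channels != input in_channels"
    out_c = len(kernel[0][0][0])
    if padding == 'SAME':
        out_h = (in_h + stride - 1) // stride            # ceil(in / stride)
        out_w = (in_w + stride - 1) // stride
        pad_h = max((out_h - 1) * stride + k_h - in_h, 0)
        pad_w = max((out_w - 1) * stride + k_w - in_w, 0)
        top, left = pad_h // 2, pad_w // 2               # odd extra goes bottom/right
    elif padding == 'VALID':
        out_h = (in_h - k_h + stride) // stride          # ceil((in - k + 1) / stride)
        out_w = (in_w - k_w + stride) // stride
        top = left = 0
    else:
        raise ValueError("padding must be 'SAME' or 'VALID'")
    out = [[[0.0] * out_c for _ in range(out_w)] for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            for a in range(k_h):
                for b in range(k_w):
                    y, x = r * stride + a - top, c * stride + b - left
                    if 0 <= y < in_h and 0 <= x < in_w:  # outside = zero padding
                        for ci in range(in_c):
                            for co in range(out_c):
                                out[r][c][co] += image[y][x][ci] * kernel[a][b][ci][co]
    return out


image = [[[1.0], [2.0], [3.0]], [[4.0], [5.0], [6.0]], [[7.0], [8.0], [9.0]]]  # 3x3x1
kernel = [[[[1.0]], [[1.0]]], [[[1.0]], [[1.0]]]]                             # 2x2x1x1 of ones
print(conv2d_nhwc(image, kernel, 1, 'VALID'))  # [[[12.0], [16.0]], [[24.0], [28.0]]]
```

With 'SAME' and stride 1 the same call returns a 3x3 map instead, because the missing border is padded with zeros (here only on the bottom and right, since the total padding of 1 is odd).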

For details, see: https://blog.csdn.net/mao_xiao_feng/article/details/53444333

Deconvolution (transposed convolution)

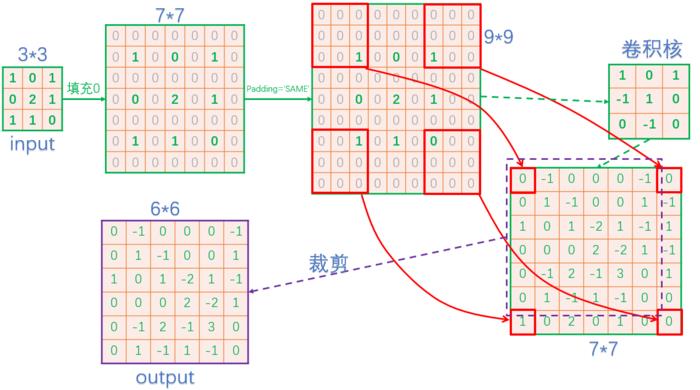

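Suppose we now perform a deconvolution with stride=2 that enlarges a 3*3 input to 6*6. In TensorFlow the deconvolution proceeds as follows (other frameworks may handle the cropping step differently):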

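Roughly: first fill in zeros, then run a convolution (which works exactly like the ordinary convolution described above), and finally crop the result.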
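The three steps (fill with zeros, convolve, crop) can be sketched in pure Python for a single channel. This is an illustration under assumptions, not TensorFlow's implementation: the name `transpose_conv2d` is hypothetical, `pad_top`/`pad_left` are the numbers of rows/columns cropped from the top/left of the full result (for TensorFlow's SAME padding we assume they equal the top/left padding the matching forward convolution would use, which reproduces the outputs shown later in this article), and positions past the end of the full result are filled with zeros. Note that the convolution step uses the kernel rotated by 180 degrees; only with that rotation does the zero-fill view agree with the view in which each input pixel adds a scaled copy of the kernel to the output.

```python
def transpose_conv2d(x, k, stride, out_h, out_w, pad_top=0, pad_left=0):
    """Single-channel transposed convolution via zero-filling, convolution and cropping.

    x: input as a 2-D list [H][W]; k: kernel as a 2-D list [kh][kw]
    pad_top / pad_left: rows / columns cropped from the top / left of the full result
    """
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    # Step 1: fill zeros. Put (stride - 1) zeros between neighbouring input values
    # and a border of (kh - 1) / (kw - 1) zeros around the whole grid.
    ph = (h - 1) * stride + 1 + 2 * (kh - 1)
    pw = (w - 1) * stride + 1 + 2 * (kw - 1)
    padded = [[0.0] * pw for _ in range(ph)]
    for i in range(h):
        for j in range(w):
            padded[i * stride + kh - 1][j * stride + kw - 1] = x[i][j]
    # Step 2: ordinary stride-1 convolution with the kernel rotated by 180 degrees
    flipped = [row[::-1] for row in k[::-1]]
    full_h, full_w = ph - kh + 1, pw - kw + 1  # = (h - 1) * stride + kh, ...
    full = [[sum(padded[r + a][c + b] * flipped[a][b]
                 for a in range(kh) for b in range(kw))
             for c in range(full_w)]
            for r in range(full_h)]
    # Step 3: crop to the requested size; positions past the end of full are 0
    return [[full[r + pad_top][c + pad_left]
             if r + pad_top < full_h and c + pad_left < full_w else 0.0
             for c in range(out_w)]
            for r in range(out_h)]


# 3x3 -> 6x6 with stride 2, using the 2x2 kernel [[1, 0], [0, 1]] as an example
# (no cropping is needed for this size); prints 6 rows, the first being
# [1.0, 0.0, 2.0, 0.0, 3.0, 0.0]
x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
for row in transpose_conv2d(x, [[1.0, 0.0], [0.0, 1.0]], 2, 6, 6):
    print(row)
```

The zero-fill formulation is easy to read but wasteful, since most multiplications hit inserted zeros; real implementations compute the same result without materializing the padded grid.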

In practice the number of output channels is set to more than one, which makes the deconvolution closer to real use cases.

For details, see: https://blog.csdn.net/jacke121/article/details/80207796
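With several channels, the filter of `tf.nn.conv2d_transpose` is laid out as [height, width, output_channels, input_channels]. The pure-Python sketch below uses the equivalent "scatter" view of the same computation: each input pixel adds a copy of the kernel, scaled by its value, to the output at offset (i * stride, j * stride), shifted by the cropped amount. The function name `conv2d_transpose_nhwc` and the all-ones second output channel in the usage example are hypothetical, chosen only for illustration; the batch dimension is dropped.

```python
def conv2d_transpose_nhwc(x, f, stride, out_h, out_w, pad_top=0, pad_left=0):
    """Naive single-image transposed convolution.

    x: input as [H][W][C_in]
    f: filter as [kh][kw][C_out][C_in]  (conv2d_transpose layout: out before in)
    Returns the output as [out_h][out_w][C_out].
    """
    h, w, c_in = len(x), len(x[0]), len(x[0][0])
    kh, kw, c_out = len(f), len(f[0]), len(f[0][0])
    assert len(f[0][0][0]) == c_in, "last filter dimension must equal input channels"
    out = [[[0.0] * c_out for _ in range(out_w)] for _ in range(out_h)]
    for i in range(h):
        for j in range(w):
            # scatter input pixel (i, j) through every kernel tap (a, b)
            for a in range(kh):
                for b in range(kw):
                    r, c = i * stride + a - pad_top, j * stride + b - pad_left
                    if 0 <= r < out_h and 0 <= c < out_w:  # drop cropped positions
                        for co in range(c_out):
                            for ci in range(c_in):
                                out[r][c][co] += x[i][j][ci] * f[a][b][co][ci]
    return out


# Usage: a 3x3x1 input and a 2x2 filter with two output channels
x = [[[1.0], [2.0], [3.0]], [[4.0], [5.0], [6.0]], [[7.0], [8.0], [9.0]]]
k0 = [[1.0, 0.0], [0.0, 1.0]]  # output channel 0
k1 = [[1.0, 1.0], [1.0, 1.0]]  # output channel 1: hypothetical all-ones kernel
f = [[[[k0[a][b]], [k1[a][b]]] for b in range(2)] for a in range(2)]  # shape [2][2][2][1]
y = conv2d_transpose_nhwc(x, f, 2, 6, 6)
print([px[1] for px in y[0]])  # [1.0, 1.0, 2.0, 2.0, 3.0, 3.0]
```

With stride 2, the all-ones 2x2 kernel simply copies each input value into a 2x2 block, so that channel behaves like nearest-neighbour upsampling, while channel 0 produces the sparse diagonal pattern.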

Example: a deconvolution from 1x3x3x1 to 1x5x5x1 with a kernel size of 2:

```python
# coding: utf-8
# Written for TensorFlow 1.x (tf.Session was removed in TensorFlow 2.x)
import os

# Hide TensorFlow's C++ INFO/WARNING logs; must be set before importing tensorflow
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf

# the format of value: [NHWC]
value = tf.reshape(tf.constant([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]), [1, 3, 3, 1])

# the format of filter: [height, width, output_channels, input_channels]
kernel = tf.reshape(tf.constant([[1., 0.], [0., 1.]]), [2, 2, 1, 1])

# the format of output_shape: [NHWC]
output_shape = [1, 5, 5, 1]

# the format of strides: [1, stride, stride, 1]
strides = [1, 2, 2, 1]

padding = 'SAME'

# define the transpose conv op
transpose_conv = tf.nn.conv2d_transpose(value=value, filter=kernel, output_shape=output_shape,
                                        strides=strides, padding=padding)

with tf.Session() as sess:
    print(sess.run(transpose_conv))
```

Running the same statements in an interactive session (Python 2.7.15 from Anaconda on macOS, in the conda environment `py27tf`) without setting `TF_CPP_MIN_LOG_LEVEL` prints two INFO messages when the session is created, followed by the result:

```
>>> sess = tf.Session()
2018-12-29 16:13:44.769841: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2018-12-29 16:13:44.770191: I tensorflow/core/common_runtime/process_util.cc:69] Creating new thread pool with default inter op setting: 8. Tune using inter_op_parallelism_threads for best performance.
>>> sess.run(transpose_conv)
array([[[[1.],
         [0.],
         [2.],
         [0.],
         [3.]],

        [[0.],
         [1.],
         [0.],
         [2.],
         [0.]],

        [[4.],
         [0.],
         [5.],
         [0.],
         [6.]],

        [[0.],
         [4.],
         [0.],
         [5.],
         [0.]],

        [[7.],
         [0.],
         [8.],
         [0.],
         [9.]]]], dtype=float32)
```
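The pattern in this output can be derived by hand. Assuming TensorFlow's SAME rule for the matching forward convolution (output 5, kernel 2, stride 2), the total padding is $\max((3-1)\cdot 2 + 2 - 5,\ 0) = 1$, of which $\lfloor 1/2 \rfloor = 0$ falls on the top/left, so nothing is cropped there. Each input value $x_{i,j}$ adds $x_{i,j} f_{a,b}$ to $y_{2i+a,\,2j+b}$, and only $f_{0,0} = f_{1,1} = 1$ are non-zero (indices start at 0):

$$
\begin{aligned}
y_{2i,\,2j} &= x_{i,j}, \qquad y_{2i+1,\,2j+1} = x_{i,j},\\
y_{p,q} &= 0 \quad \text{for all other positions,}
\end{aligned}
$$

and the contributions that would land in row or column 5 fall outside the 5x5 output and are dropped.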

Example: a deconvolution from 1x3x3x1 to 1x5x5x1 with a kernel size of 3:

```python
# Written for TensorFlow 1.x (tf.Session was removed in TensorFlow 2.x)
import tensorflow as tf

# the format of value: [NHWC]
value = tf.reshape(tf.constant([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]), [1, 3, 3, 1])

# the format of filter: [height, width, output_channels, input_channels]
kernel = tf.reshape(tf.constant([[1., 0., 0.], [0., 1., 0.], [0., 0., 1.]]), [3, 3, 1, 1])

# the format of output_shape: [NHWC]
output_shape = [1, 5, 5, 1]

# the format of strides: [1, stride, stride, 1]
strides = [1, 2, 2, 1]

padding = 'SAME'

# define the transpose conv op
transpose_conv = tf.nn.conv2d_transpose(value=value, filter=kernel, output_shape=output_shape,
                                        strides=strides, padding=padding)

with tf.Session() as sess:
    print(sess.run(transpose_conv))
```

Running the same statements in the interactive session gives:

```
>>> sess = tf.Session()
2018-12-29 16:17:56.936252: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2018-12-29 16:17:56.936597: I tensorflow/core/common_runtime/process_util.cc:69] Creating new thread pool with default inter op setting: 8. Tune using inter_op_parallelism_threads for best performance.
>>> sess.run(transpose_conv)
array([[[[ 1.],
         [ 0.],
         [ 2.],
         [ 0.],
         [ 3.]],

        [[ 0.],
         [ 6.],
         [ 0.],
         [ 8.],
         [ 0.]],

        [[ 4.],
         [ 0.],
         [ 5.],
         [ 0.],
         [ 6.]],

        [[ 0.],
         [12.],
         [ 0.],
         [14.],
         [ 0.]],

        [[ 7.],
         [ 0.],
         [ 8.],
         [ 0.],
         [ 9.]]]], dtype=float32)
```
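The values 6, 8, 12 and 14 in this output come from overlapping kernel copies. Under the same SAME rule assumed above, the total padding is $\max((3-1)\cdot 2 + 3 - 5,\ 0) = 2$, so one row and one column are cropped at the top/left, and each $x_{i,j}$ adds $x_{i,j} f_{a,b}$ to $y_{2i+a-1,\,2j+b-1}$. With $f$ the 3x3 identity (indices start at 0), every even position receives a single term, $y_{2i,\,2j} = x_{i,j} f_{1,1} = x_{i,j}$, while the odd diagonal positions receive two:

$$
\begin{aligned}
y_{1,1} &= x_{0,0} f_{2,2} + x_{1,1} f_{0,0} = 1 + 5 = 6\\
y_{1,3} &= x_{0,1} f_{2,2} + x_{1,2} f_{0,0} = 2 + 6 = 8\\
y_{3,1} &= x_{1,0} f_{2,2} + x_{2,1} f_{0,0} = 4 + 8 = 12\\
y_{3,3} &= x_{1,1} f_{2,2} + x_{2,2} f_{0,0} = 5 + 9 = 14
\end{aligned}
$$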

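When using this op in a network, the most important thing to watch is the order of output_channels and input_channels in `filter`: for `tf.nn.conv2d_transpose` it is [height, width, output_channels, input_channels], the reverse of the last two dimensions of a `tf.nn.conv2d` filter. TensorFlow derives from `stride` how many zeros to insert between the input values, so as long as padding, output size, input size and stride are mutually consistent, the op can be used as is. Once the meaning of stride is clear, you can shape the network however you need.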
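The consistency requirement between padding, output size, input size and stride can be checked before building the graph. This sketch assumes that TensorFlow accepts an `output_shape` exactly when a forward `tf.nn.conv2d` with the same kernel size, strides and padding would map it back to the input size; the helper names `conv_output_size` and `valid_transpose_sizes` are hypothetical, and only one spatial dimension is handled.

```python
def conv_output_size(in_size, k, stride, padding):
    """Output length of a forward tf.nn.conv2d along one spatial dimension."""
    if padding == 'SAME':
        return (in_size + stride - 1) // stride          # ceil(in / stride)
    if padding == 'VALID':
        return (in_size - k + stride) // stride          # ceil((in - k + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


def valid_transpose_sizes(in_size, k, stride, padding):
    """Output lengths a transposed convolution may request for a given input length.

    A length o is accepted when the forward convolution of o gives back in_size.
    No accepted length exceeds in_size * stride + k - 1, so the search stops there.
    """
    return [o for o in range(1, in_size * stride + k)
            if conv_output_size(o, k, stride, padding) == in_size]


print(valid_transpose_sizes(3, 2, 2, 'SAME'))   # [5, 6]
print(valid_transpose_sizes(3, 3, 2, 'VALID'))  # [7, 8]
```

For the 3 -> 5 examples in this article (stride 2, SAME), both 5 and 6 are acceptable output sizes, which is why a 3*3 input can be expanded to either 5*5 or 6*6.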

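If log messages like the following appear (they are INFO-level messages, not errors), add the code below at the top of the script, before `import tensorflow`: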

```
2018-12-29 16:05:43.918087: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2018-12-29 16:05:43.918440: I tensorflow/core/common_runtime/process_util.cc:69] Creating new thread pool with default inter op setting: 8. Tune using inter_op_parallelism_threads for best performance.
```

```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
```

The possible values of `TF_CPP_MIN_LOG_LEVEL` are:

```python
import os
os.environ["TF_CPP_MIN_LOG_LEVEL"] = '0'  # default: show all messages
os.environ["TF_CPP_MIN_LOG_LEVEL"] = '1'  # hide INFO messages
os.environ["TF_CPP_MIN_LOG_LEVEL"] = '2'  # hide INFO and WARNING, show only ERROR
os.environ["TF_CPP_MIN_LOG_LEVEL"] = '3'  # hide INFO, WARNING and ERROR
```
