ijkplayer Overall Structure Summary

Posted by 后端码匠


ijkplayer series

[ijkplayer] read_thread Flow Walkthrough (Part 2)

[ijkplayer] Overall Structure Summary (Part 1)

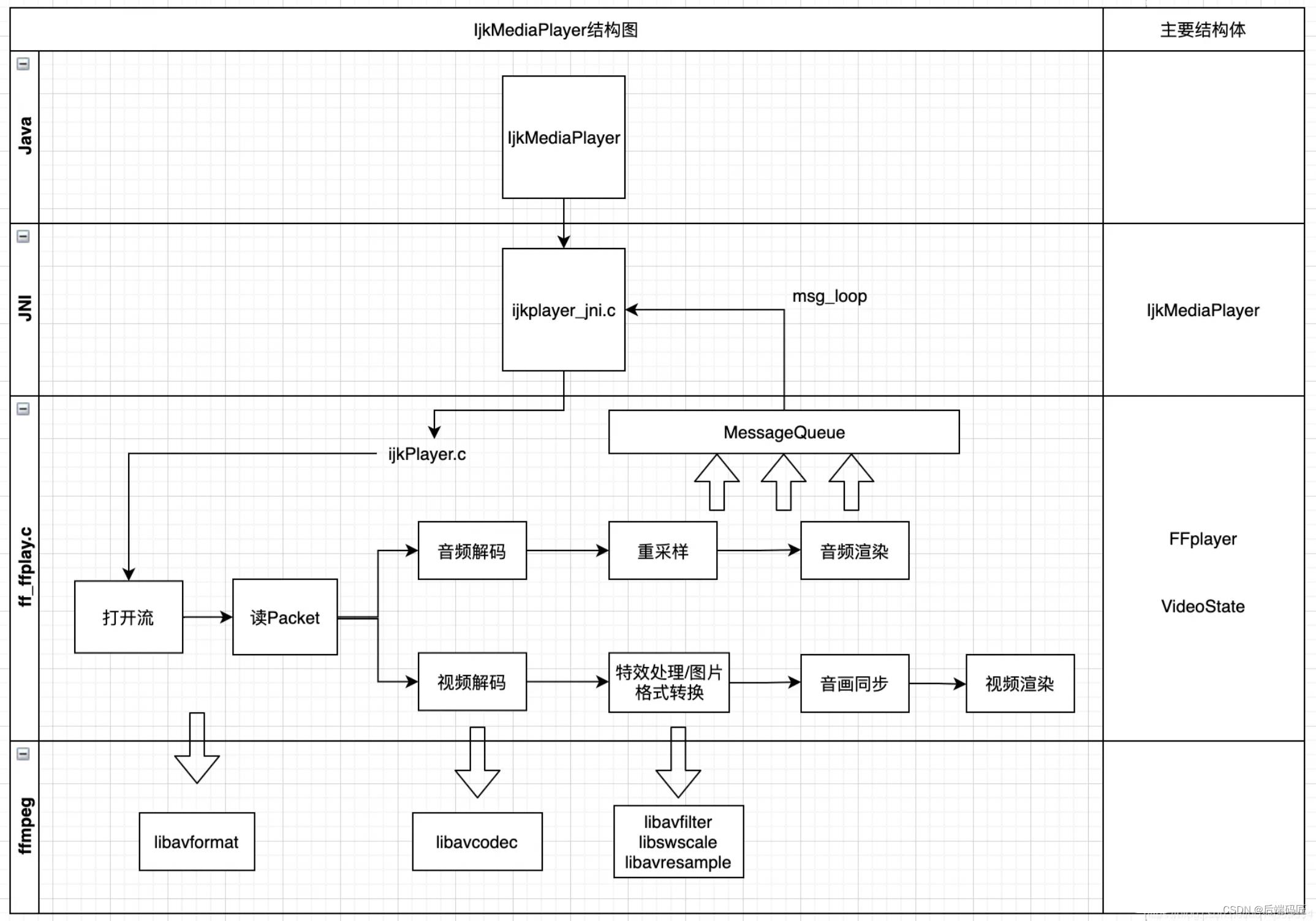

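ijkplayer is layered as Java layer, JNI layer, and C layer. The figure above shows the overall flow of the player; the main structs are described below.

Main structs

During initialization in native_setup, IjkMediaPlayer, FFPlayer, and IJKFF_Pipeline are created. During the prepare phase, stream_open creates VideoState. These structs run through essentially the entire flow and are very important.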

IjkMediaPlayer

Represents the native-layer player and is bound one-to-one with the Java-layer player. It wraps the entry point from Java into C.

    struct IjkMediaPlayer {
        volatile int ref_count;
        pthread_mutex_t mutex;
        FFPlayer *ffplayer;

        int (*msg_loop)(void*);
        SDL_Thread *msg_thread;
        SDL_Thread _msg_thread;

        int mp_state;
        char *data_source;
        void *weak_thiz;

        int restart;
        int restart_from_beginning;
        int seek_req;
    };
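
The ref_count field, together with the ijkmp_dec_ref_p(&mp) calls that appear in the setup code, points to reference counting: each holder of the player keeps one reference, and the player is freed when the last one is dropped. The following is a minimal sketch of that idea only, not ijkplayer's implementation. DemoPlayer, demo_player_create, demo_player_inc_ref, demo_player_dec_ref_p, and demo_destroy_count are hypothetical names, and C11 atomics are an assumption made for portability.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical stand-in for IjkMediaPlayer; only the counter matters here. */
typedef struct DemoPlayer {
    atomic_int ref_count;
} DemoPlayer;

/* Counts how many players were actually freed (for illustration only). */
int demo_destroy_count = 0;

/* The creator receives the first reference. */
DemoPlayer *demo_player_create(void)
{
    DemoPlayer *mp = calloc(1, sizeof(*mp));
    if (!mp)
        return NULL;
    atomic_init(&mp->ref_count, 1);
    return mp;
}

/* A second owner (for example, the Java-side binding) takes its own reference. */
void demo_player_inc_ref(DemoPlayer *mp)
{
    assert(mp);
    atomic_fetch_add(&mp->ref_count, 1);
}

/* Drops one reference, frees the player on the last one,
 * and always clears the caller's pointer. */
void demo_player_dec_ref_p(DemoPlayer **pmp)
{
    if (!pmp || !*pmp)
        return;
    if (atomic_fetch_sub(&(*pmp)->ref_count, 1) == 1) {
        free(*pmp);
        demo_destroy_count++;
    }
    *pmp = NULL;
}
```

With this shape, a setup function can unconditionally drop its local reference on exit: if another owner took a reference in between, the object survives; if creation failed halfway, the same call tears it down.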

FFPlayer

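The concrete player (the internal player), covering codec handling, output, and so on. Once execution enters ff_ffplay.c, everything operates on FFPlayer. It holds the VideoState.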

    typedef struct FFPlayer {
        VideoState *is;

        /* extra fields */
        SDL_Aout *aout;
        SDL_Vout *vout;
        struct IJKFF_Pipeline *pipeline;
        struct IJKFF_Pipenode *node_vdec;

        MessageQueue msg_queue;
        /* ... remaining fields omitted ... */
    } FFPlayer;

VideoState

VideoState is created in stream_open and holds all of the state for a playback session.

    typedef struct VideoState {
        Clock audclk;
        Clock vidclk;
        Clock extclk;

        FrameQueue pictq;
        FrameQueue subpq;
        FrameQueue sampq;

        Decoder auddec;
        Decoder viddec;
        Decoder subdec;

        PacketQueue audioq;
        PacketQueue subtitleq;
        PacketQueue videoq;

        int seek_req;
        double frame_timer;
        int abort_request;
        int force_refresh;
        int paused;
        /* ... remaining fields omitted ... */
    } VideoState;

IJKFF_Pipeline

ffpipeline wraps the video decoder and the audio output, and is implemented through function pointers.

    // In ffpipeline_android.c
    typedef struct IJKFF_Pipeline_Opaque {
        FFPlayer *ffp;
        SDL_mutex *surface_mutex;
        jobject jsurface;
        volatile bool is_surface_need_reconfigure;

        bool (*mediacodec_select_callback)(void *opaque, ijkmp_mediacodecinfo_context *mcc);
        void *mediacodec_select_callback_opaque;

        SDL_Vout *weak_vout;

        float left_volume;
        float right_volume;
    } IJKFF_Pipeline_Opaque;

    // In ff_ffpipeline.h
    typedef struct IJKFF_Pipeline_Opaque IJKFF_Pipeline_Opaque;
    typedef struct IJKFF_Pipeline IJKFF_Pipeline;
    struct IJKFF_Pipeline {
        SDL_Class *opaque_class;
        IJKFF_Pipeline_Opaque *opaque;

        void (*func_destroy) (IJKFF_Pipeline *pipeline);
        IJKFF_Pipenode *(*func_open_video_decoder) (IJKFF_Pipeline *pipeline, FFPlayer *ffp);
        SDL_Aout *(*func_open_audio_output) (IJKFF_Pipeline *pipeline, FFPlayer *ffp);
        IJKFF_Pipenode *(*func_init_video_decoder) (IJKFF_Pipeline *pipeline, FFPlayer *ffp);
        int (*func_config_video_decoder) (IJKFF_Pipeline *pipeline, FFPlayer *ffp);
    };

    IJKFF_Pipeline *ffpipeline_alloc(SDL_Class *opaque_class, size_t opaque_size);
    void ffpipeline_free(IJKFF_Pipeline *pipeline);
    void ffpipeline_free_p(IJKFF_Pipeline **pipeline);

    // Open the video decoder
    IJKFF_Pipenode *ffpipeline_open_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp);

    // Open the audio output
    SDL_Aout *ffpipeline_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp);

    // Asynchronously initialize the video decoder node (IJKFF_Pipenode)
    IJKFF_Pipenode* ffpipeline_init_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp);

    // Configure the asynchronously initialized video decoder
    int ffpipeline_config_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp);
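
To make the function-pointer design concrete, here is a small sketch of the same pattern: a generic pipeline struct with an opaque pointer, a generic wrapper that dispatches through a function pointer, and one platform-specific implementation that fills the pointers in. All Demo* names, the return codes, and the use_hw_decoder flag are hypothetical; the real ijkplayer functions take an FFPlayer and return IJKFF_Pipenode or SDL_Aout objects.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct DemoPipeline DemoPipeline;

/* Generic part: knows nothing about the platform behind it. */
struct DemoPipeline {
    void *opaque;                                   /* platform-private state */
    void (*func_destroy)(DemoPipeline *pipeline);   /* optional cleanup hook */
    int  (*func_open_video_decoder)(DemoPipeline *pipeline);
};

/* Allocates a zeroed pipeline plus a zeroed opaque area of the requested size. */
DemoPipeline *demo_pipeline_alloc(size_t opaque_size)
{
    DemoPipeline *p = calloc(1, sizeof(*p));
    if (!p)
        return NULL;
    p->opaque = calloc(1, opaque_size);
    if (!p->opaque) {
        free(p);
        return NULL;
    }
    return p;
}

void demo_pipeline_free(DemoPipeline *p)
{
    if (!p)
        return;
    if (p->func_destroy)
        p->func_destroy(p);
    free(p->opaque);
    free(p);
}

/* Generic wrapper: callers never touch the function pointer directly. */
int demo_pipeline_open_video_decoder(DemoPipeline *p)
{
    return p->func_open_video_decoder(p);
}

/* Platform part: a hypothetical "Android" implementation. */
typedef struct DemoAndroidOpaque {
    int use_hw_decoder;
} DemoAndroidOpaque;

enum { DEMO_DECODER_SOFTWARE = 1, DEMO_DECODER_HARDWARE = 2 };

static int demo_android_open_video_decoder(DemoPipeline *p)
{
    DemoAndroidOpaque *opaque = p->opaque;
    return opaque->use_hw_decoder ? DEMO_DECODER_HARDWARE : DEMO_DECODER_SOFTWARE;
}

DemoPipeline *demo_pipeline_create_android(int use_hw_decoder)
{
    DemoPipeline *p = demo_pipeline_alloc(sizeof(DemoAndroidOpaque));
    if (!p)
        return NULL;
    ((DemoAndroidOpaque *)p->opaque)->use_hw_decoder = use_hw_decoder;
    p->func_open_video_decoder = demo_android_open_video_decoder;
    return p;
}
```

The benefit of this layout is that the shared player code only ever calls the generic wrappers, so a different platform can supply its own opaque struct and function pointers without touching the callers.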

Initialization flow

native_setup

    static void IjkMediaPlayer_native_setup(JNIEnv *env, jobject thiz, jobject weak_this)
    {
        MPTRACE("%s\n", __func__);
        IjkMediaPlayer *mp = ijkmp_android_create(message_loop);
        JNI_CHECK_GOTO(mp, env, "java/lang/OutOfMemoryError", "mpjni: native_setup: ijkmp_create() failed", LABEL_RETURN);

        jni_set_media_player(env, thiz, mp);
        ijkmp_set_weak_thiz(mp, (*env)->NewGlobalRef(env, weak_this));
        ijkmp_set_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
        ijkmp_set_ijkio_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
        ijkmp_android_set_mediacodec_select_callback(mp, mediacodec_select_callback, ijkmp_get_weak_thiz(mp));

    LABEL_RETURN:
        ijkmp_dec_ref_p(&mp);
    }

    // ijkmp_android_create
    IjkMediaPlayer *ijkmp_android_create(int(*msg_loop)(void*))
    {
        // Initialize the IjkMediaPlayer struct
        IjkMediaPlayer *mp = ijkmp_create(msg_loop);
        if (!mp)
            goto fail;

        // Create the SDL_Vout
        mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface();
        if (!mp->ffplayer->vout)
            goto fail;

        // Create the IJKFF_Pipeline
        mp->ffplayer->pipeline = ffpipeline_create_from_android(mp->ffplayer);
        if (!mp->ffplayer->pipeline)
            goto fail;

        // Bind the vout to the pipeline
        ffpipeline_set_vout(mp->ffplayer->pipeline, mp->ffplayer->vout);

        return mp;

    fail:
        ijkmp_dec_ref_p(&mp);
        return NULL;
    }

IjkMediaPlayer_setDataSource

    int ijkmp_set_data_source(IjkMediaPlayer *mp, const char *url)
    {
        assert(mp);
        assert(url);
        MPTRACE("ijkmp_set_data_source(url=\"%s\")\n", url);
        pthread_mutex_lock(&mp->mutex);
        int retval = ijkmp_set_data_source_l(mp, url);
        pthread_mutex_unlock(&mp->mutex);
        MPTRACE("ijkmp_set_data_source(url=\"%s\")=%d\n", url, retval);
        return retval;
    }

    static int ijkmp_set_data_source_l(IjkMediaPlayer *mp, const char *url)
    {
        assert(mp);
        assert(url);

        // MPST_RET_IF_EQ(mp->mp_state, MP_STATE_IDLE);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_INITIALIZED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ASYNC_PREPARING);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PREPARED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STARTED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_PAUSED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_COMPLETED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_STOPPED);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_ERROR);
        MPST_RET_IF_EQ(mp->mp_state, MP_STATE_END);

        freep((void**)&mp->data_source);
        mp->data_source = strdup(url);
        if (!mp->data_source)
            return EIJK_OUT_OF_MEMORY;

        ijkmp_change_state_l(mp, MP_STATE_INITIALIZED);
        return 0;
    }
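
The block of MPST_RET_IF_EQ lines acts as a state guard: every state in which setting a data source is not allowed returns early, and only the idle state (whose check is commented out) falls through. A reduced sketch of that guard follows. The DEMO_* names, the error values, and the three-state subset are hypothetical, and the macro body is an assumption about how such a guard is typically written rather than a copy of ijkplayer's definition.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

enum DemoState {
    DEMO_STATE_IDLE,
    DEMO_STATE_INITIALIZED,
    DEMO_STATE_PREPARED,
    DEMO_STATE_STARTED
};

#define DEMO_ERR_OUT_OF_MEMORY  (-2)   /* hypothetical error code */
#define DEMO_ERR_INVALID_STATE  (-3)   /* hypothetical error code */

/* Return from the enclosing function if the state is a rejected one. */
#define DEMO_RET_IF_EQ(real, rejected) \
    do { if ((real) == (rejected)) return DEMO_ERR_INVALID_STATE; } while (0)

typedef struct DemoStatePlayer {
    enum DemoState state;
    char *data_source;
} DemoStatePlayer;

int demo_set_data_source(DemoStatePlayer *mp, const char *url)
{
    assert(mp);
    assert(url);

    /* Only DEMO_STATE_IDLE is allowed to fall through. */
    DEMO_RET_IF_EQ(mp->state, DEMO_STATE_INITIALIZED);
    DEMO_RET_IF_EQ(mp->state, DEMO_STATE_PREPARED);
    DEMO_RET_IF_EQ(mp->state, DEMO_STATE_STARTED);

    /* Replace any previous URL with a private copy of the new one. */
    free(mp->data_source);
    mp->data_source = NULL;
    char *copy = malloc(strlen(url) + 1);
    if (!copy)
        return DEMO_ERR_OUT_OF_MEMORY;
    strcpy(copy, url);
    mp->data_source = copy;

    mp->state = DEMO_STATE_INITIALIZED;
    return 0;
}
```

Calling demo_set_data_source a second time without resetting the player returns DEMO_ERR_INVALID_STATE and leaves the stored URL untouched, which is the behavior the guard is meant to enforce.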

IjkMediaPlayer_prepareAsync

Call chain: IjkMediaPlayer_prepareAsync -> ijkmp_prepare_async_l -> ffp_prepare_async_l -> stream_open

stream_open

stream_open sets up all of the state that the rest of the flow needs.

    static VideoState *stream_open(FFPlayer *ffp, const char *filename, AVInputFormat *iformat)
    {
        assert(!ffp->is);
        VideoState *is;

        is = av_mallocz(sizeof(VideoState));
        if (!is)
            return NULL;
        is->filename = av_strdup(filename);
        if (!is->filename)
            goto fail;
        is->iformat = iformat;
        is->ytop    = 0;
        is->xleft   = 0;
    #if defined(__ANDROID__)
        if (ffp->soundtouch_enable) {
            is->handle = ijk_soundtouch_create();
        }
    #endif

        /* start video display */
        if (frame_queue_init(&is->pictq, &is->videoq, ffp->pictq_size, 1) < 0)
            goto fail;
        if (frame_queue_init(&is->subpq, &is->subtitleq, SUBPICTURE_QUEUE_SIZE, 0) < 0)
            goto fail;
        if (frame_queue_init(&is->sampq, &is->audioq, SAMPLE_QUEUE_SIZE, 1) < 0)
            goto fail;

        if (packet_queue_init(&is->videoq) < 0 ||
            packet_queue_init(&is->audioq) < 0 ||
            packet_queue_init(&is->subtitleq) < 0)
            goto fail;

        if (!(is->continue_read_thread = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            goto fail;
        }

        if (!(is->video_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        if (!(is->audio_accurate_seek_cond = SDL_CreateCond())) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateCond(): %s\n", SDL_GetError());
            ffp->enable_accurate_seek = 0;
        }

        init_clock(&is->vidclk, &is->videoq.serial);
        init_clock(&is->audclk, &is->audioq.serial);
        init_clock(&is->extclk, &is->extclk.serial);
        is->audio_clock_serial = -1;
        if (ffp->startup_volume < 0)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d < 0, setting to 0\n", ffp->startup_volume);
        if (ffp->startup_volume > 100)
            av_log(NULL, AV_LOG_WARNING, "-volume=%d > 100, setting to 100\n", ffp->startup_volume);
        ffp->startup_volume = av_clip(ffp->startup_volume, 0, 100);
        ffp->startup_volume = av_clip(SDL_MIX_MAXVOLUME * ffp->startup_volume / 100, 0, SDL_MIX_MAXVOLUME);
        is->audio_volume = ffp->startup_volume;
        is->muted = 0;
        is->av_sync_type = ffp->av_sync_type;

        is->play_mutex = SDL_CreateMutex();
        is->accurate_seek_mutex = SDL_CreateMutex();
        ffp->is = is;
        is->pause_req = !ffp->start_on_prepared;

        is->video_refresh_tid = SDL_CreateThreadEx(&is->_video_refresh_tid, video_refresh_thread, ffp, "ff_vout");
        if (!is->video_refresh_tid) {
            av_freep(&ffp->is);
            return NULL;
        }

        is->initialized_decoder = 0;
        is->read_tid = SDL_CreateThreadEx(&is->_read_tid, read_thread, ffp, "ff_read");
        if (!is->read_tid) {
            av_log(NULL, AV_LOG_FATAL, "SDL_CreateThread(): %s\n", SDL_GetError());
            goto fail;
        }

        if (ffp->async_init_decoder && !ffp->video_disable && ffp->video_mime_type && strlen(ffp->video_mime_type) > 0
            && ffp->mediacodec_default_name && strlen(ffp->mediacodec_default_name) > 0) {
            if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2) {
                decoder_init(&is->viddec, NULL, &is->videoq, is->continue_read_thread);
                ffp->node_vdec = ffpipeline_init_video_decoder(ffp->pipeline, ffp);
            }
        }
        is->initialized_decoder = 1;

        return is;
    fail:
        is->initialized_decoder = 1;
        is->abort_request = true;
        if (is->video_refresh_tid)
            SDL_WaitThread(is->video_refresh_tid, NULL);
        stream_close(ffp);
        return NULL;
    }
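
One detail in stream_open that is easy to misread is the startup volume: it is first clipped to a 0..100 percentage and then rescaled into the mixer range 0..SDL_MIX_MAXVOLUME using integer arithmetic. The sketch below reproduces just that arithmetic. demo_clip and demo_startup_volume_to_mix are hypothetical helpers, and the value 128 for the mixer maximum is an assumption taken from SDL's usual definition.

```c
#include <assert.h>

#define DEMO_MIX_MAXVOLUME 128   /* assumed to match SDL_MIX_MAXVOLUME */

/* Same contract as av_clip: force v into the range [lo, hi]. */
int demo_clip(int v, int lo, int hi)
{
    if (v < lo)
        return lo;
    if (v > hi)
        return hi;
    return v;
}

/* Maps a user-facing percentage (0..100) to a mixer volume (0..128). */
int demo_startup_volume_to_mix(int percent)
{
    percent = demo_clip(percent, 0, 100);
    /* Integer division truncates, e.g. 128 * 33 / 100 == 42. */
    return demo_clip(DEMO_MIX_MAXVOLUME * percent / 100, 0, DEMO_MIX_MAXVOLUME);
}
```

Under these assumptions, 50 maps to 64, 100 maps to 128, and out-of-range inputs such as -5 or 250 are clamped to 0 and 128 respectively.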

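video_refresh_thread is mainly responsible for audio-video synchronization and for driving rendering and display.

read_thread opens the stream, creates the audio and video decoding threads, and reads packets.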
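
Synchronization is built on the Clock objects that stream_open initializes with init_clock(&is->vidclk, &is->videoq.serial) and friends. The sketch below follows the general ffplay-style clock design as an illustration: a clock stores the drift between the last presentation timestamp and wall time, and it becomes invalid when the serial of its packet queue changes (for example, after a seek). The Demo* names are hypothetical, and the current time is passed in explicitly so the example stays deterministic; the real code reads a system clock instead.

```c
#include <assert.h>
#include <math.h>

typedef struct DemoClock {
    double pts;               /* last presentation timestamp, in seconds */
    double pts_drift;         /* pts minus the wall time at which it was set */
    double last_updated;      /* wall time of the last update */
    double speed;             /* playback speed, 1.0 for normal */
    int serial;               /* queue serial this pts belongs to */
    int paused;
    const int *queue_serial;  /* live serial of the associated packet queue */
} DemoClock;

void demo_set_clock_at(DemoClock *c, double pts, int serial, double now)
{
    c->pts = pts;
    c->last_updated = now;
    c->pts_drift = pts - now;
    c->serial = serial;
}

void demo_init_clock(DemoClock *c, const int *queue_serial)
{
    c->speed = 1.0;
    c->paused = 0;
    c->queue_serial = queue_serial;
    demo_set_clock_at(c, NAN, -1, 0.0);   /* invalid until first update */
}

/* Returns the clock position at wall time `now`, or NAN if it is obsolete. */
double demo_get_clock(const DemoClock *c, double now)
{
    if (*c->queue_serial != c->serial)
        return NAN;
    if (c->paused)
        return c->pts;
    return c->pts_drift + now - (now - c->last_updated) * (1.0 - c->speed);
}

/* Positive result: video is ahead of audio; negative: video is late. */
double demo_av_diff(const DemoClock *vid, const DemoClock *aud, double now)
{
    return demo_get_clock(vid, now) - demo_get_clock(aud, now);
}
```

A refresh loop can use a difference like demo_av_diff to decide whether to show the next frame early, on time, or late relative to the master clock. Bumping the queue serial makes every stale clock reading come back as NAN, so timestamps from before a seek are not mixed with those after it.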

message_loop

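The message loop. It is assigned when the IjkMediaPlayer is created; during prepare, a thread is created and the loop is started. The mechanism resembles Android's Looper and its lock-protected message queue.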
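
As a rough illustration of such a Looper-like mechanism, the sketch below implements a blocking message queue with a mutex and a condition variable, plus a loop that pulls messages until the queue is aborted. It is not ijkplayer's MessageQueue: all Demo* names are hypothetical, raw pthreads are used where ijkplayer goes through its SDL wrappers, and this version drains pending messages before honoring an abort, which is a simplification chosen to keep the example deterministic.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

typedef struct DemoMsg {
    int what;
    struct DemoMsg *next;
} DemoMsg;

typedef struct DemoMsgQueue {
    DemoMsg *first, *last;
    int abort_request;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} DemoMsgQueue;

void demo_msg_queue_init(DemoMsgQueue *q)
{
    q->first = q->last = NULL;
    q->abort_request = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side: append a message and wake one waiting consumer. */
int demo_msg_queue_put(DemoMsgQueue *q, int what)
{
    DemoMsg *m = malloc(sizeof(*m));
    if (!m)
        return -1;
    m->what = what;
    m->next = NULL;
    pthread_mutex_lock(&q->mutex);
    if (q->last)
        q->last->next = m;
    else
        q->first = m;
    q->last = m;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Ask the loop to stop once the queue is empty. */
void demo_msg_queue_abort(DemoMsgQueue *q)
{
    pthread_mutex_lock(&q->mutex);
    q->abort_request = 1;
    pthread_cond_broadcast(&q->cond);
    pthread_mutex_unlock(&q->mutex);
}

/* Consumer side: blocks until a message arrives (returns 1)
 * or the queue is empty and aborted (returns -1). */
int demo_msg_queue_get(DemoMsgQueue *q, int *what)
{
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        DemoMsg *m = q->first;
        if (m) {
            q->first = m->next;
            if (!q->first)
                q->last = NULL;
            *what = m->what;
            free(m);
            ret = 1;
            break;
        }
        if (q->abort_request) {
            ret = -1;
            break;
        }
        pthread_cond_wait(&q->cond, &q->mutex);
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

void demo_msg_queue_destroy(DemoMsgQueue *q)
{
    while (q->first) {
        DemoMsg *m = q->first;
        q->first = m->next;
        free(m);
    }
    q->last = NULL;
    pthread_mutex_destroy(&q->mutex);
    pthread_cond_destroy(&q->cond);
}

/* Example handler: records what it saw so the behavior can be inspected. */
int demo_handled[16];
int demo_handled_count = 0;

void demo_record_handler(int what)
{
    if (demo_handled_count < 16)
        demo_handled[demo_handled_count++] = what;
}

/* The loop itself, meant to run on a dedicated thread. Returns messages handled. */
int demo_message_loop(DemoMsgQueue *q, void (*handle)(int what))
{
    int what, handled = 0;
    while (demo_msg_queue_get(q, &what) > 0) {
        handle(what);
        handled++;
    }
    return handled;
}
```

In a player, the native side would post event codes with demo_msg_queue_put from its worker threads, while the loop thread forwards each one to the Java layer, so callbacks reach the application in order and from a single thread.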

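Live Streaming Technology Notes: Compiling ijkplayer for Android and Testing the Decoder Library

When reposting, please include both the source link at the top and the QR code at the bottom. This article is by 逆流的鱼yuiop: http://blog.csdn.net/hejjunlin/article/details/55670380

Preface: ijkplayer is a player framework open-sourced by engineers at Bilibili. It is based on FFmpeg and MediaCodec and implements both software and hardware decoding internally. For companies that have not developed their own low-level player, it is a good fit. For an introduction, see https://github.com/Bilibili/ijkplayer. This article focuses on compiling ijkplayer and running it on Android.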

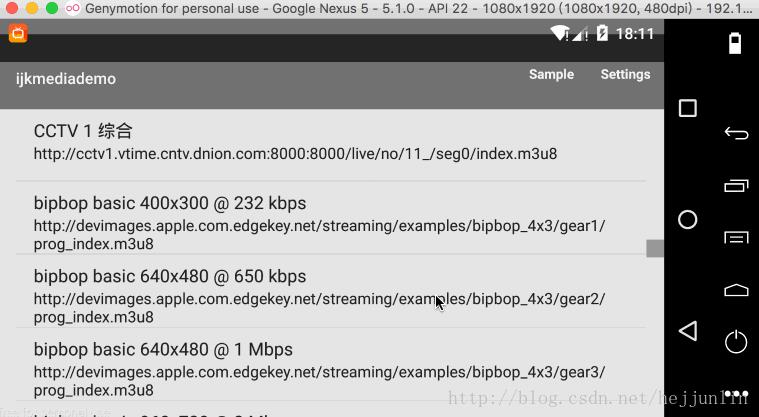

First, a look at the result:

The GIF is shown below:

Build process: the library is compiled on a Mac.

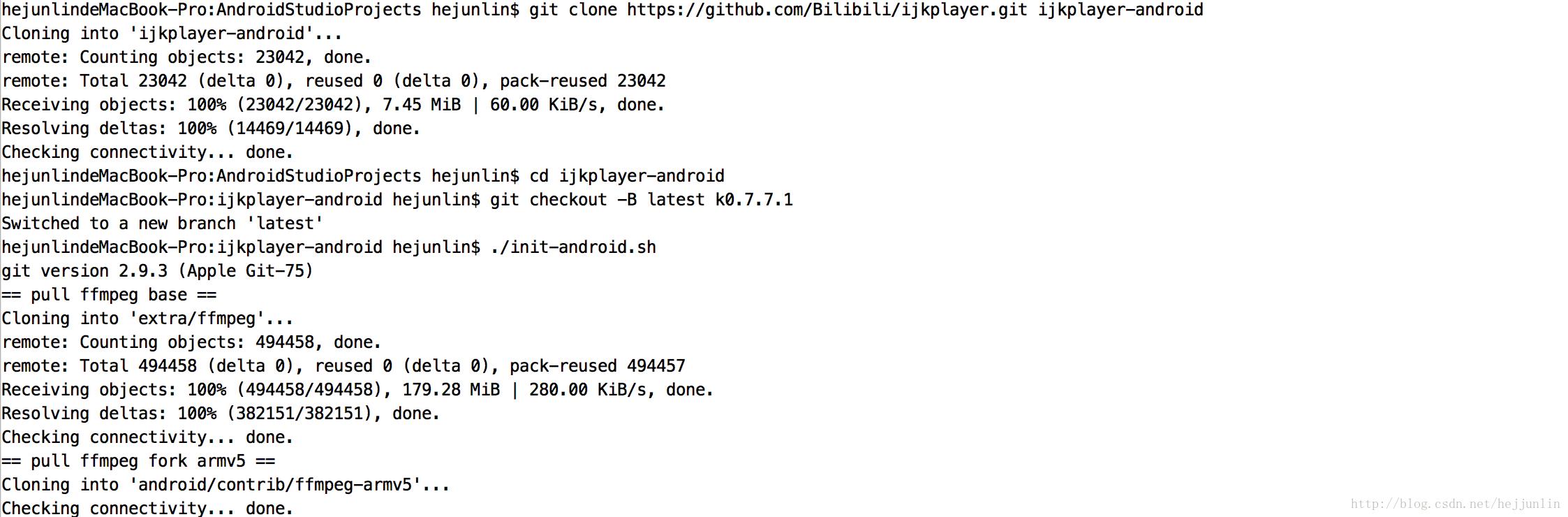

First, clone the source code locally:

    git clone https://github.com/Bilibili/ijkplayer.git ijkplayer-android
    cd ijkplayer-android
    git checkout -B latest k0.7.7.1   # create branch "latest" at tag k0.7.7.1

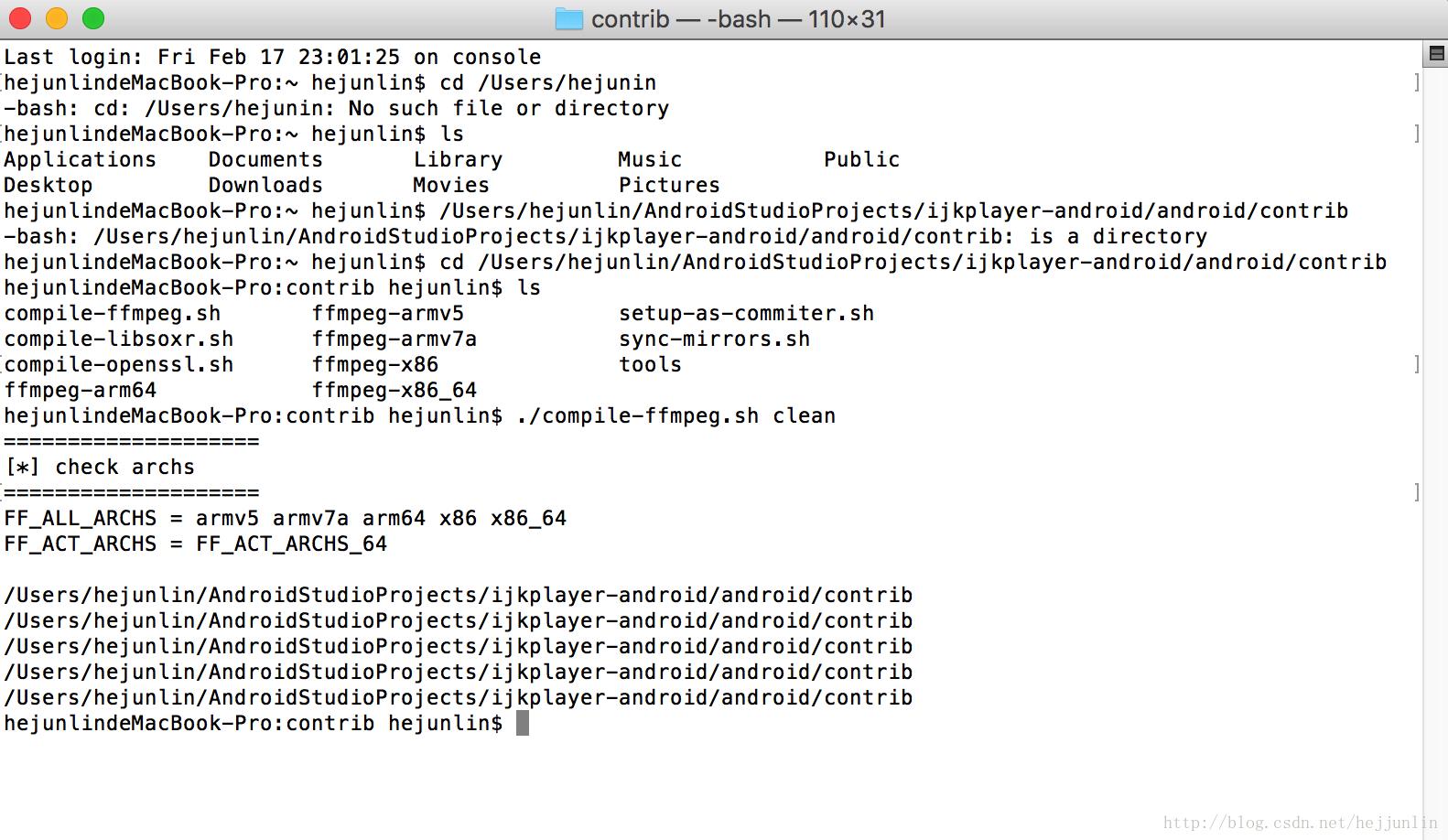

Compile FFmpeg

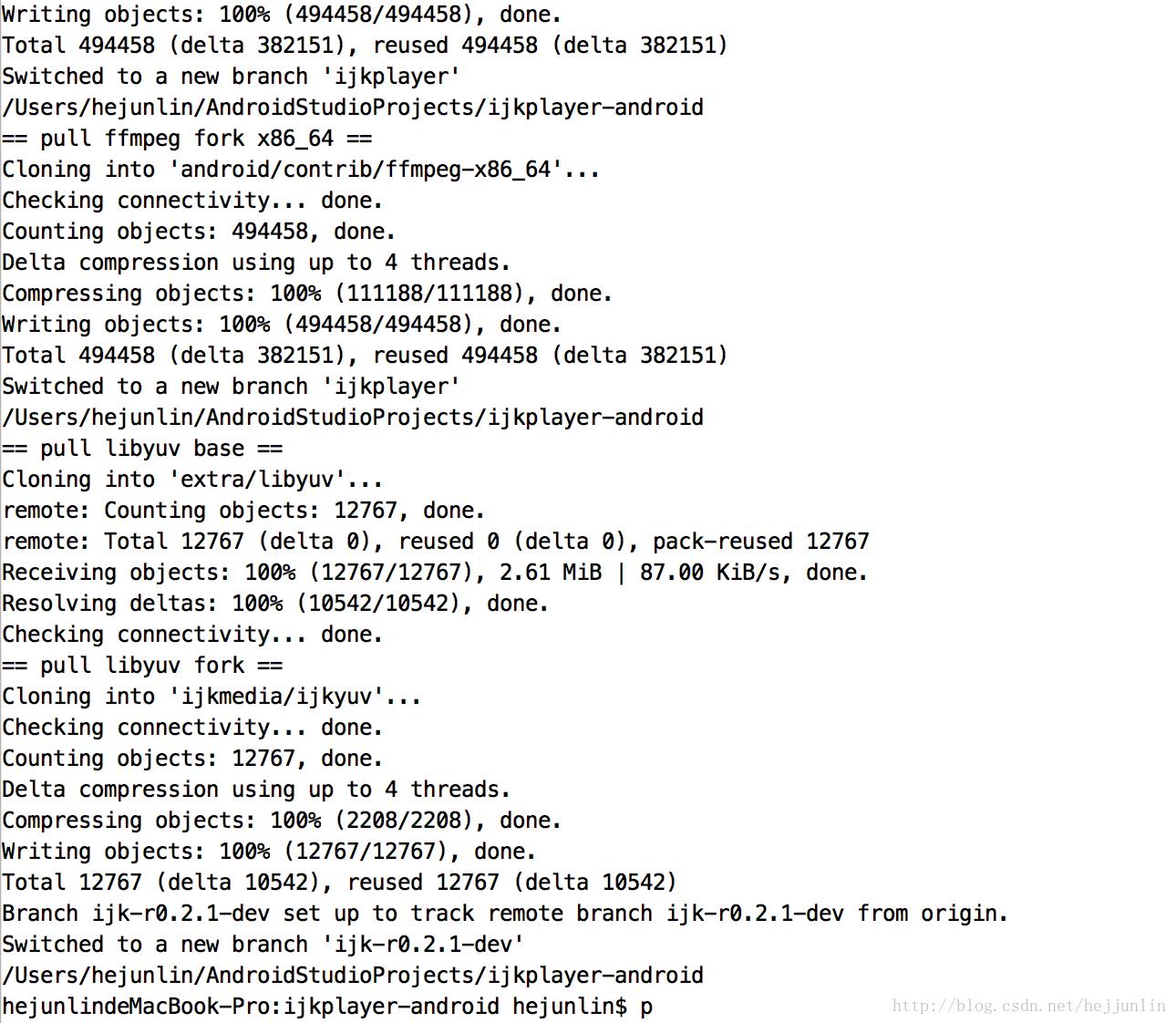

    ./init-android.sh           # initialize the Android-related parts of ijkplayer; on iOS use ./init-ios.sh
    cd android/contrib
    ./compile-ffmpeg.sh clean
    ./compile-ffmpeg.sh all     # compile FFmpeg

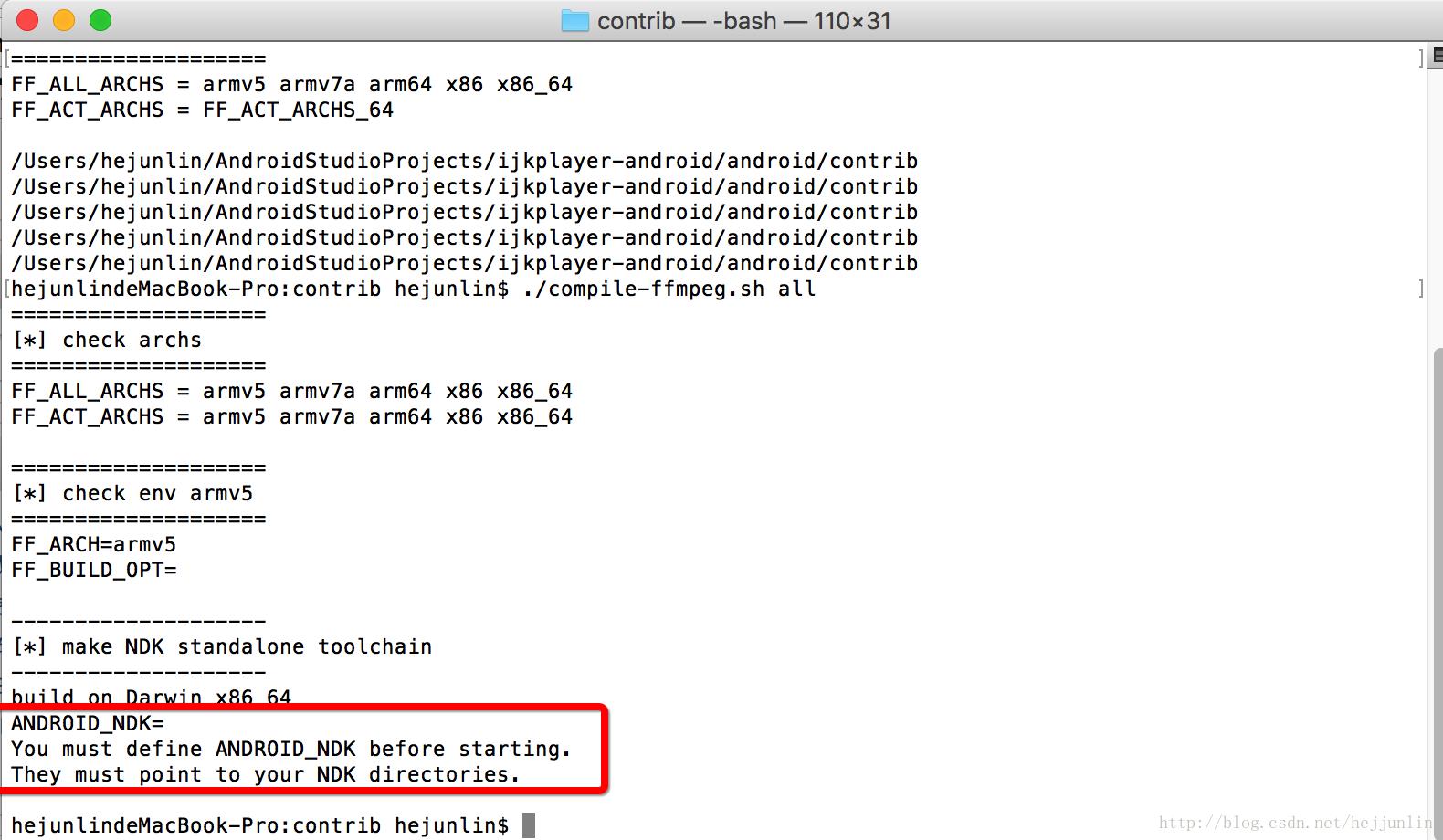

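Oops: it turns out the NDK environment had not been configured on my MacBook Pro.

Download the NDK. Fortunately, I had saved ndk-10e to a cloud drive when writing "A Step-by-Step Illustrated Guide to Compiling and Porting the FFmpeg Library with Android Studio".

- A download link for reference is at the bottom of this article; you can also download it yourself.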

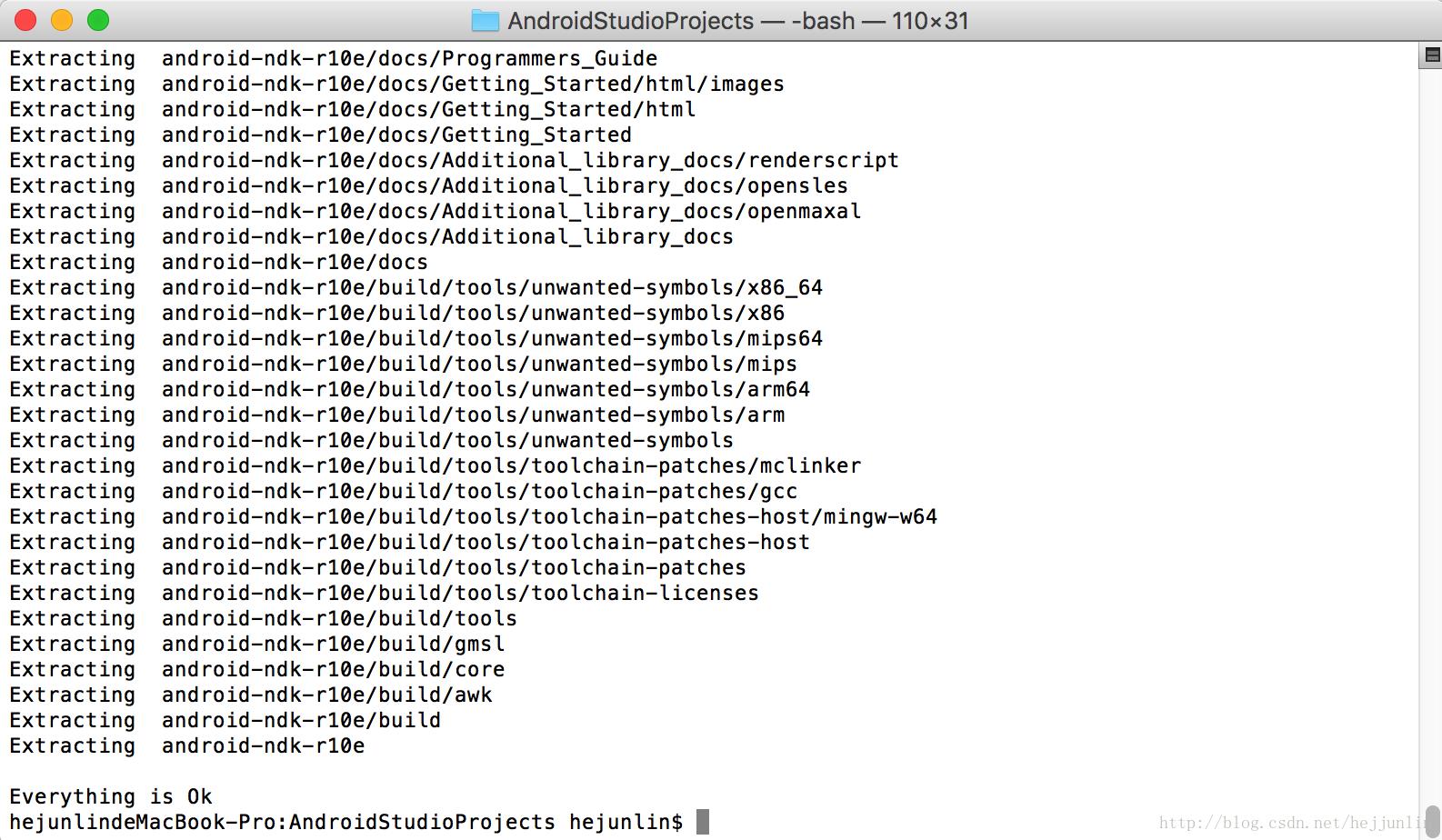

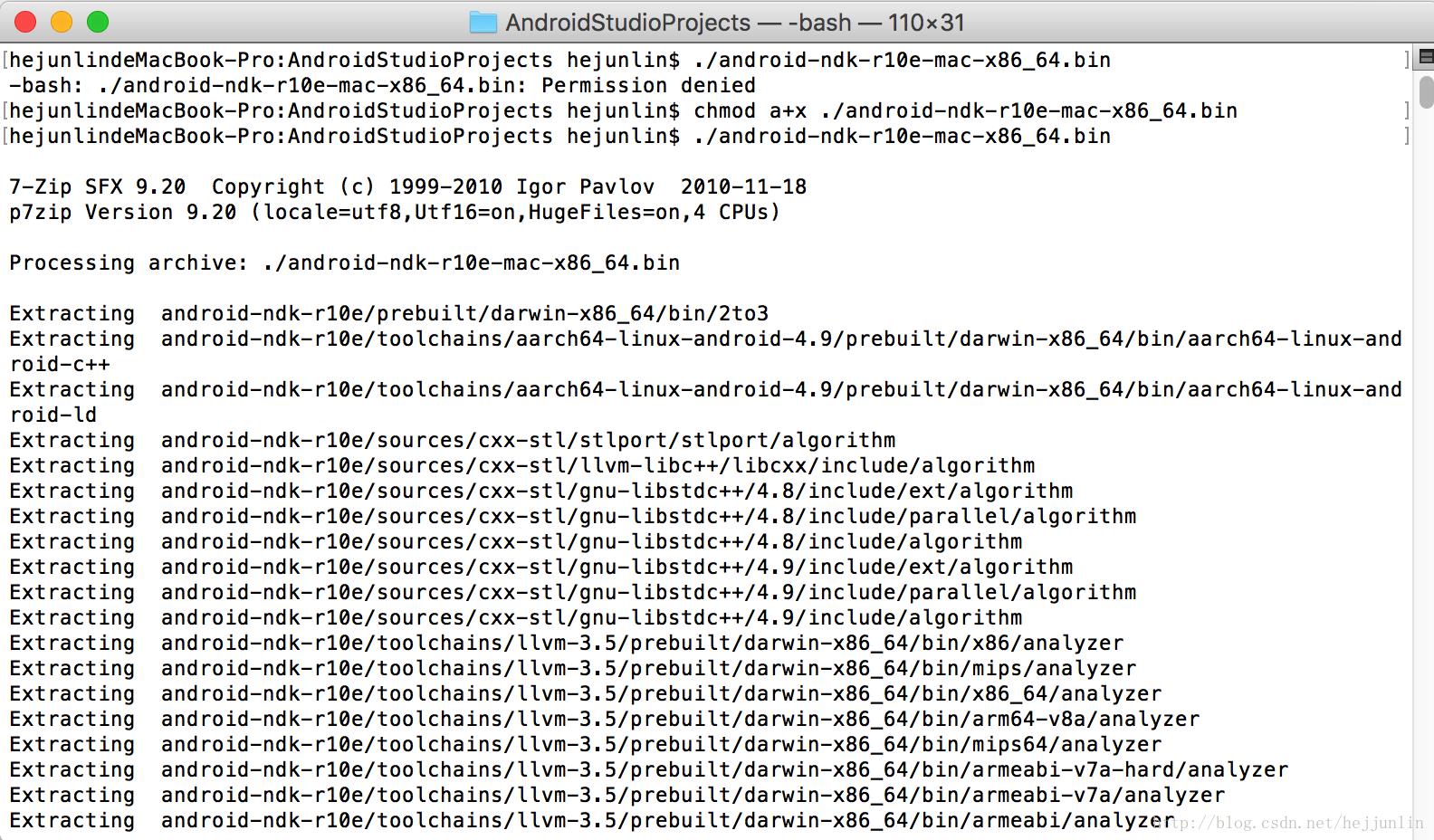

- Run ./android-ndk-r10e-mac-x86_64.bin to extract it, as shown below:

It did not go smoothly, though: extraction failed with Permission denied.

Fix the permissions by running chmod a+x ./android-ndk-r10e-mac-x86_64.bin

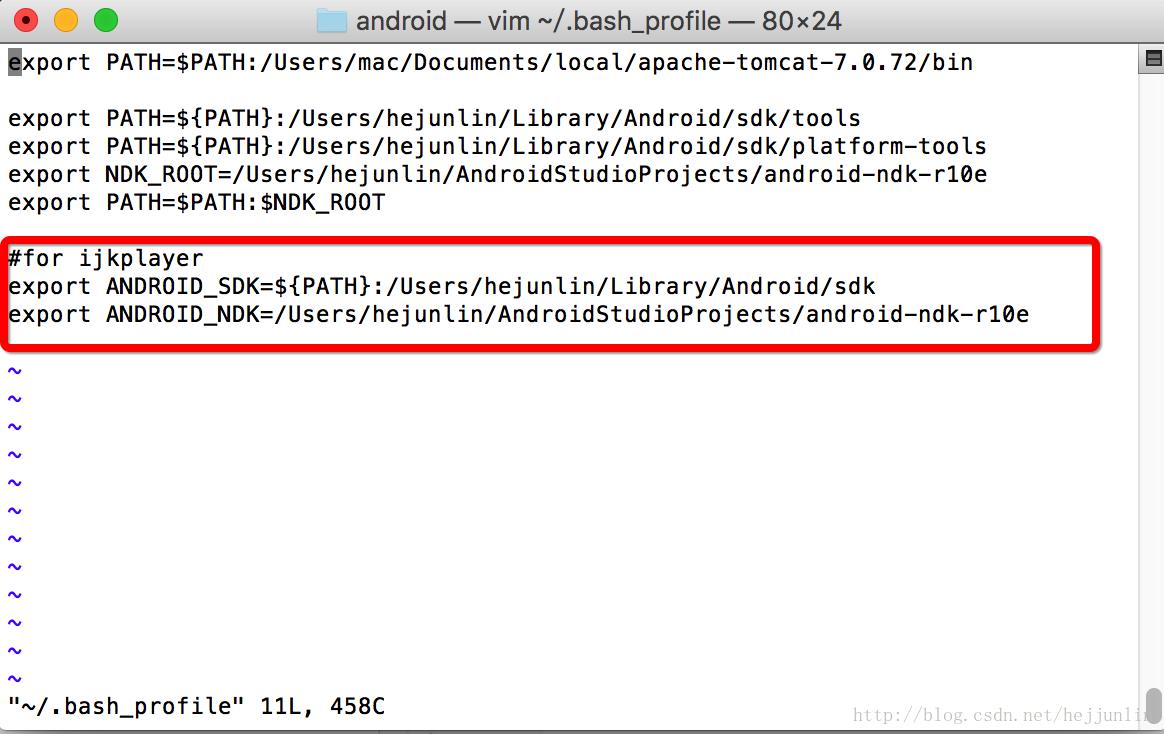

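Configure the NDK environment

- Open Terminal

- Go to the current user's home directory

- Enter cd ~ or cd /Users/YourUserName

- Create .bash_profile

- Enter touch .bash_profile

Edit the .bash_profile file

Enter open -e .bash_profile

Since the goal is to set up the NDK development environment, add the Android NDK directory. The earlier entries are for the Android SDK and can be left alone. The final .bash_profile looks like this: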

    export PATH=$PATH:/Users/hejunlin/Documents/local/apache-tomcat-7.0.72/bin
    export PATH=${PATH}:/Users/hejunlin/Library/Android/sdk/tools
    export PATH=${PATH}:/Users/hejunlin/Library/Android/sdk/platform-tools
    export NDK_ROOT=/Users/hejunlin/AndroidStudioProjects/android-ndk-r10e
    export PATH=$PATH:$NDK_ROOT

    # for ijkplayer: these must be plain directory paths, not PATH-style lists
    export ANDROID_SDK=/Users/hejunlin/Library/Android/sdk
    export ANDROID_NDK=/Users/hejunlin/AndroidStudioProjects/android-ndk-r10e

- Save the file and close .bash_profile

- Reload the environment variables you just configured

- Enter source .bash_profile so the new configuration takes effect

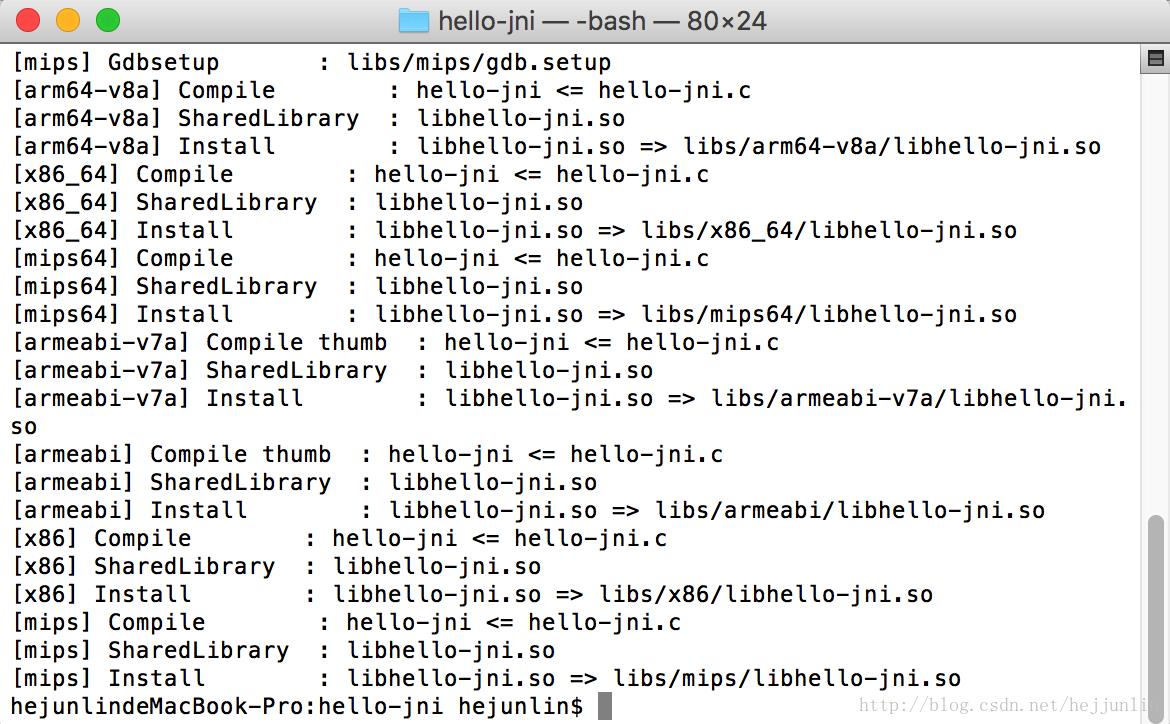

Testing ndk-build

- Find the samples directory in ndk-10e, cd into hello-jni, and run ndk-build. The output looks like this:

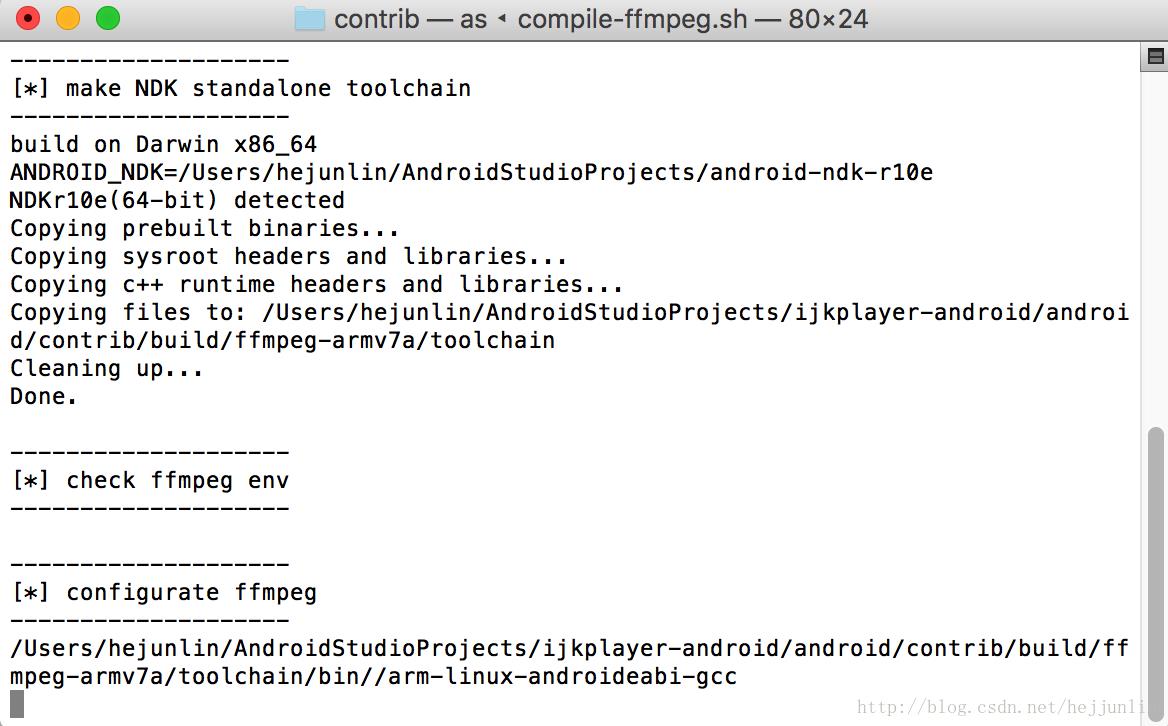

Back to compiling FFmpeg:

Run ./compile-ffmpeg.sh all again, which produces the following:


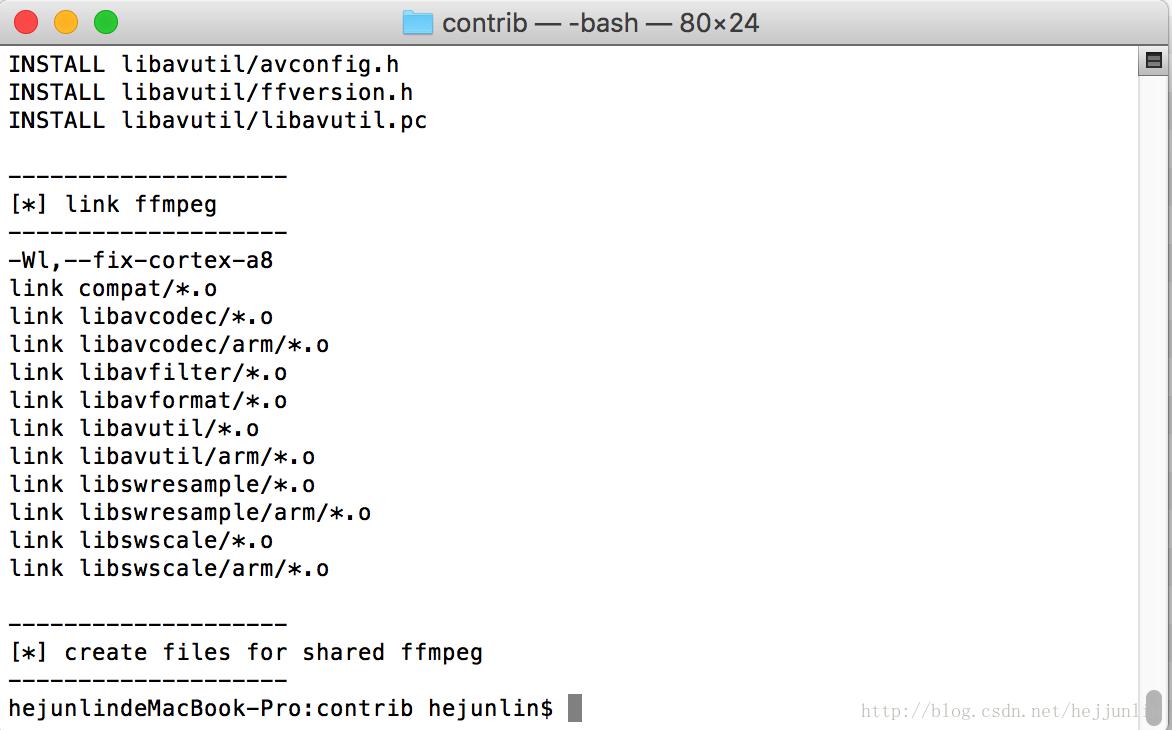

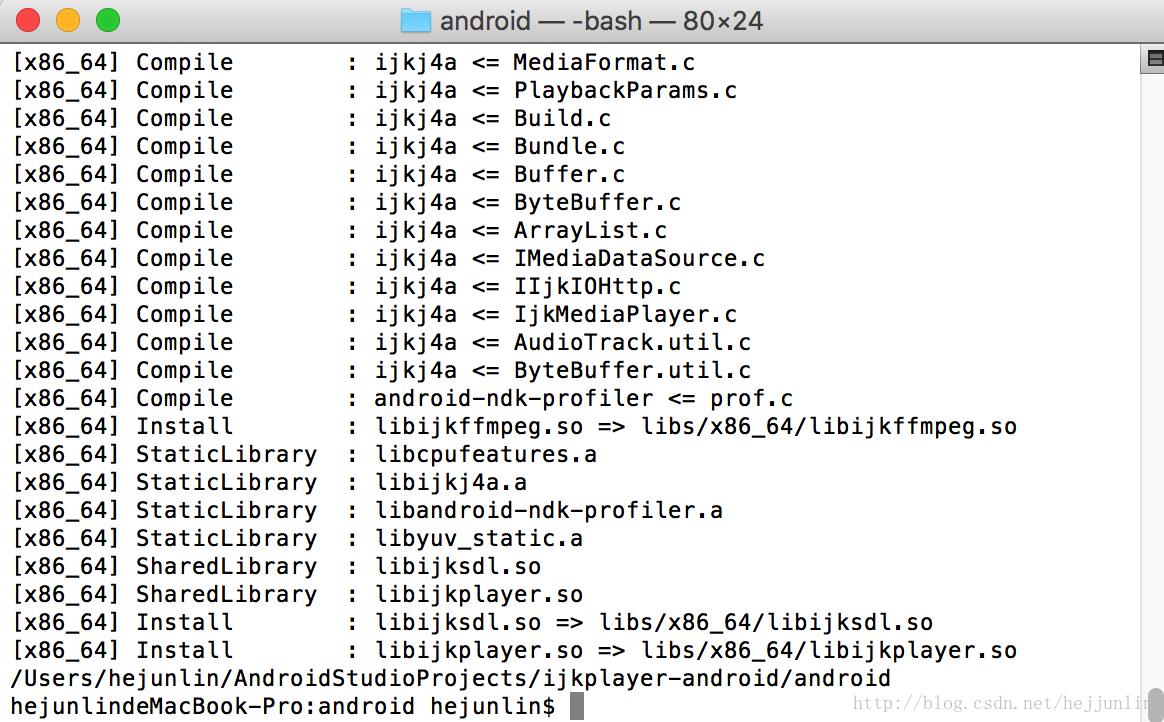

When the build finishes, it looks like this:

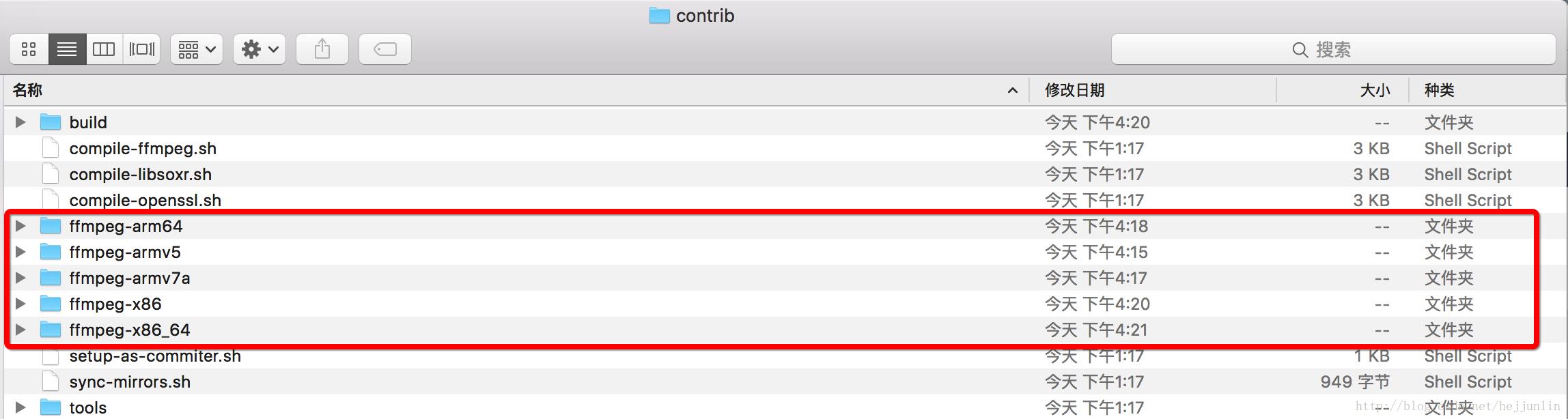

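In the end, the contrib directory contains the per-architecture files needed to build the .so libraries, such as arm and x86. You can also build for a single specified architecture.

Finally, run the following commands to build ijkplayer and FFmpeg into .so libraries together with the demo project: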

cd ..

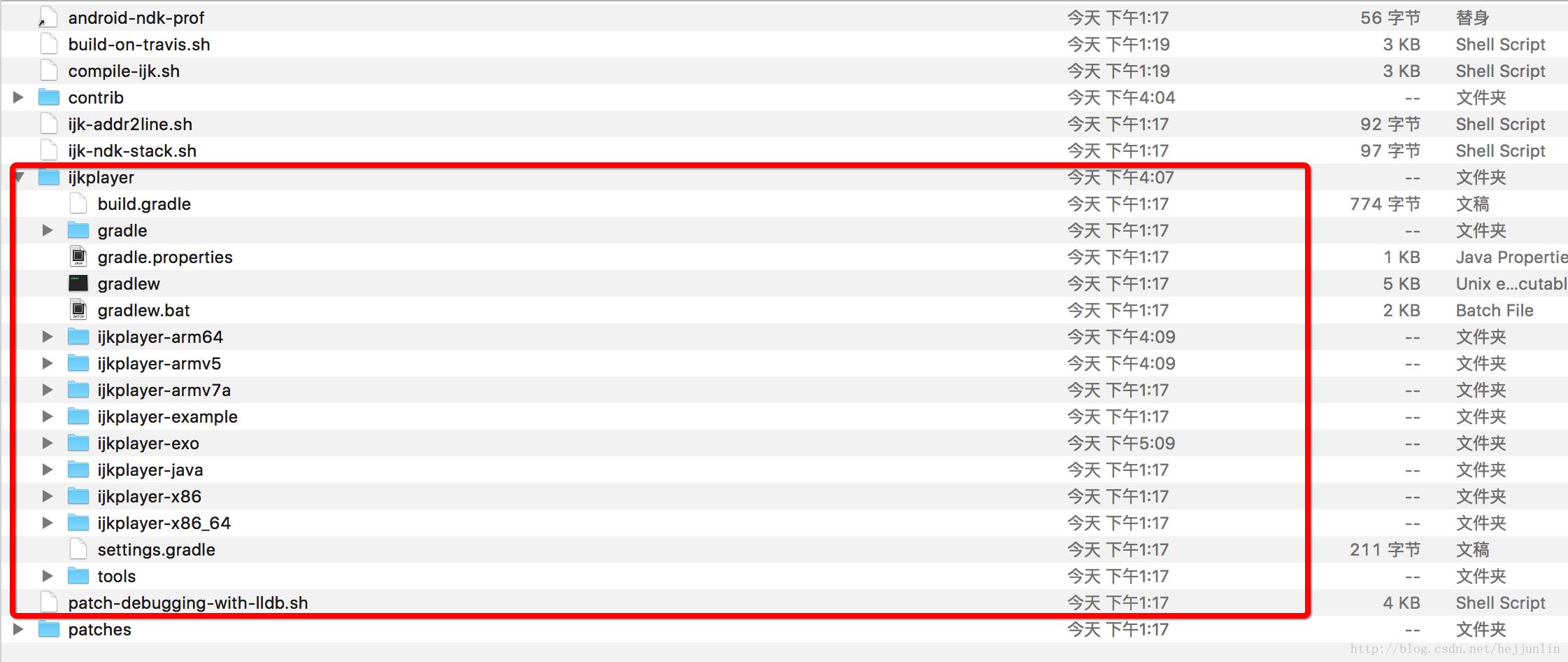

./compile-ijk.sh all

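When it finishes, an ijkplayer folder appears. It is an Android Studio project that contains the .so libraries for each architecture. Import it into Android Studio to run the demo project.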

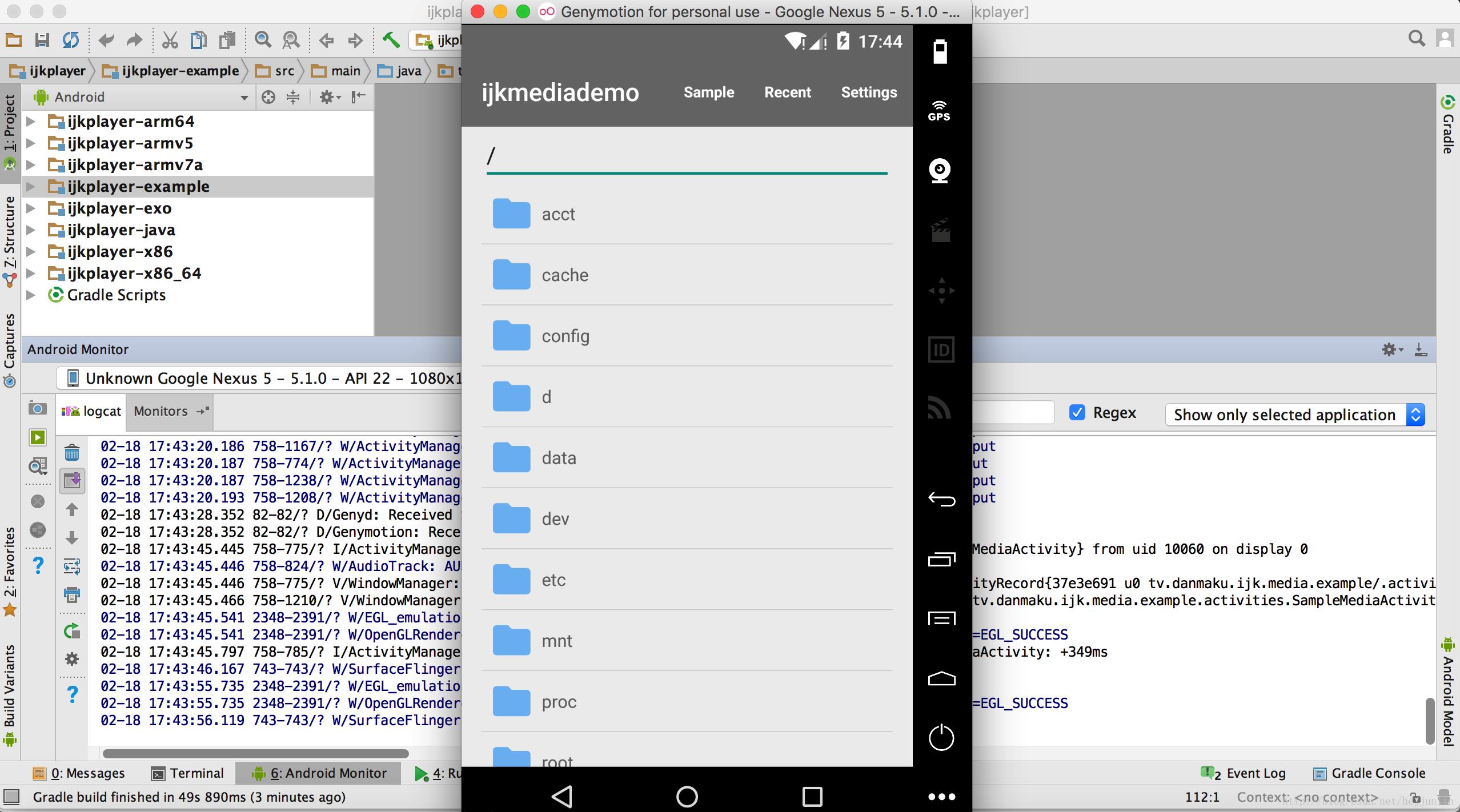

After importing into Android Studio, run the example project on an emulator:

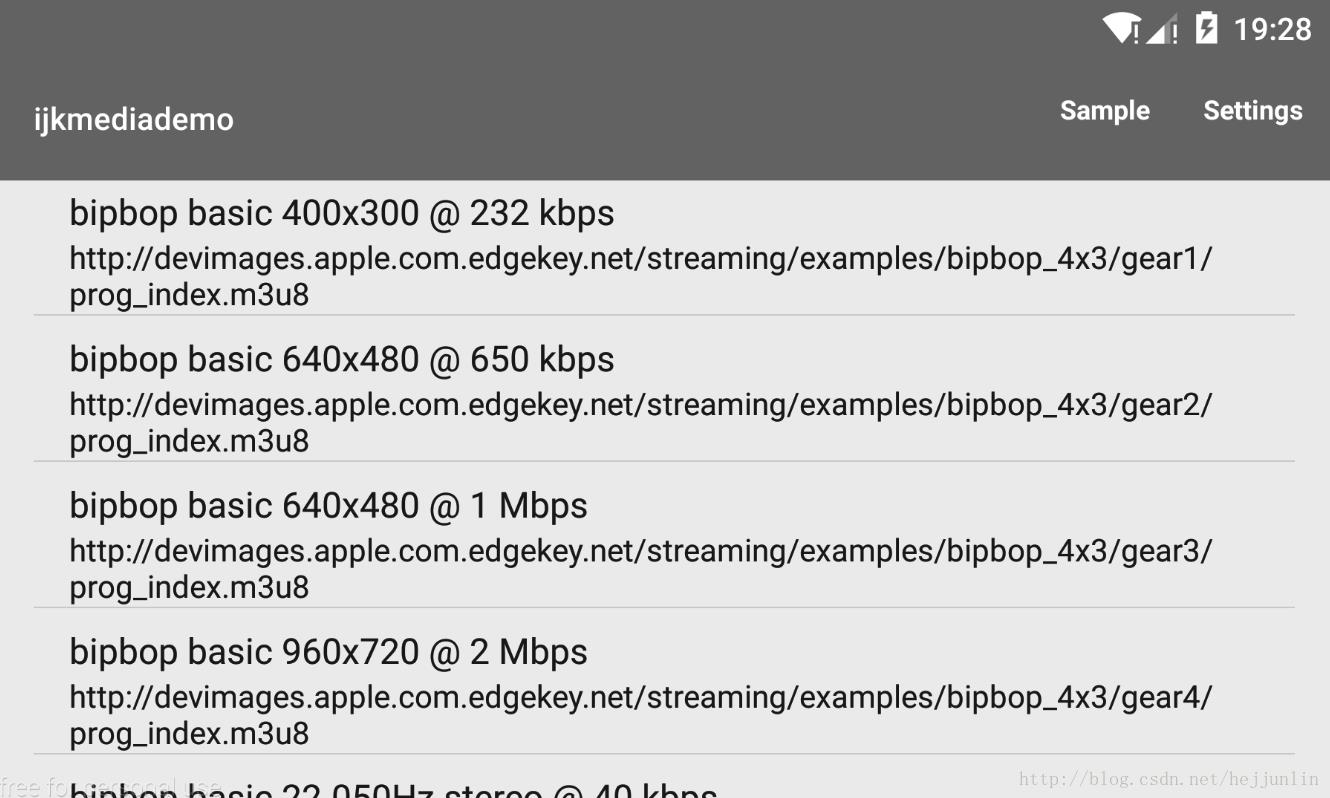

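Tap Sample to enter the playback test.

Of course, we did not compile it just to look at the demo. We can modify the C code and rebuild the .so libraries in the same way. To use the player in another project, reference it in the layout XML as follows: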

    <tv.danmaku.ijk.media.example.widget.media.IjkVideoView
        android:id="@+id/video_view"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:layout_gravity="center"/>

Then add the following initialization to your Java code:

    // The id must match android:id="@+id/video_view" in the layout
    videoView = (IjkVideoView) findViewById(R.id.video_view);

    // init player
    IjkMediaPlayer.loadLibrariesOnce(null);
    IjkMediaPlayer.native_profileBegin("libijkplayer.so");

    videoView.setVideoURI(Uri.parse(mUrl));
    videoView.setOnPreparedListener(new IMediaPlayer.OnPreparedListener() {
        @Override
        public void onPrepared(IMediaPlayer mp) {
            videoView.start();
        }
    });
