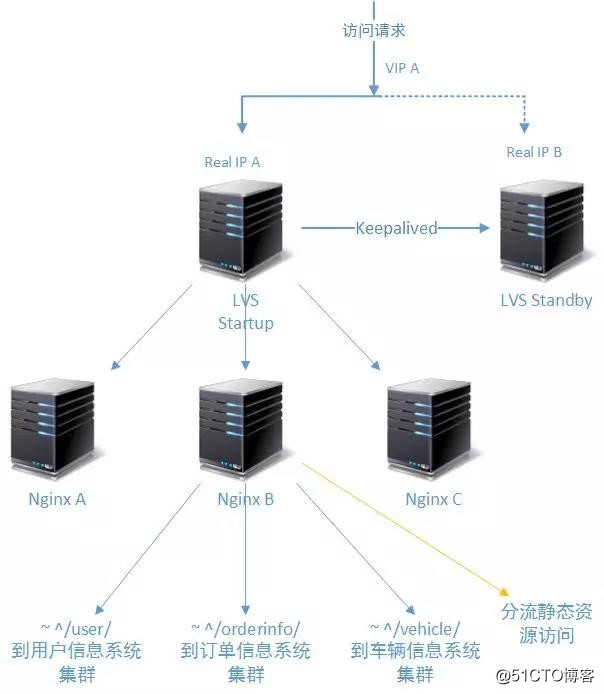

Building a high-performance load-balancing cluster (high-performance JSP cluster) with LVS + keepalived + nginx


LVS-master:192.168.254.134

LVS-backup:192.168.254.135

LVS-VIP:192.168.254.88

nginx+tomcat:192.168.254.131

nginx+tomcat:192.168.254.132

nginx+tomcat:192.168.254.133

(Add more nodes as needed.)

Install the base packages

yum install -y gcc-c++ pcre pcre-devel zlib zlib-devel openssl openssl-devel

Install nginx

(Download to any directory you like.)

Download the stable release, nginx 1.14.0:

wget http://nginx.org/download/nginx-1.14.0.tar.gz

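Extract: tar -zxf nginx-1.14.0.tar.gz

Enter the extracted source directory: cd nginx-1.14.0

Use the default configuration: ./configure

Compile and install nginx: make && make install

Start nginx: /usr/local/nginx/sbin/nginx

Stop nginx immediately: /usr/local/nginx/sbin/nginx -s stop

Stop nginx gracefully (in-flight requests are allowed to finish): /usr/local/nginx/sbin/nginx -s quit

Check the configuration for errors: /usr/local/nginx/sbin/nginx -t

Reload the configuration: /usr/local/nginx/sbin/nginx -s reload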
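The test and reload commands above are usually run as a pair, so that a broken configuration is never reloaded. A minimal sketch, assuming the install path used in this article; `safe_reload` is a hypothetical helper name, not an nginx feature:

```shell
#!/bin/bash
# Path to the nginx binary from the source install above; override if yours differs.
NGINX_SBIN="${NGINX_SBIN:-/usr/local/nginx/sbin/nginx}"

# safe_reload is a hypothetical helper, not part of nginx:
# it reloads only when the configuration test succeeds.
safe_reload() {
    if "$NGINX_SBIN" -t; then
        "$NGINX_SBIN" -s reload
    else
        echo "nginx -t failed; configuration not reloaded" >&2
        return 1
    fi
}
```

After editing nginx.conf, calling `safe_reload` leaves the running workers untouched if the test fails.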

1. Write an nginx init script and register it as a system service

vim /etc/init.d/nginx

with the following content:

#!/bin/bash

#chkconfig: - 30 21

#description: http service.

#Source Function Library

. /etc/init.d/functions

#Nginx Settings

NGINX_SBIN="/usr/local/nginx/sbin/nginx"

NGINX_CONF="/usr/local/nginx/conf/nginx.conf"

NGINX_PID="/usr/local/nginx/logs/nginx.pid"

RETVAL=0

prog="Nginx"

start() {

echo -n $"Starting $prog: "

mkdir -p /dev/shm/nginx_temp

daemon $NGINX_SBIN -c $NGINX_CONF

RETVAL=$?

echo

return $RETVAL

}

stop() {

echo -n $"Stopping $prog: "

killproc -p $NGINX_PID $NGINX_SBIN -TERM

# capture the status of killproc before cleaning up

RETVAL=$?

rm -rf /dev/shm/nginx_temp

echo

return $RETVAL

}

reload(){

echo -n $"Reloading $prog: "

killproc -p $NGINX_PID $NGINX_SBIN -HUP

RETVAL=$?

echo

return $RETVAL

}

restart(){

stop

start

}

configtest(){

$NGINX_SBIN -c $NGINX_CONF -t

return 0

}

case "$1" in

start)

start

;;

stop)

stop

;;

reload)

reload

;;

restart)

restart

;;

configtest)

configtest

;;

*)

echo $"Usage: $0 {start|stop|reload|restart|configtest}"

RETVAL=1

esac

exit $RETVAL

Make the script executable: chmod 755 /etc/init.d/nginx

Register it in the service list: chkconfig --add nginx

Enable it at boot: chkconfig nginx on

Start the service: service nginx start, or /etc/init.d/nginx start|stop|restart

Check that the nginx page is reachable:

http://192.168.254.131/

Install the JDK

tar -zxf jdk-8u171-linux-x64.tar.gz -C /usr/local/

cd /usr/local/

mv jdk1.8.0_171/ jdk1.8

vi /etc/profile

Append the JDK environment variables at the end of the file:

export JAVA_HOME=/usr/local/jdk1.8

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Apply the environment variables:

source /etc/profile

Check the JDK version:

java -version

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)

Check the JDK path:

echo $JAVA_HOME

/usr/local/jdk1.8

Install Tomcat

wget http://mirrors.hust.edu.cn/apache/tomcat/tomcat-9/v9.0.10/bin/apache-tomcat-9.0.10.tar.gz

tar -zxf apache-tomcat-9.0.10.tar.gz

mv apache-tomcat-9.0.10 /usr/local/tomcat

Create the project directory

mkdir -p /home/www/web/ (put an index.jsp in it)

vi /home/www/web/index.jsp (add some content, then save and quit)

Change the Tomcat document path

cd /usr/local/tomcat/conf/

vi server.xml

<Host name="localhost" appBase="/home/www/web"

unpackWARs="true" autoDeploy="true">

The following line is added:

<Context path="" docBase="/home/www/web" reloadable="true"/>

Check that the Tomcat page is reachable:

http://192.168.254.131:8080/

Configure nginx and Tomcat for load balancing / reverse proxying

cd /usr/local/nginx/conf/

vi nginx.conf

user www www;

worker_processes 4;

error_log /usr/local/nginx/logs/error.log;

error_log /usr/local/nginx/logs/error.log notice;

error_log /usr/local/nginx/logs/error.log info;

pid /usr/local/nginx/logs/nginx.pid;

# Event model and connection limit

events {

use epoll;

worker_connections 65535;}

# HTTP server; its reverse-proxy feature provides the load balancing

http {

# MIME types

include mime.types;

default_type application/octet-stream;

include /usr/local/nginx/conf/proxy.conf;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log logs/access.log main;

# Request buffers

server_names_hash_bucket_size 128;

client_header_buffer_size 32K;

large_client_header_buffers 4 32k;

# client_max_body_size 8m;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

#keepalive_timeout 0;

keepalive_timeout 65;

gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 2;

gzip_types text/plain application/x-javascript text/css application/xml;

gzip_vary on;

# Tomcat backend addresses; list as many as you need

upstream tomcat_pool {

server 192.168.254.133:8080 weight=4 max_fails=2 fail_timeout=30s;

server 192.168.254.132:8080 weight=4 max_fails=2 fail_timeout=30s;

server 192.168.254.131:8080 weight=4 max_fails=2 fail_timeout=30s;

}

server {

listen 80;

server_name www.web2.com; # replace with your own domain; separate multiple names with spaces

index index.jsp index.htm index.html index.do; # default index files

root /home/www/web; # site document root

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

# root html;

index index.jsp index.html index.htm;

}

location ~ \.(jsp|jspx|do)?$ # hand all JSP pages to Tomcat

{

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_pass http://tomcat_pool; # forward to Tomcat

}

# Serve static files directly, bypassing Tomcat

location ~ .*\.(htm|html|gif|jpg|jpeg|png|bmp|swf|ico|rar|zip|txt|flv|mid|doc|ppt|pdf|xls|mp3|wma)$

{

expires 30d;

}

location ~ .*\.(js|css)?$

{

expires 1h;

}

access_log /usr/local/nginx/logs/ubitechtest.log main; # access log path

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# pass the php scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

server {

listen 80;

server_name bbs.yourdomain.com;

location / {

root /home/www/web/springmvc; # site document root

index index.jsp index.htm index.html index.do welcome.jsp; # default index files

}

location ~ \.(jsp|jspx|do)?$ # hand all JSP pages to Tomcat

{

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_pass http://tomcat_pool; # forward to Tomcat

}

# Serve static files directly, bypassing Tomcat

location ~ .*\.(htm|html|gif|jpg|jpeg|png|bmp|swf|ico|rar|zip|txt|flv|mid|doc|ppt|pdf|xls|mp3|wma)$

{

expires 30d;

}

location ~ .*\.(js|css)?$

{

expires 1h;

}

access_log /usr/local/nginx/logs/ubitechztt.log main; # access log path

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

Create the user www and the group www to own the site directory

/usr/sbin/groupadd www

/usr/sbin/useradd -g www www -s /sbin/nologin

mkdir -p /home/www

chmod +w /home/www

chown -R www:www /home/www

vi /usr/local/nginx/conf/proxy.conf

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

client_max_body_size 10m;

client_body_buffer_size 128k;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 4k;

proxy_buffers 4 32k;

proxy_busy_buffers_size 64k;

proxy_temp_file_write_size 64k;

/usr/local/nginx/sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

Quick test

Each of the three nginx instances can reach all three proxied Tomcat instances.

# curl 192.168.254.131

2222222222222222222222222222222222222222

# curl 192.168.254.131

33333333333333333333333333333

# curl 192.168.254.131

111111111111111111111111111111111111111

OK, nginx and Tomcat are now set up.

(Note: the configuration above is identical on every node.)
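To see how requests are spread over the Tomcat pool, the curl test above can be repeated in a loop and the replies counted. A rough sketch, assuming each Tomcat serves a distinct one-line index.jsp as in the output above; `tally_responses` is a hypothetical helper:

```shell
#!/bin/bash
# tally_responses is a hypothetical helper: it reads one response body per line
# on stdin, ignores blank lines, and prints "<count> <body>" for each distinct
# body, most frequent first.
tally_responses() {
    grep -v '^$' | sort | uniq -c | sort -rn | sed 's/^ *//'
}

# Example (the echo guards against bodies that lack a trailing newline):
# for i in $(seq 1 30); do curl -s http://192.168.254.131/; echo; done | tally_responses
```

With equal weights in the upstream block, the counts should come out roughly even.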

Install LVS + keepalived

yum -y install ipvsadm keepalived (a plain yum install, so no further detail here)

Enable IP forwarding

Enable it permanently:

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

sysctl -p   # load the file so the setting takes effect immediately

Verify:

sysctl -a |grep "ip_forward"

net.ipv4.ip_forward = 1

net.ipv4.ip_forward_use_pmtu = 0
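Running the `echo ... >> /etc/sysctl.conf` command above a second time would append a duplicate line. One possible way to make the step repeatable, with `ensure_line` as a hypothetical helper name:

```shell
#!/bin/bash
# ensure_line is a hypothetical helper: append LINE to FILE only if FILE
# does not already contain exactly that line.
ensure_line() {
    local line="$1" file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Usage (as root):
# ensure_line "net.ipv4.ip_forward = 1" /etc/sysctl.conf && sysctl -p
```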

Configure keepalived (master configuration)

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_MASTER # on the backup server, change MASTER to BACKUP

}

vrrp_instance VI_1 {

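state BACKUP # with nopreempt, both nodes start as BACKUP; the priority decides which one takes the VIP

interface ens32 # NIC name; check the actual interface on your server

virtual_router_id 51

priority 100 # on the backup server, change 100 to 90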

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.254.88

# (for multiple VIPs, add one per line)

}

}

virtual_server 192.168.254.88 80 {

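delay_loop 6 # health-check the real servers every 6 seconds

lb_algo rr # round-robin scheduling

lb_kind DR # direct routing

persistence_timeout 50 # connections from the same client IP go to the same real server for 50 seconds

protocol TCP # the virtual service uses TCP

real_server 192.168.254.131 80 {

weight 1 # weight

TCP_CHECK {

connect_timeout 10 # time out after 10 seconds without a response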

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.254.132 80 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.254.133 80 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}
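The three real_server blocks above differ only in the IP address, so they could be generated rather than typed. A small sketch, assuming the same health-check values as in this article; `real_server_block` is a hypothetical helper, and its output would still need to be pasted inside the virtual_server block:

```shell
#!/bin/bash
# real_server_block is a hypothetical helper: print one keepalived
# real_server stanza for the given IP and (optional) port, default 80.
real_server_block() {
    local ip="$1" port="${2:-80}"
    cat <<EOF
    real_server $ip $port {
        weight 1
        TCP_CHECK {
            connect_timeout 10
            nb_get_retry 3
            delay_before_retry 3
            connect_port $port
        }
    }
EOF
}

# Usage:
# for ip in 192.168.254.131 192.168.254.132 192.168.254.133; do real_server_block "$ip"; done
```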

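Backup configuration (global_defs and vrrp_instance are the same as on the master apart from the changes noted in its comments; the virtual_server section is identical)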

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

virtual_server 192.168.254.88 80 {

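delay_loop 6 # health-check the real servers every 6 seconds

lb_algo rr # round-robin scheduling

lb_kind DR # direct routing

persistence_timeout 50 # connections from the same client IP go to the same real server for 50 seconds

protocol TCP # the virtual service uses TCP

real_server 192.168.254.131 80 {

weight 1 # weight

TCP_CHECK {

connect_timeout 10 # time out after 10 seconds without a response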

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.254.132 80 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.254.133 80 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

service keepalived start

ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e3:f4:ff brd ff:ff:ff:ff:ff:ff

inet 192.168.254.135/24 brd 192.168.254.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 192.168.254.88/32 scope global ens32

valid_lft forever preferred_lft forever

The virtual IP is listed on the interface, so keepalived has started correctly.
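The same check can be scripted, for example to see which director currently holds the VIP. A minimal sketch; `has_vip` is a hypothetical helper that reads `ip addr` output on stdin:

```shell
#!/bin/bash
# has_vip is a hypothetical helper: succeed if the `ip addr` output on stdin
# lists the given IPv4 address (matched literally, followed by the prefix slash).
has_vip() {
    grep -qF "inet $1/"
}

# Usage on a director:
# ip addr show ens32 | has_vip 192.168.254.88 && echo "this node holds the VIP"
```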

Finally, configure the nginx servers (the real servers)

vi /etc/init.d/realserver

#!/bin/bash

SNS_VIP=192.168.254.88

. /etc/rc.d/init.d/functions

case "$1" in

start)

ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP

/sbin/route add -host $SNS_VIP dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p >/dev/null 2>&1

echo "RealServer Start OK"

;;

stop)

ifconfig lo:0 down

route del $SNS_VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0

chmod 755 /etc/init.d/realserver

service realserver start

lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.254.88/32 brd 192.168.254.88 scope global lo:0

valid_lft forever preferred_lft forever

Once the virtual IP shows up on lo:0, the real server is ready.

(Repeat the same steps on the other nginx servers.)
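In DR mode every real server carries the VIP on its loopback interface, so the arp_ignore and arp_announce values written by the script are what keep the real servers from answering ARP requests for the VIP. A sketch for verifying them, where `check_arp` is a hypothetical helper and the optional directory argument exists only to make it easy to try out:

```shell
#!/bin/bash
# check_arp is a hypothetical helper: verify that arp_ignore=1 and
# arp_announce=2 are set for both "lo" and "all".
# The optional argument is the sysctl directory (default /proc/sys/net/ipv4/conf).
check_arp() {
    local root="${1:-/proc/sys/net/ipv4/conf}" ok=0 ifc
    for ifc in lo all; do
        [ "$(cat "$root/$ifc/arp_ignore" 2>/dev/null)" = "1" ] || { echo "$ifc: arp_ignore is not 1"; ok=1; }
        [ "$(cat "$root/$ifc/arp_announce" 2>/dev/null)" = "2" ] || { echo "$ifc: arp_announce is not 2"; ok=1; }
    done
    return "$ok"
}
```

Running `check_arp` after `service realserver start` should print nothing and return 0.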

Test

# curl 192.168.254.88

444444444444444444444444

# curl 192.168.254.88

55555555555555555555555555

# curl 192.168.254.88

22222222222222222222222222222222222222222222222222222

# curl 192.168.254.88

444444444444444444444444

# curl 192.168.254.88

33333333333333333333333333333

# curl 192.168.254.88

111111111111111111111111111111111111111
