linux网络协议栈源码分析 - 传输层(TCP连接的建立)

Posted arm7star

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了linux网络协议栈源码分析 - 传输层(TCP连接的建立)相关的知识,希望对你有一定的参考价值。

1、bind系统调用

1.1、地址端口及状态检查(inet_bind)

通过路由表查找绑定地址的路由类型,对于非本地IP检查是否允许绑定非本地IP地址;检查公认端口绑定权限,是否允许绑定0~1024端口;检查socket是否已经绑定了或者已经激活了;然后调用inet_csk_get_port绑定指定端口或者绑定动态分配的端口。

inet_bind函数实现如下:

int inet_bind(struct socket *sock, struct sockaddr *uaddr, int addr_len)

struct sockaddr_in *addr = (struct sockaddr_in *)uaddr;

struct sock *sk = sock->sk;

struct inet_sock *inet = inet_sk(sk);

struct net *net = sock_net(sk);

unsigned short snum;

int chk_addr_ret;

u32 tb_id = RT_TABLE_LOCAL;

int err;

/* If the socket has its own bind function then use it. (RAW) */

if (sk->sk_prot->bind) // socket有自己的绑定函数

err = sk->sk_prot->bind(sk, uaddr, addr_len); // 调用socket自己的绑定函数

goto out;

err = -EINVAL;

if (addr_len < sizeof(struct sockaddr_in))

goto out; // 地址长度小于sockaddr_in结构体长度,地址错误,跳转到out

if (addr->sin_family != AF_INET) // 协议类型不是AF_INET

/* Compatibility games : accept AF_UNSPEC (mapped to AF_INET)

* only if s_addr is INADDR_ANY.

*/

err = -EAFNOSUPPORT; // 设置错误返回值不支持EAFNOSUPPORT

if (addr->sin_family != AF_UNSPEC ||

addr->sin_addr.s_addr != htonl(INADDR_ANY))

goto out;

tb_id = l3mdev_fib_table_by_index(net, sk->sk_bound_dev_if) ? : tb_id;

chk_addr_ret = inet_addr_type_table(net, addr->sin_addr.s_addr, tb_id); // 从路由表查找地址的路由类型RTN_LOCAL/RTN_MULTICAST/RTN_BROADCAST...

/* Not specified by any standard per-se, however it breaks too

* many applications when removed. It is unfortunate since

* allowing applications to make a non-local bind solves

* several problems with systems using dynamic addressing.

* (ie. your servers still start up even if your ISDN link

* is temporarily down)

*/

err = -EADDRNOTAVAIL; // 设置错误返回值地址不可用EADDRNOTAVAIL

if (!net->ipv4.sysctl_ip_nonlocal_bind && // 不允许bind绑定非本地IP地址

!(inet->freebind || inet->transparent) && // 参考https://www.man7.org/linux/man-pages/man7/ip.7.html,IP_TRANSPARENT/IP_FREEBIND

addr->sin_addr.s_addr != htonl(INADDR_ANY) && // 非INADDR_ANY地址(0.0.0.0),0.0.0.0地址可以绑定,不需要检查0.0.0.0地址作用域

chk_addr_ret != RTN_LOCAL && // 可以绑定RTN_LOCAL、RTN_MULTICAST、RTN_BROADCAST路由类型的地址

chk_addr_ret != RTN_MULTICAST &&

chk_addr_ret != RTN_BROADCAST)

goto out; // RTN_LOCAL、RTN_MULTICAST、RTN_BROADCAST之外的路由类型地址不能绑定,跳转到out

snum = ntohs(addr->sin_port);

err = -EACCES;

if (snum && snum < PROT_SOCK && // 端口号不为0并且端口号小于PROT_SOCK(1024),绑定的端口号为公认端口

!ns_capable(net->user_ns, CAP_NET_BIND_SERVICE)) // namespace相关capability权限检查

goto out;

/* We keep a pair of addresses. rcv_saddr is the one

* used by hash lookups, and saddr is used for transmit.

*

* In the BSD API these are the same except where it

* would be illegal to use them (multicast/broadcast) in

* which case the sending device address is used.

*/

lock_sock(sk);

/* Check these errors (active socket, double bind). */

err = -EINVAL;

if (sk->sk_state != TCP_CLOSE || inet->inet_num) // 已经激活或者已经绑定的socket,不允许绑定

goto out_release_sock; // 跳转到out_release_sock

inet->inet_rcv_saddr = inet->inet_saddr = addr->sin_addr.s_addr; // 设置已经绑定的本地IP地址。接收数据时,作为条件的一部分查找数据所属的传输控制块。机械工业出版社《Linux内核源码剖析:TCP/IP实现(下册)》

if (chk_addr_ret == RTN_MULTICAST || chk_addr_ret == RTN_BROADCAST)

inet->inet_saddr = 0; /* Use device */ // 本地IP地址,发送时使用

/* Make sure we are allowed to bind here. */

if ((snum || !inet->bind_address_no_port) && // 绑定的端口不为0需要检查端口是否被占用,不允许在connect时候绑定端口(https://www.man7.org/linux/man-pages/man7/ip.7.html IP_BIND_ADDRESS_NO_PORT)

sk->sk_prot->get_port(sk, snum)) // 调用inet_csk_get_port绑定端口,如果端口为0,则自动分配一个可用端口进行绑定

inet->inet_saddr = inet->inet_rcv_saddr = 0;

err = -EADDRINUSE; // 端口被占用

goto out_release_sock; // 跳转到out_release_sock

if (inet->inet_rcv_saddr)

sk->sk_userlocks |= SOCK_BINDADDR_LOCK; // 已经绑定了本地地址

if (snum)

sk->sk_userlocks |= SOCK_BINDPORT_LOCK; // 已经绑定了本地端口

inet->inet_sport = htons(inet->inet_num); // 源端口

inet->inet_daddr = 0;

inet->inet_dport = 0;

sk_dst_reset(sk); // 重置socket路由缓存

err = 0;

out_release_sock:

release_sock(sk);

out:

return err;

1.2、端口获取(inet_csk_get_port)

如果没有指定端口,inet_csk_get_port动态分配一个端口, 并检测端口冲突,如果有指定端口,inet_csk_get_port检测端口冲突;端口地址复用参考https://www.man7.org/linux/man-pages/man7/socket.7.html中的SO_REUSEADDR、SO_REUSEPORT以及人民邮电出版社《UNIX网络编程 卷1:套接字联网API(第3版)》"7.5.11 SO_REUSEADDR 和 SO_REUSEPORT 套接字选项"。

- SO_REUSEADDR

Indicates that the rules used in validating addresses supplied in a bind(2) call should allow reuse of local addresses. For AF_INET sockets this means that a socket may bind, except when there is an active listening socket bound to the address. When the listening socket is bound to INADDR_ANY with a specific port then it is not possible to bind to this port for any local address. Argument is an integer boolean flag.

- SO_REUSEPORT (since Linux 3.9)

Permits multiple AF_INET or AF_INET6 sockets to be bound to an identical socket address. This option must be set on each socket (including the first socket) prior to calling bind(2) on the socket. To prevent port hijacking, all of the processes binding to the same address must have the same effective UID. This option can be employed with both TCP and UDP sockets.

For TCP sockets, this option allows accept(2) load distribution in a multi-threaded server to be improved by using a distinct listener socket for each thread. This provides improved load distribution as compared to traditional techniques such using a single accept(2)ing thread that distributes connections, or having multiple threads that compete to accept(2) from the same socket.

For UDP sockets, the use of this option can provide better distribution of incoming datagrams to multiple processes(or threads) as compared to the traditional technique of having multiple processes compete to receive datagrams on the same socket.

动态分配的本地端口范围比较大,可以用如下命令缩小本地端口范围,然后动态绑定两个端口就可以占用所有本地端口,写代码就容易构造各种条件的冲突场景,使用gdb在内核相关函数设置断点即可跟踪各种状态的处理流程,场景比较多,所以构造各种场景,使用gdb调试代码更容易理解观察各种处理流程:

echo 32768 32768 >/proc/sys/net/ipv4/ip_local_port_rangeinet_csk_get_port函数实现如下:

int inet_csk_get_port(struct sock *sk, unsigned short snum)

struct inet_hashinfo *hashinfo = sk->sk_prot->h.hashinfo;

struct inet_bind_hashbucket *head;

struct inet_bind_bucket *tb;

int ret, attempts = 5; // 分配动态端口尝试次数

struct net *net = sock_net(sk);

int smallest_size = -1, smallest_rover;

kuid_t uid = sock_i_uid(sk);

int attempt_half = (sk->sk_reuse == SK_CAN_REUSE) ? 1 : 0; // 地址复用SO_REUSEADDR,从一半端口范围内尝试分配可用端口(空闲或者可复用的端口)

local_bh_disable();

if (!snum) // 端口号为0,动态分配一个端口

int remaining, rover, low, high;

again:

inet_get_local_port_range(net, &low, &high); // 获取本地端口的范围

if (attempt_half) // attempt_half不为0,从一半端口范围内尝试分配可用端口(空闲或者可复用的端口)

int half = low + ((high - low) >> 1); // 计算[low, high]区间的中间端口

if (attempt_half == 1) // 第1次尝试分配动态端口,先从[low, half]查找可用端口

high = half;

else // 第1次之后分配动态端口(attempts减到为0的时候,不再尝试分配动态端口),从[half, high]查找可用端口

low = half;

remaining = (high - low) + 1; // 剩余端口数[low: high],查找过程不是从low到high查找,而是从[low: high]中间的一个随机端口查找,查找到high之后再从low开始查找,remaining就用于记录查找剩余的次数,也就是还有多少端口没有查找

smallest_rover = rover = prandom_u32() % remaining + low; // 生成一个[low: high]之间的随机端口,从rover开始往大的端口开始查找,查找[rover: high]区间,查找到high之后,再查找[low: rover)区间

smallest_size = -1; // 记录最少owners的可以复用的端口(地址/端口复用),也就是在复用端口/地址的情况下,优先复用owners最少的地址/端口,避免有的端口复用多有的端口复用少的情况

do

if (inet_is_local_reserved_port(net, rover)) // 是否是被预留的端口? sysctl_local_reserved_ports

goto next_nolock; // 不能使用/复用被预留的端口,跳转到next_nolock,检查下一个端口

head = &hashinfo->bhash[inet_bhashfn(net, rover,

hashinfo->bhash_size)]; // 调用inet_bhashfn计算rover端口的哈希值,根据哈希值找到rover端口所在的哈希链表表头(哈希值相同的端口存在一个链表里面,只需要查找对于端口哈希值所在链表即可)

spin_lock(&head->lock);

inet_bind_bucket_for_each(tb, &head->chain) // 遍历哈希链表,检查已绑定的rover端口,绑定的端口是否可以复用,是否存在冲突

if (net_eq(ib_net(tb), net) && tb->port == rover) // 在一个网络命名空间里面(不同网络命名空间,端口号不存在冲突),并且端口号与绑定的端口相同

if (((tb->fastreuse > 0 && // 地址可以被复用(非TCP_LISTEN状态会设置fastreuse为1,因此可以快速判断绑定的端口不处于监听状态)

sk->sk_reuse && // 地址可以被复用

sk->sk_state != TCP_LISTEN) || // sk处于非监听状态(占用端口及非占用端口的socket都处于非监听状态,可以复用地址)

(tb->fastreuseport > 0 && // 端口可以被复用(只有一个socket占用端口并且可以复用端口的情况下,会设置fastreuseport为1并记录占用端口的socket的fastuid,一般情况,uid相同即可复用端口)

sk->sk_reuseport && // 端口可以被复用

uid_eq(tb->fastuid, uid))) && // uid相同,相同的用户复用端口

(tb->num_owners < smallest_size || smallest_size == -1)) // 当前地址/端口可以复用的端口的owners数量小于之前记录的地址/端口可以复用的端口的owners数量,当前地址/端口可以复用的端口是第一个可以复用的,更新记录owners最少的地址/端口可以复用的端口及端口的owners数量,尽量分配owners最少的可用端口

smallest_size = tb->num_owners; // 记录smallest_rover端口的owners数量

smallest_rover = rover; // 记录复用最少owners的端口smallest_rover

if (!inet_csk(sk)->icsk_af_ops->bind_conflict(sk, tb, false)) // 调用inet_csk_bind_conflict检查冲突,检测SO_REUSEADDR/SO_REUSEPORT相关选项及绑定状态是否可以地址/端口复用,relax为false情况,非监听状态socket也不允许地址相同(尽可能不使用相同的端口地址???)

snum = rover; // 不冲突,使用rover作为绑定端口

goto tb_found; // 跳转到tb_found(锁还没释放,rover绑定状态不会被改变)

goto next; // 地址冲突,跳转到next

break; // inet_bind_bucket_for_each循环结束,绑定端口里面没有找到rover端口

next:

spin_unlock(&head->lock); // 遍历所有端口时间太长,影响其他进程/线程获取端口,这里释放了自旋锁(遍历过了的绑定端口的状态可能会被其他线程/进程改变,可能变成不冲突,一次遍历没找到合适端口情况下,绑定的端口状态可能变化,需要再次遍历;smallest_rover端口绑定状态也可能被改变)

next_nolock:

if (++rover > high) // 查找下一个端口,下一个端口大于最大的端口,那么从最小的端口重新开始查找

rover = low; // 从low开始查找,查找[low: rover)区间 (这里的rover是随机初始化的rover,remaining控制查找次数,减去[rover: high]区间的查找次数就剩下[low: rover)区间的次数)

while (--remaining > 0);

/* Exhausted local port range during search? It is not

* possible for us to be holding one of the bind hash

* locks if this test triggers, because if 'remaining'

* drops to zero, we broke out of the do/while loop at

* the top level, not from the 'break;' statement.

*/

ret = 1;

if (remaining <= 0) // [low: high]都查找了,才会导致remaining <= 0,也就是[low: high]区间端口都被占用了且不能复用

if (smallest_size != -1) // 有可以复用的地址/端口

snum = smallest_rover; // 使用复用最少的端口作为动态分配的端口(smallest_rover严格冲突检测失败!!!)

goto have_snum;

if (attempt_half == 1) // 端口可以复用,attempt_half为1的时候只查找了低的一半端口,高的一半端口没有查找,需要再次查找高的一半端口

/* OK we now try the upper half of the range */

attempt_half = 2; // attempt_half设置为2,从高的一半端口查找

goto again; // 跳转到again,从高的一半端口查找是否有可用的端口

goto fail; // 没有可用的以及可以复用的端口,跳转到fail

/* OK, here is the one we will use. HEAD is

* non-NULL and we hold it's mutex.

*/

snum = rover; // remaining大于0,inet_bind_bucket_for_each循环没有找到冲突端口,循环之后调用了break,那么就使用rover作为动态分配的端口

else // 有指定绑定的端口或者动态分配smallest_rover端口(释放自旋锁期间,smallest_rover绑定状态可能被改变,需要再次进行冲突检测)

have_snum:

head = &hashinfo->bhash[inet_bhashfn(net, snum,

hashinfo->bhash_size)]; // 获取绑定端口所在的哈希链表表头

spin_lock(&head->lock); // 锁定链表,避免检测过程被其他进程/线程修改链表

inet_bind_bucket_for_each(tb, &head->chain)

if (net_eq(ib_net(tb), net) && tb->port == snum) // 该端口已经被绑定了

goto tb_found; // 指定的绑定端口/smallest_rover端口已经有被绑定(smallest_rover在释放互斥锁期间没有被释放或者又被其他进程线程绑定了),需要做冲突检测

tb = NULL;

goto tb_not_found; // 指定的绑定端口没有其他socket绑定,直接绑定该端口即可;smallest_rover之前绑定了,但是释放互斥锁期间被解绑了,那么也可以使用smallest_rover作为动态分配的绑定端口

tb_found: // snum端口已经被绑定了(此次链表还是被锁定状态)

if (!hlist_empty(&tb->owners))

if (sk->sk_reuse == SK_FORCE_REUSE)

goto success; // 强制复用端口,不管是否冲突,都强制绑定该端口,跳转到success

if (((tb->fastreuse > 0 && // 1.1、地址复用(只有一个socket绑定端口并且处于非监听状态)

sk->sk_reuse && sk->sk_state != TCP_LISTEN) || // 1.2、sk处于非监听状态(已经绑定的socket也处于非监听状态),非监听状态可以复用相同的地址,那么不需要冲突检测

(tb->fastreuseport > 0 && // 2.1、端口复用(只有一个socket绑定了端口,只需要快速检测UID即可)

sk->sk_reuseport && uid_eq(tb->fastuid, uid))) && // 2.2、sk端口复用并且与绑定端口的socket是同一个用户,那么不需要冲突检测

smallest_size == -1) // smallest_size为-1,要么是指定了绑定的端口,地址或者端口可以快速复用即可绑定该端口,要么就是没有可以快速复用的地址/端口(端口相同,没有满足fastreuse/fastreuseport条件),满足严格检测条件,不管sk处于什么状态,都不会冲突,直接分配该端口即可

goto success;

else // 上面的if检查了可以快速复用的情况(检查比较少的条件就能直接判断是否可以复用),不能快速判断复用的情况,需要检测可能冲突的状态及地址,例如指定绑定端口复用地址有监听socket状态下,不能绑定监听的相同的地址,需要检测地址是否冲突

ret = 1;

if (inet_csk(sk)->icsk_af_ops->bind_conflict(sk, tb, true)) // 冲突检测(relax为true,严格检测非监听状态下的地址复用的地址不能相同,实际情况非监听状态地址是可以相同的,也就是放松检查条件可以地址相同,如果找不到严格不冲突的端口,那么也可以使用宽松不冲突的端口)

if (((sk->sk_reuse && sk->sk_state != TCP_LISTEN) || // 非地址复用、端口复用等情况下,不需要再次查找,一些可能查找到可用端口的情况下,才重新查找可用端口...

(tb->fastreuseport > 0 &&

sk->sk_reuseport && uid_eq(tb->fastuid, uid))) &&

smallest_size != -1 && --attempts >= 0) // 指定绑定端口情况下,smallest_size始终为-1,不能更换其他端口,所以有冲突就不能绑定该端口,不能跳到again分配动态端口;动态端口有两种情况,一种找到了严格检测地址端口不冲突的端口(这种情况下,链表被锁定了,绑定状态不会改变,再次宽松检查也不会冲突,不会走到这里),一种是没有找到严格检测地址端口不冲突的端口(使用快速复用的端口,这种情况查找过程会释放互斥锁,绑定端口的状态可能会变化,可能有可以用的端口,需要结合其他条件确定是否要尝试重新查找)

spin_unlock(&head->lock);

goto again; // 跳转到again,重新从随机端口开始查找可用端口

goto fail_unlock;

tb_not_found: // 地址端口不冲突

ret = 1;

if (!tb && (tb = inet_bind_bucket_create(hashinfo->bind_bucket_cachep,

net, head, snum)) == NULL) // 创建bucket

goto fail_unlock;

if (hlist_empty(&tb->owners)) // 第一次绑定该端口,根据sk检查地址端口是否可以快速复用

if (sk->sk_reuse && sk->sk_state != TCP_LISTEN) // 只检查当前sk的状态即可知道地址是否可以快速复用(不可以快速复用的情况需要检查比较多的条件)

tb->fastreuse = 1;

else

tb->fastreuse = 0;

if (sk->sk_reuseport) // 只检查当前sk的端口复用即可知道端口是否可以快速复用(不可以快速复用的情况需要检查比较多的条件)

tb->fastreuseport = 1;

tb->fastuid = uid;

else

tb->fastreuseport = 0;

else // 有其他owners,根据sk及之前的状态检查地址端口是否可以快速复用

if (tb->fastreuse &&

(!sk->sk_reuse || sk->sk_state == TCP_LISTEN))

tb->fastreuse = 0;

if (tb->fastreuseport &&

(!sk->sk_reuseport || !uid_eq(tb->fastuid, uid)))

tb->fastreuseport = 0;

success:

if (!inet_csk(sk)->icsk_bind_hash)

inet_bind_hash(sk, tb, snum); // 绑定端口,sk及端口添加到哈希表

WARN_ON(inet_csk(sk)->icsk_bind_hash != tb);

ret = 0;

fail_unlock:

spin_unlock(&head->lock);

fail:

local_bh_enable();

return ret;

1.3、绑定冲突检测

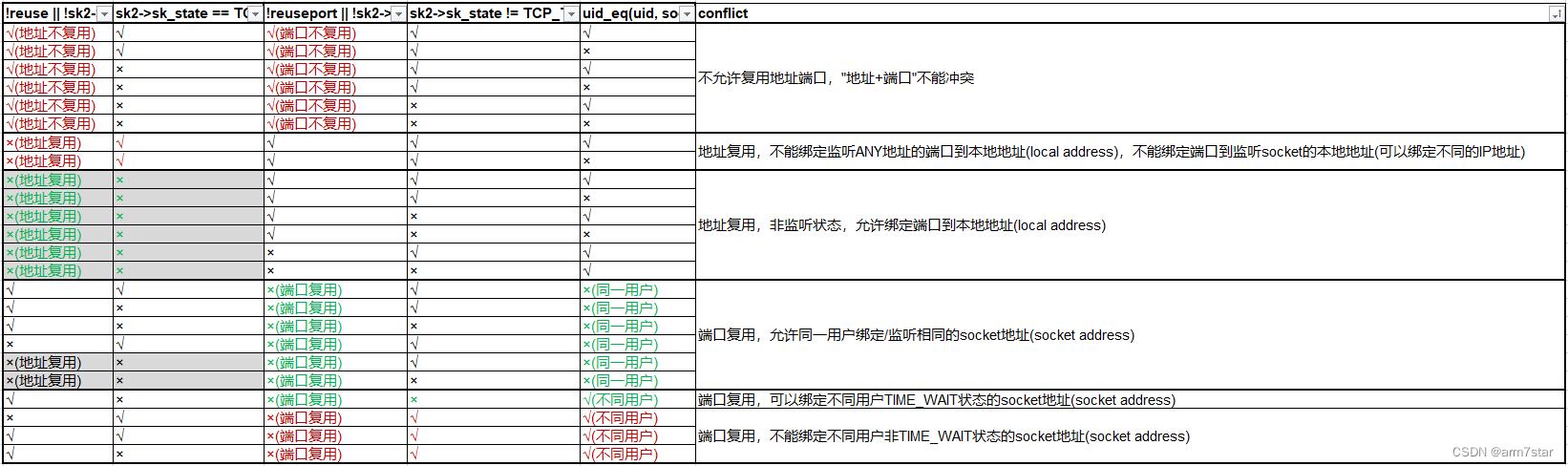

宽松冲突检测的各种状态:

inet_csk_bind_conflict就是根据各种各种选项及socket状态,检测这些组合条件下,地址端口是否可以复用,是否会冲突。

inet_csk_bind_conflict函数实现代码如下:

int inet_csk_bind_conflict(const struct sock *sk,

const struct inet_bind_bucket *tb, bool relax)

struct sock *sk2;

int reuse = sk->sk_reuse;

int reuseport = sk->sk_reuseport;

kuid_t uid = sock_i_uid((struct sock *)sk);

/*

* Unlike other sk lookup places we do not check

* for sk_net here, since _all_ the socks listed

* in tb->owners list belong to the same net - the

* one this bucket belongs to.

*/

sk_for_each_bound(sk2, &tb->owners) // 遍历owners,检查地址、状态是否冲突

if (sk != sk2 && // 比较不同的sk(绑定端口时会调用inet_csk_bind_conflict,此时sk不在owners里面;绑定完成之后,调用监听接口时,还会再次调用inet_csk_bind_conflict,只绑定端口不一定会冲突,但是如果要监听端口的话,监听状态下就可能冲突,此时sk在owners里面)

!inet_v6_ipv6only(sk2) &&

(!sk->sk_bound_dev_if || // 没有绑定网卡或者网卡相同,在绑定ANY地址的时候,内核可能选择相同的网卡,存在冲突的可能,需要检查冲突

!sk2->sk_bound_dev_if ||

sk->sk_bound_dev_if == sk2->sk_bound_dev_if))

if ((!reuse || !sk2->sk_reuse || // sk或者sk2没有设置地址复用

sk2->sk_state == TCP_LISTEN) && // 已绑定端口的sk2处于监听状态

(!reuseport || !sk2->sk_reuseport || // sk或者sk2没有设置端口复用

(sk2->sk_state != TCP_TIME_WAIT && // sk2处于非TCP_TIME_WAIT状态并且sk、sk2的uid不同(不同用户创建的socket)

!uid_eq(uid, sock_i_uid(sk2)))))

if (!sk2->sk_rcv_saddr || !sk->sk_rcv_saddr ||

sk2->sk_rcv_saddr == sk->sk_rcv_saddr)

break; // 地址相同冲突(地址相等或者存在ANY地址);1、地址端口不复用,不能绑定相同的地址端口;2、地址端口复用,不同用户不能绑定非TIME_WAIT的地址端口;3、地址复用、端口不复用,sk2正在监听该端口,如果sk2监听的是ANY地址,该端口不能绑定其他本地地址,如果sk、sk2地址相同(包括sk地址为ANY的情况),该端口也不能绑定;4、地址不复用、端口复用,不同用户不能绑定非TIME_WAIT的地址端口... 具体参考https://www.man7.org/linux/man-pages/man7/socket.7.html的SO_REUSEADDR、SO_REUSEPORT

if (!relax && reuse && sk2->sk_reuse && // 非relax检查(严格检查),地址复用情况下的非监听状态地址冲突检测,不允许地址相同

sk2->sk_state != TCP_LISTEN) // sk2不处于监听状态

if (!sk2->sk_rcv_saddr || !sk->sk_rcv_saddr ||

sk2->sk_rcv_saddr == sk->sk_rcv_saddr)

break;

return sk2 != NULL;

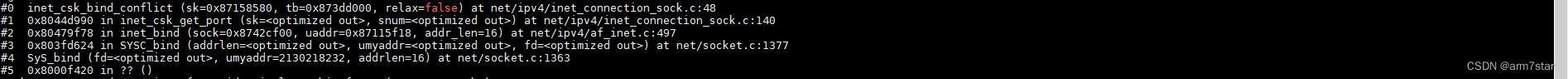

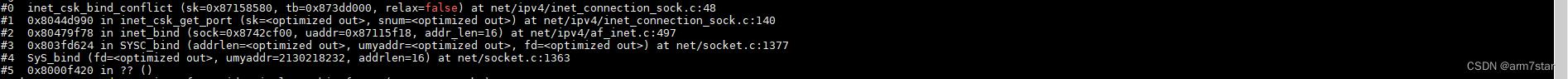

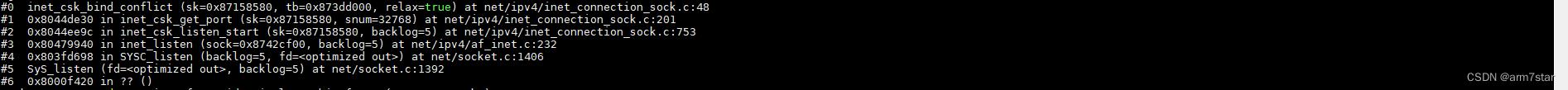

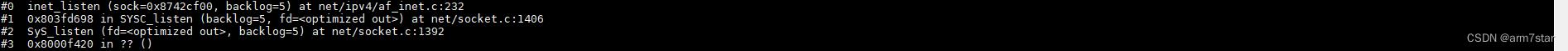

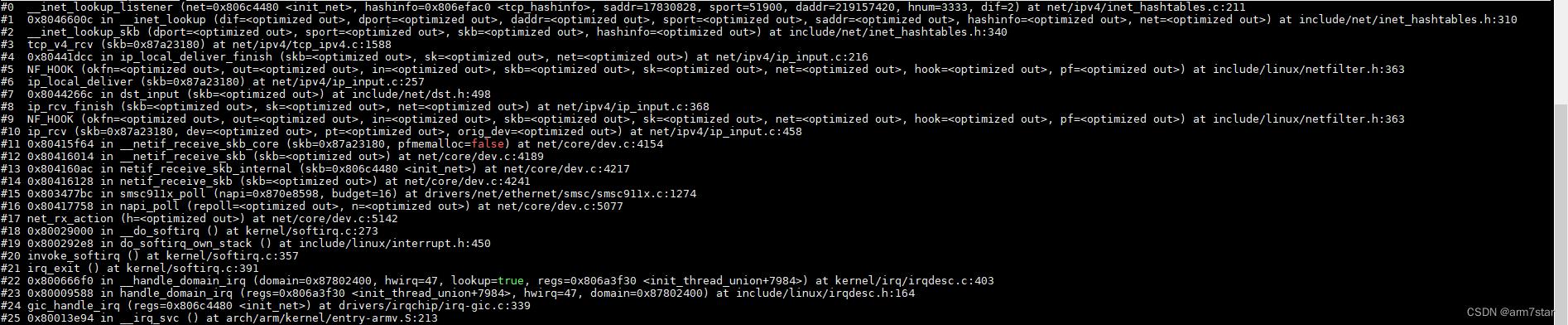

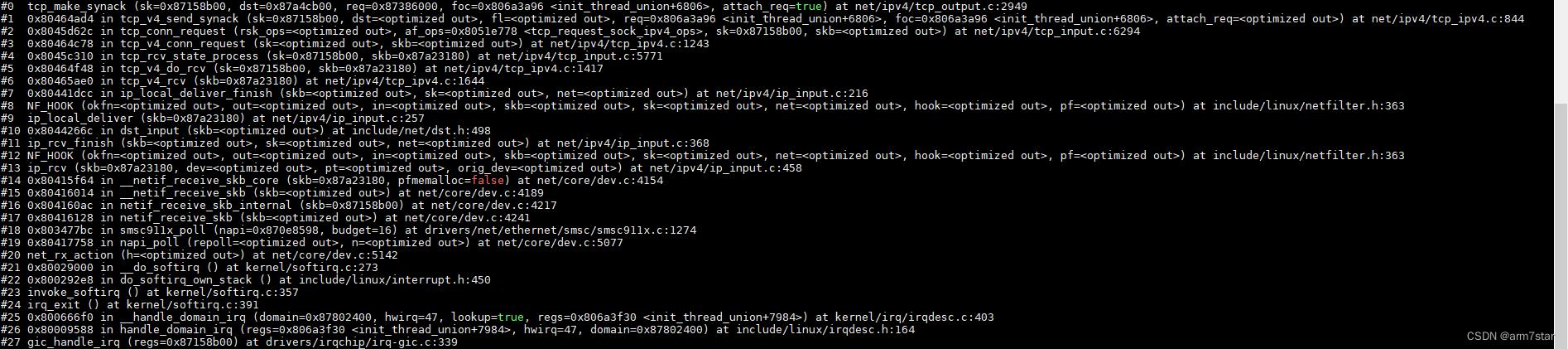

1.4、端口冲突检测调用栈

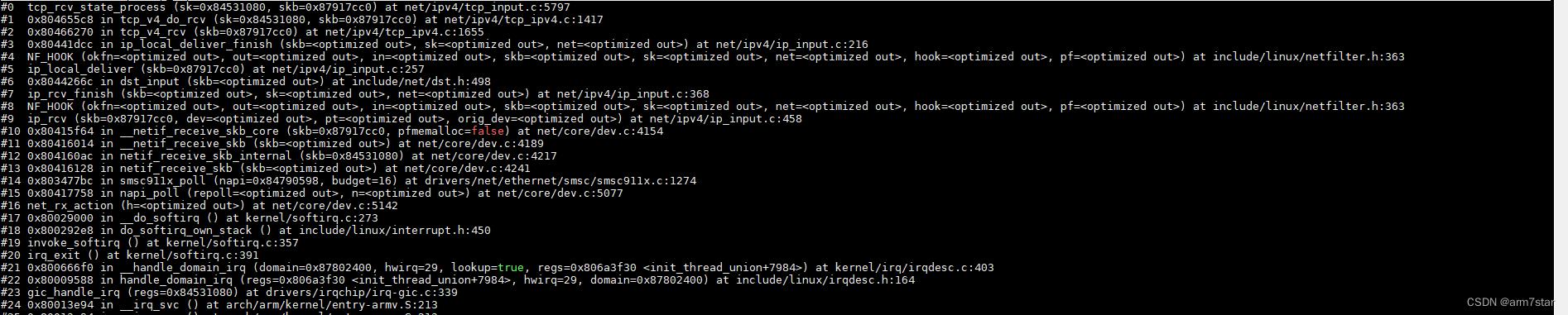

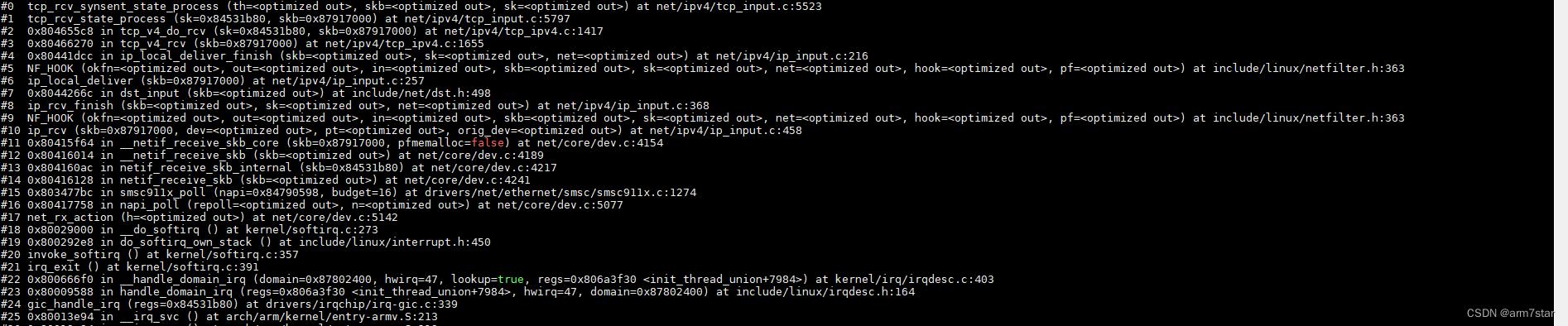

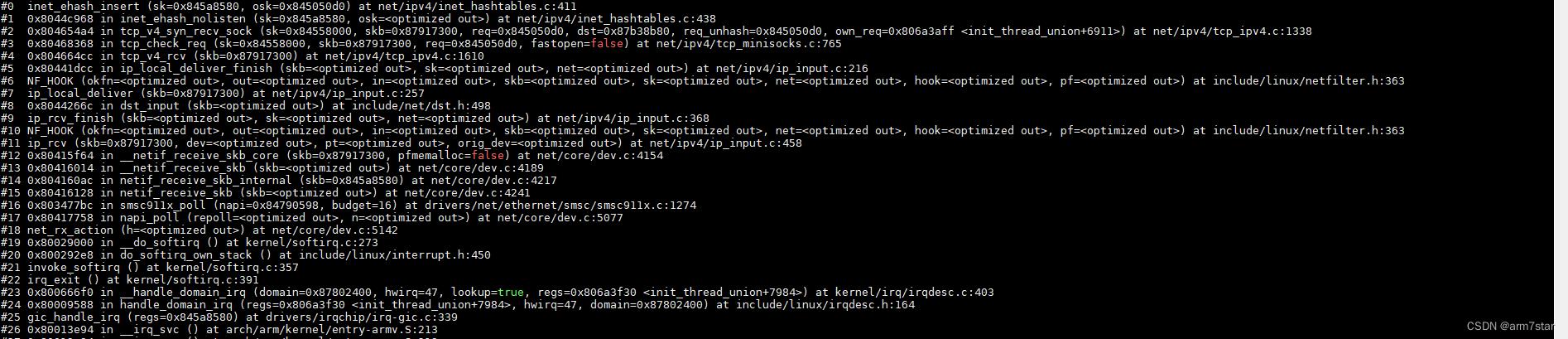

bind系统调用,分配动态端口时严格检测冲突调用栈:

bind系统调用,严格冲突检测找不到可用端口时,宽松冲突检测调用栈:

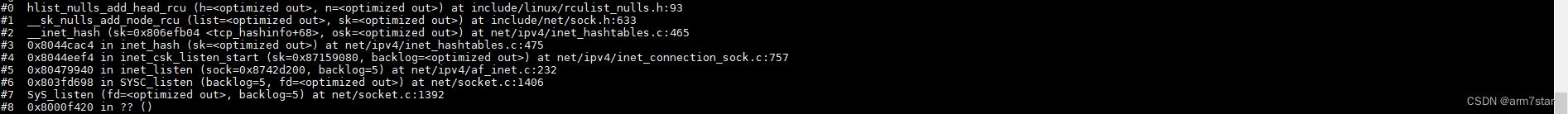

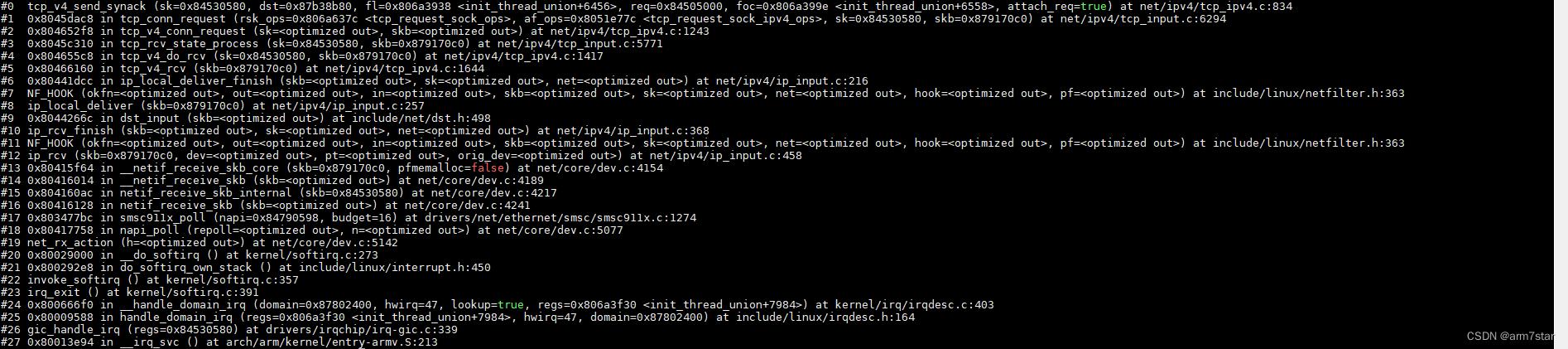

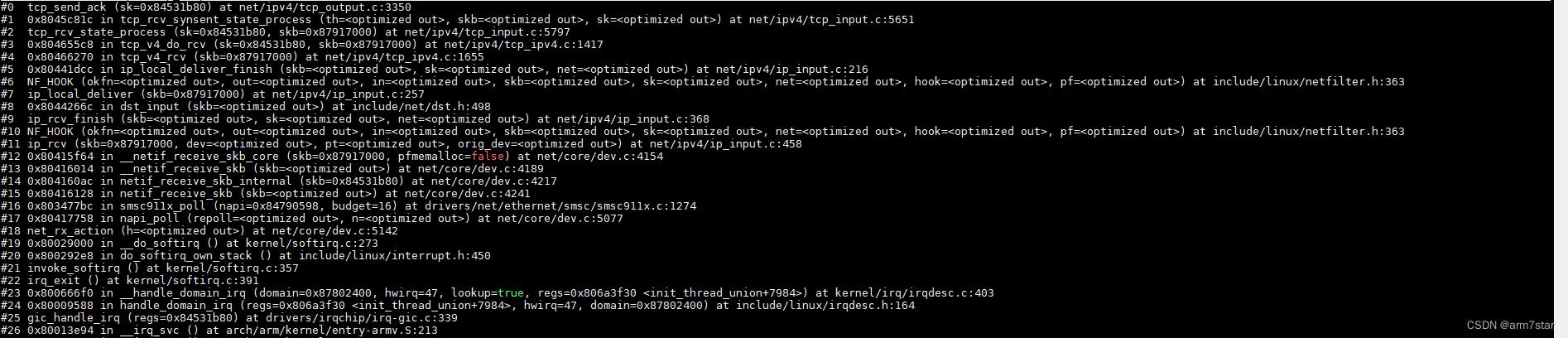

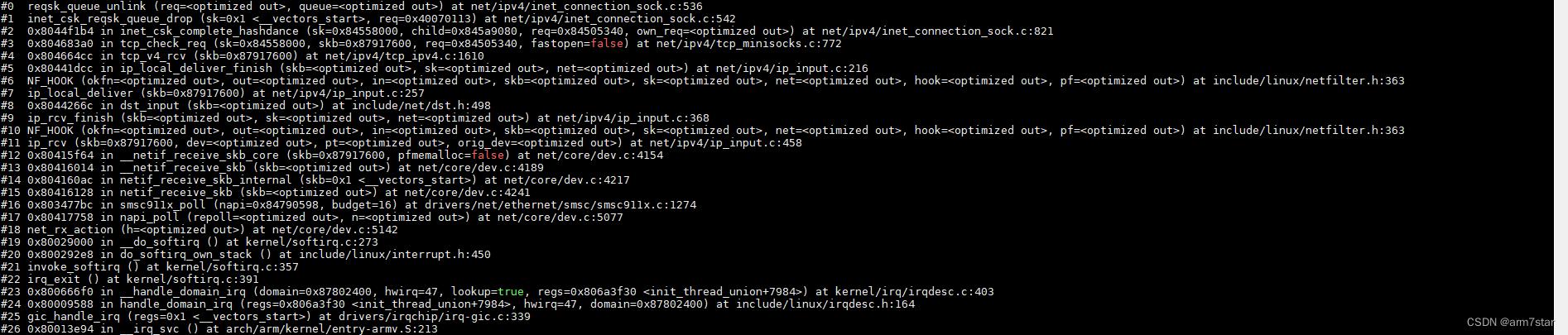

listen系统调用,冲突检测调用栈(绑定相同的地址端口不冲突,但是调用listen监听就可能导致绑定冲突了):

listen系统调用,冲突检测调用栈(绑定相同的地址端口不冲突,但是调用listen监听就可能导致绑定冲突了):

2、listen系统调用

2.1、listen系统调用(inet_listen)

inet_listen主要是检查协议类型、socket状态等,检查通过才调用inet_csk_listen_start开始监听。

inet_listen函数实现代码如下:

int inet_listen(struct socket *sock, int backlog)

struct sock *sk = sock->sk;

unsigned char old_state;

int err;

lock_sock(sk);

err = -EINVAL;

if (sock->state != SS_UNCONNECTED || sock->type != SOCK_STREAM)

goto out; // 不处于非连接状态或者非SOCK_STREAM类型的socket(例如UDP,无连接的协议不需要监听,不能调用listen),跳转到out返回错误

old_state = sk->sk_state;

if (!((1 << old_state) & (TCPF_CLOSE | TCPF_LISTEN))) // inet_csk_listen_start监听成功会设置TCP_LISTEN状态,tcp_close关闭socket的时候会设置TCP_CLOSE状态

goto out; // 监听及关闭状态下不能再次监听该socket,跳转到out返回错误

/* Really, if the socket is already in listen state

* we can only allow the backlog to be adjusted.

*/

if (old_state != TCP_LISTEN)

/* Check special setups for testing purpose to enable TFO w/o

* requiring TCP_FASTOPEN sockopt.

* Note that only TCP sockets (SOCK_STREAM) will reach here.

* Also fastopenq may already been allocated because this

* socket was in TCP_LISTEN state previously but was

* shutdown() (rather than close()).

*/

if ((sysctl_tcp_fastopen & TFO_SERVER_ENABLE) != 0 &&

!inet_csk(sk)->icsk_accept_queue.fastopenq.max_qlen) // TCP Fast Open: expediting web services,参考: https://lwn.net/Articles/508865/

if ((sysctl_tcp_fastopen & TFO_SERVER_WO_SOCKOPT1) != 0)

fastopen_queue_tune(sk, backlog);

else if ((sysctl_tcp_fastopen &

TFO_SERVER_WO_SOCKOPT2) != 0)

fastopen_queue_tune(sk,

((uint)sysctl_tcp_fastopen) >> 16);

tcp_fastopen_init_key_once(true);

err = inet_csk_listen_start(sk, backlog); // 调用inet_csk_listen_start实现监听

if (err)

goto out;

sk->sk_max_ack_backlog = backlog; // backlog参数(等待接受的连接达到backlog之后,不再接受更多连接,等连接被应用程序接受之后再接受更多连接)

err = 0;

out:

release_sock(sk);

return err;

inet_listen函数调用栈:

2.2、启动监听(inet_csk_listen_start)

(请求块相关数据结构参考机械工业出版社《Linux内核源码剖析:TCP/IP实现(下册)》,主要是存储连接的请求块、已连接未接收的请求块等...)

inet_csk_listen_start实现比较简单,主要是调用inet_csk_get_port进行地址端口冲突检测,如果冲突就返回地址使用中错误,如果不冲突,就将sk相关信息添加到监听哈希链表listening_hash里面,接收请求的时候,就可以从listening_hash链表找到监听的地址端口。

inet_csk_listen_start实现代码如下:

int inet_csk_listen_start(struct sock *sk, int backlog)

struct inet_connection_sock *icsk = inet_csk(sk);

struct inet_sock *inet = inet_sk(sk);

reqsk_queue_alloc(&icsk->icsk_accept_queue); // icsk_accept_queue初始化

sk->sk_max_ack_backlog = backlog; // backlog参数

sk->sk_ack_backlog = 0;

inet_csk_delack_init(sk); // icsk_ack初始化

/* There is race window here: we announce ourselves listening,

* but this transition is still not validated by get_port().

* It is OK, because this socket enters to hash table only

* after validation is complete.

*/

sk_state_store(sk, TCP_LISTEN); //设置sk为监听状态

if (!sk->sk_prot->get_port(sk, inet->inet_num)) // 调用inet_csk_get_port进行冲突检测,返回0不冲突

inet->inet_sport = htons(inet->inet_num);

sk_dst_reset(sk); // 路由项缓存重置

sk->sk_prot->hash(sk); // 调用inet_hash、__inet_hash将监听的sk信息添加到listening_hash链表里面,接收连接请求的时候,从__inet_lookup_listener查找监听的socket信息

return 0; // 返回0

sk->sk_state = TCP_CLOSE; // 端口地址冲突

return -EADDRINUSE; // 返回地址使用中错误

inet_csk_listen_start函数调用栈:

3、connect系统调用

3.1、tcp连接(tcp_v4_connect)

(细节参考机械工业出版社《Linux内核源码剖析:TCP IP实现(下册)“28.8.1 第一次握手: 发送SYN段”,新内核代码基本一致)

tcp_v4_connect主要检测目的地址类型等,设置源端口、目的端口、源地址、目的地址等,初始化tcp序号,查找设置路由,然后调用tcp_connect发送SYN报文。

tcp_v4_connect代码如下:

int tcp_v4_connect(struct sock *sk, struct sockaddr *uaddr, int addr_len) // sk: socket,uaddr: socket地址,addr_len: socket地址长度

struct sockaddr_in *usin = (struct sockaddr_in *)uaddr;

struct inet_sock *inet = inet_sk(sk);

struct tcp_sock *tp = tcp_sk(sk);

__be16 orig_sport, orig_dport;

__be32 daddr, nexthop;

struct flowi4 *fl4;

struct rtable *rt;

int err;

struct ip_options_rcu *inet_opt;

if (addr_len < sizeof(struct sockaddr_in)) // 目的地址长度是否有效

return -EINVAL;

if (usin->sin_family != AF_INET) // 协议族是否有效

return -EAFNOSUPPORT;

nexthop = daddr = usin->sin_addr.s_addr;

inet_opt = rcu_dereference_protected(inet->inet_opt,

sock_owned_by_user(sk));

if (inet_opt && inet_opt->opt.srr)

if (!daddr)

return -EINVAL;

nexthop = inet_opt->opt.faddr;

orig_sport = inet->inet_sport;

orig_dport = usin->sin_port;

fl4 = &inet->cork.fl.u.ip4;

rt = ip_route_connect(fl4, nexthop, inet->inet_saddr,

RT_CONN_FLAGS(sk), sk->sk_bound_dev_if,

IPPROTO_TCP,

orig_sport, orig_dport, sk); // 查找目的路由缓存

if (IS_ERR(rt))

err = PTR_ERR(rt);

if (err == -ENETUNREACH)

IP_INC_STATS(sock_net(sk), IPSTATS_MIB_OUTNOROUTES);

return err;

if (rt->rt_flags & (RTCF_MULTICAST | RTCF_BROADCAST)) // 目的路由缓存项为组播或广播类型

ip_rt_put(rt);

return -ENETUNREACH;

if (!inet_opt || !inet_opt->opt.srr) // 没有启用源路由选项

daddr = fl4->daddr;

if (!inet->inet_saddr) // 未设置传输控制块中的源地址

inet->inet_saddr = fl4->saddr;

sk_rcv_saddr_set(sk, inet->inet_saddr);

if (tp->rx_opt.ts_recent_stamp && inet->inet_daddr != daddr) // 传输控制块中的时间戳和目的地址已被使用过

/* Reset inherited state */

tp->rx_opt.ts_recent = 0;

tp->rx_opt.ts_recent_stamp = 0;

if (likely(!tp->repair))

tp->write_seq = 0;

if (tcp_death_row.sysctl_tw_recycle &&

!tp->rx_opt.ts_recent_stamp && fl4->daddr == daddr) // 启用了tcp_tw_recycle并接收过时间戳选项

tcp_fetch_timewait_stamp(sk, &rt->dst);

inet->inet_dport = usin->sin_port; // 目的地址及目标端口设置到传输控制块

sk_daddr_set(sk, daddr);

inet_csk(sk)->icsk_ext_hdr_len = 0;

if (inet_opt)

inet_csk(sk)->icsk_ext_hdr_len = inet_opt->opt.optlen;

tp->rx_opt.mss_clamp = TCP_MSS_DEFAULT;

/* Socket identity is still unknown (sport may be zero).

* However we set state to SYN-SENT and not releasing socket

* lock select source port, enter ourselves into the hash tables and

* complete initialization after this.

*/

tcp_set_state(sk, TCP_SYN_SENT); // 状态设置为SYN_SENT

err = inet_hash_connect(&tcp_death_row, sk);

if (err)

goto failure;

sk_set_txhash(sk);

rt = ip_route_newports(fl4, rt, orig_sport, orig_dport,

inet->inet_sport, inet->inet_dport, sk); // 如果源端口或目的端口发生改变,则需要重新查找路由

if (IS_ERR(rt))

err = PTR_ERR(rt);

rt = NULL;

goto failure;

/* OK, now commit destination to socket. */

sk->sk_gso_type = SKB_GSO_TCPV4; // 设置GSO类型和输出设备特性

sk_setup_caps(sk, &rt->dst);

if (!tp->write_seq && likely(!tp->repair)) // 传输控制块还未初始序号

tp->write_seq = secure_tcp_sequence_number(inet->inet_saddr,

inet->inet_daddr,

inet->inet_sport,

usin->sin_port);

inet->inet_id = tp->write_seq ^ jiffies;

err = tcp_connect(sk); // 构造并发送SYN段

rt = NULL;

if (err)

goto failure;

return 0;

failure:

/*

* This unhashes the socket and releases the local port,

* if necessary.

*/

tcp_set_state(sk, TCP_CLOSE);

ip_rt_put(rt);

sk->sk_route_caps = 0;

inet->inet_dport = 0;

return err;

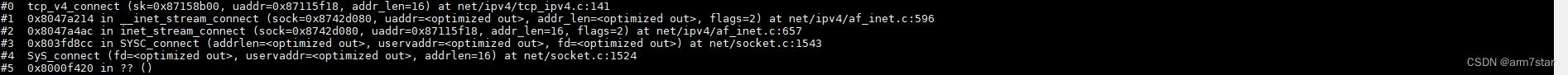

tcp_v4_connect函数调用栈:

3.2、客户端发送SYN报文(tcp_connect)

tcp_connect调用tcp_connect_init初始化传输控制块相关数据(发送队列、发送窗口、序号等),调用tcp_connect_queue_skb将SYN报文添加到发送队列,调用tcp_transmit_skb填充TCP协议各字段,然后调用ip_queue_xmit发送报文到网络层,发送之后,调用inet_csk_reset_xmit_timer重置启动超时重传定时器。

tcp_connect函数代码实现如下:

int tcp_connect(struct sock *sk)

struct tcp_sock *tp = tcp_sk(sk);

struct sk_buff *buff;

int err;

tcp_connect_init(sk); // tcp协议相关初始化

if (unlikely(tp->repair))

tcp_finish_connect(sk, NULL);

return 0;

buff = sk_stream_alloc_skb(sk, 0, sk->sk_allocation, true);

if (unlikely(!buff))

return -ENOBUFS;

tcp_init_nondata_skb(buff, tp->write_seq++, TCPHDR_SYN); // 初始化SYN报文中的flags、seq等相关字段

tp->retrans_stamp = tcp_time_stamp;

tcp_connect_queue_skb(sk, buff); // SYN报文添加到发送队列sk_write_queue

tcp_ecn_send_syn(sk, buff);

/* Send off SYN; include data in Fast Open. */

err = tp->fastopen_req ? tcp_send_syn_data(sk, buff) :

tcp_transmit_skb(sk, buff, 1, sk->sk_allocation); // 调用tcp_transmit_skb,设置TCP SYN报文首部的其他字段,并调用ip_queue_xmit发送到网络层

if (err == -ECONNREFUSED)

return err;

/* We change tp->snd_nxt after the tcp_transmit_skb() call

* in order to make this packet get counted in tcpOutSegs.

*/

tp->snd_nxt = tp->write_seq; // 更新下一个发送的报文序号

tp->pushed_seq = tp->write_seq; // 已经push到发送队列的最后一个序号

TCP_INC_STATS(sock_net(sk), TCP_MIB_ACTIVEOPENS);

/* Timer for repeating the SYN until an answer. */

inet_csk_reset_xmit_timer(sk, ICSK_TIME_RETRANS,

inet_csk(sk)->icsk_rto, TCP_RTO_MAX); // 重置重传次数(SYN报文超时需要重传,超时之后,tcp_xmit_retransmit_queue调用tcp_retransmit_skb重传发送队列里面的数据)

return 0;

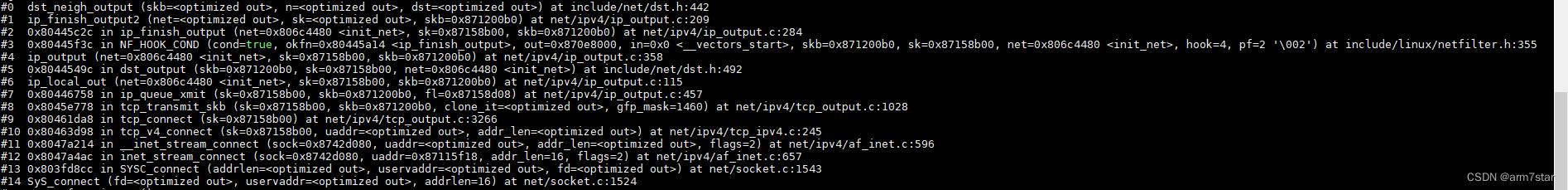

tcp_connect函数调用栈:

3.3、服务器接收SYN报文(tcp_v4_rcv)

第一次握手。

网卡收到数据,触发硬件中断,硬件中断服务程序触发软中断接收处理报文,网络层根据传输层协议类型,调用应用层的输入函数tcp_v4_rcv,tcp_v4_rcv调用__inet_lookup查找接收数据的socket,先调用__inet_lookup_established查找已建立连接的socket,相关的端口地址已建立连接的话,就不能再次建立连接,没有建立连接就调用__inet_lookup_listener检查是否有监听的socket,最后返回处理报文的socket,对于监听状态的socket,调用tcp_v4_do_rcv处理请求报文。

tcp_v4_rcv代码实现:

int tcp_v4_rcv(struct sk_buff *skb)

const struct iphdr *iph;

const struct tcphdr *th;

struct sock *sk;

int ret;

struct net *net = dev_net(skb->dev);

if (skb->pkt_type != PACKET_HOST)

goto discard_it;

/* Count it even if it's bad */

TCP_INC_STATS_BH(net, TCP_MIB_INSEGS);

if (!pskb_may_pull(skb, sizeof(struct tcphdr)))

goto discard_it;

th = tcp_hdr(skb);

if (th->doff < sizeof(struct tcphdr) / 4)

goto bad_packet;

if (!pskb_may_pull(skb, th->doff * 4))

goto discard_it;

/* An explanation is required here, I think.

* Packet length and doff are validated by header prediction,

* provided case of th->doff==0 is eliminated.

* So, we defer the checks. */

if (skb_checksum_init(skb, IPPROTO_TCP, inet_compute_pseudo))

goto csum_error;

th = tcp_hdr(skb);

iph = ip_hdr(skb);

/* This is tricky : We move IPCB at its correct location into TCP_SKB_CB()

* barrier() makes sure compiler wont play fool^Waliasing games.

*/

memmove(&TCP_SKB_CB(skb)->header.h4, IPCB(skb),

sizeof(struct inet_skb_parm));

barrier();

TCP_SKB_CB(skb)->seq = ntohl(th->seq);

TCP_SKB_CB(skb)->end_seq = (TCP_SKB_CB(skb)->seq + th->syn + th->fin +

skb->len - th->doff * 4);

TCP_SKB_CB(skb)->ack_seq = ntohl(th->ack_seq);

TCP_SKB_CB(skb)->tcp_flags = tcp_flag_byte(th);

TCP_SKB_CB(skb)->tcp_tw_isn = 0;

TCP_SKB_CB(skb)->ip_dsfield = ipv4_get_dsfield(iph);

TCP_SKB_CB(skb)->sacked = 0;

lookup:

sk = __inet_lookup_skb(&tcp_hashinfo, skb, th->source, th->dest); // 查找目的socket

if (!sk)

goto no_tcp_socket;

process:

if (sk->sk_state == TCP_TIME_WAIT)

goto do_time_wait; // TIME_WAIT状态,不接收数据

if (sk->sk_state == TCP_NEW_SYN_RECV)

struct request_sock *req = inet_reqsk(sk);

struct sock *nsk;

sk = req->rsk_listener;

if (unlikely(tcp_v4_inbound_md5_hash(sk, skb)))

reqsk_put(req);

goto discard_it;

if (unlikely(sk->sk_state != TCP_LISTEN))

inet_csk_reqsk_queue_drop_and_put(sk, req);

goto lookup;

sock_hold(sk);

nsk = tcp_check_req(sk, skb, req, false);

if (!nsk)

reqsk_put(req);

goto discard_and_relse;

if (nsk == sk)

reqsk_put(req);

else if (tcp_child_process(sk, nsk, skb))

tcp_v4_send_reset(nsk, skb);

goto discard_and_relse;

else

sock_put(sk);

return 0;

if (unlikely(iph->ttl < inet_sk(sk)->min_ttl)) // 丢弃TTL生存时间小于min_ttl的报文

NET_INC_STATS_BH(net, LINUX_MIB_TCPMINTTLDROP);

goto discard_and_relse;

if (!xfrm4_policy_check(sk, XFRM_POLICY_IN, skb))

goto discard_and_relse;

if (tcp_v4_inbound_md5_hash(sk, skb))

goto discard_and_relse;

nf_reset(skb);

if (sk_filter(sk, skb)) // filter过滤收到的报文,丢弃该报文

goto discard_and_relse;

skb->dev = NULL;

if (sk->sk_state == TCP_LISTEN) // 监听状态的socket,调用tcp_v4_do_rcv处理连接请求报文

ret = tcp_v4_do_rcv(sk, skb);

goto put_and_return;

sk_incoming_cpu_update(sk);

bh_lock_sock_nested(sk);

tcp_sk(sk)->segs_in += max_t(u16, 1, skb_shinfo(skb)->gso_segs);

ret = 0;

if (!sock_owned_by_user(sk))

if (!tcp_prequeue(sk, skb))

ret = tcp_v4_do_rcv(sk, skb);

else if (unlikely(sk_add_backlog(sk, skb,

sk->sk_rcvbuf + sk->sk_sndbuf)))

bh_unlock_sock(sk);

NET_INC_STATS_BH(net, LINUX_MIB_TCPBACKLOGDROP);

goto discard_and_relse;

bh_unlock_sock(sk);

put_and_return:

sock_put(sk);

return ret;

no_tcp_socket:

if (!xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb))

goto discard_it;

if (tcp_checksum_complete(skb)) // 错误的报文,不发送RST

csum_error:

TCP_INC_STATS_BH(net, TCP_MIB_CSUMERRORS);

bad_packet:

TCP_INC_STATS_BH(net, TCP_MIB_INERRS);

else // 非错误的报文,本地没有对应的监听/接收的socket,发送RST重置报文,应用层根据RST错误可以知道对端可达(在ceph里面,如果收到RST,很可能目的地址错误或者服务还没起来,那么不需要立即重连,等更新目的地址或者隔段时间再次连接,此时可能获取到了正确的目的地址或者要连接的服务已经起来了)

tcp_v4_send_reset(NULL, skb); // 发送RST报文

discard_it:

/* Discard frame. */

kfree_skb(skb);

return 0;

discard_and_relse:

sock_put(sk);

goto discard_it;

do_time_wait:

if (!xfrm4_policy_check(NULL, XFRM_POLICY_IN, skb))

inet_twsk_put(inet_twsk(sk));

goto discard_it;

if (tcp_checksum_complete(skb))

inet_twsk_put(inet_twsk(sk));

goto csum_error;

switch (tcp_timewait_state_process(inet_twsk(sk), skb, th))

case TCP_TW_SYN:

struct sock *sk2 = inet_lookup_listener(dev_net(skb->dev),

&tcp_hashinfo,

iph->saddr, th->source,

iph->daddr, th->dest,

inet_iif(skb));

if (sk2)

inet_twsk_deschedule_put(inet_twsk(sk));

sk = sk2;

goto process;

/* Fall through to ACK */

case TCP_TW_ACK:

tcp_v4_timewait_ack(sk, skb);

break;

case TCP_TW_RST:

tcp_v4_send_reset(sk, skb);

inet_twsk_deschedule_put(inet_twsk(sk));

goto discard_it;

case TCP_TW_SUCCESS:;

goto discard_it;

tcp_v4_rcv查找监听socket调用栈:

3.4、SYN报文处理及发送SYN+ACK报文(tcp_rcv_state_process)

第二次握手。

tcp_v4_do_rcv最终调用tcp_rcv_state_process处理各种状态的输入,对于监听状态的socket,检查过滤掉各种不合法的标志的报文,只处理SYN标志的报文;细节参考机械工业出版社《Linux内核源码剖析:TCP/IP实现(下册)》P788。

tcp_rcv_state_process函数代码如下:

int tcp_rcv_state_process(struct sock *sk, struct sk_buff *skb)

struct tcp_sock *tp = tcp_sk(sk);

struct inet_connection_sock *icsk = inet_csk(sk);

const struct tcphdr *th = tcp_hdr(skb);

struct request_sock *req;

int queued = 0;

bool acceptable;

tp->rx_opt.saw_tstamp = 0;

switch (sk->sk_state)

case TCP_CLOSE:

goto discard; // 直接丢弃CLOSE状态报文

case TCP_LISTEN:

if (th->ack) // 有ACK标志位(连接请求的第一次握手不带ACK,带ACK的报文肯定不是合法的第一次握手SYN报文,不处理,返回-1即可)

return 1;

if (th->rst) // 有RST标志位(连接请求的第一次握手不带RST,带RST的报文肯定不是合法的第一次握手SYN报文,不处理,直接丢弃)

goto discard;

if (th->syn) // SYN报文

if (th->fin) // 设置了FIN标志,丢弃报文(第一次握手不可能有FIN标志)

goto discard;

if (icsk->icsk_af_ops->conn_request(sk, skb) < 0) // 调用tcp_v4_conn_request,添加到conn_request(有太多请求中的连接的话,可能被丢弃,失败的话返回-1),并发送SYN+ACK应答报文

return 1;

/* Now we have several options: In theory there is

* nothing else in the frame. KA9Q has an option to

* send data with the syn, BSD accepts data with the

* syn up to the [to be] advertised window and

* Solaris 2.1 gives you a protocol error. For now

* we just ignore it, that fits the spec precisely

* and avoids incompatibilities. It would be nice in

* future to drop through and process the data.

*

* Now that TTCP is starting to be used we ought to

* queue this data.

* But, this leaves one open to an easy denial of

* service attack, and SYN cookies can't defend

* against this problem. So, we drop the data

* in the interest of security over speed unless

* it's still in use.

*/

kfree_skb(skb);

return 0;

goto discard; // 非SYN报文,直接丢弃

case TCP_SYN_SENT:

queued = tcp_rcv_synsent_state_process(sk, skb, th);

if (queued >= 0)

return queued;

/* Do step6 onward by hand. */

tcp_urg(sk, skb, th);

__kfree_skb(skb);

tcp_data_snd_check(sk);

return 0;

req = tp->fastopen_rsk;

if (req)

WARN_ON_ONCE(sk->sk_state != TCP_SYN_RECV &&

sk->sk_state != TCP_FIN_WAIT1);

if (!tcp_check_req(sk, skb, req, true))

goto discard;

if (!th->ack && !th->rst && !th->syn)

goto discard;

if (!tcp_validate_incoming(sk, skb, th, 0))

return 0;

/* step 5: check the ACK field */

acceptable = tcp_ack(sk, skb, FLAG_SLOWPATH |

FLAG_UPDATE_TS_RECENT) > 0;

switch (sk->sk_state)

case TCP_SYN_RECV:

if (!acceptable)

return 1;

if (!tp->srtt_us)

tcp_synack_rtt_meas(sk, req);

/* Once we leave TCP_SYN_RECV, we no longer need req

* so release it.

*/

if (req)

tp->total_retrans = req->num_retrans;

reqsk_fastopen_remove(sk, req, false);

else

/* Make sure socket is routed, for correct metrics. */

icsk->icsk_af_ops->rebuild_header(sk);

tcp_init_congestion_control(sk);

tcp_mtup_init(sk);

tp->copied_seq = tp->rcv_nxt;

tcp_init_buffer_space(sk);

smp_mb();

tcp_set_state(sk, TCP_ESTABLISHED);

sk->sk_state_change(sk);

/* Note, that this wakeup is only for marginal crossed SYN case.

* Passively open sockets are not waked up, because

* sk->sk_sleep == NULL and sk->sk_socket == NULL.

*/

if (sk->sk_socket)

sk_wake_async(sk, SOCK_WAKE_IO, POLL_OUT);

tp->snd_una = TCP_SKB_CB(skb)->ack_seq;

tp->snd_wnd = ntohs(th->window) << tp->rx_opt.snd_wscale;

tcp_init_wl(tp, TCP_SKB_CB(skb)->seq);

if (tp->rx_opt.tstamp_ok)

tp->advmss -= TCPOLEN_TSTAMP_ALIGNED;

if (req)

/* Re-arm the timer because data may have been sent out.

* This is similar to the regular data transmission case

* when new data has just been ack'ed.

*

* (TFO) - we could try to be more aggressive and

* retransmitting any data sooner based on when they

* are sent out.

*/

tcp_rearm_rto(sk);

else

tcp_init_metrics(sk);

tcp_update_pacing_rate(sk);

/* Prevent spurious tcp_cwnd_restart() on first data packet */

tp->lsndtime = tcp_time_stamp;

tcp_initialize_rcv_mss(sk);

tcp_fast_path_on(tp);

break;

case TCP_FIN_WAIT1:

struct dst_entry *dst;

int tmo;

/* If we enter the TCP_FIN_WAIT1 state and we are a

* Fast Open socket and this is the first acceptable

* ACK we have received, this would have acknowledged

* our SYNACK so stop the SYNACK timer.

*/

if (req)

/* Return RST if ack_seq is invalid.

* Note that RFC793 only says to generate a

* DUPACK for it but for TCP Fast Open it seems

* better to treat this case like TCP_SYN_RECV

* above.

*/

if (!acceptable)

return 1;

/* We no longer need the request sock. */

reqsk_fastopen_remove(sk, req, false);

tcp_rearm_rto(sk);

if (tp->snd_una != tp->write_seq)

break;

tcp_set_state(sk, TCP_FIN_WAIT2);

sk->sk_shutdown |= SEND_SHUTDOWN;

dst = __sk_dst_get(sk);

if (dst)

dst_confirm(dst);

if (!sock_flag(sk, SOCK_DEAD))

/* Wake up lingering close() */

sk->sk_state_change(sk);

break;

if (tp->linger2 < 0 ||

(TCP_SKB_CB(skb)->end_seq != TCP_SKB_CB(skb)->seq &&

after(TCP_SKB_CB(skb)->end_seq - th->fin, tp->rcv_nxt)))

tcp_done(sk);

NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPABORTONDATA);

return 1;

tmo = tcp_fin_time(sk);

if (tmo > TCP_TIMEWAIT_LEN)

inet_csk_reset_keepalive_timer(sk, tmo - TCP_TIMEWAIT_LEN);

else if (th->fin || sock_owned_by_user(sk))

/* Bad case. We could lose such FIN otherwise.

* It is not a big problem, but it looks confusing

* and not so rare event. We still can lose it now,

* if it spins in bh_lock_sock(), but it is really

* marginal case.

*/

inet_csk_reset_keepalive_timer(sk, tmo);

else

tcp_time_wait(sk, TCP_FIN_WAIT2, tmo);

goto discard;

break;

case TCP_CLOSING:

if (tp->snd_una == tp->write_seq)

tcp_time_wait(sk, TCP_TIME_WAIT, 0);

goto discard;

break;

case TCP_LAST_ACK:

if (tp->snd_una == tp->write_seq)

tcp_update_metrics(sk);

tcp_done(sk);

goto discard;

break;

/* step 6: check the URG bit */

tcp_urg(sk, skb, th);

/* step 7: process the segment text */

switch (sk->sk_state)

case TCP_CLOSE_WAIT:

case TCP_CLOSING:

case TCP_LAST_ACK:

if (!before(TCP_SKB_CB(skb)->seq, tp->rcv_nxt))

break;

case TCP_FIN_WAIT1:

case TCP_FIN_WAIT2:

/* RFC 793 says to queue data in these states,

* RFC 1122 says we MUST send a reset.

* BSD 4.4 also does reset.

*/

if (sk->sk_shutdown & RCV_SHUTDOWN)

if (TCP_SKB_CB(skb)->end_seq != TCP_SKB_CB(skb)->seq &&

after(TCP_SKB_CB(skb)->end_seq - th->fin, tp->rcv_nxt))

NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPABORTONDATA);

tcp_reset(sk);

return 1;

/* Fall through */

case TCP_ESTABLISHED:

tcp_data_queue(sk, skb);

queued = 1;

break;

/* tcp_data could move socket to TIME-WAIT */

if (sk->sk_state != TCP_CLOSE)

tcp_data_snd_check(sk);

tcp_ack_snd_check(sk);

if (!queued)

discard:

__kfree_skb(skb);

return 0;

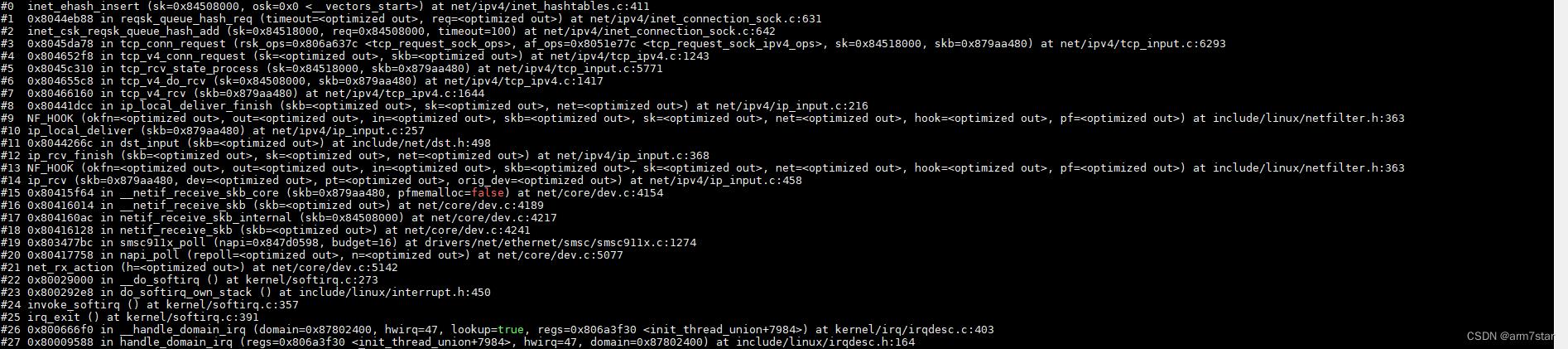

tcp_conn_request调用inet_reqsk_alloc分配连接请求块(TCP_NEW_SYN_RECV),调用inet_csk_reqsk_queue_hash_add、inet_ehash_insert将请求控制块加入到全局的ehash散列表中(__inet_lookup_established从里面查找socket),调用tcp_v4_send_synack构造发送SYN+ACK报文。

构建SYN+ACK报文调用栈:

发送SYN+ACK报文调用栈:

inet_ehash_insert调用栈:

3.5、客户端接收SYN+ACK发送ACK(tcp_rcv_state_process)

第三次握手。

网卡收到TCP报文,调用tcp_v4_rcv、tcp_v4_do_rcv、tcp_rcv_state_process处理SYN+ACK报文,客户端在TCP_SYN_SENT状态,处理第二次握手的SYN+ACK报文,调用tcp_rcv_synsent_state_process处理报文,初始化发送窗口,调用tcp_finish_connect完成连接(设置socket状态为TCP_ESTABLISHED,如果有等待socket的线程,唤醒该线程),不延迟发送ACK的情况下,调用tcp_send_ack发送第三次握手的ACK报文。

tcp_rcv_state_process函数代码如下:

int tcp_rcv_state_process(struct sock *sk, struct sk_buff *skb)

struct tcp_sock *tp = tcp_sk(sk);

struct inet_connection_sock *icsk = inet_csk(sk);

const struct tcphdr *th = tcp_hdr(skb);

struct request_sock *req;

int queued = 0;

bool acceptable;

tp->rx_opt.saw_tstamp = 0;

switch (sk->sk_state)

case TCP_CLOSE:

goto discard;

case TCP_LISTEN:

if (th->ack)

return 1;

if (th->rst)

goto discard;

if (th->syn)

if (th->fin)

goto discard;

if (icsk->icsk_af_ops->conn_request(sk, skb) < 0)

return 1;

/* Now we have several options: In theory there is

* nothing else in the frame. KA9Q has an option to

* send data with the syn, BSD accepts data with the

* syn up to the [to be] advertised window and

* Solaris 2.1 gives you a protocol error. For now

* we just ignore it, that fits the spec precisely

* and avoids incompatibilities. It would be nice in

* future to drop through and process the data.

*

* Now that TTCP is starting to be used we ought to

* queue this data.

* But, this leaves one open to an easy denial of

* service attack, and SYN cookies can't defend

* against this problem. So, we drop the data

* in the interest of security over speed unless

* it's still in use.

*/

kfree_skb(skb);

return 0;

goto discard;

case TCP_SYN_SENT: // SYN_SENT状态

queued = tcp_rcv_synsent_state_process(sk, skb, th); // 调用tcp_rcv_synsent_state_process处理第二次握手报文

if (queued >= 0)

return queued;

/* Do step6 onward by hand. */

tcp_urg(sk, skb, th);

__kfree_skb(skb);

tcp_data_snd_check(sk); // 收到第二次握手报文之后,就可以开始发送缓存里面的数据

return 0;

req = tp->fastopen_rsk;

if (req)

WARN_ON_ONCE(sk->sk_state != TCP_SYN_RECV &&

sk->sk_state != TCP_FIN_WAIT1);

if (!tcp_check_req(sk, skb, req, true))

goto discard;

if (!th->ack && !th->rst && !th->syn)

goto discard;

if (!tcp_validate_incoming(sk, skb, th, 0))

return 0;

/* step 5: check the ACK field */

acceptable = tcp_ack(sk, skb, FLAG_SLOWPATH |

FLAG_UPDATE_TS_RECENT) > 0;

switch (sk->sk_state)

case TCP_SYN_RECV:

if (!acceptable)

return 1;

if (!tp->srtt_us)

tcp_synack_rtt_meas(sk, req);

/* Once we leave TCP_SYN_RECV, we no longer need req

* so release it.

*/

if (req)

tp->total_retrans = req->num_retrans;

reqsk_fastopen_remove(sk, req, false);

else

/* Make sure socket is routed, for correct metrics. */

icsk->icsk_af_ops->rebuild_header(sk);

tcp_init_congestion_control(sk);

tcp_mtup_init(sk);

tp->copied_seq = tp->rcv_nxt;

tcp_init_buffer_space(sk);

smp_mb();

tcp_set_state(sk, TCP_ESTABLISHED);

sk->sk_state_change(sk);

/* Note, that this wakeup is only for marginal crossed SYN case.

* Passively open sockets are not waked up, because

* sk->sk_sleep == NULL and sk->sk_socket == NULL.

*/

if (sk->sk_socket)

sk_wake_async(sk, SOCK_WAKE_IO, POLL_OUT);

tp->snd_una = TCP_SKB_CB(skb)->ack_seq;

tp->snd_wnd = ntohs(th->window) << tp->rx_opt.snd_wscale;

tcp_init_wl(tp, TCP_SKB_CB(skb)->seq);

if (tp->rx_opt.tstamp_ok)

tp->advmss -= TCPOLEN_TSTAMP_ALIGNED;

if (req)

/* Re-arm the timer because data may have been sent out.

* This is similar to the regular data transmission case

* when new data has just been ack'ed.

*

* (TFO) - we could try to be more aggressive and

* retransmitting any data sooner based on when they

* are sent out.

*/

tcp_rearm_rto(sk);

else

tcp_init_metrics(sk);

tcp_update_pacing_rate(sk);

/* Prevent spurious tcp_cwnd_restart() on first data packet */

tp->lsndtime = tcp_time_stamp;

tcp_initialize_rcv_mss(sk);

tcp_fast_path_on(tp);

break;

case TCP_FIN_WAIT1:

struct dst_entry *dst;

int tmo;

/* If we enter the TCP_FIN_WAIT1 state and we are a

* Fast Open socket and this is the first acceptable

* ACK we have received, this would have acknowledged

* our SYNACK so stop the SYNACK timer.

*/

if (req)

/* Return RST if ack_seq is invalid.

* Note that RFC793 only says to generate a

* DUPACK for it but for TCP Fast Open it seems

* better to treat this case like TCP_SYN_RECV

* above.

*/

if (!acceptable)

return 1;

/* We no longer need the request sock. */

reqsk_fastopen_remove(sk, req, false);

tcp_rearm_rto(sk);

if (tp->snd_una != tp->write_seq)

break;

tcp_set_state(sk, TCP_FIN_WAIT2);

sk->sk_shutdown |= SEND_SHUTDOWN;

dst = __sk_dst_get(sk);

if (dst)

dst_confirm(dst);

if (!sock_flag(sk, SOCK_DEAD))

/* Wake up lingering close() */

sk->sk_state_change(sk);

break;

if (tp->linger2 < 0 ||

(TCP_SKB_CB(skb)->end_seq != TCP_SKB_CB(skb)->seq &&

after(TCP_SKB_CB(skb)->end_seq - th->fin, tp->rcv_nxt)))

tcp_done(sk);

NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPABORTONDATA);

return 1;

tmo = tcp_fin_time(sk);

if (tmo > TCP_TIMEWAIT_LEN)

inet_csk_reset_keepalive_timer(sk, tmo - TCP_TIMEWAIT_LEN);

else if (th->fin || sock_owned_by_user(sk))

/* Bad case. We could lose such FIN otherwise.

* It is not a big problem, but it looks confusing

* and not so rare event. We still can lose it now,

* if it spins in bh_lock_sock(), but it is really

* marginal case.

*/

inet_csk_reset_keepalive_timer(sk, tmo);

else

tcp_time_wait(sk, TCP_FIN_WAIT2, tmo);

goto discard;

break;

case TCP_CLOSING:

if (tp->snd_una == tp->write_seq)

tcp_time_wait(sk, TCP_TIME_WAIT, 0);

goto discard;

break;

case TCP_LAST_ACK:

if (tp->snd_una == tp->write_seq)

tcp_update_metrics(sk);

tcp_done(sk);

goto discard;

break;

/* step 6: check the URG bit */

tcp_urg(sk, skb, th);

/* step 7: process the segment text */

switch (sk->sk_state)

case TCP_CLOSE_WAIT:

case TCP_CLOSING:

case TCP_LAST_ACK:

if (!before(TCP_SKB_CB(skb)->seq, tp->rcv_nxt))

break;

case TCP_FIN_WAIT1:

case TCP_FIN_WAIT2:

/* RFC 793 says to queue data in these states,

* RFC 1122 says we MUST send a reset.

* BSD 4.4 also does reset.

*/

if (sk->sk_shutdown & RCV_SHUTDOWN)

if (TCP_SKB_CB(skb)->end_seq != TCP_SKB_CB(skb)->seq &&

after(TCP_SKB_CB(skb)->end_seq - th->fin, tp->rcv_nxt))

NET_INC_STATS_BH(sock_net(sk), LINUX_MIB_TCPABORTONDATA);

tcp_reset(sk);

return 1;

/* Fall through */

case TCP_ESTABLISHED:

tcp_data_queue(sk, skb);

queued = 1;

break;

/* tcp_data could move socket to TIME-WAIT */

if (sk->sk_state != TCP_CLOSE)

tcp_data_snd_check(sk);

tcp_ack_snd_check(sk);

if (!queued)

discard:

__kfree_skb(skb);

return 0;

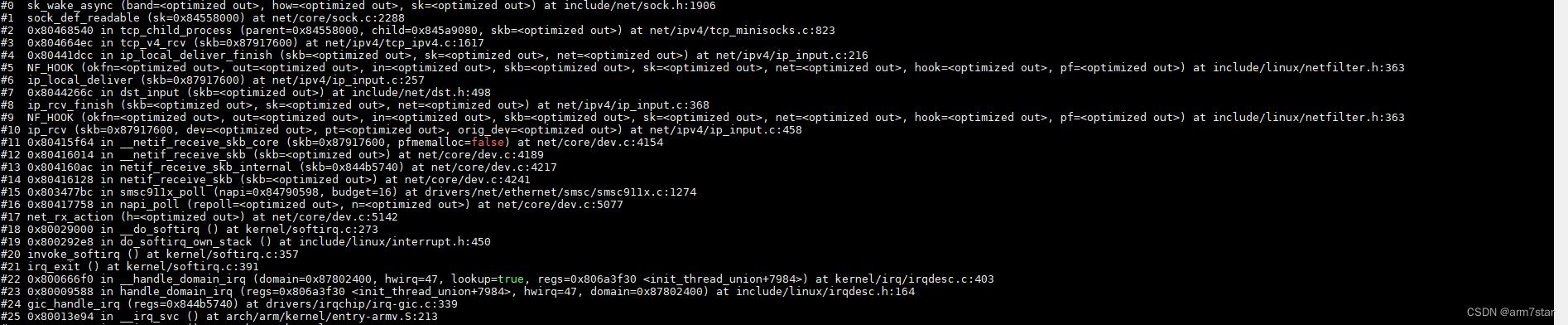

tcp_rcv_state_process函数调用栈:

tcp_rcv_synsent_state_process函数调用栈:

tcp_send_ack函数调用栈:

4、accept系统调用

4.1、服务器接收第三次握手ACK()

TCP_NEW_SYN_RECV状态,收到报文,tcp_v4_rcv主要调用tcp_check_req、tcp_child_process处理报文及子传输控制块。

- tcp_check_req主要处理SYN_RECV状态的输入报文,然后调用tcp_v4_syn_recv_sock;tcp_v4_syn_recv_sock调用tcp_create_openreq_child创建并初始化一个新的socket,调用inet_ehash_nolisten、inet_ehash_insert将新的socket添加到全局ehash散列表中,调用inet_csk_complete_hashdance删除旧的半连接请求并将新的已连接的请求添加到icsk_accept_queue,返回新的socket;

- tcp_child_process初始化子传输控制块,调用tcp_rcv_state_process处理输入报文,新的socket的状态更新为TCP_ESTABLISHED状态,Wakeup parent,应该是唤醒accept阻塞的线程或者poll监听socket的线程。

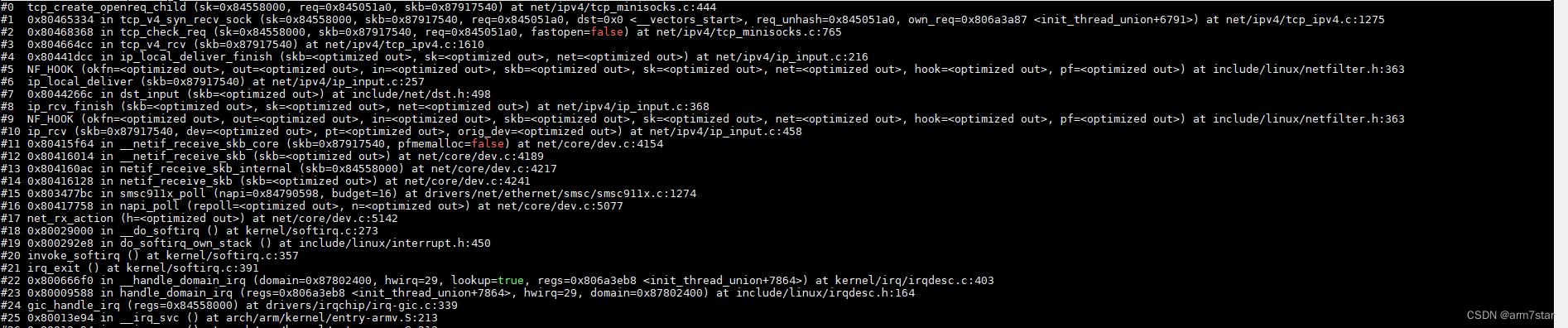

tcp_create_openreq_child函数调用栈:

inet_ehash_insert函数调用栈:

inet_csk_complete_hashdance函数调用栈:

tcp_child_process调用栈:

4.2、accept系统调用(inet_accept)

服务器连接建立完成之后,epoll监听的socket就可以读,然后就可以调用accept去接收新的连接,accept系统调用最终调用inet_csk_accept,检查icsk_accept_queue队列是否为空,不为空的话,就从icsk_accept_queue取第一个已连接的socket并返回给上一级函数,icsk_accept_queue队列为空的话,如果是非阻塞模式就返回EAGAIN错误,如果是阻塞模式的话就调用inet_csk_wait_for_connect等待连接。

inet_csk_accept函数代码如下:

struct sock *inet_csk_accept(struct sock *sk, int flags, int *err)

struct inet_connection_sock *icsk = inet_csk(sk);

struct request_sock_queue *queue = &icsk->icsk_accept_queue;

struct request_sock *req;

struct sock *newsk;

int error;

lock_sock(sk);

/* We need to make sure that this socket is listening,

* and that it has something pending以上是关于linux网络协议栈源码分析 - 传输层(TCP连接的建立)的主要内容,如果未能解决你的问题,请参考以下文章

linux网络协议栈源码分析 - 传输层(TCP连接的终止)

linux网络协议栈源码分析 - 传输层(TCP连接的终止)

linux网络协议栈源码分析 - 传输层(TCP连接的终止)

linux网络协议栈源码分析 - 传输层(TCP连接的建立)