How to use filters in ffmpeg


Reference answer A: below is part of my code, which decodes one AVPacket.

static int get_video_frame(VideoState *is, AVFrame *frame, int64_t *pts, AVPacket *pkt)
{
    int got_picture, i;
    int ret = 1;

    // if (packet_queue_get(&is->videoq, pkt, 1) < 0)
    //     return -1;

    // Stop once the end of the file is reached (or reading fails).
    if ((i = av_read_frame(is->ic, pkt)) < 0)
        return 0;

    // Note: avcodec_decode_video2() is deprecated in newer FFmpeg releases.
    i = avcodec_decode_video2(is->video_st->codec, frame, &got_picture, pkt);
    if (got_picture == 0) {
        // return 0;
    }

    // Use the packet DTS as the timestamp; fall back to 0 when it is unset.
    *pts = frame->pkt_dts;
    if (*pts == AV_NOPTS_VALUE)
        *pts = 0;

    /* Early frame dropping (disabled):
    if (((is->av_sync_type == AV_SYNC_AUDIO_MASTER && is->audio_st) ||
         is->av_sync_type == AV_SYNC_EXTERNAL_CLOCK) &&
        (framedrop > 0 || (framedrop && is->audio_st))) {
        // SDL_LockMutex(is->pictq_mutex);
        if (is->frame_last_pts != AV_NOPTS_VALUE && *pts) {
            double clockdiff = get_video_clock(is) - get_master_clock(is);
            double dpts = av_q2d(is->video_st->time_base) * *pts;
            double ptsdiff = dpts - is->frame_last_pts;
            if (fabs(clockdiff) < AV_NOSYNC_THRESHOLD &&
                ptsdiff > 0 && ptsdiff < AV_NOSYNC_THRESHOLD &&
                clockdiff + ptsdiff - is->frame_last_filter_delay < 0) {
                is->frame_last_dropped_pos = pkt->pos;
                is->frame_last_dropped_pts = dpts;
                is->frame_drops_early++;
                ret = 0;
            }
        }
    }
    */

    if (ret)
        is->frame_last_returned_time = av_gettime() / 1000000.0;

    return ret;
}
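The disabled frame-drop logic in this function turns a timestamp into seconds by multiplying it with the stream time base, and the function falls back to 0 when no timestamp is set. A minimal sketch of that conversion follows. `Rational` is a hypothetical stand-in for AVRational and `kNoPts` stands in for AV_NOPTS_VALUE, so the snippet compiles without the FFmpeg headers.

```cpp
#include <cstdint>

// Hypothetical stand-in for AVRational (numerator / denominator).
struct Rational {
    int num;
    int den;
};

// Stand-in for AV_NOPTS_VALUE, which marks "no timestamp available".
constexpr std::int64_t kNoPts = INT64_MIN;

// Convert a timestamp expressed in time-base units into seconds.
// An unset timestamp is treated as 0, mirroring the fallback in the listing.
double ptsToSeconds(std::int64_t pts, Rational timeBase) {
    if (pts == kNoPts) {
        pts = 0;
    }
    return static_cast<double>(pts) * timeBase.num / timeBase.den;
}
```

With a time base of 1/25, a timestamp of 50 corresponds to 2 seconds.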

Video effects: using ffmpeg filters

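Introduction

ffmpeg filters come in two kinds: simple filters and complex filters.

A complex filter can have multiple inputs and multiple outputs, as shown in the figure:

On the command line it is used through -filter_complex or -lavfi.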

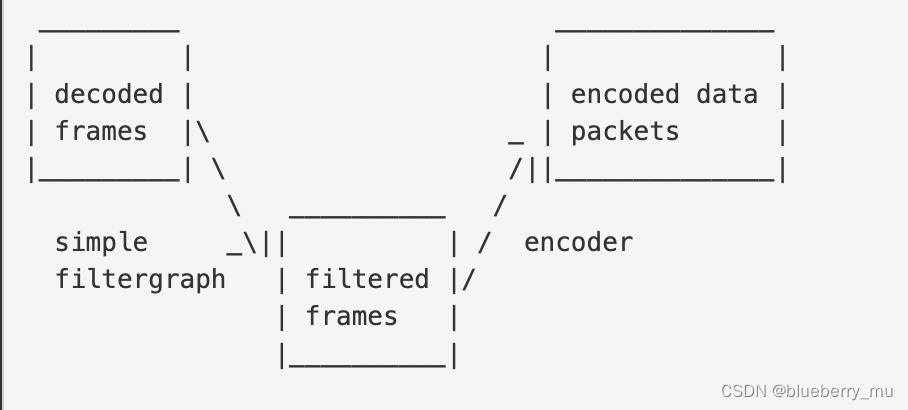

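A simple filter has exactly one input and one output, as shown in the figure:

On the command line it is used through -vf.

ffmpeg ships with many filters, and they can be combined. As an example, the graph below uses several filters (split, crop, vflip, overlay). split duplicates the input into two streams, the first labelled main and the second tmp. crop then cuts tmp down, and vflip flips the result vertically to produce flip. Finally overlay draws flip on top of main and emits the output.

Together these filters form a graph. Every filter has inputs and outputs, and the output of one filter is the input of the next.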

                [main]
input --> split ---------------------> overlay --> output
            |                             ^
            |[tmp]                  [flip]|
            +-----> crop --> vflip -------+

The command that corresponds to this filter graph is:

ffmpeg -i INPUT -vf "split [main][tmp]; [tmp] crop=iw:ih/2:0:0, vflip [flip]; [main][flip] overlay=0:H/2" OUTPUT

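The names in square brackets are labels for filter inputs and outputs; they are optional. Semicolons separate filter chains, and filters that belong to the same chain are separated by commas.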
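The separator rules can be sketched as a small string builder: filters within a chain are joined with commas and chains are joined with semicolons. `FilterChain`, `joinChain` and `buildFilterGraph` are hypothetical helper names introduced for illustration, not part of FFmpeg. Labels such as [tmp] are simply kept inside each filter string.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// One filter chain: filters applied one after another.
using FilterChain = std::vector<std::string>;

// Join the filters of a single chain with commas.
std::string joinChain(const FilterChain &chain) {
    std::string out;
    for (std::size_t i = 0; i < chain.size(); ++i) {
        if (i > 0) {
            out += ",";
        }
        out += chain[i];
    }
    return out;
}

// Join several chains with "; " to form a filter graph description.
std::string buildFilterGraph(const std::vector<FilterChain> &chains) {
    std::string out;
    for (std::size_t i = 0; i < chains.size(); ++i) {
        if (i > 0) {
            out += "; ";
        }
        out += joinChain(chains[i]);
    }
    return out;
}
```

Feeding it the three chains of the split/crop/vflip/overlay graph reproduces the description string passed to -vf.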

Let's try the command on a picture. The original image:

Run the command:

ffmpeg -i lyf1.jpeg -vf "split [main][tmp]; [tmp] crop=iw:ih/2:0:0,vflip [flip]; [main][flip] overlay=0:H/2" output.jpeg
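To make the geometry in this command concrete, the sketch below works out what crop=iw:ih/2:0:0 and overlay=0:H/2 mean for a given image size. crop takes width:height:x:y, so it keeps the top half of the frame; overlay takes x:y, where H is the height of the main input. `Rect`, `Point` and both functions are hypothetical names for illustration. Integer division is a simplification that is exact for even heights.

```cpp
// Rectangle kept by the crop filter.
struct Rect {
    int x;
    int y;
    int w;
    int h;
};

// Position at which the overlay filter draws its second input.
struct Point {
    int x;
    int y;
};

// crop=iw:ih/2:0:0 -> full width, half height, anchored at the top-left corner.
Rect cropTopHalf(int inputWidth, int inputHeight) {
    return Rect{0, 0, inputWidth, inputHeight / 2};
}

// overlay=0:H/2 -> left edge, halfway down the main input.
Point overlayPosition(int mainHeight) {
    return Point{0, mainHeight / 2};
}
```

For a 640x480 input the cropped region is 640x240, and after the vertical flip it is drawn at y=240, so the bottom half of the output becomes a mirror image of the top half.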

We can also preview a filter directly with ffplay.

ffplay -i lyf1.jpeg -vf "split [main][tmp]; [tmp] crop=iw:ih/2:0:0,vflip [flip]; [main][flip] overlay=0:H/2"

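Next, a color filter: hue. hue changes the colors of a picture. Its h parameter is the hue angle in degrees, ranging from 0 to 360; s is the saturation, ranging from -10 to 10; b is the brightness, ranging from -10 to 10. The colors these parameters correspond to are shown in the figure below:

Let's try it on the command line: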

ffplay -i lyf1.jpeg -vf "hue=90:s=1:b=0"
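When the hue arguments come from user input, it can help to keep s and b inside the -10 to 10 range before building the filter string. The sketch below is one way to do that; `buildHueFilter` is a hypothetical helper, and it spells out the named form h=...:s=...:b=... rather than relying on the positional first argument.

```cpp
#include <algorithm>
#include <cstdio>
#include <string>

// Build a hue filter description, limiting saturation and brightness
// to the -10..10 range described in the text. The hue angle is passed through.
std::string buildHueFilter(double hueDegrees, double saturation, double brightness) {
    saturation = std::max(-10.0, std::min(10.0, saturation));
    brightness = std::max(-10.0, std::min(10.0, brightness));

    char buf[96];
    std::snprintf(buf, sizeof(buf), "hue=h=%g:s=%g:b=%g",
                  hueDegrees, saturation, brightness);
    return std::string(buf);
}
```

Calling `buildHueFilter(90, 1, 0)` yields `hue=h=90:s=1:b=0`, the named-option equivalent of the command shown here.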

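The original image becomes:

Practice

Everything the commands above do can also be done in code.

The flow is shown in the figure below:

Creating the filter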

void ImageProcessor::initializeFilterGraph() {
    // Create the filter graph instance.
    this->filter_graph_ = avfilter_graph_alloc();

    // Look up the definition of the input filter (buffer source).
    const auto avfilter_buffer_src = avfilter_get_by_name("buffer");
    if (avfilter_buffer_src == nullptr) {
        throw std::runtime_error("buffer avfilter get failed.");
    }

    // Look up the definition of the output filter (buffer sink).
    const auto avfilter_buffer_sink = avfilter_get_by_name("buffersink");
    if (avfilter_buffer_sink == nullptr) {
        throw std::runtime_error("buffersink avfilter get failed.");
    }

    // Allocate the input and output endpoint descriptors.
    this->filter_inputs_ = avfilter_inout_alloc();
    this->filter_outputs_ = avfilter_inout_alloc();
    if (this->filter_inputs_ == nullptr || this->filter_outputs_ == nullptr) {
        throw std::runtime_error("filter inputs is null");
    }

    // Describe the frames fed into the graph. The values must follow the order
    // of the format specifiers: size, pixel format, time base, pixel aspect ratio.
    char args[512];
    snprintf(args, sizeof(args),
             "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
             this->width_,
             this->height_,
             avframe_origin_->format,
             1,
             1,
             avframe_origin_->sample_aspect_ratio.num,
             avframe_origin_->sample_aspect_ratio.den);

    // Create the input filter context from these arguments and add it to the graph.
    int ret = avfilter_graph_create_filter(&this->buffer_src_ctx_, avfilter_buffer_src, "in",
                                           args, nullptr, this->filter_graph_);
    if (ret < 0) {
        throw std::runtime_error("create filter failed .code is " + std::to_string(ret));
    }

    // Create the output filter context and add it to the graph.
    ret = avfilter_graph_create_filter(&this->buffer_sink_ctx_, avfilter_buffer_sink, "out",
                                       nullptr, nullptr, this->filter_graph_);
    if (ret < 0) {
        throw std::runtime_error("create filter failed code is " + std::to_string(ret));
    }

    // Restrict the pixel format the sink will accept.
    AVPixelFormat pix_fmts[] = {AV_PIX_FMT_YUVJ420P, AV_PIX_FMT_NONE};
    ret = av_opt_set_int_list(buffer_sink_ctx_, "pix_fmts", pix_fmts, AV_PIX_FMT_NONE,
                              AV_OPT_SEARCH_CHILDREN);
    if (ret < 0) {
        throw std::runtime_error("set pix_fmts failed code is " + std::to_string(ret));
    }

    // The buffer source's output pad feeds the label "in" of the parsed graph.
    this->filter_outputs_->name = av_strdup("in");
    this->filter_outputs_->filter_ctx = this->buffer_src_ctx_;
    this->filter_outputs_->pad_idx = 0;
    this->filter_outputs_->next = nullptr;

    // The buffer sink's input pad receives the label "out" of the parsed graph.
    this->filter_inputs_->name = av_strdup("out");
    this->filter_inputs_->filter_ctx = this->buffer_sink_ctx_;
    this->filter_inputs_->pad_idx = 0;
    this->filter_inputs_->next = nullptr;

    // Parse the filter description string and add the resulting filters to the graph.
    ret = avfilter_graph_parse_ptr(this->filter_graph_,
                                   this->filter_desc_.c_str(),
                                   &this->filter_inputs_,
                                   &this->filter_outputs_,
                                   nullptr);
    if (ret < 0) {
        throw std::runtime_error("avfilter graph parse failed .code is " + std::to_string(ret));
    }

    // Validate the graph and configure all links.
    ret = avfilter_graph_config(this->filter_graph_, nullptr);
    if (ret < 0) {
        throw std::runtime_error("avfilter graph config failed.");
    }
}
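The buffer source receives its parameters as one option string, so every value has to line up with its format specifier: size first, then pixel format, time base, and pixel aspect ratio. A minimal sketch that isolates this step is shown below. `Rational` is a hypothetical stand-in for AVRational, `buildBufferArgs` is an illustrative helper, and the pixel format is passed as a plain integer just as the listing does with frame->format.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical stand-in for AVRational.
struct Rational {
    int num;
    int den;
};

// Format the option string for the "buffer" source filter.
// Argument order mirrors the order of the format specifiers.
std::string buildBufferArgs(int width, int height, int pixFmt,
                            Rational timeBase, Rational pixelAspect) {
    assert(width > 0 && height > 0);  // a frame needs a non-empty size

    char args[512];
    std::snprintf(args, sizeof(args),
                  "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                  width, height, pixFmt,
                  timeBase.num, timeBase.den,
                  pixelAspect.num, pixelAspect.den);
    return std::string(args);
}
```

Keeping the formatting in one small function makes it easy to log the string before handing it to avfilter_graph_create_filter, which helps when the graph refuses to configure.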

Using the filter

// Send the frame into the filter graph.
int ret = av_buffersrc_add_frame(this->buffer_src_ctx_, this->avframe_origin_);
if (ret < 0) {
    throw std::runtime_error("add frame to filter failed code is " + std::to_string(ret));
}

// Fetch the filtered frame from the sink.
ret = av_buffersink_get_frame(this->buffer_sink_ctx_, this->avframe_dst_);
if (ret < 0) {
    throw std::runtime_error("get avframe from sink failed code is " + std::to_string(ret));
}

Here avframe_origin_ is the input frame and avframe_dst_ is the output frame.

Complete code

ImageProcessor.h

#ifndef FFMPEG_DECODER_JPEG_IMAGEPROCESSOR_H
#define FFMPEG_DECODER_JPEG_IMAGEPROCESSOR_H

#include <string>

extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libavfilter/avfilter.h>
#include <libavfilter/buffersink.h>
#include <libavfilter/buffersrc.h>
#include <libavutil/avutil.h>
#include <libavutil/opt.h>
}

using namespace std;

class ImageProcessor {
public:
    ImageProcessor();
    ~ImageProcessor();

    void setInput(string input);
    void setOutput(string output);
    void setFilterString(string filter_desc);

    void initialize();
    void process();

private:
    void initializeFilterGraph();

    string input_path_;
    string output_path_;
    string filter_desc_;

    // Pointers start as nullptr so the destructor is safe even if
    // initialize() was never called or threw part-way through.
    AVFormatContext *avformat_ctx_ = nullptr;
    AVCodecContext *avcodec_decoder_ctx_ = nullptr;
    AVCodecContext *avcodec_encoder_ctx_ = nullptr;
    const AVCodec *avcodec_encoder_ = nullptr;

    AVPacket *avpacket_origin_ = nullptr;
    AVFrame *avframe_origin_ = nullptr;
    AVFrame *avframe_dst_ = nullptr;
    AVPacket *avpacket_dst_ = nullptr;

    int width_ = 0;
    int height_ = 0;

    AVFilterContext *buffer_src_ctx_ = nullptr;
    AVFilterContext *buffer_sink_ctx_ = nullptr;
    AVFilterGraph *filter_graph_ = nullptr;
    AVFilterInOut *filter_inputs_ = nullptr;
    AVFilterInOut *filter_outputs_ = nullptr;
};

#endif //FFMPEG_DECODER_JPEG_IMAGEPROCESSOR_H

ImageProcess.cpp

#include "ImageProcessor.h"

#include <fstream>
#include <stdexcept>
#include <utility>

ImageProcessor::ImageProcessor() {}

ImageProcessor::~ImageProcessor() {
    // A context opened with avformat_open_input() is released with avformat_close_input().
    avformat_close_input(&avformat_ctx_);
    avcodec_free_context(&avcodec_decoder_ctx_);
    avcodec_free_context(&avcodec_encoder_ctx_);
    av_packet_free(&avpacket_origin_);
    av_packet_free(&avpacket_dst_);
    av_frame_free(&avframe_origin_);
    av_frame_free(&avframe_dst_);
    avfilter_inout_free(&filter_inputs_);
    avfilter_inout_free(&filter_outputs_);
    if (buffer_src_ctx_) {
        avfilter_free(buffer_src_ctx_);
    }
    if (buffer_sink_ctx_) {
        avfilter_free(buffer_sink_ctx_);
    }
    avfilter_graph_free(&filter_graph_);
}

void ImageProcessor::setInput(string input) {
    this->input_path_ = std::move(input);
}

void ImageProcessor::setOutput(string output) {
    this->output_path_ = std::move(output);
}

void ImageProcessor::setFilterString(string filter_desc) {
    this->filter_desc_ = std::move(filter_desc);
}

/**
 * Call setInput, setOutput and setFilterString before initialize.
 */
void ImageProcessor::initialize() {
    avpacket_origin_ = av_packet_alloc();
    avpacket_dst_ = av_packet_alloc();
    avframe_origin_ = av_frame_alloc();
    avframe_dst_ = av_frame_alloc();
    avformat_ctx_ = avformat_alloc_context();

    // Open the input file.
    int ret = avformat_open_input(&avformat_ctx_, this->input_path_.c_str(), nullptr, nullptr);
    if (ret < 0) {
        throw std::runtime_error("avformat open input error code is : " + std::to_string(ret));
    }

    // Set up the decoder for the first stream.
    const auto codec_decoder = avcodec_find_decoder(avformat_ctx_->streams[0]->codecpar->codec_id);
    if (codec_decoder == nullptr) {
        throw std::runtime_error("avcodec find decoder failed.");
    }
    avcodec_decoder_ctx_ = avcodec_alloc_context3(codec_decoder);
    ret = avcodec_parameters_to_context(avcodec_decoder_ctx_, avformat_ctx_->streams[0]->codecpar);
    if (ret < 0) {
        throw std::runtime_error("avcodec_parameters_to_context failed code is " + std::to_string(ret));
    }

    // Read one packet.
    ret = av_read_frame(avformat_ctx_, avpacket_origin_);
    if (ret < 0) {
        throw std::runtime_error("av read frame " + std::to_string(ret));
    }

    // Open the decoder.
    ret = avcodec_open2(avcodec_decoder_ctx_, codec_decoder, nullptr);
    if (ret < 0) {
        throw std::runtime_error("avcodec open failed. codes is " + std::to_string(ret));
    }

    // Decode the packet into a frame.
    ret = avcodec_send_packet(avcodec_decoder_ctx_, avpacket_origin_);
    if (ret < 0) {
        throw std::runtime_error("avcodec send packet failed. code is " + std::to_string(ret));
    }
    ret = avcodec_receive_frame(avcodec_decoder_ctx_, avframe_origin_);
    if (ret < 0) {
        throw std::runtime_error("avcodec_receive_frame failed. code is " + std::to_string(ret));
    }

    this->width_ = avframe_origin_->width;
    this->height_ = avframe_origin_->height;

    // Set up the encoder.
    avcodec_encoder_ = avcodec_find_encoder_by_name("mjpeg");
    if (avcodec_encoder_ == nullptr) {
        throw std::runtime_error("find encoder by name failed.");
    }
    avcodec_encoder_ctx_ = avcodec_alloc_context3(avcodec_encoder_);
    if (avcodec_encoder_ctx_ == nullptr) {
        throw std::runtime_error("avcodec alloc failed");
    }
    avcodec_encoder_ctx_->width = this->width_;
    avcodec_encoder_ctx_->height = this->height_;
    avcodec_encoder_ctx_->pix_fmt = AVPixelFormat(avframe_origin_->format);
    avcodec_encoder_ctx_->time_base = AVRational{1, 1};

    initializeFilterGraph();
}

void ImageProcessor::process() {
    // Send the decoded frame into the filter graph.
    int ret = av_buffersrc_add_frame(this->buffer_src_ctx_, this->avframe_origin_);
    if (ret < 0) {
        throw std::runtime_error("add frame to filter failed code is " + std::to_string(ret));
    }

    // Fetch the filtered frame from the sink.
    ret = av_buffersink_get_frame(this->buffer_sink_ctx_, this->avframe_dst_);
    if (ret < 0) {
        throw std::runtime_error("get avframe from sink failed code is " + std::to_string(ret));
    }

    // Open the encoder and encode the filtered frame.
    ret = avcodec_open2(avcodec_encoder_ctx_, avcodec_encoder_, nullptr);
    if (ret < 0) {
        throw std::runtime_error("open encode failed code is " + std::to_string(ret));
    }
    ret = avcodec_send_frame(avcodec_encoder_ctx_, this->avframe_dst_);
    if (ret < 0) {
        throw std::runtime_error("encoder failed,code is " + std::to_string(ret));
    }
    ret = avcodec_receive_packet(avcodec_encoder_ctx_, avpacket_dst_);
    if (ret < 0) {
        throw std::runtime_error("avcodec receive packet failed code is " + std::to_string(ret));
    }

    // Write the encoded JPEG bytes; binary mode avoids newline translation on some platforms.
    std::ofstream output_file(this->output_path_, std::ios::binary);
    output_file.write(reinterpret_cast<const char *>(this->avpacket_dst_->data), avpacket_dst_->size);
}
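The last step of process() dumps the encoded packet to disk. Encoded image data is raw bytes, so the stream should be opened in binary mode; otherwise some platforms may translate newline bytes and corrupt the file. The sketch below isolates that step without any FFmpeg types. `writeBytes` is a hypothetical helper, not part of the ImageProcessor class.

```cpp
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>

// Write a raw byte buffer to a file in binary mode.
// Returns true only if the file was opened and every byte was written.
bool writeBytes(const std::string &path, const std::uint8_t *data, std::size_t size) {
    std::ofstream out(path, std::ios::binary | std::ios::trunc);
    if (!out) {
        return false;
    }
    out.write(reinterpret_cast<const char *>(data), static_cast<std::streamsize>(size));
    out.flush();
    return static_cast<bool>(out);
}
```

A helper like this could take avpacket_dst_->data and avpacket_dst_->size, and its return value gives the caller a chance to report a failed write instead of silently producing an empty file.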

void ImageProcessor::initializeFilterGraph() {
    // Create the filter graph instance.
    this->filter_graph_ = avfilter_graph_alloc();

    // Look up the definition of the input filter (buffer source).
    const auto avfilter_buffer_src = avfilter_get_by_name("buffer");
    if (avfilter_buffer_src == nullptr) {
        throw std::runtime_error("buffer avfilter get failed.");
    }

    // Look up the definition of the output filter (buffer sink).
    const auto avfilter_buffer_sink = avfilter_get_by_name("buffersink");
    if (avfilter_buffer_sink == nullptr) {
        throw std::runtime_error("buffersink avfilter get failed.");
    }

    // Allocate the input and output endpoint descriptors.
    this->filter_inputs_ = avfilter_inout_alloc();
    this->filter_outputs_ = avfilter_inout_alloc();
    if (this->filter_inputs_ == nullptr || this->filter_outputs_ == nullptr) {
        throw std::runtime_error("filter inputs is null");
    }

    char args[512];
    snprintf(args, sizeof(args), "video_size=