neutron-dhcp-agent Service Startup Flow

Posted gj4990


Preface: This article was compiled by the editors of 小常识网 (cha138.com) and introduces the startup flow of the neutron-dhcp-agent service. We hope you find it useful.

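Before analyzing how the nova boot VM-creation flow interacts with neutron-dhcp-agent, we first look at how the neutron-dhcp-agent service starts. As with the other services, the entry point is declared in setup.cfg: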

```ini
[entry_points]
console_scripts =
    neutron-db-manage = neutron.db.migration.cli:main
    neutron-debug = neutron.debug.shell:main
    neutron-dhcp-agent = neutron.cmd.eventlet.agents.dhcp:main
    neutron-hyperv-agent = neutron.cmd.eventlet.plugins.hyperv_neutron_agent:main
    neutron-keepalived-state-change = neutron.cmd.keepalived_state_change:main
    neutron-ibm-agent = neutron.plugins.ibm.agent.sdnve_neutron_agent:main
    neutron-l3-agent = neutron.cmd.eventlet.agents.l3:main
    neutron-linuxbridge-agent = neutron.plugins.linuxbridge.agent.linuxbridge_neutron_agent:main
    neutron-metadata-agent = neutron.cmd.eventlet.agents.metadata:main
    neutron-mlnx-agent = neutron.cmd.eventlet.plugins.mlnx_neutron_agent:main
    neutron-nec-agent = neutron.cmd.eventlet.plugins.nec_neutron_agent:main
    neutron-netns-cleanup = neutron.cmd.netns_cleanup:main
    neutron-ns-metadata-proxy = neutron.cmd.eventlet.agents.metadata_proxy:main
    neutron-ovsvapp-agent = neutron.cmd.eventlet.plugins.ovsvapp_neutron_agent:main
    neutron-nvsd-agent = neutron.plugins.oneconvergence.agent.nvsd_neutron_agent:main
    neutron-openvswitch-agent = neutron.cmd.eventlet.plugins.ovs_neutron_agent:main
    neutron-ovs-cleanup = neutron.cmd.ovs_cleanup:main
    neutron-restproxy-agent = neutron.plugins.bigswitch.agent.restproxy_agent:main
    neutron-server = neutron.cmd.eventlet.server:main
    neutron-rootwrap = oslo_rootwrap.cmd:main
    neutron-rootwrap-daemon = oslo_rootwrap.cmd:daemon
    neutron-usage-audit = neutron.cmd.usage_audit:main
    neutron-metering-agent = neutron.cmd.eventlet.services.metering_agent:main
    neutron-sriov-nic-agent = neutron.plugins.sriovnicagent.sriov_nic_agent:main
    neutron-sanity-check = neutron.cmd.sanity_check:main
    neutron-cisco-apic-service-agent = neutron.plugins.ml2.drivers.cisco.apic.apic_topology:service_main
    neutron-cisco-apic-host-agent = neutron.plugins.ml2.drivers.cisco.apic.apic_topology:agent_main
```
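Each `console_scripts` line becomes a small wrapper executable. That wrapper imports the module named left of the colon and calls the attribute named right of it. The following is a minimal sketch of that resolution step using only `importlib`. The helper names `parse_console_script` and `resolve` are hypothetical, and this is not the code setuptools or pip actually generate:

```python
import importlib
from typing import Callable, Tuple


def parse_console_script(line: str) -> Tuple[str, str, str]:
    """Split 'name = package.module:function' into (name, module, attribute)."""
    name, _, target = line.partition("=")
    module_path, _, attr = target.strip().partition(":")
    return name.strip(), module_path, attr


def resolve(line: str) -> Callable:
    """Import the module and return the callable the wrapper script would run."""
    _, module_path, attr = parse_console_script(line)
    obj = importlib.import_module(module_path)
    # The attribute part may be dotted (e.g. 'Class.method'), so walk it step by step.
    for part in attr.split("."):
        obj = getattr(obj, part)
    return obj
```

For the DHCP agent, `parse_console_script("neutron-dhcp-agent = neutron.cmd.eventlet.agents.dhcp:main")` yields the module `neutron.cmd.eventlet.agents.dhcp` and the function `main`, which is where the code walk-through below begins.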

```python
# /neutron/cmd/eventlet/agents/dhcp.py
from neutron.agent import dhcp_agent


def main():
    dhcp_agent.main()
```

```python
# /neutron/agent/dhcp_agent.py
def register_options():
    config.register_interface_driver_opts_helper(cfg.CONF)
    config.register_use_namespaces_opts_helper(cfg.CONF)
    config.register_agent_state_opts_helper(cfg.CONF)
    cfg.CONF.register_opts(dhcp_config.DHCP_AGENT_OPTS)
    cfg.CONF.register_opts(dhcp_config.DHCP_OPTS)
    cfg.CONF.register_opts(dhcp_config.DNSMASQ_OPTS)
    cfg.CONF.register_opts(metadata_config.DRIVER_OPTS)
    cfg.CONF.register_opts(metadata_config.SHARED_OPTS)
    cfg.CONF.register_opts(interface.OPTS)


def main():
    register_options()
    common_config.init(sys.argv[1:])
    config.setup_logging()
    server = neutron_service.Service.create(
        binary='neutron-dhcp-agent',
        topic=topics.DHCP_AGENT,
        report_interval=cfg.CONF.AGENT.report_interval,
        manager='neutron.agent.dhcp.agent.DhcpAgentWithStateReport')
    service.launch(server).wait()
```

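As with the other non-API services, startup creates a Service object; for details see the earlier article 《OpenStack-RPC-server的构建(二)》 (Building the OpenStack RPC server, part 2). Here we go straight to the code we care about.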

```python
# /neutron/service.py:Service
def start(self):
    self.manager.init_host()
    super(Service, self).start()
    if self.report_interval:
        pulse = loopingcall.FixedIntervalLoopingCall(self.report_state)
        pulse.start(interval=self.report_interval,
                    initial_delay=self.report_interval)
        self.timers.append(pulse)

    if self.periodic_interval:
        if self.periodic_fuzzy_delay:
            initial_delay = random.randint(0, self.periodic_fuzzy_delay)
        else:
            initial_delay = None

        periodic = loopingcall.FixedIntervalLoopingCall(
            self.periodic_tasks)
        periodic.start(interval=self.periodic_interval,
                       initial_delay=initial_delay)
        self.timers.append(periodic)
    self.manager.after_start()
```

Here the manager is a neutron.agent.dhcp.agent.DhcpAgentWithStateReport object. Before analyzing start(), let's first look at how this DhcpAgentWithStateReport object is constructed.

```python
# /neutron/agent/dhcp/agent.py:DhcpAgentWithStateReport
class DhcpAgentWithStateReport(DhcpAgent):
    def __init__(self, host=None):
        super(DhcpAgentWithStateReport, self).__init__(host=host)
        self.state_rpc = agent_rpc.PluginReportStateAPI(topics.PLUGIN)
        self.agent_state = {
            'binary': 'neutron-dhcp-agent',
            'host': host,
            'topic': topics.DHCP_AGENT,
            'configurations': {
                'dhcp_driver': cfg.CONF.dhcp_driver,
                'use_namespaces': cfg.CONF.use_namespaces,
                'dhcp_lease_duration': cfg.CONF.dhcp_lease_duration},
            'start_flag': True,
            'agent_type': constants.AGENT_TYPE_DHCP}
        report_interval = cfg.CONF.AGENT.report_interval
        self.use_call = True
        if report_interval:
            self.heartbeat = loopingcall.FixedIntervalLoopingCall(
                self._report_state)
            self.heartbeat.start(interval=report_interval)
```

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
class DhcpAgent(manager.Manager):
    """DHCP agent service manager.

    Note that the public methods of this class are exposed as the server side
    of an rpc interface.  The neutron server uses
    neutron.api.rpc.agentnotifiers.dhcp_rpc_agent_api.DhcpAgentNotifyApi as the
    client side to execute the methods here.  For more information about
    changing rpc interfaces, see doc/source/devref/rpc_api.rst.
    """
    target = oslo_messaging.Target(version='1.0')

    def __init__(self, host=None):
        super(DhcpAgent, self).__init__(host=host)
        self.needs_resync_reasons = collections.defaultdict(list)
        self.conf = cfg.CONF
        self.cache = NetworkCache()
        self.dhcp_driver_cls = importutils.import_class(self.conf.dhcp_driver)
        ctx = context.get_admin_context_without_session()
        self.plugin_rpc = DhcpPluginApi(topics.PLUGIN,
                                        ctx, self.conf.use_namespaces)
        # create dhcp dir to store dhcp info
        dhcp_dir = os.path.dirname("/%s/dhcp/" % self.conf.state_path)
        linux_utils.ensure_dir(dhcp_dir)
        self.dhcp_version = self.dhcp_driver_cls.check_version()
        self._populate_networks_cache()
        self._process_monitor = external_process.ProcessMonitor(
            config=self.conf,
            resource_type='dhcp')
```

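DhcpAgentWithStateReport inherits from DhcpAgent. Its main job is to start a green thread (coroutine) that periodically reports the state of neutron-dhcp-agent, covering both the service and its networks. The reports go to the RPC server that neutron-server started, and neutron-server's core plugin then writes that state to the database.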
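Both the heartbeat here and the timers in Service.start rely on oslo's `FixedIntervalLoopingCall`, which calls a function every `interval` seconds on a green thread. The sketch below is a rough stand-in that uses plain `threading` instead of eventlet. The class `FixedIntervalLoop` and the exception `StopLoop` are hypothetical names. oslo.service's own loop is normally ended by raising `LoopingCallDone`, and its internals differ from this sketch:

```python
import threading
import time
from typing import Callable, Optional


class StopLoop(Exception):
    """Raised by the callback to end the loop (hypothetical, plays the role of LoopingCallDone)."""


class FixedIntervalLoop:
    """Call `func` every `interval` seconds on a background thread."""

    def __init__(self, func: Callable[[], None]):
        self._func = func
        self._stopped = threading.Event()
        self._thread: Optional[threading.Thread] = None

    def start(self, interval: float, initial_delay: Optional[float] = None):
        def run():
            # Event.wait returns True if stop() was called during the delay.
            if initial_delay and self._stopped.wait(initial_delay):
                return
            while not self._stopped.is_set():
                started = time.monotonic()
                try:
                    self._func()
                except StopLoop:
                    break
                # Sleep only for what is left of the interval so the period stays fixed.
                delay = max(0.0, interval - (time.monotonic() - started))
                if self._stopped.wait(delay):
                    break

        self._thread = threading.Thread(target=run, daemon=True)
        self._thread.start()
        return self

    def stop(self) -> None:
        self._stopped.set()

    def wait(self, timeout: Optional[float] = None) -> None:
        if self._thread is not None:
            self._thread.join(timeout)
```

A heartbeat in this style would be `FixedIntervalLoop(agent._report_state).start(interval=report_interval)`, mirroring the last lines of DhcpAgentWithStateReport.__init__.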

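DhcpAgent is the class that does the main work of the neutron-dhcp-agent service. `self.cache = NetworkCache()` holds the active DHCP networks on this host, and this information is reported to the database through DhcpAgentWithStateReport's `_report_state` method. `dhcp_dir = os.path.dirname("/%s/dhcp/" % self.conf.state_path)` computes a directory, which `ensure_dir` then creates. Here `self.conf.state_path` is configured (in /etc/neutron/dhcp_agent.ini) as: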

```ini
state_path=/var/lib/neutron
```

Information about the DHCP networks that get created is stored under this directory.

The `dhcp_driver` in `self.dhcp_driver_cls = importutils.import_class(self.conf.dhcp_driver)` is also a parameter in /etc/neutron/dhcp_agent.ini:

```ini
dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
```

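So `self.dhcp_driver_cls` is the Dnsmasq class itself. `import_class` returns the class, not an instance, and `call_driver` later instantiates it for each network.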
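`oslo_utils.importutils.import_class` turns a dotted string into a class object. The following is a minimal stand-in built on the standard library only. It is a sketch of the idea, not the oslo_utils source:

```python
import importlib


def import_class(import_str: str):
    """Return the class named by a dotted path such as 'pkg.module.ClassName'."""
    module_name, _, class_name = import_str.rpartition(".")
    module = importlib.import_module(module_name)
    try:
        return getattr(module, class_name)
    except AttributeError:
        raise ImportError(f"Class {class_name!r} cannot be found in {module_name!r}")
```

The function returns a class, so the caller still has to call it to get an object. This is why `dhcp_driver_cls(...)` appears inside `call_driver` rather than in `__init__`.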

That is roughly all there is to constructing the neutron.agent.dhcp.agent.DhcpAgentWithStateReport object. Next we analyze `self.manager.init_host()`.

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
def init_host(self):
    self.sync_state()
```

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
@utils.synchronized('dhcp-agent')
def sync_state(self, networks=None):
    """Sync the local DHCP state with Neutron. If no networks are passed,
    or 'None' is one of the networks, sync all of the networks.
    """
    only_nets = set([] if (not networks or None in networks) else networks)
    LOG.info(_LI('Synchronizing state'))
    pool = eventlet.GreenPool(cfg.CONF.num_sync_threads)
    known_network_ids = set(self.cache.get_network_ids())

    try:
        active_networks = self.plugin_rpc.get_active_networks_info()
        active_network_ids = set(network.id for network in active_networks)
        for deleted_id in known_network_ids - active_network_ids:
            try:
                self.disable_dhcp_helper(deleted_id)
            except Exception as e:
                self.schedule_resync(e, deleted_id)
                LOG.exception(_LE('Unable to sync network state on '
                                  'deleted network %s'), deleted_id)

        for network in active_networks:
            if (not only_nets or  # specifically resync all
                    network.id not in known_network_ids or  # missing net
                    network.id in only_nets):  # specific network to sync
                pool.spawn(self.safe_configure_dhcp_for_network, network)
        pool.waitall()
        LOG.info(_LI('Synchronizing state complete'))

    except Exception as e:
        self.schedule_resync(e)
        LOG.exception(_LE('Unable to sync network state.'))
```

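sync_state synchronizes the networks on this host with the database. It compares `self.cache` (the DHCP networks known locally) with the networks returned from the database by `active_networks = self.plugin_rpc.get_active_networks_info()`. For local networks that are no longer in the database, it calls `disable_dhcp_helper`, which removes them from `self.cache`. It then spawns `safe_configure_dhcp_for_network` for every active network that needs to be (re)configured. The initial contents of `self.cache` come from `self._populate_networks_cache()` in DhcpAgent's `__init__`.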
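The set arithmetic inside sync_state can be pulled out into a pure function. The hypothetical `plan_sync` helper below is not part of Neutron. It takes plain network-ID collections and returns which networks would be disabled and which would be (re)configured, following the conditions in the loop above:

```python
from typing import Iterable, Optional, Set, Tuple


def plan_sync(known_ids: Set[str],
              active_ids: Iterable[str],
              networks: Optional[Iterable[Optional[str]]] = None
              ) -> Tuple[Set[str], Set[str]]:
    """Return (ids_to_disable, ids_to_configure), mirroring sync_state's set logic."""
    active = set(active_ids)
    requested = list(networks or [])
    # No filter, or None among the requested ids, means "resync everything".
    only_nets = set() if (not requested or None in requested) else set(requested)
    # Known locally but gone from the database: DHCP gets disabled.
    to_disable = known_ids - active
    to_configure = {
        net_id for net_id in active
        if not only_nets or net_id not in known_ids or net_id in only_nets
    }
    return to_disable, to_configure
```

Networks the agent has never seen are always configured, even when a specific subset was requested. This is how a freshly scheduled network gets its dnsmasq process during a partial resync.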

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
def _populate_networks_cache(self):
    """Populate the networks cache when the DHCP-agent starts."""
    try:
        existing_networks = self.dhcp_driver_cls.existing_dhcp_networks(
            self.conf
        )
        for net_id in existing_networks:
            net = dhcp.NetModel(self.conf.use_namespaces,
                                {"id": net_id,
                                 "subnets": [],
                                 "ports": []})
            self.cache.put(net)
    except NotImplementedError:
        # just go ahead with an empty networks cache
        LOG.debug("The '%s' DHCP-driver does not support retrieving of a "
                  "list of existing networks",
                  self.conf.dhcp_driver)
```

```python
# /neutron/agent/linux/dhcp.py:Dnsmasq
@classmethod
def existing_dhcp_networks(cls, conf):
    """Return a list of existing networks ids that we have configs for."""
    confs_dir = cls.get_confs_dir(conf)
    try:
        return [
            c for c in os.listdir(confs_dir)
            if uuidutils.is_uuid_like(c)
        ]
    except OSError:
        return []
```

```python
# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
@staticmethod
def get_confs_dir(conf):
    return os.path.abspath(os.path.normpath(conf.dhcp_confs))
```

The dhcp_confs parameter in /etc/neutron/dhcp_agent.ini is set as follows:

```ini
state_path=/var/lib/neutron
dhcp_confs = $state_path/dhcp
```

_populate_networks_cache therefore wraps each DHCP network directory found under /var/lib/neutron/dhcp into a NetModel object (with empty subnets and ports) and puts it into self.cache.
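The directory scan in `existing_dhcp_networks` can be reproduced with the standard library. In this sketch, `is_uuid_like` is a simplified, stricter stand-in for oslo's `uuidutils.is_uuid_like`: it only accepts the canonical hyphenated form. The confs directory is passed in directly instead of being read from `conf.dhcp_confs`:

```python
import os
import uuid
from typing import List


def is_uuid_like(value: str) -> bool:
    """True if value parses as a UUID and round-trips to the same text (case-insensitive)."""
    try:
        return str(uuid.UUID(value)) == value.lower()
    except (TypeError, ValueError, AttributeError):
        return False


def existing_dhcp_networks(confs_dir: str) -> List[str]:
    """List the entries in confs_dir whose names look like network UUIDs."""
    try:
        return [name for name in os.listdir(confs_dir) if is_uuid_like(name)]
    except OSError:
        # Missing or unreadable directory: start with an empty cache.
        return []
```

Run against /var/lib/neutron/dhcp on the host described below, this would return only `8165bc3d-400a-48a0-9186-bf59f7f94b05`.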

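Take my OpenStack environment as an example. It has an external network (for VM access to the outside world) and an internal network (for communication between VMs). Only the internal network's subnet has DHCP enabled, so /var/lib/neutron/dhcp should contain a directory named after the internal network's ID.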

```
[root@jun ~(keystone_admin)]# neutron net-list
+--------------------------------------+-----------+-------------------------------------------------------+
| id                                   | name      | subnets                                               |
+--------------------------------------+-----------+-------------------------------------------------------+
| cad98138-6e5f-4f83-a4c5-5497fa4758b4 | ext-net   | 288421a4-63da-41ff-89c1-985e83271e6b 192.168.118.0/24 |
| 8165bc3d-400a-48a0-9186-bf59f7f94b05 | inter-net | ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f 172.16.0.0/16    |
+--------------------------------------+-----------+-------------------------------------------------------+

[root@jun ~(keystone_admin)]# neutron net-show 8165bc3d-400a-48a0-9186-bf59f7f94b05
+---------------------------+--------------------------------------+
| Field                     | Value                                |
+---------------------------+--------------------------------------+
| admin_state_up            | True                                 |
| id                        | 8165bc3d-400a-48a0-9186-bf59f7f94b05 |
| mtu                       | 0                                    |
| name                      | inter-net                            |
| provider:network_type     | vlan                                 |
| provider:physical_network | physnet1                             |
| provider:segmentation_id  | 120                                  |
| router:external           | False                                |
| shared                    | False                                |
| status                    | ACTIVE                               |
| subnets                   | ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f |
| tenant_id                 | befa06e66e8047a1929a3912fff2c591     |
+---------------------------+--------------------------------------+

[root@jun ~(keystone_admin)]# neutron subnet-show ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f
+-------------------+--------------------------------------------------+
| Field             | Value                                            |
+-------------------+--------------------------------------------------+
| allocation_pools  | {"start": "172.16.0.2", "end": "172.16.255.254"} |
| cidr              | 172.16.0.0/16                                    |
| dns_nameservers   |                                                  |
| enable_dhcp       | True                                             |
| gateway_ip        | 172.16.0.1                                       |
| host_routes       |                                                  |
| id                | ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f             |
| ip_version        | 4                                                |
| ipv6_address_mode |                                                  |
| ipv6_ra_mode      |                                                  |
| name              | inter-sub                                        |
| network_id        | 8165bc3d-400a-48a0-9186-bf59f7f94b05             |
| subnetpool_id     |                                                  |
| tenant_id         | befa06e66e8047a1929a3912fff2c591                 |
+-------------------+--------------------------------------------------+

[root@jun ~(keystone_admin)]# neutron net-show cad98138-6e5f-4f83-a4c5-5497fa4758b4
+---------------------------+--------------------------------------+
| Field                     | Value                                |
+---------------------------+--------------------------------------+
| admin_state_up            | True                                 |
| id                        | cad98138-6e5f-4f83-a4c5-5497fa4758b4 |
| mtu                       | 0                                    |
| name                      | ext-net                              |
| provider:network_type     | flat                                 |
| provider:physical_network | physnet2                             |
| provider:segmentation_id  |                                      |
| router:external           | True                                 |
| shared                    | True                                 |
| status                    | ACTIVE                               |
| subnets                   | 288421a4-63da-41ff-89c1-985e83271e6b |
| tenant_id                 | 09e04766c06d477098201683497d3878     |
+---------------------------+--------------------------------------+

[root@jun ~(keystone_admin)]# neutron subnet-show 288421a4-63da-41ff-89c1-985e83271e6b
+-------------------+------------------------------------------------------+
| Field             | Value                                                |
+-------------------+------------------------------------------------------+
| allocation_pools  | {"start": "192.168.118.11", "end": "192.168.118.99"} |
| cidr              | 192.168.118.0/24                                     |
| dns_nameservers   |                                                      |
| enable_dhcp       | False                                                |
| gateway_ip        | 192.168.118.10                                       |
| host_routes       |                                                      |
| id                | 288421a4-63da-41ff-89c1-985e83271e6b                 |
| ip_version        | 4                                                    |
| ipv6_address_mode |                                                      |
| ipv6_ra_mode      |                                                      |
| name              | ext-sub                                              |
| network_id        | cad98138-6e5f-4f83-a4c5-5497fa4758b4                 |
| subnetpool_id     |                                                      |
| tenant_id         | 09e04766c06d477098201683497d3878                     |
+-------------------+------------------------------------------------------+
```

This is the network information stored in the database. The subnet on ext-net does not have DHCP enabled, while the subnet on inter-net does, so /var/lib/neutron/dhcp should contain only a directory named after the inter-net network ID.

```
[root@jun2 dhcp]# ll
total 0
drwxr-xr-x. 2 neutron neutron 71 May 20 20:30 8165bc3d-400a-48a0-9186-bf59f7f94b05
```

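The listing confirms that /var/lib/neutron/dhcp contains only the inter-net network ID directory.

That was a bit of a digression from sync_state, so let's return to it. Once DHCP has been disabled for the local networks that are no longer in the database, and those networks have been removed from self.cache, the active DHCP networks are configured.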

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
@utils.exception_logger()
def safe_configure_dhcp_for_network(self, network):
    try:
        self.configure_dhcp_for_network(network)
    except (exceptions.NetworkNotFound, RuntimeError):
        LOG.warn(_LW('Network %s may have been deleted and its resources '
                     'may have already been disposed.'), network.id)
```

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
def configure_dhcp_for_network(self, network):
    if not network.admin_state_up:
        return

    enable_metadata = self.dhcp_driver_cls.should_enable_metadata(
        self.conf, network)
    dhcp_network_enabled = False

    for subnet in network.subnets:
        if subnet.enable_dhcp:
            if self.call_driver('enable', network):
                dhcp_network_enabled = True
                self.cache.put(network)
            break

    if enable_metadata and dhcp_network_enabled:
        for subnet in network.subnets:
            if subnet.ip_version == 4 and subnet.enable_dhcp:
                self.enable_isolated_metadata_proxy(network)
                break
```

Note the enable_metadata variable in configure_dhcp_for_network. Its value comes from the following function:

```python
# /neutron/agent/linux/dhcp.py:Dnsmasq
@classmethod
def should_enable_metadata(cls, conf, network):
    """Determine whether the metadata proxy is needed for a network

    This method returns True for truly isolated networks (ie: not attached
    to a router), when the enable_isolated_metadata flag is True.

    This method also returns True when enable_metadata_network is True,
    and the network passed as a parameter has a subnet in the link-local
    CIDR, thus characterizing it as a "metadata" network. The metadata
    network is used by solutions which do not leverage the l3 agent for
    providing access to the metadata service via logical routers built
    with 3rd party backends.
    """
    if conf.enable_metadata_network and conf.enable_isolated_metadata:
        # check if the network has a metadata subnet
        meta_cidr = netaddr.IPNetwork(METADATA_DEFAULT_CIDR)
        if any(netaddr.IPNetwork(s.cidr) in meta_cidr
               for s in network.subnets):
            return True

    if not conf.use_namespaces or not conf.enable_isolated_metadata:
        return False

    isolated_subnets = cls.get_isolated_subnets(network)
    return any(isolated_subnets[subnet.id] for subnet in network.subnets)
```

Here the enable_isolated_metadata and enable_metadata_network parameters in /etc/neutron/dhcp_agent.ini decide whether neutron-dhcp-agent enables metadata. Their values are:

```ini
enable_isolated_metadata = False
enable_metadata_network = False
```
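The decision in `should_enable_metadata` can be restated with Python's `ipaddress` module instead of netaddr. This is a sketch under several assumptions. `METADATA_DEFAULT_CIDR` is taken to be the link-local `169.254.169.254/16`. The small `Conf`/`Subnet` dataclasses are hypothetical stand-ins for oslo.config and Neutron's models. The caller supplies the "isolated" map that Neutron computes in `get_isolated_subnets()`:

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List

# Assumption: the value of Neutron's METADATA_DEFAULT_CIDR (host bits dropped -> 169.254.0.0/16).
METADATA_DEFAULT_CIDR = ipaddress.ip_network("169.254.169.254/16", strict=False)


@dataclass
class Conf:
    enable_isolated_metadata: bool = False
    enable_metadata_network: bool = False
    use_namespaces: bool = True


@dataclass
class Subnet:
    id: str
    cidr: str


def should_enable_metadata(conf: Conf, subnets: List[Subnet],
                           isolated: Dict[str, bool]) -> bool:
    """Return True if a metadata proxy should be started for these subnets."""
    if conf.enable_metadata_network and conf.enable_isolated_metadata:
        for s in subnets:
            net = ipaddress.ip_network(s.cidr, strict=False)
            # subnet_of() raises TypeError for mixed IPv4/IPv6, so compare versions first.
            if (net.version == METADATA_DEFAULT_CIDR.version
                    and net.subnet_of(METADATA_DEFAULT_CIDR)):
                return True
    if not conf.use_namespaces or not conf.enable_isolated_metadata:
        return False
    # isolated maps subnet id -> True when no router port is on that subnet.
    return any(isolated.get(s.id, False) for s in subnets)
```

With the configuration above (both flags `False`), the function returns `False` before any subnet is examined, so no metadata proxy is started by the DHCP agent.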

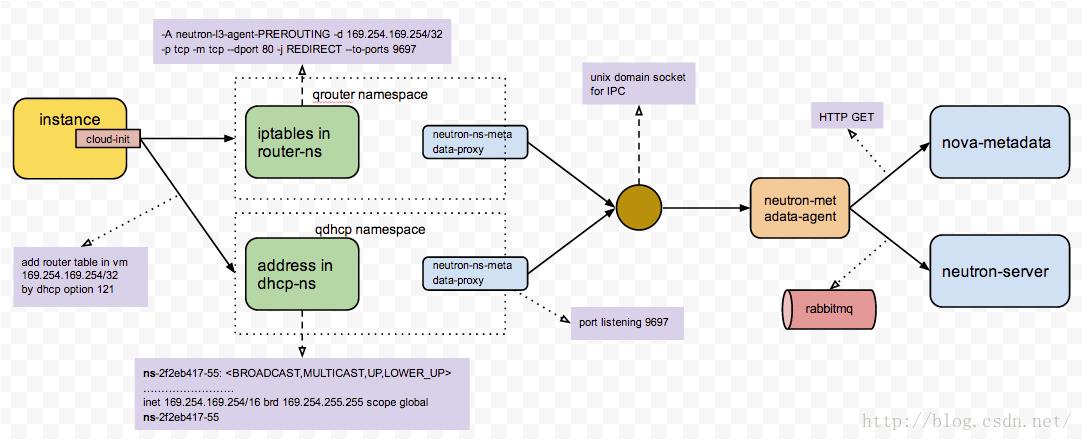

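Once metadata is enabled, a VM can obtain information about itself from the database, such as its IP and MAC address. The figure (a diagram taken from the Internet) illustrates this.

As the figure shows, a VM's metadata can be obtained from the database through either neutron-l3-agent or neutron-dhcp-agent. My OpenStack environment uses neutron-l3-agent. The code flow shown in the figure will be analyzed in a later article.

Let's continue with the code of configure_dhcp_for_network.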

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent.configure_dhcp_for_network
for subnet in network.subnets:
    if subnet.enable_dhcp:
        if self.call_driver('enable', network):
            dhcp_network_enabled = True
            self.cache.put(network)
        break
```

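The code also shows that:

1. A network can contain multiple subnets.

2. self.cache holds only networks that have at least one DHCP-enabled subnet and for which the driver's `enable` call succeeded.

If none of a network's subnets has DHCP enabled, the network is not stored in self.cache.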
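The caching rule above can be checked in isolation. The following is a simplified, hypothetical version of `configure_dhcp_for_network` that leaves out the metadata branch. The driver call is injected as a function, and the cache is a plain dict:

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Subnet:
    id: str
    enable_dhcp: bool


@dataclass
class Network:
    id: str
    subnets: List[Subnet] = field(default_factory=list)
    admin_state_up: bool = True


def configure(network: Network, enable: Callable[[Network], bool],
              cache: Dict[str, Network]) -> bool:
    """Cache the network only if some subnet has DHCP and the driver enable succeeds."""
    if not network.admin_state_up:
        return False
    # The driver is called at most once per network, however many subnets have DHCP.
    if any(s.enable_dhcp for s in network.subnets):
        if enable(network):
            cache[network.id] = network
            return True
    return False
```

Feeding it ext-net (DHCP disabled) and inter-net (DHCP enabled) leaves only inter-net in the cache, which matches the single directory seen under /var/lib/neutron/dhcp.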

For a network with a DHCP-enabled subnet, `self.call_driver('enable', network)` is executed:

```python
# /neutron/agent/dhcp/agent.py:DhcpAgent
def call_driver(self, action, network, **action_kwargs):
    """Invoke an action on a DHCP driver instance."""
    LOG.debug('Calling driver for network: %(net)s action: %(action)s',
              {'net': network.id, 'action': action})
    try:
        # the Driver expects something that is duck typed similar to
        # the base models.
        driver = self.dhcp_driver_cls(self.conf,
                                      network,
                                      self._process_monitor,
                                      self.dhcp_version,
                                      self.plugin_rpc)
        getattr(driver, action)(**action_kwargs)
        return True
    except exceptions.Conflict:
        # No need to resync here, the agent will receive the event related
        # to a status update for the network
        LOG.warning(_LW('Unable to %(action)s dhcp for %(net_id)s: there '
                        'is a conflict with its current state; please '
                        'check that the network and/or its subnet(s) '
                        'still exist.'),
                    {'net_id': network.id, 'action': action})
    except Exception as e:
        if getattr(e, 'exc_type', '') != 'IpAddressGenerationFailure':
            # Don't resync if port could not be created because of an IP
            # allocation failure. When the subnet is updated with a new
            # allocation pool or a port is deleted to free up an IP, this
            # will automatically be retried on the notification
            self.schedule_resync(e, network.id)
        if (isinstance(e, oslo_messaging.RemoteError)
                and e.exc_type == 'NetworkNotFound'
                or isinstance(e, exceptions.NetworkNotFound)):
            LOG.warning(_LW("Network %s has been deleted."), network.id)
        else:
            LOG.exception(_LE('Unable to %(action)s dhcp for %(net_id)s.'),
                          {'net_id': network.id, 'action': action})
```

call_driver creates a neutron.agent.linux.dhcp.Dnsmasq object for the network and uses getattr to invoke the method named by `action`, here `enable`.
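The dispatch-by-name pattern and its error policy can be shown with toy classes. In this sketch, `FakeDriver`, `CALLS` and the local `Conflict` exception are hypothetical stand-ins for Dnsmasq and neutron's exceptions. The `resync` dict plays the role of `schedule_resync`:

```python
from typing import Any, Dict, List

CALLS: List[str] = []  # records which driver actions ran


class Conflict(Exception):
    """Stand-in for neutron's exceptions.Conflict."""


class FakeDriver:
    """Hypothetical driver; a new instance is built for every call, as in call_driver."""

    def __init__(self, network_id: str):
        self.network_id = network_id

    def enable(self) -> None:
        CALLS.append(f"enable {self.network_id}")

    def disable(self, retain_port: bool = False) -> None:
        CALLS.append(f"disable {self.network_id} retain_port={retain_port}")


def call_driver(driver_cls, action: str, network_id: str,
                resync: Dict[str, Exception], **action_kwargs: Any) -> bool:
    """Instantiate the driver and invoke `action` on it by name."""
    try:
        driver = driver_cls(network_id)
        getattr(driver, action)(**action_kwargs)
        return True
    except Conflict:
        # Not resynced: a later network update notification fixes the state.
        return False
    except Exception as e:
        if getattr(e, "exc_type", "") != "IpAddressGenerationFailure":
            resync[network_id] = e  # remember the failure for a later full sync
        return False
```

An unknown action name surfaces as an `AttributeError` and is scheduled for resync like any other unexpected failure.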

```python
# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
def enable(self):
    """Enables DHCP for this network by spawning a local process."""
    if self.active:
        self.restart()
    elif self._enable_dhcp():
        utils.ensure_dir(self.network_conf_dir)
        interface_name = self.device_manager.setup(self.network)
        self.interface_name = interface_name
        self.spawn_process()
```

enable first checks whether this network's DHCP server (its dnsmasq process) is already active.

```python
# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
@property
def active(self):
    return self._get_process_manager().active


# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
def _get_process_manager(self, cmd_callback=None):
    return external_process.ProcessManager(
        conf=self.conf,
        uuid=self.network.id,
        namespace=self.network.namespace,
        default_cmd_callback=cmd_callback,
        pid_file=self.get_conf_file_name('pid'),
        run_as_root=True)


# /neutron/agent/linux/external_process.py:ProcessManager
@property
def active(self):
    pid = self.pid
    if pid is None:
        return False

    cmdline = '/proc/%s/cmdline' % pid
    try:
        with open(cmdline, "r") as f:
            return self.uuid in f.readline()
    except IOError:
        return False


# /neutron/agent/linux/external_process.py:ProcessManager
@property
def pid(self):
    """Last known pid for this external process spawned for this uuid."""
    return utils.get_value_from_file(self.get_pid_file_name(), int)


# /neutron/agent/linux/external_process.py:ProcessManager
def get_pid_file_name(self):
    """Returns the file name for a given kind of config file."""
    if self.pid_file:
        return self.pid_file
    else:
        return utils.get_conf_file_name(self.pids_path,
                                        self.uuid,
                                        self.service_pid_fname)


# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
def get_conf_file_name(self, kind):
    """Returns the file name for a given kind of config file."""
    return os.path.join(self.network_conf_dir, kind)


# /neutron/agent/linux/dhcp.py:DhcpLocalProcess.__init__ (excerpt)
self.confs_dir = self.get_confs_dir(conf)
self.network_conf_dir = os.path.join(self.confs_dir, network.id)


# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
@staticmethod
def get_confs_dir(conf):
    return os.path.abspath(os.path.normpath(conf.dhcp_confs))
```

self.active first reads the pid from the `pid` file in /var/lib/neutron/dhcp/${network.id}:

```
[root@jun2 8165bc3d-400a-48a0-9186-bf59f7f94b05]# ll
total 20
-rw-r--r--. 1 neutron neutron 116 May 20 20:30 addn_hosts
-rw-r--r--. 1 neutron neutron 120 May 20 20:30 host
-rw-r--r--. 1 neutron neutron  14 May 20 20:30 interface
-rw-r--r--. 1 neutron neutron  71 May 20 20:30 opts
-rw-r--r--. 1 root    root      5 May 20 20:30 pid
[root@jun2 8165bc3d-400a-48a0-9186-bf59f7f94b05]# cat pid
3235
```

It then builds `cmdline = '/proc/%s/cmdline' % pid` and checks whether /proc/${pid}/cmdline contains network.id. If it does, the dnsmasq process is active; otherwise it is not.

```
[root@jun2 8165bc3d-400a-48a0-9186-bf59f7f94b05]# cat /proc/3235/cmdline
dnsmasq--no-hosts--no-resolv--strict-order--bind-interfaces--interface=ns-712a2c63-e6--except-interface=lo--pid-file=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/pid--dhcp-hostsfile=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/host--addn-hosts=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/addn_hosts--dhcp-optsfile=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/opts--leasefile-ro--dhcp-authoritative--dhcp-range=set:tag0,172.16.0.0,static,86400s--dhcp-lease-max=65536--conf-file=--domain=openstacklocal
```
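The arguments in /proc/<pid>/cmdline are separated by NUL bytes, which is why `cat` prints them run together. The liveness check can be reproduced as follows. This is a minimal sketch: the `proc_root` parameter is a hypothetical addition that makes the function testable against a fake directory, and error handling is simplified compared with `utils.get_value_from_file`:

```python
import os
from typing import Optional


def read_pid(pid_file: str) -> Optional[int]:
    """Return the integer stored in pid_file, or None if it is missing or malformed."""
    try:
        with open(pid_file) as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return None


def is_active(pid_file: str, network_id: str, proc_root: str = "/proc") -> bool:
    """Like ProcessManager.active: the pid must exist and its cmdline must mention the network id."""
    pid = read_pid(pid_file)
    if pid is None:
        return False
    try:
        with open(os.path.join(proc_root, str(pid), "cmdline"), "rb") as f:
            # Arguments are NUL-separated; replace the separators to get readable text.
            cmdline = f.read().replace(b"\0", b" ").decode(errors="replace")
    except OSError:
        return False
    return network_id in cmdline
```

Checking the cmdline, rather than only whether the pid exists, guards against a stale pid file whose number has since been reused by an unrelated process.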

Here we mainly follow the path where the dnsmasq process is already active. That path first calls disable and then ends up in the not-active path anyway.

In that case, restart is called:

```python
# /neutron/agent/linux/dhcp.py:DhcpBase
def restart(self):
    """Restart the dhcp service for the network."""
    self.disable(retain_port=True)
    self.enable()
```

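restart first calls disable to tear down the existing dnsmasq process and its related state, then calls enable again. On this second pass through enable, the active branch is no longer taken, because disable has already killed the old dnsmasq process. Instead, the branch that spawns a new dnsmasq process runs. We won't walk through disable, since it essentially reverses enable: enable creates the dnsmasq process and its configuration files, and disable kills the process and removes those files. So we go straight to enable.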

```python
# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
def enable(self):
    """Enables DHCP for this network by spawning a local process."""
    if self.active:
        self.restart()
    elif self._enable_dhcp():
        utils.ensure_dir(self.network_conf_dir)
        interface_name = self.device_manager.setup(self.network)
        self.interface_name = interface_name
        self.spawn_process()


# /neutron/agent/linux/dhcp.py:DhcpLocalProcess
def _enable_dhcp(self):
    """check if there is a subnet within the network with dhcp enabled."""
    for subnet in self.network.subnets:
        if subnet.enable_dhcp:
            return True
    return False
```

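In the branch that spawns dnsmasq, enable checks whether the network has any DHCP-enabled subnet. The dnsmasq process is created only if at least one subnet has DHCP enabled.

Note that call_driver, which invokes enable, is itself called from a loop over all active networks in sync_state. If every network has DHCP-enabled subnets, each network gets its own dnsmasq process when enable is called for it. Here is my OpenStack environment as an example: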

```
[root@jun2 dhcp]# ll
total 0
drwxr-xr-x. 2 neutron neutron 71 May 21 07:53 8165bc3d-400a-48a0-9186-bf59f7f94b05
drwxr-xr-x. 2 neutron neutron 71 May 21 08:17 8c0b6ddf-8928-46e9-8caf-2416be7a48b8
[root@jun2 dhcp]#
[root@jun2 dhcp]# ps -efl | grep dhcp
4 S neutron 1280 1 0 80 0 - 86928 ep_pol 07:49 ? 00:00:07 /usr/bin/python2 /usr/bin/neutron-dhcp-agent --config-file /usr/share/neutron/neutron-dist.conf --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/dhcp_agent.ini --config-dir /etc/neutron/conf.d/neutron-dhcp-agent --log-file /var/log/neutron/dhcp-agent.log
5 S nobody 2914 1 0 80 0 - 3880 poll_s 07:53 ? 00:00:00 dnsmasq --no-hosts --no-resolv --strict-order --bind-interfaces --interface=ns-712a2c63-e6 --except-interface=lo --pid-file=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/host --addn-hosts=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/8165bc3d-400a-48a0-9186-bf59f7f94b05/opts --leasefile-ro --dhcp-authoritative --dhcp-range=set:tag0,172.16.0.0,static,86400s --dhcp-lease-max=65536 --conf-file= --domain=openstacklocal
5 S nobody 3659 1 0 80 0 - 3880 poll_s 08:17 ? 00:00:00 dnsmasq --no-hosts --no-resolv --strict-order --bind-interfaces --interface=ns-2434d651-41 --except-interface=lo --pid-file=/var/lib/neutron/dhcp/8c0b6ddf-8928-46e9-8caf-2416be7a48b8/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/8c0b6ddf-8928-46e9-8caf-2416be7a48b8/host --addn-hosts=/var/lib/neutron/dhcp/8c0b6ddf-8928-46e9-8caf-2416be7a48b8/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/8c0b6ddf-8928-46e9-8caf-2416be7a48b8/opts --leasefile-ro --dhcp-authoritative --dhcp-range=set:tag0,10.0.0.0,static,86400s --dhcp-lease-max=16777216 --conf-file= --domain=openstacklocal
0 S root 3723 2723 0 80 0 - 28161 pipe_w 08:17 pts/0 00:00:00 grep --color=auto dhcp
```

Let's continue with the branch of enable that creates the dnsmasq process.

```python
# /neutron/agent/linux/utils.py
def ensure_dir(dir_path):
    """Ensure a directory with 755 permissions mode."""
    if not os.path.isdir(dir_path):
        os.makedirs(dir_path, 0o755)
```

First, enable checks whether self.network_conf_dir (i.e. /var/lib/neutron/dhcp/{network.id}) exists. If it does not, the directory is created with mode 755.

Then DeviceManager's setup method is called:

```python
# /neutron/agent/linux/dhcp.py:DeviceManager
def setup(self, network):
    """Create and initialize a device for network's DHCP on this host."""
    port = self.setup_dhcp_port(network)
    interface_name = self.get_interface_name(network, port)

    if ip_lib.ensure_device_is_ready(interface_name,
                                     namespace=network.namespace):
        LOG.debug('Reusing existing device: %s.', interface_name)
    else:
        self.driver.plug(network.id,
                         port.id,
                         interface_name,
                         port.mac_address,
                         namespace=network.namespace)
        self.fill_dhcp_udp_checksums(namespace=network.namespace)
    ip_cidrs = []
    for fixed_ip in port.fixed_ips:
        subnet = fixed_ip.subnet
        if not ipv6_utils.is_auto_address_subnet(subnet):
            net = netaddr.IPNetwork(subnet.cidr)
            ip_cidr = '%s/%s' % (fixed_ip.ip_address, net.prefixlen)
            ip_cidrs.append(ip_cidr)

    if (self.conf.enable_isolated_metadata and
            self.conf.use_namespaces):
        ip_cidrs.append(METADATA_DEFAULT_CIDR)

    self.driver.init_l3(interface_name, ip_cidrs,
                        namespace=network.namespace)

    # ensure that the dhcp interface is first in the list
    if network.namespace is None:
        device = ip_lib.IPDevice(interface_name)
        device.route.pullup_route(interface_name)

    if self.conf.use_namespaces:
        self._set_default_route(network, interface_name)

    return interface_name
```

As its docstring says, setup creates and initializes the device used for the network's DHCP on this host.

```python
# /neutron/agent/linux/dhcp.py:DeviceManager
def setup_dhcp_port(self, network):
    """Create/update DHCP port for the host if needed and return port."""

    device_id = self.get_device_id(network)
    subnets = {}
    dhcp_enabled_subnet_ids = []
    for subnet in network.subnets:
        if subnet.enable_dhcp:
            dhcp_enabled_subnet_ids.append(subnet.id)
            subnets[subnet.id] = subnet

    dhcp_port = None
    for port in network.ports:
        port_device_id = getattr(port, 'device_id', None)
        if port_device_id == device_id:
            port_fixed_ips = []
            ips_needs_removal = False
            for fixed_ip in port.fixed_ips:
                if fixed_ip.subnet_id in dhcp_enabled_subnet_ids:
                    port_fixed_ips.append(
                        {'subnet_id': fixed_ip.subnet_id,
                         'ip_address': fixed_ip.ip_address})
                    dhcp_enabled_subnet_ids.remove(fixed_ip.subnet_id)
                else:
                    ips_needs_removal = True

            # If there are dhcp_enabled_subnet_ids here that means that
            # we need to add those to the port and call update.
            if dhcp_enabled_subnet_ids or ips_needs_removal:
                port_fixed_ips.extend(
                    [dict(subnet_id=s) for s in dhcp_enabled_subnet_ids])
                dhcp_port = self.plugin.update_dhcp_port(
                    port.id, {'port': {'network_id': network.id,
                                       'fixed_ips': port_fixed_ips}})
                if not dhcp_port:
                    raise exceptions.Conflict()
            else:
                dhcp_port = port
            # break since we found port that matches device_id
            break

    # check for a reserved DHCP port
    if dhcp_port is None:
        LOG.debug('DHCP port %(device_id)s on network %(network_id)s'
                  ' does not yet exist. Checking for a reserved port.',
                  {'device_id': device_id, 'network_id': network.id})
        for port in network.ports:
            port_device_id = getattr(port, 'device_id', None)
            if port_device_id == constants.DEVICE_ID_RESERVED_DHCP_PORT:
                dhcp_port = self.plugin.update_dhcp_port(
                    port.id, {'port': {'network_id': network.id,
                                       'device_id': device_id}})
                if dhcp_port:
                    break

    # DHCP port has not yet been created.
    if dhcp_port is None:
        LOG.debug('DHCP port %(device_id)s on network %(network_id)s'
                  ' does not yet exist.', {'device_id': device_id,
                                           'network_id': network.id})
        port_dict = dict(
            name='',
            admin_state_up=True,
            device_id=device_id,
            network_id=network.id,
            tenant_id=network.tenant_id,
            fixed_ips=[dict(subnet_id=s) for s in dhcp_enabled_subnet_ids])
        dhcp_port = self.plugin.create_dhcp_port({'port': port_dict})

    if not dhcp_port:
        raise exceptions.Conflict()

    # Convert subnet_id to subnet dict
    fixed_ips = [dict(subnet_id=fixed_ip.subnet_id,
                      ip_address=fixed_ip.ip_address,
                      subnet=subnets[fixed_ip.subnet_id])
                 for fixed_ip in dhcp_port.fixed_ips]

    ips = [DictModel(item) if isinstance(item, dict) else item
           for item in fixed_ips]
    dhcp_port.fixed_ips = ips

    return dhcp_port
```
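The first loop of setup_dhcp_port reconciles an existing DHCP port's fixed IPs with the network's DHCP-enabled subnets. The hypothetical helper `reconcile_fixed_ips` below isolates that step using plain dicts. It keeps IPs on DHCP-enabled subnets and drops IPs on other subnets. It also requests an address on any DHCP subnet the port is missing, and reports whether `update_dhcp_port` would be needed:

```python
from typing import Dict, List, Tuple


def reconcile_fixed_ips(existing: List[Dict[str, str]],
                        dhcp_subnet_ids: List[str]
                        ) -> Tuple[List[Dict[str, str]], bool]:
    """Return (new_fixed_ips, needs_update) for an existing DHCP port."""
    missing = list(dhcp_subnet_ids)  # copy: the caller's list stays untouched
    kept: List[Dict[str, str]] = []
    needs_removal = False
    for ip in existing:
        if ip["subnet_id"] in missing:
            kept.append({"subnet_id": ip["subnet_id"], "ip_address": ip["ip_address"]})
            missing.remove(ip["subnet_id"])
        else:
            # IP on a subnet without DHCP (or a duplicate): drop it via an update.
            needs_removal = True
    # Entries without 'ip_address' ask the server to allocate one.
    kept.extend({"subnet_id": s} for s in missing)
    return kept, bool(missing) or needs_removal
```

For the port in the environment here (one IP, 172.16.0.2, on the only DHCP-enabled subnet), nothing needs to change, so the existing port is reused as-is.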

setup_dhcp_port creates or updates the DHCP port and returns the resulting port. In my OpenStack environment, the DHCP port looks like this:

```python
dhcp_port = {
    u'status': u'ACTIVE',
    u'binding:host_id': u'jun2',
    u'allowed_address_pairs': [],
    u'extra_dhcp_opts': [],
    u'device_owner': u'network:dhcp',
    u'binding:profile': {},
    u'fixed_ips': [{
        'subnet_id': u'ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f',
        'subnet': {
            u'name': u'inter-sub',
            u'enable_dhcp': True,
            u'network_id': u'8165bc3d-400a-48a0-9186-bf59f7f94b05',
            u'tenant_id': u'befa06e66e8047a1929a3912fff2c591',
            u'dns_nameservers': [],
            u'ipv6_ra_mode': None,
            u'allocation_pools': [{u'start': u'172.16.0.2', u'end': u'172.16.255.254'}],
            u'gateway_ip': u'172.16.0.1',
            u'shared': False,
            u'ip_version': 4,
            u'host_routes': [],
            u'cidr': u'172.16.0.0/16',
            u'ipv6_address_mode': None,
            u'id': u'ec1028b2-7cb0-4feb-b974-6b8ea7e7f08f',
            u'subnetpool_id': None
        },
        'ip_address': u'172.16.0.2'
    }],
    u'id': u'712a2c63-e610-42c9-9ab3-4e8b6540d125',
    u'security_groups': [],
    u'device_id': u'dhcp2156d71d-f5c3-5752-9e43-4e8290a5696a-8165bc3d-400a-48a0-9186-bf59f7f94b05',
    u'name': u'',
    u'admin_state_up': True,
    u'network_id': u'8165bc3d-400a-48a0-9186-bf59f7f94b05',
    u'tenant_id': u'befa06e66e8047a1929a3912fff2c591',
    u'binding:vif_details': {u'port_filter': True},
    u'binding:vnic_type': u'normal',
    u'binding:vif_type': u'bridge',
    u'mac_address': u'fa:16:3e:65:29:6d'
}
```

This DHCP port is the port shown in the following dashboard screenshot.

setup then uses the port returned by setup_dhcp_port to obtain the interface name:

```python
# /neutron/agent/linux/dhcp.py:DeviceManager
class DeviceManager(object):

    def __init__(self, conf, plugin):
        self.conf = conf
        self.plugin = plugin
        if not conf.interface_driver:
            LOG.error(_LE('An interface driver must be specified'))
            raise SystemExit(1)
        try:
            self.driver = importutils.import_object(
                conf.interface_driver, conf)
        except Exception as e:
            LOG.error(_LE("Error importing interface driver '%(driver)s': "
                          "%(inner)s"),
                      {'driver': conf.interface_driver,
                       'inner': e})
            raise SystemExit(1)

    def get_interface_name(self, network, port):
        """Return interface(device) name for use by the DHCP process."""
        return self.driver.get_device_name(port)
```

The interface_driver parameter in /etc/neutron/dhcp_agent.ini determines which interface driver object is created. Its value is:

```ini
interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver
```

So get_interface_name ends up calling the get_device_name method of neutron.agent.linux.interface.BridgeInterfaceDriver.

```python
# /neutron/agent/linux/interface.py:LinuxInterfaceDriver
def get_device_name(self, port):
    return (self.DEV_NAME_PREFIX + port.id)[:self.DEV_NAME_LEN]


# /neutron/agent/linux/interface.py:LinuxInterfaceDriver
# from linux IF_NAMESIZE
DEV_NAME_LEN = 14


# /neutron/agent/linux/interface.py:BridgeInterfaceDriver
DEV_NAME_PREFIX = 'ns-'
```

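neutron.agent.linux.interface.BridgeInterfaceDriver inherits from LinuxInterfaceDriver. The interface_name computed in setup is therefore 'ns-' followed by the first 11 characters of the port ID, 14 characters in total (DEV_NAME_LEN). For the DHCP port returned above, the port ID is '712a2c63-e610-42c9-9ab3-4e8b6540d125', so interface_name = 'ns-712a2c63-e6'.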
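The naming rule can be checked with a small standalone sketch. It reuses DEV_NAME_PREFIX and DEV_NAME_LEN from the excerpts above; the tap-side name follows the prefix replacement performed later in plug, assuming n_const.TAP_DEVICE_PREFIX is 'tap'. The function names are illustrative only.

```python
# Values taken from the interface.py excerpts; TAP_PREFIX is an assumed
# stand-in for n_const.TAP_DEVICE_PREFIX.
DEV_NAME_PREFIX = 'ns-'
DEV_NAME_LEN = 14
TAP_PREFIX = 'tap'


def get_device_name(port_id, prefix=DEV_NAME_PREFIX, max_len=DEV_NAME_LEN):
    """Prefix the port ID and truncate the result to max_len characters."""
    return (prefix + port_id)[:max_len]


def get_tap_name(device_name, prefix=DEV_NAME_PREFIX):
    """Swap the namespace-side prefix for the root-namespace tap prefix."""
    return device_name.replace(prefix, TAP_PREFIX)


port_id = '712a2c63-e610-42c9-9ab3-4e8b6540d125'
ns_name = get_device_name(port_id)
print(ns_name)                # ns-712a2c63-e6
print(get_tap_name(ns_name))  # tap712a2c63-e6
```

Because both prefixes are three characters long, the tap name keeps the same 11-character slice of the port ID and the same 14-character length.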

Back to the setup function.

```python
#/neutron/agent/linux/dhcp.py:DeviceManager.setup
if ip_lib.ensure_device_is_ready(interface_name,
                                 namespace=network.namespace):
    LOG.debug('Reusing existing device: %s.', interface_name)
else:
    self.driver.plug(network.id,
                     port.id,
                     interface_name,
                     port.mac_address,
                     namespace=network.namespace)
    self.fill_dhcp_udp_checksums(namespace=network.namespace)
```

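Once interface_name is known, setup checks whether that device has already been created in the network's namespace on the local host. The check is roughly equivalent to running a command like: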

```
[root@jun2 ~]# ip netns exec qdhcp-8165bc3d-400a-48a0-9186-bf59f7f94b05 ip link set ns-712a2c63-e6 up
```

If this command fails, the device does not exist, so the else branch runs and calls self.driver.plug.
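The existence check boils down to "try to bring the link up and treat failure as absence". Below is a minimal sketch of that idea, assuming (as in neutron's ip_lib) that a failed command surfaces as RuntimeError, and ignoring privilege escalation such as rootwrap. `ip_cmd`, `run` and the `runner` parameter are hypothetical helpers introduced for illustration.

```python
import subprocess


def ip_cmd(args, namespace=None):
    """Build an `ip` argv, wrapped in `ip netns exec <ns>` if a namespace is given."""
    cmd = ['ip'] + list(args)
    if namespace:
        cmd = ['ip', 'netns', 'exec', namespace] + cmd
    return cmd


def run(cmd):
    """Default runner: execute cmd and raise RuntimeError on a non-zero exit."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    if proc.returncode != 0:
        raise RuntimeError(proc.stderr.strip())
    return proc.stdout


def ensure_device_is_ready(device_name, namespace=None, runner=run):
    """Try to set the device up; a failure is taken to mean it does not exist."""
    try:
        runner(ip_cmd(['link', 'set', device_name, 'up'], namespace))
    except RuntimeError:
        return False
    return True
```

Injecting the runner keeps the decision logic testable without root privileges or real namespaces; only the default `run` actually touches the system.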

```python
#/neutron/agent/linux/interface.py:BridgeInterfaceDriver
class BridgeInterfaceDriver(LinuxInterfaceDriver):
    """Driver for creating bridge interfaces."""

    DEV_NAME_PREFIX = 'ns-'

    def plug(self, network_id, port_id, device_name, mac_address,
             bridge=None, namespace=None, prefix=None):
        """Plugin the interface."""
        if not ip_lib.device_exists(device_name, namespace=namespace):
            ip = ip_lib.IPWrapper()

            # Enable agent to define the prefix
            tap_name = device_name.replace(prefix or self.DEV_NAME_PREFIX,
                                           n_const.TAP_DEVICE_PREFIX)
            # Create ns_veth in a namespace if one is configured.
            root_veth, ns_veth = ip.add_veth(tap_name, device_name,
                                             namespace2=namespace)
            ns_veth.link.set_address(mac_address)

            if self.conf.network_device_mtu:
                root_veth.link.set_mtu(self.conf.network_device_mtu)
                ns_veth.link.set_mtu(self.conf.network_device_mtu)

            root_veth.link.set_up()
            ns_veth.link.set_up()

        else:
            LOG.info(_LI("Device %s already exists"), device_name)
```

First, plug checks whether the device named ns-xxx already exists:

```python
#/neutron/agent/linux/ip_lib.py
def device_exists(device_name, namespace=None):
    """Return True if the device exists in the namespace."""
    try:
        dev = IPDevice(device_name, namespace=namespace)
        dev.set_log_fail_as_error(False)
        address = dev.link.address
    except RuntimeError:
        return False
    return bool(address)


#/neutron/agent/linux/ip_lib.py:IpLinkCommand
@property
def address(self):
    return self.attributes.get('link/ether')

#/neutron/agent/linux/ip_lib.py:IpLinkCommand
@property
def attributes(self):
    return self._parse_line(self._run(['o'], ('show', self.name)))

#/neutron/agent/linux/ip_lib.py:IpLinkCommand
def _parse_line(self, value):
    if not value:
        return {}

    # Drop the '\' that `ip -o` uses in place of newlines, then discard
    # everything up to the first '>' (index, name and <FLAGS>)
    device_name, settings = value.replace("\\", '').split('>', 1)
    tokens = settings.split()
    # Remaining tokens alternate key, value, key, value, ...
    keys = tokens[::2]
    values = [int(v) if v.isdigit() else v for v in tokens[1::2]]

    retval = dict(zip(keys, values))
    return retval
```

The call self._run(['o'], ('show', self.name)) is roughly equivalent to running a command like:

```
[root@jun2 ~]# ip netns exec qdhcp-8165bc3d-400a-48a0-9186-bf59f7f94b05 ip -o link show ns-712a2c63-e6
2: ns-712a2c63-e6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000\ link/ether fa:16:3e:65:29:6d brd ff:ff:ff:ff:ff:ff
```

The -o option makes ip print each record on a single line, replacing '\n' with '\'. _parse_line then parses this output, so the address property returns the MAC address fa:16:3e:65:29:6d (provided ns-712a2c63-e6 exists).
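To see how the one-line output becomes a dict, here is the _parse_line logic lifted into a standalone function and applied to the sample line above, written with the single backslash that -o actually emits. `parse_link_line` and `link_address` are illustrative names, not neutron functions.

```python
def parse_link_line(value):
    """Parse one line of `ip -o link show` output into a dict.

    Same steps as the _parse_line excerpt: strip the backslash that -o uses
    in place of newlines, skip everything up to the first '>', then pair
    the remaining tokens as key/value, converting all-digit values to int.
    """
    if not value:
        return {}
    _device, settings = value.replace('\\', '').split('>', 1)
    tokens = settings.split()
    keys = tokens[::2]
    values = [int(v) if v.isdigit() else v for v in tokens[1::2]]
    return dict(zip(keys, values))


def link_address(show_output):
    """Return the MAC address, like the IpLinkCommand.address property."""
    return parse_link_line(show_output).get('link/ether')


sample = ('2: ns-712a2c63-e6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 '
          'qdisc pfifo_fast state UP mode DEFAULT qlen 1000\\ '
          'link/ether fa:16:3e:65:29:6d brd ff:ff:ff:ff:ff:ff')
attrs = parse_link_line(sample)
print(attrs['mtu'], attrs['qlen'])  # 1500 1000
print(link_address(sample))         # fa:16:3e:65:29:6d
```

An empty output yields an empty dict, so the address lookup returns None, which is what makes device_exists evaluate to False in that case.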

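Now suppose the ns- device does not exist. What happens then? The if branch of plug runs roughly the following commands (this walkthrough uses the test devices tap_test and ns_test in the namespace qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8):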

Command1:

```
[root@jun2 ~]# ip link add tap_test type veth peer name ns_test netns qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8
```

Result:

```
[root@jun2 ~]# ip netns exec qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8 ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ns-2434d651-41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether fa:16:3e:13:d1:c2 brd ff:ff:ff:ff:ff:ff
3: ns_test: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
    link/ether e6:b7:08:bd:4c:d1 brd ff:ff:ff:ff:ff:ff

[root@jun2 ~]# ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether 00:0c:29:71:c8:02 brd ff:ff:ff:ff:ff:ff
... ... ...
13: brqcad98138-6e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT
    link/ether 00:0c:29:71:c8:16 brd ff:ff:ff:ff:ff:ff
14: tap_test: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
    link/ether b6:c7:53:22:d7:63 brd ff:ff:ff:ff:ff:ff
```

Command2:

```
[root@jun2 ~]# ip netns exec qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8 ip link set ns_test address fa:16:3e:11:11:11
```

Result:

```
[root@jun2 ~]# ip netns exec qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8 ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ns-2434d651-41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether fa:16:3e:13:d1:c2 brd ff:ff:ff:ff:ff:ff
3: ns_test: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
    link/ether fa:16:3e:11:11:11 brd ff:ff:ff:ff:ff:ff
```

If network_device_mtu is set to 1000 in /etc/neutron/dhcp_agent.ini, the MTU of both devices is set as well.

Command3 (optional):

```
[root@jun2 ~]# ip link set tap_test mtu 1000
[root@jun2 ~]# ip netns exec qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8 ip link set ns_test mtu 1000
```

Result:

```
[root@jun2 ~]# ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether 00:0c:29:71:c8:02 brd ff:ff:ff:ff:ff:ff
13: brqcad98138-6e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT
    link/ether 00:0c:29:71:c8:16 brd ff:ff:ff:ff:ff:ff
14: tap_test: <BROADCAST,MULTICAST> mtu 1000 qdisc noop state DOWN mode DEFAULT qlen 1000
    link/ether b6:c7:53:22:d7:63 brd ff:ff:ff:ff:ff:ff

[root@jun2 ~]# ip netns exec qdhcp-8c0b6ddf-8928-46e9-8caf-2416be7a48b8 ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ns-2434d651-41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether fa:16:3e:13:d1:c2 brd ff:ff:ff:ff:ff:ff
3: ns_test: <BROADCAST,MULTICAST> mtu 1000 qdisc noop state DOWN mode DEFAULT qlen 1000
    link/ether fa:16:3e:11:11:11 brd ff:ff:ff:ff:ff:ff
```

Command4:
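Putting the steps together, the if branch of BridgeInterfaceDriver.plug maps onto a short sequence of ip commands: add_veth, set_address on the namespace end, the optional MTU change, and finally set_up on both ends (root_veth.link.set_up() and ns_veth.link.set_up()). The sketch below only builds the argument lists and runs nothing; `plug_commands` is a hypothetical helper, and the exact commands neutron issues (for example through rootwrap) may differ in detail.

```python
def plug_commands(tap_name, ns_name, namespace, mac_address, mtu=None):
    """Return, in order, the `ip` commands that the if branch of
    BridgeInterfaceDriver.plug roughly corresponds to."""
    in_ns = ['ip', 'netns', 'exec', namespace]
    cmds = [
        # ip.add_veth(tap_name, device_name, namespace2=namespace):
        # create the veth pair and move the peer into the namespace
        ['ip', 'link', 'add', tap_name, 'type', 'veth',
         'peer', 'name', ns_name, 'netns', namespace],
        # ns_veth.link.set_address(mac_address)
        in_ns + ['ip', 'link', 'set', ns_name, 'address', mac_address],
    ]
    if mtu:
        # Only when network_device_mtu is configured
        cmds.append(['ip', 'link', 'set', tap_name, 'mtu', str(mtu)])
        cmds.append(in_ns + ['ip', 'link', 'set', ns_name, 'mtu', str(mtu)])
    # root_veth.link.set_up() and ns_veth.link.set_up()
    cmds.append(['ip', 'link', 'set', tap_name, 'up'])
    cmds.append(in_ns + ['ip', 'link', 'set', ns_name, 'up'])
    return cmds


for cmd in plug_commands('tap712a2c63-e6', 'ns-712a2c63-e6',
                         'qdhcp-8165bc3d-400a-48a0-9186-bf59f7f94b05',
                         'fa:16:3e:65:29:6d', mtu=1000):
    print(' '.join(cmd))
```

Returning argv lists rather than shell strings avoids quoting issues and makes the sequence easy to compare against the manual commands above.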