TensorFlow Normalization

Posted by 寂寞的小乞丐



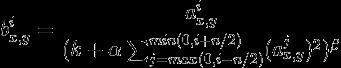

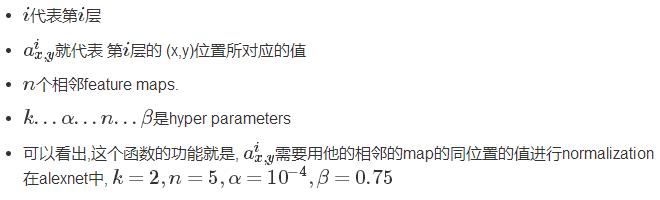

local_response_normalization

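local_response_normalization comes from the paper "ImageNet Classification with Deep Convolutional Neural Networks", which reports that this kind of normalization helps generalization.

After a conv2d or pooling layer we have a tensor of shape [batch_size, height, width, channels]. From here on, each channel is referred to as a layer (feature map), and batch_size is ignored.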

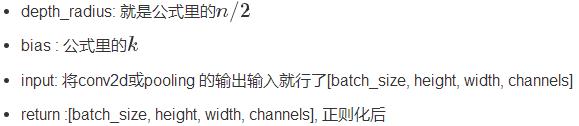

    tf.nn.local_response_normalization(input, depth_radius=None, bias=None, alpha=None, beta=None, name=None)

Local Response Normalization. The 4-D input tensor is treated as a 3-D array of 1-D vectors (along the last dimension), and each vector is normalized independently. Within a given vector, each component is divided by the weighted, squared sum of inputs within depth_radius. In detail:

    sqr_sum[a, b, c, d] = sum(input[a, b, c, d - depth_radius : d + depth_radius + 1] ** 2)
    output = input / (bias + alpha * sqr_sum) ** beta

Args:

- input: A Tensor. Must be one of the following types: float32, half. 4-D.
- depth_radius: An optional int. Defaults to 5. 0-D. Half-width of the 1-D normalization window.
- bias: An optional float. Defaults to 1. An offset (usually positive to avoid dividing by 0).
- alpha: An optional float. Defaults to 1. A scale factor, usually positive.
- beta: An optional float. Defaults to 0.5. An exponent.
- name: A name for the operation (optional).

Example:

    import tensorflow as tf  # written for the TensorFlow 1.x graph API (tf.Session)

    a = tf.constant([
        [[1.0, 2.0, 3.0, 4.0],
         [5.0, 6.0, 7.0, 8.0],
         [8.0, 7.0, 6.0, 5.0],
         [4.0, 3.0, 2.0, 1.0]],
        [[4.0, 3.0, 2.0, 1.0],
         [8.0, 7.0, 6.0, 5.0],
         [1.0, 2.0, 3.0, 4.0],
         [5.0, 6.0, 7.0, 8.0]]
    ])
    # Reshape a into a feature map of shape [batch: 1, height: 2, width: 2, channels: 8].
    a = tf.reshape(a, [1, 2, 2, 8])

    # Normalize across channels with depth_radius=2, bias=0, alpha=1, beta=1.
    normal_a = tf.nn.local_response_normalization(a, depth_radius=2, bias=0, alpha=1, beta=1)
    with tf.Session() as sess:
        print("feature map:")
        image = sess.run(a)
        print(image)
        print("normalized feature map:")
        normal = sess.run(normal_a)
        print(normal)

    feature map:
    [[[[ 1.  2.  3.  4.  5.  6.  7.  8.]
       [ 8.  7.  6.  5.  4.  3.  2.  1.]]

      [[ 4.  3.  2.  1.  8.  7.  6.  5.]
       [ 1.  2.  3.  4.  5.  6.  7.  8.]]]]
    normalized feature map:
    [[[[ 0.07142857  0.06666667  0.05454545  0.04444445  0.03703704  0.03157895  0.04022989  0.05369128]
       [ 0.05369128  0.04022989  0.03157895  0.03703704  0.04444445  0.05454545  0.06666667  0.07142857]]

      [[ 0.13793103  0.10000001  0.0212766   0.00787402  0.05194805  0.04        0.03448276  0.04545454]
       [ 0.07142857  0.06666667  0.05454545  0.04444445  0.03703704  0.03157895  0.04022989  0.05369128]]]]

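Here I chose n/2=2 (depth_radius), k=0 (bias), α=1, β=1. For example, for the value "1" of the first pixel in channel 1, substituting into the formula gives 1/(1^2+2^2+3^2)=0.07142857. For the value "4" of the first pixel in channel 4, it gives 4/(2^2+3^2+4^2+5^2+6^2)=0.04444445, and so on.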
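The arithmetic above can be checked without TensorFlow. The following is a minimal pure-Python sketch that applies the documented formula to one pixel's channel vector. The helper name `lrn_vector` is hypothetical (it is not a TensorFlow function), and its default arguments simply mirror the defaults listed in the docstring.

```python
def lrn_vector(values, depth_radius=5, bias=1.0, alpha=1.0, beta=0.5):
    """Apply local response normalization to one pixel's channel vector.

    Hypothetical helper for illustration only. It follows the documented
    formula: output = input / (bias + alpha * sqr_sum) ** beta.
    """
    out = []
    for d, v in enumerate(values):
        # Channels d - depth_radius .. d + depth_radius, clipped at both ends.
        lo = max(0, d - depth_radius)
        hi = min(len(values), d + depth_radius + 1)
        sqr_sum = sum(u * u for u in values[lo:hi])
        out.append(v / (bias + alpha * sqr_sum) ** beta)
    return out


# First pixel of the example feature map, with the parameters used in the text.
pixel = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
normalized = lrn_vector(pixel, depth_radius=2, bias=0.0, alpha=1.0, beta=1.0)
print(normalized[0])  # 1 / (1 + 4 + 9)
print(normalized[3])  # 4 / (4 + 9 + 16 + 25 + 36)
```

Note that bias=0 is only safe here because no window is all zeros; with real activations a positive bias avoids dividing by 0.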

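Note: the feature map here has shape [1, 2, 2, 8], where 1 is the number of images, 2×2 is the image height and width, and 8 is the number of channels (maps). LRN works across the channel maps: each value is computed per pixel position and does not depend on the number of images.

An intuitive way to see this is to split the feature map into one image per channel: the first channel is [1, 8, 4, 1], the second is [2, 7, 3, 2], the third is [3, 6, 2, 3], and so on.

The computation then lines up one-to-one with the calculation above. At the edges, where the window would reach past the first or last channel, the missing terms are simply omitted.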
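To make the per-channel view concrete, the sketch below regroups the four pixel vectors of the example into one small image per channel, then lists which channel indices fall inside each normalization window. It is plain Python, and the variable names (`pixels`, `channels`, `windows`) are illustrative rather than taken from the original code.

```python
# The four pixels of the 2x2 feature map in row-major order; each holds 8 channel values.
pixels = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
    [8.0, 7.0, 6.0, 5.0, 4.0, 3.0, 2.0, 1.0],
    [4.0, 3.0, 2.0, 1.0, 8.0, 7.0, 6.0, 5.0],
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
]

# Transpose: channels[c] lists the value of channel c at every pixel.
channels = [list(column) for column in zip(*pixels)]
for number, image in enumerate(channels[:3], start=1):
    print("channel", number, image)

# With depth_radius=2, the window around channel index d is clipped at the edges,
# so the first and last channels sum over fewer neighbours.
depth_radius = 2
windows = [
    (max(0, d - depth_radius), min(len(channels) - 1, d + depth_radius))
    for d in range(len(channels))
]
print(windows[0], windows[3], windows[7])
```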

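Can you see the weakness of this approach? It does have some effect, since normalizing the pixel values helps the computation. For the image as a whole, however, it does little: each position is normalized by a different factor, so some features fail to show up, and sometimes the result is actually worse.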

batch_normalization

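Why "batch"? The end of the previous section already hints at the answer: normalizing a single image on its own works poorly, so we normalize over a batch of images instead.

As can be seen, after batch_normalization the shape of the data is unchanged; only the values change.

Out is then used as the input to the next layer.

Functions: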

tf.nn.batch_normalization()

    def batch_normalization(x, mean, variance, offset, scale, variance_epsilon, name=None)

Args:

- x: Input Tensor of arbitrary dimensionality.
- mean: A mean Tensor.
- variance: A variance Tensor.
- offset: An offset Tensor, often denoted β in equations, or None. If present, will be added to the normalized tensor.
- scale: A scale Tensor, often denoted γ in equations, or None. If present, the scale is applied to the normalized tensor.
- variance_epsilon: A small float number to avoid dividing by 0.
- name: A name for this operation (optional).

Returns: the normalized, scaled, offset tensor.

For convolutional layers, x has shape [batch, height, width, depth].

For convolutional layers, γ_i and β_i are shared across each feature map, so γ and β have shape [depth].
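As a rough illustration of how these arguments fit together, here is a NumPy sketch of the arithmetic: subtract the mean, divide by the square root of variance plus epsilon, then apply the scale γ and the offset β. The name `batch_norm_reference` is hypothetical, and the code is a reference sketch under those assumptions, not TensorFlow's implementation.

```python
import numpy as np


def batch_norm_reference(x, mean, variance, offset, scale, variance_epsilon):
    """Hypothetical NumPy sketch of the batch-normalization arithmetic."""
    inv = 1.0 / np.sqrt(variance + variance_epsilon)
    if scale is not None:
        inv = inv * scale          # gamma, shape [depth]
    out = (x - mean) * inv         # broadcasts over the last (depth) axis
    if offset is not None:
        out = out + offset         # beta, shape [depth]
    return out


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 5, 5, 3))  # [batch, height, width, depth]
mean = x.mean(axis=(0, 1, 2))      # one mean per channel
variance = x.var(axis=(0, 1, 2))   # one variance per channel
gamma = np.ones(3)                 # scale, shape [depth]
beta = np.zeros(3)                 # offset, shape [depth]

out = batch_norm_reference(x, mean, variance, beta, gamma, 1e-3)
print(out.shape)                   # same shape as x
print(out.mean(axis=(0, 1, 2)))    # close to 0 for every channel
```

Because γ and β have shape [depth], NumPy broadcasting applies the same pair of values to every pixel of a feature map, which is the sharing described above.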

Now we need a function that returns the mean and variance; see below.

tf.nn.moments()

    def moments(x, axes, shift=None, name=None, keep_dims=False)

For simple batch normalization, pass `axes=[0]` (batch only).

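For batch normalization after a convolution, x has shape [batch_size, height, width, depth]. Passing axes=[0, 1, 2] returns (mean, variance), where mean and variance are each 1-D tensors of shape [depth], one value per channel.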
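The shapes involved can be checked quickly with NumPy. In this sketch, `np.mean` and `np.var` stand in for tf.nn.moments (an assumption made so the example runs without TensorFlow), and the input is a small made-up array rather than real activations.

```python
import numpy as np

# Small deterministic input of shape [batch_size, height, width, depth] = [2, 2, 2, 3].
x = np.arange(24, dtype=np.float64).reshape(2, 2, 2, 3)

# Reduce over batch, height and width: one mean and one variance per channel.
mean = x.mean(axis=(0, 1, 2))
variance = x.var(axis=(0, 1, 2))
print(mean.shape, variance.shape)

# Reducing over the batch axis only keeps a separate statistic per pixel and channel.
print(x.mean(axis=0).shape)

# Per-channel statistics broadcast over the last axis when normalizing.
normalized = (x - mean) / np.sqrt(variance + 1e-5)
print(normalized.mean(axis=(0, 1, 2)))
```

Reducing over axes 0, 1 and 2 leaves only the depth axis, which matches the [depth] shape of γ and β.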

References:

https://blog.csdn.net/mao_xiao_feng/article/details/53488271

https://www.jianshu.com/p/c06aea337d5d

https://blog.csdn.net/u012436149/article/details/52985303
