Web Crawler Final Project

Posted by 区伯


    import json
    import urllib.request

    import jieba
    import matplotlib.pyplot as plt
    import numpy as np
    import xlwt
    from PIL import Image
    from wordcloud import WordCloud

    # AJAX search endpoint; the query is 前端 ("front end"), URL-encoded.
    ajaxUrlBegin = 'https://search-merger-ms.juejin.im/v1/search?query=%E5%89%8D%E7%AB%AF&page='
    ajaxUrlLast = '&raw_result=false&src=web'

    # Fetch result pages 0-24 and append every article title to finally.txt.
    with open('finally.txt', 'a', encoding='utf-8') as f:
        for page in range(0, 25):
            ajaxUrl = ajaxUrlBegin + str(page) + ajaxUrlLast
            response = urllib.request.urlopen(ajaxUrl)
            ajaxres = response.read().decode('utf-8')
            data = json.loads(ajaxres)  # parse the JSON string into a dict
            for item in data['d']:
                result = item['title']
                print(result + '\n')
                f.write(result + '\n')  # one title per line

    # Segment the collected titles into words with jieba.
    with open('finally.txt', 'r', encoding='utf-8') as f:
        text = f.read()
    stringList = list(jieba.cut(text))

    # Punctuation and filler words to leave out of the counts.
    symbol = {"/", "(", ")", " ", ";", "!", "、", ":", "+", "?", ",",
              "之", "你", "了", "吗", "】", "【", "\n"}
    stringSet = set(stringList) - symbol

    # Count how often each remaining word occurs.
    title_dict = {}
    for word in stringSet:
        title_dict[word] = stringList.count(word)
    print(title_dict)

    # Write the word counts to an Excel sheet, one word per row.
    wbk = xlwt.Workbook(encoding='utf-8')
    sheet = wbk.add_sheet("wordCount")
    for k, (word, count) in enumerate(title_dict.items()):
        sheet.write(k, 0, label=word)
        sheet.write(k, 1, label=count)
    wbk.save('前端数据.xls')

    # Draw the word cloud inside the shape given by test.jpg.
    font = r'C:\Windows\Fonts\simhei.ttf'  # a font with Chinese glyphs
    image = np.array(Image.open('test.jpg'))
    wordcloud = WordCloud(background_color='white', font_path=font, mask=image,
                          width=1000, height=860, margin=2)
    wordcloud.generate_from_frequencies(title_dict)  # size words by their counts
    plt.imshow(wordcloud)
    plt.axis("off")
    plt.show()
    wordcloud.to_file('c-cool.jpg')
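The crawl step comes down to two small pieces: building the URL for each page and pulling the titles out of the JSON reply. The sketch below shows one possible way to do both with the standard library only. `build_page_url` and `extract_titles` are hypothetical helper names, and the sample payload is invented for illustration; only the endpoint, the query parameters, and the `d` / `title` keys come from the script. Parsing with `json.loads` also copes with JSON literals such as `true` and `null`, which Python's `eval` would reject.

```python
import json
from urllib.parse import urlencode

# Endpoint taken from the script; everything else here is illustrative.
SEARCH_ENDPOINT = "https://search-merger-ms.juejin.im/v1/search"


def build_page_url(query, page):
    """Build the search URL for one result page (hypothetical helper)."""
    params = {"query": query, "page": page, "raw_result": "false", "src": "web"}
    # urlencode percent-encodes the UTF-8 bytes of non-ASCII values.
    return SEARCH_ENDPOINT + "?" + urlencode(params)


def extract_titles(payload):
    """Return the titles from one page of results (hypothetical helper).

    Assumes the payload is a JSON object whose "d" key holds a list of
    entries, each with a "title" field, as the script reads it.
    """
    data = json.loads(payload)
    # .get() keeps a page with no results from raising KeyError.
    return [entry["title"] for entry in data.get("d", []) if "title" in entry]


# Invented sample payload, not real API output.
sample = '{"d": [{"title": "CSS Grid basics", "hot": true}, {"title": "Vue tips", "tag": null}]}'
first_url = build_page_url("前端", 0)
titles = extract_titles(sample)
print(first_url)
print(titles)
```

Keeping the two helpers separate means the parsing can be checked against a saved response without touching the network.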

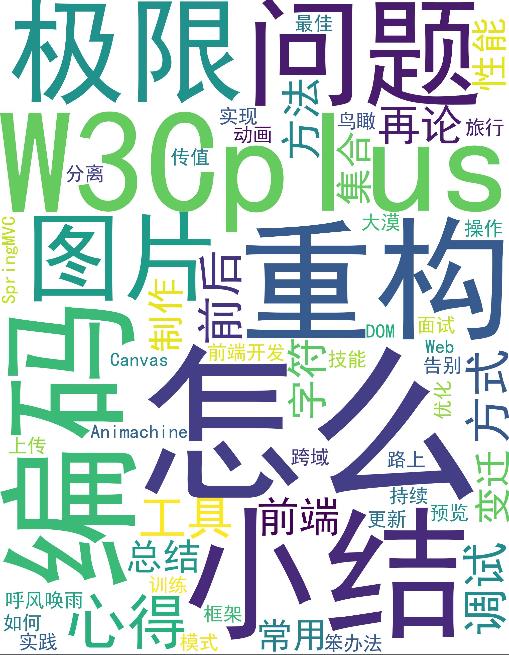

The generated word cloud
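The size of each word in the cloud is meant to reflect how often it appears in the titles, so the counting step matters. A minimal sketch of that step with `collections.Counter`, which tallies every token in a single pass; the token list and stop set below are made-up stand-ins for the jieba output and the symbol set, and `count_tokens` is a hypothetical helper name.

```python
from collections import Counter


def count_tokens(tokens, stop):
    """Count token occurrences, skipping stop tokens and whitespace-only tokens."""
    return Counter(t for t in tokens if t.strip() and t not in stop)


# Stand-in for the list that jieba.cut would return (invented data).
tokens = ["前端", "/", "Vue", "前端", " ", "CSS", "Vue", "前端"]
stop = {"/", " "}

counts = count_tokens(tokens, stop)
print(counts.most_common(2))  # → [('前端', 3), ('Vue', 2)]
```

A `Counter` is a `dict` subclass, so it can be used wherever a plain word-to-count mapping is expected.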
