Dynamically Loading Tokenizer Dictionaries in Solr

Posted by james-roger



The Solr version used here is 5.3.0.

In the schema.xml of your core (the one you created yourself), add a new field type:

<fieldType name="text_lj" class="solr.TextField" positionIncrementGap="100">
  <analyzer type="index">
    <!-- ik.conf is a file created in the same directory as schema.xml -->
    <tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="false" conf="ik.conf"/>
  </analyzer>
  <analyzer type="query">
    <!-- IKTokenizerFactory is the class we modify later in this post -->
    <tokenizer class="org.wltea.analyzer.lucene.IKTokenizerFactory" useSmart="false" conf="ik.conf"/>
  </analyzer>
</fieldType>

<field name="desc" type="text_lj" indexed="true" stored="true" required="true" multiValued="false"/>

ik.conf:

lastupdate=1

files=extDic.txt

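lastupdate is a version number: whenever you add new dictionary entries, increase it by 1 so the change gets picked up. files lists the dictionary files; several file names can be given, separated by ASCII commas. Create the file extDic.txt in the same directory.

Contents of extDic.txt (the file must be saved as UTF-8, one entry per line):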
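As a rough illustration of how these two keys can be interpreted, the sketch below parses ik.conf-style text with java.util.Properties, compares lastupdate against the last version seen, and splits files on commas or whitespace. The class IkConfSketch, its method names, and the second file name brands.txt are hypothetical and not part of IK Analyzer or Solr.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical helper for illustration; not part of IK Analyzer or Solr. */
final class IkConfSketch {
    // Highest version seen so far; -1 means nothing has been loaded yet.
    private long lastSeenVersion = -1;

    /**
     * Returns the dictionary files that should be (re)loaded, or an empty
     * list when the version has not increased or no files are listed.
     */
    List<String> filesToReload(String confText) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(confText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        long version = Long.parseLong(p.getProperty("lastupdate", "0").trim());
        if (version <= lastSeenVersion) {
            return new ArrayList<>();
        }
        lastSeenVersion = version;
        List<String> files = new ArrayList<>();
        // Split on commas and/or whitespace, skipping empty pieces.
        for (String name : p.getProperty("files", "").split("[,\\s]+")) {
            if (!name.isEmpty()) {
                files.add(name);
            }
        }
        return files;
    }

    public static void main(String[] args) {
        IkConfSketch conf = new IkConfSketch();
        // First read: version 1 is newer than -1, so the file is returned.
        System.out.println(conf.filesToReload("lastupdate=1\nfiles=extDic.txt"));
        // Same version again: nothing to reload.
        System.out.println(conf.filesToReload("lastupdate=1\nfiles=extDic.txt"));
        // Version bumped and a second (hypothetical) file added.
        System.out.println(conf.filesToReload("lastupdate=2\nfiles=extDic.txt,brands.txt"));
    }
}
```

The point of the version check is that editing a dictionary file alone has no effect; the reload only happens once lastupdate is raised.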

小红帽

华为手机

格力空调

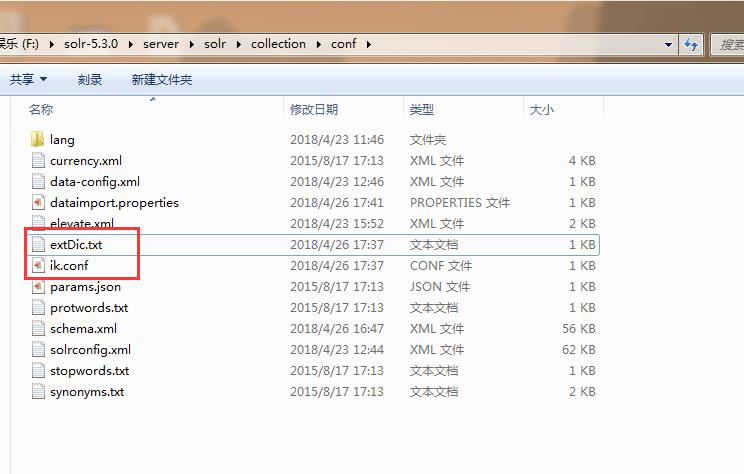

给出一个目录:

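The configuration is now complete. The main remaining work is to modify the source code of the IK analyzer. The basic idea is to create a thread that polls the configuration and reloads the dictionaries when it changes.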
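The polling idea can be sketched independently of Solr. The example below uses java.util.concurrent.ScheduledExecutorService rather than a hand-written Thread loop; that is a substitution made for illustration and not what the modified IK code in this post does. PollingSketch, its Job interface, and the 50 ms interval are hypothetical.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical poller for illustration; not part of IK Analyzer or Solr. */
final class PollingSketch {
    /** A unit of work to run on every polling tick. */
    interface Job {
        void update() throws Exception;
    }

    // Thread-safe list so jobs can be registered while the poller is running.
    private final List<Job> jobs = new CopyOnWriteArrayList<>();

    // Single daemon thread, so the poller never keeps the JVM alive.
    private final ScheduledExecutorService scheduler =
        Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "dict-poller");
            t.setDaemon(true);
            return t;
        });

    PollingSketch(long intervalMillis) {
        scheduler.scheduleWithFixedDelay(
            this::runAll, intervalMillis, intervalMillis, TimeUnit.MILLISECONDS);
    }

    void register(Job job) {
        jobs.add(job);
    }

    /** Runs every job once; a failing job does not stop the others. */
    void runAll() {
        for (Job job : jobs) {
            try {
                job.update();
            } catch (Exception e) {
                System.err.println("update failed: " + e);
            }
        }
    }

    void shutdown() {
        scheduler.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger calls = new AtomicInteger();
        PollingSketch poller = new PollingSketch(50);
        poller.register(calls::incrementAndGet);
        Thread.sleep(300);
        poller.shutdown();
        System.out.println("update() ran " + calls.get() + " times");
    }
}
```

Catching exceptions inside runAll matters here: if a scheduled task throws, the executor cancels all further runs of that task, which would silently stop the polling.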

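Source code download: https://codeload.github.com/EugenePig/ik-analyzer-solr5/zip/master

Open the project in IntelliJ IDEA:

Three classes are involved: UpdateKeeper, IKTokenizerFactory, and Dictionary. UpdateKeeper is a new class that periodically asks each registered factory to re-read its configuration file.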

package org.wltea.analyzer.lucene;

import java.io.IOException;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// TODO optimize
public class UpdateKeeper implements Runnable {

    public interface UpdateJob {
        void update() throws IOException;
    }

    // Polling interval: one minute
    static final int INTERVAL = 1 * 60 * 1000;

    // volatile is needed for the double-checked locking below to be safe
    private static volatile UpdateKeeper singleton;

    // Thread-safe list: register() may be called while the worker is iterating
    final List<UpdateJob> filterFactorys = new CopyOnWriteArrayList<UpdateJob>();
    Thread worker;

    private UpdateKeeper() {
        worker = new Thread(this);
        worker.setDaemon(true);
        worker.start();
    }

    public static UpdateKeeper getInstance() {
        if (singleton == null) {
            synchronized (UpdateKeeper.class) {
                if (singleton == null) {
                    singleton = new UpdateKeeper();
                }
            }
        }
        return singleton;
    }

    /* Keep a reference to each factory instance so it can be updated later */
    public void register(UpdateKeeper.UpdateJob filterFactory) {
        filterFactorys.add(filterFactory);
    }

    @Override
    public void run() {
        while (true) {
            try {
                Thread.sleep(INTERVAL);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and stop polling
                Thread.currentThread().interrupt();
                return;
            }
            for (UpdateJob factory : filterFactorys) {
                try {
                    factory.update();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }
}
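The getInstance() method above relies on double-checked locking, which in Java is only safe when the singleton field is declared volatile. One alternative that avoids explicit locking altogether is the initialization-on-demand holder idiom, sketched below. KeeperHolderSketch is a hypothetical stand-in and not a class from the IK project; the constructor counter exists only to make the behaviour observable.

```java
/** Hypothetical stand-in for UpdateKeeper, showing the holder idiom only. */
final class KeeperHolderSketch {
    // Counts constructor calls so the single-instance behaviour is visible.
    private static int constructed = 0;

    private KeeperHolderSketch() {
        constructed++;
    }

    // The JVM initializes Holder lazily and thread-safely on first access,
    // so INSTANCE is created exactly once without explicit synchronization.
    private static final class Holder {
        static final KeeperHolderSketch INSTANCE = new KeeperHolderSketch();
    }

    static KeeperHolderSketch getInstance() {
        return Holder.INSTANCE;
    }

    static int constructedCount() {
        return constructed;
    }

    public static void main(String[] args) {
        KeeperHolderSketch a = KeeperHolderSketch.getInstance();
        KeeperHolderSketch b = KeeperHolderSketch.getInstance();
        System.out.println("same instance: " + (a == b));
        System.out.println("constructor calls: " + constructedCount());
    }
}
```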

/*
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package org.wltea.analyzer.lucene;

import java.io.IOException;
import java.io.InputStream;
import java.util.*;

import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.util.ResourceLoader;
import org.apache.lucene.analysis.util.ResourceLoaderAware;
import org.apache.lucene.analysis.util.TokenizerFactory;
import org.apache.lucene.util.AttributeFactory;
import org.wltea.analyzer.dic.Dictionary;

/**
 * @author <a href="mailto:su.eugene@gmail.com">Eugene Su</a>
 */
public class IKTokenizerFactory extends TokenizerFactory
        implements ResourceLoaderAware, UpdateKeeper.UpdateJob {

    private boolean useSmart;
    private ResourceLoader loader;
    private long lastUpdateTime = -1;
    private String conf = null;

    public boolean useSmart() {
        return useSmart;
    }

    public void setUseSmart(boolean useSmart) {
        this.useSmart = useSmart;
    }

    public IKTokenizerFactory(Map<String, String> args) {
        super(args);
        String useSmartArg = args.get("useSmart");
        this.setUseSmart(useSmartArg != null ? Boolean.parseBoolean(useSmartArg) : false);
        // The conf attribute from schema.xml, e.g. conf="ik.conf"
        conf = get(args, "conf");
    }

    @Override
    public Tokenizer create(AttributeFactory factory) {
        return new IKTokenizer(factory, this.useSmart);
    }

    /** Reloads the extension dictionaries if the version in the conf file has increased. */
    @Override
    public void update() throws IOException {
        Properties p = canUpdate();
        if (p != null) {
            List<String> dicPaths = SplitFileNames(p.getProperty("files"));
            List<InputStream> inputStreamList = new ArrayList<InputStream>();
            for (String path : dicPaths) {
                if (path != null && !path.isEmpty()) {
                    InputStream is = loader.openResource(path);
                    if (is != null) {
                        inputStreamList.add(is);
                    }
                }
            }
            if (!inputStreamList.isEmpty()) {
                Dictionary.addDic2MainDic(inputStreamList); // load dic to MainDic
            }
        }
    }

    @Override
    public void inform(ResourceLoader resourceLoader) throws IOException {
        System.out.println(":::ik:::inform::::::::::::::::::::::::" + conf);
        this.loader = resourceLoader;
        this.update();
        if (conf != null && !conf.trim().isEmpty()) {
            UpdateKeeper.getInstance().register(this);
        }
    }

    /** Returns the parsed conf if lastupdate has increased and files is set; otherwise null. */
    private Properties canUpdate() {
        try {
            if (conf == null) {
                return null;
            }
            Properties p = new Properties();
            try (InputStream confStream = loader.openResource(conf)) {
                p.load(confStream);
            }
            long t = Long.parseLong(p.getProperty("lastupdate", "0").trim());
            boolean newer = t > this.lastUpdateTime;
            this.lastUpdateTime = t;
            if (!newer) {
                return null;
            }
            String paths = p.getProperty("files");
            if (paths == null || paths.trim().isEmpty()) { // a file list is required
                return null;
            }
            System.out.println("loading conf");
            return p;
        } catch (Exception e) {
            System.err.println("IK failed to parse conf: " + e.getMessage());
            return null;
        }
    }

    public static List<String> SplitFileNames(String fileNames) {
        if (fileNames == null) {
            return Collections.<String>emptyList();
        }
        List<String> result = new ArrayList<String>();
        // Split on commas and/or whitespace
        for (String file : fileNames.split("[,\\s]+")) {
            result.add(file);
        }
        return result;
    }
}

Add a new method to the Dictionary class (Dictionary is a singleton):

public static void addDic2MainDic(List<InputStream> inputStreams) {
    if (singleton == null) {
        Configuration cfg = DefaultConfig.getInstance();
        Dictionary.initial(cfg);
    }
    for (InputStream is : inputStreams) {
        // Skip extension dictionaries that could not be found
        if (is == null) {
            continue;
        }
        try {
            BufferedReader br = new BufferedReader(new InputStreamReader(is, "UTF-8"), 512);
            String theWord;
            while ((theWord = br.readLine()) != null) {
                if (!"".equals(theWord.trim())) {
                    // Load the extension dictionary entry into the in-memory main dictionary
                    singleton._MainDict.fillSegment(theWord.trim().toLowerCase().toCharArray());
                }
            }
        } catch (IOException ioe) {
            System.err.println("Extension Dictionary loading exception.");
            ioe.printStackTrace();
        } finally {
            try {
                is.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
}
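The reading loop in addDic2MainDic can be tried out without the IK classes. The sketch below applies the same steps (decode as UTF-8, trim, lowercase, skip blank lines) but collects the entries into a Set instead of calling fillSegment. WordListSketch and readWords are hypothetical names, and the sample input is written with Unicode escapes so the source stays ASCII-only.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashSet;
import java.util.Locale;
import java.util.Set;

/** Hypothetical helper for illustration; not part of IK Analyzer. */
final class WordListSketch {

    /** Reads one dictionary entry per line, trimming, lowercasing, and skipping blanks. */
    static Set<String> readWords(InputStream in) {
        Set<String> words = new LinkedHashSet<>();
        try (BufferedReader br =
                 new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = br.readLine()) != null) {
                String word = line.trim().toLowerCase(Locale.ROOT);
                if (!word.isEmpty()) {
                    words.add(word);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return words;
    }

    public static void main(String[] args) {
        // "\u5c0f\u7ea2\u5e3d" is the first sample entry of extDic.txt;
        // the blank line and the padded mixed-case entry exercise the filtering.
        String sample = "\u5c0f\u7ea2\u5e3d\n\n  iPhone \n";
        InputStream in = new ByteArrayInputStream(sample.getBytes(StandardCharsets.UTF_8));
        for (String word : readWords(in)) {
            System.out.println(word + " (" + word.length() + " chars)");
        }
    }
}
```

Decoding explicitly as UTF-8 is why the dictionary file has to be saved in that encoding: bytes written in another encoding such as GBK would be decoded into different characters and the entries would never match the indexed text.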

Finally, package the project as a jar and put it in the WEB-INF/lib directory of the Solr webapp. That's it!
