基于flask+requests 小说爬取

Posted 孤寂的狼

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了基于flask+requests 小说爬取相关的知识,希望对你有一定的参考价值。

最终实现效果如下图:

首页部分

技术文章界面

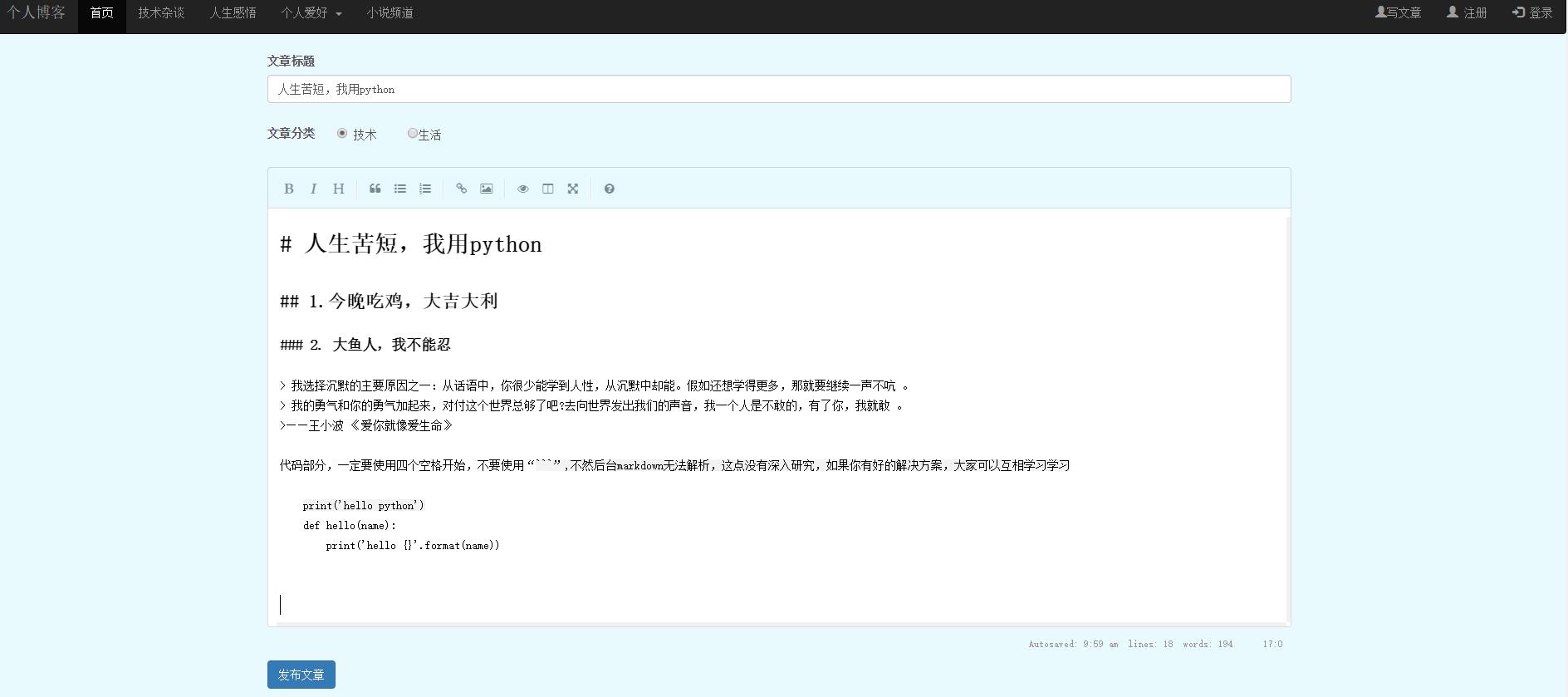

写文章发布文章

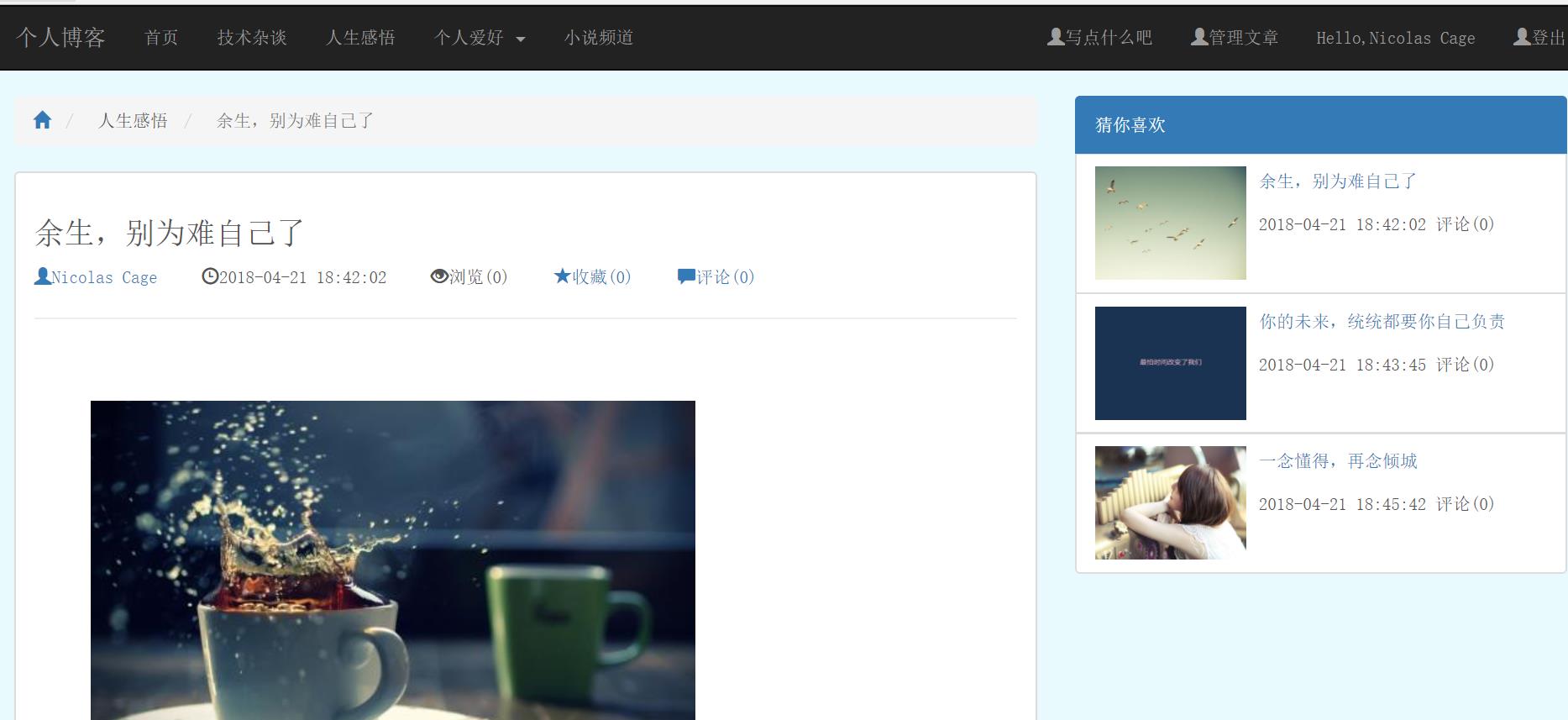

文章显示

发表评论

管理文章界面

小说首页显示

可以输入查询小说,如果小说不存在,就调用后台爬虫程序下载

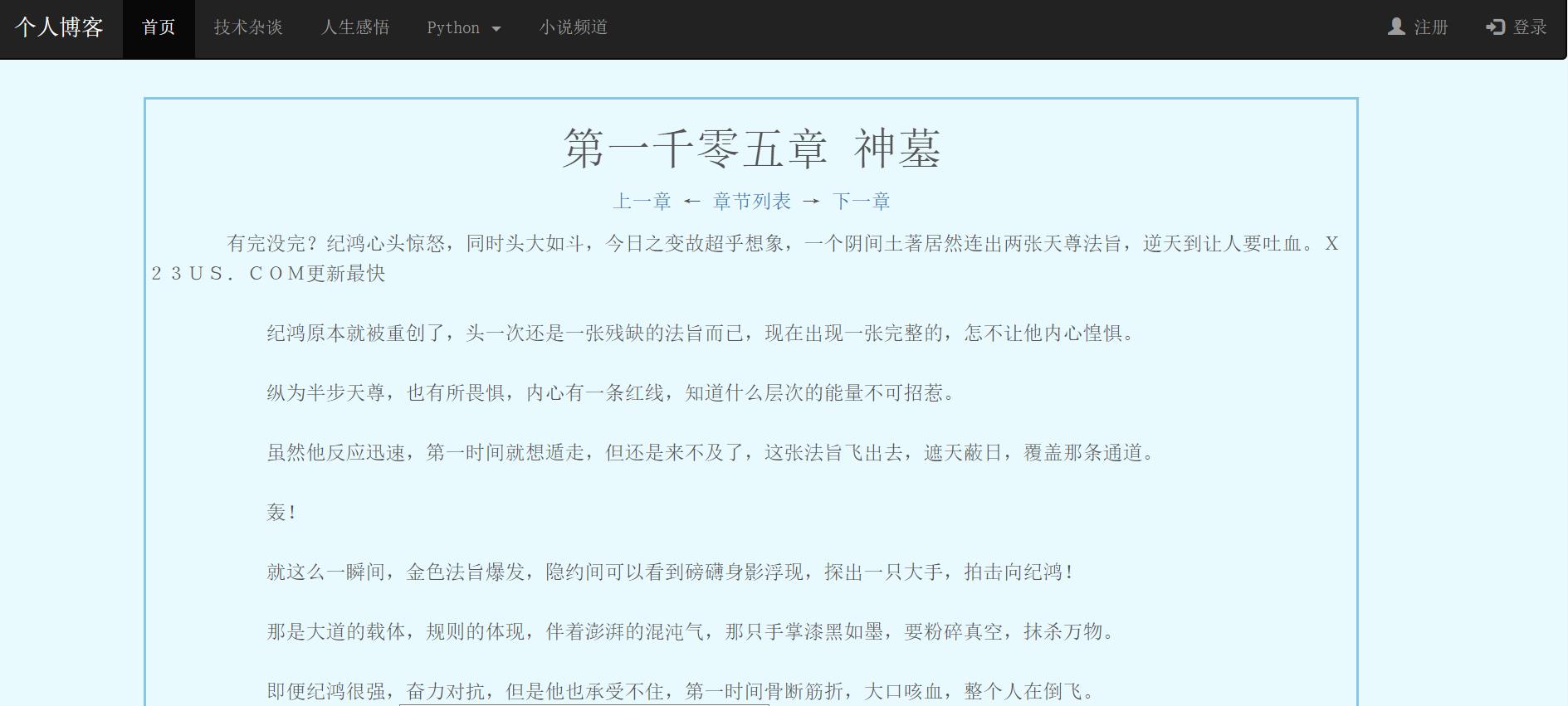

点开具体页面显示,小说章节列表,对于每个章节,如果本地没有就直接下载,可以点开具体章节开心的阅读,而没有广告,是的没有广告,纯净的

2.代码部分

2.1 开发环境

1 Centos7 + mysql 2 Flask==0.12.2 3 Flask-Bootstrap==3.3.7.1 4 Flask-Failsafe==0.2 5 Flask-Login==0.4.1 6 Flask-Mail==0.9.1 7 Flask-Migrate==2.1.1 8 Flask-Script==2.0.6 9 Flask-SQLAlchemy==2.3.2 10 Flask-WTF==0.14.2

2.2 主要代码部分

爬虫部分下载小说资源

xiaoshuoSpider.py

# -*- coding: utf-8 -*- # @Author: longzx # @Date: 2018-03-10 21:41:55 # @cnblog:http://www.cnblogs.com/lonelyhiker/ import requests import sys from bs4 import BeautifulSoup from pymysql.err import ProgrammingError from app.xiaoshuo.spider_tools import get_one_page, insert_fiction, insert_fiction_content, insert_fiction_lst from app.models import Fiction_Lst, Fiction_Content, Fiction def search_fiction(name, flag=1): """输入小说名字 返回小说在网站的具体网址 """ if name is None: raise Exception(\'小说名字必须输入!!!\') url = \'http://zhannei.baidu.com/cse/search?s=920895234054625192&q={}\'.format( name) html = get_one_page(url, sflag=flag) soup = BeautifulSoup(html, \'html5lib\') result_list = soup.find(\'div\', \'result-list\') fiction_lst = result_list.find_all(\'a\', \'result-game-item-title-link\') fiction_url = fiction_lst[0].get(\'href\') fiction_name = fiction_lst[0].text.strip() fiction_img = soup.find(\'img\')[\'src\'] fiction_comment = soup.find_all(\'p\', \'result-game-item-desc\')[0].text fiction_author = soup.find_all( \'div\', \'result-game-item-info\')[0].find_all(\'span\')[1].text.strip() if fiction_name is None: print(\'{} 小说不存在!!!\'.format(name)) raise Exception(\'{} 小说不存在!!!\'.format(name)) fictions = (fiction_name, fiction_url, fiction_img, fiction_author, fiction_comment) save_fiction_url(fictions) return fiction_name, fiction_url def get_fiction_list(fiction_name, fiction_url, flag=1): # 获取小说列表 fiction_html = get_one_page(fiction_url, sflag=flag) soup = BeautifulSoup(fiction_html, \'html5lib\') dd_lst = soup.find_all(\'dd\') fiction_lst = [] fiction_url_tmp = fiction_url.split(\'/\')[-2] for item in dd_lst[12:]: fiction_lst_name = item.a.text.strip() fiction_lst_url = item.a[\'href\'].split(\'/\')[-1].strip(\'.html\') fiction_real_url = fiction_url + fiction_lst_url + \'.html\' lst = (fiction_name, fiction_url_tmp, fiction_lst_url, fiction_lst_name, fiction_real_url) fiction_lst.append(lst) return fiction_lst def get_fiction_content(fiction_url, flag=1): fiction_id = fiction_url.split(\'/\')[-2] fiction_conntenturl = fiction_url.split(\'/\')[-1].strip(\'.html\') fc = Fiction_Content().query.filter_by( fiction_id=fiction_id, fiction_url=fiction_url).first() if fc is None: print(\'此章节不存在,需下载\') html = get_one_page(fiction_url, sflag=flag) soup = BeautifulSoup(html, \'html5lib\') content = soup.find(id=\'content\') f_content = str(content) save_fiction_content(fiction_url, f_content) else: print(\'此章节已存在,无需下载!!!\') def save_fiction_url(fictions): args = (fictions[0], fictions[1].split(\'/\')[-2], fictions[1], fictions[2], fictions[3], fictions[4]) insert_fiction(*args) def save_fiction_lst(fiction_lst): total = len(fiction_lst) if Fiction().query.filter_by(fiction_id=fiction_lst[0][1]) == total: print(\'此小说已存在!!,无需下载\') return 1 for item in fiction_lst: print(\'此章节列表不存在,需下载\') insert_fiction_lst(*item) def save_fiction_content(fiction_url, fiction_content): fiction_id = fiction_url.split(\'/\')[-2] fiction_conntenturl = fiction_url.split(\'/\')[-1].strip(\'.html\') insert_fiction_content(fiction_conntenturl, fiction_content, fiction_id) def down_fiction_lst(f_name): # 1.搜索小说 args = search_fiction(f_name, flag=0) # 2.获取小说目录列表 fiction_lst = get_fiction_list(*args, flag=0) # 3.保存小说目录列表 flag = save_fiction_lst(fiction_lst) print(\'下载小说列表完成!!\') def down_fiction_content(f_url): get_fiction_content(f_url, flag=0) print(\'下载章节完成!!\')

存储小说信息及相关爬虫公共工具

spider_tools.py

# -*- coding: utf-8 -*- # @Author: longzx # @Date: 2018-03-08 20:56:26 """ 爬虫常用工具包 将一些通用的功能进行封装 """ from functools import wraps from random import choice, randint from time import ctime, sleep, time import pymysql import requests from requests.exceptions import RequestException from app.models import Fiction, Fiction_Content, Fiction_Lst from app import db #请求头 headers = {} headers[ \'Accept\'] = \'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8\' headers[\'Accept-Encoding\'] = \'gzip, deflate, br\' headers[\'Accept-Language\'] = \'zh-CN,zh;q=0.9\' headers[\'Connection\'] = \'keep-alive\' headers[\'Upgrade-Insecure-Requests\'] = \'1\' agents = [ "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1", "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5", "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24", \'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36\' ] def get_one_page(url, proxies=None, sflag=1): #获取给定的url页面 while True: try: headers[\'User-Agent\'] = choice(agents) # 控制爬取速度 if sflag: print(\'放慢下载速度。。。。。。\') sleep(randint(1, 3)) print(\'正在下载:\', url) if proxies: r = requests.get( url, headers=headers, timeout=5, proxies=proxies) else: r = requests.get(url, headers=headers, timeout=5) except Exception as r: print(\'errorinfo:\', r) continue else: if r.status_code == 200: r.encoding = r.apparent_encoding print(\'爬取成功!!!\') return r.text else: continue def insert_fiction(fiction_name, fiction_id, fiction_real_url, fiction_img, fiction_author, fiction_comment): fiction = Fiction().query.filter_by(fiction_id=fiction_id).first() if fiction is None: fiction = Fiction( fiction_name=fiction_name, fiction_id=fiction_id, fiction_real_url=fiction_real_url, fiction_img=fiction_img, fiction_author=fiction_author, fiction_comment=fiction_comment) db.session.add(fiction) db.session.commit() else: print(\'记录已存在,无需下载\') def insert_fiction_lst(fiction_name, fiction_id, fiction_lst_url, fiction_lst_name, fiction_real_url): fl = Fiction_Lst().query.filter_by( fiction_id=fiction_id, fiction_lst_url=fiction_lst_url).first() if fl is None: fl = Fiction_Lst( fiction_name=fiction_name, fiction_id=fiction_id, fiction_lst_url=fiction_lst_url, fiction_lst_name=fiction_lst_name, fiction_real_url=fiction_real_url) db.session.add(fl) db.session.commit() else: print(\'此章节已存在!!!\') def insert_fiction_content(fiction_url, fiction_content, fiction_id): fc = Fiction_Content( fiction_id=fiction_id, fiction_content=fiction_content, fiction_url=fiction_url) db.session.add(fc) db.session.commit()

Flask 部分

models.py

# -*- coding: utf-8 -*- # @Author: longzx # @Date: 2018-03-19 23:44:05 # @cnblog:http://www.cnblogs.com/lonelyhiker/ from . import db class Fiction(db.Model): __tablename__ = \'fiction\' __table_args__ = {"useexisting": True} id = db.Column(db.Integer, primary_key=True) fiction_name = db.Column(db.String) fiction_id = db.Column(db.String) fiction_real_url = db.Column(db.String) fiction_img = db.Column(db.String) fiction_author = db.Column(db.String) fiction_comment = db.Column(db.String) def __repr__(self): return \'<fiction %r> \' % self.fiction_name class Fiction_Lst(db.Model): __tablename__ = \'fiction_lst\' __table_args__ = {"useexisting": True} id = db.Column(db.Integer, primary_key=True) fiction_name = db.Column(db.String) fiction_id = db.Column(db.String) fiction_lst_url = db.Column(db.String) fiction_lst_name = db.Column(db.String) fiction_real_url = db.Column(db.String) def __repr__(self): return \'<fiction_lst %r> \' % self.fiction_name class Fiction_Content(db.Model): __tablename__ = \'fiction_content\' __table_args__ = {"useexisting": True} id = db.Column(db.Integer, primary_key=True) fiction_url = db.Column(db.String) fiction_content = db.Column(db.String) fiction_id = db.Column(db.String)

views.py

# -*- coding: utf-8 -*- # @Author: longzx # @Date: 2018-03-20 20:45:37 # @cnblog:http://www.cnblogs.com/lonelyhiker/ from flask import render_template, request, redirect, url_for from app.xiaoshuo.xiaoshuoSpider import down_fiction_lst, down_fiction_content from app.xiaoshuo.spider_tools import get_one_page from . import fiction import requests from bs4 import BeautifulSoup from app.models import Fiction, Fiction_Content, Fiction_Lst from app import db @fiction.route(\'/book/\') def book_index(): fictions = Fiction().query.all() print(fictions) return render_template(\'fiction_index.html\', fictions=fictions) @fiction.route(\'/book/list/<f_id>\') def book_lst(f_id): # 1.获取全部小说 fictions = Fiction().query.all() for fiction in fictions: if fiction.fiction_id == f_id: break print(fiction) # 2.获取小说章节列表 fiction_lst = Fiction_Lst().query.filter_by(fiction_id=f_id).all() if len(fiction_lst) == 0: print(fiction.fiction_name) down_fiction_lst(fiction.fiction_name) fiction_lst = Fiction_Lst().query.filter_by(fiction_id=f_id).all() if len(fiction_lst) == 0: return render_template( \'fiction_error.html\', message=\'暂无此章节信息,请重新刷新下\') fiction_name = fiction_lst[0].fiction_name return render_template( \'fiction_lst.html\', fictions=fictions, fiction=fiction, fiction_lst=fiction_lst, fiction_name=fiction_name) @fiction.route(\'/book/fiction/\') def fiction_content(): fic_id = request.args.get(\'id\') f_url = request.args.get(\'f_url\') print(\'获取书本 id={} url={}\'.format(fic_id, f_url)) # 获取上一章和下一章信息 fiction_lst = Fiction_Lst().query.filter_by( fiction_id=fic_id, fiction_lst_url=f_url).first() id = fiction_lst.id fiction_name = fiction_lst.fiction_lst_name pre_id = id - 1 next_id = id + 1 fiction_pre = Fiction_Lst().query.filter_by( id=pre_id).first().fiction_lst_url fiction_next = Fiction_Lst().query.filter_by( id=next_id).first().fiction_lst_url f_id = fic_id # 获取具体章节内容 fiction_contents = Fiction_Content().query.filter_by( fiction_id=fic_id, fiction_url=f_url).first() if fiction_contents is None: print(\'fiction_real_url={}\'.format(fiction_lst.fiction_real_url)) down_fiction_content(fiction_lst.fiction_real_url) print(\'fiction_id={} fiction_url={}\'.format(fic_id, f_url)) fiction_contents = Fiction_Content().query.filter_by( fiction_id=fic_id, fiction_url=f_url).first() if fiction_contents is None: return render_template(\'fiction_error.html\', message=\'暂无此章节信息,请重新刷新下\') print(\'fiction_contents=\', fiction_contents) fiction_content = fiction_contents.fiction_content print(\'sdfewf\') return render_template( \'fiction.html\', f_id=f_id, fiction_name=fiction_name, fiction_pre=fiction_pre, fiction_next=fiction_next, fiction_content=fiction_content) @fiction.route(\'/book/search/\') def f_search(): f_name = request.args.get(\'f_name\') print(\'收到输入:\', f_name) # 1.查询数据库存在记录 fictions = Fiction().query.all() fiction = None for x in fictions: if f_name in x.fiction_name: fiction = x break if fiction: fiction_lst = Fiction_Lst().query.filter_by( fiction_id=fiction.fiction_id).all() if len(fiction_lst) == 0: down_fiction_lst(f_name) fictions = Fiction().query.all() print(\'fictions=\', fictions) for fiction in fictions: if f_name in fiction.fiction_name: break if f_name not in fiction.fiction_name: return render_template(\'fiction_error.html\', message=\'暂无此小说信息\') fiction_lst = Fiction_Lst().query.filter_by( fiction_id=fiction.fiction_id).all() return render_template( \'fiction_lst.html\', fictions=fictions, fiction=fiction, fiction_lst=fiction_lst, fiction_name=fiction.fiction_name) else: fiction_name = fiction_lst[0].fiction_name return render_template( \'fiction_lst.html\', fictions=fictions, fiction=fiction, fiction_lst=fiction_lst, fiction_name=fiction_name) else: down_fiction_lst(f_name) fictions = Fiction().query.all() print(\'fictions=\', fictions) for fiction in fictions: if f_name in fiction.fiction_name: break if f_name not in fiction.fiction_name: return render_template(\'fiction_error.html\', message=\'暂无此小说信息\') fiction_lst = Fiction_Lst().query.filter_by( fiction_id=fiction.fiction_id).all() return render_template( \'fiction_lst.html\', fictions=fictions, fiction=fiction, fiction_lst=fiction_lst, fiction_name=fiction.fiction_name)

templates

fiction_index.html 小说首页

{% extends "base.html" %} {% block styles %} {{super()}} <link href="{{url_for(\'static\',filename=\'css/xscss.css\')}}" rel="stylesheet"> {% endblock %} {% block content %} <!-- 搜索栏 --> <div class="container-fluid"> <div class="row"> <div class=" col-md-offset-7 col-md-4"> <form class="navbar-form navbar-right" role="search" action="/book/search/"> <div class="form-group"> <input name=\'f_name\' type="text" class="form-control" placeholder="输入你喜欢的小说名字"> </div> <button type="submit" class以上是关于基于flask+requests 小说爬取的主要内容,如果未能解决你的问题,请参考以下文章