Structuring and Saving Data

Posted by 有志气的骚年


1. Save the body text of each news article to a text file.

def writeDetailNews(content):
    # Append the article body to gzccnews.txt (UTF-8)
    with open('gzccnews.txt', 'a', encoding='utf-8') as f:
        f.write(content)

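2. Structure the news data as a list of dictionaries:

- Details of a single news item --> dictionary news

- All news items on one list page --> list, newsls.append(news)

- All news items from all list pages --> combined list, newstotal.extend(newsls)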
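
The three levels above can be sketched with plain Python containers. This is a minimal illustration, not the crawler itself: the helper make_news and the sample records are hypothetical, and only the names news, newsls and newstotal come from the steps above.

```python
def make_news(title, source, click):
    """Build one news record as a dictionary (hypothetical helper)."""
    return {"title": title, "source": source, "click": click}


# Two made-up list pages, each holding (title, source, click) tuples.
pages = [
    [("Title A", "Office X", 120), ("Title B", "Office Y", 45)],
    [("Title C", "Office X", 300)],
]

newstotal = []                      # all news from all list pages
for page in pages:
    newsls = []                     # all news from one list page
    for title, source, click in page:
        news = make_news(title, source, click)   # one news item
        newsls.append(news)
    newstotal.extend(newsls)        # extend keeps the combined list flat

print(len(newstotal))
print(newstotal[0]["title"])
```

Using extend rather than append at the outer level keeps newstotal a flat list of dictionaries, which is a shape pandas.DataFrame accepts directly.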

import re
import sqlite3
from datetime import datetime

import openpyxl
import pandas
import requests
from bs4 import BeautifulSoup

url = "http://news.gzcc.cn/html/xiaoyuanxinwen/"


def writeDetailNews(content):
    # Append the article body to gzccnews.txt (UTF-8)
    with open('gzccnews.txt', 'a', encoding='utf-8') as f:
        f.write(content)


def getClickCount(newUrl):
    # The news id is the last path segment between "_" and ".html"
    newsId = re.findall(r"_(.*)\.html", newUrl)[0].split("/")[-1]
    res = requests.get("http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80".format(newsId))
    # The response is JavaScript; the count is the argument of the last .html('...') call
    return int(res.text.split(".html")[-1].lstrip("('").rsplit("');")[0])


# Fetch the details of one news article
def getNewDetail(newsDetailUrl):
    detail_res = requests.get(newsDetailUrl)
    detail_res.encoding = "utf-8"
    detail_soup = BeautifulSoup(detail_res.text, "html.parser")
    news = {}
    news['title'] = detail_soup.select(".show-title")[0].text
    info = detail_soup.select(".show-info")[0].text
    news['date_time'] = datetime.strptime(info.lstrip('发布时间:')[:19], "%Y-%m-%d %H:%M:%S")
    if info.find('来源:') > 0:
        news['source'] = info[info.find("来源:"):].split()[0].lstrip('来源:')
    else:
        news['source'] = 'none'
    news['content'] = detail_soup.select("#content")[0].text
    writeDetailNews(news['content'])
    news['click'] = getClickCount(newsDetailUrl)
    return news


# Get the total number of list pages (10 news items per page)
def getPageN(url):
    res = requests.get(url)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    return int(soup.select(".a1")[0].text.rstrip("条")) // 10 + 1


# Get the details of every news item on one list page
def getListPage(url):
    # Fetch the page passed in, rather than reusing a soup parsed once at module level
    res = requests.get(url)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    newsList = []
    for news in soup.select("li"):
        if len(news.select(".news-list-title")) > 0:  # skip li elements that are not news items
            # time = news.select(".news-list-info")[0].contents[0].text
            # title = news.select(".news-list-title")[0].text
            # description = news.select(".news-list-description")[0].text
            detail_url = news.select('a')[0].attrs['href']
            newsList.append(getNewDetail(detail_url))
    return newsList


newsTotal = []
totalPageNum = getPageN(url)
firstPageUrl = "http://news.gzcc.cn/html/xiaoyuanxinwen/"
newsTotal.extend(getListPage(firstPageUrl))
# Only the last list page is fetched here to keep the run short;
# widen the range to crawl more pages
for num in range(totalPageNum, totalPageNum + 1):
    listpageurl = "http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html".format(num)
    newsTotal.extend(getListPage(listpageurl))  # keep the results instead of discarding them
print(newsTotal)

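The string handling in getNewDetail and getPageN can be tried offline. The sketch below is an alternative, not the crawler's own code: it assumes the info line looks like the made-up sample string, uses regular expressions instead of lstrip (which removes a set of characters rather than a prefix), and rounds the page count up with math.ceil. The function names parse_info and page_count are hypothetical.

```python
import math
import re
from datetime import datetime


def parse_info(info):
    """Extract the publication time and source from an info line.

    Assumes a timestamp in the form YYYY-MM-DD HH:MM:SS and an optional
    '来源' label followed by a colon and the source name (assumed layout).
    """
    time_match = re.search(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}", info)
    date_time = (
        datetime.strptime(time_match.group(), "%Y-%m-%d %H:%M:%S")
        if time_match else None
    )
    # Accept both the ASCII and the full-width colon after the label.
    source_match = re.search(r"来源[::]\s*(\S+)", info)
    source = source_match.group(1) if source_match else "none"
    return date_time, source


def page_count(total_items, per_page=10):
    """Number of list pages needed for total_items, rounded up."""
    return math.ceil(total_items / per_page)


# Made-up sample line; the real page text may differ.
sample = "发布时间:2018-04-01 11:57:00  作者:someone  来源:源头工作室  点击:10次"
when, source = parse_info(sample)
print(when, source)
print(page_count(95), page_count(100))
```

Two details motivate the changes. With lstrip('来源:'), a source name that itself begins with one of those characters loses its first character, which the regular expression avoids. And total // 10 + 1 yields one page too many when the total is an exact multiple of 10, whereas rounding up does not.
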
3. Install pandas and create a DataFrame object df from the combined list with pandas.DataFrame(newsTotal).

import pandas

df = pandas.DataFrame(newsTotal)

4. Use df to save the extracted data to a CSV or Excel file.

df.to_excel('cwh.xlsx')

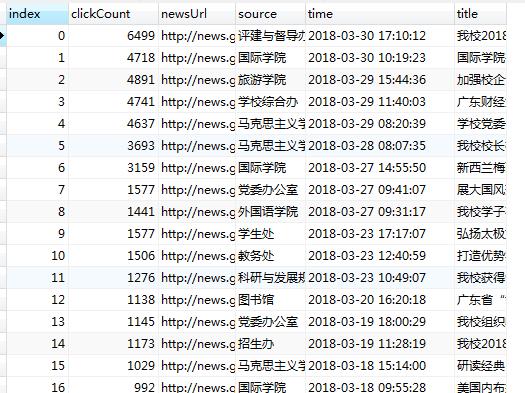
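
Step 4 mentions CSV as well as Excel. A minimal sketch of the CSV route follows, using a small made-up DataFrame in place of the crawled data. The file name news_sample.csv and the utf_8_sig encoding (chosen so that spreadsheet programs can detect UTF-8 when opening the file) are assumptions, not part of the original.

```python
import os
import tempfile

import pandas

# Made-up rows standing in for the crawled news list.
sample = [
    {"title": "Title A", "source": "Office X", "click": 120},
    {"title": "Title B", "source": "Office Y", "click": 45},
]
df_sample = pandas.DataFrame(sample)

# Write to a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "news_sample.csv")
    # index=False omits the row index column from the file
    df_sample.to_csv(csv_path, index=False, encoding="utf_8_sig")
    df_back = pandas.read_csv(csv_path, encoding="utf_8_sig")

print(df_back)
```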

5. Analyze the data with the functions and methods pandas provides:

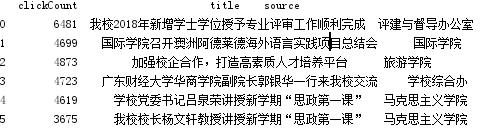

- Extract the first 6 rows with the click count, title, and source columns

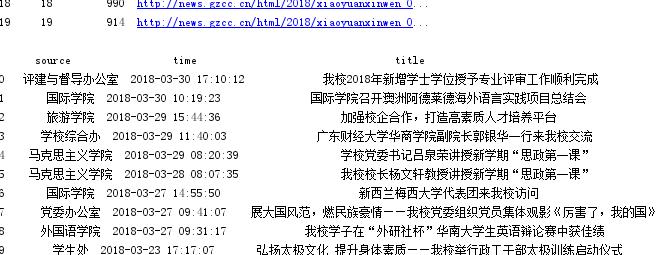

- Extract the news published by '学校综合办' with a click count above 3000.

- Extract the news published by '国际学院' and '学生工作处'.

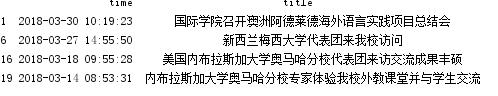

- Extract the news from March 2018

print(df[['click', 'title', 'source']].head(6))

print(df[(df['click'] > 3000) & (df['source'] == '学校综合办')])

print(df[(df['source'] == '国际学院') | (df['source'] == '学生工作处')])

# Index by publication time, then select the month with .loc
df1 = df.set_index('date_time')
print(df1.loc['2018-03'])
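
The four queries can be tried on a small made-up DataFrame without running the crawler. This sketch assumes the column names click, title, source and date_time used by the news dictionary. It also uses isin as a shorter alternative to chaining == with |, and .loc for the month lookup on a datetime index.

```python
from datetime import datetime

import pandas

# Made-up records; the source names are the ones used in the queries above.
rows = [
    {"title": "A", "source": "学校综合办", "click": 3500, "date_time": datetime(2018, 3, 5, 9, 0)},
    {"title": "B", "source": "国际学院", "click": 800, "date_time": datetime(2018, 3, 20, 14, 30)},
    {"title": "C", "source": "学生工作处", "click": 1200, "date_time": datetime(2018, 4, 2, 8, 15)},
    {"title": "D", "source": "学校综合办", "click": 900, "date_time": datetime(2018, 2, 11, 16, 45)},
]
demo = pandas.DataFrame(rows)

first_rows = demo[["click", "title", "source"]].head(6)
popular = demo[(demo["click"] > 3000) & (demo["source"] == "学校综合办")]
two_sources = demo[demo["source"].isin(["国际学院", "学生工作处"])]

# Sorting the datetime index first keeps partial-string lookups well defined.
by_time = demo.set_index("date_time").sort_index()
march = by_time.loc["2018-03"]

print(popular["title"].tolist())
print(two_sources["title"].tolist())
print(march["title"].tolist())
```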

6. Save to a sqlite3 database

import sqlite3

with sqlite3.connect('gzccnewsdb.sqlite') as db:
    df.to_sql('gzccnews05', con=db, if_exists='replace')

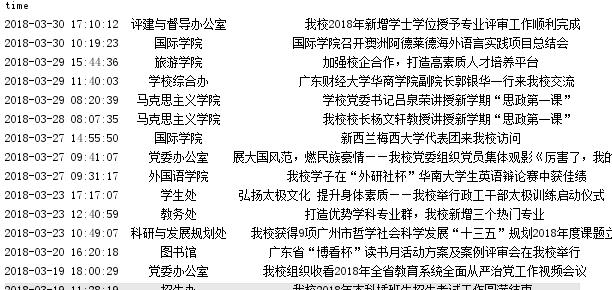

7. Read the data back from sqlite3

with sqlite3.connect('gzccnewsdb.sqlite') as db:
    df2 = pandas.read_sql_query('SELECT * FROM gzccnews05', con=db)
print(df2)

The same steps with a different database file and table name:

with sqlite3.connect('cwhdb.sqlite') as db:
    df.to_sql('cwh', con=db, if_exists='replace')

with sqlite3.connect('cwhdb.sqlite') as db:
    df10 = pandas.read_sql_query('select * from cwh', con=db)
print(df10)
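
A self-contained version of the sqlite3 round trip follows, using an in-memory database and made-up rows so that it runs without the crawler. One detail worth knowing: using a sqlite3 connection as a context manager commits or rolls back the transaction but does not close the connection, so this sketch wraps it in contextlib.closing. The table name news_demo is hypothetical.

```python
import sqlite3
from contextlib import closing

import pandas

demo = pandas.DataFrame(
    [
        {"title": "A", "source": "Office X", "click": 120},
        {"title": "B", "source": "Office Y", "click": 45},
    ]
)

# ":memory:" creates a throwaway database that disappears on close.
with closing(sqlite3.connect(":memory:")) as db:
    # index=False keeps the DataFrame index out of the table
    demo.to_sql("news_demo", con=db, if_exists="replace", index=False)
    # A parameterized query avoids building SQL by string concatenation
    restored = pandas.read_sql_query(
        "SELECT * FROM news_demo WHERE click > ?", con=db, params=(100,)
    )

print(restored)
```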

8. Save df to a MySQL database

Install SQLAlchemy

Install PyMySQL

Create the database in MySQL: create database gzccnews charset utf8;

import pymysql
from sqlalchemy import create_engine

conn = create_engine('mysql+pymysql://root:root@localhost:3306/gzccnews?charset=utf8')
df.to_sql('gzccnews', con=conn, if_exists='replace')

Check in MySQL that the data has been saved (using the MySQL client or Navicat).

The same steps against a database named cwh:

import pymysql
from sqlalchemy import create_engine

conn = create_engine('mysql+pymysql://root:mysql@localhost:3306/cwh?charset=utf8')
df.to_sql('gzccnews', con=conn, if_exists='replace')
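
The connection strings above embed the user name and password directly. A hedged alternative is to assemble the URL from environment variables and percent-encode the credentials, since characters such as @ or / in a password would otherwise break the URL. The function name build_mysql_url and the variable names MYSQL_USER and MYSQL_PASSWORD are hypothetical; the resulting string is meant to be passed to create_engine in place of the literal.

```python
import os
from urllib.parse import quote


def build_mysql_url(user, password, host="localhost", port=3306,
                    database="gzccnews", charset="utf8"):
    """Return a SQLAlchemy-style URL for the mysql+pymysql driver."""
    return "mysql+pymysql://{}:{}@{}:{}/{}?charset={}".format(
        quote(user, safe=""),       # percent-encode reserved characters
        quote(password, safe=""),
        host, port, database, charset,
    )


# Hypothetical environment variable names; the fallbacks mirror the example above.
db_user = os.environ.get("MYSQL_USER", "root")
db_password = os.environ.get("MYSQL_PASSWORD", "root")
mysql_url = build_mysql_url(db_user, db_password)
```

The string in mysql_url can then be used as create_engine(mysql_url), which keeps the password out of the source file.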
