04 Monte Carlo Tree Search: Introductory Study Notes

Posted by stevehawk


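Monte Carlo Tree Search Study Notes

1. Reinforcement learning (RL)

Concepts

Reinforcement learning is an area of machine learning concerned with how an agent should act in an environment so as to maximize its expected cumulative reward. It is inspired by behaviorist psychology: an organism exposed to rewards and punishments from its environment gradually forms expectations about those stimuli and develops the habitual behavior that yields the greatest benefit. Because the approach is so general, it is studied in many other fields, including game theory, control theory, operations research, information theory, simulation-based optimization, multi-agent systems, swarm intelligence, statistics, and genetic algorithms. In operations research and control theory, reinforcement learning is called approximate dynamic programming (ADP). Optimal control theory studies the same problem, although most of that work concerns the existence and properties of optimal solutions rather than learning or approximation. In economics and game theory, reinforcement learning is used to explain how equilibria can arise under bounded rationality.

In machine learning, the environment is usually formulated as a Markov decision process (MDP), so many reinforcement learning algorithms use dynamic programming techniques. The main difference between the classical techniques and reinforcement learning algorithms is that the latter do not require knowledge of the MDP and target large MDPs for which exact methods are infeasible.

Reinforcement learning differs from standard supervised learning in that it needs neither correct input/output pairs nor explicit correction of suboptimal actions. It focuses on online planning and must balance exploration (of uncharted territory) against exploitation (of current knowledge). This exploration-exploitation trade-off has been studied most thoroughly in the multi-armed bandit problem and in finite MDPs.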

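Basic components of reinforcement learning

- Environment/state (stationary in the standard setting, as opposed to non-stationary)

- Agent (the entity that interacts with the environment)

- Action (the action space: the set of actions available in the environment, discrete or continuous)

- Feedback (the reward; feedback is what lets RL iterate and learn a policy)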
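The four components above interact in a loop: the agent picks an action, the environment returns a reward, and the agent updates what it knows. The following is only a minimal sketch of that loop. The two-armed bandit environment (`TwoArmedBandit`), the epsilon-greedy agent (`EpsilonGreedyAgent`), and all parameter values are assumptions made for illustration and do not come from the original notes.

```python
import random


class TwoArmedBandit:
    """Hypothetical stationary environment: each arm pays 1 with a fixed probability."""

    def __init__(self, win_probs=(0.2, 0.8), seed=0):
        self.win_probs = win_probs
        self.rng = random.Random(seed)

    def step(self, action):
        """Apply an action (arm index) and return the reward, 0 or 1."""
        return 1 if self.rng.random() < self.win_probs[action] else 0


class EpsilonGreedyAgent:
    """Hypothetical agent: explores with probability epsilon, otherwise exploits."""

    def __init__(self, n_actions, epsilon=0.1, seed=1):
        self.counts = [0] * n_actions      # how often each action was tried
        self.values = [0.0] * n_actions    # running mean reward per action
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.counts))                    # explore
        return max(range(len(self.values)), key=self.values.__getitem__)  # exploit

    def learn(self, action, reward):
        self.counts[action] += 1
        # Incremental mean update driven by the reward signal.
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(steps=2000):
    """Run the interaction loop and return the trained agent."""
    env = TwoArmedBandit()
    agent = EpsilonGreedyAgent(n_actions=2)
    for _ in range(steps):
        action = agent.act()
        reward = env.step(action)
        agent.learn(action, reward)
    return agent


agent = run()
print(agent.values, agent.counts)
```

With these settings the estimated value of the second arm should end up well above that of the first, which shows how reward feedback alone steers the agent.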

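2. Markov decision process (MDP)

Markov process

In probability theory and statistics, a Markov process, also called a Markov chain, is a stochastic process that has the Markov property; it is named after the Russian mathematician Andrey Markov. A Markov process is memoryless: the conditional probability of the next state depends only on the current state of the system and is independent of its earlier history. A Markov process can be described by a tuple $\langle S, P \rangle$, where $S$ is the set of states and $P$ is the state transition probability matrix.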

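Markov reward process

A Markov reward process (MRP) adds a reward $R$ and a discount factor $\gamma$ to a Markov process, giving the tuple $\langle S, P, R, \gamma \rangle$.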
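The role of the discount factor γ is easiest to see in the discounted return $G = r_0 + \gamma r_1 + \gamma^2 r_2 + \dots$, the standard quantity that a reward process accumulates. The helper below is a small sketch; the function name `discounted_return` is chosen here for illustration.

```python
def discounted_return(rewards, gamma):
    """Return sum_k gamma**k * rewards[k], accumulated from the last reward backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


print(discounted_return([1, 1, 1], 0.5))  # 1 + 0.5 + 0.25 = 1.75
```

A γ close to 0 makes the process short-sighted, while a γ close to 1 weights distant rewards almost as heavily as immediate ones.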

Markov decision process

Compared with a Markov reward process, a Markov decision process (MDP) adds a set of actions $A$. It is the tuple $\langle S, A, P, R, \gamma \rangle$.
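One way to make the tuple concrete is to store each element in a field of a small container. The sketch below is an assumption-laden illustration: the class `MDP`, its field names, and the two-state "cold/warm" example are all hypothetical and not taken from the original notes.

```python
import random
from dataclasses import dataclass


@dataclass
class MDP:
    """Container for the tuple (S, A, P, R, gamma); field names are illustrative."""
    states: list
    actions: list
    transitions: dict   # (state, action) -> list of (next_state, probability)
    rewards: dict       # (state, action) -> immediate reward
    gamma: float

    def step(self, state, action, rng):
        """Sample a next state from P and return it with the reward for (state, action)."""
        next_states, probs = zip(*self.transitions[(state, action)])
        next_state = rng.choices(next_states, weights=probs, k=1)[0]
        return next_state, self.rewards[(state, action)]


# Hypothetical two-state example.
toy = MDP(
    states=["cold", "warm"],
    actions=["wait", "heat"],
    transitions={
        ("cold", "wait"): [("cold", 1.0)],
        ("cold", "heat"): [("warm", 0.9), ("cold", 0.1)],
        ("warm", "wait"): [("warm", 0.5), ("cold", 0.5)],
        ("warm", "heat"): [("warm", 1.0)],
    },
    rewards={("cold", "wait"): 0.0, ("cold", "heat"): -1.0,
             ("warm", "wait"): 1.0, ("warm", "heat"): 0.0},
    gamma=0.9,
)

print(toy.step("cold", "wait", random.Random(0)))  # ('cold', 0.0)
```

Dropping the `actions` field and keying `transitions` and `rewards` by state alone would turn the same container into a Markov reward process.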

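RL and MDPs

In reinforcement learning, a Markov decision process describes a fully observable environment: the observed state contains everything needed to make a decision. Almost every reinforcement learning problem can be formulated as an MDP.

Sources: https://zh.wikipedia.org/zh-hans/马可夫过程 | https://zhuanlan.zhihu.com/p/28084942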

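3. Monte Carlo method (MCM)

Introduction

The Monte Carlo method, also called the statistical simulation method, is a family of numerical techniques guided by probability and statistics. It was proposed in the mid-1940s, made possible by advances in science and technology and the invention of the electronic computer. It solves computational problems using random numbers (or, more commonly, pseudorandom numbers).

The method was invented in the 1940s by John von Neumann, Stanisław Ulam, and Nicholas Metropolis while they were working on the nuclear weapons program at Los Alamos National Laboratory. The name comes from the Monte Carlo casino in Monaco, where Ulam's uncle often lost money; fittingly, the method is built on probability.

Monte Carlo methods fall roughly into two classes. In the first, the problem is inherently random, and computing power is used to simulate the random process directly. An example from nuclear physics is neutron transport in a reactor. Interactions between neutrons and nuclei are governed by quantum mechanics, so only the probability of an interaction is known; the exact position of an interaction and the speed and direction of the neutrons released by fission cannot be predicted. Scientists sample fission positions, speeds, and directions from these probabilities, simulate a large number of neutrons, and aggregate the results statistically to obtain the range of neutron transport, which then informs reactor design.

In the second class, the problem can be recast as a characteristic of some random distribution, such as the probability of a random event or the expected value of a random variable. Random sampling then estimates the probability from the observed frequency of the event, or estimates the characteristic of the random variable from the corresponding sample statistic, and the estimate is taken as the solution. This approach is mostly used for complicated multidimensional integrals.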

An example

Estimating π with the Monte Carlo method: after 30,000 random points are placed, the estimate of π differs from the true value by 0.07%.
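The experiment is easy to reproduce. The sketch below assumes the common quarter-circle construction (the original notes do not say how the points were placed); `estimate_pi` and its `seed` parameter are names chosen here for illustration. The error of any single run varies, so it will not necessarily match the 0.07% quoted above.

```python
import random


def estimate_pi(n_points, seed=0):
    """Estimate pi from the fraction of uniform points in the unit square
    that fall inside the quarter circle x^2 + y^2 <= 1."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_points):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle has area pi/4, so the hit ratio times 4 estimates pi.
    return 4.0 * inside / n_points


print(estimate_pi(30000))
```

The statistical error shrinks roughly with the square root of the number of points, so ten times more accuracy costs about a hundred times more samples.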

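Outlook

Consider playing board games with the Monte Carlo method alone (an idea first proposed in 1993 and proposed again in 2001). A candidate move can be evaluated simply by random games: starting from the move under evaluation, both sides play random moves until the game ends. For the result to be reliable, tens of thousands of such random games are usually needed; the outcome of each game is recorded, and the average is the evaluation of the move. The move with the highest evaluation is then played. Deciding a single move therefore requires the program to play tens of thousands of random games, which puts demands on the speed of the playouts. Compared with traditional methods that rely on extensive Go knowledge, the advantage is clear: almost no expert Go knowledge is required, because a large number of random games is enough to estimate the value of a move. With some additional optimizations, Go programs based on pure Monte Carlo methods became able to rival the strongest traditional Go programs.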
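The pure Monte Carlo evaluation described above can be sketched on a much smaller game than Go. The example assumes the Nim variant used later in these notes (take 1, 2 or 3 chips; whoever takes the last chip wins); the function names and the number of playouts are illustrative choices, not part of the original text.

```python
import random


def legal_moves(chips):
    """A player may take 1, 2 or 3 chips, but never more than remain."""
    return list(range(1, min(3, chips) + 1))


def random_playout(chips, rng):
    """Play random moves until no chips remain.
    Return True if the player to move at the start takes the last chip."""
    mover_is_start_player = True
    while True:
        chips -= rng.choice(legal_moves(chips))
        if chips == 0:
            return mover_is_start_player
        mover_is_start_player = not mover_is_start_player


def evaluate_moves(chips, playouts_per_move=2000, seed=0):
    """Estimate the win rate of every legal move by averaging random playouts."""
    rng = random.Random(seed)
    win_rate = {}
    for move in legal_moves(chips):
        remaining = chips - move
        if remaining == 0:
            win_rate[move] = 1.0          # we took the last chip ourselves
            continue
        # After our move the opponent is to move; we win when they do not.
        wins = sum(not random_playout(remaining, rng) for _ in range(playouts_per_move))
        win_rate[move] = wins / playouts_per_move
    return win_rate


rates = evaluate_moves(chips=5)
best = max(rates, key=rates.get)
print(rates, best)
```

With 5 chips the averages favor taking 1 chip, which leaves the opponent a multiple of 4 and is also the game-theoretically correct move. Note that every move is evaluated independently and no information is shared between them.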

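Since the Monte Carlo approach looks so promising, it makes sense to keep going in that direction. MCTS folds the idea above into tree search, using a tree structure to update and select node values more efficiently.

Source: https://blog.csdn.net/natsu1211/article/details/50986810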

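4. Monte Carlo tree search (MCTS)

Introduction

Monte Carlo tree search (MCTS) is a heuristic search algorithm for certain kinds of decision processes, most notably game playing. A leading example is computer Go; it is also used for other board games, real-time video games, and games with uncertainty.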

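Search steps

Explanation 1

- Selection: choose the best child step according to the statistics gathered so far for all child steps.

- Expansion: when the statistics gathered so far are not enough to determine the next step, pick a child step at random.

- Simulation: play the game out from that step.

- Backpropagation: use the result at the end of the game to update the statistics recorded along the corresponding path.

Explanation 2

- Selection: starting from the root node R, select successive child nodes down to a leaf node L. A method for choosing child nodes that lets the game tree grow toward the most promising moves is given later; this is the essence of Monte Carlo tree search.

- Expansion: unless L ends the game with a win or loss for either side, create one or more child nodes and choose one of them, C.

- Simulation: starting from node C, play the game to the end with a random policy. This is also called a playout or rollout.

- Backpropagation: use the result of the random game to update the information in the nodes on the path from C to R.

Diagram

See the links below.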

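Detailed algorithm

At the start, the search tree has a single node: the position for which a decision is needed.

Each node in the search tree holds three basic pieces of information: the position it represents, the number of times it has been visited, and its cumulative score.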
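The three pieces of information per node map directly onto a small record type. The class below is a sketch only: the name `TreeNode` and its field names are assumptions, and the `parent` and `children` links are added because a tree needs them, not because the text lists them.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class TreeNode:
    """Hypothetical search-tree node holding the three fields named above."""
    position: Any                       # the game position this node represents
    visits: int = 0                     # how many times the node has been visited
    total_score: float = 0.0            # sum of all simulation results seen here
    parent: Optional["TreeNode"] = None
    children: List["TreeNode"] = field(default_factory=list)

    def mean_score(self) -> float:
        """Average result per visit; 0.0 for a node that has never been visited."""
        return self.total_score / self.visits if self.visits else 0.0


root = TreeNode(position="start")       # the tree starts as a single node
root.visits += 1
root.total_score += 1.0
print(root.mean_score())  # 1.0
```

Keeping the sum and the count, rather than the average, lets each backpropagation step update a node with two additions.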

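Selection

In the selection phase, we start from the root node, that is, the position R for which a decision is needed, and descend to the node N that most urgently needs to be expanded. R is the first node inspected in every iteration.

An inspected position falls into one of three cases:

- Every legal action of the node has already been expanded.

- The node still has a legal action that has not been expanded.

- The game is already over at this node (for example, a gomoku position with five in a row).

These cases are handled as follows:

- If every legal action has been expanded, compute the UCB value of each child of the node with the UCB formula and continue the inspection at the child with the largest value, repeating the descent.

- If the inspected position still has unexpanded children (say the node has 20 legal actions but only 19 child nodes exist in the search tree), this node is the target node N of the current iteration. Find an action A of N that has not been expanded yet and go to step [2] (expansion).

- If the inspected node is one where the game is already over, go straight to step [4] (backpropagation) from that node.

The visit count of every inspected node is incremented during this phase.

After repeated descent we reach a node near the bottom of the search tree, from which the remaining steps continue.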
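The three-way case analysis of the selection phase can be written as a short loop. This is a sketch under stated assumptions: `SearchNode`, `ucb`, `select`, the string return values, and the choice of trying unvisited children first are all illustrative and not taken from the original notes.

```python
import math


class SearchNode:
    """Hypothetical node: `untried` holds the actions that have no child yet."""

    def __init__(self, untried, terminal=False):
        self.untried = list(untried)
        self.terminal = terminal
        self.children = []
        self.visits = 0
        self.score = 0.0


def ucb(child, parent_visits, c=math.sqrt(2)):
    """UCB1 value of a child; unvisited children are tried first (an assumption)."""
    if child.visits == 0:
        return math.inf
    return child.score / child.visits + c * math.sqrt(math.log(parent_visits) / child.visits)


def select(root):
    """Walk down from the root and return (node, next_step), where next_step is
    'expand' for a node with untried actions and 'backpropagate' for a terminal node."""
    node = root
    while True:
        node.visits += 1                      # every inspected node counts a visit
        if node.terminal:
            return node, "backpropagate"      # game over: skip to step [4]
        if node.untried:
            return node, "expand"             # unexpanded action left: go to step [2]
        # Fully expanded: descend into the child with the largest UCB value.
        node = max(node.children, key=lambda ch: ucb(ch, node.visits))


# Tiny hand-built tree: child `a` has the better average and an untried action.
root = SearchNode(untried=[])
a, b = SearchNode(untried=["x"]), SearchNode(untried=[], terminal=True)
a.visits, a.score = 10, 9.0
b.visits, b.score = 10, 1.0
root.children = [a, b]
root.visits = 20
node, step = select(root)
print(node is a, step)  # True expand
```

Both children have the same visit count here, so the exploration bonus is equal and the higher average decides the descent.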

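Expansion

At the end of the selection phase we have found the node N that most urgently needs expansion, together with one of its unexpanded actions A. Create a new node $N_n$ in the search tree as a new child of N. The position of $N_n$ is the position reached by playing action A in node N.

Simulation

To give $N_n$ an initial score, we play the game out randomly from $N_n$ until it ends; the outcome becomes the initial score of $N_n$. The score is usually win/loss, that is, just 1 or 0.

Backpropagation

After the simulation from $N_n$ finishes, its parent N and every node on the path from the root to N add the simulation result to their cumulative scores. If the selection step [1] ran directly into a finished game, the scores are updated with that game's result instead.

Every iteration grows the search tree, so the tree gets larger as the number of iterations increases. The search stops after a set number of iterations or a set amount of time, and the best child of the root is returned as the decision.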

Source: https://www.zhihu.com/question/39916945/answer/83799720

See also: https://jeffbradberry.com/posts/2015/09/intro-to-monte-carlo-tree-search/

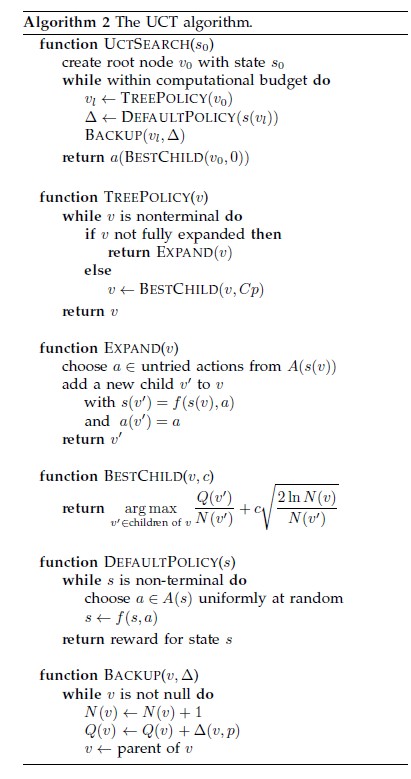

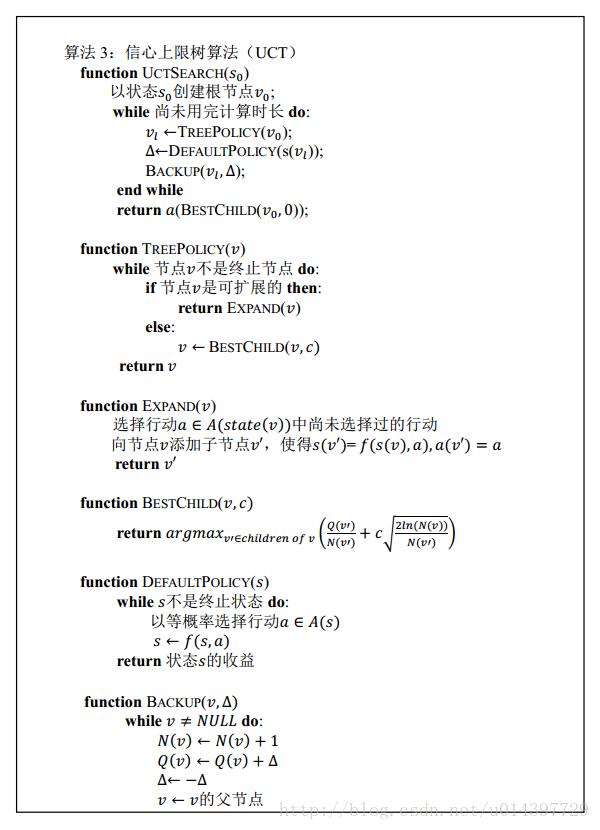

Algorithm pseudocode

Source: https://blog.csdn.net/u014397729/article/details/27366363

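5. Upper Confidence bounds applied to Trees (UCT)

UCB1

$$\frac{w_i}{n_i} + c\sqrt{\frac{\ln t}{n_i}}$$

In this formula:

- $w_i$ is the number of wins after the $i$-th move;

- $n_i$ is the number of simulations after the $i$-th move;

- $c$ is the exploration parameter, theoretically equal to $\sqrt{2}$; in practice it is usually chosen empirically;

- $t$ is the total number of simulations, equal to the sum of all $n_i$.

The larger $c$ is, the more the formula favors child nodes that have been visited relatively few times.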
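A short numeric example shows how the exploration parameter changes the ranking. The function name `ucb1` and the win/visit counts below are made up for illustration.

```python
import math


def ucb1(wins, visits, total_visits, c=math.sqrt(2)):
    """UCB1 score: average win rate plus an exploration bonus."""
    return wins / visits + c * math.sqrt(math.log(total_visits) / visits)


# Two children of the same parent, given as (wins, visits).
children = {"A": (70, 100), "B": (3, 5)}
total = sum(n for _, n in children.values())

for c in (0.1, math.sqrt(2)):
    scores = {name: ucb1(w, n, total, c) for name, (w, n) in children.items()}
    print(round(c, 3), max(scores, key=scores.get))
# 0.1 A
# 1.414 B
```

With a small constant the well-explored child A (win rate 0.7) is preferred; with the theoretical value √2 the bonus for the rarely visited child B outweighs its lower win rate of 0.6.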

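Introduction to UCT

UCT (Upper Confidence bounds applied to Trees) is a game-tree search algorithm that combines Monte Carlo tree search with the UCB formula. When searching very large game trees it has time and space advantages over traditional search algorithms.

In short: MCTS + UCB1 = UCT

The UCB formula in the algorithm can be replaced by variants such as UCB1-Tuned.

Source: https://blog.csdn.net/xbinworld/article/details/79372777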

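Advantages

MCTS offers several advantages over traditional tree search.

Aheuristic

MCTS does not need any strategic or tactical knowledge of the domain to make reasonable decisions. The algorithm works effectively with no knowledge of the game beyond its basic rules, so a simple MCTS implementation can be reused for many games with only minor changes. This makes MCTS a good fit for general game playing.

Asymmetric

MCTS grows the tree asymmetrically, adapting to the topology of the search space. The algorithm visits more interesting nodes more often and concentrates its search time on the more relevant parts of the tree. This makes MCTS well suited to games with a large branching factor, such as 19×19 Go. Such a large combinatorial space is a problem for standard depth-first or breadth-first search, whereas the adaptive nature of MCTS means that it will (eventually) find the better moves and focus its search effort there.

Anytime

The algorithm can be stopped at any time and returns the best estimate found so far. The search tree built up to that point can be discarded or kept for later reuse. (Compare this with brute-force DFS.)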
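The anytime property amounts to wrapping the search iterations in a time-budgeted loop. The sketch below is generic: `anytime_search` and its `improve` callback are hypothetical names, with `improve` standing in for one full MCTS iteration that returns an updated estimate.

```python
import time


def anytime_search(improve, initial_estimate, budget_seconds):
    """Run `improve` until the time budget expires and return the latest estimate
    together with the number of completed iterations."""
    estimate = initial_estimate
    iterations = 0
    deadline = time.monotonic() + budget_seconds
    while time.monotonic() < deadline:
        estimate = improve(estimate)     # one search iteration refines the estimate
        iterations += 1
    return estimate, iterations


# Stand-in for a search step: count how many refinements were made.
estimate, iterations = anytime_search(lambda e: e + 1, 0, budget_seconds=0.01)
print(estimate == iterations)  # True
```

Because a valid estimate exists after every iteration, the caller can trade move quality against thinking time simply by changing the budget.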

Simple

The algorithm is straightforward to implement (http://mcts.ai/code/python.html).

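Disadvantages

MCTS has few disadvantages, but they can be decisive.

Playing strength

In its basic form, MCTS can fail to find the best moves within an acceptable time even for games that are not very large. This is mostly due to the sheer size of the combinatorial move space: key nodes may not be visited often enough to yield reliable estimates.

Speed

MCTS may need many iterations to converge to a good solution, which is a problem for more general applications that are hard to optimize. For example, the best Go programs may need millions of playouts together with domain-specific optimizations and enhancements to produce expert-level moves, whereas the best general game playing (GGP) implementations may manage only tens of (domain-independent) playouts per second on more complex games. With reasonable move times, such a GGP program may barely have time to visit each legal move, so a meaningful search is unlikely.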

6. Detailed example code

# This is a very simple implementation of the UCT Monte Carlo Tree Search algorithm in Python 2.7 (converted to Python 3).
# The function UCT(rootstate, itermax, verbose = False) is towards the bottom of the code.
# It aims to have the clearest and simplest possible code, and for the sake of clarity, the code
# is orders of magnitude less efficient than it could be made, particularly by using a
# state.GetRandomMove() or state.DoRandomRollout() function.
#
# Example GameState classes for Nim, OXO and Othello are included to give some idea of how you
# can write your own GameState use UCT in your 2-player game. Change the game to be played in
# the UCTPlayGame() function at the bottom of the code.
#
# Written by Peter Cowling, Ed Powley, Daniel Whitehouse (University of York, UK) September 2012.
#
# Licence is granted to freely use and distribute for any sensible/legal purpose so long as this comment
# remains in any distributed code.
#
# For more information about Monte Carlo Tree Search check out our web site at www.mcts.ai

from math import log, sqrt
import random

class GameState:
    """ A state of the game, i.e. the game board. These are the only functions which are
        absolutely necessary to implement UCT in any 2-player complete information deterministic
        zero-sum game, although they can be enhanced and made quicker, for example by using a
        GetRandomMove() function to generate a random move during rollout.
        By convention the players are numbered 1 and 2.
    """

    def __init__(self):
        self.playerJustMoved = 2  # At the root pretend the player just moved is player 2 - player 1 has the first move

    def Clone(self):
        """ Create a deep clone of this game state.
        """
        st = GameState()
        st.playerJustMoved = self.playerJustMoved
        return st

    def DoMove(self, move):
        """ Update a state by carrying out the given move.
            Must update playerJustMoved.
        """
        self.playerJustMoved = 3 - self.playerJustMoved

    def GetMoves(self):
        """ Get all possible moves from this state.
        """

    def GetResult(self, playerjm):
        """ Get the game result from the viewpoint of playerjm.
        """

    def __repr__(self):
        """ Don't need this - but good style.
        """
        pass

class NimState:
    """ A state of the game Nim. In Nim, players alternately take 1,2 or 3 chips with the
        winner being the player to take the last chip.
        In Nim any initial state of the form 4n+k for k = 1,2,3 is a win for player 1
        (by choosing k) chips.
        Any initial state of the form 4n is a win for player 2.
    """

    def __init__(self, ch):
        self.playerJustMoved = 2  # At the root pretend the player just moved is p2 - p1 has the first move
        self.chips = ch

    def Clone(self):
        """ Create a deep clone of this game state.
        """
        st = NimState(self.chips)
        st.playerJustMoved = self.playerJustMoved
        return st

    def DoMove(self, move):
        """ Update a state by carrying out the given move.
            Must update playerJustMoved.
        """
        assert move >= 1 and move <= 3 and move == int(move)
        self.chips -= move
        self.playerJustMoved = 3 - self.playerJustMoved

    def GetMoves(self):
        """ Get all possible moves from this state.
        """
        return list(range(1, min([4, self.chips + 1])))

    def GetResult(self, playerjm):
        """ Get the game result from the viewpoint of playerjm.
        """
        assert self.chips == 0
        if self.playerJustMoved == playerjm:
            return 1.0  # playerjm took the last chip and has won
        else:
            return 0.0  # playerjm's opponent took the last chip and has won

    def __repr__(self):
        s = "Chips:" + str(self.chips) + " JustPlayed:" + str(self.playerJustMoved)
        return s

class OXOState:
    """ A state of the game, i.e. the game board.
        Squares in the board are in this arrangement
        012
        345
        678
        where 0 = empty, 1 = player 1 (X), 2 = player 2 (O)
    """

    def __init__(self):
        self.playerJustMoved = 2  # At the root pretend the player just moved is p2 - p1 has the first move
        self.board = [0, 0, 0, 0, 0, 0, 0, 0, 0]  # 0 = empty, 1 = player 1, 2 = player 2

    def Clone(self):
        """ Create a deep clone of this game state.
        """
        st = OXOState()
        st.playerJustMoved = self.playerJustMoved
        st.board = self.board[:]
        return st

    def DoMove(self, move):
        """ Update a state by carrying out the given move.
            Must update playerJustMoved.
        """
        assert move >= 0 and move <= 8 and move == int(move) and self.board[move] == 0
        self.playerJustMoved = 3 - self.playerJustMoved
        self.board[move] = self.playerJustMoved

    def GetMoves(self):
        """ Get all possible moves from this state.
        """
        return [i for i in range(9) if self.board[i] == 0]

    def GetResult(self, playerjm):
        """ Get the game result from the viewpoint of playerjm.
        """
        for (x, y, z) in [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6), (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]:
            if self.board[x] == self.board[y] == self.board[z]:
                if self.board[x] == playerjm:
                    return 1.0
                else:
                    return 0.0
        if self.GetMoves() == []: return 0.5  # draw
        assert False  # Should not be possible to get here

    def __repr__(self):
        s = ""
        for i in range(9):
            s += ".XO"[self.board[i]]
            if i % 3 == 2: s += "\n"
        return s

class OthelloState:
    """ A state of the game of Othello, i.e. the game board.
        The board is a 2D array where 0 = empty (.), 1 = player 1 (X), 2 = player 2 (O).
        In Othello players alternately place pieces on a square board - each piece played
        has to sandwich opponent pieces between the piece played and pieces already on the
        board. Sandwiched pieces are flipped.
        This implementation modifies the rules to allow variable sized square boards and
        terminates the game as soon as the player about to move cannot make a move (whereas
        the standard game allows for a pass move).
    """

    def __init__(self, sz=8):
        self.playerJustMoved = 2  # At the root pretend the player just moved is p2 - p1 has the first move
        self.board = []  # 0 = empty, 1 = player 1, 2 = player 2
        self.size = sz
        assert sz == int(sz) and sz % 2 == 0  # size must be integral and even
        for y in range(sz):
            self.board.append([0] * sz)
        half = sz // 2  # integer division: list indices must be ints in Python 3
        self.board[half][half] = self.board[half - 1][half - 1] = 1
        self.board[half][half - 1] = self.board[half - 1][half] = 2

    def Clone(self):
        """ Create a deep clone of this game state.
        """
        st = OthelloState(self.size)
        st.playerJustMoved = self.playerJustMoved
        st.board = [self.board[i][:] for i in range(self.size)]
        st.size = self.size
        return st

    def DoMove(self, move):
        """ Update a state by carrying out the given move.
            Must update playerJustMoved.
        """
        (x, y) = (move[0], move[1])
        assert x == int(x) and y == int(y) and self.IsOnBoard(x, y) and self.board[x][y] == 0
        m = self.GetAllSandwichedCounters(x, y)
        self.playerJustMoved = 3 - self.playerJustMoved
        self.board[x][y] = self.playerJustMoved
        for (a, b) in m:
            self.board[a][b] = self.playerJustMoved

    def GetMoves(self):
        """ Get all possible moves from this state.
        """
        return [(x, y) for x in range(self.size) for y in range(self.size) if
                self.board[x][y] == 0 and self.ExistsSandwichedCounter(x, y)]

    def AdjacentToEnemy(self, x, y):
        """ Speeds up GetMoves by only considering squares which are adjacent to an enemy-occupied square.
        """
        for (dx, dy) in [(0, +1), (+1, +1), (+1, 0), (+1, -1), (0, -1), (-1, -1), (-1, 0), (-1, +1)]:
            if self.IsOnBoard(x + dx, y + dy) and self.board[x + dx][y + dy] == self.playerJustMoved:
                return True
        return False

    def AdjacentEnemyDirections(self, x, y):
        """ Return the directions (dx, dy) in which (x,y) is adjacent to an enemy-occupied square.
        """
        es = []
        for (dx, dy) in [(0, +1), (+1, +1), (+1, 0), (+1, -1), (0, -1), (-1, -1), (-1, 0), (-1, +1)]:
            if self.IsOnBoard(x + dx, y + dy) and self.board[x + dx][y + dy] == self.playerJustMoved:
                es.append((dx, dy))
        return es

    def ExistsSandwichedCounter(self, x, y):
        """ Does there exist at least one counter which would be flipped if my counter was placed at (x,y)?
        """
        for (dx, dy) in self.AdjacentEnemyDirections(x, y):
            if len(self.SandwichedCounters(x, y, dx, dy)) > 0:
                return True
        return False

    def GetAllSandwichedCounters(self, x, y):
        """ Return all opponent counters that would be flipped if my counter was placed at (x,y).
        """
        sandwiched = []
        for (dx, dy) in self.AdjacentEnemyDirections(x, y):
            sandwiched.extend(self.SandwichedCounters(x, y, dx, dy))
        return sandwiched

    def SandwichedCounters(self, x, y, dx, dy):
        """ Return the coordinates of all opponent counters sandwiched between (x,y) and my counter.
        """
        x += dx
        y += dy
        sandwiched = []
        while self.IsOnBoard(x, y) and self.board[x][y] == self.playerJustMoved:
            sandwiched.append((x, y))
            x += dx
            y += dy
        if self.IsOnBoard(x, y) and self.board[x][y] == 3 - self.playerJustMoved:
            return sandwiched
        else:
            return []  # nothing sandwiched

    def IsOnBoard(self, x, y):
        return x >= 0 and x < self.size and y >= 0 and y < self.size

    def GetResult(self, playerjm):
        """ Get the game result from the viewpoint of playerjm.
        """
        jmcount = len([(x, y) for x in range(self.size) for y in range(self.size) if self.board[x][y] == playerjm])
        notjmcount = len(
            [(x, y) for x in range(self.size) for y in range(self.size) if self.board[x][y] == 3 - playerjm])
        if jmcount > notjmcount:
            return 1.0
        elif notjmcount > jmcount:
            return 0.0
        else:
            return 0.5  # draw

    def __repr__(self):
        s = ""
        for y in range(self.size - 1, -1, -1):
            for x in range(self.size):
                s += ".XO"[self.board[x][y]]
            s += "\n"
        return s

class Node:
    """ A node in the game tree. Note wins is always from the viewpoint of playerJustMoved.
        Crashes if state not specified.
    """

    def __init__(self, move=None, parent=None, state=None):
        self.move = move  # the move that got us to this node - "None" for the root node
        self.parentNode = parent  # "None" for the root node
        self.childNodes = []
        self.wins = 0
        self.visits = 0
        self.untriedMoves = state.GetMoves()  # future child nodes
        self.playerJustMoved = state.playerJustMoved  # the only part of the state that the Node needs later

    def UCTSelectChild(self):
        """ Use the UCB1 formula to select a child node. Often a constant UCTK is applied so we have
            lambda c: c.wins/c.visits + UCTK * sqrt(2*log(self.visits)/c.visits) to vary the amount of
            exploration versus exploitation.
        """
        s = sorted(self.childNodes, key=lambda c: c.wins / c.visits + sqrt(2 * log(self.visits) / c.visits))[-1]
        return s

    def AddChild(self, m, s):
        """ Remove m from untriedMoves and add a new child node for this move.
            Return the added child node
        """
        n = Node(move=m, parent=self, state=s)
        self.untriedMoves.remove(m)
        self.childNodes.append(n)
        return n

    def Update(self, result):
        """ Update this node - one additional visit and result additional wins. result must be from the viewpoint of playerJustmoved.
        """
        self.visits += 1
        self.wins += result

    def __repr__(self):
        return "[M:" + str(self.move) + " W/V:" + str(self.wins) + "/" + str(self.visits) + " U:" + str(
            self.untriedMoves) + "]"

    def TreeToString(self, indent):
        s = self.IndentString(indent) + str(self)
        for c in self.childNodes:
            s += c.TreeToString(indent + 1)
        return s

    def IndentString(self, indent):
        s = "\n"
        for i in range(1, indent + 1):
            s += "| "
        return s

    def ChildrenToString(self):
        s = ""
        for c in self.childNodes:
            s += str(c) + "\n"
        return s

def UCT(rootstate, itermax, verbose=False):
    """ Conduct a UCT search for itermax iterations starting from rootstate.
        Return the best move from the rootstate.
        Assumes 2 alternating players (player 1 starts), with game results in the range [0.0, 1.0]."""

    rootnode = Node(state=rootstate)

    for i in range(itermax):
        node = rootnode
        state = rootstate.Clone()

        # Select
        while node.untriedMoves == [] and node.childNodes != []:  # node is fully expanded and non-terminal
            node = node.UCTSelectChild()
            state.DoMove(node.move)

        # Expand
        if node.untriedMoves != []:  # if we can expand (i.e. state/node is non-terminal)
            m = random.choice(node.untriedMoves)
            state.DoMove(m)
            node = node.AddChild(m, state)  # add child and descend tree

        # Rollout - this can often be made orders of magnitude quicker using a state.GetRandomMove() function
        while state.GetMoves() != []:  # while state is non-terminal
            state.DoMove(random.choice(state.GetMoves()))

        # Backpropagate
        while node is not None:  # backpropagate from the expanded node and work back to the root node
            # state is terminal. Update node with result from POV of node.playerJustMoved
            node.Update(state.GetResult(node.playerJustMoved))
            node = node.parentNode

    # Output some information about the tree - can be omitted
    if verbose:
        print(rootnode.TreeToString(0))
    else:
        print(rootnode.ChildrenToString())

    return sorted(rootnode.childNodes, key=lambda c: c.visits)[-1].move  # return the move that was most visited

def UCTPlayGame():
    """ Play a sample game between two UCT players where each player gets a different number
        of UCT iterations (= simulations = tree nodes).
    """
    # Exactly one of the three lines below should be active.
    # state = OthelloState(4)  # uncomment to play Othello on a square board of the given size
    state = OXOState()  # currently selected game: OXO
    # state = NimState(15)  # uncomment to play Nim with the given number of starting chips
    while state.GetMoves() != []:
        print(str(state))
        if state.playerJustMoved == 1:
            m = UCT(rootstate=state, itermax=1000, verbose=False)  # play with values for itermax and verbose = True
        else:
            m = UCT(rootstate=state, itermax=100, verbose=False)
        print("Best Move: " + str(m) + "\n")
        state.DoMove(m)
    if state.GetResult(state.playerJustMoved) == 1.0:
        print("Player " + str(state.playerJustMoved) + " wins!")
    elif state.GetResult(state.playerJustMoved) == 0.0:
        print("Player " + str(3 - state.playerJustMoved) + " wins!")
    else:
        print("Nobody wins!")

if __name__ == "__main__":
    """ Play a single game to the end using UCT for both players.
    """
    UCTPlayGame()

The original code was written for Python 2; I converted it to Python 3.
