Basic Tasks

| Contributor | Contribution |
|---|---|
| 蒋志远 | 37% |
| 李露阳 | 30% |
| 鲁平 | 33% |

PSP

| PSP2.1 | PSP stage | Estimated time (min) | Actual time (min) |
|---|---|---|---|
| Planning | Planning | 20 | 30 |
| Estimate | Estimate how long the task will take | 10 | 10 |
| Development | Development | 530 | 740 |
| - Analysis | - Requirements analysis (including learning new technologies) | 100 | 160 |
| - Design Spec | - Writing the design document | 100 | 150 |
| - Coding Standard | - Coding standard (defining suitable conventions for the current development) | 10 | 10 |
| - Design | - Detailed design | 30 | 30 |
| - Coding | - Coding | 200 | 240 |
| - Code Review | - Code review | 30 | 30 |
| - Test | - Testing (self-testing, fixing code, committing changes) | 60 | 120 |
| Reporting | Reporting | 180 | 320 |
| - Test Report | - Test report | 40 | 65 |
| - Size Measurement | - Measuring the workload | 10 | 15 |
| - Postmortem & Process Improvement Plan | - Postmortem and process improvement plan | 130 | 240 |
| | Total | 740 | 1100 |

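Module Breakdown

The program is divided into three parts: IO control, the core functionality, and the main function.

Because the core functionality is relatively complex, I pair-programmed with 李露阳 to implement it in WordCounter.java, wrote the main unit tests, and coached him on optimizing the code and writing Javadoc.

The modules are designed as follows: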

1. Main

/**
 * com.hust.wcPro
 * Created by Blues on 2018/3/27.
 */
import java.util.HashMap;

public class Main {
    public static void main(String[] args) {
        IOController io_control = new IOController();
        // get() returns an empty string when no valid file was given.
        String valid_file = io_control.get(args);
        if (valid_file.equals("")) {
            return;
        }
        WordCounter wordcounter = new WordCounter();
        HashMap<String, Integer> result = wordcounter.count(valid_file);
        io_control.save(result);
    }
}

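The Main function is responsible for calling all the interfaces. Its logic is simple: IO obtains a valid file argument, the core function of the WordCounter class is called, and IO sorts the result and saves it to result.txt.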
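The early return in Main relies on get() signalling failure with an empty string. A minimal sketch of that contract follows. It is not the team's IOController: the class name ArgumentCheck, the method name validFileOrEmpty, and the rule that exactly one argument is expected are all assumptions made for illustration.

```java
import java.io.File;

class ArgumentCheck {
    /**
     * Returns the file name when exactly one argument is given and it names
     * an existing regular file; otherwise returns "" so the caller can stop.
     * (The "exactly one argument" rule is an assumption of this sketch.)
     */
    static String validFileOrEmpty(String[] args) {
        if (args == null || args.length != 1) {
            return "";
        }
        File candidate = new File(args[0]);
        return candidate.isFile() ? args[0] : "";
    }
}
```

Returning an empty string keeps Main free of exception handling, at the cost of not telling the caller why the argument was rejected.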

2. IOController

The IOController class is responsible for IO. Its design is as follows:

// Design sketch: method bodies are omitted.
class IOController {
    IOController() {}

    /**
     * Parses the main function arguments.
     *
     * @param args the main function arguments
     * @return a valid file name
     */
    public String get(String[] args);

    /**
     * Sorts the result and saves it.
     *
     * @param result the result, with each word as key and its count as value
     * @return the state code of the operation
     */
    public int save(HashMap<String, Integer> result);
}

get() parses the arguments of the main function and returns the name of a valid, existing file. save() sorts the result passed in and writes it to the result.txt file.
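The sorting step of save() can be sketched as below. The ordering (count descending, then word ascending) and the "word: count" line format are taken from the expected-result files written by the test generator later in this post; that save() uses exactly the same ordering is an assumption, and ResultFormatter is a hypothetical name rather than part of the project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class ResultFormatter {
    /** Renders one "word: count" line per entry, highest count first, ties broken alphabetically. */
    static String format(Map<String, Integer> result) {
        List<Map.Entry<String, Integer>> entries = new ArrayList<>(result.entrySet());
        entries.sort((a, b) -> {
            // Higher counts come first.
            int byCount = Integer.compare(b.getValue(), a.getValue());
            // Equal counts fall back to alphabetical order of the word.
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, Integer> entry : entries) {
            out.append(entry.getKey()).append(": ").append(entry.getValue()).append('\n');
        }
        return out.toString();
    }
}
```

Keeping the formatting separate from the file write makes the ordering easy to unit test without touching result.txt.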

3. WordCounter

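The WordCounter class implements the core count() function: it decides what counts as a legal word, handles the special cases required by the instructor, and counts the occurrences of each word in the given file, returning the result as a HashMap.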

/**
 * The {@code WordCounter} class is a multi-purpose text file
 * counter that recognizes legal words, counts their occurrences,
 * and stores the result in a {@code HashMap}.
 *
 * @author VectorLu
 * @author YangLeee
 * @since JDK1.8
 */
// Design sketch: method bodies are omitted.
public class WordCounter {
    WordCounter() {
    }

    /**
     * Returns whether the argument is in the English alphabet.
     *
     * @param c the character to test
     * @return {@code true} if the argument is in the English alphabet,
     *         otherwise {@code false}.
     */
    private boolean isEngChar(char c);

    /**
     * Returns whether the argument is a hyphen, which is {@code -}.
     *
     * @param c the character to test
     * @return {@code true} if the argument is a hyphen,
     *         otherwise {@code false}.
     */
    private boolean isHyphen(char c);

    /**
     * Counts the words in the specified file.
     *
     * @param filename the file to be counted
     * @return the result, with each word (lowercased) as key and its count as value
     */
    public HashMap<String, Integer> count(String filename);
}

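Project Management

To collaborate efficiently and manage the project well, we chose Gradle for build and dependency management. Gradle also makes it easier to run unit tests with JUnit5 (we had mixed in JUnit4 for a while, found JUnit5 to be stricter, and then migrated everything to JUnit5). Because several members work together, we use Git for source control.

The build.gradle file comes from our team member 蒋志远.

The contents of the Gradle configuration file build.gradle are shown below for reference: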

buildscript {
    repositories {
        mavenCentral()
    }
    dependencies {
        classpath 'org.junit.platform:junit-platform-gradle-plugin:1.1.0'
    }
}

plugins {
    id 'com.gradle.build-scan' version '1.12.1'
    id 'java'
    id 'eclipse'
    id 'idea'
    id 'maven'
}

buildScan {
    licenseAgreementUrl = "https://gradle.com/terms-of-service"
    licenseAgree = "yes"
}

apply plugin: 'org.junit.platform.gradle.plugin'

int javaVersion = Integer.valueOf((String) JavaVersion.current().getMajorVersion())
if (javaVersion < 10) apply plugin: 'jacoco'

jar {
    baseName = 'wcPro'
    version = '0.0.1'
    manifest {
        attributes 'Main-Class': 'Main'
    }
}

repositories {
    mavenCentral()
}

dependencies {
    testCompile (
        'org.junit.jupiter:junit-jupiter-api:5.0.3',
        'org.json:json:20090211'
    )
    testRuntime(
        'org.junit.jupiter:junit-jupiter-engine:5.0.3',
        'org.junit.vintage:junit-vintage-engine:4.12.1',
        'org.junit.platform:junit-platform-launcher:1.0.1',
        'org.junit.platform:junit-platform-runner:1.0.1'
    )
}

task wrapper(type: Wrapper) {
    description = 'Generates gradlew[.bat] scripts'
    gradleVersion = '4.6'
}

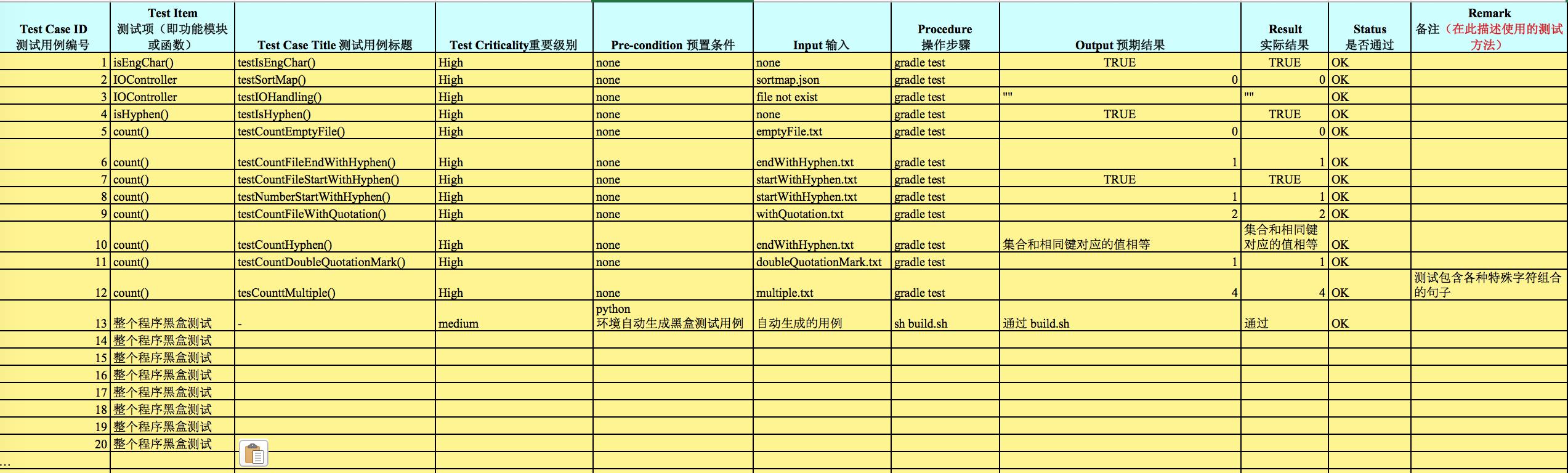

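Testing

1. Unit testing

White-box testing methods include code inspection, static structure analysis, static quality measurement, logic coverage, basis path testing, domain testing, symbolic testing, path coverage, and program mutation.

Coverage criteria for white-box testing are logic coverage, loop coverage, and basis path testing. Logic coverage in turn comprises statement coverage, decision coverage, condition coverage, decision/condition coverage, condition combination coverage, and path coverage.

Every method must be tested, and a complex method may need several techniques, such as boundary testing and path coverage.

Testing public methods

Our unit tests work at the granularity of interfaces. The project has three main interfaces, so we test each of them separately. The main interfaces are:

IOController.get(), IOController.save(), WordCounter.count()

We designed the UnitTest class for interface testing. I was mainly responsible for unit testing WordCounter.count() (including boundary tests), covering invalid paths and, in detail, the five special cases required by the instructor.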

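First, a string made up of consecutive English letters is a word, for example, software.

Second, several English words joined by hyphens also count as one word; for example, content-based counts as one word.

Note that words are case-insensitive, languages other than English are not considered, and only half-width characters are considered.

Notes on some typical cases of word recognition:

First, Let’s: a word containing a single quotation mark counts as two words, let and s.

Second, night-: a word with a trailing hyphen counts as one word, night.

Third, “I: a word with a double quotation mark counts as one word, i.

Fourth, TABLE1-2: a word with digits counts as one word, table.

Fifth, (see Box 3–2).8885d_c01_016: a mix of digits, common symbols, and words counts as four words: see, box, d, c.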
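The two rules and the five typical cases above can be read as a single left-to-right scan. The sketch below is one possible reading, not the team's WordCounter implementation: isEngChar and isHyphen mirror the private helpers named in the design, while the class WordRules, the method tokenize, and the choice to keep a hyphen only when it sits directly between two letters are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

class WordRules {
    static boolean isEngChar(char c) {
        return (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z');
    }

    static boolean isHyphen(char c) {
        return c == '-';
    }

    /** Splits text into lowercased words according to the rules listed above. */
    static List<String> tokenize(String text) {
        List<String> words = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            if (isEngChar(c)) {
                current.append(Character.toLowerCase(c));
            } else if (isHyphen(c) && current.length() > 0
                    && i + 1 < text.length() && isEngChar(text.charAt(i + 1))) {
                // A hyphen joins two words only when letters sit on both sides.
                current.append(c);
            } else if (current.length() > 0) {
                // Any other character ends the word being built.
                words.add(current.toString());
                current.setLength(0);
            }
        }
        if (current.length() > 0) {
            words.add(current.toString());
        }
        return words;
    }
}
```

Under this reading, tokenize("TABLE1-2") stops at the digit and yields only table, and a trailing hyphen as in night- is dropped because no letter follows it.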

The unit tests are listed below (each is described with @DisplayName, and the test method names also make the purpose clear):

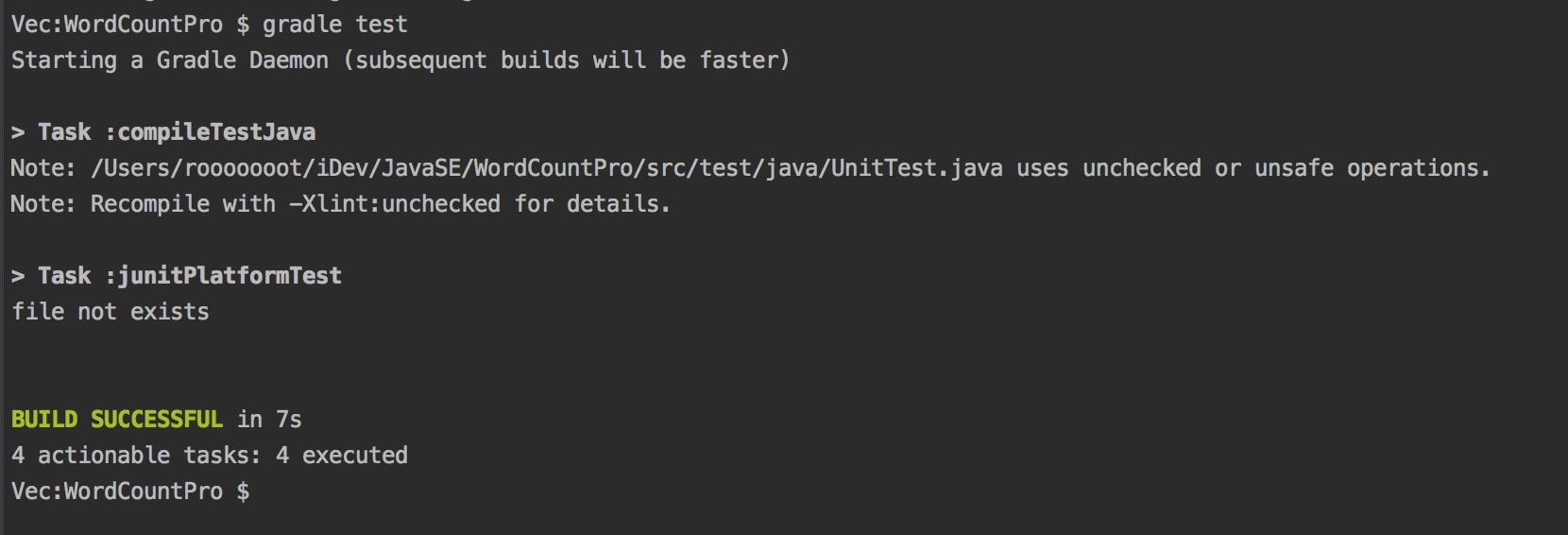

Run the tests with the command gradle test,

or run them directly in IntelliJ IDEA:

import static org.junit.jupiter.api.Assertions.assertEquals;
import static org.junit.jupiter.api.Assertions.assertTrue;

import java.util.HashMap;

import org.junit.jupiter.api.DisplayName;
import org.junit.jupiter.api.Test;

class UnitTest {
    UnitTest() {}

    String fileParentPath = "src/test/resources/";

    @Test
    void testCountEmptyFile() {
        String fileName = "emptyFile.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(0, result.size());
    }

    @Test
    @DisplayName("Border test: wc.count(endWithHyphen.txt)")
    void testCountFileEndWithHyphen() {
        String fileName = "endWithHyphen.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(1, result.size());
    }

    @Test
    @DisplayName("Border test: wc.count(startWithHyphen.txt)")
    void testCountFileStartWithHyphen() {
        String fileName = "startWithHyphen.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertTrue(result.containsKey("hyphen"));
    }

    @Test
    @DisplayName("Border test: number of words in startWithHyphen.txt")
    void testNumberStartWithHyphen() {
        String fileName = "startWithHyphen.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(1, result.size());
    }

    @Test
    @DisplayName("Border test: wc.count(withQuatation.txt)")
    void testCountFileWithQuatation() {
        String fileName = "withQuatation.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(2, result.size());
    }

    @Test
    void testCountHyphen() {
        String fileName = "endWithHyphen.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        HashMap<String, Integer> expect = new HashMap<>(1);
        expect.put("hyphen", 1);
        // Same words, and the same count for every word.
        assertEquals(expect.keySet(), result.keySet());
        for (String key : expect.keySet()) {
            assertEquals(expect.get(key), result.get(key));
        }
    }

    @Test
    @DisplayName("Border test: single quotation mark")
    void testCountSingleQuotationMark() {
        String fileName = "singleQuotationMark.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(2, result.size());
    }

    @Test
    @DisplayName("Border test: double quotation mark")
    void testCountDoubleQuotationMark() {
        String fileName = "doubleQuotationMark.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(1, result.size());
    }

    @Test
    @DisplayName("Border test: word with number")
    void testCountWordWithNumber() {
        String fileName = "wordWithNumber.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(1, result.size());
    }

    @Test
    @DisplayName("Border test: word with multiple kinds of char")
    void testCountMultiple() {
        String fileName = "multiple.txt";
        String relativePath = fileParentPath + fileName;
        WordCounter wc = new WordCounter();
        HashMap<String, Integer> result = wc.count(relativePath);
        assertEquals(4, result.size());
    }
}

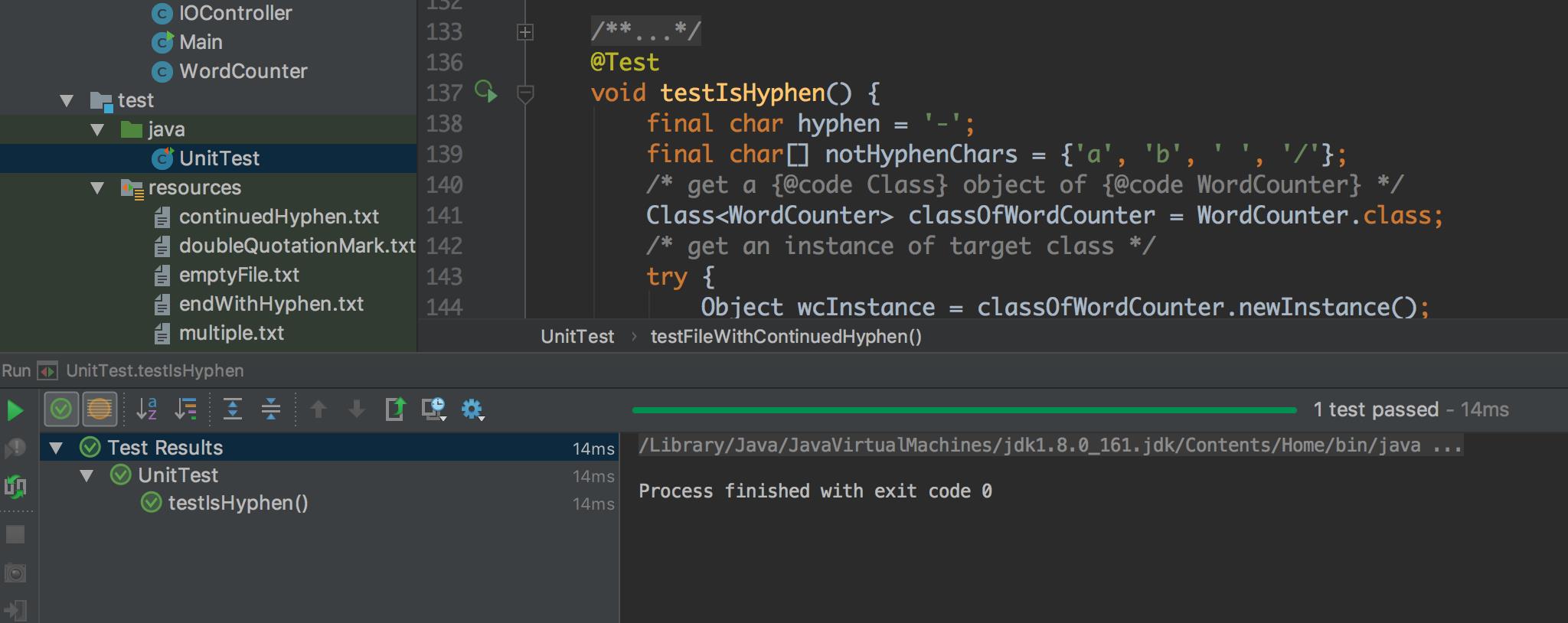

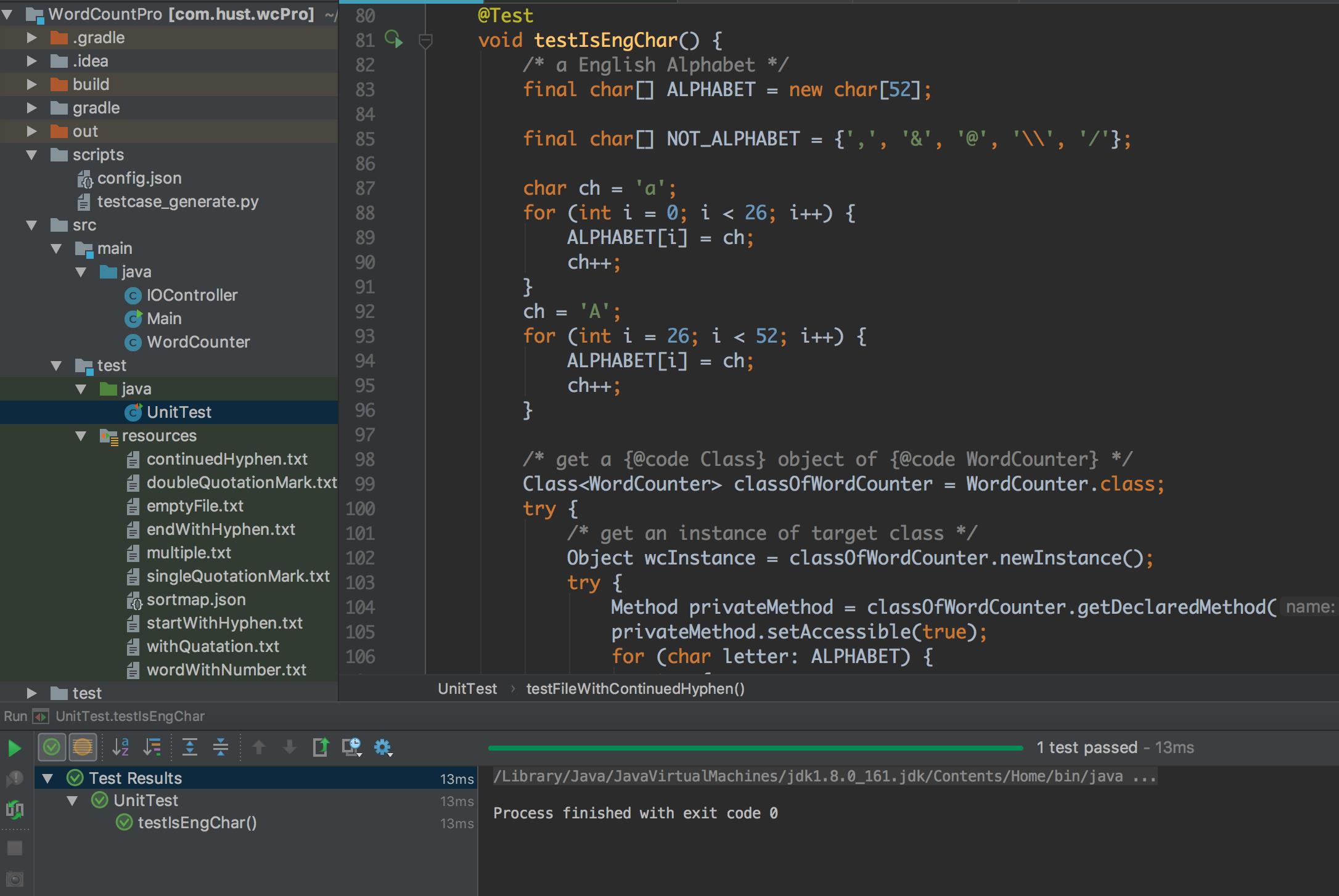

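Testing private methods

Besides the public methods, I was also responsible for testing the private methods. But how do you test two private methods that the test class has no access to? (The two private methods in WordCounter.java are very simple, but testing them is still worthwhile as a safeguard, and it is also a chance to learn how to test private methods.)

In this situation we have to use reflection, as follows: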

/**
 * Uses reflection to test the {@code private} method {@code isEngChar()}.
 * Requires: import java.lang.reflect.Method;
 */
@Test
void testIsEngChar() throws ReflectiveOperationException {
    /* the English alphabet: a-z followed by A-Z */
    final char[] ALPHABET = new char[52];
    final char[] NOT_ALPHABET = {',', '&', '@', '\\', '/'};
    char ch = 'a';
    for (int i = 0; i < 26; i++) {
        ALPHABET[i] = ch;
        ch++;
    }
    ch = 'A';
    for (int i = 26; i < 52; i++) {
        ALPHABET[i] = ch;
        ch++;
    }

    /* get a Class object of WordCounter */
    Class<WordCounter> classOfWordCounter = WordCounter.class;
    /* get an instance of the target class */
    WordCounter wcInstance = classOfWordCounter.getDeclaredConstructor().newInstance();

    /* look up the private method and make it callable from the test */
    Method privateMethod = classOfWordCounter.getDeclaredMethod("isEngChar", char.class);
    privateMethod.setAccessible(true);

    /* Reflection errors propagate and fail the test instead of being swallowed. */
    for (char letter : ALPHABET) {
        boolean result = (Boolean) privateMethod.invoke(wcInstance, letter);
        assertEquals(true, result);
    }
    for (char notLetter : NOT_ALPHABET) {
        boolean result = (Boolean) privateMethod.invoke(wcInstance, notLetter);
        assertEquals(false, result);
    }
}
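The same reflection steps apply to the second private method, isHyphen(). A small helper such as the one below could serve both tests. It is only a sketch: PrivateMethodProbe, callCharPredicate, and the stand-in class Sample are hypothetical names, and Sample exists only so that the example runs without the project's WordCounter.

```java
import java.lang.reflect.Method;

class PrivateMethodProbe {
    /** Stand-in for WordCounter, present only to keep the sketch self-contained. */
    static class Sample {
        private boolean isHyphen(char c) {
            return c == '-';
        }
    }

    /** Looks up a private boolean method taking one char and invokes it on target. */
    static boolean callCharPredicate(Object target, String methodName, char arg) {
        try {
            Method method = target.getClass().getDeclaredMethod(methodName, char.class);
            method.setAccessible(true);
            return (Boolean) method.invoke(target, arg);
        } catch (ReflectiveOperationException e) {
            // Rethrow so that a missing or failing method fails the calling test.
            throw new IllegalStateException("Cannot call " + methodName, e);
        }
    }
}
```

Rethrowing instead of printing the stack trace matters in a test: a typo in the method name then shows up as a failure rather than a silent pass.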

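2. Automated black-box testing

Contributed by our team member 蒋志远.

To test efficiently, we used automated scripts, which also make stress testing easier.

First we need a large number of correct test cases; each one must be large enough, and its content must be correct. Writing them by hand is impractical, so we generate them automatically. To that end, our team wrote a simple Python script that generates test cases with random content but controllable size: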

from functools import reduce
import numpy as np
from numpy.random import randint
import json
import sys, os, re

elements = {
    "words": "abcdefghijklmnopqrstuvwxyz-",
    "symbol": "!@#$%^&*()~`_+=|\\:;\"'<>?/ \t\r\n1234567890-"
}

def generate_usecase(configs):
    global elements
    path = os.path.join('test', 'testcase')
    result_path = os.path.join('test', 'result')
    if not os.path.exists(path):
        os.makedirs(path)
    if not os.path.exists(result_path):
        os.makedirs(result_path)
    for config_idx, config in enumerate(configs):
        word_dict = {}
        i = 0
        # Generate legal words (randint's upper bound is exclusive)
        while i < config['num_of_type']:
            word_len = randint(*config['word_size'])
            word_elements = randint(0, len(elements['words']), word_len)
            word = np.array(list(elements['words']))[word_elements]
            word = ''.join(word)
            # Remove illegal '-' characters: collapse runs, strip both ends
            word = re.sub(r'-{2,}', '-', word)
            word = re.sub(r'^-*', '', word)
            word = re.sub(r'-*$', '', word)
            if len(word) == 0:  # Unlucky: the word was all '-', so generate it again
                continue
            word_dict[word] = 0
            i += 1
        total_count = 0
        # Set how many times each word is repeated
        for key in word_dict.keys():
            word_dict[key] = randint(*config['word_repeat'])
            total_count += word_dict[key]
        word_dict_tmp = word_dict.copy()
        final_string = ''
        # Build the final test-case text
        for i in range(total_count):
            key, val = None, 0
            while (val == 0):
                key_tmp = list(word_dict_tmp.keys())[randint(len(word_dict))]
                val = word_dict_tmp[key_tmp]
                if val != 0:
                    key = key_tmp
                    word_dict_tmp[key_tmp] = val - 1
            # Randomize the case of each letter in the word
            word_upper_case = randint(0, 2, len(key))
            key = ''.join([s.upper() if word_upper_case[j] > 0 else s for j, s in enumerate(list(key))])
            final_string += key
            sep = ''
            # Build a legal separator
            for _ in range(randint(*config['sep_size'])):
                sep += elements['symbol'][randint(0, len(elements['symbol']))]
            if sep == '-':
                while sep == '-':
                    sep = elements['symbol'][randint(0, len(elements['symbol']))]
            final_string += sep
        with open(os.path.join(path, '{}_usecase.txt'.format(config_idx)), 'w') as f:
            f.write(final_string)
        # Expected result: count descending, then word ascending
        sorted_key = sorted(word_dict.items(), key=lambda kv: (-kv[1], kv[0]))
        result = ''
        for key, val in sorted_key:
            result += key + ': ' + str(val) + '\n'
        with open(os.path.join(path, '{}_result_true.txt'.format(config_idx)), 'w') as f:
            f.write(result)
        print('test case {} generated'.format(config_idx))

def main():
    config = sys.argv[-1]
    with open(config) as f:
        config = json.load(f)
    generate_usecase(config)

if __name__ == '__main__':
    main()

The configuration file has roughly the following format:

[
    {
        "num_of_type": 10,
        "word_size": [1, 10],
        "sep_size": [1, 3],
        "word_repeat": [1, 300]
    },
    {
        "num_of_type": 20,
        "word_size": [1, 20],
        "sep_size": [1, 3],
        "word_repeat": [20, 300]
    }
]

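The content is simple: configure the number of distinct words, the length range of each word, the length range of the separators, and the range of how many times each word repeats, and the script generates the corresponding test cases together with the correct sorted results. An excerpt of a generated test case: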

..........

YMtyibqY

zxz*^QtRWv*O=3KDvJKmpQb86MThOdnP

ZXZ>#aAys>&mthodnP>`qtRWv(QTRWV*YmTYiBqY^\\O9Zxz_?MthOdNP$ zxZ="MtHODnP#!yMTYibqY:o%2AaYS<#QTRwV8MTHOdnp!o#+MTHodNP)*QTRWV;YmtyiBQY ZXz$hesS`aayS_#FKcU=)AAys;fKcu-$Z$MthoDnp

YMTYIBqy/3aAyS!Zxz\'yMtyiBQY~1KdvjKMpQB\'@aAYs\'Z\'zXZ3z2hESs5aAys@yMtyiBQy4qtRWV3kDvJKMpQB:9yMTyIbqy_YmtyIBqY

KdvJKmpqB>YMtYibQy

>z2O

z`^FKCu$<QTRwv#<mtHOdnP%z+z"*FKCu9hESs<fkcu!YMtYiBqY"HesS9MtHODNp

ZxZ

.........
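The remaining step of a black-box run is to compare the program's output with the generated expected result. The post does not show that step, so the following is only a sketch under two assumptions: that result.txt uses the same "word: count" line format as the generated _result_true.txt files, and that both texts have already been read into strings. ResultFileCheck, parse, and sameResult are hypothetical names.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.Map;

class ResultFileCheck {
    /** Parses "word: count" lines into a map that keeps the line order. */
    static Map<String, Integer> parse(String content) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : content.split("\\R")) {
            if (line.trim().isEmpty()) {
                continue;
            }
            int sep = line.lastIndexOf(": ");
            if (sep < 0) {
                throw new IllegalArgumentException("Not a 'word: count' line: " + line);
            }
            counts.put(line.substring(0, sep), Integer.parseInt(line.substring(sep + 2).trim()));
        }
        return counts;
    }

    /** True when both texts list the same words with the same counts in the same order. */
    static boolean sameResult(String expected, String actual) {
        return new ArrayList<>(parse(expected).entrySet())
                .equals(new ArrayList<>(parse(actual).entrySet()));
    }
}
```

Comparing entry lists rather than maps also checks the sort order, which is part of what save() is expected to produce.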
