hadoop搭建部署

Posted 星辰大海ゞ

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了hadoop搭建部署相关的知识,希望对你有一定的参考价值。

HDFS(Hadoop Distributed File System)和Mapreduce是hadoop的两大核心:

HDFS(文件系统)实现分布式存储的底层支持

Mapreduce(编程模型)实现分布式并行任务处理的程序支持

JobTracker 对应于 NameNode

TaskTracker 对应于 DataNode

DataNode和NameNode 是针对数据存放来而言的

JobTracker和TaskTracker是对于MapReduce执行而言的

从官网下载安装包:

wget http://mirrors.cnnic.cn/apache/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz

JDK安装和ssh免密码等此处不再讲述

hadoop环境变量配置:

vim /etc/profile.d/hadoop.sh

HADOOP_HOME=/usr/local/hadoop HADOOP_HEAPSIZE=2048 HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native PATH=$HADOOP_HOME/bin:$PATH HADOOP_OPTS=-Djava.library.path=$HADOOP_HOME/lib/native

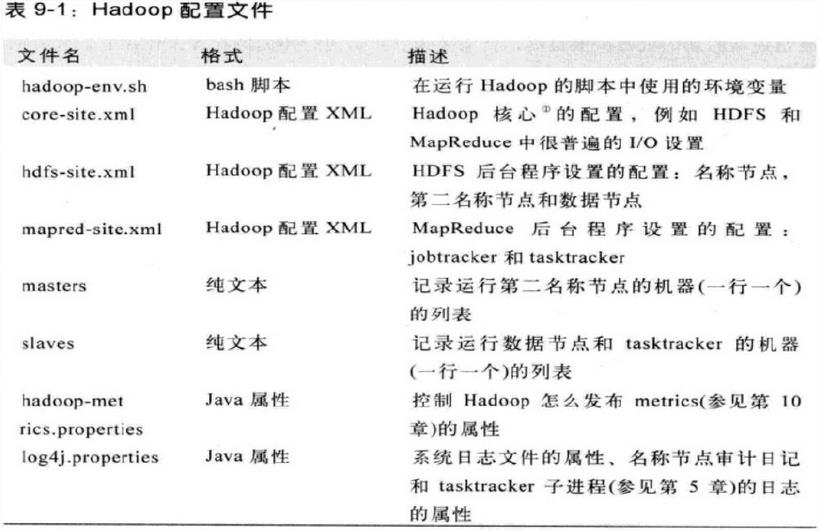

然后主要配置下面5个配置文件:

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml

slave

以上各配置文件的各项参数默认值:

http://hadoop.apache.org/docs/r2.7.1/hadoop-project-dist/hadoop-common/core-default.xml

http://hadoop.apache.org/docs/r2.7.1/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

http://hadoop.apache.org/docs/r2.7.1/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

http://hadoop.apache.org/docs/r2.7.1/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

vim core-site.xml 在<configuration>处添加以下部分

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://dataMaster30:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131702</value>

</property>

</configuration>

vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>dataMaster30:9001</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>512m</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/data/hadoop/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/data/hadoop/hdfs</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>dataMaster30:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>dataMaster30:19888</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>2048</value>

<description>每个Map任务的物理内存限制</description>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>2048</value>

<description>每个Reduce任务的物理内存限制</description>

</property>

</configuration>

vim yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>dataMaster30</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>65366</value>

<discription>每个节点可用内存,单位MB</discription>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

<discription>单个任务可申请最少内存,默认1024MB</discription>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>16384</value>

<discription>单个任务可申请最大内存,默认8192MB</discription>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>16</value>

<discription>cpu</discription>

</property>

</configuration>

vim slave

#localhost

dataSlave31

dataSlave32

dataSlave33

dataSlave34

dataSlave35

完成后,将配置好的Hadoop目录分发到各个slave节点对应位置上。

在Master节点服务器启动hadoop集群,从节点会自动启动,进入hadoop目录

(1)初始化,格式化Hadoop。输入命令,bin/hdfs namenode -format

(2)全部启动sbin/start-all.sh,也可以分开sbin/start-dfs.sh、sbin/start-yarn.sh

(3)停止的话,输入命令,sbin/stop-all.sh

(4)输入命令,jps,可以看到相关进程信息,从而进行验证是否启动成功。

如果输入jps出现process information unavailable提示时,这时可以进于是/tmp目录下,删除名称为hsperfdata_{username}的文件夹,然后重新启动Hadoop即可。

# jps (主节点)

1701 SecondaryNameNode

1459 NameNode

2242 Jps

1907 ResourceManager

# jps (从节点)

4520 Jps

9677 NodeManager

9526 DataNode

这时可以浏览器打开 IP:8088 和 IP:50070 就可以查看集群状态和NameNode信息了

Hadoop Shell命令:

http://blog.csdn.net/wuwenxiang91322/article/details/22166423

http://hadoop.apache.org/docs/r1.0.4/cn/hdfs_shell.html

以上是关于hadoop搭建部署的主要内容,如果未能解决你的问题,请参考以下文章