Android OpenGL20: Model, View, Projection, and Viewport <7>

Posted by 喝醉了的熊猫


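For many beginners, views and projections are very hard to understand, yet this topic is extremely important: without a clear grasp of it, you cannot position a 3D object (its spatial coordinates) or control the viewing angle (a different viewing angle gives a different result). After reading countless blog posts, I found an excellent article: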

http://blog.csdn.net/kesalin/article/details/7168967

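To keep my blog as original as possible, I will not repost it here, so readers really should read the article linked above. It is well illustrated; test its ideas with your own program and everything will become clear. The author writes about iOS apps, but that does not matter: the theory is the same.

Here is a rough summary: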

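Concept 1:

a> : Viewport transform: look at the program code below, which defines vertex coordinates. If you have read many samples, you will have noticed that the x, y and z values are usually set on a scale of 1: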

private float vertexs[]={

0.0f,0.0f,0.0f,

1.0f,0.0f,0.0f,

0.0f,1.0f,0.0f

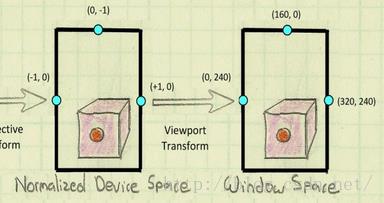

};但是显示在移动设备屏幕上是一个3D图像,但是这个1是如何转换到屏幕的呢?这个1即不代表像素,又没有代表一个比例(比如1:500,1代表占用500像素),却在程序运行后显示一个3D图形.这个地方就是上面博客中提到的:从 Normalized Device Space 到 Window Space 就是 viewport 变换过程:

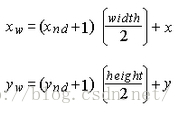

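As shown above, the values set in the program are "coordinates" in Normalized Device Space. To display them on the device screen they must be converted, using this rule:

$$x_w = (x_{nd} + 1)\,\frac{width}{2} + x, \qquad y_w = (y_{nd} + 1)\,\frac{height}{2} + y$$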

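The parameters x, y, width and height in the conversion formula are set by:

`glViewport(x, y, width, height);`

(xw, yw) are the screen (window) coordinates;

(xnd, ynd) is the point after projection and normalization (a point in the Normalized Device Space shown in the figure above);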
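A minimal plain-Java sketch of the mapping that glViewport sets up, useful for checking the formula by hand. It uses no Android APIs; the class and method names are illustrative, and the 1080 × 1920 viewport is an arbitrary example. OpenGL window coordinates start at the lower-left corner of the surface.

```java
// Illustrative sketch of the viewport transform (NDC -> window coordinates).
// ViewportDemo and ndcToWindow are hypothetical names, not Android APIs.
final class ViewportDemo {

    // x, y, width, height are the same values passed to glViewport(x, y, width, height).
    static float[] ndcToWindow(float xnd, float ynd, int x, int y, int width, int height) {
        float xw = (xnd + 1.0f) * (width / 2.0f) + x;
        float yw = (ynd + 1.0f) * (height / 2.0f) + y;
        return new float[] {xw, yw};
    }

    public static void main(String[] args) {
        // NDC (0, 0) is the center of the viewport, (1, 1) its upper-right corner.
        float[] center = ndcToWindow(0.0f, 0.0f, 0, 0, 1080, 1920);
        float[] corner = ndcToWindow(1.0f, 1.0f, 0, 0, 1080, 1920);
        System.out.println(center[0] + ", " + center[1]); // 540.0, 960.0
        System.out.println(corner[0] + ", " + corner[1]); // 1080.0, 1920.0
    }
}
```

So a coordinate of 1 is not a pixel count: whatever lands in the range [-1, 1] after projection is stretched across the viewport's width and height.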

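Concept 2:

b> : Model-view transform: there are two approaches:

1> : Transform the 3D object's position and rotation in space while the observer (often called the camera) stays fixed;

2> : Keep the 3D object's position and rotation fixed and move the observer.

So to view a 3D object from different angles, you can transform either the object's position or orientation, or the observer's position or orientation.

To change the 3D object, apply matrix operations such as translation, rotation and scaling;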

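To change the observer's viewpoint:

`gluLookAt(eyex, eyey, eyez, centerx, centery, centerz, upx, upy, upz);`

eye is the position of the camera/viewer; center is the point the camera or eye focuses on (together with eye it determines the viewing direction); up is the eye's upward direction. Note that up only specifies a direction; its length does not matter. Calling this function sets up the scene to be observed, and the objects in that scene are then processed by OpenGL. In OpenGL the eye is at the origin by default, looking down the negative Z axis (into the screen), with up along the positive Y axis.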
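The sketch below rebuilds, in plain Java, the view matrix that gluLookAt describes (Android's Matrix.setLookAtM uses the same column-major float[16] layout). It also checks the two approaches above against each other: with the camera moved to (0, 0, 5) and looking at the origin, a vertex at the origin ends up at (0, 0, -5) in eye space, which is exactly where translating the object by -5 along Z with the camera at its default position would put it. LookAtDemo, lookAt and multiplyMV are illustrative names, not library APIs.

```java
// Illustrative re-implementation of a look-at view matrix (column-major float[16]).
final class LookAtDemo {

    static float[] lookAt(float eyeX, float eyeY, float eyeZ,
                          float centerX, float centerY, float centerZ,
                          float upX, float upY, float upZ) {
        // f: unit vector from the eye toward the center (viewing direction).
        float fx = centerX - eyeX, fy = centerY - eyeY, fz = centerZ - eyeZ;
        float fl = (float) Math.sqrt(fx * fx + fy * fy + fz * fz);
        fx /= fl; fy /= fl; fz /= fl;
        // s = f x up: the camera's "right" axis.
        float sx = fy * upZ - fz * upY;
        float sy = fz * upX - fx * upZ;
        float sz = fx * upY - fy * upX;
        float sl = (float) Math.sqrt(sx * sx + sy * sy + sz * sz);
        sx /= sl; sy /= sl; sz /= sl;
        // u = s x f: the corrected "up" axis, orthogonal to both s and f.
        float ux = sy * fz - sz * fy;
        float uy = sz * fx - sx * fz;
        float uz = sx * fy - sy * fx;
        // Rows of the rotation are s, u, -f; the last column translates by -eye.
        return new float[] {
            sx, ux, -fx, 0,
            sy, uy, -fy, 0,
            sz, uz, -fz, 0,
            -(sx * eyeX + sy * eyeY + sz * eyeZ),
            -(ux * eyeX + uy * eyeY + uz * eyeZ),
            fx * eyeX + fy * eyeY + fz * eyeZ,
            1
        };
    }

    // result = m * v, with m stored column-major.
    static float[] multiplyMV(float[] m, float[] v) {
        float[] r = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                r[row] += m[k * 4 + row] * v[k];
            }
        }
        return r;
    }

    public static void main(String[] args) {
        // Camera moved to z = +5, looking at the origin; the object stays put.
        float[] view = lookAt(0, 0, 5, 0, 0, 0, 0, 1, 0);
        float[] p = multiplyMV(view, new float[] {0, 0, 0, 1});
        System.out.println(p[0] + ", " + p[1] + ", " + p[2]); // 0.0, 0.0, -5.0
    }
}
```

With the default camera (eye at the origin, looking toward (0, 0, -1), up along +Y) the same function returns the identity matrix, which matches the default described above.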

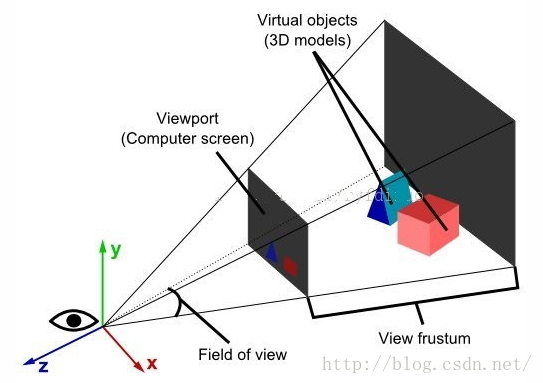

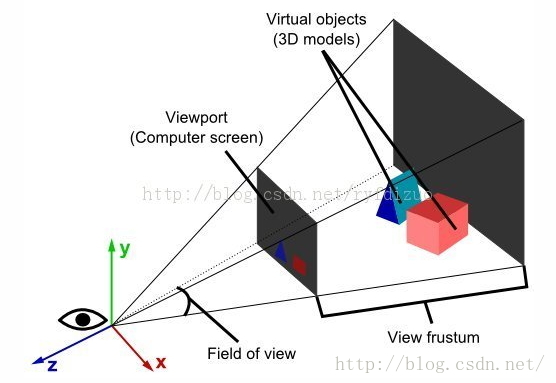

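Concept 3:

c> : Projection transform: its purpose is to determine how objects in 3D space are projected onto a 2D plane to form a 2D image; that image is then rendered to the screen by the viewport transform;

This also comes in two kinds:

1> : Orthographic projection;

2> : Perspective projection;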

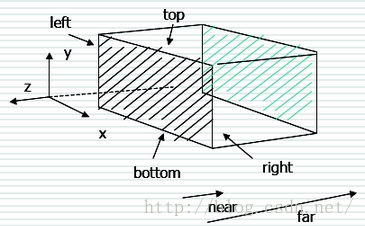

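1> : Orthographic projection: it can be viewed as a special case of perspective projection in which the near and far clipping planes are identical except for their Z position, so objects always keep the same size and do not look smaller even when far away.

This figure is already very good, but one thing is missing that would make it even clearer: the 3D object itself. For the observer to see an object, the object generally has to be inside the box shown above (in many cases the chosen near and far values end up putting the object "outside the box"). In other words, to see a 3D object you first have to place it between the two planes (between the black hatched plane and the blue-green hatched plane in the figure), and, if necessary, scale it so that it can be seen conveniently through the black plane.

Setting up an orthographic projection:

`glOrtho(left, right, bottom, top, zNear, zFar);`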
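Below is a plain-Java sketch of the matrix glOrtho builds (same column-major layout as android.opengl.Matrix.orthoM). It lets you check two statements from the text: x and y do not depend on depth, so an object does not shrink with distance, and a point is visible only if it lies inside the box, i.e. all of its normalized coordinates fall in [-1, 1]. The box (-2, 2, -1, 1, 1, 10) and the helper names are arbitrary choices for illustration.

```java
// Illustrative sketch of an orthographic projection; OrthoDemo, ortho,
// multiplyMV and insideBox are hypothetical helper names.
final class OrthoDemo {

    // Column-major 4x4 matrix with the same layout as glOrtho / Matrix.orthoM.
    static float[] ortho(float left, float right, float bottom, float top,
                         float near, float far) {
        float[] m = new float[16];
        m[0] = 2.0f / (right - left);
        m[5] = 2.0f / (top - bottom);
        m[10] = -2.0f / (far - near);
        m[12] = -(right + left) / (right - left);
        m[13] = -(top + bottom) / (top - bottom);
        m[14] = -(far + near) / (far - near);
        m[15] = 1.0f;
        return m;
    }

    // result = m * v (m column-major, v = {x, y, z, w}).
    static float[] multiplyMV(float[] m, float[] v) {
        float[] r = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                r[row] += m[k * 4 + row] * v[k];
            }
        }
        return r;
    }

    // w stays 1 for an orthographic projection, so clip coordinates are already NDC.
    static boolean insideBox(float[] ndc) {
        return Math.abs(ndc[0]) <= 1 && Math.abs(ndc[1]) <= 1 && Math.abs(ndc[2]) <= 1;
    }

    public static void main(String[] args) {
        float[] proj = ortho(-2, 2, -1, 1, 1, 10);
        float[] closePt = multiplyMV(proj, new float[] {1, 0.5f, -2, 1});
        float[] farPt = multiplyMV(proj, new float[] {1, 0.5f, -8, 1});
        float[] beyondFar = multiplyMV(proj, new float[] {1, 0.5f, -12, 1});
        System.out.println(closePt[0] + " " + farPt[0]); // 0.5 0.5 (same size at any depth)
        System.out.println(insideBox(farPt) + " " + insideBox(beyondFar)); // true false
    }
}
```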

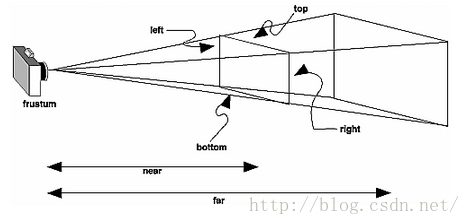

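2> : Perspective projection: depending on the library used, there are two variants:

<I> : The model provided by OpenGL itself (OpenGL ES 1.x offers it as glFrustumf; OpenGL ES 2.0 has no built-in equivalent):

`glFrustum(left, right, bottom, top, zNear, zFar);` sets up this model.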
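For comparison, a plain-Java sketch of the glFrustum matrix (same column-major layout as android.opengl.Matrix.frustumM, which the renderer below calls). Unlike the orthographic case, the last row copies -z into w, and dividing by w is what makes distant objects smaller. The frustum (-1, 1, -1, 1, 1, 10) corresponds to the renderer's values on a square surface; the helper names are illustrative.

```java
// Illustrative sketch of a perspective (frustum) projection plus the perspective divide.
final class FrustumDemo {

    // Column-major 4x4 matrix with the same layout as glFrustum / Matrix.frustumM.
    static float[] frustum(float left, float right, float bottom, float top,
                           float near, float far) {
        float[] m = new float[16];
        m[0] = 2.0f * near / (right - left);
        m[5] = 2.0f * near / (top - bottom);
        m[8] = (right + left) / (right - left);
        m[9] = (top + bottom) / (top - bottom);
        m[10] = -(far + near) / (far - near);
        m[11] = -1.0f;                       // w_clip = -z_eye
        m[14] = -2.0f * far * near / (far - near);
        return m;
    }

    // result = m * v (m column-major).
    static float[] multiplyMV(float[] m, float[] v) {
        float[] r = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                r[row] += m[k * 4 + row] * v[k];
            }
        }
        return r;
    }

    // Perspective divide: clip coordinates -> normalized device coordinates.
    static float[] toNdc(float[] clip) {
        return new float[] {clip[0] / clip[3], clip[1] / clip[3], clip[2] / clip[3]};
    }

    public static void main(String[] args) {
        float[] proj = frustum(-1, 1, -1, 1, 1, 10);
        float[] closePt = toNdc(multiplyMV(proj, new float[] {1, 0, -2, 1}));
        float[] distant = toNdc(multiplyMV(proj, new float[] {1, 0, -8, 1}));
        System.out.println(closePt[0] + " " + distant[0]); // 0.5 0.125
    }
}
```

The same x = 1 lands at half the distance from the center at depth 2 and at one eighth at depth 8, while the near plane maps to NDC z = -1 and the far plane to +1.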

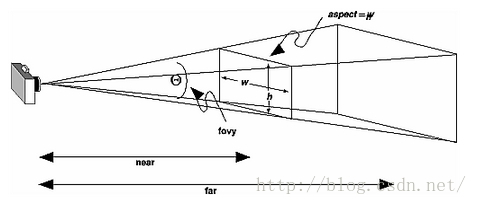

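<II> : The model from the GLU helper library is as follows:

Note that this model looks the same as the one above, but its labels are different.

`gluPerspective(fovy, aspect, zNear, zFar);` fovy is the camera's viewing angle in the y direction, in degrees (between 0 and 180); aspect is the width-to-height ratio of the near clipping plane, aspect = w/h; zNear and zFar are the distances from the camera/viewer to the near and far clipping planes (both must be positive). These four parameters also define a view frustum.

In OpenGL ES 2.0 we have to implement this function ourselves as well. From the trigonometric relation tan(fovy/2) = (h/2) / zNear we get the near-plane height h = 2 × zNear × tan(fovy/2), and then its width w = h × aspect. For a symmetric frustum this gives left = -w/2, right = w/2, bottom = -h/2 and top = h/2, which together with zNear and zFar are the six parameters to plug into the frustum formula introduced earlier.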
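The conversion just described, as a small plain-Java sketch (PerspectiveDemo and nearPlaneBounds are illustrative names). It returns the near-plane bounds that would then be passed, together with zNear and zFar, to Matrix.frustumM.

```java
// Sketch of the gluPerspective -> glFrustum parameter conversion.
final class PerspectiveDemo {

    // Returns {left, right, bottom, top} of the near clipping plane.
    // fovyDegrees is the full vertical viewing angle, as in gluPerspective.
    static float[] nearPlaneBounds(float fovyDegrees, float aspect, float zNear) {
        // tan(fovy / 2) = (h / 2) / zNear  =>  h = 2 * zNear * tan(fovy / 2)
        float h = 2.0f * zNear * (float) Math.tan(Math.toRadians(fovyDegrees) / 2.0);
        float w = h * aspect; // aspect = w / h
        return new float[] {-w / 2.0f, w / 2.0f, -h / 2.0f, h / 2.0f};
    }

    public static void main(String[] args) {
        // 90-degree field of view, square viewport, near plane at distance 1.
        float[] b = nearPlaneBounds(90.0f, 1.0f, 1.0f);
        System.out.printf("left=%.3f right=%.3f bottom=%.3f top=%.3f%n", b[0], b[1], b[2], b[3]);
        // left=-1.000 right=1.000 bottom=-1.000 top=1.000
    }
}
```

In a renderer the result would feed `Matrix.frustumM(mProjectionMatrix, 0, b[0], b[1], b[2], b[3], zNear, zFar)`. Android also ships `Matrix.perspectiveM` (API level 14 and later), which takes fovy, aspect, zNear and zFar directly.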

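Two supplementary figures:

Conclusion:

Note:

When writing OpenGL code, the order from first to last is: set the viewport (viewport transform), set the projection transform, set the view transform, set the model transform, and then draw the object in its local coordinate space. In the explanation above, for ease of understanding, the order was described the other way around: an object starts in its local coordinate space, is transformed into world space, then into camera (view) space, then into projection space. Since the model transform covers the step from local space to world space, we think of 3D transforms in one order while the code is written in the reverse order. This is easy to see once you think in terms of matrices multiplying the vertex from the left: in P × V × M × v, the matrix written closest to the vertex (M) is applied first.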
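A small plain-Java sketch of why the order matters. In fixed-function OpenGL, each call such as glTranslatef or glRotatef multiplies the current matrix on the right, so the call written last ends up closest to the vertex and acts on it first. The helpers below are illustrative; multiplyMM follows the same convention as android.opengl.Matrix.multiplyMM (result = lhs × rhs, column-major storage).

```java
// Sketch: matrix products do not commute, and the rightmost matrix acts first.
final class OrderDemo {

    // result = lhs x rhs, both column-major.
    static float[] multiplyMM(float[] lhs, float[] rhs) {
        float[] r = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0;
                for (int k = 0; k < 4; k++) {
                    sum += lhs[k * 4 + row] * rhs[col * 4 + k];
                }
                r[col * 4 + row] = sum;
            }
        }
        return r;
    }

    // result = m * v (m column-major).
    static float[] multiplyMV(float[] m, float[] v) {
        float[] r = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                r[row] += m[k * 4 + row] * v[k];
            }
        }
        return r;
    }

    static float[] translation(float x, float y, float z) {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = m[15] = 1;
        m[12] = x;
        m[13] = y;
        m[14] = z;
        return m;
    }

    // 90-degree rotation about Z: the X axis turns into the Y axis.
    static float[] rotationZ90() {
        float[] m = new float[16];
        m[1] = 1;   // column 0 (image of X) = (0, 1, 0)
        m[4] = -1;  // column 1 (image of Y) = (-1, 0, 0)
        m[10] = m[15] = 1;
        return m;
    }

    public static void main(String[] args) {
        float[] t = translation(2, 0, 0);
        float[] r = rotationZ90();
        float[] p = {1, 0, 0, 1};
        // T x R: the rotation (closest to p) is applied first, then the translation.
        float[] a = multiplyMV(multiplyMM(t, r), p);
        // R x T: the translation is applied first, then the rotation.
        float[] b = multiplyMV(multiplyMM(r, t), p);
        System.out.println(a[0] + ", " + a[1]); // 2.0, 1.0
        System.out.println(b[0] + ", " + b[1]); // 0.0, 3.0
    }
}
```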

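The most important part: how do the models above map onto a program, and how do they show up in code?

We usually define:

```java
private float[] mMVPMatrix = new float[16];
private float[] mViewMatrix = new float[16];
private float[] mModelMatrix = new float[16];
private float[] mProjectionMatrix = new float[16];
```

<1> : mViewMatrix holds a 4×4 matrix describing the observer's eye position (the camera):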

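```java
Matrix.setLookAtM(mViewMatrix, 0, eyeX, eyeY, eyeZ, lookX, lookY, lookZ, upX, upY, upZ);
```

The purpose of this method was introduced above; here the resulting matrix is written into mViewMatrix. It gives the camera's base transform: to adjust the camera later, you combine further transforms with this base matrix to obtain the final camera position.

<2> : mProjectionMatrix holds the perspective projection: we build a perspective model as follows and store it in this matrix as the base projection.

```java
Matrix.frustumM(mProjectionMatrix, 0, left, right, bottom, top, near, far);
```

After the perspective is set, the result is returned in mProjectionMatrix.

<3> : mMVPMatrix combines everything built so far: where the object is (model), where the observer stands and looks (view), and the viewing volume (projection). It is obtained by multiplying projection × view × model: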

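```java
Matrix.setIdentityM(mModelMatrix, 0);
Matrix.translateM(mModelMatrix, 0, 0.0f, 0.0f, -5.0f);
// mMVMatrix is one more float[16] scratch array: Matrix.multiplyMM's result is
// undefined if it overlaps lhs or rhs, so mMVPMatrix is not reused as an input.
Matrix.multiplyMM(mMVMatrix, 0, mViewMatrix, 0, mModelMatrix, 0);      // view × model
Matrix.multiplyMM(mMVPMatrix, 0, mProjectionMatrix, 0, mMVMatrix, 0);  // projection × (view × model)
```

With these steps the perspective viewing model is in place. Multiplying an object's coordinates by mMVPMatrix (done in the vertex shader) positions the object within this model for display. This only provides the basis for display: the object does not necessarily fall inside the viewing frustum and may lie outside it, so the matrix is a frame of reference rather than a guarantee of visibility.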
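To check the numbers in these steps without a device, the sketch below repeats them in plain Java. Like android.opengl.Matrix it stores matrices as column-major float[16] (the element at row r, column c lives at index c * 4 + r), and its multiplyMM computes lhs × rhs. It assumes a square surface (ratio = 1) and uses the identity as the view matrix, which is what setLookAtM returns for the eye at the origin looking down -Z, as in the renderer below. The helper names are illustrative. The triangle vertex (2, 0, 0) ends up with clip-space w = 5 (its distance in front of the camera) and NDC x = 2 / 5 = 0.4.

```java
// Plain-Java re-creation of the model -> view -> projection chain (illustrative).
final class MvpDemo {

    static float[] identity() {
        float[] m = new float[16];
        m[0] = m[5] = m[10] = m[15] = 1;
        return m;
    }

    // Same result as setIdentityM followed by translateM.
    static float[] translation(float x, float y, float z) {
        float[] m = identity();
        m[12] = x;
        m[13] = y;
        m[14] = z;
        return m;
    }

    // Same layout as Matrix.frustumM.
    static float[] frustum(float l, float r, float b, float t, float n, float f) {
        float[] m = new float[16];
        m[0] = 2 * n / (r - l);
        m[5] = 2 * n / (t - b);
        m[8] = (r + l) / (r - l);
        m[9] = (t + b) / (t - b);
        m[10] = -(f + n) / (f - n);
        m[11] = -1;
        m[14] = -2 * f * n / (f - n);
        return m;
    }

    // result = lhs x rhs, both column-major; a fresh array, so no aliasing.
    static float[] multiplyMM(float[] lhs, float[] rhs) {
        float[] res = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0;
                for (int k = 0; k < 4; k++) {
                    sum += lhs[k * 4 + row] * rhs[col * 4 + k];
                }
                res[col * 4 + row] = sum;
            }
        }
        return res;
    }

    static float[] multiplyMV(float[] m, float[] v) {
        float[] res = new float[4];
        for (int row = 0; row < 4; row++) {
            for (int k = 0; k < 4; k++) {
                res[row] += m[k * 4 + row] * v[k];
            }
        }
        return res;
    }

    public static void main(String[] args) {
        float[] model = translation(0, 0, -5);
        float[] view = identity();
        float[] projection = frustum(-1, 1, -1, 1, 1, 10);
        // MVP = projection x (view x model), matching the two multiplyMM calls.
        float[] mvp = multiplyMM(projection, multiplyMM(view, model));
        float[] clip = multiplyMV(mvp, new float[] {2, 0, 0, 1});
        System.out.println("w=" + clip[3] + " ndcX=" + clip[0] / clip[3]); // w=5.0 ndcX=0.4
    }
}
```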

Based on this, you can build an Android demo (an Android Studio project) to test it:

The code is as follows:

```java
package org.pumpkin.pumpkintutor2gsls;

import android.os.Bundle;
import android.support.v7.app.AppCompatActivity;

import org.pumpkin.pumpkintutor2gsls.tutor2.triangle1.TriangleSurfaceView1;

public class PumpKinMainActivity extends AppCompatActivity {

    private TriangleSurfaceView1 mGLView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // Other parts of this series use CubeSurfaceView or TriangleSurfaceView here instead.
        mGLView = new TriangleSurfaceView1(this);
        setContentView(mGLView);
    }

    // A GLSurfaceView must be told when the activity pauses and resumes.
    @Override
    protected void onPause() {
        super.onPause();
        mGLView.onPause();
    }

    @Override
    protected void onResume() {
        super.onResume();
        mGLView.onResume();
    }
}
```

```java
package org.pumpkin.pumpkintutor2gsls.tutor2.triangle1;

import android.content.Context;
import android.opengl.GLSurfaceView;

/**
 * Project name : PumpKinTutor2Gsls
 * Created by zhibao.liu on 2016/5/18.
 * Time : 11:18
 * Email warden_sprite@foxmail.com
 * Action : durian
 */
public class TriangleSurfaceView1 extends GLSurfaceView {

    public TriangleSurfaceView1(Context context) {
        super(context);
        // Request an OpenGL ES 2.0 context.
        setEGLContextClientVersion(2);
        // Request 8 bits each for red, green, blue and alpha, a 16-bit depth buffer
        // (the renderer enables GL_DEPTH_TEST) and no stencil buffer. The author added
        // this to fix a "No Config chosen" error; it must be called before setRenderer().
        setEGLConfigChooser(8, 8, 8, 8, 16, 0);
        setRenderer(new TriangleRenderer1(context));
        // Render the view only when there is a change in the drawing data
        setRenderMode(GLSurfaceView.RENDERMODE_WHEN_DIRTY);
    }
}
```

```java
package org.pumpkin.pumpkintutor2gsls.tutor2.triangle1;

import android.content.Context;
import android.opengl.GLES20;
import android.opengl.GLSurfaceView;
import android.opengl.Matrix;

import org.pumpkin.pumpkintutor2gsls.tutor2.coord.Coord;

import javax.microedition.khronos.egl.EGLConfig;
import javax.microedition.khronos.opengles.GL10;

/**
 * Project name : PumpKinTutor2Gsls
 * Created by zhibao.liu on 2016/5/18.
 * Time : 11:17
 * Email warden_sprite@foxmail.com
 * Action : durian
 */
public class TriangleRenderer1 implements GLSurfaceView.Renderer {

    private float[] mMVPMatrix = new float[16];
    // Scratch array for view × model: Matrix.multiplyMM's result must not overlap its inputs.
    private float[] mMVMatrix = new float[16];
    private float[] mViewMatrix = new float[16];
    private float[] mModelMatrix = new float[16];
    private float[] mProjectionMatrix = new float[16];

    private Context mContext;
    private Triangle1 triangle1;
    private Coord coord;

    public TriangleRenderer1(Context context) {
        mContext = context;
    }

    @Override
    public void onSurfaceCreated(GL10 gl, EGLConfig config) {
        GLES20.glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
        GLES20.glEnable(GLES20.GL_CULL_FACE);
        GLES20.glEnable(GLES20.GL_DEPTH_TEST);

        triangle1 = new Triangle1(mContext);
        triangle1.loadTexture();
        coord = new Coord(mContext);

        // Place the eye at the origin.
        final float eyeX = 0.0f;
        final float eyeY = 0.0f;
        final float eyeZ = 0.0f;

        // Look toward the negative Z axis (into the screen).
        final float lookX = 0.0f;
        final float lookY = 0.0f;
        final float lookZ = -1.0f;

        // Set our up vector. This is where our head would be pointing were we holding the camera.
        final float upX = 0.0f;
        final float upY = 1.0f;
        final float upZ = 0.0f;

        // Set the view matrix. This matrix can be said to represent the camera position.
        // NOTE: In OpenGL 1, a ModelView matrix is used, which is a combination of a model and
        // view matrix. In OpenGL 2, we can keep track of these matrices separately if we choose.
        Matrix.setLookAtM(mViewMatrix, 0, eyeX, eyeY, eyeZ, lookX, lookY, lookZ, upX, upY, upZ);
    }

    @Override
    public void onSurfaceChanged(GL10 gl, int width, int height) {
        GLES20.glViewport(0, 0, width, height);

        // Match the near plane's aspect ratio to the surface to avoid stretching.
        final float ratio = (float) width / height;
        final float left = -ratio;
        final float right = ratio;
        final float bottom = -1.0f;
        final float top = 1.0f;
        final float near = 1.0f;
        final float far = 10.0f;

        Matrix.frustumM(mProjectionMatrix, 0, left, right, bottom, top, near, far);
    }

    @Override
    public void onDrawFrame(GL10 gl) {
        GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT | GLES20.GL_DEPTH_BUFFER_BIT);

        // Model: move the object 5 units into the screen, between near (1) and far (10).
        Matrix.setIdentityM(mModelMatrix, 0);
        Matrix.translateM(mModelMatrix, 0, 0.0f, 0.0f, -5.0f);

        // MVP = projection × view × model
        Matrix.multiplyMM(mMVMatrix, 0, mViewMatrix, 0, mModelMatrix, 0);
        Matrix.multiplyMM(mMVPMatrix, 0, mProjectionMatrix, 0, mMVMatrix, 0);

        triangle1.draw(mMVPMatrix);
        coord.draw(mMVPMatrix);
    }
}
```

In the renderer above, change the setLookAtM and frustumM parameters and run it again, and you will see the viewpoint change.
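One way to experiment, sketched under assumptions: compute the eye position on a horizontal circle around the triangle (which the renderer translates to (0, 0, -5)) and pass it to setLookAtM with the center set to that point. OrbitCamera and orbitEye are hypothetical helpers written in plain Java so they run anywhere; the radius of 5 keeps the triangle inside the renderer's near/far range of 1 to 10. Because the renderer enables GL_CULL_FACE (which culls back faces by default), the triangle would be expected to disappear when viewed from behind, around 180 degrees, while the axis lines stay visible.

```java
// Hypothetical helper for experimenting with setLookAtM: the eye moves on a
// horizontal circle (constant Y) around a target point.
final class OrbitCamera {

    static float[] orbitEye(float cx, float cy, float cz, float radius, float angleDegrees) {
        double a = Math.toRadians(angleDegrees);
        return new float[] {
            cx + radius * (float) Math.sin(a),
            cy,
            cz + radius * (float) Math.cos(a)
        };
    }

    public static void main(String[] args) {
        for (int angle = 0; angle < 360; angle += 45) {
            float[] eye = orbitEye(0.0f, 0.0f, -5.0f, 5.0f, angle);
            // In the renderer (assumption): Matrix.setLookAtM(mViewMatrix, 0,
            //     eye[0], eye[1], eye[2], 0f, 0f, -5f, 0f, 1f, 0f);
            System.out.printf("angle=%3d eye=(%.2f, %.2f, %.2f)%n", angle, eye[0], eye[1], eye[2]);
        }
    }
}
```

At 0 degrees the eye sits at the origin, which reproduces the renderer's current camera.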

```java
package org.pumpkin.pumpkintutor2gsls.tutor2.triangle1;

import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.opengl.GLES20;
import android.opengl.GLUtils;

import org.pumpkin.pumpkintutor2gsls.R;
import org.pumpkin.pumpkintutor2gsls.shader.PumpKinShader;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/**
 * Project name : PumpKinTutor2Gsls
 * Created by zhibao.liu on 2016/5/18.
 * Time : 11:17
 * Email warden_sprite@foxmail.com
 * Action : durian
 */
public class Triangle1 {

    private FloatBuffer vertexsBuffer;
    private FloatBuffer colorsBuffer;
    private FloatBuffer texturesBuffer;

    private int mMVPMatrixHandle;
    private int mPositionHandle;
    private int mColorHandle;
    private int mTextureCoordsHandle;
    private int mProgram;

    private Context mContext;
    private Bitmap bitmap;
    private int[] textures = new int[1];

    private float vertexs[] = {
            0.0f, 0.0f, 0.0f,
            2.0f, 0.0f, 0.0f,
            0.0f, 2.0f, 0.0f
    };

    private float colors[] = {
            1.0f, 0.0f, 0.0f, 1.0f,
            0.0f, 1.0f, 0.0f, 1.0f,
            0.0f, 0.0f, 1.0f, 1.0f
    };

    private float textureCoords[] = {
            /*0,0,
            1,0,
            0,1*/
            0, 1,
            1, 0,
            0, 0
    };

    public Triangle1(Context context) {
        mContext = context;

        // Copy the Java arrays into direct, native-order buffers that OpenGL can read.
        ByteBuffer vbb = ByteBuffer.allocateDirect(vertexs.length * 4);
        vbb.order(ByteOrder.nativeOrder());
        vertexsBuffer = vbb.asFloatBuffer();
        vertexsBuffer.put(vertexs);
        vertexsBuffer.position(0);

        ByteBuffer cbb = ByteBuffer.allocateDirect(colors.length * 4);
        cbb.order(ByteOrder.nativeOrder());
        colorsBuffer = cbb.asFloatBuffer();
        colorsBuffer.put(colors);
        colorsBuffer.position(0);

        ByteBuffer tbb = ByteBuffer.allocateDirect(textureCoords.length * 4);
        tbb.order(ByteOrder.nativeOrder());
        texturesBuffer = tbb.asFloatBuffer();
        texturesBuffer.put(textureCoords);
        texturesBuffer.position(0);

        String vshaderCode = PumpKinShader.loadGsls(mContext, 0);
        String fshaderCode = PumpKinShader.loadGsls(mContext, 1);

        // Compile the vertex shader.
        int mvShaderHandle = GLES20.glCreateShader(GLES20.GL_VERTEX_SHADER);
        if (mvShaderHandle != 0) {
            GLES20.glShaderSource(mvShaderHandle, vshaderCode);
            GLES20.glCompileShader(mvShaderHandle);
            int[] status = new int[1];
            GLES20.glGetShaderiv(mvShaderHandle, GLES20.GL_COMPILE_STATUS, status, 0);
            if (status[0] == 0) {
                GLES20.glDeleteShader(mvShaderHandle);
                mvShaderHandle = 0;
            }
        }
        if (mvShaderHandle == 0) {
            throw new RuntimeException("failed to create vertex shader !");
        }

        // Compile the fragment shader.
        int mfShaderHandle = GLES20.glCreateShader(GLES20.GL_FRAGMENT_SHADER);
        if (mfShaderHandle != 0) {
            GLES20.glShaderSource(mfShaderHandle, fshaderCode);
            GLES20.glCompileShader(mfShaderHandle);
            int[] status = new int[1];
            GLES20.glGetShaderiv(mfShaderHandle, GLES20.GL_COMPILE_STATUS, status, 0);
            if (status[0] == 0) {
                GLES20.glDeleteShader(mfShaderHandle);
                mfShaderHandle = 0;
            }
        }
        if (mfShaderHandle == 0) {
            throw new RuntimeException("failed to create fragment shader !");
        }

        // Link both shaders into a program.
        mProgram = GLES20.glCreateProgram();
        if (mProgram != 0) {
            GLES20.glAttachShader(mProgram, mvShaderHandle);
            GLES20.glAttachShader(mProgram, mfShaderHandle);
            GLES20.glLinkProgram(mProgram);
            int[] linkstatus = new int[1];
            GLES20.glGetProgramiv(mProgram, GLES20.GL_LINK_STATUS, linkstatus, 0);
            if (linkstatus[0] == 0) {
                GLES20.glDeleteProgram(mProgram);
                mProgram = 0;
            }
        }
        if (mProgram == 0) {
            throw new RuntimeException("failed to create program !");
        }
    }

    public void draw(float[] mvpmatrix) {
        GLES20.glUseProgram(mProgram);

        mPositionHandle = GLES20.glGetAttribLocation(mProgram, "a_Position");
        GLES20.glVertexAttribPointer(mPositionHandle, 3, GLES20.GL_FLOAT, false, 0, vertexsBuffer);
        GLES20.glEnableVertexAttribArray(mPositionHandle);

        mColorHandle = GLES20.glGetAttribLocation(mProgram, "a_Color");
        GLES20.glVertexAttribPointer(mColorHandle, 4, GLES20.GL_FLOAT, false, 0, colorsBuffer);
        GLES20.glEnableVertexAttribArray(mColorHandle);

        mTextureCoordsHandle = GLES20.glGetAttribLocation(mProgram, "a_inputTextureCoordinate");
        GLES20.glVertexAttribPointer(mTextureCoordsHandle, 2, GLES20.GL_FLOAT, false, 0, texturesBuffer);
        GLES20.glEnableVertexAttribArray(mTextureCoordsHandle);

        // Bind the texture on every draw: this method unbinds it at the end, so the
        // binding made in loadTexture() would only be valid for the first frame.
        GLES20.glActiveTexture(GLES20.GL_TEXTURE0);
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textures[0]);
        GLES20.glUniform1i(GLES20.glGetUniformLocation(mProgram, "inputImageTexture"), 0);

        mMVPMatrixHandle = GLES20.glGetUniformLocation(mProgram, "u_MVPMatrix");
        PumpKinShader.checkGLError("glGetUniformLocation");
        GLES20.glUniformMatrix4fv(mMVPMatrixHandle, 1, false, mvpmatrix, 0);
        PumpKinShader.checkGLError("glUniformMatrix4fv");

        GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 3);

        GLES20.glDisableVertexAttribArray(mPositionHandle);
        GLES20.glDisableVertexAttribArray(mColorHandle);
        GLES20.glDisableVertexAttribArray(mTextureCoordsHandle);
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
        GLES20.glDisable(GLES20.GL_BLEND);
    }

    public void loadTexture() {
        GLES20.glGenTextures(1, textures, 0);
        GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textures[0]);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE);
        GLES20.glTexParameterf(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);

        bitmap = BitmapFactory.decodeResource(mContext.getResources(), R.drawable.src);
        GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);
        // The pixels have been uploaded to the texture; the Bitmap copy is no longer needed.
        bitmap.recycle();
    }
}
```

Helper class:

```java
package org.pumpkin.pumpkintutor2gsls.shader;

import android.content.Context;
import android.opengl.GLES20;
import android.util.Log;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

/**
 * Project name : PumpKinBasicGLSL
 * Created by zhibao.liu on 2016/5/11.
 * Time : 14:26
 * Email warden_sprite@foxmail.com
 * Action : durian
 */
public class PumpKinShader {

    private final static String TAG = "PumpKinShader";
    private static int GLESVersion = 20;

    public static void setVersion(int version) {
        switch (version) {
            case 20:
                GLESVersion = 20;
                break;
            case 30:
                GLESVersion = 30;
                break;
            default:
                GLESVersion = 20;
                break;
        }
    }

    // Loads a shader source file from the assets folder.
    public static String loadGsls(Context context, int type) {
        String shadercode = "";
        String shaderfilename;
        switch (type) {
            case 0:
                shaderfilename = "vshader.glsl";
                break;
            case 1:
                shaderfilename = "fshader.glsl";
                break;
            case 2:
                shaderfilename = "tvshader.glsl";
                break;
            case 3:
                shaderfilename = "tfshader.glsl";
                break;
            case 4:
                shaderfilename = "coordvshader.glsl";
                break;
            case 5:
                shaderfilename = "coordfshader.glsl";
                break;
            default:
                throw new IllegalArgumentException("unknown shader type : " + type);
        }
        InputStream is = null;
        try {
            is = context.getAssets().open(shaderfilename);
            // available() does not guarantee the full length, so read until end of stream.
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = is.read(chunk)) != -1) {
                out.write(chunk, 0, n);
            }
            shadercode = out.toString("UTF-8");
        } catch (IOException e) {
            Log.e(TAG, "failed to read " + shaderfilename, e);
        } finally {
            if (is != null) {
                try {
                    is.close();
                } catch (IOException ignored) {
                    // Nothing useful to do if closing fails.
                }
            }
        }
        Log.i(TAG, "shadercode : " + shadercode);
        return shadercode;
    }

    public static int loadShader(int type, String shadercode) {
        int shader = GLES20.glCreateShader(type);
        GLES20.glShaderSource(shader, shadercode);
        GLES20.glCompileShader(shader);
        return shader;
    }

    // Throws if the previous GL call left an error flag set.
    public static void checkGLError(String glOperation) {
        int error = GLES20.glGetError();
        if (error != GLES20.GL_NO_ERROR) {
            throw new RuntimeException(glOperation + " : glError " + error);
        }
    }
}
```

Also add a display of the coordinate axes:

```java
package org.pumpkin.pumpkintutor2gsls.tutor2.coord;

import android.content.Context;
import android.opengl.GLES20;

import org.pumpkin.pumpkintutor2gsls.shader.PumpKinShader;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/**
 * Project name : PumpKinTutor2Gsls
 * Created by zhibao.liu on 2016/5/18.
 * Time : 15:36
 * Email warden_sprite@foxmail.com
 * Action : durian
 */
public class Coord {

    private FloatBuffer vertexsBuffer;
    private FloatBuffer colorsBuffer;

    private int mPositionHandle;
    private int mColorHandle;
    private int mMVPMatrixHandle;
    private int mProgram;

    private Context mContext;

    // Three line segments from the origin: X axis (red), Y axis (green), Z axis (blue).
    private float[] vertexs = {
            0, 0, 0,
            5, 0, 0,
            0, 0, 0,
            0, 5, 0,
            0, 0, 0,
            0, 0, 5
    };

    private float[] colors = {
            1.0f, 0.0f, 0.0f, 1.0f,
            1.0f, 0.0f, 0.0f, 1.0f,
            0.0f, 1.0f, 0.0f, 1.0f,
            0.0f, 1.0f, 0.0f, 1.0f,
            0.0f, 0.0f, 1.0f, 1.0f,
            0.0f, 0.0f, 1.0f, 1.0f
    };

    public Coord(Context context) {
        mContext = context;

        ByteBuffer vbb = ByteBuffer.allocateDirect(vertexs.length * 4);
        vbb.order(ByteOrder.nativeOrder());
        vertexsBuffer = vbb.asFloatBuffer();
        vertexsBuffer.put(vertexs);
        vertexsBuffer.position(0);

        ByteBuffer cbb = ByteBuffer.allocateDirect(colors.length * 4);
        cbb.order(ByteOrder.nativeOrder());
        colorsBuffer = cbb.asFloatBuffer();
        colorsBuffer.put(colors);
        colorsBuffer.position(0);

        String vshaderCode = PumpKinShader.loadGsls(mContext, 4);
        String fshaderCode = PumpKinShader.loadGsls(mContext, 5);

        int vshaderHandle = GLES20.glCreateShader(GLES20.GL_VERTEX_SHADER);
        if (vshaderHandle != 0) {
            GLES20.glShaderSource(vshaderHandle, vshaderCode);
            GLES20.glCompileShader(vshaderHandle);
            int[] status = new int[1];
            GLES20.glGetShaderiv(vshaderHandle, GLES20.GL_COMPILE_STATUS, status, 0);
            if (status[0] == 0) {
                GLES20.glDeleteShader(vshaderHandle);
                vshaderHandle = 0;
            }
        }
        // Fail early if the vertex shader did not compile.
        if (vshaderHandle == 0) {
            throw new RuntimeException("failed to create vertex shader !");
        }

        int fshaderHandle = GLES20.glCreateShader(GLES20.GL_FRAGMENT_SHADER);
        if (fshaderHandle != 0) {
            GLES20.glShaderSource(fshaderHandle, fshaderCode);
            GLES20.glCompileShader(fshaderHandle);
            int[] status = new int[1];
            GLES20.glGetShaderiv(fshaderHandle, GLES20.GL_COMPILE_STATUS, status, 0);
            if (status[0] == 0) {
                GLES20.glDeleteShader(fshaderHandle);
                fshaderHandle = 0;
            }
        }
        if (fshaderHandle == 0) {
            throw new RuntimeException("failed to create frag shader !");
        }

        mProgram = GLES20.glCreateProgram();
        if (mProgram != 0) {
            GLES20.glAttachShader(mProgram, vshaderHandle);
            GLES20.glAttachShader(mProgram, fshaderHandle);
            GLES20.glLinkProgram(mProgram);
            int[] linkstatus = new int[1];
            GLES20.glGetProgramiv(mProgram, GLES20.GL_LINK_STATUS, linkstatus, 0);
            if (linkstatus[0] == 0) {
                GLES20.glDeleteProgram(mProgram);
                mProgram = 0;
            }
        }
        if (mProgram == 0) {
            throw new RuntimeException("failed to create program !");
        }
    }

    public void draw(float[] mvpMatrix) {
        GLES20.glUseProgram(mProgram);

        mPositionHandle = GLES20.glGetAttribLocation(mProgram, "a_Position");
        GLES20.glVertexAttribPointer(mPositionHandle, 3, GLES20.GL_FLOAT, false, 0, vertexsBuffer);
        GLES20.glEnableVertexAttribArray(mPositionHandle);

        mColorHandle = GLES20.glGetAttribLocation(mProgram, "a_Color");
        GLES20.glVertexAttribPointer(mColorHandle, 4, GLES20.GL_FLOAT, false, 0, colorsBuffer);
        GLES20.glEnableVertexAttribArray(mColorHandle);

        mMVPMatrixHandle = GLES20.glGetUniformLocation(mProgram, "u_MvpMatrix");
        PumpKinShader.checkGLError("glGetUniformLocation");
        GLES20.glUniformMatrix4fv(mMVPMatrixHandle, 1, false, mvpMatrix, 0);
        PumpKinShader.checkGLError("glUniformMatrix4fv");

        // Every pair of vertices forms one line segment.
        GLES20.glDrawArrays(GLES20.GL_LINES, 0, vertexs.length / 3);

        GLES20.glDisableVertexAttribArray(mColorHandle);
        GLES20.glDisableVertexAttribArray(mPositionHandle);
    }
}
```

Here are the GLSL shaders (placed in the assets folder, where PumpKinShader.loadGsls reads them):

vshader.glsl:

```glsl
uniform mat4 u_MVPMatrix;
uniform vec4 u_Color;

attribute vec4 a_Position;
attribute vec4 a_Color;
attribute vec4 a_inputTextureCoordinate;

varying vec2 textureCoordinate;
varying vec4 v_Color;

void main() {
    // Clip-space position = MVP matrix × object-space position.
    gl_Position = u_MVPMatrix * a_Position;
    v_Color = a_Color;
    textureCoordinate = a_inputTextureCoordinate.xy;
}
```

fshader.glsl:

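```glsl
precision mediump float;

varying vec4 v_Color;
// highp in fragment shaders is optional in OpenGL ES 2.0; switch to mediump
// if this fails to compile on a device without high-precision support.
varying highp vec2 textureCoordinate;

uniform sampler2D inputImageTexture;

void main() {
    // Modulate the interpolated vertex color with the texture sample.
    gl_FragColor = v_Color * texture2D(inputImageTexture, textureCoordinate);
}
```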

The GLSL shaders for the coordinate axes:

coordvshader.glsl:

```glsl
uniform mat4 u_MvpMatrix;

attribute vec4 a_Position;
attribute vec4 a_Color;

varying vec4 v_Color;

void main() {
    gl_Position = u_MvpMatrix * a_Position;
    v_Color = a_Color;
}
```

coordfshader.glsl:

```glsl
precision mediump float;

varying vec4 v_Color;

void main() {
    gl_FragColor = v_Color;
}
```

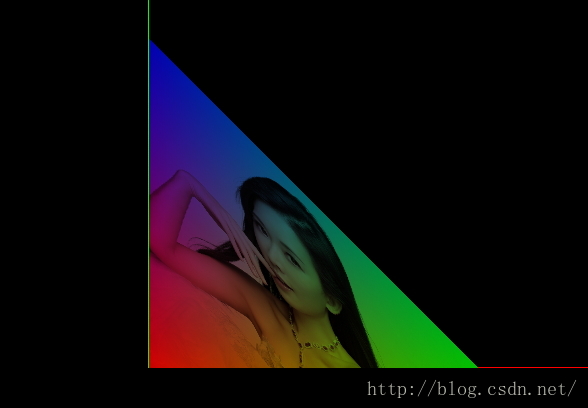

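Also add an image named src.png under res/drawable.

Result:

Finally: download Nate Robins' tutors-win32.zip package, which contains a 3D simulator. By adjusting its parameters you can watch the effect of each setting and deepen your understanding of the theory above. This simulator is a real gem!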
