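Preface

I maintained ELK years ago, but because site log traffic and the number of servers were small, a single machine was always enough.

Now, however, there are 400+ web servers alone, producing 50 GB+ of nginx logs per day. Add the logs of the other business systems, and the old single-node ELK clearly cannot support the current workload.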

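Planning

The business currently runs on Alibaba Cloud plus a self-built data center: Alibaba Cloud hosts production, and the self-built data center is the disaster-recovery site. Production logs are shipped to the self-built data center for analysis, as close to real time as possible.

Architecture diagram

Overview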

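1. Centralized log processing

At first I collected and processed logs on every machine with filebeat + logstash and sent them to elasticsearch. Logstash is a Java application and uses a lot of memory, and the maintenance cost was high.

Later I switched to rsyslog: all servers send their logs to a single Alibaba Cloud server, which forwards them with rsyslog to the self-built data center. In practice this gives roughly millisecond-level synchronization.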

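2. Decoupling

Originally Logstash wrote logs straight into Elasticsearch. Whenever the Elasticsearch cluster had to be restarted or maintained, the logs had nowhere to go and some loss was hard to avoid.

With Redis in between, data can be buffered even while elasticsearch is under maintenance. It also draws a clear line between the Logstash shipper and the Logstash indexer.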
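The buffering role Redis plays here can be pictured with a small in-memory simulation. This is only a sketch: a `collections.deque` stands in for the Redis list, and `ship`, `index_batch` and the sample messages are hypothetical names, not part of Logstash or Redis.

```python
from collections import deque

# Stand-in for the Redis list that sits between shipper and indexer.
buffer = deque()
indexed = []  # stand-in for documents stored in Elasticsearch


def ship(message):
    """Shipper side: append to the tail of the list (like RPUSH)."""
    buffer.append(message)


def index_batch(es_available, batch_size=100):
    """Indexer side: pop from the head (like LPOP), but only while ES is up."""
    moved = 0
    while es_available and buffer and moved < batch_size:
        indexed.append(buffer.popleft())
        moved += 1
    return moved


# Elasticsearch is down for maintenance: logs pile up in the buffer.
for i in range(5):
    ship(f"log line {i}")
index_batch(es_available=False)
backlog_during_maintenance = len(buffer)

# Elasticsearch is back: the indexer drains the backlog in order.
index_batch(es_available=True)
```

The shipper never has to know whether Elasticsearch is reachable; it only needs the buffer to accept writes.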

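3. Multiple instances per host

The self-built data center has 3 servers with 256 GB of RAM each for the ELK cluster. However, Elastic recommends keeping the JVM heap below 32 GB: above roughly that size the JVM can no longer use compressed object pointers, so every pointer takes twice the space and more memory and CPU cache bandwidth are consumed.

For example, a 48 GB heap can perform even worse than a 20 GB one; see the official link for details.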

https://www.elastic.co/guide/en/elasticsearch/guide/current/heap-sizing.html

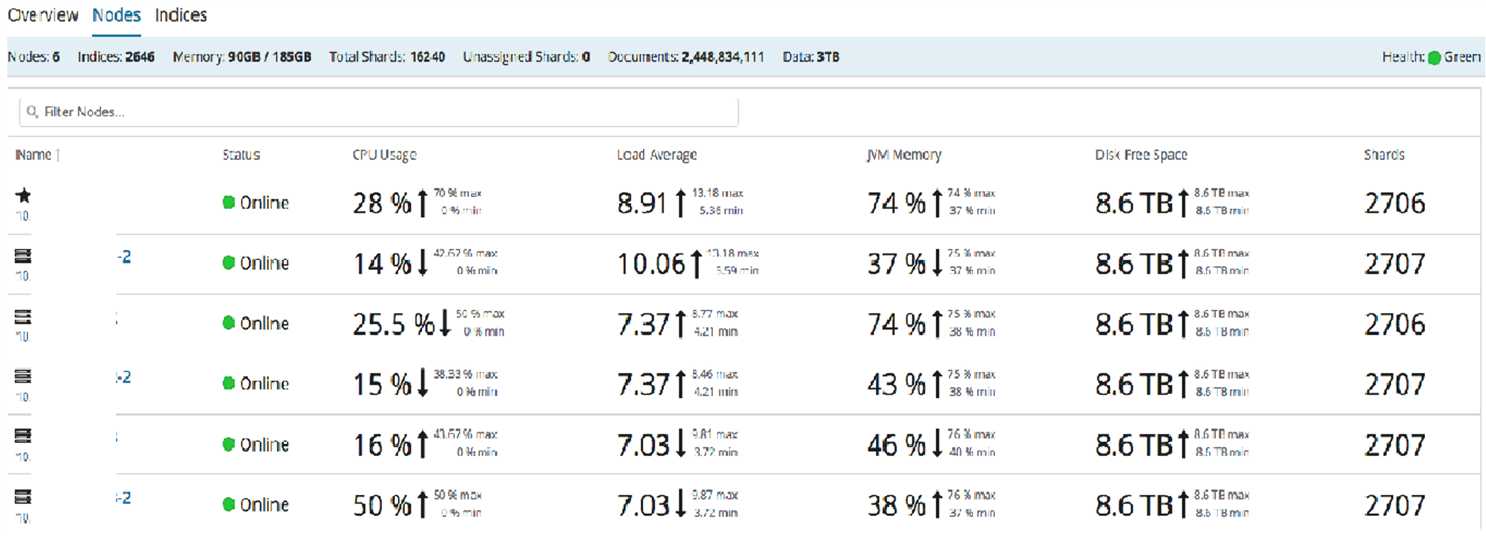

So, to avoid wasting resources, I deployed two Elasticsearch nodes on each machine, 6 nodes in total.
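The memory budget behind this choice is simple arithmetic. The sketch below assumes a 31 GB heap per instance (the value set in jvm.options later in this post); `plan_instances` is a hypothetical helper, not an Elasticsearch tool.

```python
def plan_instances(ram_gb, heap_gb=31, instances=2):
    """Return (total heap, memory left for the OS and file cache) in GB."""
    if heap_gb >= 32:
        raise ValueError("keep the heap below ~32 GB so compressed oops stay enabled")
    total_heap = heap_gb * instances
    if total_heap > ram_gb:
        raise ValueError("heaps do not fit in physical memory")
    return total_heap, ram_gb - total_heap


total_heap, file_cache = plan_instances(ram_gb=256)
print(f"heap: {total_heap} GB, left for OS and Lucene file cache: {file_cache} GB")
```

Whatever is not given to the heaps stays available to the operating system's file cache, which Lucene relies on heavily.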

4. Log types collected

- nginx-access.log

- nginx-error.log

- php-error.log

- php-slow.log

- action.log: the ones above are self-explanatory; this one is a JSON-format log in which the developers record key user actions in the admin backend.

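5. Server planning

Hostnames and public IPs have been anonymized. The ELK stack runs on 3 physical machines: 6 Elasticsearch cluster nodes, 6 logstash-indexer instances, 1 logstash-shipper, 1 redis, 1 kibana and 1 nginx.

| Hostname | Specs | Role | Notes |
| --- | --- | --- | --- |
| rsyslog-relay | 8 cores, 16 GB RAM, 1 TB disk | Collects the required logs from all servers; central storage and forwarding. | CentOS 7.3 |
| elk01 | 16 cores, 256 GB RAM, 9.8 TB disk | nginx, kibana, rsyslog-server, logstash-shipper, elk01-indexer, elk01-indexer2, elk01-elasticsearch, elk01-elasticsearch2 | All 3 servers have identical specs and run CentOS 7.3. Disks are 12 × 1.8 TB 10k SAS drives in RAID 0+1. |
| elk02 | 16 cores, 256 GB RAM, 9.8 TB disk | redis, elk02-indexer, elk02-indexer2, elk02-elasticsearch, elk02-elasticsearch2 | |
| elk03 | 16 cores, 256 GB RAM, 9.8 TB disk | elk03-indexer, elk03-indexer2, elk03-elasticsearch, elk03-elasticsearch2 | |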

Deployment

rsyslog

Upgrade

Logs are stored centrally in the local data center. CentOS 7.3 ships rsyslog v7, so upgrade to v8 first: the v8 rsyslog-relp module can retransmit logs, which prevents data loss.

Remove the existing version, add the v8 yum repository, and install the new version.

# rpm -qa | grep rsyslog
rsyslog-7.4.7-16.el7.x86_64

# yum remove -y rsyslog-7.4.7-16.el7.x86_64

# vim /etc/yum.repos.d/rsyslog_v8.repo
[rsyslog_v8]
baseurl = http://rpms.adiscon.com/v8-stable/epel-$releasever/$basearch
enabled = 1
gpgcheck = 1
gpgkey = http://rpms.adiscon.com/RPM-GPG-KEY-Adiscon
name = Rsyslog version 8 repository

# yum install rsyslog rsyslog-relp -y

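rsyslog client configuration

The nginx log files are named by business area (admin backend access log, frontend access log, payment log), but they are tagged by type with just two tags: nginx-access and nginx-error.

The configuration file is /etc/rsyslog.conf.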

$ModLoad imuxsock
$ModLoad imjournal
$ModLoad imfile
$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat
$IncludeConfig /etc/rsyslog.d/*.conf
*.info;mail.none;authpriv.none;cron.none /var/log/messages
authpriv.* /var/log/secure
mail.* -/var/log/maillog
cron.* /var/log/cron
*.emerg :omusrmsg:*
uucp,news.crit /var/log/spooler
local7.* /var/log/boot.log

##########Start Nginx Log File#################
# The tag is shared per log type, but every monitored file needs its own state file name.
$InputFileName /var/log/nginx/access-admin.log
$InputFileTag site-web1-nginx-access:
$InputFileStateFile site-web1-nginx-access-admin
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/nginx/error-admin.log
$InputFileTag site-web1-nginx-error:
$InputFileStateFile site-web1-nginx-error-admin
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/nginx/access-frontend.log
$InputFileTag site-web1-nginx-access:
$InputFileStateFile site-web1-nginx-access-frontend
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/nginx/error-frontend.log
$InputFileTag site-web1-nginx-error:
$InputFileStateFile site-web1-nginx-error-frontend
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/nginx/access-pay.log
$InputFileTag site-web1-nginx-access:
$InputFileStateFile site-web1-nginx-access-pay
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/nginx/error-pay.log
$InputFileTag site-web1-nginx-error:
$InputFileStateFile site-web1-nginx-error-pay
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1
######################End Of Nginx Log File################

######################Start Of Action Log File#############
$InputFileName /var/log/php-fpm/action_log.log
$InputFileTag site-web1-action:
$InputFileStateFile site-web1-action
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1
######################End Of Action Log File###############

#####################Start PHP Log File###################
# ReadMode is set before $InputRunFileMonitor so that it applies to the slow log.
$InputFileName /var/log/php-fpm/www-slow.log
$InputFileTag site-web1-php-slow:
$InputFileStateFile site-web1-php-slow
$InputFileSeverity debug
$InputFileReadMode 2
$InputRunFileMonitor
$InputFilePollInterval 1

$InputFileName /var/log/php-fpm/error.log
$InputFileTag site-web1-php-error:
$InputFileStateFile site-web1-php-error
$InputFileSeverity debug
$InputRunFileMonitor
$InputFilePollInterval 1

$WorkDirectory /var/lib/rsyslog
$ActionQueueType LinkedList
$ActionQueueFileName srvrfwd
$ActionResumeRetryCount -1
$ActionQueueSaveOnShutdown on
####################End Of PHP log File#####################

###################Start Log Forward##############################################
if $programname == 'site-web1-nginx-access' then @@<internal address of the Alibaba Cloud rsyslog server>:514
if $programname == 'site-web1-nginx-error' then @@<internal address of the Alibaba Cloud rsyslog server>:514
if $programname == 'site-web1-php-slow' then @@<internal address of the Alibaba Cloud rsyslog server>:514
if $programname == 'site-web1-php-error' then @@<internal address of the Alibaba Cloud rsyslog server>:514
if $programname == 'site-web1-action' then @@<internal address of the Alibaba Cloud rsyslog server>:514
###################End Of log Forward##############################################
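With hundreds of web servers, writing these monitor blocks by hand gets error-prone. A minimal sketch of a generator follows; `imfile_stanza` and `render_client_config` are hypothetical helpers, and the suffix argument is my own convention for keeping state file names distinct when several files share one tag.

```python
def imfile_stanza(path, tag, state_file, severity="debug"):
    """Render one legacy-format imfile monitor block."""
    return "\n".join([
        f"$InputFileName {path}",
        f"$InputFileTag {tag}:",
        f"$InputFileStateFile {state_file}",
        f"$InputFileSeverity {severity}",
        "$InputRunFileMonitor",
    ])


def render_client_config(host, logs):
    """logs: list of (path, log_type, suffix); the suffix keeps state files unique."""
    stanzas, seen = [], set()
    for path, log_type, suffix in logs:
        tag = f"{host}-{log_type}"
        state_file = f"{tag}-{suffix}" if suffix else tag
        if state_file in seen:
            raise ValueError(f"duplicate state file: {state_file}")
        seen.add(state_file)
        stanzas.append(imfile_stanza(path, tag, state_file))
    return "\n\n".join(stanzas)


config = render_client_config("site-web1", [
    ("/var/log/nginx/access-admin.log", "nginx-access", "admin"),
    ("/var/log/nginx/access-pay.log", "nginx-access", "pay"),
    ("/var/log/php-fpm/error.log", "php-error", ""),
])
print(config)
```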

rsyslog configuration on the Alibaba Cloud relay server (rsyslog-relay)

1. The main configuration file is /etc/rsyslog.conf. The configuration for each sending server is pulled in by include from /etc/rsyslog.d/, in files ending in .conf; this corresponds to line 7 of the main configuration file.

$ModLoad omrelp
$ModLoad imudp
$UDPServerRun 514
$ModLoad imtcp
$InputTCPServerRun 514
$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat
$IncludeConfig /etc/rsyslog.d/*.conf
$umask 0022
*.info;mail.none;authpriv.none;cron.none /var/log/messages
authpriv.* /var/log/secure
mail.* -/var/log/maillog
cron.* /var/log/cron
*.emerg :omusrmsg:*
uucp,news.crit /var/log/spooler
local7.* /var/log/boot.log
*.* :omrelp:<rsyslog server in the local data center>:20514

2. One configuration file per server; the example for site-web1 looks like this:

$template site-web1-nginx-access,"/data/rsyslog/nginx/site/site-web1-nginx-access.log"
$template site-web1-nginx-error,"/data/rsyslog/nginx/site/site-web1-nginx-error.log"
$template site-web1-php-slow,"/data/rsyslog/php/site/site-web1-php-slow.log"
$template site-web1-php-error,"/data/rsyslog/php/site/site-web1-php-error.log"
$template site-web1-action,"/data/rsyslog/php/site/site-web1-action.log"

if $programname == 'site-web1-nginx-access' then ?site-web1-nginx-access
if $programname == 'site-web1-nginx-error' then ?site-web1-nginx-error
if $programname == 'site-web1-php-slow' then ?site-web1-php-slow
if $programname == 'site-web1-php-error' then ?site-web1-php-error
if $programname == 'site-web1-action' then ?site-web1-action
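Since every sending host needs a file of this shape, it can be rendered from the hostname. The sketch below is an illustration only: `relay_rules` and the `LOG_DIRS` mapping are hypothetical, derived from the paths shown for site-web1.

```python
# Which top-level directory each log type is stored under on the relay.
LOG_DIRS = {
    "nginx-access": "nginx",
    "nginx-error": "nginx",
    "php-slow": "php",
    "php-error": "php",
    "action": "php",
}


def relay_rules(host, site="site", root="/data/rsyslog"):
    """Render the template and filter lines for one sending host."""
    templates, filters = [], []
    for log_type, subdir in LOG_DIRS.items():
        name = f"{host}-{log_type}"
        templates.append(f'$template {name},"{root}/{subdir}/{site}/{name}.log"')
        filters.append(f"if $programname == '{name}' then ?{name}")
    return "\n".join(templates + [""] + filters) + "\n"


rules = relay_rules("site-web2")
print(rules)
```

Writing the result to /etc/rsyslog.d/site-web2.conf would follow the same naming scheme as the hand-written example.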

rsyslog configuration in the local data center (elk01)

The main configuration file /etc/rsyslog.conf is shown below. The per-site files in /etc/rsyslog.d/ are identical to those on the relay server.

$ModLoad imrelp
$ModLoad omrelp
$InputRELPServerRun 20514
$WorkDirectory /var/lib/rsyslog
$DirCreateMode 0755
$FileCreateMode 0644
$FileOwner logstash
$DirOwner logstash
$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat
$IncludeConfig /etc/rsyslog.d/*.conf
$OmitLocalLogging on
$IMJournalStateFile imjournal.state
*.info;mail.none;authpriv.none;cron.none /var/log/messages
authpriv.* /var/log/secure
mail.* -/var/log/maillog
cron.* /var/log/cron
*.emerg :omusrmsg:*
uucp,news.crit /var/log/spooler
local7.* /var/log/boot.log
$PrivDropToGroup logstash

At this point the logs of all servers are collected centrally through rsyslog.

ELK installation

Everything was installed with yum, using the latest 6.x release. As planned, nginx on elk01 and redis on elk02 were also installed with yum, so I won't go through them one by one.

elasticsearch yum repository

[elasticsearch-6.x]
name=Elasticsearch repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

logstash yum repository

[logstash-6.x]
name=Elastic repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

kibana yum repository

[kibana-6.x]
name=Kibana repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

logstash-shipper configuration

After installation, logstash keeps its configuration in /etc/logstash by default. Copy it to serve as the configuration directory for logstash-shipper.

cp -r /etc/logstash /etc/logstash-shipper

chown -R logstash.logstash /etc/logstash-shipper

Main configuration file /etc/logstash-shipper/logstash.yml

path.data: /var/lib/logstash-shipper
path.config: /etc/logstash-shipper/conf.d
path.logs: /var/log/logstash/shipper

Create the directories and give ownership to the logstash user

mkdir -p /var/lib/logstash-shipper && chown logstash.logstash /var/lib/logstash-shipper

mkdir -p /var/log/logstash/shipper && chown logstash.logstash /var/log/logstash/shipper

Site configuration file /etc/logstash-shipper/conf.d/shipper.conf

The site configuration currently runs to more than 3,000 lines, so posting all of it would be excessive. Here is the configuration for one site as a reference.

input {
  file {
    path => "/data/rsyslog/php/site/site-*-php-error.log"
    type => "site-php-error"
    sincedb_path => "/data/sincedb/site"
  }
  file {
    path => "/data/rsyslog/php/site/site-*-php-slow.log"
    type => "site-php-slow"
    sincedb_path => "/data/sincedb/site"
  }
  file {
    path => "/data/rsyslog/nginx/site/site-*-nginx-error.log"
    type => "site-nginx-error"
    sincedb_path => "/data/sincedb/site"
  }
  file {
    path => "/data/rsyslog/nginx/site/site-*-nginx-access.log"
    type => "site-nginx-access"
    sincedb_path => "/data/sincedb/site"
  }
  file {
    path => "/data/rsyslog/php/site/site*action.log"
    type => "site-action"
    sincedb_path => "/data/sincedb/site"
  }
}
output {
  redis {
    host => "<elk02 internal address>"
    port => "6379"
    db => "8"
    data_type => "list"
    key => "server-log"
  }
}

Service unit file: /etc/systemd/system/logstash-shipper.service

[Unit]
Description=logstash-shipper

[Service]
Type=simple
User=logstash
Group=logstash
# Load env vars from /etc/default/ and /etc/sysconfig/ if they exist.
# Prefixing the path with '-' makes it try to load, but if the file doesn't
# exist, it continues onward.
EnvironmentFile=-/etc/default/logstash
EnvironmentFile=-/etc/sysconfig/logstash
ExecStart=/usr/share/logstash/bin/logstash "--path.settings" "/etc/logstash-shipper"
Restart=always
WorkingDirectory=/
Nice=19
LimitNOFILE=16384

[Install]
WantedBy=multi-user.target

logstash-indexer configuration

Each server runs two indexers.

logstash-indexer1

Copy the configuration directory

cp -r /etc/logstash /etc/logstash-indexer

chown -R logstash.logstash /etc/logstash-indexer

Main configuration file /etc/logstash-indexer/logstash.yml

path.data: /var/lib/logstash
path.config: /etc/logstash-indexer/conf.d
path.logs: /var/log/logstash/indexer

Create the directories and give ownership to the logstash user

mkdir -p /var/log/logstash/indexer && chown logstash.logstash /var/log/logstash/indexer

Each log type has its own configuration file under /etc/logstash-indexer/conf.d

/etc/logstash-indexer/conf.d
├── action.conf         application-defined user action log
├── nginx_access.conf   nginx access log
├── nginx_error.conf    nginx error log
├── php_error.conf      php error log
└── php_slow.conf       php-slow log

The individual configuration files follow.

action.conf

input {
  redis {
    host => "<elk02 internal IP>"
    port => "6379"
    db => "8"
    data_type => "list"
    # Must match the key written by the shipper's redis output.
    key => "server-log"
  }
}
filter {
  if [type] =~ '^.+action' {
    mutate {
      gsub => [ "message", "^.+-action: ", "" ]
    }
    json {
      source => "message"
    }
    date {
      match => ["time", "yyyy-MM-dd HH:mm:ss"]
      target => "@timestamp"
      locale => "en"
      timezone => "Asia/Shanghai"
      remove_field => ["time"]
    }
  }
}
output {
  if [type] =~ '^.+action' {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      index => "logstash-action-%{+YYYY.MM.dd}"
    }
  }
}
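To make the filter chain concrete, here is a rough Python equivalent of its three steps: strip the syslog prefix, decode the JSON body, and use its `time` field as the event timestamp. The sample line and the `user`/`action` fields are invented for illustration; only the `time` field and its format come from the configuration.

```python
import json
import re
from datetime import datetime, timedelta, timezone

# Asia/Shanghai currently observes no DST, so a fixed UTC+8 offset is enough here.
SHANGHAI = timezone(timedelta(hours=8))


def parse_action(message):
    """Strip the prefix, decode JSON, and promote `time` to @timestamp (UTC)."""
    body = re.sub(r"^.+-action: ", "", message, count=1)
    event = json.loads(body)
    local = datetime.strptime(event.pop("time"), "%Y-%m-%d %H:%M:%S")
    event["@timestamp"] = local.replace(tzinfo=SHANGHAI).astimezone(timezone.utc)
    return event


sample = ('Jan 20 10:00:01 site-web1 site-web1-action: '
          '{"time": "2018-01-20 10:00:00", "user": "admin", "action": "login"}')
event = parse_action(sample)
print(event)
```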

nginx-access

input {
  redis {
    host => "<elk02 internal address>"
    port => "6379"
    db => "8"
    data_type => "list"
    # Must match the key written by the shipper's redis output.
    key => "server-log"
  }
}
filter {
  if [type] =~ '^.+nginx-access' {
    fingerprint {
      method => "SHA1"
      key => "^.+nginx-access"
    }
    grok {
      match => [ "message" , "%{COMBINEDAPACHELOG} %{DATA:msec} %{QUOTEDSTRING:x_forward_ip} %{DATA:server_name} %{DATA:request_time} %{DATA:upstream_response_time} %{DATA:scheme} %{GREEDYDATA:extra_fields}" ]
      overwrite => [ "message" ]
    }
    date {
      match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss.SSS Z"]
      #target => "@timestamp"
      locale => "en"
      timezone => "Asia/Shanghai"
    }
    mutate {
      # Strip double quotes from these fields.
      gsub => [
        "agent", "\"", "",
        "referrer", "\"", "",
        "x_forward_ip", "\"", "",
        "extra_fields", "\"", ""
      ]
    }
    if [extra_fields] =~ /^{.*}$/ {
      mutate {
        gsub => [
          "extra_fields", "\"", "",
          "extra_fields", "\\x0A", "",
          "extra_fields", "\\x22", '\"',
          "extra_fields", "(\\)", ""
        ]
      }
      json {
        source => "extra_fields"
        target => "extra_fields_json"
      }
    }
    geoip {
      source => "clientip"
      fields => ["city_name","location"]
    }
  }
}
output {
  if [type] =~ '^.+nginx-access' {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      index => "logstash-%{type}-%{+YYYY.MM.dd}"
      document_type => "%{type}"
      # flush_size => 50000
      # idle_flush_time => 10
      sniffing => true
      template_overwrite => true
      document_id => "%{fingerprint}"
    }
  }
}
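Using the fingerprint as `document_id` makes indexing idempotent: a line that is delivered twice overwrites itself instead of creating a duplicate. The sketch below illustrates the idea with an HMAC-SHA1 over the raw message. That this mirrors what the fingerprint filter computes when `key` is set is my assumption; the dictionary `store` and the sample line are stand-ins.

```python
import hashlib
import hmac


def fingerprint(message, key="^.+nginx-access"):
    """Keyed SHA1 digest of the raw message; identical lines give identical IDs."""
    return hmac.new(key.encode(), message.encode(), hashlib.sha1).hexdigest()


store = {}  # stand-in for an index addressed by document_id


def index(message):
    store[fingerprint(message)] = message


line = '1.2.3.4 - - [20/Jan/2018:10:00:00 +0800] "GET / HTTP/1.1" 200 612'
index(line)
index(line)             # replayed after a retry: overwrites, no duplicate
index(line + " extra")  # a different line gets a different ID
print(len(store))
```

The trade-off is that two genuinely identical log lines also collapse into one document.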

nginx-error

input {
  redis {
    host => "<elk02 internal address>"
    port => "6379"
    db => "8"
    data_type => "list"
    # Must match the key written by the shipper's redis output.
    key => "server-log"
  }
}
filter {
  if [type] =~ '^.+nginx-error' {
    fingerprint {
      method => "SHA1"
      key => "^.+nginx-error"
    }
    grok {
      match => {
        "message" => [
          "(?<timestamp>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) \[%{DATA:err_severity}\] (%{NUMBER:pid:int}#%{NUMBER}: \*%{NUMBER}|\*%{NUMBER}) %{DATA:err_message}(?:, client: (?<clientip>%{IP}|%{HOSTNAME}))(?:, server: %{IPORHOST:server})(?:, request: %{QS:request})?(?:, host: %{QS:client_ip})?(?:, referrer: \"%{URI:referrer})?",
          "%{DATESTAMP:timestamp} \[%{DATA:err_severity}\] %{GREEDYDATA:err_message}"
        ]
      }
    }
    date {
      match => ["timestamp" , "YYYY/MM/dd HH:mm:ss"]
      locale => "en"
      timezone => "Asia/Shanghai"
      remove_field => [ "timestamp" ]
    }
  }
}
output {
  if [type] =~ '^.+nginx-error' {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      index => "logstash-nginx-error-%{+YYYY.MM.dd}"
      document_id => "%{fingerprint}"
    }
  }
}
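The grok expression is dense, so a simplified Python regular expression may help show which fields it pulls out of an nginx error line. This is a sketch, not a faithful port: `ERROR_RE` and `parse_error` are hypothetical, the sample line is invented, and grok patterns such as `%{IPORHOST}` are replaced by looser character classes.

```python
import re

ERROR_RE = re.compile(
    r'^(?P<timestamp>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) '
    r'\[(?P<err_severity>\w+)\] '
    r'(?P<pid>\d+)#\d+: (?:\*\d+ )?'
    r'(?P<err_message>.*?)'
    r'(?:, client: (?P<clientip>[^,]+))?'
    r'(?:, server: (?P<server>[^,]+))?'
    r'(?:, request: "(?P<request>[^"]*)")?'
    r'(?:, host: "(?P<host>[^"]*)")?$'
)


def parse_error(line):
    """Return the extracted fields, or None if the line does not match."""
    match = ERROR_RE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["pid"] = int(fields["pid"])
    return fields


sample = ('2018/01/20 10:00:00 [error] 1234#0: *5678 open() "/srv/www/favicon.ico" '
          'failed (2: No such file or directory), client: 10.0.0.1, '
          'server: example.com, request: "GET /favicon.ico HTTP/1.1", '
          'host: "example.com"')
fields = parse_error(sample)
print(fields)
```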

php-error

input {
  redis {
    host => "<elk02 internal address>"
    port => "6379"
    db => "8"
    data_type => "list"
    # Must match the key written by the shipper's redis output.
    key => "server-log"
  }
}
output {
  if [type] =~ '^.+php-error' {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      index => "logstash-php-error-%{+YYYY.MM.dd}"
    }
  }
}

php-slow

input {
  redis {
    host => "<elk02 internal address>"
    port => "6379"
    db => "8"
    data_type => "list"
    # Must match the key written by the shipper's redis output.
    key => "server-log"
  }
}
filter {
  if [type] =~ '^.+slow' {
    multiline {
      pattern => "\[\d{2}-"
      negate => true
      what => "previous"
    }
  }
}
output {
  if [type] =~ '^.+slow' {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      index => "logstash-php-slow-%{+YYYY.MM.dd}"
    }
  }
}
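The multiline settings (`negate => true`, `what => "previous"`) mean that any line that does not look like the start of a new entry is glued onto the previous event. A minimal Python sketch of that grouping, with an invented slow-log excerpt and a hypothetical `merge_multiline` helper:

```python
import re

# Same pattern as in the filter: a new slow-log entry contains "[dd-".
START = re.compile(r"\[\d{2}-")


def merge_multiline(lines):
    """Lines without the start pattern are appended to the previous event."""
    events = []
    for line in lines:
        if START.search(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


lines = [
    "[20-Jan-2018 10:00:00]  [pool www] pid 1234",
    "script_filename = /srv/www/index.php",
    "[0x00007f0000000000] sleep() /srv/www/index.php:10",
    "[20-Jan-2018 10:00:05]  [pool www] pid 1235",
    "script_filename = /srv/www/pay.php",
]
events = merge_multiline(lines)
print(len(events))
```

Stack frames such as `[0x00007f...]` do not match the pattern, so they stay attached to the entry they belong to.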

Service unit file /etc/systemd/system/logstash-indexer.service

[Unit]
Description=logstash-indexer

[Service]
Type=simple
User=logstash
Group=logstash
# Load env vars from /etc/default/ and /etc/sysconfig/ if they exist.
# Prefixing the path with '-' makes it try to load, but if the file doesn't
# exist, it continues onward.
EnvironmentFile=-/etc/default/logstash
EnvironmentFile=-/etc/sysconfig/logstash
ExecStart=/usr/share/logstash/bin/logstash "--path.settings" "/etc/logstash-indexer"
Restart=always
WorkingDirectory=/
Nice=19
LimitNOFILE=16384

[Install]
WantedBy=multi-user.target

logstash-indexer2 uses the same configuration files; only the directories and the unit file need to be changed accordingly.

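Elasticsearch cluster deployment

Each server runs two elasticsearch instances: one installed with yum, the other unpacked from the zip archive.

The yum installation keeps its configuration in /etc/elasticsearch.

Taking elk01 as the example: elasticsearch instance 1 configuration

Set the heap size in jvm.options to 31g

-Xms31g

-Xmx31g

Main configuration file /etc/elasticsearch/elasticsearch.yml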

#============================cluster setting==============================
cluster.name: elk
cluster.routing.allocation.same_shard.host: true
#============================node setting=================================
node.name: elk01
node.master: true
node.data: true
#============================path setting=================================
path.data: /data/es-data
path.logs: /var/log/elasticsearch
#============================memory setting===============================
bootstrap.memory_lock: false
#============================network setting==============================
network.host: <elk01 internal address>
http.port: 9200
transport.tcp.port: 9300
#============================thread_pool setting==========================
thread_pool.search.queue_size: 10000
#============================discovery setting============================
discovery.zen.ping.unicast.hosts: ["elk01:9300", "elk02:9300", "elk03:9300"]
discovery.zen.minimum_master_nodes: 2
#============================gateway setting==============================
gateway.recover_after_nodes: 4
gateway.recover_after_time: 5m
gateway.expected_nodes: 5
indices.recovery.max_bytes_per_sec: 800mb
http.cors.enabled: true
http.cors.allow-origin: "*"
xpack.security.enabled: false
xpack.monitoring.enabled: true
xpack.graph.enabled: false
xpack.watcher.enabled: false
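The value of `discovery.zen.minimum_master_nodes` follows the usual quorum rule of more than half of the master-eligible nodes. The sketch below assumes one master-eligible node per physical host (elk01, elk02, elk03), which is what the unicast host list suggests; `minimum_master_nodes` here is a hypothetical helper function, not an API.

```python
def minimum_master_nodes(master_eligible):
    """Quorum of master-eligible nodes: floor(n / 2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


quorum = minimum_master_nodes(3)
print(quorum)
```

With three master-eligible nodes the cluster keeps a master as long as any two of them can see each other, which guards against split brain.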

Create the data directory and set ownership

mkdir -p /data/es-data && chown elasticsearch.elasticsearch /data/es-data

The service unit file ships with the yum package and needs no changes: /usr/lib/systemd/system/elasticsearch.service

elk01 elasticsearch instance 2 configuration

This instance is installed from the zip archive.

Download the archive, unpack it, move it to the target directory, and set ownership

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.1.2.zip

unzip elasticsearch-6.1.2.zip

mv elasticsearch-6.1.2 /usr/local/

chown -R elasticsearch.elasticsearch /usr/local/elasticsearch-6.1.2

Set the heap size in /usr/local/elasticsearch-6.1.2/config/jvm.options to 31g

Configuration file /usr/local/elasticsearch-6.1.2/config/elasticsearch.yml

#============================cluster setting==============================
cluster.name: elk
cluster.routing.allocation.same_shard.host: true
#node.max_local_storage_nodes: 2
#============================node setting=================================
node.name: elk01-2
node.master: false
node.data: true
#============================path setting=================================
path.data: /data/es-data2
path.logs: /var/log/elasticsearch2
#============================memory setting===============================
bootstrap.memory_lock: false
#============================network setting==============================
network.host: <elk01 internal IP>
http.port: 9201
transport.tcp.port: 9301
#============================thread_pool setting==========================
thread_pool.search.queue_size: 10000
#============================discovery setting============================
discovery.zen.ping.unicast.hosts: ["elk01:9300", "elk02:9300", "elk03:9300"]
discovery.zen.minimum_master_nodes: 2
#============================gateway setting==============================
gateway.recover_after_nodes: 4
gateway.recover_after_time: 5m
gateway.expected_nodes: 5
indices.recovery.max_bytes_per_sec: 800mb
http.cors.enabled: true
http.cors.allow-origin: "*"
xpack.security.enabled: false
xpack.monitoring.enabled: true
xpack.graph.enabled: false
xpack.watcher.enabled: false

Create the directories and set ownership

mkdir -p /data/es-data2 && chown elasticsearch.elasticsearch /data/es-data2

mkdir -p /var/log/elasticsearch2 && chown elasticsearch.elasticsearch /var/log/elasticsearch2

Service init script /etc/init.d/elasticsearch2

#!/bin/bash
#
# elasticsearch <summary>
#
# chkconfig: 2345 80 20
# description: Starts and stops a single elasticsearch instance on this system
#

### BEGIN INIT INFO
# Provides: Elasticsearch
# Required-Start: $network $named
# Required-Stop: $network $named
# Default-Start: 2 3 4 5
# Default-Stop: 0 1 6
# Short-Description: This service manages the elasticsearch daemon
# Description: Elasticsearch is a very scalable, schema-free and high-performance search solution supporting multi-tenancy and near realtime search.
### END INIT INFO

#
# init.d / servicectl compatibility (openSUSE)
#
if [ -f /etc/rc.status ]; then
    . /etc/rc.status
    rc_reset
fi

#
# Source function library.
#
if [ -f /etc/rc.d/init.d/functions ]; then
    . /etc/rc.d/init.d/functions
fi

# Sets the default values for elasticsearch variables used in this script.
# ES_HOME and ES_PATH_CONF point at the archive install location used for instance 2.
ES_HOME="/usr/local/elasticsearch-6.1.2"
MAX_OPEN_FILES=65536
MAX_MAP_COUNT=262144
ES_PATH_CONF="/usr/local/elasticsearch-6.1.2/config"

PID_DIR="/var/run/elasticsearch2"

# Source the default env file
ES_ENV_FILE="/etc/sysconfig/elasticsearch"
if [ -f "$ES_ENV_FILE" ]; then
    . "$ES_ENV_FILE"
fi

exec="$ES_HOME/bin/elasticsearch"
prog="elasticsearch"
pidfile="$PID_DIR/${prog}.pid"

export ES_JAVA_OPTS
export JAVA_HOME
export ES_PATH_CONF
export ES_STARTUP_SLEEP_TIME

lockfile=/var/lock/subsys/$prog

start() {
    # Ensure that the PID_DIR exists (it is cleaned at OS startup time)
    if [ -n "$PID_DIR" ] && [ ! -e "$PID_DIR" ]; then
        mkdir -p "$PID_DIR" && chown elasticsearch:elasticsearch "$PID_DIR"
    fi
    if [ -n "$pidfile" ] && [ ! -e "$pidfile" ]; then
        touch "$pidfile" && chown elasticsearch:elasticsearch "$pidfile"
    fi

    cd $ES_HOME
    echo -n $"Starting $prog: "
    # if not running, start it up here, usually something like "daemon $exec"
    #daemon --user elasticsearch --pidfile $pidfile $exec -p $pidfile -d
    su - elasticsearch -c "${exec} -p ${pidfile} -d"
    retval=$?
    echo
    [ $retval -eq 0 ] && touch $lockfile
    return $retval
}

stop() {
    echo -n $"Stopping $prog: "
    # stop it here, often "killproc $prog"
    killproc -p $pidfile -d 86400 $prog
    retval=$?
    echo
    [ $retval -eq 0 ] && rm -f $lockfile
    return $retval
}

restart() {
    stop
    start
}

reload() {
    restart
}

force_reload() {
    restart
}

rh_status() {
    # run checks to determine if the service is running or use generic status
    status -p $pidfile $prog
}

rh_status_q() {
    rh_status >/dev/null 2>&1
}

case "$1" in
    start)
        rh_status_q && exit 0
        $1
        ;;
    stop)
        rh_status_q || exit 0
        $1
        ;;
    restart)
        $1
        ;;
    reload)
        rh_status_q || exit 7
        $1
        ;;
    force-reload)
        force_reload
        ;;
    status)
        rh_status
        ;;
    condrestart|try-restart)
        rh_status_q || exit 0
        restart
        ;;
    *)
        echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload}"
        exit 2
esac
exit $?

Kibana

On every server, the hosts file maps the name elasticsearch to that machine's own internal IP.

Configuration file /etc/kibana/kibana.yml

# egrep -v '^$|#' /etc/kibana/kibana.yml
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.url: "http://elasticsearch:9200"
elasticsearch.requestTimeout: 3000000

Nginx server configuration

server {
    listen 80;
    server_name <domain>;
    access_log /var/log/nginx/access-elk.log;
    error_log /var/log/nginx/error-elk.log;

    location ~ /.well-known/acme-challenge {
        allow all;
        content_by_lua_block {
            auto_ssl:challenge_server()
        }
    }

    location / {
        return 301 https://$host$request_uri;
    }
}

server {
    listen 443 ssl http2;
    server_name <domain>;

    ssl_certificate_by_lua_block {
        auto_ssl:ssl_certificate()
    }
    ssl_certificate /etc/nginx/ssl/elk.crt;
    ssl_certificate_key /etc/nginx/ssl/elk.key;
    ssl_ciphers "EECDH+AESGCM:EDH+AESGCM:ECDHE-RSA-AES128-GCM-SHA256:AES256+EECDH:DHE-RSA-AES128-GCM-SHA256:AES256+EDH:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-SHA256:ECDHE-RSA-AES256-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES256-SHA256:DHE-RSA-AES128-SHA256:DHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA:ECDHE-RSA-DES-CBC3-SHA:EDH-RSA-DES-CBC3-SHA:AES256-GCM-SHA384:AES128-GCM-SHA256:AES256-SHA256:AES128-SHA256:AES256-SHA:AES128-SHA:DES-CBC3-SHA:HIGH:!aNULL:!eNULL:!EXPORT:!DES:!MD5:!PSK:!RC4";
    ssl_prefer_server_ciphers on;
    ssl_session_cache shared:SSL:16m;
    ssl_dhparam /etc/nginx/ssl/dhparam.pem;

    access_log /var/log/nginx/access-elk.log;
    error_log /var/log/nginx/error-elk.log warn;

    allow <IP range allowed to access>;
    deny all;

    location / {
        proxy_pass http://elasticsearch:5601;
        proxy_set_header Host $host;
    }
}

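That largely completes the configuration and deployment part. Later posts will cover common day-to-day operations,

such as one-click deployment scripts, adding and removing configuration, batch deployment, common index and shard operations, performance monitoring, tuning, scaling out, and so on.

To start, here is an x-pack monitoring screenshot of the cluster after about one week of operation: 2,448,834,111 records collected in total, 3 TB of data, roughly 300 million records per day.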
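These figures translate into per-second and per-record numbers that are handy for capacity planning. The calculation below is a back-of-the-envelope sketch: the 7-day window and reading 3 TB as binary terabytes are my assumptions, so the results are approximate.

```python
records = 2_448_834_111
days = 7                      # "about one week"; an assumption
data_bytes = 3 * 1024 ** 4    # 3 TB read as binary terabytes; an assumption

per_day = records / days
per_second = per_day / 86_400
bytes_per_record = data_bytes / records

print(f"{per_day / 1e6:.0f}M records/day, "
      f"{per_second:.0f} records/s, "
      f"{bytes_per_record:.0f} bytes/record")
```

A slightly longer window, such as 8 days, brings the daily average close to the 300 million quoted above.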

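Closing remarks:

It took me nearly a week, on and off, to put this post together. I had wanted to annotate every line of the configuration files, but as the saying goes, the first step is always the hardest, and at least I have now made a start on cnblogs (博客园).

As I write this, NetEase Cloud Music has just shuffled to "塑料袋" sung by 乔杉, and it rather resonates. It suddenly strikes me that he is a chubby singer whose music career got sidetracked by comedy.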