Using Naive Bayes to Reveal Regional Tendencies from Personal Ads

Posted by 卷珠帘



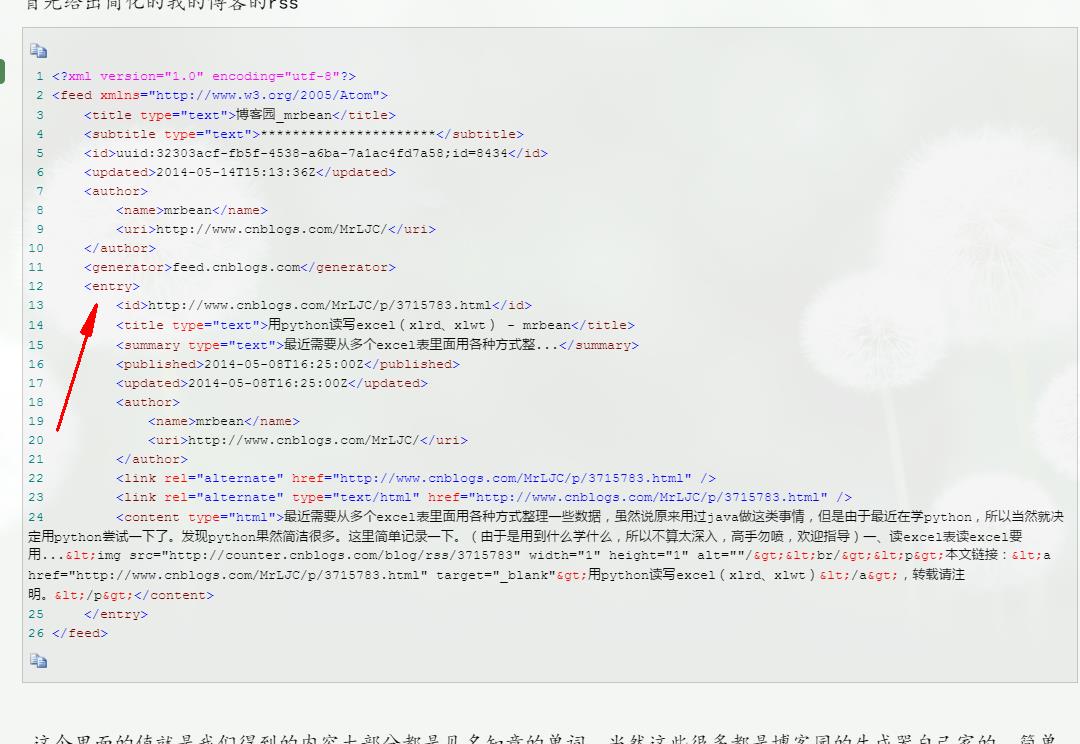

An introduction to RSS feeds: https://zhidao.baidu.com/question/2051890587299176627.html

http://www.rssboard.org/rss-profile

This post explains it well: https://www.cnblogs.com/MrLJC/p/3731213.html

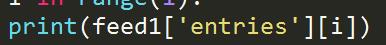

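The individual entry items of a feed are collected in entries, so I iterate over entries to enumerate every item in the RSS feed. The result of the enumeration: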

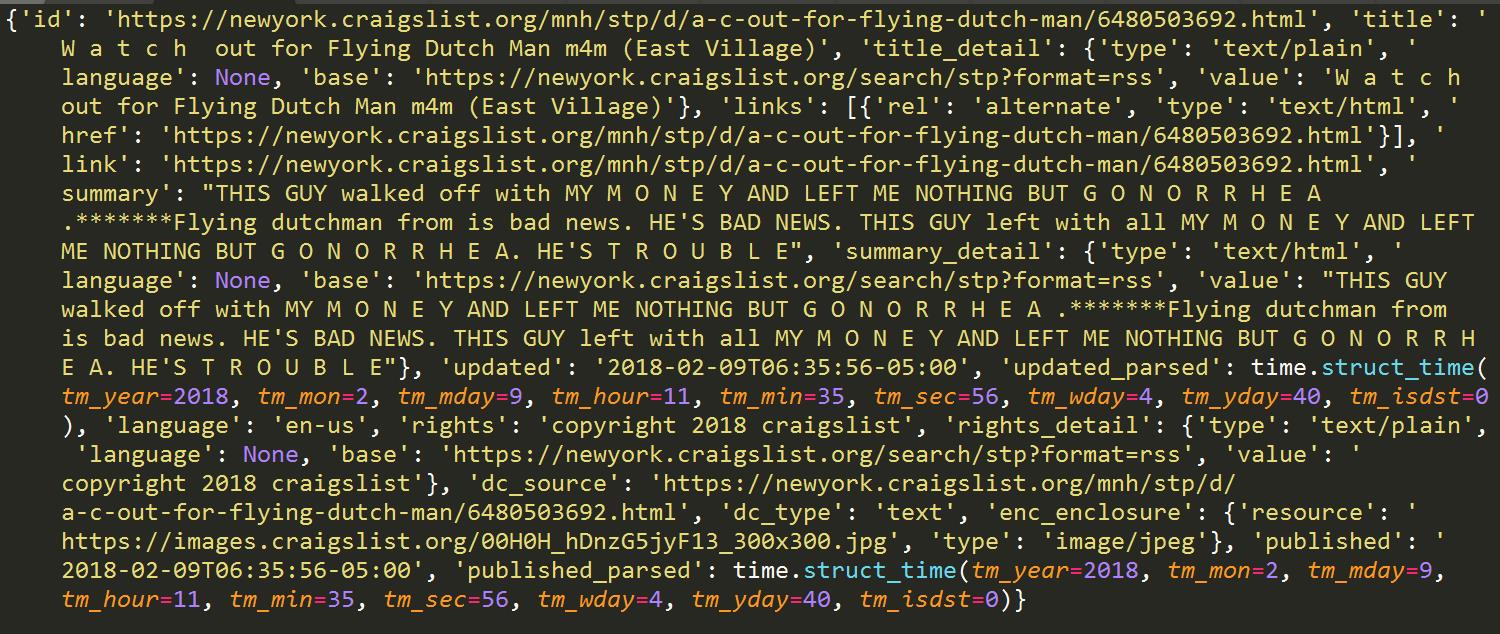
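
The enumeration described above can be sketched roughly as follows. To keep the snippet runnable without network access, it uses a hand-built dict in place of the object returned by `feedparser.parse`; the two sample entries and their text are invented for illustration only.

```python
# Hypothetical stand-in for the result of feedparser.parse(url): the parsed
# feed exposes its items under the 'entries' key, and each item carries
# fields such as 'title' and 'summary'. The sample data is made up.
feed = {
    'entries': [
        {'title': 'first post', 'summary': 'Looking for a hiking partner'},
        {'title': 'second post', 'summary': 'Board game night downtown'},
    ]
}

summaries = []
for i in range(len(feed['entries'])):
    entry = feed['entries'][i]          # one RSS item
    summaries.append(entry['summary'])  # the text used later for classification
    print(i, entry['summary'])
```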

The book's code first pulls out the summary of each entry, which looks like this:

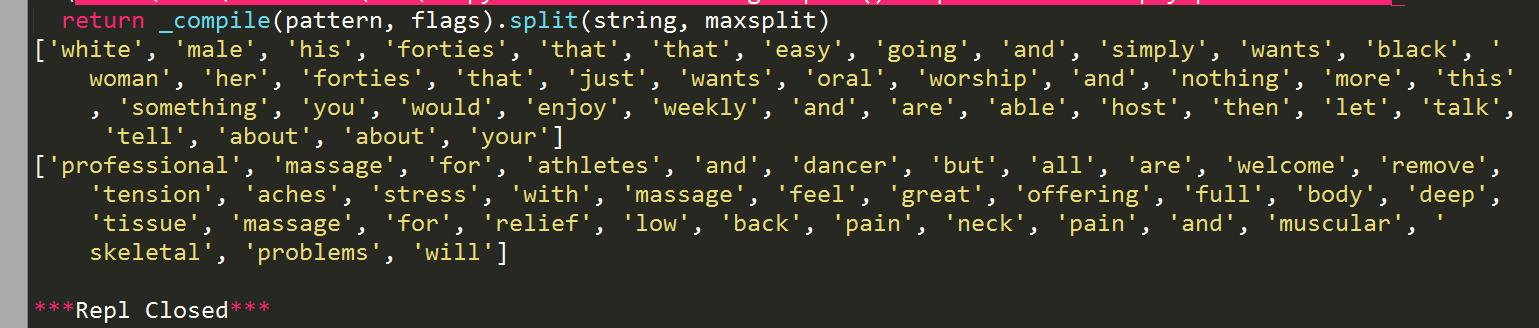

The resulting wordList looks like this:

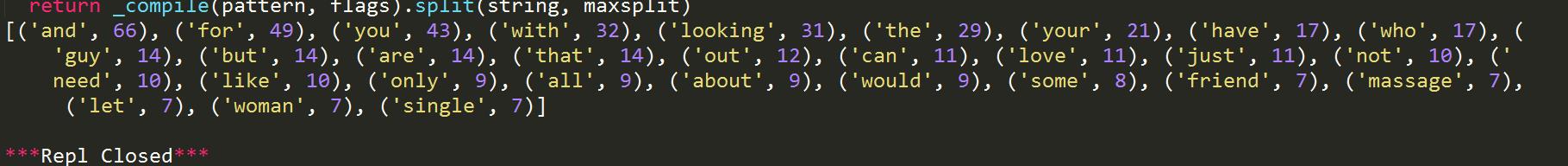
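
The `textParse` function that produces wordList lives in the author's `bayes` module and is not shown in this post. A minimal sketch of what such a tokenizer might look like, assuming it splits on non-word characters, lowercases, and drops very short tokens (the name `text_parse_sketch` and the sample sentence are hypothetical):

```python
import re

def text_parse_sketch(big_string):
    """Hypothetical tokenizer: an assumption about what textParse does."""
    # Split on runs of non-word characters (punctuation, whitespace).
    tokens = re.split(r'\W+', big_string)
    # Lowercase and keep only tokens longer than two characters.
    return [tok.lower() for tok in tokens if len(tok) > 2]

word_list = text_parse_sketch('Looking for a hiking partner in SF!')
print(word_list)
```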

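The following function picks out the 30 most frequently used words:

    import operator

    def calMostFreq(vocabList, fulltext):
        """Return the 30 most frequent words as (word, count) pairs."""
        freqDict = {}
        for token in vocabList:
            freqDict[token] = fulltext.count(token)
        # Sort by the second element of each pair (index 1, counting from 0), i.e. the count
        sortedFreq = sorted(freqDict.items(), key=operator.itemgetter(1), reverse=True)
        return sortedFreq[:30]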
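
Calling `fulltext.count(token)` once per vocabulary word rescans the whole word list each time. As a possible alternative (not the author's code), the same ranking can be sketched with `collections.Counter`, which counts everything in a single pass. The function name `most_frequent`, the parameter `n`, and the sample data are hypothetical.

```python
from collections import Counter

def most_frequent(vocab_list, full_text, n=30):
    """Return the n most frequent vocabulary words as (word, count) pairs."""
    counts = Counter(full_text)  # one pass over all words
    # Rank only words that appear in the vocabulary, as calMostFreq does.
    ranked = sorted(((w, counts[w]) for w in vocab_list),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:n]

full_text = ['the', 'cat', 'the', 'dog', 'the', 'cat']
vocab = ['cat', 'dog', 'the']
top_two = most_frequent(vocab, full_text, n=2)
print(top_two)
```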

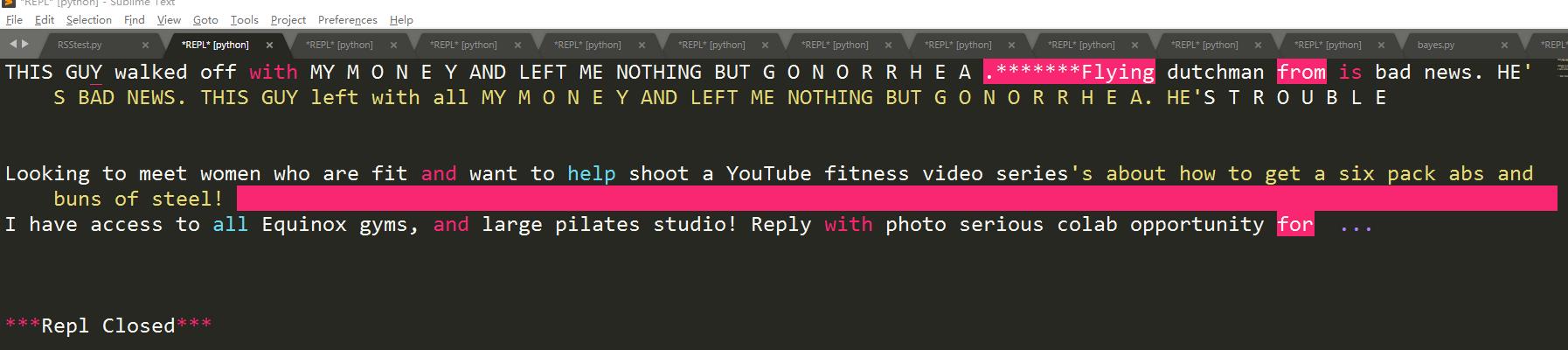

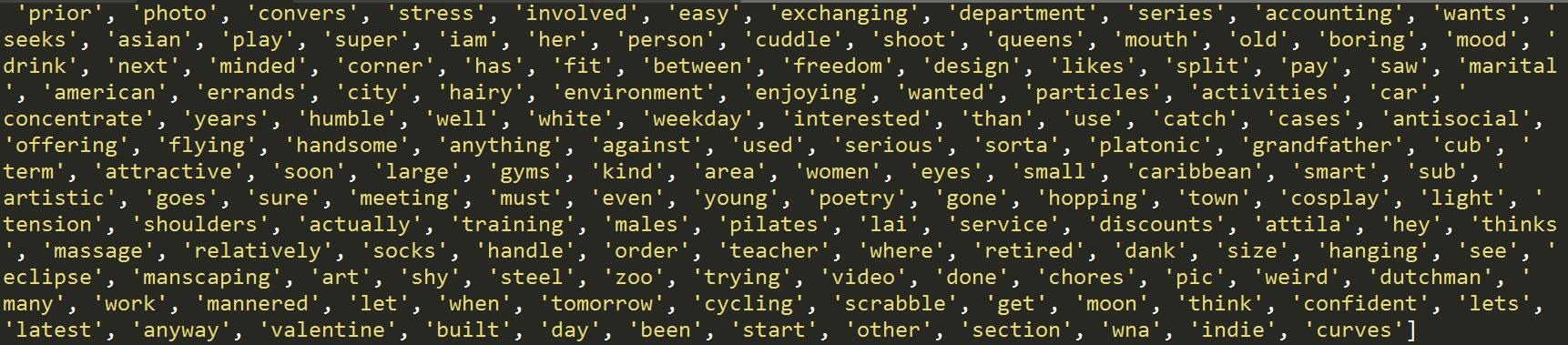

This is the vocabulary once those 30 words have been removed.

Some of these words are quite distinctive at a glance, "cosplay" for example.

For sorted(key=lambda), this post goes into detail: https://www.cnblogs.com/zle1992/p/6271105.html
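
As a small illustration of the two sort-key styles used in this post, the snippet below sorts (word, score) pairs by their second element, once with a lambda and once with `operator.itemgetter`. The sample pairs are made up.

```python
import operator

# Made-up (word, log-probability) pairs for illustration.
pairs = [('love', -5.2), ('meet', -4.1), ('park', -5.9)]

# key receives each pair and returns the value to sort on (index 1).
by_lambda = sorted(pairs, key=lambda pair: pair[1], reverse=True)

# operator.itemgetter(1) builds an equivalent key function.
by_itemgetter = sorted(pairs, key=operator.itemgetter(1), reverse=True)

print(by_lambda)
print(by_lambda == by_itemgetter)
```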

Full code:

    import operator
    import random
    from math import e, log

    import feedparser
    from numpy import array

    from bayes import *  # textParse, createVocabList, bagOfWordsVec, trainNB0, classifyNB


    def calMostFreq(vocabList, fulltext):
        """Return the 30 most frequent words as (word, count) pairs."""
        freqDict = {}
        for token in vocabList:
            freqDict[token] = fulltext.count(token)
        # Sort by the second element of each pair (index 1, counting from 0), i.e. the count
        sortedFreq = sorted(freqDict.items(), key=operator.itemgetter(1), reverse=True)
        return sortedFreq[:30]


    def localWords(feed1, feed0):
        docList = []
        classList = []
        fulltext = []
        minLen = min(len(feed1['entries']), len(feed0['entries']))
        for i in range(minLen):
            wordList = textParse(feed1['entries'][i]['summary'])
            docList.append(wordList)    # kept as a separate document
            fulltext.extend(wordList)   # merged into one flat word list
            classList.append(1)         # class label
            wordList = textParse(feed0['entries'][i]['summary'])
            docList.append(wordList)    # kept as a separate document
            fulltext.extend(wordList)   # merged into one flat word list
            classList.append(0)         # class label
        vocabList = createVocabList(docList)  # list of unique words
        top30words = calMostFreq(vocabList, fulltext)
        for mp in top30words:
            if mp[0] in vocabList:
                vocabList.remove(mp[0])
        # Hold-out cross-validation: split the documents into a training set and a test set
        trainingSet = list(range(minLen * 2))  # total number of summaries
        print(trainingSet)
        testSet = []
        for i in range(20):
            randIndex = int(random.uniform(0, len(trainingSet)))
            testSet.append(trainingSet[randIndex])  # keep the document index, not the list position
            del trainingSet[randIndex]
        trainMat = []
        trainClasses = []
        for docIndex in trainingSet:  # training
            trainMat.append(bagOfWordsVec(vocabList, docList[docIndex]))
            trainClasses.append(classList[docIndex])
        p0V, p1V, pSpam = trainNB0(array(trainMat), array(trainClasses))
        numOError = 0
        for docIndex in testSet:
            wordVector = bagOfWordsVec(vocabList, docList[docIndex])
            if classifyNB(array(wordVector), p0V, p1V, pSpam) != classList[docIndex]:
                numOError += 1
                print("the error text %s" % docList[docIndex])
        print("error rate: %f " % (float(numOError) / len(testSet)))
        return vocabList, p0V, p1V


    def getTopWords(ny, sf):
        vocabList, p0V, p1V = localWords(ny, sf)
        topNY = []
        topSF = []
        for i in range(len(p0V)):
            # A log-probability of -6.0 corresponds to exp(-6.0), roughly 0.25%
            if p0V[i] > -6.0:
                topSF.append((vocabList[i], p0V[i]))
            if p1V[i] > -6.0:
                topNY.append((vocabList[i], p1V[i]))
        sortedSF = sorted(topSF, key=lambda pair: pair[1], reverse=True)  # sort by the second element
        print("SF*SF*SF*SF*SF*SF")
        for item in sortedSF:
            print(item)
        sortedNY = sorted(topNY, key=lambda pair: pair[1], reverse=True)  # sort by the second element
        print("NY*NY*NY*NY*NY")
        for item in sortedNY:
            print(item)


    def main():
        print(log(e))  # sanity check: prints 1.0
        ny = feedparser.parse('http://newyork.craigslist.org/stp/index.rss')
        sf = feedparser.parse('http://sfbay.craigslist.org/stp/index.rss')
        getTopWords(ny, sf)


    if __name__ == '__main__':
        main()
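
One detail of the hold-out split in localWords deserves care: after the first deletion from trainingSet, a position in the list no longer equals the document index stored there, so the test set should record the value at that position rather than the position itself. A standalone sketch of such a split follows; the function name `holdout_split`, its parameters, and the seed are hypothetical and only serve the illustration.

```python
import random

def holdout_split(num_docs, num_test, seed=None):
    """Randomly move num_test document indices from the training set to the test set."""
    rng = random.Random(seed)
    training = list(range(num_docs))
    test = []
    # Guard against asking for more test documents than exist.
    for _ in range(min(num_test, len(training))):
        pos = rng.randrange(len(training))  # position inside the shrinking list
        test.append(training[pos])          # store the document index, not the position
        del training[pos]
    return training, test

training, test = holdout_split(num_docs=50, num_test=20, seed=0)
print(len(training), len(test))
```

Because every index is removed from the training list at the moment it is added to the test list, the two sets never overlap.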
