Docker in Practice -- Cross-Host Communication with Open vSwitch


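Docker is currently the most popular container technology, but its network implementation and management still have shortcomings. Early Docker versions relied on Linux Bridge for networking and could only create a single network interface inside a container. As the docker/libnetwork project progressed, Docker's network management capabilities gradually grew. Even so, cross-host communication remains a problem Docker has to address, and it matters especially for container management systems such as Kubernetes. The mainstream solutions today include flannel, weave, Pipework, and Open vSwitch.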

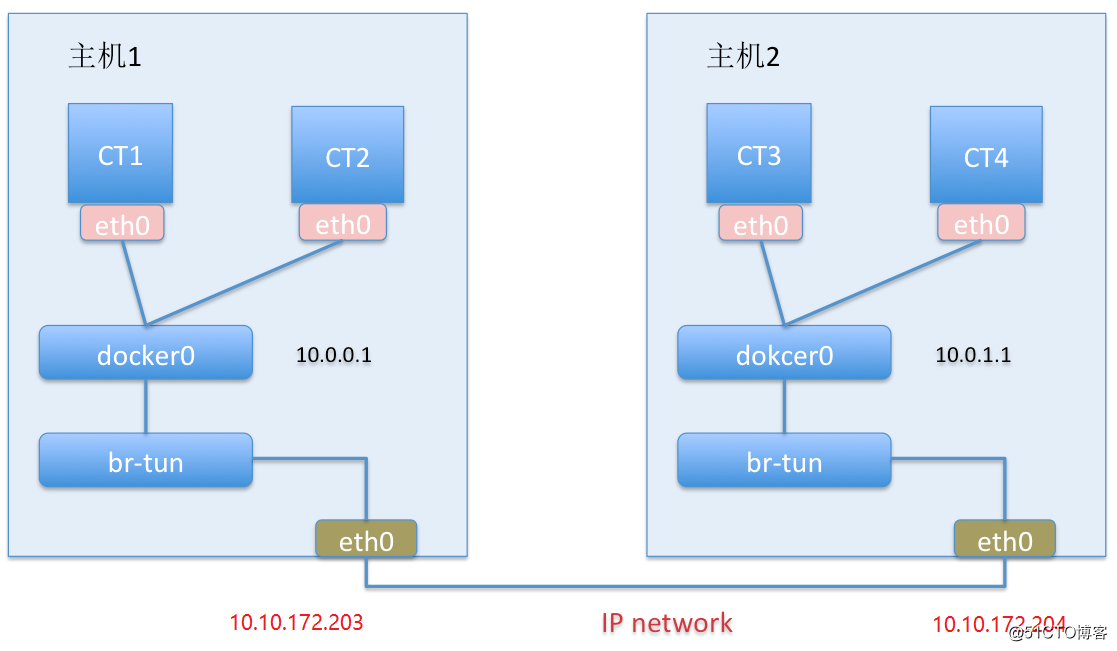

Open vSwitch实现比较简单,成熟且功能强大,所以很适合作为解决docker底层网络互联互通的工具。言归正传,如下是基本架构图:

具体的实现步骤如下:

1. Install docker, bridge-utils, and openvswitch

Run the following as root (`#`); the Open vSwitch RPM itself is built as the unprivileged user ovswitch (`$`):

    # yum install docker bridge-utils -y
    # yum install wget openssl-devel -y
    # yum groupinstall "Development Tools"
    # adduser ovswitch
    # su - ovswitch
    $ wget http://openvswitch.org/releases/openvswitch-2.3.0.tar.gz
    $ tar -zxvpf openvswitch-2.3.0.tar.gz
    $ mkdir -p ~/rpmbuild/SOURCES
    $ sed 's/openvswitch-kmod, //g' openvswitch-2.3.0/rhel/openvswitch.spec > openvswitch-2.3.0/rhel/openvswitch_no_kmod.spec
    $ cp openvswitch-2.3.0.tar.gz rpmbuild/SOURCES/
    $ rpmbuild -bb --without check ~/openvswitch-2.3.0/rhel/openvswitch_no_kmod.spec
    $ exit
    # yum localinstall /home/ovswitch/rpmbuild/RPMS/x86_64/openvswitch-2.3.0-1.x86_64.rpm -y
    # mkdir /etc/openvswitch
    # setenforce 0

2. On dockerserver1, edit /usr/lib/systemd/system/docker.service and add the Docker startup option "--bip=10.0.0.1/24"

The resulting file:

    # cat /usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=http://docs.docker.com
    After=network.target rhel-push-plugin.socket registries.service
    Wants=docker-storage-setup.service
    Requires=docker-cleanup.timer

    [Service]
    Type=notify
    NotifyAccess=all
    EnvironmentFile=-/run/containers/registries.conf
    EnvironmentFile=-/etc/sysconfig/docker
    EnvironmentFile=-/etc/sysconfig/docker-storage
    EnvironmentFile=-/etc/sysconfig/docker-network
    Environment=GOTRACEBACK=crash
    Environment=DOCKER_HTTP_HOST_COMPAT=1
    Environment=PATH=/usr/libexec/docker:/usr/bin:/usr/sbin
    ExecStart=/usr/bin/dockerd-current \
              --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current \
              --default-runtime=docker-runc \
              --exec-opt native.cgroupdriver=systemd \
              --userland-proxy-path=/usr/libexec/docker/docker-proxy-current \
              --bip=10.0.0.1/24 \
              $OPTIONS \
              $DOCKER_STORAGE_OPTIONS \
              $DOCKER_NETWORK_OPTIONS \
              $ADD_REGISTRY \
              $BLOCK_REGISTRY \
              $INSECURE_REGISTRY \
              $REGISTRIES
    ExecReload=/bin/kill -s HUP $MAINPID
    LimitNOFILE=1048576
    LimitNPROC=1048576
    LimitCORE=infinity
    TimeoutStartSec=0
    Restart=on-abnormal
    MountFlags=slave
    KillMode=process

    [Install]
    WantedBy=multi-user.target

3. On dockerserver2, edit /usr/lib/systemd/system/docker.service and add the Docker startup option "--bip=10.0.1.1/24"

The resulting file:

    # cat /usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=http://docs.docker.com
    After=network.target rhel-push-plugin.socket registries.service
    Wants=docker-storage-setup.service
    Requires=docker-cleanup.timer

    [Service]
    Type=notify
    NotifyAccess=all
    EnvironmentFile=-/run/containers/registries.conf
    EnvironmentFile=-/etc/sysconfig/docker
    EnvironmentFile=-/etc/sysconfig/docker-storage
    EnvironmentFile=-/etc/sysconfig/docker-network
    Environment=GOTRACEBACK=crash
    Environment=DOCKER_HTTP_HOST_COMPAT=1
    Environment=PATH=/usr/libexec/docker:/usr/bin:/usr/sbin
    ExecStart=/usr/bin/dockerd-current \
              --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current \
              --default-runtime=docker-runc \
              --exec-opt native.cgroupdriver=systemd \
              --userland-proxy-path=/usr/libexec/docker/docker-proxy-current \
              --bip=10.0.1.1/24 \
              $OPTIONS \
              $DOCKER_STORAGE_OPTIONS \
              $DOCKER_NETWORK_OPTIONS \
              $ADD_REGISTRY \
              $BLOCK_REGISTRY \
              $INSECURE_REGISTRY \
              $REGISTRIES
    ExecReload=/bin/kill -s HUP $MAINPID
    LimitNOFILE=1048576
    LimitNPROC=1048576
    LimitCORE=infinity
    TimeoutStartSec=0
    Restart=on-abnormal
    MountFlags=slave
    KillMode=process

    [Install]
    WantedBy=multi-user.target
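The unit file shown in this article already loads /etc/sysconfig/docker-network and passes `$DOCKER_NETWORK_OPTIONS` to the daemon, so one possible alternative to editing the file under /usr/lib/systemd/system is to set the option there instead. The following is only a sketch under that assumption: `render_docker_network_opts` is a hypothetical helper, it assumes the sysconfig file carries no other `DOCKER_NETWORK_OPTIONS` setting, and it is meant to replace the `ExecStart` edit rather than be combined with it.

```shell
# Print the line that would go into /etc/sysconfig/docker-network.
# render_docker_network_opts is a hypothetical helper, not part of Docker.
render_docker_network_opts() {
    local bip="$1"
    printf 'DOCKER_NETWORK_OPTIONS="--bip=%s"\n' "$bip"
}

# dockerserver1
render_docker_network_opts 10.0.0.1/24
# dockerserver2
render_docker_network_opts 10.0.1.1/24
```

The helper only prints text; writing it to the file (for example by redirecting the output as root) is left as an explicit manual step so that an existing setting is not overwritten by accident.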

4. Start the docker service on both hosts

On both hosts:

    # systemctl start docker
    # systemctl enable docker

5. Once the docker service is running, a new bridge named docker0 has been created and assigned the IP address configured earlier

On dockerserver1:

    # brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.02427076111e       no
    # ifconfig docker0
    docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 10.0.0.1  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 02:42:70:76:11:1e  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

On dockerserver2:

    # brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242ba00c394       no
    # ifconfig docker0
    docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            inet 10.0.1.1  netmask 255.255.255.0  broadcast 0.0.0.0
            ether 02:42:ba:00:c3:94  txqueuelen 0  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
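The same check can be scripted with `ip` from iproute2 instead of reading `ifconfig` output by eye. This is a minimal sketch: `iface_cidr` is a hypothetical helper, and the sample line is an assumed rendering of what `ip -o -4 addr show dev docker0` would print on dockerserver1, not captured output.

```shell
# Extract the IPv4 CIDR from `ip -o -4 addr show dev <iface>` output on stdin.
# iface_cidr is a hypothetical helper written for this example.
iface_cidr() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "inet") { print $(i + 1); exit } }'
}

# Assumed sample line for docker0 on dockerserver1.
sample='3: docker0    inet 10.0.0.1/24 scope global docker0\       valid_lft forever preferred_lft forever'
printf '%s\n' "$sample" | iface_cidr
```

On a live host the helper would be fed directly, as in `ip -o -4 addr show dev docker0 | iface_cidr`, and the result compared with the `--bip` value configured for that host.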

6. Start openvswitch on both hosts

On both hosts:

    # systemctl start openvswitch
    # chkconfig openvswitch on

7. On both hosts, create the tunnel bridge br-tun and set up a tunnel over VXLAN; each side's remote_ip is the other host's address

On dockerserver1:

    # ovs-vsctl add-br br-tun
    # ovs-vsctl add-port br-tun vxlan0 -- set Interface vxlan0 type=vxlan options:remote_ip=10.10.172.204
    # brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.02427076111e       no              br-tun

On dockerserver2:

    # ovs-vsctl add-br br-tun
    # ovs-vsctl add-port br-tun vxlan0 -- set Interface vxlan0 type=vxlan options:remote_ip=10.10.172.203
    # brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242ba00c394       no              br-tun
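Because the two hosts differ only in the peer address, the pairing is easy to get backwards. The sketch below prints the commands for one host so they can be reviewed before running. `vxlan_commands` is a hypothetical helper; the names br-tun and vxlan0 follow the article, and `--may-exist` is added here (it is not in the original commands) so that a second run does not fail on an existing bridge or port.

```shell
# Print (not run) the ovs-vsctl commands for one host of the pair.
# vxlan_commands is a hypothetical helper; $1 is the peer host's IP address.
vxlan_commands() {
    local remote_ip="$1"
    echo "ovs-vsctl --may-exist add-br br-tun"
    echo "ovs-vsctl --may-exist add-port br-tun vxlan0 -- set Interface vxlan0 type=vxlan options:remote_ip=${remote_ip}"
}

# dockerserver1 (10.10.172.203) points at dockerserver2, and vice versa.
vxlan_commands 10.10.172.204
vxlan_commands 10.10.172.203
```

After applying the commands, `ovs-vsctl show` lists the bridge, the port, and its `remote_ip` option, which is a quick way to confirm that each host points at the other.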

8. Add br-tun to the docker0 bridge as an interface

On both hosts:

    # brctl addif docker0 br-tun

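9. Because the containers on the two hosts sit in different subnets, each host needs a route to the other host's container subnet before the containers can reach each other

On dockerserver1:

    # ip route add 10.0.1.0/24 via 10.10.172.204 dev eth0

On dockerserver2:

    # ip route add 10.0.0.0/24 via 10.10.172.203 dev eth0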
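The destination of each route is the network address of the peer's `--bip` value, and the gateway is the peer's eth0 address. As a sketch of that arithmetic, the hypothetical helpers below derive the network from a bip and print the matching `ip route add` command; the default device name eth0 is taken from the article and is an assumption on other systems.

```shell
# Derive the network address (e.g. 10.0.1.0/24) from a --bip value (e.g. 10.0.1.1/24).
# bip_to_network and route_command are hypothetical helpers written for this example.
bip_to_network() {
    local ip="${1%/*}" prefix="${1#*/}"
    local a b c d rest
    a="${ip%%.*}"; rest="${ip#*.}"
    b="${rest%%.*}"; rest="${rest#*.}"
    c="${rest%%.*}"; d="${rest#*.}"
    local addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    local mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    local net=$(( addr & mask ))
    printf '%d.%d.%d.%d/%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 )) $(( net & 255 )) "$prefix"
}

# Print (not run) the static route a host needs toward its peer's container subnet.
route_command() {
    local peer_bip="$1" peer_host_ip="$2" dev="${3:-eth0}"
    echo "ip route add $(bip_to_network "$peer_bip") via ${peer_host_ip} dev ${dev}"
}

route_command 10.0.1.1/24 10.10.172.204   # for dockerserver1
route_command 10.0.0.1/24 10.10.172.203   # for dockerserver2
```

Routes added with `ip route add` do not survive a reboot, so a persistent setup would need them in the distribution's network configuration as well.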

10. Start one docker container on each host and verify that they can reach each other

On dockerserver1, start a container and check its address (the commands after `docker run` execute inside the container):

    # docker run --rm -it centos /bin/bash
    # yum install net-tools -y
    # ifconfig eth0
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 10.0.0.2  netmask 255.255.255.0  broadcast 0.0.0.0
            inet6 fe80::42:aff:fe00:2  prefixlen 64  scopeid 0x20<link>
            ether 02:42:0a:00:00:02  txqueuelen 0  (Ethernet)
            RX packets 4266  bytes 13337782 (12.7 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 4144  bytes 288723 (281.9 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

On dockerserver2, start a container and ping the container on dockerserver1:

    # docker run --rm -it centos /bin/bash
    # yum install net-tools -y
    # ifconfig eth0
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 10.0.1.2  netmask 255.255.255.0  broadcast 0.0.0.0
            inet6 fe80::42:aff:fe00:102  prefixlen 64  scopeid 0x20<link>
            ether 02:42:0a:00:01:02  txqueuelen 0  (Ethernet)
            RX packets 4536  bytes 13344451 (12.7 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 4381  bytes 301685 (294.6 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    # ping 10.0.0.2
    PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
    64 bytes from 10.0.0.2: icmp_seq=1 ttl=62 time=1.68 ms
    ^C
    --- 10.0.0.2 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 0ms
    rtt min/avg/max/mdev = 1.683/1.683/1.683/0.000 ms
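For a repeatable check, the packet-loss figure can be pulled out of the `ping` summary instead of being read manually. The sketch below uses a hypothetical helper, `ping_loss_percent`, and assumes the summary line has the same shape as the one printed by the ping run in this article.

```shell
# Read `ping` output on stdin and print the packet-loss percentage.
# ping_loss_percent is a hypothetical helper written for this example.
ping_loss_percent() {
    sed -n 's/.* \([0-9][0-9.]*\)% packet loss.*/\1/p'
}

# Summary line as printed by the ping run in this article.
summary='1 packets transmitted, 1 received, 0% packet loss, time 0ms'
printf '%s\n' "$summary" | ping_loss_percent
```

Inside the container on dockerserver2 this could be used as `ping -c 3 10.0.0.2 | ping_loss_percent`, treating any value other than 0 as a failed check.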

11. Check the network interface information on both docker hosts

On dockerserver1:

    # ip addr list
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000
        link/ether 00:50:56:86:3e:d8 brd ff:ff:ff:ff:ff:ff
        inet 10.10.172.203/24 brd 10.10.172.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::250:56ff:fe86:3ed8/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
        link/ether 02:42:70:76:11:1e brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.1/24 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:70ff:fe76:111e/64 scope link
           valid_lft forever preferred_lft forever
    4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether 06:19:20:ae:f6:61 brd ff:ff:ff:ff:ff:ff
    5: br-tun: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master docker0 state DOWN
        link/ether 42:2c:39:7f:a2:4a brd ff:ff:ff:ff:ff:ff

On dockerserver2:

    # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000
        link/ether 00:50:56:86:22:d8 brd ff:ff:ff:ff:ff:ff
        inet 10.10.172.204/24 brd 10.10.172.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::250:56ff:fe86:22d8/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
        link/ether 02:42:ba:00:c3:94 brd ff:ff:ff:ff:ff:ff
        inet 10.0.1.1/24 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:baff:fe00:c394/64 scope link
           valid_lft forever preferred_lft forever
    4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether be:d4:64:ee:cb:29 brd ff:ff:ff:ff:ff:ff
    5: br-tun: <BROADCAST,MULTICAST> mtu 1500 qdisc noop master docker0 state DOWN
        link/ether 6e:3d:3e:1a:6a:4e brd ff:ff:ff:ff:ff:ff
