Hyperledger Fabric 1.0.5 distributed deployment

Posted by chenfool



Environment: two Ubuntu 16.04 machines

Role list

| Role | IP address | Host port | Container port |
|---|---|---|---|
| peer0.org1.example.com | 47.93.249.250 | 7051 | 7051 |
| peer1.org1.example.com | 47.93.249.250 | 8051 | 7051 |
| peer0.org2.example.com | 47.93.249.250 | 9051 | 7051 |
| peer1.org2.example.com | 47.93.249.250 | 10051 | 7051 |
| cli | 47.93.249.250 | N/A | N/A |
| orderer.example.com | 47.94.244.156 | 7050 | 7050 |
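If later scripts should not hard-code addresses, the role list can also be kept as a small lookup. The following is a minimal bash sketch. The helper names role_endpoint and list_roles are hypothetical. The IPs come from the role list, and the peer ports are the ones the cli container later uses in setGlobals (scripts/script.sh).

```shell
#!/usr/bin/env bash
# Hypothetical lookup for the role list: prints "ip:port" for a role name.
# Peer ports are the host-side ports the cli later uses in setGlobals.
role_endpoint() {
  case "$1" in
    peer0.org1.example.com) echo "47.93.249.250:7051" ;;
    peer1.org1.example.com) echo "47.93.249.250:8051" ;;
    peer0.org2.example.com) echo "47.93.249.250:9051" ;;
    peer1.org2.example.com) echo "47.93.249.250:10051" ;;
    orderer.example.com)    echo "47.94.244.156:7050" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Print every role with its endpoint, one per line.
list_roles() {
  local r
  for r in peer0.org1.example.com peer1.org1.example.com \
           peer0.org2.example.com peer1.org2.example.com \
           orderer.example.com; do
    printf '%s %s\n' "$r" "$(role_endpoint "$r")"
  done
}
```

For example, role_endpoint peer1.org2.example.com prints 47.93.249.250:10051. An unknown name writes an error to stderr and returns 1.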

- Environment initialization

To set up the Fabric environment on both machines, follow the author's earlier article http://www.cnblogs.com/chenfool/p/8353425.html. Make sure the e2e_cli test program runs successfully on both machines.

After the e2e_cli test has finished, be sure to clean up the environment:

./network_setup.sh down mychannel

- Distributed deployment (1)

Following the role list, start on 47.94.244.156 (the orderer machine). Change to /opt/gopath/src/github.com/hyperledger/fabric/examples/e2e_cli and make a copy of the docker-compose configuration file:

cp docker-compose-cli.yaml docker-compose-test.yaml

Edit docker-compose-test.yaml so that it contains the following. Only the orderer service is kept on this machine:

```yaml
# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  orderer.example.com:
    extends:
      file: base/docker-compose-base.yaml
      service: orderer.example.com
    container_name: orderer.example.com
```

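Run the following commands to generate the key pairs, certificates, genesis block, and other artifacts. They create the channel-artifacts and crypto-config directories locally.

Note: make sure the channel-artifacts directory already exists before you run generateArtifacts.sh. If it does not, the script may fail.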

chmod 755 generateArtifacts.sh

./generateArtifacts.sh mychannel
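generateArtifacts.sh may fail when channel-artifacts is missing, so a small wrapper can create that directory first. This is only a sketch. prepare_artifacts_dir and run_generate are hypothetical helpers and are not part of the e2e_cli example.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around e2e_cli's generateArtifacts.sh.

# Create the output directory if needed; mkdir -p does nothing if it exists.
prepare_artifacts_dir() {
  local dir="${1:-channel-artifacts}"
  mkdir -p "$dir" && [ -d "$dir" ]
}

# Run from the e2e_cli directory: prepare the directory, then generate the
# keys, certificates and genesis block for the given channel.
run_generate() {
  local channel="${1:-mychannel}"
  prepare_artifacts_dir channel-artifacts || return 1
  chmod 755 generateArtifacts.sh || return 1
  ./generateArtifacts.sh "$channel"
}
```

On 47.94.244.156, run_generate mychannel would replace the two commands above. The mkdir -p call makes it safe to run repeatedly.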

Copy the channel-artifacts and crypto-config directories to the same path on 47.93.249.250, so that both machines use the same certificates and genesis block:

scp -r crypto-config root@47.93.249.250:/opt/gopath/src/github.com/hyperledger/fabric/examples/e2e_cli

scp -r channel-artifacts root@47.93.249.250:/opt/gopath/src/github.com/hyperledger/fabric/examples/e2e_cli
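The two copies can be wrapped in one helper that first checks that both directories were actually generated. This is a sketch under stated assumptions. sync_artifacts and the SCP_CMD override are hypothetical. SCP_CMD defaults to scp and can be set to echo for a dry run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy both generated directories to the peer machine.
# SCP_CMD (default: scp) can be set to "echo" to print the commands instead.
E2E_DIR=/opt/gopath/src/github.com/hyperledger/fabric/examples/e2e_cli

sync_artifacts() {
  local target="$1"                 # e.g. root@47.93.249.250
  local dest_dir="${2:-$E2E_DIR}"
  local scp_cmd="${SCP_CMD:-scp}"
  local d
  [ -n "$target" ] || { echo "usage: sync_artifacts user@host [dir]" >&2; return 1; }
  for d in crypto-config channel-artifacts; do
    if [ ! -d "$d" ]; then
      echo "missing directory: $d (run generateArtifacts.sh first)" >&2
      return 1
    fi
    # scp_cmd is left unquoted on purpose so an override like "echo" works
    $scp_cmd -r "$d" "$target:$dest_dir" || return 1
  done
}
```

Run it from the e2e_cli directory, for example as sync_artifacts root@47.93.249.250. Prefix the command with SCP_CMD=echo to see what would be copied.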

Start the Docker containers:

docker-compose -f docker-compose-test.yaml up -d

Once the containers are running, you can list them with:

docker ps
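Reading the docker ps table by eye is easy to get wrong, so the expected containers can be checked by name instead. A minimal sketch follows. check_containers is a hypothetical helper. It reads container names, one per line, on stdin, in the form printed by docker ps --format '{{.Names}}'.

```shell
#!/usr/bin/env bash
# Hypothetical check: every name passed as an argument must appear (as a
# whole line) in the container names read from stdin.
check_containers() {
  local names missing=0 want
  names="$(cat)"
  for want in "$@"; do
    if ! printf '%s\n' "$names" | grep -qxF -- "$want"; then
      echo "not running: $want" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On 47.94.244.156 this could be run as docker ps --format '{{.Names}}' | check_containers orderer.example.com. The same helper works later on 47.93.249.250 with the four peer names and cli.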

- Distributed deployment (2)

Now, on 47.93.249.250, change to /opt/gopath/src/github.com/hyperledger/fabric/examples/e2e_cli and likewise make a copy of the docker-compose configuration file:

cp docker-compose-cli.yaml docker-compose-test.yaml

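Edit docker-compose-test.yaml so that it contains the following. The extra_hosts entries map orderer.example.com to 47.94.244.156 and the peer hostnames to 47.93.249.250: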

```yaml
# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  peer0.org1.example.com:
    container_name: peer0.org1.example.com
    extends:
      file: base/docker-compose-base.yaml
      service: peer0.org1.example.com
    extra_hosts:
      - "orderer.example.com:47.94.244.156"

  peer1.org1.example.com:
    container_name: peer1.org1.example.com
    extends:
      file: base/docker-compose-base.yaml
      service: peer1.org1.example.com
    extra_hosts:
      - "orderer.example.com:47.94.244.156"
      - "peer0.org1.example.com:47.93.249.250"

  peer0.org2.example.com:
    container_name: peer0.org2.example.com
    extends:
      file: base/docker-compose-base.yaml
      service: peer0.org2.example.com
    extra_hosts:
      - "orderer.example.com:47.94.244.156"

  peer1.org2.example.com:
    container_name: peer1.org2.example.com
    extends:
      file: base/docker-compose-base.yaml
      service: peer1.org2.example.com
    extra_hosts:
      - "orderer.example.com:47.94.244.156"
      - "peer0.org2.example.com:47.93.249.250"

  cli:
    container_name: cli
    image: hyperledger/fabric-tools
    tty: true
    environment:
      - GOPATH=/opt/gopath
      - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
      - CORE_LOGGING_LEVEL=DEBUG
      - CORE_PEER_ID=cli
      - CORE_PEER_ADDRESS=peer0.org1.example.com:7051
      - CORE_PEER_LOCALMSPID=Org1MSP
      - CORE_PEER_TLS_ENABLED=true
      - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.crt
      - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/server.key
      - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt
      - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/users/Admin@org1.example.com/msp
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
    #command: /bin/bash -c './scripts/script.sh ${CHANNEL_NAME}; sleep $TIMEOUT'
    volumes:
      - /var/run/:/host/var/run/
      - ../chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/examples/chaincode/go
      - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
      - ./scripts:/opt/gopath/src/github.com/hyperledger/fabric/peer/scripts/
      - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
    depends_on:
      - peer0.org1.example.com
      - peer1.org1.example.com
      - peer0.org2.example.com
      - peer1.org2.example.com
    extra_hosts:
      - "orderer.example.com:47.94.244.156"
      - "peer0.org1.example.com:47.93.249.250"
      - "peer1.org1.example.com:47.93.249.250"
      - "peer0.org2.example.com:47.93.249.250"
      - "peer1.org2.example.com:47.93.249.250"
```
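The extra_hosts lists above all follow one pattern: each hostname a container must reach is mapped to the IP of the machine that runs it. When you add machines, a small generator can reduce typos. This is a sketch only. print_extra_hosts is a hypothetical helper, and its output is meant to be pasted into a service block of docker-compose-test.yaml by hand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "hostname=ip" pairs into docker-compose
# extra_hosts lines, indented for a service block.
print_extra_hosts() {
  local pair host ip
  echo "    extra_hosts:"
  for pair in "$@"; do
    host="${pair%%=*}"
    ip="${pair#*=}"
    # reject entries without "=" or with an empty host or IP
    if [ -z "$host" ] || [ "$host" = "$pair" ] || [ -z "$ip" ]; then
      echo "bad entry: $pair (expected host=ip)" >&2
      return 1
    fi
    printf '      - "%s:%s"\n' "$host" "$ip"
  done
}
```

For example, print_extra_hosts orderer.example.com=47.94.244.156 peer0.org1.example.com=47.93.249.250 prints the extra_hosts block used for peer1.org1.example.com.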

Start the Docker services on this machine:

docker-compose -f docker-compose-test.yaml up -d

docker 进程启动后,读者可以通过以下命令查看正在运行的进程

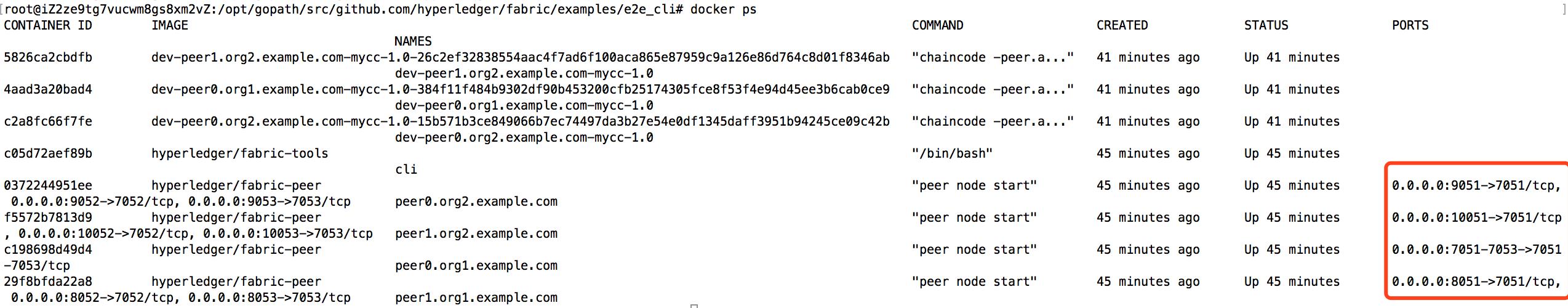

docker ps

此时屏幕上将输出的类似如下信息

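Note how the host ports mapped for each service differ. The cli container resolves the peer hostnames to 47.93.249.250 through extra_hosts, so it has to connect on these host-side ports. Update the port for each service in the setGlobals function of scripts/script.sh (around line 32):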

vi scripts/script.sh

```shell
setGlobals () {
    # $1: 0-1 = peers of Org1, 2-3 = peers of Org2. The ports are the
    # host-side ports of each peer on 47.93.249.250.
    if [ $1 -eq 0 -o $1 -eq 1 ] ; then
        CORE_PEER_LOCALMSPID="Org1MSP"
        CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/peers/peer0.org1.example.com/tls/ca.crt
        CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org1.example.com/users/Admin@org1.example.com/msp
        if [ $1 -eq 0 ]; then
            CORE_PEER_ADDRESS=peer0.org1.example.com:7051
        else
            CORE_PEER_ADDRESS=peer1.org1.example.com:8051
        fi
    else
        CORE_PEER_LOCALMSPID="Org2MSP"
        CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/peers/peer0.org2.example.com/tls/ca.crt
        CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/org2.example.com/users/Admin@org2.example.com/msp
        if [ $1 -eq 2 ]; then
            CORE_PEER_ADDRESS=peer0.org2.example.com:9051
        else
            CORE_PEER_ADDRESS=peer1.org2.example.com:10051
        fi
    fi

    env |grep CORE
}
```
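The three port changes can also be scripted with sed instead of edited by hand in vi. This is a sketch. It assumes the unmodified e2e_cli script.sh uses :7051 for every peer address, as the peer0.org1 line above still does. patch_peer_ports is a hypothetical helper that keeps a .bak copy of the original.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the peer ports in setGlobals to the host-side
# ports of this deployment. Assumes every peer starts out on :7051.
# The original file is kept as <file>.bak.
patch_peer_ports() {
  local f="${1:-scripts/script.sh}"
  [ -f "$f" ] || { echo "no such file: $f" >&2; return 1; }
  cp "$f" "$f.bak" || return 1
  # Writing through the redirect keeps the file's permissions (execute bit).
  sed -e 's/peer1\.org1\.example\.com:7051/peer1.org1.example.com:8051/' \
      -e 's/peer0\.org2\.example\.com:7051/peer0.org2.example.com:9051/' \
      -e 's/peer1\.org2\.example\.com:7051/peer1.org2.example.com:10051/' \
      "$f.bak" > "$f"
}
```

Run patch_peer_ports scripts/script.sh from the e2e_cli directory, then diff scripts/script.sh.bak scripts/script.sh to review the changes. Running it a second time changes nothing, because the patterns no longer match.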

Upload the updated scripts/script.sh into the cli container:

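Note: c05d72aef89b is the ID of the author's cli container. Yours will differ, and because the container is named cli, docker cp also accepts that name. For details on docker cp, see http://blog.csdn.net/u011596455/article/details/76862271. Once copied into the cli container, script.sh stays there, even when the container is restarted.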

docker cp scripts/script.sh c05d72aef89b:/opt/gopath/src/github.com/hyperledger/fabric/peer/scripts/

In fact, there is no need to copy script.sh from the host into cli. docker-compose-test.yaml already mounts the host's scripts directory, which contains script.sh, into the cli container:

```yaml
volumes:
  - /var/run/:/host/var/run/
  - ../chaincode/go/:/opt/gopath/src/github.com/hyperledger/fabric/examples/chaincode/go
  - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
  - ./scripts:/opt/gopath/src/github.com/hyperledger/fabric/peer/scripts/
  - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
```
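These volumes are bind mounts, so their host-side paths must exist, relative to the e2e_cli directory, before docker-compose up is run. If a source path is missing, Docker creates an empty directory in its place, and cli would then see no certificates or scripts. This is a hypothetical pre-flight check named check_mount_sources. It skips /var/run/, which always exists.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the cli volume sources listed above.
# Pass the e2e_cli directory as $1 (defaults to the current directory).
check_mount_sources() {
  local base="${1:-.}" p missing=0
  for p in ../chaincode/go crypto-config scripts channel-artifacts; do
    if [ ! -d "$base/$p" ]; then
      echo "missing: $base/$p" >&2
      missing=1
    fi
  done
  # the script that is run later inside cli must be in the mounted directory
  if [ ! -f "$base/scripts/script.sh" ]; then
    echo "missing: $base/scripts/script.sh" >&2
    missing=1
  fi
  return "$missing"
}
```

On 47.93.249.250, running check_mount_sources && docker-compose -f docker-compose-test.yaml up -d from the e2e_cli directory starts the services only when everything is in place.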

- Initialize the Fabric cluster

Open a shell in the docker cli container. In this layout, cli runs only on 47.93.249.250, so that is the machine the author used:

docker exec -it cli bash

Then run script.sh:

./scripts/script.sh mychannel

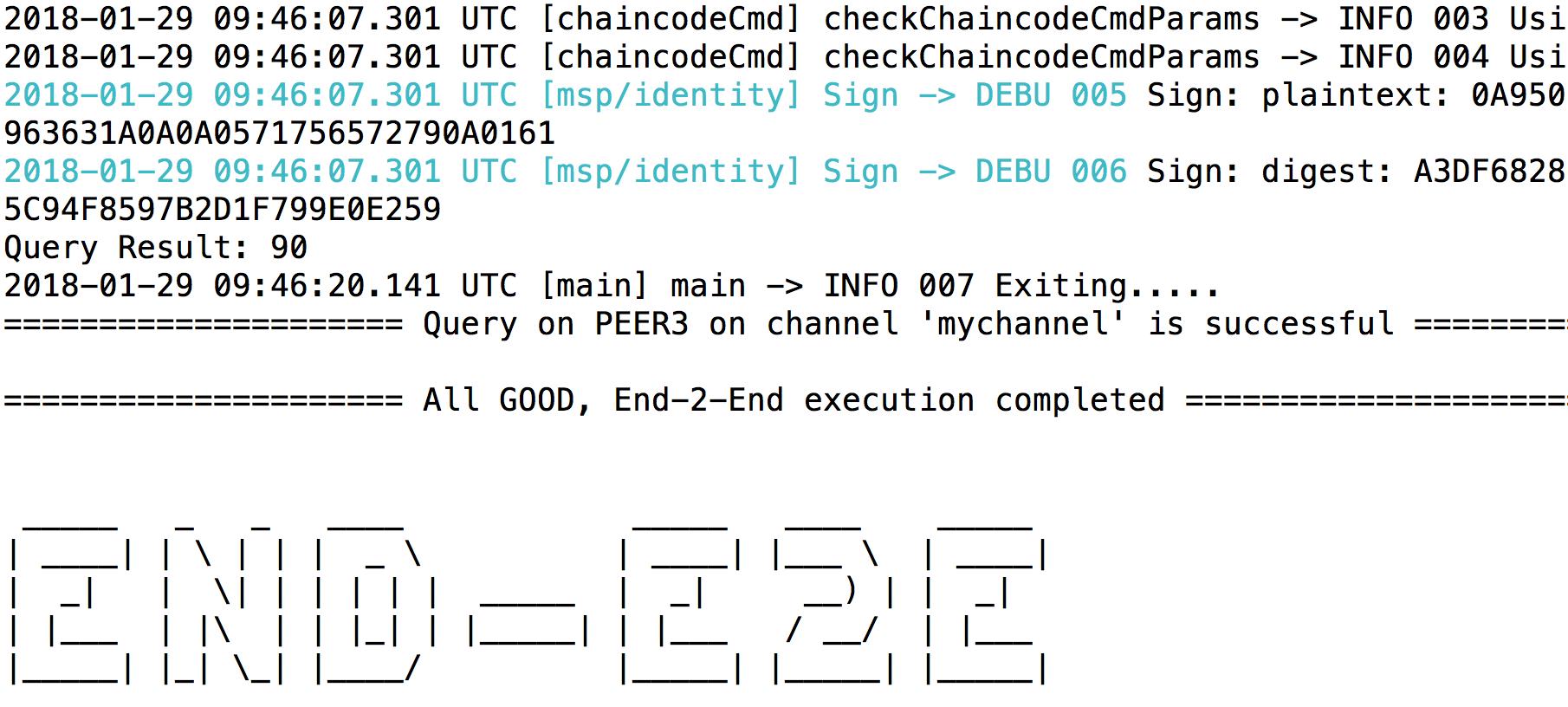

然后集群就开始真正的初始化,等待所有的操作都结束后,将出现如下信息
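The two steps above (opening a shell in cli, then running script.sh) can also be combined into one non-interactive command, which is handy when the deployment itself is scripted. This is a sketch. init_channel and the DOCKER_BIN override are hypothetical. The sketch assumes script.sh exits with a non-zero status when a step fails, because docker exec passes that status through.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run script.sh inside the "cli" container without an
# interactive shell. DOCKER_BIN (default: docker) exists only so the function
# can be tried without Docker.
init_channel() {
  local channel="${1:-mychannel}"
  local docker_bin="${DOCKER_BIN:-docker}"
  # cli's working_dir is .../fabric/peer, so this relative path resolves to
  # the mounted scripts directory.
  if "$docker_bin" exec cli ./scripts/script.sh "$channel"; then
    echo "init ok: $channel"
  else
    echo "init failed: $channel" >&2
    return 1
  fi
}
```

On 47.93.249.250, init_channel mychannel runs the same initialization as the interactive session above.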

At this point the Fabric cluster is deployed. To run Fabric across more machines, configure and deploy them following the same pattern.

References:

http://www.cnblogs.com/aberic/p/7541470.html

http://www.cnblogs.com/aberic/p/7542167.html

http://blog.csdn.net/u011596455/article/details/76862271
