Building and Deploying a Distributed ELK Platform

logstash

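• What is logstash

– logstash is a tool for collecting, processing, and transporting data

• logstash features:

– Centralized processing of all types of data

– Normalization of data in different schemas and formats

– Rapid extension to custom log formats

– Easy addition of plugins for custom data sources

• Installing logstash

– Logstash depends on a Java environment; install java-1.8.0-openjdk

– Logstash has no default configuration file; you must write one yourself

– logstash is installed under the /opt/logstash directory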

# rpm -ivh logstash-2.3.4-1.noarch.rpm

# rpm -qc logstash

/etc/init.d/logstash

/etc/logrotate.d/logstash

/etc/sysconfig/logstash

// List the installed logstash plugins

# /opt/logstash/bin/logstash-plugin list

logstash-codec-collectd

logstash-codec-json

.. ..

logstash-filter-anonymize

logstash-filter-checksum

.. ..

logstash-input-beats

logstash-input-exec

.. ..

logstash-output-cloudwatch

logstash-output-csv

.. ..

logstash-patterns-core

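The first field of each name (logstash) marks it as a logstash plugin.

The second field is the section the plugin runs in (input, filter, output). codec plugins handle encoding and decoding of the data and can be used in any section.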
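The naming convention can be applied mechanically. A minimal Python sketch, assuming every name follows the `logstash-<section>-<name>` shape seen in the list (the function `group_plugins` is hypothetical), groups plugin names by their second field:

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_plugins(names: Iterable[str]) -> Dict[str, List[str]]:
    """Group plugin names of the form logstash-<section>-<name> by section."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for raw in names:
        # Split at most twice so hyphens inside the plugin's own name are kept.
        parts = raw.strip().split("-", 2)
        if len(parts) == 3 and parts[0] == "logstash":
            groups[parts[1]].append(parts[2])
    return dict(groups)


sample = [
    "logstash-codec-collectd",
    "logstash-codec-json",
    "logstash-filter-anonymize",
    "logstash-input-beats",
    "logstash-output-csv",
    "logstash-patterns-core",
]
for section, plugins in sorted(group_plugins(sample).items()):
    print(section, plugins)
```

`logstash-patterns-core` lands under `patterns`: it is a library of grok patterns rather than a pipeline section.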

• logstash processing structure

– { data source } ==>

– input { } ==> // collect logs

– filter { } ==> // process logs and normalize their format

– output { } ==> // ship the logs out

– { ES }
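The three stages can be pictured as a chain that every event passes through. This is a minimal Python sketch of that flow, not logstash's implementation; the event layout and the `add_level` filter are hypothetical:

```python
from typing import Callable, Dict, Iterable, List

Event = Dict[str, str]
Filter = Callable[[Event], Event]


def run_pipeline(source: Iterable[str], filters: List[Filter],
                 sink: Callable[[Event], None]) -> None:
    """input -> filter -> output, one event at a time."""
    for raw in source:                  # input: collect raw data
        event: Event = {"message": raw}
        for apply_filter in filters:    # filter: process and normalize
            event = apply_filter(event)
        sink(event)                     # output: ship the event onward


def add_level(event: Event) -> Event:
    """Hypothetical filter: copy the first word of the message into a field."""
    first_word = event["message"].split(" ", 1)[0]
    return {**event, "level": first_word.lower()}


collected: List[Event] = []
run_pipeline(["ERROR disk full", "INFO started"], [add_level], collected.append)
print(collected)
```

In logstash the sink would be an output plugin such as `stdout` or `elasticsearch`; here it is simply `list.append`.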

• Value types in logstash configuration

– Boolean: ssl_enable => true

– Bytes: bytes => "1MiB"

– String: name => "xkops"

– Number: port => 22

– Array: match => ["datetime","UNIX"]

– Hash: options => {k => "v" k2 => "v2"}

– Codec: codec => "json"

– Path: file_path => "/tmp/filename"

– Comment: #
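Of the types above, bytes is the one whose value needs interpreting. A minimal Python sketch of the conversion, assuming the rules the logstash configuration documentation gives for bytes fields (SI units such as `kB` are base 1000, binary units such as `MiB` are base 1024, case-insensitive, optional space, a bare number means bytes); `parse_bytes` is a hypothetical helper, not logstash's own parser:

```python
import re

# Multipliers keyed by lower-cased unit prefix.
UNITS = {"": 1}
for power, prefix in enumerate("kmgtpezy", start=1):
    UNITS[prefix] = 1000 ** power          # SI: kB, MB, GB, ...
    UNITS[prefix + "i"] = 1024 ** power    # binary: KiB, MiB, GiB, ...

BYTES_RE = re.compile(r"^\s*(\d+)\s*([kmgtpezy]i?)?b?\s*$", re.IGNORECASE)


def parse_bytes(text: str) -> int:
    """Convert a bytes value such as "1MiB" or "10 kB" to an integer."""
    match = BYTES_RE.match(text)
    if match is None:
        raise ValueError(f"not a valid bytes value: {text!r}")
    number, unit = match.groups()
    return int(number) * UNITS[(unit or "").lower()]


print(parse_bytes("1MiB"))
```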

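• logstash conditionals

– Equal: ==

– Not equal: !=

– Less than: <

– Greater than: >

– Less than or equal: <=

– Greater than or equal: >=

– Matches regex: =~

– Does not match regex: !~

– Contains: in

– Does not contain: not in

– And: and

– Or: or

– Not and: nand

– Exclusive or: xor

– Grouped expression: ()

– Negated expression: !()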
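To make the operator semantics concrete, here is a minimal Python sketch that maps each operator to an equivalent Python expression and evaluates a condition such as `if [type] == "filelog" and [message] =~ /^GET/`. It assumes logstash values behave like Python strings, numbers, and lists; the tables `COMPARE` and `COMBINE` are hypothetical names:

```python
import re
from typing import Any, Callable, Dict

# Comparison, regex, and membership operators.
COMPARE: Dict[str, Callable[[Any, Any], bool]] = {
    "==": lambda a, b: a == b,
    "!=": lambda a, b: a != b,
    "<": lambda a, b: a < b,
    ">": lambda a, b: a > b,
    "<=": lambda a, b: a <= b,
    ">=": lambda a, b: a >= b,
    "=~": lambda a, b: re.search(b, a) is not None,
    "!~": lambda a, b: re.search(b, a) is None,
    "in": lambda a, b: a in b,          # substring of a string, or member of a list
    "not in": lambda a, b: a not in b,
}

# Boolean operators that combine two conditions.
COMBINE: Dict[str, Callable[[bool, bool], bool]] = {
    "and": lambda x, y: x and y,
    "or": lambda x, y: x or y,
    "nand": lambda x, y: not (x and y),
    "xor": lambda x, y: x != y,
}

event = {"type": "filelog", "message": "GET /index.html HTTP/1.1", "tags": ["web"]}

# Equivalent of: if [type] == "filelog" and [message] =~ /^GET/ { ... }
matched = COMBINE["and"](COMPARE["=="](event["type"], "filelog"),
                         COMPARE["=~"](event["message"], r"^GET"))
# Equivalent of: if !("web" in [tags]) { ... }
negated = not COMPARE["in"]("web", event["tags"])
print(matched, negated)
```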

Configuring logstash

# cd /etc/logstash/

// logstash has no configuration file by default, so create one

# vim logstash.conf

input{

stdin{}    # standard input

}

filter{}

output{

stdout{}    # standard output

}

# /opt/logstash/bin/logstash -f logstash.conf    # behaves like cat: echoes each input line back

Settings: Default pipeline workers: 1

Pipeline main started

Hello word!!!

2018-01-26T13:21:22.031Z 0.0.0.0 Hello word!!!

– The configuration above uses two plugins: logstash-input-stdin and logstash-output-stdout

The usage details of each plugin are documented at https://github.com/logstash-plugins
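What the stdin input and the default stdout output do with each line can be approximated in Python: every line becomes an event carrying `message`, `@version`, `@timestamp` (UTC, millisecond precision) and `host`, and stdout prints timestamp, host, and message, matching the output above. `make_event` and `format_line` are hypothetical helpers, not logstash code:

```python
from datetime import datetime, timezone
from typing import Dict


def make_event(line: str, host: str) -> Dict[str, str]:
    """Wrap one input line in the fields logstash adds to every event."""
    # UTC with millisecond precision, e.g. 2018-01-26T13:21:22.031Z
    stamp = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": stamp.replace("+00:00", "Z"),
        "host": host,
    }


def format_line(event: Dict[str, str]) -> str:
    """Render an event as 'timestamp host message', like the plain stdout output."""
    return f'{event["@timestamp"]} {event["host"]} {event["message"]}'
```

For example, `format_line(make_event("Hello word!!!\n", "0.0.0.0"))` produces a line shaped like the one above; looping over `sys.stdin` would give the cat-like behavior.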

Exercise 1

# vim logstash.conf

input{

stdin{ codec => "json" }

}

filter{}

output{

stdout{}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

12345    // 12345 is a bare number, not a JSON object, so the json codec raises an error

A plugin had an unrecoverable error. Will restart this plugin.

Plugin: <LogStash::Inputs::Stdin codec=><LogStash::Codecs::JSON charset=>"UTF-8">>

Error: can't convert String into Integer {:level=>:error}

'abc'

2018-01-26T13:43:17.840Z 0.0.0.0 'abc'

'{"a":1,"b":2}'

2018-01-26T13:43:46.889Z 0.0.0.0 '{"a":1,"b":2}'

Exercise 2

# vim logstash.conf

input{

stdin{ codec => "json" }

}

filter{}

output{

stdout{ codec => "rubydebug" }    # debugging format: prints every field of the event

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

'"aaa"'

{

"message" => "'\"aaa\"'",

"tags" => [

[0] "_jsonparsefailure"

],

"@version" => "1",

"@timestamp" => "2018-01-26T13:52:45.307Z",

"host" => "0.0.0.0"

}

{"aa":1,"bb":2}

{

"aa" => 1,

"bb" => 2,

"@version" => "1",

"@timestamp" => "2018-01-26T13:53:00.452Z",

"host" => "0.0.0.0"

}
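The three outcomes seen in the two exercises (a JSON object becomes fields, text that is not valid JSON is kept as `message` and tagged `_jsonparsefailure`, and a bare value such as `12345` causes an error) can be modeled in Python. A minimal sketch; `decode_json_line` is hypothetical and does not reproduce logstash's exact error handling:

```python
import json
from typing import Dict


def decode_json_line(line: str) -> Dict[str, object]:
    """Approximate the json codec: object -> fields, invalid text -> tagged message."""
    try:
        data = json.loads(line)
    except json.JSONDecodeError:
        # Not valid JSON (e.g. '"aaa"' typed with the outer single quotes): keep the raw text.
        return {"message": line, "tags": ["_jsonparsefailure"]}
    if not isinstance(data, dict):
        # Valid JSON but not an object (e.g. 12345): no fields to merge into an event.
        raise TypeError(f"expected a JSON object, got {type(data).__name__}")
    return data
```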

Exercise 3

# touch /tmp/a.log /tmp/b.log

# vim logstash.conf

input{

file {    # watch files

path => ["/tmp/a.log","/tmp/b.log"]    # paths of the files to watch

type => "testlog"    # adds a type field to these events

}

}

filter{}

output{

stdout{ codec => "rubydebug"}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

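...    // logstash is now watching the log files

// Switch to another terminal to test

# cd /tmp/

// Write some content to the log files

# echo 12345 > a.log

// The first terminal now prints the event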

{

"message" => "12345",

"@version" => "1",

"@timestamp" => "2018-01-27T00:45:15.470Z",

"path" => "/tmp/a.log",

"host" => "0.0.0.0",

"type" => "testlog"

}

# echo b123456 > b.log

{

"message" => "b123456",

"@version" => "1",

"@timestamp" => "2018-01-27T00:45:30.487Z",

"path" => "/tmp/b.log",

"host" => "0.0.0.0",

"type" => "testlog"

}

# echo c123456 >> b.log

{

"message" => "c123456",

"@version" => "1",

"@timestamp" => "2018-01-27T00:45:45.501Z",

"path" => "/tmp/b.log",

"host" => "0.0.0.0",

"type" => "testlog"

}

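// By default, read positions are recorded in a file in the home directory of the user running logstash (root here), named .sincedb_ followed by a hash

# cat /root/.sincedb_ab3977c541d1144f701eedeb3af4956a

3190503 0 64768 6

3190504 0 64768 16

# du -b /tmp/a.log    # du -b prints the file size in bytes

6	/tmp/a.log

# du -b /tmp/b.log

16	/tmp/b.log

// The last column of each sincedb line is the read offset in bytes; it equals the file sizes, so both files have been read to the end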

// Improve the configuration

# vim logstash.conf

input{

file {

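start_position => "beginning"    # used when no position is recorded for a file: read it from the beginning

sincedb_path => "/var/lib/logstash/sincedb-access"    # position database; by default it lives in the home directory of whichever user runs logstash, so pin it to a fixed path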

path => ["/tmp/a.log","/tmp/b.log"]

type => "testlog"

}

}

filter{}

output{

stdout{ codec => "rubydebug"}

}

# rm -rf /root/.sincedb_ab3977c541d1144f701eedeb3af4956a

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "12345",

"@version" => "1",

"@timestamp" => "2018-01-27T10:44:54.890Z",

"path" => "/tmp/a.log",

"host" => "0.0.0.0",

"type" => "testlog"

}

{

"message" => "b123456",

"@version" => "1",

"@timestamp" => "2018-01-27T10:44:55.242Z",

"path" => "/tmp/b.log",

"host" => "0.0.0.0",

"type" => "testlog"

}

{

"message" => "c123456",

"@version" => "1",

"@timestamp" => "2018-01-27T10:44:55.242Z",

"path" => "/tmp/b.log",

"host" => "0.0.0.0",

"type" => "testlog"

}
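The sincedb file can also be checked programmatically. A minimal Python sketch, assuming the four columns are the inode, two device numbers, and the byte offset already read (the offsets 6 and 16 shown earlier equal the `du -b` sizes); `SincedbEntry`, `parse_sincedb_line`, and `unread_bytes` are hypothetical names:

```python
import os
from typing import NamedTuple


class SincedbEntry(NamedTuple):
    inode: int
    dev_major: int   # assumed meaning of the second column
    dev_minor: int   # assumed meaning of the third column
    offset: int      # bytes of the file already read


def parse_sincedb_line(line: str) -> SincedbEntry:
    inode, major, minor, offset = (int(field) for field in line.split())
    return SincedbEntry(inode, major, minor, offset)


def unread_bytes(entry: SincedbEntry, path: str) -> int:
    """Bytes logstash has not read yet, or -1 if path is a different file."""
    st = os.stat(path)
    if st.st_ino != entry.inode:
        return -1
    return max(st.st_size - entry.offset, 0)


print(parse_sincedb_line("3190503 0 64768 6"))
```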

Exercise: tcp and udp plugins

# vim logstash.conf

input{

tcp {

host => "0.0.0.0"

port => 8888

type => "tcplog"

}

udp {

host => "0.0.0.0"

port => 9999

type => "udplog"

}

}

filter{}

output{

stdout{ codec => "rubydebug"}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

...

// In another terminal, check the listening ports

# netstat -pantu | grep -E "(8888|9999)"

tcp6 0 0 :::8888 :::* LISTEN 3098/java

udp6 0 0 :::9999 :::* 3098/java

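Simulate a client sending data

// Send TCP data. exec manipulates file descriptors of the current shell, and bash treats /dev/tcp/HOST/PORT as a TCP connection

# exec 9<>/dev/tcp/192.168.4.10/8888    # open the connection on fd 9

# echo "hello world" >&9    # send the string "hello world" over the connection

# exec 9<&-    # close the connection

// Event received by logstash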

{

"message" => "hello world",

"@version" => "1",

"@timestamp" => "2018-01-27T11:01:35.356Z",

"host" => "192.168.4.11",

"port" => 48654,

"type" => "tcplog"

}

// Send UDP data

# exec 7<>/dev/udp/192.168.4.10/9999

# echo "is udp log" >&7

# exec 7<&-

// Event received by logstash

{

"message" => "is udp log\n",

"@version" => "1",

"@timestamp" => "2018-01-27T11:05:18.850Z",

"type" => "udplog",

"host" => "192.168.4.11"

}

// Send a file

# exec 8<>/dev/udp/192.168.4.10/9999

# cat /etc/hosts >&8

# exec 8<&-

// Event received by logstash

{

"message" => "127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4\n::1 localhost localhost.localdomain localhost6 localhost6.localdomain6\n192.168.4.11\tes1\n192.168.4.12\tes2\n192.168.4.13\tes3\n192.168.4.14\tes4\n192.168.4.15\tes5\n",

"@version" => "1",

"@timestamp" => "2018-01-27T11:10:31.099Z",

"type" => "udplog",

"host" => "192.168.4.11"

}
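Where bash's `/dev/tcp` and `/dev/udp` redirections are unavailable, a small client does the same job. A minimal Python sketch; `send_tcp_line` and `send_udp_datagram` are hypothetical names. It reflects what the events above show: over TCP the trailing newline only terminates the line, while over UDP the whole datagram, newline included, becomes the message.

```python
import socket


def send_tcp_line(host: str, port: int, text: str) -> None:
    """Open a TCP connection, send one newline-terminated line, then close."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall((text + "\n").encode("utf-8"))


def send_udp_datagram(host: str, port: int, payload: str) -> None:
    """Send the whole payload as a single UDP datagram (no connection setup)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload.encode("utf-8"), (host, port))
```

With the addresses used above: `send_tcp_line("192.168.4.10", 8888, "hello world")` and `send_udp_datagram("192.168.4.10", 9999, "is udp log\n")`.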

Exercise: syslog plugin

# vim logstash.conf

input{

syslog{

host => "192.168.4.10"

port => 514    # default syslog port

type => "syslog"

}

}

filter{}

output{

stdout{ codec => "rubydebug"}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

. . .

# netstat -pantu | grep 514

tcp6 0 0 192.168.4.10:514 :::* LISTEN 3545/java

udp6 0 0 192.168.4.10:514 :::* 3545/java

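// On the client host, have rsyslog forward a custom facility to the logstash host

# vim /etc/rsyslog.conf

# sed -n '74p' /etc/rsyslog.conf

local0.info    @@192.168.4.10:514

// In rsyslog, @@ forwards over TCP; a single @ forwards over UDP

# systemctl restart rsyslog.service

# logger -p local0.info -t testlog "hello world"    # send a test log message

// The logstash host receives the log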

{

"message" => "hello world\n",

"@version" => "1",

"@timestamp" => "2018-01-27T11:29:03.000Z",

"type" => "syslog",

"host" => "192.168.4.11",

"priority" => 134,

"timestamp" => "Jan 27 06:29:03",

"logsource" => "es1",

"program" => "testlog",

"severity" => 6,

"facility" => 16,

"facility_label" => "local0",

"severity_label" => "Informational"

}

Extension: to forward login logs to logstash as well

# vim /etc/rsyslog.conf

# sed -n '75p' /etc/rsyslog.conf

authpriv.*    @@192.168.4.10:514

# systemctl restart rsyslog.service

// Test by logging out and logging back in

# exit

logout

Connection to 192.168.4.11 closed.

# ssh -X root@192.168.4.11

root@192.168.4.11's password:

Last login: Sat Jan 27 05:27:21 2018 from 192.168.4.254

// Logs received in real time

{

"message" => "Accepted password for root from 192.168.4.254 port 50820 ssh2\n",

"@version" => "1",

"@timestamp" => "2018-01-27T11:32:07.000Z",

"type" => "syslog",

"host" => "192.168.4.11",

"priority" => 86,

"timestamp" => "Jan 27 06:32:07",

"logsource" => "es1",

"program" => "sshd",

"pid" => "3734",

"severity" => 6,

"facility" => 10,

"facility_label" => "security/authorization",

"severity_label" => "Informational"

}

{

"message" => "pam_unix(sshd:session): session opened for user root by (uid=0)\n",

"@version" => "1",

"@timestamp" => "2018-01-27T11:32:07.000Z",

"type" => "syslog",

"host" => "192.168.4.11",

"priority" => 86,

"timestamp" => "Jan 27 06:32:07",

"logsource" => "es1",

"program" => "sshd",

"pid" => "3734",

"severity" => 6,

"facility" => 10,

"facility_label" => "security/authorization",

"severity_label" => "Informational"

}
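The `priority`, `facility`, and `severity` fields are related by the syslog PRI rule (RFC 3164 / RFC 5424): priority = facility × 8 + severity. So local0 (16) at info (6) gives 134, and authpriv (10) at info gives 86, exactly as in the events above. A Python sketch of the decoding; the label tables follow the standard facility numbering and the labels shown above, but treating them as logstash's complete tables is an assumption:

```python
from typing import Dict, Union

SEVERITY_LABELS = ["Emergency", "Alert", "Critical", "Error",
                   "Warning", "Notice", "Informational", "Debug"]

# Index = facility code; codes 4 and 10 both cover security/authorization.
FACILITY_LABELS = ["kernel", "user-level", "mail", "system",
                   "security/authorization", "syslogd", "line printer",
                   "network news", "UUCP", "clock", "security/authorization",
                   "FTP", "NTP", "log audit", "log alert", "clock",
                   "local0", "local1", "local2", "local3",
                   "local4", "local5", "local6", "local7"]


def decode_priority(priority: int) -> Dict[str, Union[int, str]]:
    """Split a syslog PRI value into facility and severity."""
    facility, severity = divmod(priority, 8)
    return {
        "facility": facility,
        "severity": severity,
        "facility_label": FACILITY_LABELS[facility],
        "severity_label": SEVERITY_LABELS[severity],
    }


print(decode_priority(134))
print(decode_priority(86))
```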

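• The filter grok plugin

– A plugin for parsing all kinds of unstructured log data

– grok uses regular expressions to turn unstructured data into structured data

– It extracts fields with named capture groups; the regex has to be written to fit the layout of the specific data

– Patterns are hard to write, but the approach applies very widely

– It can be used on almost any kind of data

• grok regex group matching

– Match the IP, timestamp, and request method

"(?<ip>(\d+\.){3}\d+) \S+ \S+ (?<time>.*\])\s+\"(?<method>[A-Z]+)"

– Using regex macros (predefined patterns)

%{IPORHOST:clientip} %{HTTPDUSER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"%{WORD:verb}

– Final version

%{COMMONAPACHELOG} \"(?<referer>[^\"]+)\" \"(?<UA>[^\"]+)\"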
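The first pattern can be tried outside logstash. Python's `re` module writes named groups as `(?P<name>...)` instead of grok's `(?<name>...)`, but the matching is otherwise the same. A sketch against the Apache access-log line used in the next exercise:

```python
import re

# Same pattern as above, with Python's (?P<name>...) group syntax.
PATTERN = re.compile(
    r'(?P<ip>(\d+\.){3}\d+) \S+ \S+ (?P<time>.*\])\s+"(?P<method>[A-Z]+)')

LINE = ('220.181.108.115 - - [11/Jul/2017:03:07:16 +0800] '
        '"GET /%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html HTTP/1.1" 200 20756 '
        '"-" "Mozilla/5.0 (compatible; Baiduspider/2.0; '
        '+http://www.baidu.com/search/spider.html)" "-" zxzs.buildhr.com 558742')

match = PATTERN.match(LINE)
if match:
    print(match.group("ip"), match.group("time"), match.group("method"))
```

Note that the `time` group keeps the square brackets, because `.*\]` includes the closing bracket and the opening one sits right after `\S+ \S+ `.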

Exercise: matching Apache logs

# cd /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns/

# vim grok-patterns    # the library of pattern (macro) definitions

....

COMMONAPACHELOG %{IPORHOST:clientip} %{HTTPDUSER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

....

# vim /tmp/test.log    # add one log line to the test file

220.181.108.115 - - [11/Jul/2017:03:07:16 +0800] "GET /%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html HTTP/1.1" 200 20756 "-" "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)" "-" zxzs.buildhr.com 558742

# vim logstash.conf

input{

file{

start_position => "beginning"

sincedb_path => "/dev/null"    # for debugging: read positions are discarded, so the file is read again on every run

path => [ "/tmp/test.log" ]

type => 'filelog'

}

}

filter{

grok{

match => ["message","%{COMMONAPACHELOG}"]

}

}

output{

stdout{ codec => "rubydebug"}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "220.181.108.115 - - [11/Jul/2017:03:07:16 +0800] \"GET /%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html HTTP/1.1\" 200 20756 \"-\" \"Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)\" \"-\" zxzs.buildhr.com 558742",

"@version" => "1",

"@timestamp" => "2018-01-27T12:03:10.363Z",

"path" => "/tmp/test.log",

"host" => "0.0.0.0",

"type" => "filelog",

"clientip" => "220.181.108.115",

"ident" => "-",

"auth" => "-",

"timestamp" => "11/Jul/2017:03:07:16 +0800",

"verb" => "GET",

"request" => "/%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html",

"httpversion" => "1.1",

"response" => "200",

"bytes" => "20756"

}

{

"message" => "",

"@version" => "1",

"@timestamp" => "2018-01-27T12:03:10.584Z",

"path" => "/tmp/test.log",

"host" => "0.0.0.0",

"type" => "filelog",

"tags" => [

[0] "_grokparsefailure"

]

}

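When writing regexes to parse logs, look in the pattern (macro) library first; if nothing there fits, search online. Avoid writing patterns by hand where possible, since it is a lot of work.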
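Reusing the library works because of how grok expands macros: `%{NAME:field}` is replaced by the regex stored under NAME, wrapped in a named group, recursively. A minimal Python sketch of that expansion; the entries in `PATTERNS` are simplified, hypothetical stand-ins for the real grok-patterns definitions, and returning `None` plays the role of the `_grokparsefailure` tag:

```python
import re
from typing import Dict, Optional

# Simplified stand-ins for a few grok-patterns entries (hypothetical; the real
# definitions in logstash-patterns-core are much stricter).
PATTERNS: Dict[str, str] = {
    "IPORHOST": r"[\w.\-]+",
    "USER": r"[\w.@\-]+",
    "HTTPDATE": r"[^\]]+",
    "WORD": r"\w+",
    "NOTSPACE": r"\S+",
    "NUMBER": r"\d+(?:\.\d+)?",
    "COMMONAPACHELOG": (
        r'%{IPORHOST:clientip} %{USER:ident} %{USER:auth} '
        r'\[%{HTTPDATE:timestamp}\] '
        r'"%{WORD:verb} %{NOTSPACE:request} HTTP/%{NUMBER:httpversion}" '
        r'%{NUMBER:response} (?:%{NUMBER:bytes}|-)'
    ),
}

MACRO = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(pattern: str, depth: int = 0) -> str:
    """Recursively replace %{NAME} / %{NAME:field} with plain regex."""
    if depth > 20:
        raise ValueError("pattern nesting too deep")

    def substitute(m: "re.Match[str]") -> str:
        name, field = m.group(1), m.group(2)
        body = expand(PATTERNS[name], depth + 1)
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    return MACRO.sub(substitute, pattern)


def grok(pattern: str, line: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the named fields, or None when the line does not match."""
    match = re.match(expand(pattern), line)
    return match.groupdict() if match else None
```

Calling `grok("%{COMMONAPACHELOG}", line)` on the test line yields the same `clientip` through `bytes` fields as the logstash output above, and an empty line yields `None`, like the `_grokparsefailure` event.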

Exercise: parsing different logs at the same time

# vim logstash.conf

input{

file{

start_position => "beginning"

sincedb_path => "/dev/null"

path => [ "/tmp/test.log" ]

type => 'filelog'

}

file{

start_position => "beginning"

sincedb_path => "/dev/null"

path => [ "/tmp/test.json" ]

type => 'jsonlog'

codec => 'json'

}

}

filter{

if [type] == "filelog"{

grok{

match => ["message","%{COMMONAPACHELOG}"]

}

}

}

output{

stdout{ codec => "rubydebug"}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "220.181.108.115 - - [11/Jul/2017:03:07:16 +0800] \"GET /%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html HTTP/1.1\" 200 20756 \"-\" \"Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)\" \"-\" zxzs.buildhr.com 558742",

"@version" => "1",

"@timestamp" => "2018-01-27T12:22:06.481Z",

"path" => "/tmp/test.log",

"host" => "0.0.0.0",

"type" => "filelog",

"clientip" => "220.181.108.115",

"ident" => "-",

"auth" => "-",

"timestamp" => "11/Jul/2017:03:07:16 +0800",

"verb" => "GET",

"request" => "/%B8%DF%BC%B6%D7%E2%C1%DE%D6%F7%C8%CE/QYQiu_j.html",

"httpversion" => "1.1",

"response" => "200",

"bytes" => "20756"

}

{

"message" => "",

"@version" => "1",

"@timestamp" => "2018-01-27T12:22:06.834Z",

"path" => "/tmp/test.log",

"host" => "0.0.0.0",

"type" => "filelog",

"tags" => [

[0] "_grokparsefailure"

]

}

{

"@timestamp" => "2015-05-18T12:28:25.013Z",

"ip" => "79.1.14.87",

"extension" => "gif",

"response" => "200",

"geo" => {

"coordinates" => {

"lat" => 35.16531472,

"lon" => -107.9006142

},

"src" => "GN",

"dest" => "US",

"srcdest" => "GN:US"

},

"@tags" => [

[0] "success",

[1] "info"

],

"utc_time" => "2015-05-18T12:28:25.013Z",

"referer" => "http://www.slate.com/warning/b-alvin-drew",

"agent" => "Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24",

"clientip" => "79.1.14.87",

"bytes" => 774,

"host" => "motion-media.theacademyofperformingartsandscience.org",

"request" => "/canhaz/james-mcdivitt.gif",

"url" => "https://motion-media.theacademyofperformingartsandscience.org/canhaz/james-mcdivitt.gif",

"@message" => "79.1.14.87 - - [2015-05-18T12:28:25.013Z] \"GET /canhaz/james-mcdivitt.gif HTTP/1.1\" 200 774 \"-\" \"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\"",

"spaces" => "this is a thing with lots of spaces wwwwoooooo",

"xss" => "<script>console.log(\"xss\")</script>",

"headings" => [

[0] "<h3>charles-bolden</h5>",

[1] "http://www.slate.com/success/barry-wilmore"

],

"links" => [

[0] "[email protected]",

[1] "http://facebook.com/info/anatoly-solovyev",

[2] "www.www.slate.com"

],

"relatedContent" => [],

"machine" => {

"os" => "osx",

"ram" => 8589934592

},

"@version" => "1",

"path" => "/tmp/test.json",

"type" => "jsonlog"

}

In real projects, you can ask the developers to produce logs in JSON format, which makes the work much easier.
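On the application side, writing one JSON object per line takes little code. A minimal Python sketch using the standard `logging` module; the field names `time`, `level`, `logger`, and `message` are hypothetical and should be agreed on with whoever maintains the logstash configuration. A file written this way could be read by a `file` input with `codec => 'json'`, like the `jsonlog` input above:

```python
import json
import logging


class JsonLineFormatter(logging.Formatter):
    """Format each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # ensure_ascii=False keeps non-ASCII text readable in the log file.
        return json.dumps(payload, ensure_ascii=False)


def json_file_logger(name: str, path: str) -> logging.Logger:
    """Return a logger that appends JSON lines to path."""
    logger = logging.getLogger(name)
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(JsonLineFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

For example, `json_file_logger("app", "/tmp/test.json").info("user %s logged in", "root")` appends one JSON line. Call the factory once per logger name; each call adds another handler.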

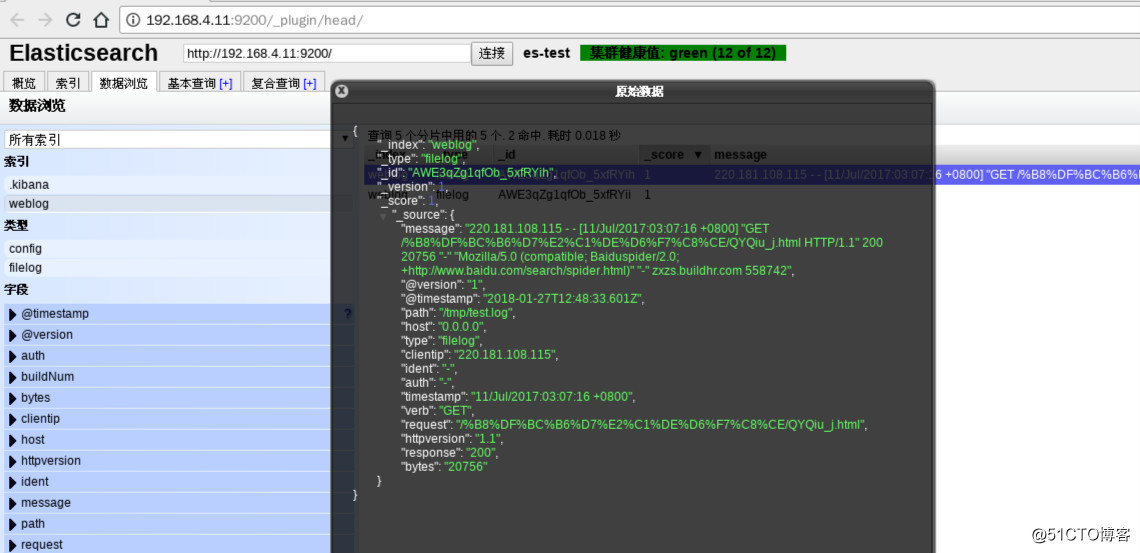

The output ES (elasticsearch) plugin

Once the pipeline works in debugging, write the data into the ES cluster

# cat logstash.conf

input{

file{

start_position => "beginning"

sincedb_path => "/dev/null"

path => [ "/tmp/test.log" ]

type => 'filelog'

}

file{

start_position => "beginning"

sincedb_path => "/dev/null"

path => [ "/tmp/test.json" ]

type => 'jsonlog'

codec => 'json'

}

}

filter{

if [type] == "filelog"{

grok{

match => ["message","%{COMMONAPACHELOG}"]

}

}

}

output{

if [type] == "filelog"{

elasticsearch {

hosts => ["192.168.4.11:9200","192.168.4.12:9200"]

index => "weblog"

flush_size => 2000

idle_flush_time => 10

}

}

}

# /opt/logstash/bin/logstash -f logstash.conf

Settings: Default pipeline workers: 1

Pipeline main started
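`flush_size` and `idle_flush_time` control batching: events are buffered and sent to Elasticsearch in one bulk request once the buffer holds `flush_size` events, or once `idle_flush_time` seconds have passed since the last flush. The Python sketch below models that behavior under those assumptions and builds the newline-delimited body the Elasticsearch `_bulk` API expects. `BulkBuffer` and the injected `send` callback are hypothetical, and nothing here talks to a real cluster:

```python
import json
import time
from typing import Callable, List, Optional


def bulk_body(index: str, docs: List[dict], doc_type: Optional[str] = None) -> str:
    """Build a _bulk request body: an action line, then the document, per event."""
    action = {"_index": index}
    if doc_type is not None:
        action["_type"] = doc_type   # older Elasticsearch versions require a type
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": action}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"   # the body must end with a newline


class BulkBuffer:
    """Buffer events; flush on flush_size events or idle_flush_time seconds."""

    def __init__(self, index: str, send: Callable[[str], None],
                 flush_size: int = 2000, idle_flush_time: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.index = index
        self.send = send
        self.flush_size = flush_size
        self.idle_flush_time = idle_flush_time
        self.clock = clock
        self.pending: List[dict] = []
        self.last_flush = clock()

    def add(self, doc: dict) -> None:
        self.pending.append(doc)
        if len(self.pending) >= self.flush_size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; forces a flush once the buffer has waited too long."""
        if self.pending and self.clock() - self.last_flush >= self.idle_flush_time:
            self.flush()

    def flush(self) -> None:
        if self.pending:
            self.send(bulk_body(self.index, self.pending))
            self.pending = []
        self.last_flush = self.clock()
```

Under this model, with `flush_size => 2000` and `idle_flush_time => 10` a quiet log still reaches the `weblog` index within about ten seconds, while a busy one is sent in batches of 2000. A real client would POST each body to the `_bulk` endpoint of one of the `hosts`.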
