The most detailed guide on the web to a Scrapy warning: ":0: UserWarning: You do not have a working installation of the service_identity module"

Posted by 大数据和人工智能躺过的坑


Without further ado, let's get straight to the point.

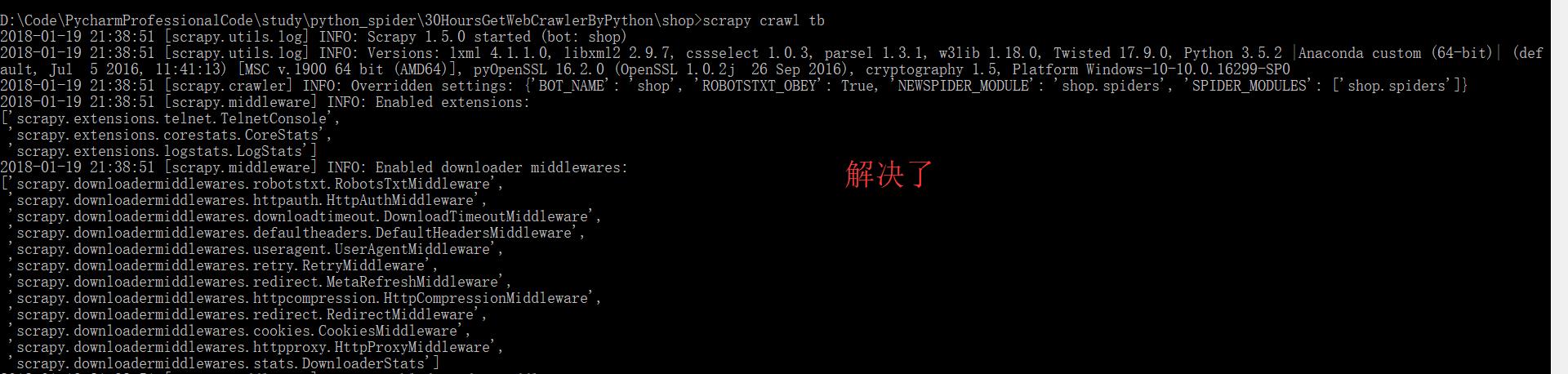

However, running the spider produced the following warning:

    D:\Code\PycharmProfessionalCode\study\python_spider\30HoursGetWebCrawlerByPython>cd shop
    D:\Code\PycharmProfessionalCode\study\python_spider\30HoursGetWebCrawlerByPython\shop>scrapy crawl tb
    :0: UserWarning: You do not have a working installation of the service_identity module: 'cannot import name 'opentype''. Please install it from <https://pypi.python.org/pypi/service_identity> and make sure all of its dependencies are satisfied. Without the service_identity module, Twisted can perform only rudimentary TLS client hostname verification. Many valid certificate/hostname mappings may be rejected.
    2018-01-19 21:18:52 [scrapy.utils.log] INFO: Scrapy 1.5.0 started (bot: shop)
    2018-01-19 21:18:52 [scrapy.utils.log] INFO: Versions: lxml 4.1.1.0, libxml2 2.9.7, cssselect 1.0.3, parsel 1.3.1, w3lib 1.18.0, Twisted 17.9.0, Python 3.5.2 |Anaconda custom (64-bit)| (default, Jul 5 2016, 11:41:13) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 16.2.0 (OpenSSL 1.0.2j 26 Sep 2016), cryptography 1.5, Platform Windows-10-10.0.16299-SP0
    2018-01-19 21:18:52 [scrapy.crawler] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'shop.spiders', 'SPIDER_MODULES': ['shop.spiders'], 'ROBOTSTXT_OBEY': True, 'BOT_NAME': 'shop'}
    2018-01-19 21:18:52 [scrapy.middleware] INFO: Enabled extensions:
    ['scrapy.extensions.logstats.LogStats',
     'scrapy.extensions.corestats.CoreStats',
     'scrapy.extensions.telnet.TelnetConsole']
    2018-01-19 21:18:52 [scrapy.middleware] INFO: Enabled downloader middlewares:
    ['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
     'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
     'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
     'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
     'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
     'scrapy.downloadermiddlewares.retry.RetryMiddleware',
     'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
     'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
     'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
     'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
     'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
     'scrapy.downloadermiddlewares.stats.DownloaderStats']
    2018-01-19 21:18:52 [scrapy.middleware] INFO: Enabled spider middlewares:
    ['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
     'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
     'scrapy.spidermiddlewares.referer.RefererMiddleware',
     'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
     'scrapy.spidermiddlewares.depth.DepthMiddleware']
    2018-01-19 21:18:52 [scrapy.middleware] INFO: Enabled item pipelines:
    []
    2018-01-19 21:18:52 [scrapy.core.engine] INFO: Spider opened
    2018-01-19 21:18:52 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
    2018-01-19 21:18:52 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023
    2018-01-19 21:18:53 [scrapy.core.downloader.tls] WARNING: Remote certificate is not valid for hostname "www.taobao.com"; '*.tmall.com'!='www.taobao.com'
    2018-01-19 21:18:53 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://www.taobao.com/robots.txt> (referer: None)
    2018-01-19 21:18:53 [scrapy.downloadermiddlewares.robotstxt] DEBUG: Forbidden by robots.txt: <GET https://www.taobao.com/>
    2018-01-19 21:18:53 [scrapy.core.engine] INFO: Closing spider (finished)
    2018-01-19 21:18:53 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
    {'downloader/exception_count': 1,
     'downloader/exception_type_count/scrapy.exceptions.IgnoreRequest': 1,
     'downloader/request_bytes': 223,
     'downloader/request_count': 1,

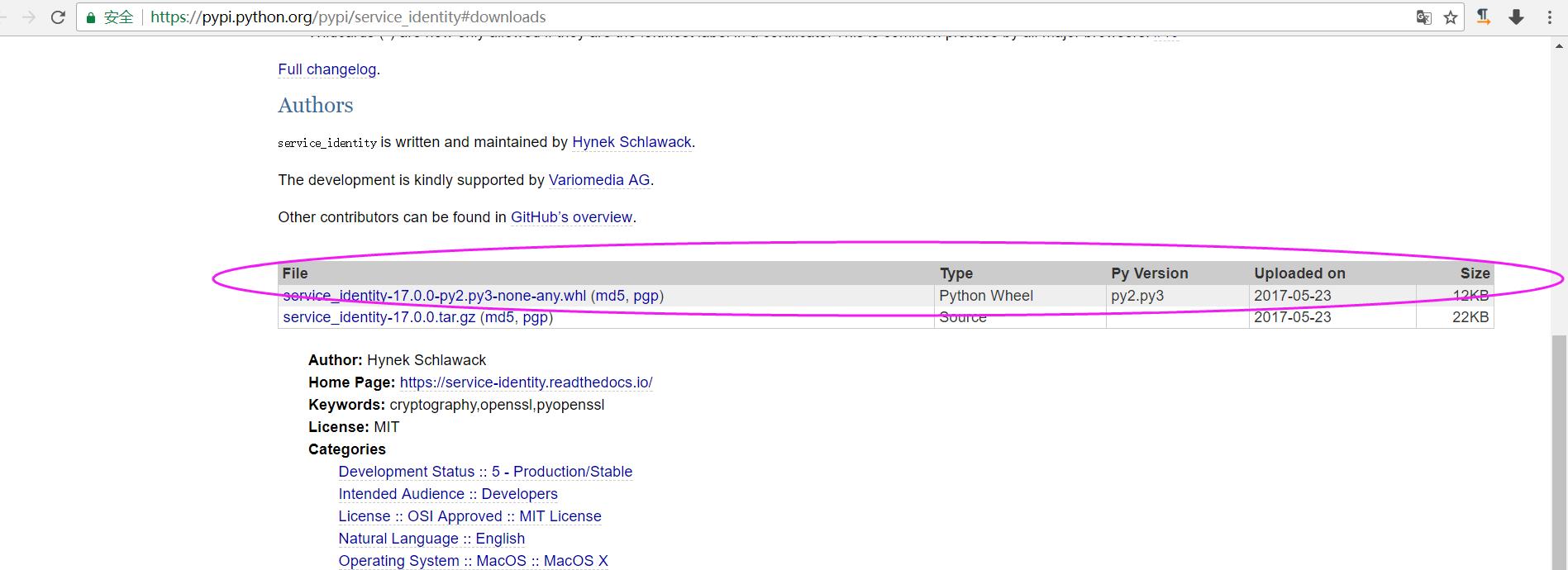

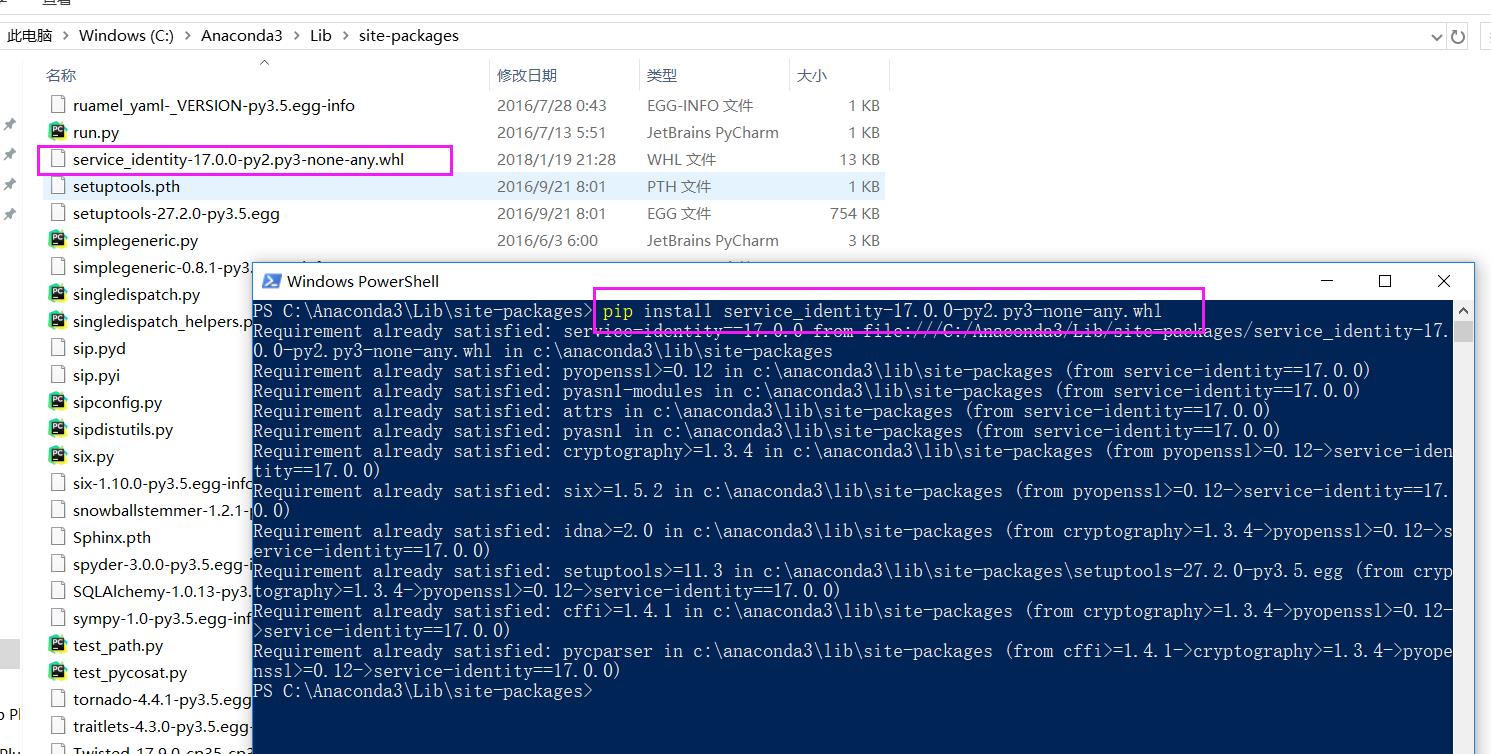

Following the hint in the warning, I downloaded the service_identity .whl file from https://pypi.python.org/pypi/service_identity#downloads and tried to install it:

    PS C:\Anaconda3\Lib\site-packages> pip install service_identity-17.0.0-py2.py3-none-any.whl
    Requirement already satisfied: service-identity==17.0.0 from file:///C:/Anaconda3/Lib/site-packages/service_identity-17.0.0-py2.py3-none-any.whl in c:\anaconda3\lib\site-packages
    Requirement already satisfied: pyopenssl>=0.12 in c:\anaconda3\lib\site-packages (from service-identity==17.0.0)
    Requirement already satisfied: pyasn1-modules in c:\anaconda3\lib\site-packages (from service-identity==17.0.0)
    Requirement already satisfied: attrs in c:\anaconda3\lib\site-packages (from service-identity==17.0.0)
    Requirement already satisfied: pyasn1 in c:\anaconda3\lib\site-packages (from service-identity==17.0.0)
    Requirement already satisfied: cryptography>=1.3.4 in c:\anaconda3\lib\site-packages (from pyopenssl>=0.12->service-identity==17.0.0)
    Requirement already satisfied: six>=1.5.2 in c:\anaconda3\lib\site-packages (from pyopenssl>=0.12->service-identity==17.0.0)
    Requirement already satisfied: idna>=2.0 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyopenssl>=0.12->service-identity==17.0.0)
    Requirement already satisfied: setuptools>=11.3 in c:\anaconda3\lib\site-packages\setuptools-27.2.0-py3.5.egg (from cryptography>=1.3.4->pyopenssl>=0.12->service-identity==17.0.0)
    Requirement already satisfied: cffi>=1.4.1 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyopenssl>=0.12->service-identity==17.0.0)
    Requirement already satisfied: pycparser in c:\anaconda3\lib\site-packages (from cffi>=1.4.1->cryptography>=1.3.4->pyopenssl>=0.12->service-identity==17.0.0)
    PS C:\Anaconda3\Lib\site-packages>

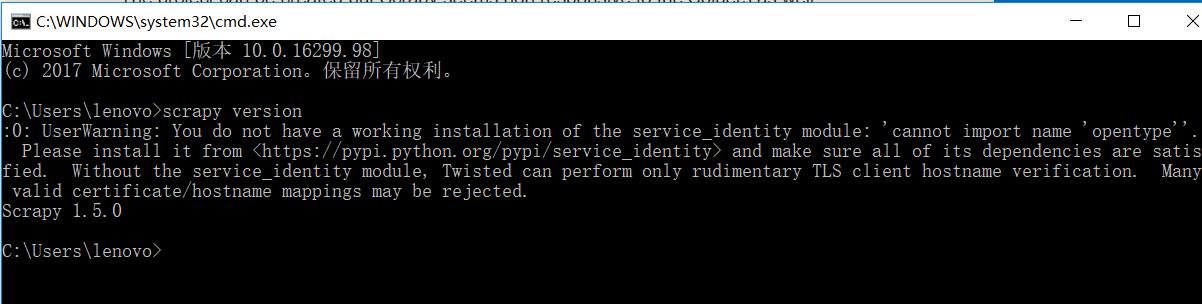

    Microsoft Windows [Version 10.0.16299.98]
    (c) 2017 Microsoft Corporation. All rights reserved.
    C:\Users\lenovo>scrapy version
    :0: UserWarning: You do not have a working installation of the service_identity module: 'cannot import name 'opentype''. Please install it from <https://pypi.python.org/pypi/service_identity> and make sure all of its dependencies are satisfied. Without the service_identity module, Twisted can perform only rudimentary TLS client hostname verification. Many valid certificate/hostname mappings may be rejected.
    Scrapy 1.5.0
    C:\Users\lenovo>

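As you can see, the warning still appears even for `scrapy version`, so the Scrapy installation itself still has a problem.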
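
One way to confirm this independently of Scrapy is to attempt the import directly. The sketch below is only an illustration, not Twisted's actual check, and `check_importable` is a hypothetical helper name. It tries to import a module and returns the error message when the import fails, which should surface the same `cannot import name 'opentype'` text if the installation is broken.

```python
import importlib


def check_importable(module_name):
    """Try to import module_name; return (ok, error_message)."""
    try:
        importlib.import_module(module_name)
    except ImportError as exc:
        # A missing module or a broken dependency chain ends up here.
        return False, str(exc)
    return True, None


if __name__ == "__main__":
    ok, error = check_importable("service_identity")
    if ok:
        print("service_identity imports cleanly")
    else:
        print("service_identity is not usable:", error)
```

Run it with the same interpreter that Scrapy uses; otherwise the result may describe a different environment.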

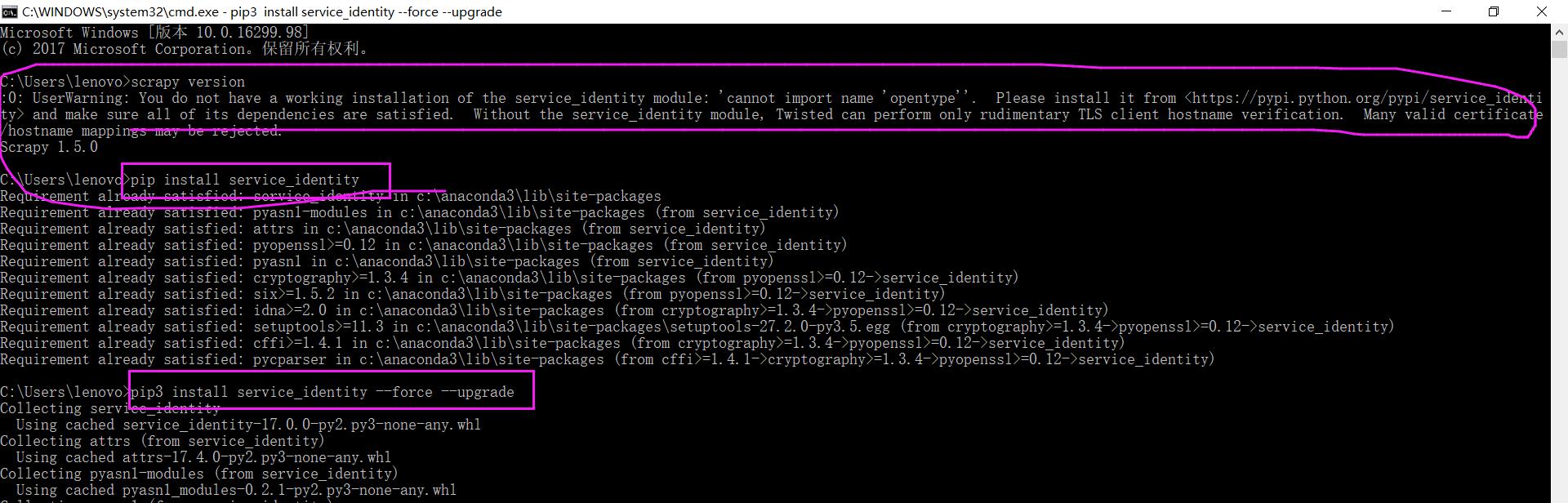

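The cause: for some unknown reason, the service_identity installation on this machine is out of date, and a plain `pip install` does not upgrade anything that is already installed (it only reports "Requirement already satisfied"). Judging from the `cannot import name 'opentype'` message and the pip output later in this post, the stale piece may actually be one of its dependencies, such as pyasn1, rather than service_identity itself.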
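
To see which versions are actually installed, a short script can query the package metadata. This is a minimal sketch that assumes Python 3.8 or later (for `importlib.metadata`; the Python 3.5 environment shown in this post would need `pip show` or `pip list` instead). The package list is taken from the pip output above, and `installed_versions` is a hypothetical helper name.

```python
from importlib import metadata  # standard library since Python 3.8

# service_identity and the dependencies named in the pip output above.
PACKAGES = [
    "service-identity",
    "pyasn1",
    "pyasn1-modules",
    "pyOpenSSL",
    "cryptography",
    "attrs",
]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name}: {version or 'not installed'}")
```

Comparing the printed versions with the latest releases on PyPI shows which package, if any, is lagging behind.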

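The fix is to force pip to upgrade and reinstall the package together with its dependencies, as the last command in the session below does: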

    Microsoft Windows [Version 10.0.16299.98]
    (c) 2017 Microsoft Corporation. All rights reserved.
    C:\Users\lenovo>scrapy version
    :0: UserWarning: You do not have a working installation of the service_identity module: 'cannot import name 'opentype''. Please install it from <https://pypi.python.org/pypi/service_identity> and make sure all of its dependencies are satisfied. Without the service_identity module, Twisted can perform only rudimentary TLS client hostname verification. Many valid certificate/hostname mappings may be rejected.
    Scrapy 1.5.0
    C:\Users\lenovo>pip install service_identity
    Requirement already satisfied: service_identity in c:\anaconda3\lib\site-packages
    Requirement already satisfied: pyasn1-modules in c:\anaconda3\lib\site-packages (from service_identity)
    Requirement already satisfied: attrs in c:\anaconda3\lib\site-packages (from service_identity)
    Requirement already satisfied: pyopenssl>=0.12 in c:\anaconda3\lib\site-packages (from service_identity)
    Requirement already satisfied: pyasn1 in c:\anaconda3\lib\site-packages (from service_identity)
    Requirement already satisfied: cryptography>=1.3.4 in c:\anaconda3\lib\site-packages (from pyopenssl>=0.12->service_identity)
    Requirement already satisfied: six>=1.5.2 in c:\anaconda3\lib\site-packages (from pyopenssl>=0.12->service_identity)
    Requirement already satisfied: idna>=2.0 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyopenssl>=0.12->service_identity)
    Requirement already satisfied: setuptools>=11.3 in c:\anaconda3\lib\site-packages\setuptools-27.2.0-py3.5.egg (from cryptography>=1.3.4->pyopenssl>=0.12->service_identity)
    Requirement already satisfied: cffi>=1.4.1 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyopenssl>=0.12->service_identity)
    Requirement already satisfied: pycparser in c:\anaconda3\lib\site-packages (from cffi>=1.4.1->cryptography>=1.3.4->pyopenssl>=0.12->service_identity)
    C:\Users\lenovo>pip3 install service_identity --force --upgrade
    Collecting service_identity
      Using cached service_identity-17.0.0-py2.py3-none-any.whl
    Collecting attrs (from service_identity)
      Using cached attrs-17.4.0-py2.py3-none-any.whl
    Collecting pyasn1-modules (from service_identity)
      Using cached pyasn1_modules-0.2.1-py2.py3-none-any.whl
    Collecting pyasn1 (from service_identity)
      Downloading pyasn1-0.4.2-py2.py3-none-any.whl (71kB)
        100% |████████████████████████████████| 71kB 8.3kB/s
    Collecting pyopenssl>=0.12 (from service_identity)
      Downloading pyOpenSSL-17.5.0-py2.py3-none-any.whl (53kB)
        100% |████████████████████████████████| 61kB 9.0kB/s
    Collecting six>=1.5.2 (from pyopenssl>=0.12->service_identity)
      Cache entry deserialization failed, entry ignored
      Cache entry deserialization failed, entry ignored
      Downloading six-1.11.0-py2.py3-none-any.whl
    Collecting cryptography>=2.1.4 (from pyopenssl>=0.12->service_identity)
      Downloading cryptography-2.1.4-cp35-cp35m-win_amd64.whl (1.3MB)
        100% |████████████████████████████████| 1.3MB 9.5kB/s
    Collecting idna>=2.1 (from cryptography>=2.1.4->pyopenssl>=0.12->service_identity)
      Downloading idna-2.6-py2.py3-none-any.whl (56kB)
        100% |████████████████████████████████| 61kB 15kB/s
    Collecting asn1crypto>=0.21.0 (from cryptography>=2.1.4->pyopenssl>=0.12->service_identity)
      Downloading asn1crypto-0.24.0-py2.py3-none-any.whl (101kB)
        100% |████████████████████████████████| 102kB 10kB/s
    Collecting cffi>=1.7; platform_python_implementation != "PyPy" (from cryptography>=2.1.4->pyopenssl>=0.12->service_identity)
      Downloading cffi-1.11.4-cp35-cp35m-win_amd64.whl (166kB)
        100% |████████████████████████████████| 174kB 9.2kB/s
    Collecting pycparser (from cffi>=1.7; platform_python_implementation != "PyPy"->cryptography>=2.1.4->pyopenssl>=0.12->service_identity)
      Downloading pycparser-2.18.tar.gz (245kB)
        100% |████████████████████████████████| 256kB 8.2kB/s
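
The same forced upgrade can also be scripted. The sketch below is an assumption-laden illustration rather than part of the original fix: `build_upgrade_command` is a hypothetical helper, and it spells out `--force-reinstall` in full, on the assumption that the `--force` in the session above was accepted by pip as an abbreviation of that flag. It only prints the command unless `--run` is passed, so nothing is installed by accident.

```python
import subprocess
import sys


def build_upgrade_command(package):
    """Build a pip command that upgrades and force-reinstalls the given package."""
    # "python -m pip" targets the interpreter running this script, which
    # avoids mixing up pip and pip3 on machines with several Pythons.
    return [
        sys.executable, "-m", "pip", "install",
        "--upgrade", "--force-reinstall", package,
    ]


if __name__ == "__main__":
    command = build_upgrade_command("service_identity")
    print("Command:", " ".join(command))
    # Dry run by default; pass --run to actually invoke pip.
    if "--run" in sys.argv[1:]:
        subprocess.run(command, check=True)
```

After the reinstall finishes, running `scrapy version` again is a quick way to check whether the warning is gone.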

You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/ and http://www.cnblogs.com/lchzls/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html
