Credit Card Fraud Detection: A Case Study


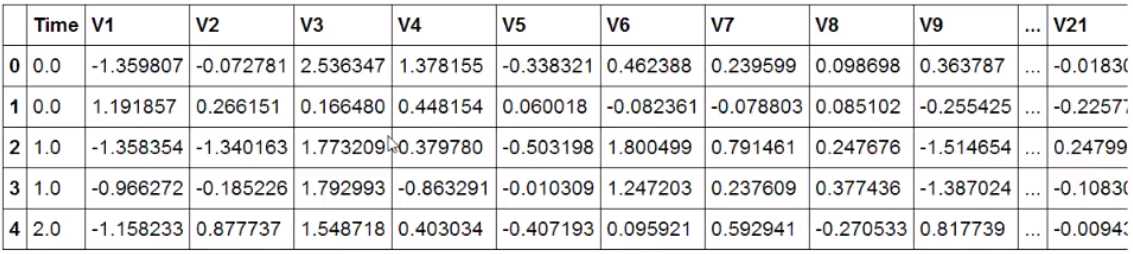

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

data = pd.read_csv("creditcard.csv")
data.head()

count_classes = data['Class'].value_counts(sort=True).sort_index()  # value_counts: number of rows per class
count_classes.plot(kind='bar')  # draw a bar chart
plt.title("Fraud class histogram")

plt.xlabel("class")

plt.ylabel("Frequency")

Handling class imbalance

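from sklearn.preprocessing import StandardScaler

# Amount has large values, so a learning algorithm would treat it as more important than it is.
# fit_transform standardizes it so that every column carries comparable weight.
# reshape(-1, 1): -1 lets NumPy infer that dimension (a 2x3 array reshaped with (-1, 2) gets 2*3/2 = 3 rows);
# 1 turns the data into a single column vector.
data['normAmount'] = StandardScaler().fit_transform(data['Amount'].values.reshape(-1, 1))
data = data.drop(['Time', 'Amount'], axis=1)  # drop the Time and Amount columns
data.head()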
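As a rough illustration of what the standardization step does to a single column, the sketch below applies the same transform (subtract the mean, divide by the population standard deviation) with plain NumPy. The amounts and variable names are made up for the example and are not taken from creditcard.csv.

```python
import numpy as np

# Hypothetical transaction amounts (not taken from creditcard.csv).
amounts = np.array([1.0, 20.0, 150.0, 2500.0, 9.5])

# Column vector of shape (5, 1), the layout scikit-learn expects for one feature.
column = amounts.reshape(-1, 1)

# Standardize: zero mean and unit variance (population standard deviation, ddof=0).
norm_amount = (column - column.mean(axis=0)) / column.std(axis=0)

print(column.shape)
print(norm_amount.ravel())
```

After the transform the column has mean 0 and standard deviation 1, so its scale no longer dominates the other features.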

Undersampling strategy:

X = data.loc[:, data.columns != 'Class']
y = data.loc[:, data.columns == 'Class']

number_records_fraud = len(data[data.Class == 1])  # number of samples with Class == 1
fraud_indices = np.array(data[data.Class == 1].index)  # indices of samples with Class == 1
normal_indices = data[data.Class == 0].index  # indices of samples with Class == 0

# Randomly pick as many normal samples as there are fraud samples, without replacement
random_normal_indices = np.random.choice(normal_indices, number_records_fraud, replace=False)
random_normal_indices = np.array(random_normal_indices)

under_sample_indices = np.concatenate([fraud_indices, random_normal_indices])  # append the two index arrays
under_sample_data = data.iloc[under_sample_indices, :]  # undersampled dataset

X_undersample = under_sample_data.loc[:, under_sample_data.columns != 'Class']
y_undersample = under_sample_data.loc[:, under_sample_data.columns == 'Class']
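The undersampling steps above can be tried on a small synthetic label array without the real dataset. This is a minimal sketch under assumed data: the 1000 labels, the 20 fraud rows, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical labels: 1000 rows, the last 20 of which are fraud (Class == 1).
labels = np.zeros(1000, dtype=int)
labels[-20:] = 1

fraud_idx = np.flatnonzero(labels == 1)
normal_idx = np.flatnonzero(labels == 0)

# Draw as many normal rows as there are fraud rows, without replacement.
sampled_normal_idx = rng.choice(normal_idx, size=len(fraud_idx), replace=False)

# Combine both index sets and look up the labels of the balanced subset.
under_sample_idx = np.concatenate([fraud_idx, sampled_normal_idx])
balanced_labels = labels[under_sample_idx]

print("fraud:", int((balanced_labels == 1).sum()),
      "normal:", int((balanced_labels == 0).sum()))
```

The balanced subset keeps every fraud row and discards most normal rows, which is the trade-off of undersampling: the classes are even, but much of the majority-class data is unused.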

Cross-validation

from sklearn.model_selection import train_test_split

# Whole dataset
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)  # random_state fixes the shuffle so the split is reproducible

# Undersampled dataset

X_train_undersample,X_test_undersample,y_train_undersample,y_test_undersample = train_test_split(X_undersample,y_undersample,test_size=0.3,random_state=0)

Model evaluation

from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.metrics import confusion_matrix, recall_score, classification_report

def printing_Kfold_scores(x_train_data, y_train_data):
    fold = KFold(n_splits=5, shuffle=False)  # split the training data into 5 folds
    c_param_range = [0.01, 0.1, 1, 10, 100]  # candidate penalty parameters (C is the inverse regularization strength)
    results_table = pd.DataFrame(index=range(len(c_param_range)), columns=['C_parameter', 'Mean recall score'])
    results_table['C_parameter'] = c_param_range
    j = 0
    for c_param in c_param_range:
        recall_accs = []
        for iteration, indices in enumerate(fold.split(x_train_data), start=1):
            # Logistic regression model: L1 penalizes the absolute values of the weights, L2 their squares.
            # The liblinear solver supports the L1 penalty.
            lr = LogisticRegression(C=c_param, penalty='l1', solver='liblinear')
            lr.fit(x_train_data.iloc[indices[0], :].values, y_train_data.iloc[indices[0], :].values.ravel())
            y_pred_undersample = lr.predict(x_train_data.iloc[indices[1], :].values)
            recall_acc = recall_score(y_train_data.iloc[indices[1], :].values, y_pred_undersample)  # recall
            recall_accs.append(recall_acc)
            print("Iteration", iteration, ': recall score = ', recall_acc)
        results_table.loc[j, 'Mean recall score'] = np.mean(recall_accs)
        j += 1
        print('Mean recall score', np.mean(recall_accs))
    best_c = results_table.loc[results_table['Mean recall score'].astype(float).idxmax()]['C_parameter']
    print("Best model", best_c)
    return best_c
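The function above picks C by mean recall, not accuracy. A small made-up example suggests why that matters on imbalanced data: a classifier that never flags fraud can still look accurate. The label counts below are hypothetical.

```python
import numpy as np

# Hypothetical ground truth: 990 normal transactions and 10 frauds.
y_true = np.array([0] * 990 + [1] * 10)

# A useless classifier that always predicts "normal".
y_pred = np.zeros_like(y_true)

accuracy = (y_true == y_pred).mean()

true_pos = int(((y_true == 1) & (y_pred == 1)).sum())
false_neg = int(((y_true == 1) & (y_pred == 0)).sum())
recall = true_pos / (true_pos + false_neg)  # recall = TP / (TP + FN)

print("accuracy:", accuracy)  # 0.99
print("recall:", recall)      # 0.0
```

Accuracy rewards the model for the 990 easy cases, while recall exposes that none of the 10 frauds were caught.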

Confusion matrix
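The code in the following sections calls plot_confusion_matrix, which this post never defines. The sketch below is one possible version, written as an assumption about what such a helper does: it draws the matrix on the current matplotlib axes and writes each count into its cell. The signature, colormap, and example counts are hypothetical.

```python
import itertools

import matplotlib.pyplot as plt
import numpy as np


def plot_confusion_matrix(cm, classes, title='Confusion matrix', cmap=plt.cm.Blues):
    """Draw a confusion matrix on the current axes (hypothetical helper)."""
    ax = plt.gca()
    ax.imshow(cm, interpolation='nearest', cmap=cmap)
    ax.set_title(title)
    tick_marks = np.arange(len(classes))
    ax.set_xticks(tick_marks)
    ax.set_xticklabels(classes)
    ax.set_yticks(tick_marks)
    ax.set_yticklabels(classes)

    # Write each count into its cell, using white text on dark cells.
    thresh = cm.max() / 2.0
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        ax.text(j, i, str(cm[i, j]), horizontalalignment='center',
                color='white' if cm[i, j] > thresh else 'black')

    ax.set_ylabel('True label')
    ax.set_xlabel('Predicted label')
    return ax


# Made-up counts: rows are true classes, columns are predicted classes.
example_cm = np.array([[140, 9], [12, 135]])
example_ax = plot_confusion_matrix(example_cm, classes=[0, 1], title='Example')
```

With rows as true labels, cm[1, 1] counts detected frauds and cm[1, 0] counts missed frauds, which is why recall is computed as cm[1, 1] / (cm[1, 0] + cm[1, 1]).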

Effect of the logistic regression threshold on results

lr = LogisticRegression(C=0.01, penalty='l1', solver='liblinear')
lr.fit(X_train_undersample.values, y_train_undersample.values.ravel())
y_pred_undersample_proba = lr.predict_proba(X_test_undersample.values)

thresholds = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
plt.figure(figsize=(10, 10))
j = 1
for i in thresholds:
    # Predict fraud when the predicted probability of class 1 exceeds the threshold
    y_test_predictions_high_recall = y_pred_undersample_proba[:, 1] > i
    plt.subplot(3, 3, j)
    j += 1
    cnf_matrix = confusion_matrix(y_test_undersample, y_test_predictions_high_recall)
    np.set_printoptions(precision=2)
    print("Recall metric in the testing dataset:", cnf_matrix[1, 1] / (cnf_matrix[1, 0] + cnf_matrix[1, 1]))
    class_names = [0, 1]
    plot_confusion_matrix(cnf_matrix, classes=class_names, title='Threshold > %s' % i)
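To see the threshold trade-off without training a model, the sketch below sweeps a few thresholds over hand-made probabilities and reports recall and precision at each one. The labels and probabilities are invented for illustration.

```python
import numpy as np

# Hypothetical true labels and predicted fraud probabilities for 8 transactions.
y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0])
proba_fraud = np.array([0.95, 0.70, 0.45, 0.20, 0.60, 0.30, 0.15, 0.05])

recalls, precisions = [], []
for threshold in [0.1, 0.3, 0.5, 0.7, 0.9]:
    y_pred = proba_fraud > threshold  # flag as fraud above the threshold
    tp = int((y_pred & (y_true == 1)).sum())
    fn = int((~y_pred & (y_true == 1)).sum())
    fp = int((y_pred & (y_true == 0)).sum())
    recall = tp / (tp + fn)
    precision = tp / (tp + fp) if (tp + fp) else float('nan')
    recalls.append(recall)
    precisions.append(precision)
    print(f"threshold={threshold}: recall={recall:.2f}, precision={precision:.2f}")
```

In this toy data a low threshold catches every fraud but also flags several normal transactions, while a high threshold flags almost nothing; the right setting depends on how costly a missed fraud is compared with a false alarm.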

SMOTE sample generation (oversampling)

import pandas as pd
from imblearn.over_sampling import SMOTE
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

credit_cards = pd.read_csv('creditcard.csv')

columns = credit_cards.columns

features_columns = columns.delete(len(columns)-1)

features = credit_cards[features_columns]

labels = credit_cards['Class']

features_train,features_test,labels_train,labels_test = train_test_split(features,labels,test_size=0.2,random_state=0)

oversampler = SMOTE(random_state=0)

os_features, os_labels = oversampler.fit_resample(features_train, labels_train)  # newer imbalanced-learn releases use fit_resample instead of fit_sample

len(os_labels[os_labels==1])

os_features = pd.DataFrame(os_features)

os_labels = pd.DataFrame(os_labels)

best_c = printing_Kfold_scores(os_features,os_labels)

lr = LogisticRegression(C=best_c, penalty='l1', solver='liblinear')
lr.fit(os_features.values, os_labels.values.ravel())
y_pred = lr.predict(features_test.values)
cnf_matrix = confusion_matrix(labels_test, y_pred)
np.set_printoptions(precision=2)
print('recall metric:', cnf_matrix[1, 1] / (cnf_matrix[1, 0] + cnf_matrix[1, 1]))

class_names = [0, 1]
plt.figure()
plot_confusion_matrix(cnf_matrix, classes=class_names, title='Confusion matrix')

plt.show()
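SMOTE creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority-class neighbors. The sketch below is a simplified illustration of that idea in plain NumPy; it is not imbalanced-learn's implementation, and the function name, parameters, and sample points are all hypothetical.

```python
import numpy as np


def smote_like_samples(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbors.

    Simplified illustration of the SMOTE idea; not imbalanced-learn's implementation.
    """
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances between minority points.
    diffs = minority[:, None, :] - minority[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=2))
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # k nearest neighbors per point

    synthetic = np.empty((n_new, minority.shape[1]))
    for row in range(n_new):
        base = rng.integers(len(minority))
        neighbor = neighbors[base, rng.integers(k)]
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic[row] = minority[base] + gap * (minority[neighbor] - minority[base])
    return synthetic


# Hypothetical minority-class points with two features.
minority_points = np.array([[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3], [0.9, 0.7]])
new_points = smote_like_samples(minority_points, n_new=10)
print(new_points.shape)
```

Because each synthetic point lies on a segment between two real minority points, the new samples stay inside the region the minority class already occupies. Oversampling is applied to the training split only, as in the code above, so the test set still reflects the original class balance.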
