使用 BeautifulSoup抓取网页信息信息

Posted Michael2397

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了使用 BeautifulSoup抓取网页信息信息相关的知识,希望对你有一定的参考价值。

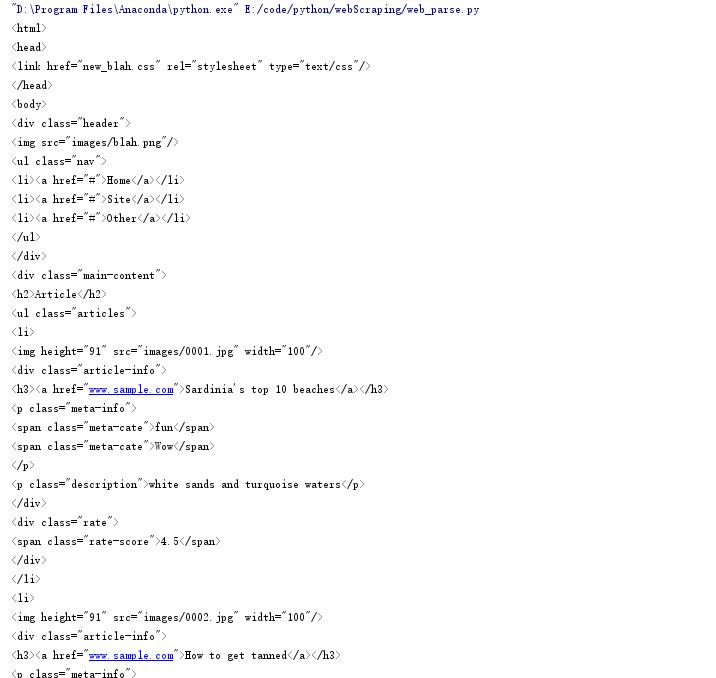

一、解析网页信息

from bs4 import BeautifulSoup with open(\'C:/Users/michael/Desktop/Plan-for-combating-master/week1/1_2/1_2code_of_video/web/new_index.html\',\'r\') as web_data: Soup = BeautifulSoup(web_data,\'lxml\') print(Soup)

二、获取要爬取元素的位置

浏览器右键-》审查元素-》copy-》seletor

""" body > div.main-content > ul > li:nth-child(1) > div.article-info > h3 > a body > div.main-content > ul > li:nth-child(1) > div.article-info > p.meta-info > span:nth-child(2) body > div.main-content > ul > li:nth-child(1) > div.article-info > p.description body > div.main-content > ul > li:nth-child(1) > div.rate > span body > div.main-content > ul > li:nth-child(1) > img """

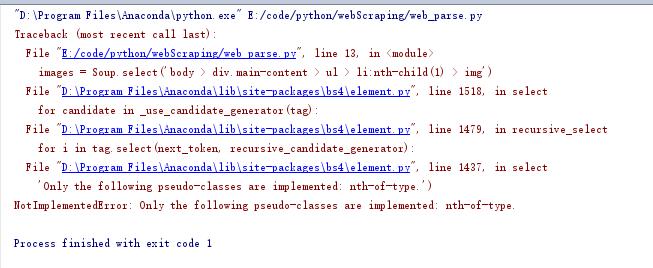

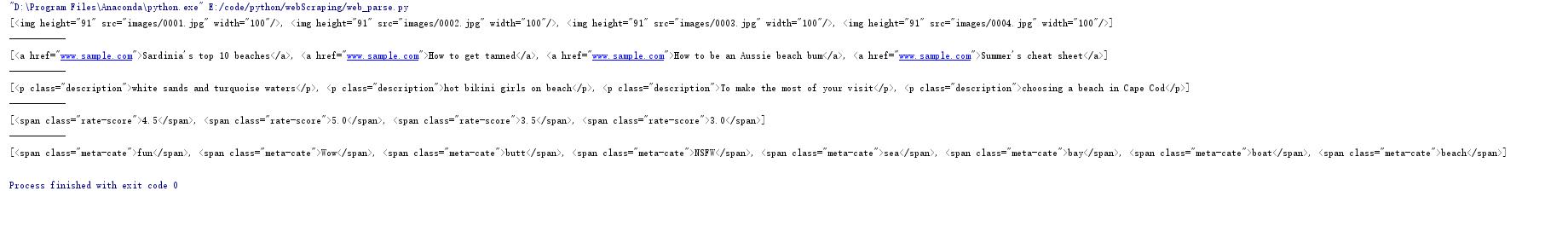

images = Soup.select(\'body > div.main-content > ul > li:nth-child(1) > img\') print(images)

修改成:

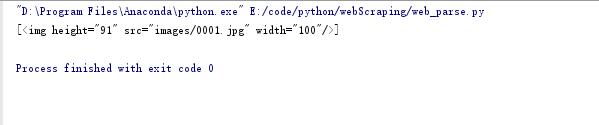

images = Soup.select(\'body > div.main-content > ul > li:nth-of-type(1) > img\') print(images)

这时候能获取到一个

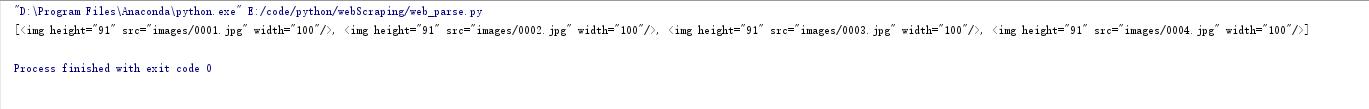

images = Soup.select(\'body > div.main-content > ul > li > img\') print(images)

获取到了所有图片

titles = Soup.select(\'body > div.main-content > ul > li > div.article-info > h3 > a\') descs = Soup.select(\'body > div.main-content > ul > li > div.article-info > p.description\') rates = Soup.select(\' body > div.main-content > ul > li > div.rate > span\') cates = Soup.select(\' body > div.main-content > ul > li > div.article-info > p.meta-info > span\') print(images,titles,descs,rates,cates,sep=\'\\n-----------\\n\')

获取到了其他信息

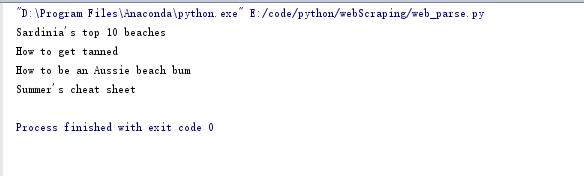

三、获取标签中的文本信息(get_text())及属性(get())

for title in titles: print(title.get_text())

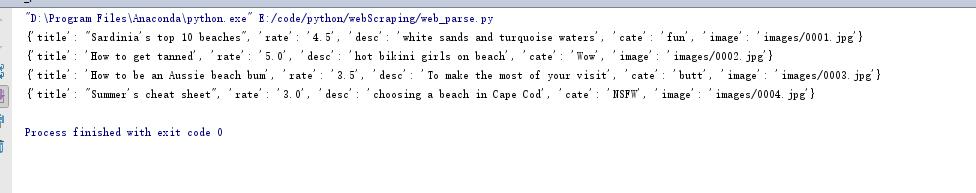

封装成字典:

for title,image,desc,rate,cate in zip(titles,images,descs,rates,cates): data = { \'title\':title.get_text(), \'rate\':rate.get_text(), \'desc\':desc.get_text(), \'cate\':cate.get_text(), \'image\':image.get(\'src\') } print(data)

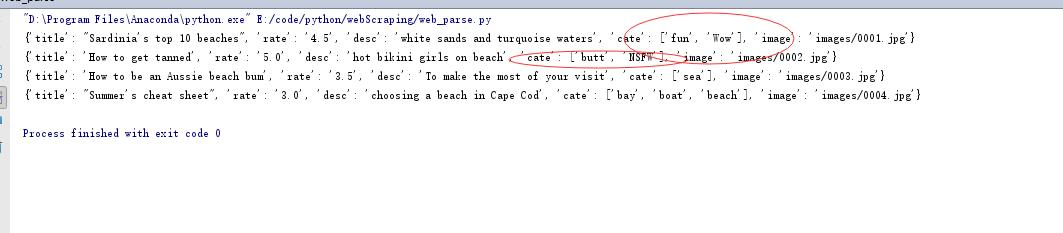

因为cates有多个属性,需要上升到父节点

cates = Soup.select(\' body > div.main-content > ul > li > div.article-info > p.meta-info\')

for title,image,desc,rate,cate in zip(titles,images,descs,rates,cates): data = { \'title\':title.get_text(), \'rate\':rate.get_text(), \'desc\':desc.get_text(), \'cate\':list(cate.stripped_strings), \'image\':image.get(\'src\') } print(data)

#找到评分大于3的文章 for i in info: if float(i[\'rate\'])>3: print(i[\'title\'],i[\'cate\'])

四、完整代码

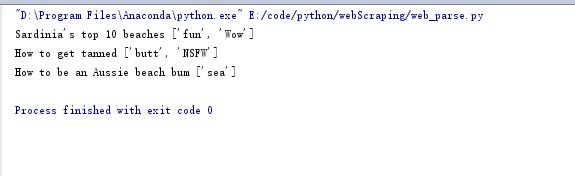

from bs4 import BeautifulSoup info =[] with open(\'C:/Users/michael/Desktop/Plan-for-combating-master/week1/1_2/1_2code_of_video/web/new_index.html\',\'r\') as web_data: Soup = BeautifulSoup(web_data,\'lxml\') # print(Soup) """ body > div.main-content > ul > li:nth-child(1) > div.article-info > h3 > a body > div.main-content > ul > li:nth-child(1) > div.article-info > p.meta-info > span:nth-child(2) body > div.main-content > ul > li:nth-child(1) > div.article-info > p.description body > div.main-content > ul > li:nth-child(1) > div.rate > span body > div.main-content > ul > li:nth-child(1) > img """ images = Soup.select(\'body > div.main-content > ul > li > img\') titles = Soup.select(\'body > div.main-content > ul > li > div.article-info > h3 > a\') descs = Soup.select(\'body > div.main-content > ul > li > div.article-info > p.description\') rates = Soup.select(\' body > div.main-content > ul > li > div.rate > span\') cates = Soup.select(\' body > div.main-content > ul > li > div.article-info > p.meta-info\') # print(images,titles,descs,rates,cates,sep=\'\\n-----------\\n\') for title,image,desc,rate,cate in zip(titles,images,descs,rates,cates): data = { \'title\':title.get_text(), \'rate\':rate.get_text(), \'desc\':desc.get_text(), \'cate\':list(cate.stripped_strings), \'image\':image.get(\'src\') } #添加到列表中 info.append(data) #找到评分大于3的文章 for i in info: if float(i[\'rate\'])>3: print(i[\'title\'],i[\'cate\'])

以上是关于使用 BeautifulSoup抓取网页信息信息的主要内容,如果未能解决你的问题,请参考以下文章