Scraping All Campus News

Posted by 410陈锐锦


Fetch the title, link, time, source, content, and click count of a single news item, then wrap the logic in a function.

Click count:

    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

    res = requests.get('http://news.gzcc.cn/html//xiaoyuanxinwen/')
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    for news in soup.select('li'):
        # Only <li> elements that contain a news title are news entries.
        if len(news.select('.news-list-title')) > 0:
            t = news.select('.news-list-title')[0].text              # title
            a = news.select('a')[0]['href']                          # link
            d = news.select('.news-list-info')[0].contents[0].text   # time
            dt = datetime.strptime(d, '%Y-%m-%d')
            s = news.select('.news-list-info')[0].contents[1].text   # source
            # print(t, a, d, s)

            # Fetch the detail page to get the article content.
            resl = requests.get(a)
            resl.encoding = 'utf-8'
            soupl = BeautifulSoup(resl.text, 'html.parser')
            ar = soupl.select('.show-content')[0]

            # Click count comes from a separate API; the id is hard-coded here.
            click = requests.get('http://oa.gzcc.cn/api.php?op=count&id=8302&modelid=80').text.split('.')[-1].lstrip("html('").rstrip("');")
            print(click)
            break  # stop after the first news item
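The chained `split`/`lstrip`/`rstrip` call above works because the count consists only of digits, but `lstrip` and `rstrip` remove sets of characters rather than a literal prefix or suffix. A minimal sketch of a regex-based alternative follows. The helper name `parse_click` and the sample response string are assumptions for illustration: the sample is modelled on the `html('...');` ending that the original code strips, and is not output captured from the site.

```python
import re


def parse_click(response_text):
    """Return the integer inside the last html('<digits>') call in response_text."""
    matches = re.findall(r"html\('(\d+)'\)", response_text)
    if not matches:
        raise ValueError("no html('<digits>') call found in response")
    return int(matches[-1])


# Hypothetical response body, assumed to resemble what the count API returns.
sample = "$('#todaydowns').html('5');$('#hits').html('245');"
print(parse_click(sample))
```

Raising an error on an unexpected response makes a changed API format visible immediately instead of silently producing a wrong number.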

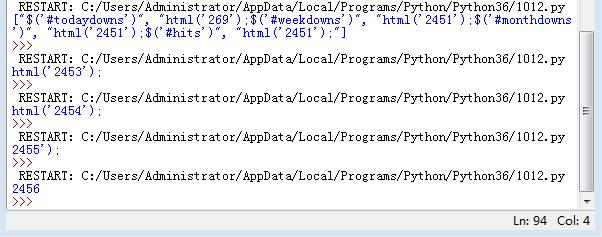

Result:

The same logic wrapped into functions:

    import re
    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime


    def getclick(newsurl):
        # The news id is the part after the last '/' in the matched group.
        # Note: this pattern only matches 2017 article URLs.
        news_id = re.match(r'http://news\.gzcc\.cn/html/2017/xiaoyuanxinwen_(.*)\.html', newsurl).groups()[0].split('/')[1]
        clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(news_id)
        click = int(requests.get(clickurl).text.split('.')[-1].lstrip("html('").rstrip("');"))
        return click


    def getonepage(listurl):
        res = requests.get(listurl)
        res.encoding = 'utf-8'
        soup = BeautifulSoup(res.text, 'html.parser')
        for news in soup.select('li'):
            if len(news.select('.news-list-title')) > 0:
                t = news.select('.news-list-title')[0].text              # title
                a = news.select('a')[0]['href']                          # link
                d = news.select('.news-list-info')[0].contents[0].text   # time
                dt = datetime.strptime(d, '%Y-%m-%d')
                s = news.select('.news-list-info')[0].contents[1].text   # source
                # print(t, a, d, s)

                # Fetch the detail page for the article text and click count.
                resl = requests.get(a)
                resl.encoding = 'utf-8'
                soupl = BeautifulSoup(resl.text, 'html.parser')
                ar = soupl.select('.show-content')[0].text
                click = getclick(a)
                print(click, t, dt, s, a, ar)


    # First listing page.
    getonepage('http://news.gzcc.cn/html/xiaoyuanxinwen/index.html')

    # Read the total item count to work out how many listing pages exist.
    res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen')
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    page = int(soup.select('.a1')[0].text.rstrip('条')) // 10 + 1

    # Only pages 2 and 3 are fetched here; use range(2, page + 1) to crawl every page.
    for i in range(2, 4):
        listurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
        getonepage(listurl)
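The page count above is computed as `total // 10 + 1`, which gives one page too many whenever the total is an exact multiple of 10. As a sketch, assuming 10 items per listing page and the `index.html`, `2.html`, `3.html` URL scheme used above, the listing URLs could be generated with ceiling division instead. `build_list_urls` and `BASE` are hypothetical names introduced for this example.

```python
BASE = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'


def build_list_urls(total_items, per_page=10):
    """Return listing-page URLs covering total_items news entries."""
    pages = (total_items + per_page - 1) // per_page  # ceiling division
    urls = []
    for i in range(1, pages + 1):
        # Page 1 is index.html; later pages are named by their page number.
        name = 'index.html' if i == 1 else '{}.html'.format(i)
        urls.append(BASE + name)
    return urls


for url in build_list_urls(25):
    print(url)
```

Each generated URL could then be passed to `getonepage` to crawl the full listing.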

Finally, scrape the corresponding data for another topic of your own choosing.
