Link Aggregation and Bridging Tests. Posted 2020-10-09 by 遠離塵世の方舟



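Purpose: bind two NICs on a host together to form a single logical port.

In RHEL 5/6 this is called NIC bonding, and it requires loading a kernel module.

How link aggregation works

The real IP address is not configured on a physical NIC. Instead, two or more physical NICs are aggregated into one virtual NIC, and the address is set on that virtual NIC. External hosts reach the machine through the virtual NIC's address; the virtual NIC receives the traffic, which is spread across the member NICs before being handed to the server. If one NIC fails, traffic flows through the other, so communication continues. In this experiment the two newly added NICs do not have to be in the active state, and they may not even appear in the output of nmcli connection show. NICs that are already active work as well.

Working modes of link aggregation

Test environment: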

[root@localhost ~]# ifconfig

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

[root@localhost ~]# hostnamectl

I. Testing active-backup mode

1. Add another NIC in host mode

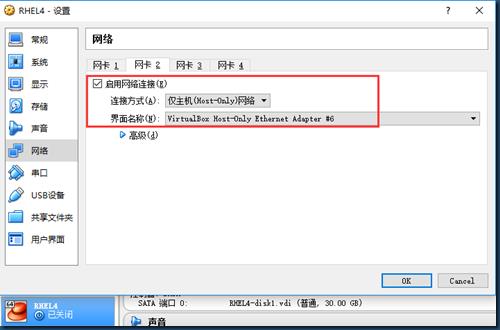

Shut down the virtual machine first, then enable Adapter 2 in the VirtualBox interface.

Then start the virtual machine.

2. View the NIC information

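[root@localhost ~]# nmcli device show | grep -i device     # the newly added NIC is enp0s8

[root@localhost ~]# nmcli connection show     # enp0s8 is not listed because it has no connection profile

nmcli connection show does not list enp0s8 because it has no connection profile; once a profile is added by hand, enp0s8 appears. Strictly speaking, neither enp0s8 nor enp0s3 needs a profile of its own, because both NICs are about to be bound together into a new team device that uses its own profile. The profile is created here only to demonstrate this small point.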

[root@localhost ~]# nmcli connection add con-name enp0s8 type ethernet ifname enp0s8

3. Add the team device and its connection profile

[root@localhost ~]# nmcli connection add type team con-name testteamfile ifname testteamdevice config '{"runner":{"name":"activebackup"}}'

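Notes:

In the command above, con-name testteamfile names the connection profile, ifname testteamdevice names the virtual team device, and config passes the JSON configuration '{"runner":{"name":"activebackup"}}', which selects the activebackup runner.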

If the profile was added incorrectly, remove it with nmcli connection delete testteamfile and add it again.
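The quoting of the JSON argument is the part of step 3 that most easily goes wrong. As a minimal sketch, the hypothetical helper team_runner_json below (it is not part of nmcli) builds that JSON for one of the teamd runner names and prints the resulting command instead of running it, so the quoting can be checked first:

```shell
#!/usr/bin/env bash
# Sketch: build the teamd JSON that is passed to `nmcli ... config`.
# team_runner_json is a hypothetical helper, not an nmcli feature.
team_runner_json() {
    local runner="$1"
    case "$runner" in
        activebackup|roundrobin|broadcast|random|loadbalance|lacp)
            printf '{"runner":{"name":"%s"}}\n' "$runner"
            ;;
        *)
            echo "unknown runner: $runner" >&2
            return 1
            ;;
    esac
}

# Print the command only; nothing is changed on the system.
echo nmcli connection add type team con-name testteamfile \
    ifname testteamdevice config "'$(team_runner_json activebackup)'"
```

Once the printed line looks right, it can be pasted into a root shell as-is.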

Once the profile has been added successfully, its file is generated under /etc/sysconfig/network-scripts/.

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

4. Configure an IP address for the team device

[root@localhost network-scripts]# nmcli connection modify testteamfile ipv4.method manual ipv4.addresses 192.168.100.123/24 ipv4.gateway 192.168.100.100 ipv4.dns 192.168.100.1 connection.autoconnect yes

5. Bind the two NICs enp0s3 and enp0s8 to the virtual NIC

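[root@localhost ~]# nmcli connection add type team-slave con-name testteamslave1 ifname enp0s3 master testteamdevice

[root@localhost ~]# nmcli connection add type team-slave con-name testteamslave2 ifname enp0s8 master testteamdevice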

[root@localhost ~]# nmcli connection show

enp0s3 -----> testteamslave1 -----> testteamdevice

enp0s8 -----> testteamslave2 -----> testteamdevice

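One way to picture it: enp0s3 and enp0s8 have been taken over by their master, testteamdevice, and serve it as slave ports, one under the name slave1 and the other under the name slave2.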
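The port-to-profile mapping above can be sketched as a loop. The helper slave_add_cmds is hypothetical; it only prints one team-slave command per physical port, numbering the profiles testteamslave1, testteamslave2, and so on:

```shell
#!/usr/bin/env bash
# Sketch: emit one team-slave profile command per physical port.
# slave_add_cmds is a hypothetical helper; it prints commands and runs nothing.
slave_add_cmds() {
    local master="$1"; shift
    local i=1 port
    for port in "$@"; do
        echo "nmcli connection add type team-slave con-name testteamslave$i ifname $port master $master"
        i=$((i + 1))
    done
}

slave_add_cmds testteamdevice enp0s3 enp0s8
```

Printing first makes it easy to confirm that each interface name is spelled correctly before any profile is created.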

6. Activate the virtual NIC testteamdevice

[root@localhost ~]# nmcli connection up testteamfile     # note: this brings up the connection profile, not the device

[root@localhost ~]# nmcli connection up testteamslave1

[root@localhost ~]# nmcli connection up testteamslave2

Bring up the master first, then the two slaves.
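The activation order can be captured in a small script. This is a sketch under the assumption that a dry run is wanted by default: the DRY_RUN variable and the run and bring_up_team helpers are illustrative names, not part of NetworkManager.

```shell
#!/usr/bin/env bash
# Sketch: bring up the master profile first, then each slave profile.
# DRY_RUN defaults to 1, so the commands are printed rather than executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

bring_up_team() {
    local con
    for con in "$@"; do          # the first argument is the master profile
        run nmcli connection up "$con" || return 1
    done
}

bring_up_team testteamfile testteamslave1 testteamslave2
```

Setting DRY_RUN=0 in a root shell would execute the same three commands in that order and stop at the first failure.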

[root@localhost ~]# nmcli connection show

[root@localhost ~]# ifconfig

enp0s8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

testteamdevice: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500


The output above shows that the three interfaces testteamdevice, enp0s3, and enp0s8 all have the same MAC address.

Three commonly used commands:

1. View the state of the virtual NIC

[root@localhost ~]# teamdctl testteamdevice state

2. View the ports of the virtual NIC

[root@localhost ~]# teamnl testteamdevice -h

[root@localhost ~]# teamnl testteamdevice getoption activeport     # the port currently in use; here it is enp0s3

Verification:

[root@localhost ~]# nmcli device disconnect enp0s3     # disconnect enp0s3

[root@localhost ~]# teamnl testteamdevice ports

3:enp0s8:up 1000Mbit FD
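To check a failover without reading the listing by eye, the port lines can be filtered. The following is a sketch that assumes each line has the form index: name: state speed duplex, as in the line above. up_ports is a hypothetical helper, and the sample "down" line is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: list the ports that a `teamnl <dev> ports` listing reports as up.
# up_ports is a hypothetical helper; it reads the listing on stdin.
up_ports() {
    awk -F':' '{
        name = $2; state = $3
        gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim blanks around the interface name
        sub(/^[ \t]+/, "", state)           # drop blanks before the state word
        split(state, f, /[ \t]+/)
        if (f[1] == "up") print name
    }'
}

# Sample input; the first line is invented, the second is the line shown above.
# On a real host: teamnl testteamdevice ports | up_ports
printf '%s\n' '2: enp0s3: down 0Mbit HD' '3:enp0s8:up 1000Mbit FD' | up_ports
```

After enp0s3 is disconnected, a listing that yields only enp0s8 is consistent with traffic having moved to the backup port.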

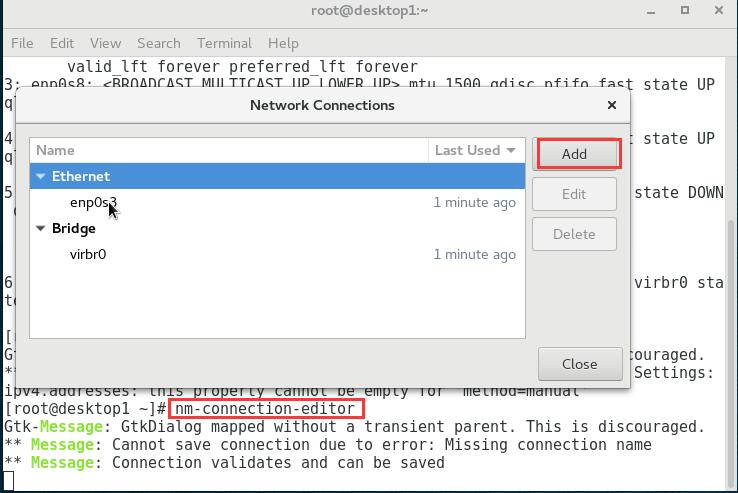

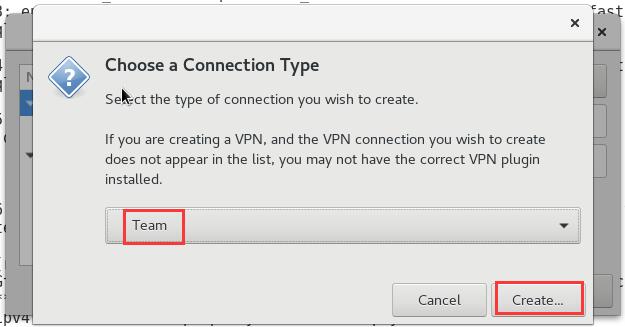

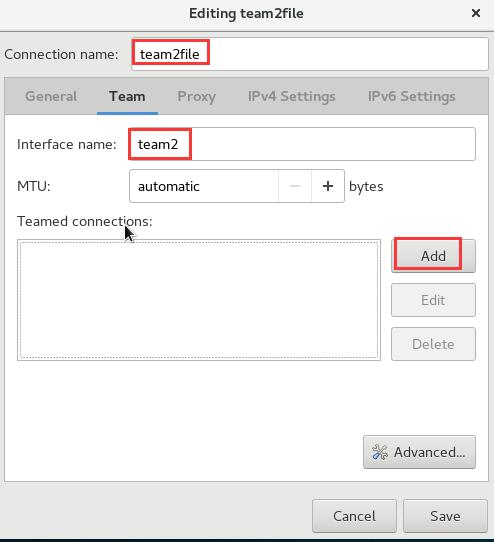

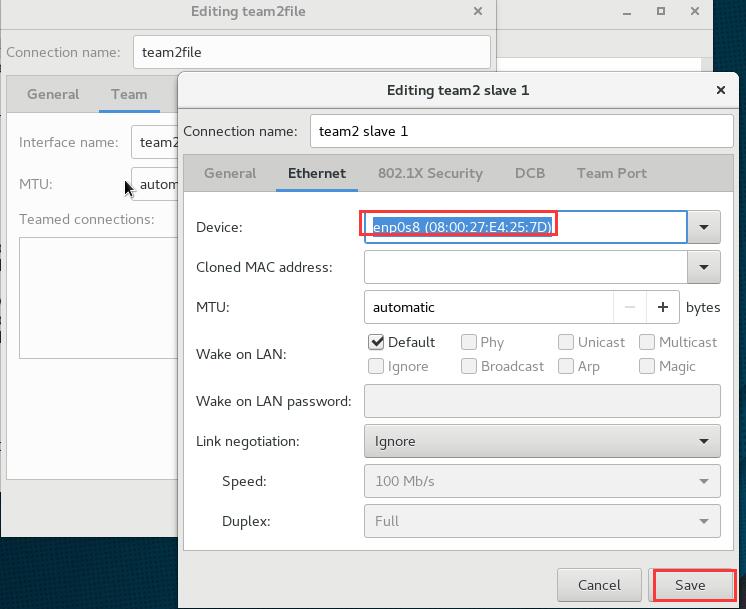

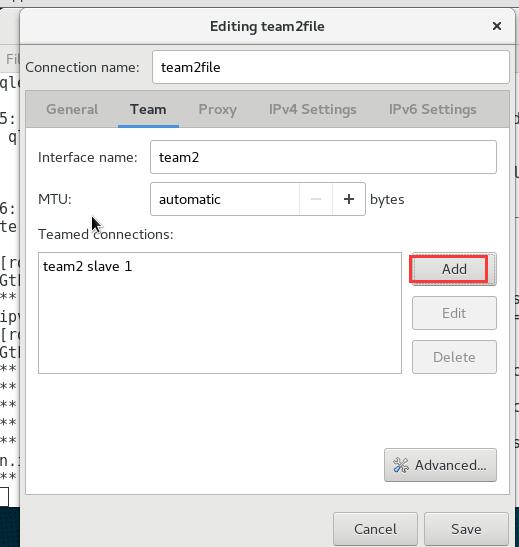

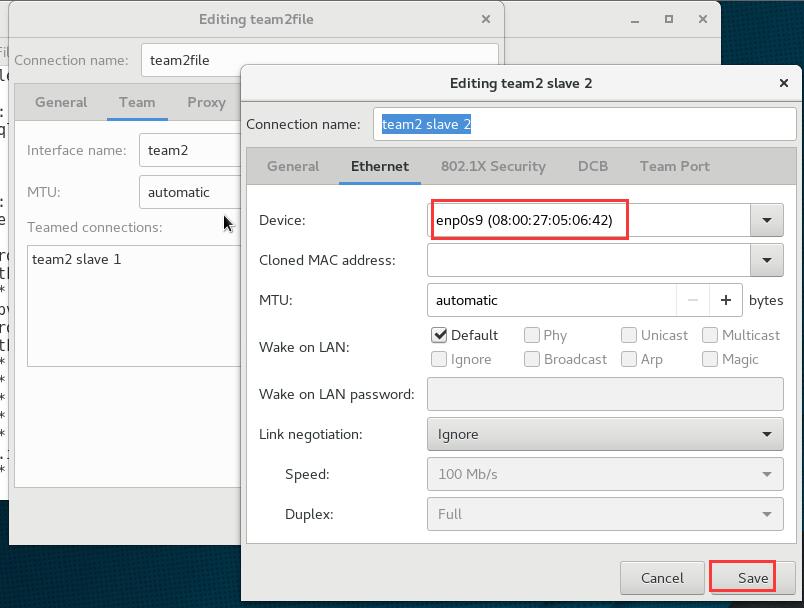

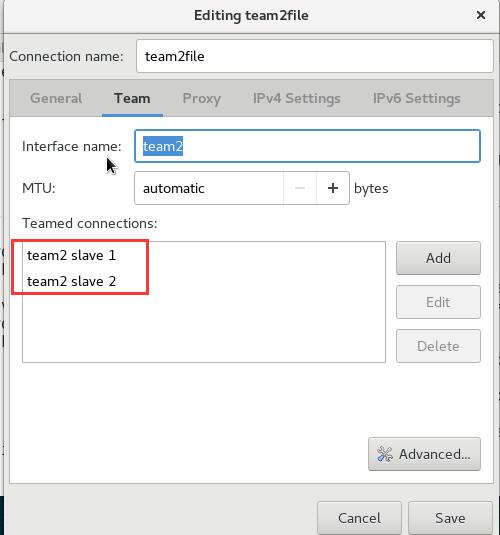

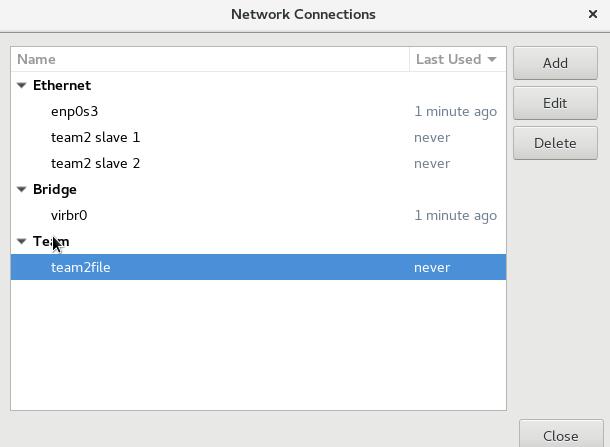

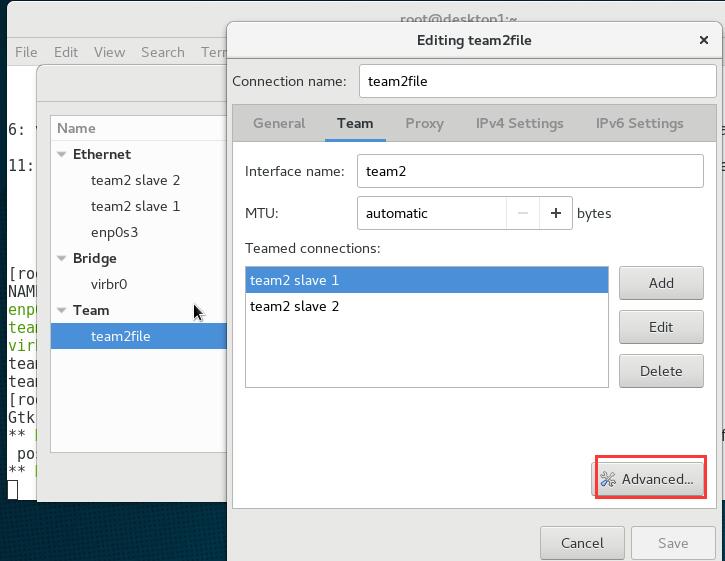

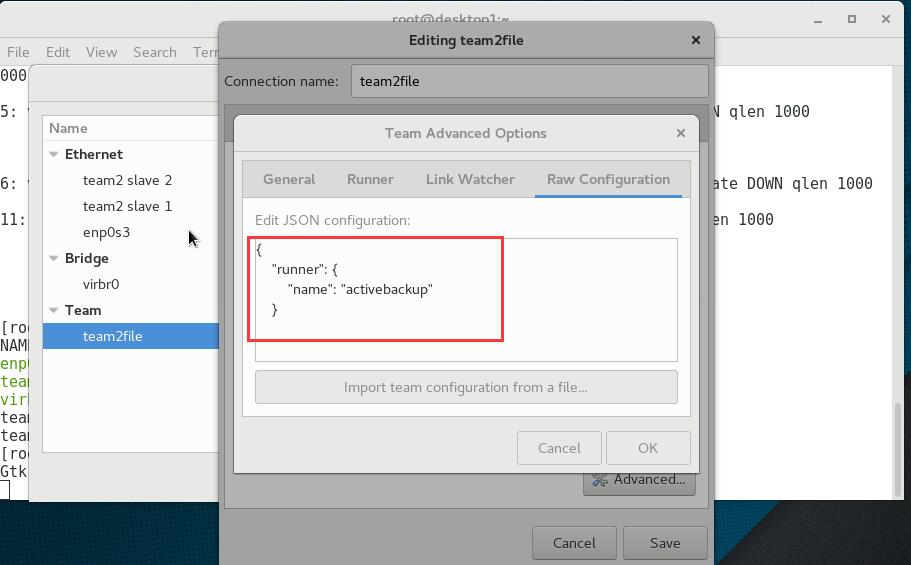

Configuring through the graphical interface:

# nm-connection-editor

In the same way, add the team device's other NIC.

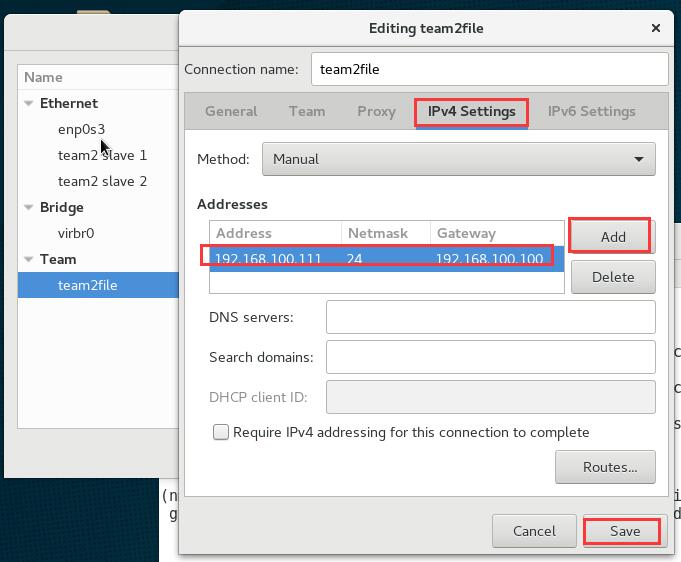

Configure an IP address for the team2 device:

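II. Bridging

Requirement: turn one of host 1's NICs into a bridge device (acting as a switch), so that other clients connected to it can communicate normally with host 1.

The following converts the NIC enp0s8 into a bridge device: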

[root@localhost ~]# nmcli connection show

enp0s8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

[root@localhost ~]# nmcli connection add type bridge con-name testbrifile ifname testbridevice
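The walkthrough ends once the bridge profile is created. As a sketch of how the remaining steps might look, assuming enp0s8 is attached through a bridge-slave profile (the profile name testbrislave1 and the helper bridge_cmds are hypothetical and do not come from the original), the script below only prints the commands:

```shell
#!/usr/bin/env bash
# Sketch only: print the nmcli commands rather than run them.
# testbrislave1 is a hypothetical profile name, not taken from the original text.
bridge_cmds() {
    local bridge_dev="$1" bridge_con="$2" port="$3"
    echo "nmcli connection add type bridge con-name $bridge_con ifname $bridge_dev"
    echo "nmcli connection add type bridge-slave con-name testbrislave1 ifname $port master $bridge_dev"
    echo "nmcli connection up $bridge_con"
}

bridge_cmds testbridevice testbrifile enp0s8
```

Addressing for the bridge is left out on purpose, since the original gives no values for it.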
