Scraping a News List with the requests and BeautifulSoup4 Libraries

Posted by 胡思琪


1. Use the requests and BeautifulSoup4 libraries to scrape the time, title, link, and source of each item in the campus news list.

    import requests
    from bs4 import BeautifulSoup

    gzccurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    res = requests.get(gzccurl)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    for news in soup.select('li'):
        # Only <li> elements that contain a title are news items
        if len(news.select('.news-list-title')) > 0:
            title = news.select('.news-list-title')[0].text  # title
            url = news.select('a')[0]['href']  # link
            time = news.select('.news-list-info')[0].contents[0].text  # time
            source = news.select('.news-list-info')[0].contents[1].text  # source
            # Detail page (not fetched in this step)
            # resd = requests.get(url)
            # resd.encoding = 'utf-8'
            # soupd = BeautifulSoup(resd.text, 'html.parser')
            # detail = soupd.select('.show-content')
            print(time, '\n', title, '\n', url, '\n', source, '\n')

Result: the script prints the time, title, link, and source of each news item, one value per line.
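The list-parsing step can also be tried offline, without requesting the live page. The following is a minimal sketch, not the author's code: `parse_news_list` and `SAMPLE_HTML` are hypothetical names, and the sample markup is only an assumption about how the list page is structured (a `.news-list-title` element plus two `<span>` children inside `.news-list-info`).

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, assumed to resemble the list page's structure.
SAMPLE_HTML = """
<ul>
  <li><a href="http://example.com/news/1.html">
    <div class="news-list-title">First headline</div>
    <div class="news-list-info"><span>2018-04-01</span><span>News Office</span></div>
  </a></li>
  <li><a href="http://example.com/about.html">Navigation entry, not a news item</a></li>
</ul>
"""


def parse_news_list(html):
    """Return one dict per <li> that contains a .news-list-title element."""
    soup = BeautifulSoup(html, 'html.parser')
    items = []
    for li in soup.select('li'):
        title_tag = li.select_one('.news-list-title')
        if title_tag is None:
            continue  # skip menu entries and other non-news <li> elements
        # Select the <span> children directly instead of indexing .contents,
        # so stray whitespace text nodes cannot shift the positions.
        spans = li.select('.news-list-info span')
        items.append({
            'title': title_tag.text.strip(),
            'url': li.select_one('a')['href'],
            'time': spans[0].text.strip(),
            'source': spans[1].text.strip(),
        })
    return items


items = parse_news_list(SAMPLE_HTML)
for item in items:
    print(item['time'], item['title'], item['url'], item['source'])
```

Returning a list of dicts rather than printing inside the loop keeps parsing separate from output, which makes the later steps (date conversion, detail fetching) easier to add.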

2. Convert the time string to a datetime object.

    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

    gzccurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    res = requests.get(gzccurl)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    # def getdetail(url):
    #     resd = requests.get(url)
    #     resd.encoding = 'utf-8'
    #     soupd = BeautifulSoup(resd.text, 'html.parser')
    #     return soupd.select('.show-content')[0].text

    for news in soup.select('li'):
        if len(news.select('.news-list-title')) > 0:
            title = news.select('.news-list-title')[0].text  # title
            url = news.select('a')[0]['href']  # link
            time = news.select('.news-list-info')[0].contents[0].text
            dt = datetime.strptime(time, '%Y-%m-%d')  # str -> datetime
            source = news.select('.news-list-info')[0].contents[1].text  # source
            # detail = getdetail(url)  # detail
            print(dt, '\n', title, '\n', url, '\n', source, '\n')

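Result: the same listing, with each date now printed as a datetime value (the time part is 00:00:00 because only the date is parsed).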
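The conversion itself can be isolated in a small helper. This is a sketch under the assumption that every date on the page uses the `%Y-%m-%d` format; `parse_date` is a hypothetical name, and returning `None` for unparseable input is one possible design choice rather than something the original code does.

```python
from datetime import datetime


def parse_date(text, fmt='%Y-%m-%d'):
    """Parse a date string; return None instead of raising on bad input."""
    try:
        return datetime.strptime(text.strip(), fmt)
    except ValueError:
        return None


dt = parse_date(' 2018-04-01 ')
print(dt)                        # 2018-04-01 00:00:00
print(dt.strftime('%Y/%m/%d'))   # 2018/04/01
print(parse_date('April 1'))     # None
```

Once the value is a `datetime`, it can be compared, sorted, or reformatted with `strftime`, which is not possible with the raw string.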

3. Wrap the code that fetches the detail content in a function.

    import requests
    from bs4 import BeautifulSoup
    from datetime import datetime

    gzccurl = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    res = requests.get(gzccurl)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    def getdetail(url):
        """Fetch a news detail page and return the text of its body."""
        resd = requests.get(url)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')  # parse the detail page, not the list page
        return soupd.select('.show-content')[0].text

    for news in soup.select('li'):
        if len(news.select('.news-list-title')) > 0:
            title = news.select('.news-list-title')[0].text  # title
            url = news.select('a')[0]['href']  # link
            time = news.select('.news-list-info')[0].contents[0].text
            dt = datetime.strptime(time, '%Y-%m-%d')
            source = news.select('.news-list-info')[0].contents[1].text  # source
            detail = getdetail(url)  # detail
            print(dt, '\n', title, '\n', url, '\n', source, '\n')
            break  # stop after the first news item

Result:

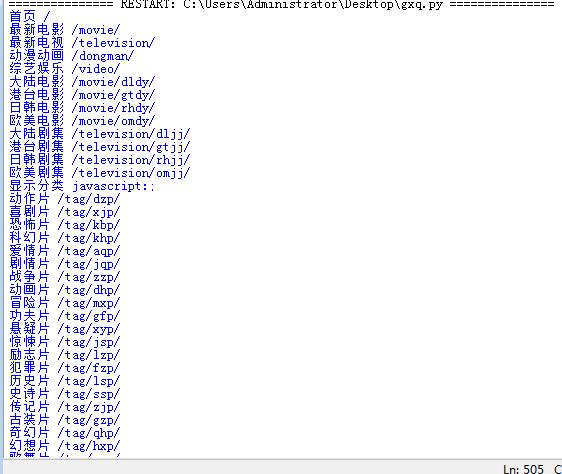
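A slightly more defensive variant of the detail function might look like the sketch below. It is an illustration, not the author's implementation: `extract_detail` and `get_detail` are hypothetical names, the 10-second timeout is an arbitrary assumption, and the sample markup is invented for the offline check. Splitting parsing from fetching lets the parsing part be checked without a network request.

```python
import requests
from bs4 import BeautifulSoup


def extract_detail(html):
    """Return the text of the first .show-content element, or '' if absent."""
    soup = BeautifulSoup(html, 'html.parser')
    node = soup.select_one('.show-content')
    return node.get_text(strip=True) if node else ''


def get_detail(url, timeout=10):
    """Fetch a detail page and return its main text."""
    resp = requests.get(url, timeout=timeout)
    resp.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    resp.encoding = 'utf-8'
    return extract_detail(resp.text)


# Offline check with hypothetical markup; no network request is made here.
sample = '<div class="show-content"><p>Body text.</p></div>'
print(extract_detail(sample))                    # Body text.
print(repr(extract_detail('<p>no match</p>')))   # ''
```

Using `select_one` with an explicit `None` check avoids the `IndexError` that `select(...)[0]` raises when a page has no `.show-content` element.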

4. Pick a topic that interests you and do something similar, as preparation for "scraping web data and performing text analysis".

    import requests
    from bs4 import BeautifulSoup

    jq = 'http://www.lbldy.com/tag/gqdy/'
    res = requests.get(jq)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')

    for news in soup.select('li'):
        # Only <li> elements that contain a link are of interest
        if len(news.select('a')) > 0:
            title = news.select('a')[0].text
            url = news.select('a')[0]['href']
            # time = news.select('span')[0].contents[0].text
            # print(time, title, url)
            print(title, url)

Result: the script prints the title and link of every list item that contains a link.
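When the same approach is applied to an arbitrary site, two details often need attention: links may be relative, and some `<a>` tags may have no `href` at all. The sketch below shows one way to handle both. `extract_links` is a hypothetical helper, and the sample markup and `example.com` base URL are assumptions made for illustration, not the structure of the site used above.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def extract_links(html, base_url):
    """Return (title, absolute_url) pairs for the first link in each <li>."""
    soup = BeautifulSoup(html, 'html.parser')
    links = []
    for li in soup.select('li'):
        a = li.select_one('a[href]')  # ignore <li> elements without a usable link
        if a is None:
            continue
        # urljoin resolves relative hrefs and leaves absolute URLs unchanged
        links.append((a.get_text(strip=True), urljoin(base_url, a['href'])))
    return links


# Hypothetical sample markup for an offline check.
sample = '''
<ul>
  <li><a href="/movie/1.html">Movie A</a></li>
  <li><a href="http://example.com/movie/2.html">Movie B</a></li>
  <li>No link here</li>
</ul>
'''
links = extract_links(sample, 'http://example.com/tag/')
for title, url in links:
    print(title, url)
```

Collecting the pairs in a list, instead of printing them directly, leaves the data ready for the later text-analysis step.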
