crawler_exa2

Posted by 北海悟空


Still being optimized...

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# Author: Tdcqma

'''
v17.0920.1401 Basic functionality implemented; vulnerability titles and links improved
v17.0922.1800 Added affected-version information for the [Cisco vulnerability alert] section
v17.0922.1913 Code cleanup; the v17.0922.1800 feature was moved into a function
'''

import urllib.request
import ssl
import re
import smtplib
import email.charset
import datetime

f = open("secInfo-lvmeng.txt", 'w', encoding='utf-8')

# today = str(datetime.date.today())  # use the current date as the search condition
today = "2017-09-20"  # temporary fixed date for testing

# Lists that hold all vulnerability titles per product. The first element is the
# section header ("漏洞告警" = vulnerability alert, "当前生产版本为" = current production version is).
tomcat_sec = ["Apache Tomcat 漏洞告警(当前生产版本为7.0.68)\n\n"]
Cisco_sec = ["Cisco 漏洞告警(当前生产版本为1.0.35)\n\n"]
WebLogic_sec = ["WebLogic 漏洞告警(当前生产版本为10.33)\n\n"]
Microsoft_sec = ["Microsoft 漏洞告警(当前生产版本为windows2012)\n\n"]
Noinfo_sec = "本日无爬虫安全告警.\n\n"  # "No crawler security alerts today."

tomcat_msg = ''' '''
WebLogic_msg = ''' '''
Cisco_msg = ''' '''
Microsoft_msg = ''' '''

count = 0  # total number of vulnerability alerts
str_domain = "http://www.nsfocus.net"
msg_fl = ""
newline = ""

def get_infected_vision(info_sec, info_msg, sub_url):
    # Relies on the module-level names `today` and `title` set by the main loop.
    global count

    line = " ♠ " + today + " " + title + "\n >> " + sub_url + '\n'
    info_msg += line  # accumulate the line in the msg string (only the local copy changes)
    info_sec.append(line)
    count += 1

    # Open the vulnerability detail page and fetch the affected versions
    vul_request = urllib.request.Request(sub_url)
    vul_response = urllib.request.urlopen(vul_request)
    vul_data = vul_response.read().decode('utf-8')

    # Match the affected versions with a regular expression
    affected_version = re.findall("<blockquote>.*</blockquote>", vul_data, re.S)
    # "受影响的版本" = affected versions; the slice strips the <blockquote> tags
    affected_version = (" 受影响的版本:" + affected_version[0][12:-13], '\n')

    for newline in affected_version:
        newline = newline.replace('&lt;', '<')  # turn the HTML entity back into "<"
        info_sec.append(newline + '\n')

# Number of listing pages to scan (pages 1-5 here); one day's vulnerabilities may span several pages
for i in range(5):
    url = "http://www.nsfocus.net/index.php?act=sec_bug&type_id=&os=&keyword=&page=%s" % (i + 1)
    request = urllib.request.Request(url)

    # When accessing a site whose URL starts with https, globally disable SSL certificate verification
    ssl._create_default_https_context = ssl._create_unverified_context

    response = urllib.request.urlopen(request)
    data = response.read().decode('utf-8')

    if today in data:
        # Regular expression that matches the lines containing today's date
        str_re = "<.*" + today + ".*"
        res = re.findall(str_re, data)

        for line in res:
            title_craw = re.findall(r"/vulndb/\d+.*</a>", line)  # extract the title
            title = title_craw[0][15:-4]

            url_craw = re.findall(r"/vulndb/\d+", line)  # extract the link
            sub_url = str_domain + url_craw[0]

            if "Apache Tomcat" in title:
                get_infected_vision(tomcat_sec, tomcat_msg, sub_url)
            elif "WebLogic" in title:
                get_infected_vision(WebLogic_sec, WebLogic_msg, sub_url)
            elif "Cisco" in title:
                get_infected_vision(Cisco_sec, Cisco_msg, sub_url)  # fetch the affected versions
            elif "Microsoft" in title:
                get_infected_vision(Microsoft_sec, Microsoft_msg, sub_url)

msg_fl = [tomcat_sec, WebLogic_sec, Cisco_sec, Microsoft_sec]
secu_msg = ''' '''

for i in range(len(msg_fl)):
    if len(msg_fl[i]) > 1:  # skip sections that contain only their header
        for j in range(len(msg_fl[i])):
            secu_msg += msg_fl[i][j]

msg_fl = secu_msg

if count == 0:
    msg_fl += Noinfo_sec
msg_fl += ("漏洞告警总数:" + str(count))  # "Total vulnerability alerts: <count>"

f.write(msg_fl)
f.close()

# print(msg_fl)

# Send the email
chst = email.charset.Charset(input_charset='utf-8')
header = ("From: %s\nTo: %s\nSubject: %s\n\n" %
          ("from_mail@163.com",
           "to_mail@163.com",
           chst.header_encode("[爬虫安全通告-绿盟]")))  # subject: "[Crawler Security Bulletin - NSFOCUS]"

# Use the 163 SMTP server to send the email, with the alert text collected above as the body.
email_con = header.encode('utf-8') + msg_fl.encode('utf-8')
smtp = smtplib.SMTP("smtp.163.com")
smtp.login("from_mail@163.com", "from_mail_password")
smtp.sendmail('from_mail@163.com', 'to_mail@163.com', email_con)
print('mail send success!')
smtp.quit()
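The script above assembles the mail headers by hand and never declares a charset for the UTF-8 body. As a possible alternative, here is a minimal sketch that builds the same message with the standard library's `email.message.EmailMessage`, which encodes the non-ASCII subject and sets the body charset itself. The function names `build_alert_message` and `send_alert` are hypothetical, and the SMTP host, port, and use of SSL are assumptions to check against your mail provider's settings.

```python
import smtplib
from email.message import EmailMessage


def build_alert_message(sender, recipient, subject, body):
    """Build a UTF-8 text message (hypothetical helper, not part of the original script)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject   # non-ASCII subjects are encoded when the message is serialized
    msg.set_content(body)      # a str body is stored as text/plain with charset utf-8
    return msg


def send_alert(msg, host, port, user, password):
    """Send the message over an SSL connection; host and port are assumptions."""
    with smtplib.SMTP_SSL(host, port) as smtp:
        smtp.login(user, password)
        smtp.send_message(msg)


# Example call (not executed here; the credentials are the placeholders from the script):
# msg = build_alert_message("from_mail@163.com", "to_mail@163.com",
#                           "[爬虫安全通告-绿盟]", msg_fl)
# send_alert(msg, "smtp.163.com", 465, "from_mail@163.com", "from_mail_password")
```

Keeping message construction separate from sending makes it possible to inspect the headers and body without contacting a mail server.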

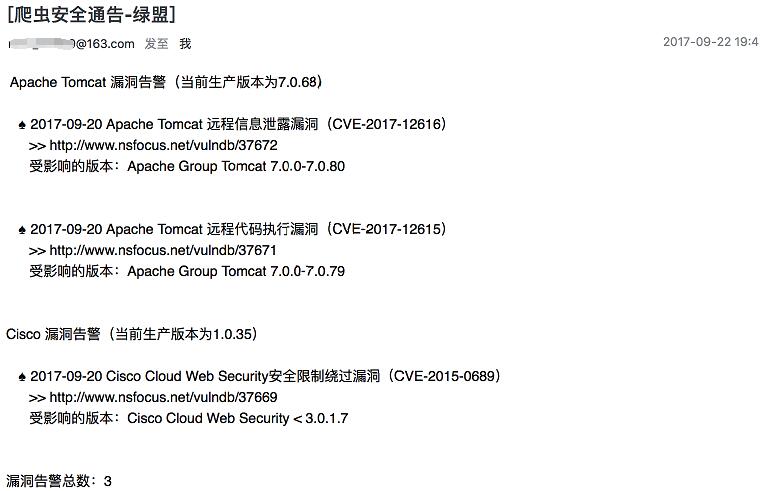

Screenshot of the crawler alert email (above).
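The affected-version step in `get_infected_vision` uses a greedy `<blockquote>.*</blockquote>` pattern and fixed slice offsets, so a detail page with more than one blockquote would return everything between the first opening tag and the last closing tag. The sketch below shows one way that step might be made more defensive: a non-greedy capture group, `html.unescape` for entities such as `&lt;`, and an empty list when nothing matches. The helper name `extract_affected_versions` and the assumption that versions are separated by `<br>` tags or newlines are illustrative, not taken from the real page markup.

```python
import html
import re

# Non-greedy capture: stop at the first closing tag
BLOCKQUOTE_RE = re.compile(r"<blockquote>(.*?)</blockquote>", re.S)


def extract_affected_versions(page_html):
    """Return the lines of the first <blockquote> as a list (hypothetical helper)."""
    match = BLOCKQUOTE_RE.search(page_html)
    if match is None:
        return []  # no blockquote on the page
    # Assumption: versions are separated by <br> tags and/or newlines
    text = re.sub(r"<br\s*/?>", "\n", match.group(1), flags=re.I)
    text = html.unescape(text)  # e.g. "&lt;" becomes "<"
    return [line.strip() for line in text.splitlines() if line.strip()]


sample = ("<html><blockquote>Cisco IOS 15.1<br />Cisco IOS &lt;= 15.2\n"
          "</blockquote><p>x</p><blockquote>other</blockquote></html>")
print(extract_affected_versions(sample))
```

Returning a list keeps each version on its own entry, so the caller can decide how to format the lines before appending them to the report.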
