Toutiao (今日头条) crawler

Posted Ryana


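Toutiao loads its content dynamically with JavaScript. I tried two ways of crawling it: extracting directly from the page, and extracting through the feed API.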

Version 1: extracting directly from the page

    # coding=utf-8
    # Toutiao: extract headlines directly from the page
    from lxml import etree
    import requests


    def get_url():
        url = 'https://www.toutiao.com/ch/news_hot/'
        global count
        try:
            headers = {
                'Host': 'www.toutiao.com',
                'User-Agent': 'Mozilla/4.0 (compatible; MSIE 9.0; Windows NT 6.1; 125LA; .NET CLR 2.0.50727; .NET CLR 3.0.04506.648; .NET CLR 3.5.21022)',
                'Connection': 'Keep-Alive',
                'Content-Type': 'text/plain; Charset=UTF-8',
                'Accept': '*/*',
                'Accept-Language': 'zh-cn',
                'cookie': '__tasessionId=u690hhtp21501983729114;cp=59861769FA4FFE1'}
            response = requests.get(url, headers=headers)
            print(response.status_code)
            html = response.content
            # print(html)
            tree = etree.HTML(html)
            title = tree.xpath('//a[@class="link title"]/text()')
            source = tree.xpath('//a[@class="lbtn source"]/text()')
            comment = tree.xpath('//a[@class="lbtn comment"]/text()')
            stime = tree.xpath('//span[@class="lbtn"]/text()')
            print(len(title))   # 0
            print(type(title))  # <class 'list'>
            for x, y, z, q in zip(title, source, comment, stime):
                count += 1
                # text() already yields strings, so the values are used directly
                data = {
                    'title': x,
                    'source': y,
                    'comment': z,
                    'stime': q}
                print(count, '|', data)
        except requests.RequestException as e:
            # requests raises its own exception hierarchy for network errors
            print(e)


    if __name__ == '__main__':
        count = 0
        get_url()

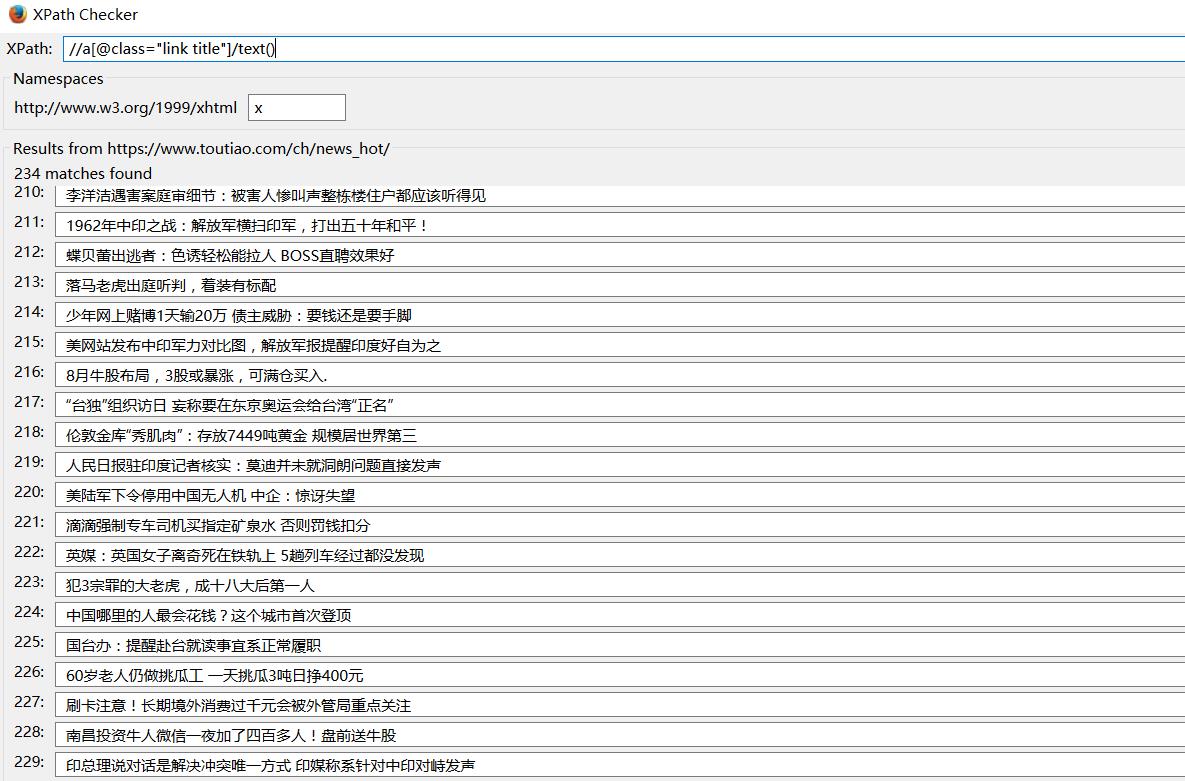

Problem: title = tree.xpath('//a[@class="link title"]/text()') extracts nothing, although the same expression matches when tried in the XPath Checker browser plugin.
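
A likely explanation, given that the site fills in its content with JavaScript, is that the plugin queries the DOM after rendering while requests only receives the initial HTML, which does not yet contain the headline links. The sketch below illustrates that difference offline with the standard library. Both HTML strings are hypothetical stand-ins written for this example, not real Toutiao responses, and the names AnchorClassCounter and count_anchors are made up here.

```python
from html.parser import HTMLParser


class AnchorClassCounter(HTMLParser):
    """Count <a> tags whose class attribute equals a given value."""

    def __init__(self, wanted_class):
        super().__init__()
        self.wanted_class = wanted_class
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag
        if tag == 'a' and dict(attrs).get('class') == self.wanted_class:
            self.count += 1


def count_anchors(html_text, wanted_class):
    parser = AnchorClassCounter(wanted_class)
    parser.feed(html_text)
    return parser.count


# Hypothetical markup: the empty shell a server might send, and the same
# page after a script has inserted a headline link.
served = '<html><body><div id="app"></div><script src="app.js"></script></body></html>'
rendered = '<html><body><div id="app"><a class="link title" href="/x">Headline</a></div></body></html>'

print(count_anchors(served, 'link title'))
print(count_anchors(rendered, 'link title'))
```

Running the same count against response.text from the script above would show whether the class is present in what requests actually downloaded.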

Version 2: extracting through the API

1. Open the browser developer tools (F12, Network tab) and look at the response of the request ?category=news_society&utm_source=toutiao&widen=1&max_behot_time=0&max_behot_time_tmp=0&tadrequire=true&as=A1B5093B4F12B65&cp=59BF52DB0655DE1

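2. Parameters that matter:

&category=news_society : the feed category; required

&max_behot_time=0 &max_behot_time_tmp=0 : the time the page was opened (in seconds, GMT) and the corresponding timestamp

&as=A1B5093B4F12B65&cp=59BF52DB0655DE1 : as and cp are used to derive and verify how long the visitor has stayed on the page. cp carries the browser time in an obfuscated form, and as uses MD5 to verify that time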
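
As a minimal sketch of how these parameters fit together, the query string from step 1 can be assembled with urllib.parse.urlencode instead of being pasted as one long literal. The function name build_feed_url is made up for this example; the endpoint path is the one this post's script requests, and the remaining parameter values (utm_source, widen, tadrequire) are copied unchanged from the captured request.

```python
from urllib.parse import urlencode

# Endpoint as used by the script in this post.
FEED_ENDPOINT = 'http://www.toutiao.com/api/pc/feed/'


def build_feed_url(category, as_value, cp_value, max_behot_time=0):
    """Assemble the feed URL from the parameters listed above."""
    params = [
        ('category', category),
        ('utm_source', 'toutiao'),
        ('widen', 1),
        ('max_behot_time', max_behot_time),
        ('max_behot_time_tmp', max_behot_time),
        ('tadrequire', 'true'),
        ('as', as_value),
        ('cp', cp_value),
    ]
    # A list of pairs keeps the parameters in the captured order.
    return FEED_ENDPOINT + '?' + urlencode(params)


url = build_feed_url('news_society', 'A1B5093B4F12B65', '59BF52DB0655DE1')
print(url)
```

Keeping category, as and cp as arguments makes it easy to swap in another feed category or a freshly computed as/cp pair.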

    import requests
    import json

    url = 'http://www.toutiao.com/api/pc/feed/?category=news_society&utm_source=toutiao&widen=1&max_behot_time=0&max_behot_time_tmp=0&tadrequire=true&as=A1B5093B4F12B65&cp=59BF52DB0655DE1'
    resp = requests.get(url)
    print(resp.status_code)
    Jdata = json.loads(resp.text)
    # print(Jdata)
    news = Jdata['data']
    for n in news:
        title = n['title']
        source = n['source']
        groupID = n['group_id']
        print(title, '|', source, '|', groupID)

Note: this only fetched 7 items.
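
The loop in the script reads title, source and group_id by plain indexing, so one entry without any of those keys would stop the run with a KeyError. Whether the live feed ever omits them is not stated here; the sketch below simply assumes it might and reads the fields with dict.get. SAMPLE is a hypothetical payload that models only the keys the script uses, and extract_items is a name invented for this example.

```python
import json

# Hypothetical payload: not a real API response.
SAMPLE = '''
{"data": [
  {"title": "First headline", "source": "Example News", "group_id": "1001"},
  {"title": "Entry without a source", "group_id": "1002"}
]}
'''


def extract_items(raw_text):
    """Return (title, source, group_id) tuples, tolerating missing keys."""
    payload = json.loads(raw_text)
    rows = []
    for item in payload.get('data', []):
        # .get returns None instead of raising when a key is absent
        rows.append((item.get('title'), item.get('source'), item.get('group_id')))
    return rows


for title, source, group_id in extract_items(SAMPLE):
    print(title, '|', source, '|', group_id)
```

With a real response, resp.text from the script can be passed to extract_items in place of SAMPLE.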

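Regarding the as and cp parameters, an expert has worked them out as follows:

1. Find the JS code: press Ctrl+F and search for the keywords as and cp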

    function(t) {
        var e = {};
        e.getHoney = function() {
            var t = Math.floor((new Date).getTime() / 1e3),
                e = t.toString(16).toUpperCase(),
                i = md5(t).toString().toUpperCase();
            if (8 != e.length) return {
                as: "479BB4B7254C150",
                cp: "7E0AC8874BB0985"
            };
            for (var n = i.slice(0, 5), a = i.slice(-5), s = "", o = 0; 5 > o; o++) s += n[o] + e[o];
            for (var r = "", c = 0; 5 > c; c++) r += e[c + 3] + a[c];
            return {
                as: "A1" + s + e.slice(-3),
                cp: e.slice(0, 3) + r + "E1"
            }
        },
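
Read in reverse, getHoney shows that the hexadecimal timestamp e is fully recoverable from a pair: as holds e[0..4] at every second position after the "A1" prefix plus e[5..7] at the end, and cp holds e[0..2] at the start plus e[3..7] interleaved before the "E1" suffix. The sketch below applies that layout to the pair captured in step 1. The function name decode_timestamp is made up for this example, and the hard-coded fallback pair is rejected because it does not follow the layout.

```python
def decode_timestamp(as_value, cp_value):
    """Recover the Unix timestamp (seconds) embedded in an as/cp pair."""
    if len(as_value) != 15 or len(cp_value) != 15:
        raise ValueError('expected 15-character as and cp values')
    if not as_value.startswith('A1') or not cp_value.endswith('E1'):
        raise ValueError('pair does not follow the A1...E1 layout')
    # as = "A1" + s + e[-3:], where s alternates md5 chars with e[0..4]
    s = as_value[2:12]
    e_from_as = s[1::2] + as_value[12:]
    # cp = e[:3] + r + "E1", where r alternates e[3..7] with md5 chars
    r = cp_value[3:13]
    e_from_cp = cp_value[:3] + r[0::2]
    if e_from_as != e_from_cp:
        raise ValueError('as and cp disagree on the embedded time')
    return int(e_from_as, 16)


print(decode_timestamp('A1B5093B4F12B65', '59BF52DB0655DE1'))  # 1505700709
```

Both halves of the captured pair decode to hex 59BF2B65, which is why the two values can be cross-checked against each other.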

2. Emulate the as and cp parameters:

    import time
    import hashlib


    def get_as_cp():
        zz = {}
        now = round(time.time())
        print(now)  # current machine time, in seconds
        e = hex(int(now)).upper()[2:]  # hex() converts an integer to its hexadecimal string form
        print(e)
        # hashlib.md5(...).hexdigest() builds the hash object and returns the hex digest
        i = hashlib.md5(str(int(now)).encode('utf-8')).hexdigest().upper()
        if len(e) != 8:
            zz = {'as': "479BB4B7254C150", 'cp': "7E0AC8874BB0985"}
            return zz
        n = i[:5]
        a = i[-5:]
        r = ""
        s = ""
        for k in range(5):
            s = s + n[k] + e[k]
        for j in range(5):
            r = r + e[j + 3] + a[j]
        zz = {
            'as': "A1" + s + e[-3:],
            'cp': e[0:3] + r + "E1"
        }
        print(zz)
        return zz


    if __name__ == "__main__":
        get_as_cp()
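
Because get_as_cp reads the clock itself, its output changes on every call and is awkward to check. A variant that takes the timestamp as an argument, sketched below under the same algorithm, makes the result repeatable. The name make_as_cp is made up for this example, and the sketch assumes, as the Python port above does, that the JS md5(t) call hashes the decimal string of the timestamp.

```python
import hashlib


def make_as_cp(timestamp):
    """Derive the as/cp pair for a Unix timestamp given in whole seconds."""
    e = format(int(timestamp), 'X')  # uppercase hex without the 0x prefix
    if len(e) != 8:
        # Same hard-coded fallback pair as the JS source
        return {'as': '479BB4B7254C150', 'cp': '7E0AC8874BB0985'}
    digest = hashlib.md5(str(int(timestamp)).encode('ascii')).hexdigest().upper()
    head, tail = digest[:5], digest[-5:]
    # s alternates md5 head chars with e[0..4]; r alternates e[3..7] with md5 tail chars
    s = ''.join(head[k] + e[k] for k in range(5))
    r = ''.join(e[k + 3] + tail[k] for k in range(5))
    return {'as': 'A1' + s + e[-3:], 'cp': e[:3] + r + 'E1'}


pair = make_as_cp(1505700709)
print(pair)
```

For live requests the function can be called as make_as_cp(time.time()), which reproduces the behaviour of get_as_cp.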
