爬虫性能相关

Posted 敏而好学,不耻下问。

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了爬虫性能相关相关的知识,希望对你有一定的参考价值。

性能相关

在编写爬虫时,性能的消耗主要在IO请求中,当单进程单线程模式下请求URL时必然会引起等待,从而使得请求整体变慢。

import requests def fetch_async(url): response = requests.get(url) return response url_list = [\'http://www.github.com\', \'http://www.bing.com\'] for url in url_list: fetch_async(url)

from concurrent.futures import ThreadPoolExecutor import requests def fetch_async(url): response = requests.get(url) return response url_list = [\'http://www.github.com\', \'http://www.bing.com\'] pool = ThreadPoolExecutor(5) for url in url_list: pool.submit(fetch_async, url) pool.shutdown(wait=True)

from concurrent.futures import ThreadPoolExecutor import requests def fetch_async(url): response = requests.get(url) return response def callback(future): print(future.result()) url_list = [\'http://www.github.com\', \'http://www.bing.com\'] pool = ThreadPoolExecutor(5) for url in url_list: v = pool.submit(fetch_async, url) v.add_done_callback(callback) pool.shutdown(wait=True)

from concurrent.futures import ProcessPoolExecutor import requests def fetch_async(url): response = requests.get(url) return response url_list = [\'http://www.github.com\', \'http://www.bing.com\'] pool = ProcessPoolExecutor(5) for url in url_list: pool.submit(fetch_async, url) pool.shutdown(wait=True)

from concurrent.futures import ProcessPoolExecutor import requests def fetch_async(url): response = requests.get(url) return response def callback(future): print(future.result()) url_list = [\'http://www.github.com\', \'http://www.bing.com\'] pool = ProcessPoolExecutor(5) for url in url_list: v = pool.submit(fetch_async, url) v.add_done_callback(callback) pool.shutdown(wait=True)

通过上述代码均可以完成对请求性能的提高,对于多线程和多进行的缺点是在IO阻塞时会造成了线程和进程的浪费,所以异步IO回事首选:

import asyncio @asyncio.coroutine def func1(): print(\'before...func1......\') yield from asyncio.sleep(5) print(\'end...func1......\') tasks = [func1(), func1()] loop = asyncio.get_event_loop() loop.run_until_complete(asyncio.gather(*tasks)) loop.close()

import asyncio @asyncio.coroutine def fetch_async(host, url=\'/\'): print(host, url) reader, writer = yield from asyncio.open_connection(host, 80) request_header_content = """GET %s HTTP/1.0\\r\\nHost: %s\\r\\n\\r\\n""" % (url, host,) request_header_content = bytes(request_header_content, encoding=\'utf-8\') writer.write(request_header_content) yield from writer.drain() text = yield from reader.read() print(host, url, text) writer.close() tasks = [ fetch_async(\'www.cnblogs.com\', \'/wupeiqi/\'), fetch_async(\'dig.chouti.com\', \'/pic/show?nid=4073644713430508&lid=10273091\') ] loop = asyncio.get_event_loop() results = loop.run_until_complete(asyncio.gather(*tasks)) loop.close()

import aiohttp import asyncio @asyncio.coroutine def fetch_async(url): print(url) response = yield from aiohttp.request(\'GET\', url) # data = yield from response.read() # print(url, data) print(url, response) response.close() tasks = [fetch_async(\'http://www.google.com/\'), fetch_async(\'http://www.chouti.com/\')] event_loop = asyncio.get_event_loop() results = event_loop.run_until_complete(asyncio.gather(*tasks)) event_loop.close()

import asyncio import requests @asyncio.coroutine def fetch_async(func, *args): loop = asyncio.get_event_loop() future = loop.run_in_executor(None, func, *args) response = yield from future print(response.url, response.content) tasks = [ fetch_async(requests.get, \'http://www.cnblogs.com/wupeiqi/\'), fetch_async(requests.get, \'http://dig.chouti.com/pic/show?nid=4073644713430508&lid=10273091\') ] loop = asyncio.get_event_loop() results = loop.run_until_complete(asyncio.gather(*tasks)) loop.close()

import gevent import requests from gevent import monkey monkey.patch_all() def fetch_async(method, url, req_kwargs): print(method, url, req_kwargs) response = requests.request(method=method, url=url, **req_kwargs) print(response.url, response.content) # ##### 发送请求 ##### gevent.joinall([ gevent.spawn(fetch_async, method=\'get\', url=\'https://www.python.org/\', req_kwargs={}), gevent.spawn(fetch_async, method=\'get\', url=\'https://www.yahoo.com/\', req_kwargs={}), gevent.spawn(fetch_async, method=\'get\', url=\'https://github.com/\', req_kwargs={}), ]) # ##### 发送请求(协程池控制最大协程数量) ##### # from gevent.pool import Pool # pool = Pool(None) # gevent.joinall([ # pool.spawn(fetch_async, method=\'get\', url=\'https://www.python.org/\', req_kwargs={}), # pool.spawn(fetch_async, method=\'get\', url=\'https://www.yahoo.com/\', req_kwargs={}), # pool.spawn(fetch_async, method=\'get\', url=\'https://www.github.com/\', req_kwargs={}), # ])

import grequests request_list = [ grequests.get(\'http://httpbin.org/delay/1\', timeout=0.001), grequests.get(\'http://fakedomain/\'), grequests.get(\'http://httpbin.org/status/500\') ] # ##### 执行并获取响应列表 ##### # response_list = grequests.map(request_list) # print(response_list) # ##### 执行并获取响应列表(处理异常) ##### # def exception_handler(request, exception): # print(request,exception) # print("Request failed") # response_list = grequests.map(request_list, exception_handler=exception_handler) # print(response_list)

from twisted.web.client import getPage, defer from twisted.internet import reactor def all_done(arg): reactor.stop() def callback(contents): print(contents) deferred_list = [] url_list = [\'http://www.bing.com\', \'http://www.baidu.com\', ] for url in url_list: deferred = getPage(bytes(url, encoding=\'utf8\')) deferred.addCallback(callback) deferred_list.append(deferred) dlist = defer.DeferredList(deferred_list) dlist.addBoth(all_done) reactor.run()

from tornado.httpclient import AsyncHTTPClient from tornado.httpclient import HTTPRequest from tornado import ioloop def handle_response(response): """ 处理返回值内容(需要维护计数器,来停止IO循环),调用 ioloop.IOLoop.current().stop() :param response: :return: """ if response.error: print("Error:", response.error) else: print(response.body) def func(): url_list = [ \'http://www.baidu.com\', \'http://www.bing.com\', ] for url in url_list: print(url) http_client = AsyncHTTPClient() http_client.fetch(HTTPRequest(url), handle_response) ioloop.IOLoop.current().add_callback(func) ioloop.IOLoop.current().start()

from twisted.internet import reactor from twisted.web.client import getPage import urllib.parse def one_done(arg): print(arg) reactor.stop() post_data = urllib.parse.urlencode({\'check_data\': \'adf\'}) post_data = bytes(post_data, encoding=\'utf8\') headers = {b\'Content-Type\': b\'application/x-www-form-urlencoded\'} response = getPage(bytes(\'http://dig.chouti.com/login\', encoding=\'utf8\'), method=bytes(\'POST\', encoding=\'utf8\'), postdata=post_data, cookies={}, headers=headers) response.addBoth(one_done) reactor.run()

以上均是Python内置以及第三方模块提供异步IO请求模块,使用简便大大提高效率,而对于异步IO请求的本质则是【非阻塞Socket】+【IO多路复用】:

import select import socket import time class AsyncTimeoutException(TimeoutError): """ 请求超时异常类 """ def __init__(self, msg): self.msg = msg super(AsyncTimeoutException, self).__init__(msg) class HttpContext(object): """封装请求和相应的基本数据""" def __init__(self, sock, host, port, method, url, data, callback, timeout=5): """ sock: 请求的客户端socket对象 host: 请求的主机名 port: 请求的端口 port: 请求的端口 method: 请求方式 url: 请求的URL data: 请求时请求体中的数据 callback: 请求完成后的回调函数 timeout: 请求的超时时间 """ self.sock = sock self.callback = callback self.host = host self.port = port self.method = method self.url = url self.data = data self.timeout = timeout self.__start_time = time.time() self.__buffer = [] def is_timeout(self): """当前请求是否已经超时""" current_time = time.time() if (self.__start_time + self.timeout) < current_time: return True def fileno(self): """请求sockect对象的文件描述符,用于select监听""" return self.sock.fileno() def write(self, data): """在buffer中写入响应内容""" self.__buffer.append(data) def finish(self, exc=None): """在buffer中写入响应内容完成,执行请求的回调函数""" if not exc: response = b\'\'.join(self.__buffer) self.callback(self, response, exc) else: self.callback(self, None, exc) def send_request_data(self): content = """%s %s HTTP/1.0\\r\\nHost: %s\\r\\n\\r\\n%s""" % ( self.method.upper(), self.url, self.host, self.data,) return content.encode(encoding=\'utf8\') class AsyncRequest(object): def __init__(self): self.fds = [] self.connections = [] def add_request(self, host, port, method, url, data, callback, timeout): """创建一个要请求""" client = socket.socket() client.setblocking(False) try: client.connect((host, port)) except BlockingIOError as e: pass # print(\'已经向远程发送连接的请求\') req = HttpContext(client, host, port, method, url, data, callback, timeout) self.connections.append(req) self.fds.append(req) def check_conn_timeout(self): """检查所有的请求,是否有已经连接超时,如果有则终止""" timeout_list = [] for context in self.connections: if context.is_timeout(): timeout_list.append(context) for context in timeout_list: context.finish(AsyncTimeoutException(\'请求超时\')) self.fds.remove(context) self.connections.remove(context) def running(self): """事件循环,用于检测请求的socket是否已经就绪,从而执行相关操作""" while True: r, w, e = select.select(self.fds, self.connections, self.fds, 0.05) if not self.fds: return for context in r: sock = context.sock while True: try: data = sock.recv(8096) if not data: self.fds.remove(context) context.finish() break else: context.write(data) except BlockingIOError as e: break except TimeoutError as e: self.fds.remove(context) self.connections.remove(context) context.finish(e) break for context in w: # 已经连接成功远程服务器,开始向远程发送请求数据 if context in self.fds: data = context.send_request_data() context.sock.sendall(data) self.connections.remove(context) self.check_conn_timeout() if __name__ == \'__main__\': def callback_func(context, response, ex): """ :param context: HttpContext对象,内部封装了请求相关信息 :param response: 请求响应内容 :param ex: 是否出现异常(如果有异常则值为异常对象;否则值为None) :return: """ print(context, response, ex) obj = AsyncRequest() url_list = [ {\'host\': \'www.google.com\', \'port\': 80, \'method\': \'GET\', \'url\': \'/\', \'data\': \'\', \'timeout\': 5, \'callback\': callback_func}, {\'host\': \'www.baidu.com\', \'port\': 80, \'method\': \'GET\', \'url\': \'/\', \'data\': \'\', \'timeout\': 5, \'callback\': callback_func}, {\'host\': \'www.bing.com\', \'port\': 80, \'method\': \'GET\', \'url\': \'/\', \'data\': \'\', \'timeout\': 5, \'callback\': callback_func}, ] for item in url_list: print(item) obj.add_request(**item) obj.running()

Scrapy

Scrapy是一个为了爬取网站数据,提取结构性数据而编写的应用框架。 其可以应用在数据挖掘,信息处理或存储历史数据等一系列的程序中。

其最初是为了页面抓取 (更确切来说, 网络抓取 )所设计的, 也可以应用在获取API所返回的数据(例如 Amazon Associates Web Services ) 或者通用的网络爬虫。Scrapy用途广泛,可以用于数据挖掘、监测和自动化测试。

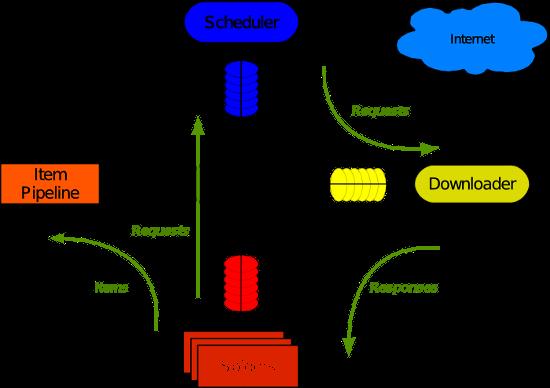

Scrapy 使用了 Twisted异步网络库来处理网络通讯。整体架构大致如下

Scrapy主要包括了以下组件:

- 引擎(Scrapy)

用来处理整个系统的数据流处理, 触发事务(框架核心) - 调度器(Scheduler)

用来接受引擎发过来的请求, 压入队列中, 并在引擎再次请求的时候返回. 可以想像成一个URL(抓取网页的网址或者说是链接)的优先队列, 由它来决定下一个要抓取的网址是什么, 同时去除重复的网址 - 下载器(Downloader)

用于下载网页内容, 并将网页内容返回给蜘蛛(Scrapy下载器是建立在twisted这个高效的异步模型上的) - 爬虫(Spiders)

爬虫是主要干活的, 用于从特定的网页中提取自己需要的信息, 即所谓的实体(Item)。用户也可以从中提取出链接,让Scrapy继续抓取下一个页面 - 项目管道(Pipeline)

负责处理爬虫从网页中抽取的实体,主要的功能是持久化实体、验证实体的有效性、清除不需要的信息。当页面被爬虫解析后,将被发送到项目管道,并经过几个特定的次序处理数据。 - 下载器中间件(Downloader Middlewares)

位于Scrapy引擎和下载器之间的框架,主要是处理Scrapy引擎与下载器之间的请求及响应。 - 爬虫中间件(Spider Middlewares)

介于Scrapy引擎和爬虫之间的框架,主要工作是处理蜘蛛的响应输入和请求输出。 - 调度中间件(Scheduler Middewares)

介于Scrapy引擎和调度之间的中间件,从Scrapy引擎发送到调度的请求和响应。

Scrapy运行流程大概如下:

- 引擎从调度器中取出一个链接(URL)用于接下来的抓取

- 引擎把URL封装成一个请求(Request)传给下载器

- 下载器把资源下载下来,并封装成应答包(Response)

- 爬虫解析Response

- 解析出实体(Item),则交给实体管道进行进一步的处理

- 解析出的是链接(URL),则把URL交给调度器等待抓取

一、安装

Linux

pip3 install scrapy

Windows

a. pip3 install wheel

b. 下载twisted http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

c. 进入下载目录,执行 pip3 install Twisted‑17.1.0‑cp35‑cp35m‑win_amd64.whl

d. pip3 install scrapy

e. 下载并安装pywin32:https://sourceforge.net/projects/pywin32/files/

二、基本使用

1. 基本命令

1. scrapy startproject 项目名称

- 在当前目录中创建中创建一个项目文件(类似于Django)

2. scrapy genspider [-t template] <name> <domain>

- 创建爬虫应用

如:

scrapy gensipider -t basic oldboy oldboy.com

scrapy gensipider -t xmlfeed autohome autohome.com.cn

PS:

查看所有命令:scrapy gensipider -l

查看模板命令:scrapy gensipider -d 模板名称

3. scrapy list

- 展示爬虫应用列表

4. scrapy crawl 爬虫应用名称

- 运行单独爬虫应用

2.项目结构以及爬虫应用简介

project_name/

scrapy.cfg

project_name/

__init__.py

items.py

pipelines.py

settings.py

spiders/

__init__.py

爬虫1.py

爬虫2.py

爬虫3.py

文件说明:

- scrapy.cfg 项目的主配置信息。(真正爬虫相关的配置信息在settings.py文件中)

- items.py 设置数据存储模板,用于结构化数据,如:Django的Model

- pipelines 数据处理行为,如:一般结构化的数据持久化

- settings.py 配置文件,如:递归的层数、并发数,延迟下载等

- spiders 爬虫目录,如:创建文件,编写爬虫规则

注意:一般创建爬虫文件时,以网站域名命名

import scrapy class XiaoHuarSpider(scrapy.spiders.Spider): name = "xiaohuar" # 爬虫名称 ***** allowed_domains = ["xiaohuar.com"] # 允许的域名 start_urls = [ "http://www.xiaohuar.com/hua/", # 其实URL ] def parse(self, response): # 访问起始URL并获取结果后的回调函数

import sys,os sys.stdout=io.TextIOWrapper(sys.stdout.buffer,encoding=\'gb18030\')

3. 小试牛刀

import scrapy from scrapy.selector import htmlXPathSelector from scrapy.http.request import Request class DigSpider(scrapy.Spider): # 爬虫应用的名称,通过此名称启动爬虫命令 name = "dig" # 允许的域名 allowed_domains = ["chouti.com"] # 起始URL start_urls = [ \'http://dig.chouti.com/\', ] has_request_set = {} def parse(self, response): print(response.url) hxs = HtmlXPathSelector(response) page_list = hxs.select(\'//div[@id="dig_lcpage"]//a[re:test(@href, "/all/hot/recent/\\d+")]/@href\').extract() for page in page_list: page_url = \'http://dig.chouti.com%s\' % page key = self.md5(page_url) if key in self.has_request_set: pass else: self.has_request_set[key] = page_url obj = Request(url=page_url, method=\'GET\', callback=self.parse) yield obj @staticmethod def md5(val): import hashlib ha = hashlib.md5() ha.update(bytes(val, encoding=\'utf-8\')) key = ha.hexdigest() return key

执行此爬虫文件,则在终端进入项目目录执行如下命令:

scrapy crawl dig --nolog

对于上述代码重要之处在于:

- Request是一个封装用户请求的类,在回调函数中yield该对象表示继续访问

- HtmlXpathSelector用于结构化HTML代码并提供选择器功能

4. 选择器

#!/usr/bin/env python

# -*- coding:utf-8 -*-

from scrapy.selector import Selector, HtmlXPathSelector

from scrapy.http import HtmlResponse

html = """<!DOCTYPE html>

<html>

<head lang="en">

<meta cha以上是关于爬虫性能相关的主要内容,如果未能解决你的问题,请参考以下文章