CUDA实例练习:零拷贝内存

Posted Jason&Hymer

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了CUDA实例练习:零拷贝内存相关的知识,希望对你有一定的参考价值。

可以在CUDA C核函数中直接访问这种类型的主机内存。由于这种内存不需要复制到GPU,因此也称为零拷贝内存。

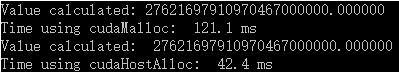

1 #include "book.h" 2 #include <stdio.h> 3 #include <cuda_runtime.h> 4 #include <device_launch_parameters.h> 5 #define imin(a,b) (a<b?a:b) 6 7 const int N = 33 * 1024 * 1024; 8 const int threadsPerBlock = 256; 9 const int blocksPerGrid = imin(32, (N + threadsPerBlock - 1) / threadsPerBlock); 10 11 __global__ void dot(int size, float *a, float *b, float *c) { 12 __shared__ float cache[threadsPerBlock]; 13 int tid = threadIdx.x + blockIdx.x * blockDim.x; 14 int cacheIndex = threadIdx.x; 15 16 float temp = 0; 17 while (tid < size) { 18 temp += a[tid] * b[tid]; 19 tid += blockDim.x * gridDim.x; 20 } 21 22 // set the cache values 23 cache[cacheIndex] = temp; 24 25 // synchronize threads in this block 26 __syncthreads(); 27 28 // for reductions, threadsPerBlock must be a power of 2 29 // because of the following code 30 int i = blockDim.x / 2; 31 while (i != 0) { 32 if (cacheIndex < i) 33 cache[cacheIndex] += cache[cacheIndex + i]; 34 __syncthreads(); 35 i /= 2; 36 } 37 38 if (cacheIndex == 0) 39 c[blockIdx.x] = cache[0]; 40 } 41 42 //点积运算的主机内存版本 43 float malloc_test(int size) { 44 cudaEvent_t start, stop; 45 float *a, *b,c, *partial_c; 46 float *dev_a, *dev_b, *dev_partial_c; 47 float elapsedTime; 48 49 HANDLE_ERROR(cudaEventCreate(&start)); 50 HANDLE_ERROR(cudaEventCreate(&stop)); 51 52 //在CPU上分配内存 53 a = (float *)malloc(size * sizeof(float)); 54 b = (float *)malloc(size * sizeof(float)); 55 partial_c = (float *)malloc(blocksPerGrid * sizeof(float)); 56 57 //在GPU上分配内存 58 HANDLE_ERROR(cudaMalloc((void **)&dev_a, size * sizeof(float))); 59 HANDLE_ERROR(cudaMalloc((void **)&dev_b, size * sizeof(float))); 60 HANDLE_ERROR(cudaMalloc((void **)&dev_partial_c, blocksPerGrid * sizeof(float))); 61 62 //用数据填充主机内存 63 for (int i = 0; i < size; i++){ 64 a[i] = i; 65 b[i] = i * 2; 66 } 67 68 HANDLE_ERROR(cudaEventRecord(start, 0)); 69 //将数组\'a\'和\'b\'复制到GPU 70 HANDLE_ERROR(cudaMemcpy(dev_a, a, size * sizeof(float), 71 cudaMemcpyHostToDevice)); 72 HANDLE_ERROR(cudaMemcpy(dev_b, b, size * sizeof(float), 73 cudaMemcpyHostToDevice)); 74 dot << <blocksPerGrid, threadsPerBlock >> >(size, dev_a, dev_b, dev_partial_c); 75 76 //将数组\'c\'从GPU复制到CPU 77 HANDLE_ERROR(cudaMemcpy(partial_c, dev_partial_c, 78 blocksPerGrid * sizeof(float), cudaMemcpyDeviceToHost)); 79 HANDLE_ERROR(cudaEventRecord(stop, 0)); 80 HANDLE_ERROR(cudaEventSynchronize(stop)); 81 HANDLE_ERROR(cudaEventElapsedTime(&elapsedTime, start, stop)); 82 83 //结束CPU上的计算 84 c = 0; 85 for (int i = 0; i < blocksPerGrid; i++){ 86 c += partial_c[i]; 87 } 88 HANDLE_ERROR(cudaFree(dev_a)); 89 HANDLE_ERROR(cudaFree(dev_b)); 90 HANDLE_ERROR(cudaFree(dev_partial_c)); 91 92 //释放CPU上的内存 93 free(a); 94 free(b); 95 free(partial_c); 96 97 //释放事件 98 HANDLE_ERROR(cudaEventDestroy(start)); 99 HANDLE_ERROR(cudaEventDestroy(stop)); 100 101 printf("Value calculated: %f\\n", c); 102 103 return elapsedTime; 104 } 105 106 //点积运算的零拷贝内存版本 107 float cuda_host_alloc_test(int size){ 108 cudaEvent_t start, stop; 109 float *a, *b, c, *partial_c; 110 float *dev_a, *dev_b, *dev_partial_c; 111 float elapsedTime; 112 113 HANDLE_ERROR(cudaEventCreate(&start)); 114 HANDLE_ERROR(cudaEventCreate(&stop)); 115 116 //在CPU上分配内存 117 HANDLE_ERROR(cudaHostAlloc((void **)&a, size * sizeof(float), 118 cudaHostAllocWriteCombined | cudaHostAllocMapped)); 119 HANDLE_ERROR(cudaHostAlloc((void **)&b, size * sizeof(float), 120 cudaHostAllocWriteCombined | cudaHostAllocMapped)); 121 HANDLE_ERROR(cudaHostAlloc((void **)&partial_c, 122 blocksPerGrid * sizeof(float), cudaHostAllocMapped)); 123 124 //用数据填充主机内存 125 for (int i = 0; i < size; i++){ 126 a[i] = i; 127 b[i] = i * 2; 128 } 129 // find out the GPU pointers 130 HANDLE_ERROR(cudaHostGetDevicePointer(&dev_a, a, 0)); 131 HANDLE_ERROR(cudaHostGetDevicePointer(&dev_b, b, 0)); 132 HANDLE_ERROR(cudaHostGetDevicePointer(&dev_partial_c, 133 partial_c, 0)); 134 135 // fill in the host memory with data 136 for (int i = 0; i<size; i++) { 137 a[i] = i; 138 b[i] = i * 2; 139 } 140 141 HANDLE_ERROR(cudaEventRecord(start, 0)); 142 143 dot << <blocksPerGrid, threadsPerBlock >> >(size, dev_a, dev_b, 144 dev_partial_c); 145 146 HANDLE_ERROR(cudaThreadSynchronize()); 147 HANDLE_ERROR(cudaEventRecord(stop, 0)); 148 HANDLE_ERROR(cudaEventSynchronize(stop)); 149 HANDLE_ERROR(cudaEventElapsedTime(&elapsedTime, 150 start, stop)); 151 152 // finish up on the CPU side 153 c = 0; 154 for (int i = 0; i<blocksPerGrid; i++) { 155 c += partial_c[i]; 156 } 157 158 HANDLE_ERROR(cudaFreeHost(a)); 159 HANDLE_ERROR(cudaFreeHost(b)); 160 HANDLE_ERROR(cudaFreeHost(partial_c)); 161 162 // free events 163 HANDLE_ERROR(cudaEventDestroy(start)); 164 HANDLE_ERROR(cudaEventDestroy(stop)); 165 166 printf("Value calculated: %f\\n", c); 167 168 return elapsedTime; 169 } 170 171 int main(void) { 172 cudaDeviceProp prop; 173 int whichDevice; 174 HANDLE_ERROR(cudaGetDevice(&whichDevice)); 175 HANDLE_ERROR(cudaGetDeviceProperties(&prop, whichDevice)); 176 if (prop.canMapHostMemory != 1) { 177 printf("Device can not map memory.\\n"); 178 return 0; 179 } 180 181 float elapsedTime; 182 183 HANDLE_ERROR(cudaSetDeviceFlags(cudaDeviceMapHost)); 184 185 // try it with malloc 186 elapsedTime = malloc_test(N); 187 printf("Time using cudaMalloc: %3.1f ms\\n", 188 elapsedTime); 189 190 // now try it with cudaHostAlloc 191 elapsedTime = cuda_host_alloc_test(N); 192 printf("Time using cudaHostAlloc: %3.1f ms\\n", 193 elapsedTime); 194 }

以上是关于CUDA实例练习:零拷贝内存的主要内容,如果未能解决你的问题,请参考以下文章