Theano Multi-Layer Perceptron (MLP)

Posted by zhchoutai



Theory

Machine Learning Techniques (机器学习技法) course: https://www.coursera.org/course/ntumltwo

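If the link above is unavailable, search online (e.g. on Baidu) for a torrent of the course that someone else has put together, or read these lecture notes instead: http://www.cnblogs.com/xbf9xbf/p/4712785.html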
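As a refresher on what the Theano code below computes, here is a NumPy-only sketch (not taken from the course or the original post) of the same model: one tanh hidden layer feeding a softmax output layer, trained by full-batch gradient descent on the mean negative log-likelihood plus an L2 weight penalty, with the same defaults as `test_mlp` (learning rate 0.11, L2 weight 0.0001, seed 218). It assumes that `LogisticRegression` in `lr_sgd.py` is a softmax regression with a mean negative log-likelihood and zero-initialized weights, as in the deeplearning.net tutorial the code is adapted from. The gradients are written out by hand here; in the Theano version `T.grad` derives them automatically. The XOR-style toy data is made up for illustration.

```python
import numpy as np


def softmax(z):
    # subtract the row-wise max for numerical stability
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(X, W1, b1, W2, b2):
    # hidden layer, like HiddenLayer(activation=T.tanh): H = tanh(X W1 + b1)
    H = np.tanh(X @ W1 + b1)
    # output layer, softmax regression on the hidden features: P[i, k] = p(y=k | x_i)
    P = softmax(H @ W2 + b2)
    return H, P


def loss_and_grads(X, y, W1, b1, W2, b2, l2_reg):
    n = X.shape[0]
    H, P = forward(X, W1, b1, W2, b2)
    # mean negative log-likelihood + L2 penalty on the weights (biases not penalized)
    nll = -np.mean(np.log(P[np.arange(n), y]))
    cost = nll + l2_reg * ((W1 ** 2).sum() + (W2 ** 2).sum())
    # backpropagation written out by hand
    dZ2 = P.copy()
    dZ2[np.arange(n), y] -= 1.0  # d(nll)/d(logits) = (P - onehot(y)) / n
    dZ2 /= n
    gW2 = H.T @ dZ2 + 2 * l2_reg * W2
    gb2 = dZ2.sum(axis=0)
    dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)  # tanh'(a) = 1 - tanh(a)**2
    gW1 = X.T @ dZ1 + 2 * l2_reg * W1
    gb1 = dZ1.sum(axis=0)
    return cost, (gW1, gb1, gW2, gb2)


def train(X, y, n_hidden=10, learning_rate=0.11, l2_reg=1e-4, n_epochs=2000, seed=218):
    rng = np.random.RandomState(seed)
    n_in, n_out = X.shape[1], int(y.max()) + 1
    bound = np.sqrt(6.0 / (n_in + n_hidden))
    params = [
        rng.uniform(-bound, bound, size=(n_in, n_hidden)),  # W1
        np.zeros(n_hidden),                                  # b1
        np.zeros((n_hidden, n_out)),  # W2: assumed zero-initialized, as in the tutorial
        np.zeros(n_out),                                     # b2
    ]
    costs = []
    for _ in range(n_epochs):
        cost, grads = loss_and_grads(X, y, *params, l2_reg)
        costs.append(cost)
        # full-batch gradient descent: param <- param - learning_rate * grad
        for p, g in zip(params, grads):
            p -= learning_rate * g
    return params, costs


# toy XOR-style data (made up for illustration): not linearly separable
rng = np.random.RandomState(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = (X[:, 0] * X[:, 1] > 0).astype(int)
params, costs = train(X, y)
acc = np.mean(forward(X, *params)[1].argmax(axis=1) == y)
print("cost %.3f -> %.3f, train accuracy %.2f" % (costs[0], costs[-1], acc))
```

The hand-written backward pass is exactly the bookkeeping that `T.grad(cost, param)` automates in the listing; writing it out once makes the role of each term (the softmax error, the tanh derivative, the `2 * l2_reg * W` penalty gradient) explicit.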

Theano code

This needs the logistic regression code from my previous post: http://blog.csdn.net/yangnanhai93/article/details/50410026

Save it as lr_sgd.py in the same folder and it will work.
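One detail of the `HiddenLayer` class in the listing is its weight initialization: weights are drawn from a uniform distribution on ±sqrt(6 / (n_in + n_out)), and the range is multiplied by 4 for sigmoid units. The NumPy sketch below reproduces just that rule; the function name `glorot_uniform` is a hypothetical helper, not part of the original code. The usual justification for the factor of 4 is that the sigmoid's slope at 0 is 1/4 while tanh's is 1.

```python
import numpy as np


def glorot_uniform(rng, n_in, n_out, sigmoid=False):
    # Same range as HiddenLayer: U(-a, a) with a = sqrt(6 / (n_in + n_out)),
    # which gives Var(W) = a**2 / 3 = 2 / (n_in + n_out).
    a = np.sqrt(6.0 / (n_in + n_out))
    W = rng.uniform(low=-a, high=a, size=(n_in, n_out))
    if sigmoid:
        # the listing scales the weights by 4 for sigmoid units
        W *= 4
    return W


rng = np.random.RandomState(218)
W = glorot_uniform(rng, 2, 10)  # n_in=2 inputs, n_hidden=10 as in test_mlp
a = np.sqrt(6.0 / 12)
print(W.shape, bool(np.abs(W).max() <= a))  # (2, 10) True
```

Scaling the range with fan-in plus fan-out keeps the variance of the activations roughly comparable from layer to layer, so the tanh units start out in their near-linear region instead of saturating.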

```python
#!/usr/bin/env python
# -*- encoding:utf-8 -*-
'''
This is done by Vincent.Y
mainly modified from deep learning tutorial
'''
import os
import sys
import timeit

import numpy as np
import theano
import theano.tensor as T
from theano import function
# lr_sgd.py is the logistic regression code from the previous post
from lr_sgd import LogisticRegression, load_data, plot_decision_boundary
import matplotlib.pyplot as plt


class HiddenLayer(object):
    def __init__(self, rng, X, n_in, n_out, W=None, b=None, activation=T.tanh):
        self.X = X
        if W is None:
            # uniform init in [-sqrt(6/(n_in+n_out)), sqrt(6/(n_in+n_out))]
            W_value = np.asarray(
                rng.uniform(
                    low=-np.sqrt(6.0 / (n_in + n_out)),
                    high=np.sqrt(6.0 / (n_in + n_out)),
                    size=(n_in, n_out)
                ),
                dtype=theano.config.floatX
            )
            # sigmoid units use a 4x larger range
            if activation == theano.tensor.nnet.sigmoid:
                W_value *= 4
            W = theano.shared(value=W_value, name='W', borrow=True)
        if b is None:
            b_value = np.zeros((n_out,), dtype=theano.config.floatX)
            b = theano.shared(value=b_value, name='b', borrow=True)
        self.W = W
        self.b = b
        lin_output = T.dot(X, self.W) + self.b
        # activation=None gives a purely linear layer
        self.output = (lin_output if activation is None else activation(lin_output))
        self.params = [self.W, self.b]


class MLP(object):
    def __init__(self, rng, X, n_in, n_hidden, n_out):
        # tanh hidden layer
        self.hiddenLayer = HiddenLayer(
            rng=rng,
            X=X,
            n_in=n_in,
            n_out=n_hidden,
            activation=T.tanh
        )
        # logistic regression output layer on top of the hidden features
        self.logisticRegressionLayer = LogisticRegression(
            X=self.hiddenLayer.output,
            n_in=n_hidden,
            n_out=n_out
        )
        # L1 and L2 penalties on the weight matrices (biases are not regularized)
        self.L1 = (abs(self.hiddenLayer.W).sum() + abs(self.logisticRegressionLayer.W).sum())
        self.L2 = ((self.hiddenLayer.W ** 2).sum() + (self.logisticRegressionLayer.W ** 2).sum())
        self.negative_log_likelihood = self.logisticRegressionLayer.negative_log_likelihood
        self.errors = self.logisticRegressionLayer.errors  # this is a function
        self.params = self.logisticRegressionLayer.params + self.hiddenLayer.params
        self.X = X
        self.y_pred = self.logisticRegressionLayer.y_pred


def test_mlp(learning_rate=0.11, L1_reg=0.00, L2_reg=0.0001, n_epochs=6000, n_hidden=10):
    datasets = load_data()
    train_set_x, train_set_y = datasets[0]
    test_set_x, test_set_y = datasets[1]

    x = T.matrix('x')
    y = T.lvector('y')
    rng = np.random.RandomState(218)
    classifier = MLP(
        rng=rng,
        X=x,
        n_in=2,
        n_out=2,
        n_hidden=n_hidden
    )
    # regularized cost: NLL + L1_reg * L1 + L2_reg * L2
    cost = (classifier.negative_log_likelihood(y) + L1_reg * classifier.L1 + L2_reg * classifier.L2)

    # returns the error rate on the given data
    test_model = function(
        inputs=[x, y],
        outputs=classifier.errors(y)
    )

    # gradient descent: param <- param - learning_rate * d(cost)/d(param)
    gparams = [T.grad(cost, param) for param in classifier.params]
    updates = [
        (param, param - learning_rate * gparam)
        for param, gparam in zip(classifier.params, gparams)
    ]
    train_model = function(
        inputs=[x, y],
        outputs=cost,
        updates=updates
    )

    epoch = 0
    while epoch < n_epochs:
        epoch = epoch + 1
        # one gradient step on the whole training set
        avg_cost = train_model(train_set_x, train_set_y)
        test_error = test_model(test_set_x, test_set_y)
        print("epoch is %d, train cost %f, test error %f" % (epoch, avg_cost, test_error))

    predict_model = function(
        inputs=[x],
        outputs=classifier.logisticRegressionLayer.y_pred
    )
    plot_decision_boundary(lambda x: predict_model(x), train_set_x, train_set_y)


if __name__ == "__main__":
    test_mlp()
```

Results

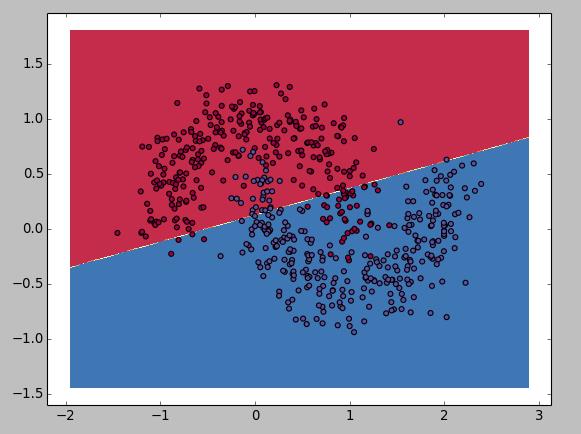

600 iterations, 2 hidden units

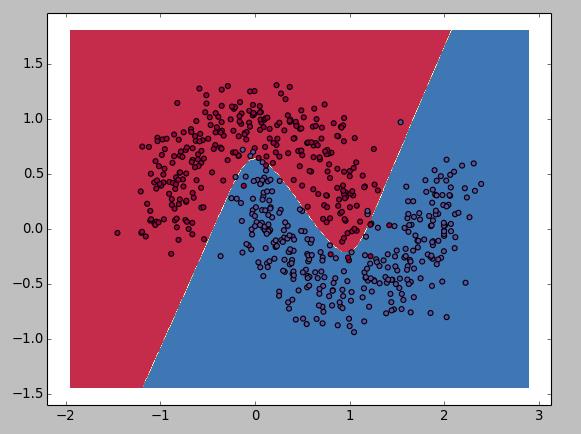

6000 iterations, 20 hidden units

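When there are very few hidden units, such as 2 or 1, the decision boundary stays a straight line even as the number of iterations is increased; when there are many hidden units but too few iterations, the boundary is also still a straight line. So during training, the number of hidden units and the number of iterations always have to be tuned based on the training results to get the best performance, and the number of iterations can be controlled with early stopping.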
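Early stopping can be sketched as a generic loop: train one epoch at a time, track the error on a held-out validation set, and stop once it has not improved for `patience` epochs. The function `early_stopping`, its `patience` parameter, and the two callbacks are hypothetical helpers for illustration, not part of `lr_sgd.py` or Theano. Note that `test_mlp` monitors the test set; for early stopping proper, the stopping decision should use a separate validation split so the test error remains an unbiased estimate.

```python
def early_stopping(train_one_epoch, validation_error, max_epochs, patience=50):
    """Run up to max_epochs; stop once validation error has not improved for `patience` epochs.

    Returns (best_epoch, best_error, epochs_run).
    """
    best_error = float("inf")
    best_epoch = 0
    epochs_run = 0
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()           # e.g. one call to train_model(...)
        err = validation_error()    # e.g. error rate on a held-out validation set
        epochs_run = epoch
        if err < best_error:
            # new best: this is where the current parameters would be snapshotted
            best_error, best_epoch = err, epoch
        elif epoch - best_epoch >= patience:
            break
    return best_epoch, best_error, epochs_run


# simulated validation-error curve: improves until epoch 4, then gets worse
curve = iter([0.5, 0.4, 0.3, 0.25, 0.26, 0.27, 0.28, 0.29, 0.3])
result = early_stopping(lambda: None, lambda: next(curve), max_epochs=9, patience=3)
print(result)  # (4, 0.25, 7)
```

To wire this into `test_mlp`, one could pass `lambda: train_model(train_set_x, train_set_y)` and `lambda: test_model(valid_set_x, valid_set_y)`, where `valid_set_x` / `valid_set_y` stand for a hypothetical validation split. Restoring the best weights would additionally require saving each parameter with `param.get_value()` whenever the validation error improves.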
