Design and Implementation of a Basic Ray Tracer


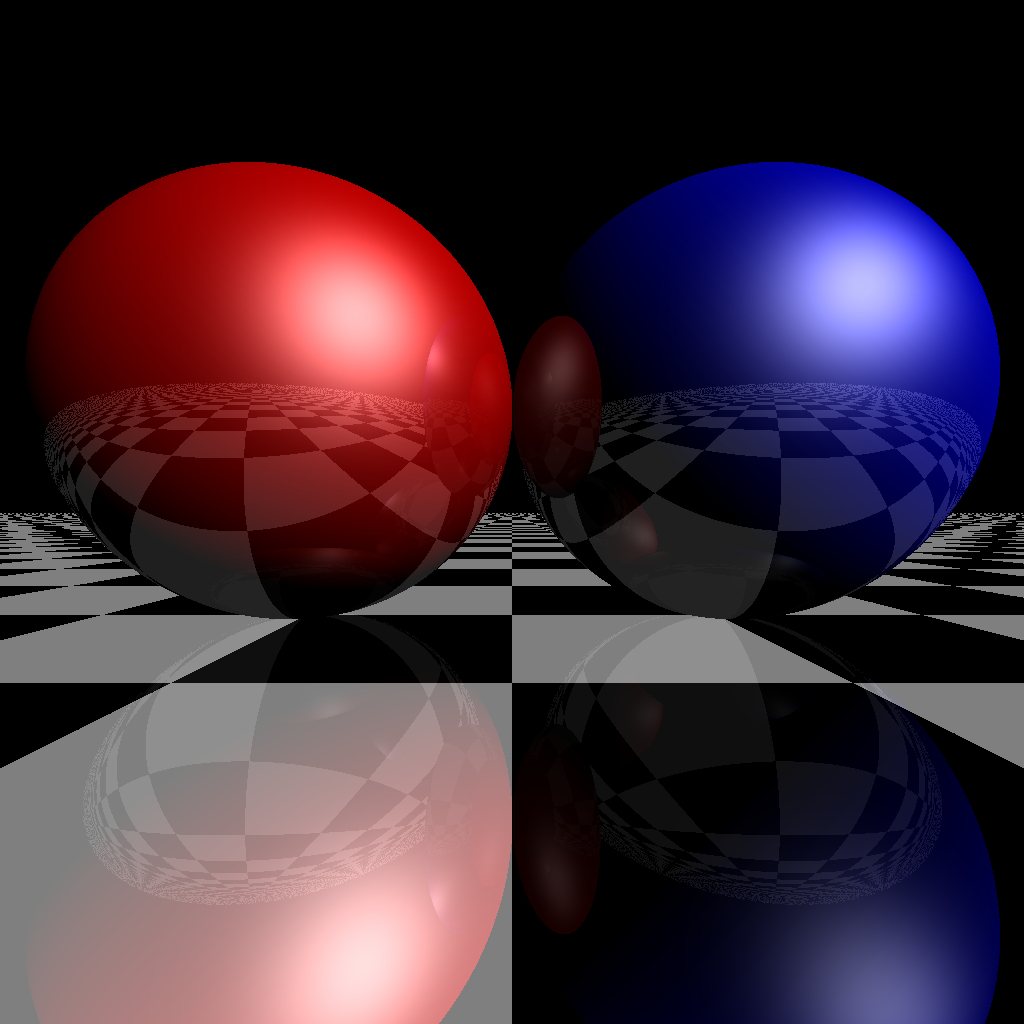

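This post implements classic ray tracing rendering from computer graphics. The tracer is recursive, with a maximum of 3 reflections, and uses Phong materials.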

(Image above: 1024 × 1024.) Measured speed: 0.09 frames per second.

Pipeline:

1. Build the scene.

2. The camera emits a ray.

3. Trace that ray.

4. Shade according to the lighting model and the light direction.

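Notable features of the code:

1. The code I found uses struct to define the data types used in the computation. In C++, struct and class are almost identical. The essential difference is default access control: members and base classes of a struct are public by default, while those of a class are private by default. So every struct in this code could be changed to class and still work, provided a public: specifier is added so that the members remain accessible.

2. The code makes heavy use of inline functions. Like a #define macro, an inline function can be expanded at the call site, which avoids the overhead of a function call (passing arguments, jumping, returning) and can improve performance. Unlike a macro, it is type-checked and evaluates each argument exactly once. Note that inline is only a hint; the compiler decides whether a call is actually expanded.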
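The two points above can be illustrated with a small sketch. The names PointS, PointC, SQUARE_MACRO and squareInline are hypothetical and do not come from the original code; they only show the default access rules and the difference between a macro and an inline function.

```cpp
// Hypothetical types, for illustration only.
struct PointS {
    float x = 1.0f;            // public by default in a struct
};

class PointC {
    float x = 1.0f;            // private by default in a class
public:
    float getX() const { return x; }
};

// Macro: plain text substitution, so the argument expression appears twice.
#define SQUARE_MACRO(v) ((v) * (v))

// Inline function: type-checked, the argument is evaluated exactly once.
inline int squareInline(int v) { return v * v; }

// Counts how often the argument expression runs when passed to the macro.
inline int macroEvaluations() {
    int calls = 0;
    auto next = [&calls]() { ++calls; return 3; };
    int result = SQUARE_MACRO(next());
    (void)result;
    return calls;
}

// Same experiment with the inline function.
inline int inlineEvaluations() {
    int calls = 0;
    auto next = [&calls]() { ++calls; return 3; };
    int result = squareInline(next());
    (void)result;
    return calls;
}
```

Reading `PointS().x` compiles, while reading `PointC().x` from outside the class does not, which is why a struct-to-class conversion needs an explicit `public:` section.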

Core structs:

Vector, Color, IGeometry, IMaterial, IntersectResult, PerspectiveCamera, Ray

There are also classes for saving the rendered result as an image file.
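The post does not show the definitions of these structs. As a rough sketch of what the vector and ray types might look like: the method names add, multiply and dot appear in the rendering code later in this post, while subtract, length, normalize, getPoint and the member names origin and direction (as written here) are assumptions made for illustration.

```cpp
#include <cmath>

// Minimal 3D vector; only add, multiply and dot are confirmed by the post.
struct Vector3 {
    float x, y, z;
    Vector3(float x_, float y_, float z_) : x(x_), y(y_), z(z_) {}

    Vector3 add(const Vector3& v) const { return Vector3(x + v.x, y + v.y, z + v.z); }
    Vector3 subtract(const Vector3& v) const { return Vector3(x - v.x, y - v.y, z - v.z); }
    Vector3 multiply(float f) const { return Vector3(x * f, y * f, z * f); }
    float dot(const Vector3& v) const { return x * v.x + y * v.y + z * v.z; }
    float length() const { return std::sqrt(dot(*this)); }
    // Assumes a non-zero vector.
    Vector3 normalize() const { return multiply(1.0f / length()); }
};

// A ray is an origin plus a direction; getPoint is an assumed helper.
struct Ray3 {
    Vector3 origin;
    Vector3 direction;
    Ray3(const Vector3& o, const Vector3& d) : origin(o), direction(d) {}

    // Point reached after travelling distance t along the ray.
    Vector3 getPoint(float t) const { return origin.add(direction.multiply(t)); }
};
```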

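Overall flow:

A test function is called with three pieces of information: the test name, the function to run, and the number of runs. For example: test("rayTraceRecursive", rayTraceRecursive, 50);

Inside, test first defines a pixels32 image variable and sets its size, then starts timing and runs a for loop for the requested number of runs.

The function to run is passed in as a const T_proc value (T_proc is defined as typedef void(*T_proc)(const TPixels32Ref& dst);). This is a function pointer, so test can call whichever render function it was given.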
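To make the function-pointer mechanism concrete, here is a minimal sketch. Image, RenderProc, fillWhite, fillBlack and runProc are hypothetical stand-ins (the real code uses TPixels32Ref and T_proc, whose definitions are not shown in the post), and the sketch takes a non-const reference for simplicity.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the image type used by the original code.
struct Image {
    int width;
    int height;
    std::vector<unsigned char> data;  // 4 bytes per pixel
    Image(int w, int h)
        : width(w), height(h), data(static_cast<std::size_t>(w) * h * 4, 0) {}
};

// Same shape as T_proc: a pointer to a function that renders into an image.
typedef void (*RenderProc)(Image& dst);

void fillWhite(Image& dst) {
    std::fill(dst.data.begin(), dst.data.end(), static_cast<unsigned char>(255));
}

void fillBlack(Image& dst) {
    std::fill(dst.data.begin(), dst.data.end(), static_cast<unsigned char>(0));
}

// The caller decides which render function runs by passing its name.
void runProc(RenderProc proc, Image& dst) {
    proc(dst);
}
```

A call such as `runProc(fillWhite, img)` passes the function itself as the argument, in the same way that test receives rayTraceRecursive.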

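When the loop has finished, the number of runs divided by the elapsed time gives the rendering speed in fps.

Finally, a TFileOutputStream is used as the output for the image data, and the picture is saved with TBmpFile::save.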
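The timing step can be sketched with std::chrono. This is not the original test function, whose body is not shown; Proc, measureFps and framesPerSecond are hypothetical names, and the procedure here takes no arguments to keep the sketch short.

```cpp
#include <chrono>

// Hypothetical procedure type; the original T_proc also takes an image.
typedef void (*Proc)();

// fps = number of runs / elapsed seconds (0 if no time elapsed).
inline double framesPerSecond(long runCount, double seconds) {
    return seconds > 0.0 ? runCount / seconds : 0.0;
}

// Runs proc runCount times and returns the measured runs per second.
inline double measureFps(Proc proc, long runCount) {
    typedef std::chrono::steady_clock Clock;
    const Clock::time_point start = Clock::now();
    for (long i = 0; i < runCount; ++i) {
        proc();
    }
    const std::chrono::duration<double> elapsed = Clock::now() - start;
    return framesPerSecond(runCount, elapsed.count());
}
```

steady_clock is chosen here because it is monotonic, so the measurement is not disturbed by system clock adjustments.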

Canvas test:

void canvasTest(const TPixels32Ref& ctx) {
    if (ctx.getIsEmpty())
        return;
    long w = ctx.width;
    long h = ctx.height;
    ctx.fillColor(Color32(0, 0, 0, 0));
    Color32* pixels = ctx.pdata;  // points at the start of the current row
    for (long y = 0; y < h; ++y) {
        for (long x = 0; x < w; ++x) {
            // red ramps from left to right, green ramps from top to bottom
            pixels[x].r = (UInt8)(x * 255 / w);
            pixels[x].g = (UInt8)(y * 255 / h);
            pixels[x].b = 0;
            pixels[x].a = 255;
        }
        // advance to the next row; byte_width is the row size in bytes
        (UInt8*&)pixels += ctx.byte_width;
    }
}
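The statement `(UInt8*&)pixels += ctx.byte_width;` moves the row pointer forward by a number of bytes rather than a number of pixels, which allows rows to carry padding. A self-contained sketch of the same idea follows; Pixel32 and fillGradient are hypothetical names, and the channel order inside Pixel32 is an assumption.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical 32-bit pixel; the channel order is an assumption.
struct Pixel32 {
    std::uint8_t b, g, r, a;
};

// Fills a w x h image whose rows are byteWidth bytes apart.
// byteWidth may be larger than w * sizeof(Pixel32) if rows are padded.
inline void fillGradient(Pixel32* data, long w, long h, long byteWidth) {
    Pixel32* pixels = data;  // start of the current row
    for (long y = 0; y < h; ++y) {
        for (long x = 0; x < w; ++x) {
            pixels[x].r = static_cast<std::uint8_t>(x * 255 / w);
            pixels[x].g = static_cast<std::uint8_t>(y * 255 / h);
            pixels[x].b = 0;
            pixels[x].a = 255;
        }
        // Step to the next row in bytes, not in pixels.
        pixels = reinterpret_cast<Pixel32*>(
            reinterpret_cast<std::uint8_t*>(pixels) + byteWidth);
    }
}
```

With a 4-pixel-wide image stored in rows of 6 pixels, the last 2 pixels of each row are padding and are never written.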

Depth rendering test:

void renderDepth(const TPixels32Ref& ctx) {
    if (ctx.getIsEmpty())
        return;
    Union scene;
    scene.push(new Sphere(Vector3(0, 10, -10), 10));
    scene.push(new Plane(Vector3(0, 1, 0), 0));
    PerspectiveCamera camera(Vector3(0, 10, 10), Vector3(0, 0, -1), Vector3(0, 1, 0), 90);
    long maxDepth = 20;
    long w = ctx.width;
    long h = ctx.height;
    ctx.fillColor(Color32(0, 0, 0, 0));
    Color32* pixels = ctx.pdata;
    scene.initialize();
    camera.initialize();
    float dx = 1.0f / w;
    float dy = 1.0f / h;
    float dD = 255.0f / maxDepth;  // gray levels per unit of distance
    for (long y = 0; y < h; ++y) {
        float sy = 1 - dy*y;  // flip y so that sy grows upwards
        for (long x = 0; x < w; ++x) {
            float sx = dx*x;
            Ray3 ray(camera.generateRay(sx, sy));
            IntersectResult result = scene.intersect(ray);
            if (result.geometry) {
                // near hits are bright, hits at maxDepth or beyond are black
                UInt8 depth = (UInt8)(255 - std::min(result.distance*dD, 255.0f));
                pixels[x].r = depth;
                pixels[x].g = depth;
                pixels[x].b = depth;
                pixels[x].a = 255;
            }
        }
        (UInt8*&)pixels += ctx.byte_width;
    }
}
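The post does not show how scene.intersect computes result.distance. For a sphere, a common approach is to solve the quadratic for the ray parameter; the sketch below does that and then applies the same depth-to-gray mapping as renderDepth. Vec3, intersectSphere and depthToGray are hypothetical names, and the sketch assumes a unit-length direction and a ray origin outside the sphere.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

struct Vec3 {
    float x, y, z;
};

inline Vec3 sub(const Vec3& a, const Vec3& b) {
    return Vec3{a.x - b.x, a.y - b.y, a.z - b.z};
}

inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Distance to the nearest hit along the ray, or -1 if the ray misses.
// Assumes dir has unit length and origin lies outside the sphere.
inline float intersectSphere(const Vec3& origin, const Vec3& dir,
                             const Vec3& center, float radius) {
    const Vec3 v = sub(origin, center);
    const float b = dot(dir, v);
    const float c = dot(v, v) - radius * radius;
    const float disc = b * b - c;      // discriminant of t^2 + 2bt + c = 0
    if (disc < 0.0f) return -1.0f;     // no real root: the ray misses
    const float t = -b - std::sqrt(disc);
    return t >= 0.0f ? t : -1.0f;      // hits behind the origin are ignored
}

// Same mapping as in renderDepth: distance 0 is white, maxDepth is black.
inline std::uint8_t depthToGray(float distance, float maxDepth) {
    const float dD = 255.0f / maxDepth;
    return static_cast<std::uint8_t>(255.0f - std::min(distance * dD, 255.0f));
}
```

For the camera and sphere used in renderDepth, the ray through the image center starts at (0, 10, 10), points along (0, 0, -1), and meets the sphere at distance 10, which maps to a mid gray.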

Normal rendering test:

void renderNormal(const TPixels32Ref& ctx) {
    if (ctx.getIsEmpty())
        return;
    Sphere scene(Vector3(0, 10, -10), 10);
    PerspectiveCamera camera(Vector3(0, 10, 10), Vector3(0, 0, -1), Vector3(0, 1, 0), 90);
    long w = ctx.width;
    long h = ctx.height;
    ctx.fillColor(Color32(0, 0, 0, 0));
    Color32* pixels = ctx.pdata;
    scene.initialize();
    camera.initialize();
    float dx = 1.0f / w;
    float dy = 1.0f / h;
    for (long y = 0; y < h; ++y) {
        float sy = 1 - dy*y;
        for (long x = 0; x < w; ++x) {
            float sx = dx*x;
            Ray3 ray(camera.generateRay(sx, sy));
            IntersectResult result = scene.intersect(ray);
            if (result.geometry) {
                // map each normal component from [-1, 1] to [0, 255];
                // scaling by 127.5 keeps a component of exactly 1 within UInt8 range
                pixels[x].r = (UInt8)((result.normal.x + 1) * 127.5f);
                pixels[x].g = (UInt8)((result.normal.y + 1) * 127.5f);
                pixels[x].b = (UInt8)((result.normal.z + 1) * 127.5f);
                pixels[x].a = 255;
            }
        }
        (UInt8*&)pixels += ctx.byte_width;
    }
}
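How result.normal is computed is not shown in the post. For a sphere, the normal at a hit point is the vector from the center to that point, divided by the radius. The sketch below shows this together with a mapping from a normal component in [-1, 1] to a byte. Vec3, sphereNormal and normalComponentToByte are hypothetical names, and the 127.5 scale factor is a choice made here so that a component of exactly 1 maps to 255.

```cpp
#include <cstdint>

struct Vec3 {
    float x, y, z;
};

// Unit normal of a sphere at a point on its surface.
inline Vec3 sphereNormal(const Vec3& hit, const Vec3& center, float radius) {
    return Vec3{(hit.x - center.x) / radius,
                (hit.y - center.y) / radius,
                (hit.z - center.z) / radius};
}

// Maps a component in [-1, 1] to [0, 255]: -1 -> 0, 0 -> 127, 1 -> 255.
inline std::uint8_t normalComponentToByte(float n) {
    return static_cast<std::uint8_t>((n + 1.0f) * 127.5f);
}
```

With this mapping, a surface facing the camera along +z appears blue-tinted, because its z component maps to 255 while x and y map to the middle of the range.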

Ray tracing:

void rayTrace(const TPixels32Ref& ctx) {
    if (ctx.getIsEmpty())
        return;
    // scene: a checkered ground plane and two Phong-shaded spheres
    Plane* plane = new Plane(Vector3(0, 1, 0), 0);
    Sphere* sphere1 = new Sphere(Vector3(-10, 10, -10), 10);
    Sphere* sphere2 = new Sphere(Vector3(10, 10, -10), 10);
    plane->material = new CheckerMaterial(0.1f);
    sphere1->material = new PhongMaterial(Color::red(), Color::white(), 16);
    sphere2->material = new PhongMaterial(Color::blue(), Color::white(), 16);
    Union scene;
    scene.push(plane);
    scene.push(sphere1);
    scene.push(sphere2);
    PerspectiveCamera camera(Vector3(0, 5, 15), Vector3(0, 0, -1), Vector3(0, 1, 0), 90);
    long w = ctx.width;
    long h = ctx.height;
    ctx.fillColor(Color32(0, 0, 0, 0));
    Color32* pixels = ctx.pdata;
    scene.initialize();
    camera.initialize();
    float dx = 1.0f / w;
    float dy = 1.0f / h;
    for (long y = 0; y < h; ++y) {
        float sy = 1 - dy*y;
        for (long x = 0; x < w; ++x) {
            float sx = dx*x;
            Ray3 ray(camera.generateRay(sx, sy));
            IntersectResult result = scene.intersect(ray);
            if (result.geometry) {
                // shade the hit point with the material of the geometry that was hit
                Color color = result.geometry->material->sample(ray, result.position, result.normal);
                color.saturate();
                pixels[x].r = (UInt8)(color.r * 255);
                pixels[x].g = (UInt8)(color.g * 255);
                pixels[x].b = (UInt8)(color.b * 255);
                pixels[x].a = 255;
            }
        }
        (UInt8*&)pixels += ctx.byte_width;
    }
}
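The body of PhongMaterial::sample is not shown in the post. One plausible form is sketched below; note that it is the Blinn-Phong variant, which uses the half vector between the light and the viewer, and whether the original code uses this variant is an assumption. Vec3, Rgb, shadeBlinnPhong and saturate are hypothetical names, and the light direction is passed in explicitly as a unit vector pointing towards the light.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

struct Rgb {
    float r, g, b;
};

inline Vec3 sub(const Vec3& a, const Vec3& b) {
    return Vec3{a.x - b.x, a.y - b.y, a.z - b.z};
}

inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Assumes a non-zero vector.
inline Vec3 normalize(const Vec3& v) {
    const float inv = 1.0f / std::sqrt(dot(v, v));
    return Vec3{v.x * inv, v.y * inv, v.z * inv};
}

// Blinn-Phong shading for a white light.
// normal, rayDir and lightDir are unit vectors; rayDir points from the
// camera into the scene, lightDir points from the surface to the light.
// Assumes lightDir != rayDir so that the half vector is well defined.
inline Rgb shadeBlinnPhong(const Vec3& normal, const Vec3& rayDir,
                           const Vec3& lightDir, const Rgb& diffuse,
                           const Rgb& specular, float shininess) {
    const float nDotL = std::max(dot(normal, lightDir), 0.0f);
    const Vec3 half = normalize(sub(lightDir, rayDir));
    const float nDotH = std::max(dot(normal, half), 0.0f);
    const float spec = std::pow(nDotH, shininess);
    return Rgb{diffuse.r * nDotL + specular.r * spec,
               diffuse.g * nDotL + specular.g * spec,
               diffuse.b * nDotL + specular.b * spec};
}

// Clamps a channel to [0, 1] before conversion to 8 bits.
inline float saturate(float c) {
    return std::min(std::max(c, 0.0f), 1.0f);
}
```

The sum of the diffuse and specular terms can exceed 1, which is why rayTrace calls color.saturate() before converting to 8-bit channels.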

Recursive ray tracing:

Color rayTraceRecursive(IGeometry* scene, const Ray3& ray, long maxReflect) {
    IntersectResult result = scene->intersect(ray);
    if (result.geometry) {
        float reflectiveness = result.geometry->material->reflectiveness;
        // local shading, weighted by the non-reflective share of the surface
        Color color = result.geometry->material->sample(ray, result.position, result.normal);
        color = color.multiply(1 - reflectiveness);
        if ((reflectiveness > 0) && (maxReflect > 0)) {
            // mirror direction: r = d - 2 (d . n) n
            Vector3 r = result.normal.multiply(-2 * result.normal.dot(ray.direction)).add(ray.direction);
            // named reflectedRay so that it does not shadow the ray parameter
            Ray3 reflectedRay(result.position, r);
            Color reflectedColor = rayTraceRecursive(scene, reflectedRay, maxReflect - 1);
            color = color.add(reflectedColor.multiply(reflectiveness));
        }
        return color;
    }
    else
        return Color::black();
}
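The reflection step in the function above uses the mirror formula r = d - 2(d·n)n and then blends the local and reflected colors by the reflectiveness. A small self-contained sketch of both operations, with hypothetical names Vec3, reflect and blendChannel:

```cpp
struct Vec3 {
    float x, y, z;
};

inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Mirror reflection of direction d about the unit normal n:
// r = d - 2 (d . n) n
inline Vec3 reflect(const Vec3& d, const Vec3& n) {
    const float k = -2.0f * dot(n, d);
    return Vec3{d.x + n.x * k, d.y + n.y * k, d.z + n.z * k};
}

// Per-channel blend used after the recursive call:
// result = local * (1 - reflectiveness) + reflected * reflectiveness
inline float blendChannel(float local, float reflected, float reflectiveness) {
    return local * (1.0f - reflectiveness) + reflected * reflectiveness;
}
```

For example, a ray travelling along (1, -1, 0) that hits a floor with normal (0, 1, 0) leaves along (1, 1, 0). With reflectiveness 0.25, three quarters of the final color come from local shading and one quarter from the reflected ray.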

void rayTraceRecursive(const TPixels32Ref& ctx) {
    if (ctx.getIsEmpty())
        return;
    // same scene as rayTrace, but every material now has a reflectiveness
    Plane* plane = new Plane(Vector3(0, 1, 0), 0);
    Sphere* sphere1 = new Sphere(Vector3(-10, 10, -10), 10);
    Sphere* sphere2 = new Sphere(Vector3(10, 10, -10), 10);
    plane->material = new CheckerMaterial(0.1f, 0.5);
    sphere1->material = new PhongMaterial(Color::red(), Color::white(), 16, 0.25);
    sphere2->material = new PhongMaterial(Color::blue(), Color::white(), 16, 0.25);
    Union scene;
    scene.push(plane);
    scene.push(sphere1);
    scene.push(sphere2);
    PerspectiveCamera camera(Vector3(0, 5, 15), Vector3(0, 0, -1), Vector3(0, 1, 0), 90);
    long maxReflect = 3;  // maximum number of reflections per primary ray
    long w = ctx.width;
    long h = ctx.height;
    ctx.fillColor(Color32(0, 0, 0, 0));
    Color32* pixels = ctx.pdata;
    scene.initialize();
    camera.initialize();
    float dx = 1.0f / w;
    float dy = 1.0f / h;
    for (long y = 0; y < h; ++y) {
        float sy = 1 - dy*y;
        for (long x = 0; x < w; ++x) {
            float sx = dx*x;
            Ray3 ray(camera.generateRay(sx, sy));
            Color color = rayTraceRecursive(&scene, ray, maxReflect);
            color.saturate();
            pixels[x].r = (UInt8)(color.r * 255);
            pixels[x].g = (UInt8)(color.g * 255);
            pixels[x].b = (UInt8)(color.b * 255);
            pixels[x].a = 255;
        }
        (UInt8*&)pixels += ctx.byte_width;
    }
}
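All of the render loops map a pixel (x, y) to normalized coordinates (sx, sy) in [0, 1] and ask the camera for a ray. The body of PerspectiveCamera::generateRay is not shown in the post; the sketch below is one common way to build such a camera and should be read as an assumption, not as the original implementation. Vec3, CameraSketch and rayDirection are hypothetical names.

```cpp
#include <cmath>

struct Vec3 {
    float x, y, z;
};

inline Vec3 add(const Vec3& a, const Vec3& b) {
    return Vec3{a.x + b.x, a.y + b.y, a.z + b.z};
}

inline Vec3 scale(const Vec3& v, float f) {
    return Vec3{v.x * f, v.y * f, v.z * f};
}

inline Vec3 cross(const Vec3& a, const Vec3& b) {
    return Vec3{a.y * b.z - a.z * b.y,
                a.z * b.x - a.x * b.z,
                a.x * b.y - a.y * b.x};
}

// Assumes a non-zero vector.
inline Vec3 normalize(const Vec3& v) {
    const float inv = 1.0f / std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return scale(v, inv);
}

// Hypothetical perspective camera; front and refUp are unit vectors.
struct CameraSketch {
    Vec3 eye;
    Vec3 front;
    Vec3 right;
    Vec3 up;
    float fovScale;  // width of the image plane at distance 1

    CameraSketch(const Vec3& eye_, const Vec3& front_, const Vec3& refUp,
                 float fovDegrees)
        : eye(eye_),
          front(front_),
          right(normalize(cross(front_, refUp))),
          up(cross(right, front_)),
          fovScale(2.0f * std::tan(fovDegrees * 0.5f * 3.14159265f / 180.0f)) {}

    // sx and sy are in [0, 1]; (0.5, 0.5) is the image center.
    Vec3 rayDirection(float sx, float sy) const {
        const Vec3 r = scale(right, (sx - 0.5f) * fovScale);
        const Vec3 u = scale(up, (sy - 0.5f) * fovScale);
        return normalize(add(add(front, r), u));
    }
};
```

With a 90 degree field of view, the ray through the right edge of the image (sx = 1, sy = 0.5) points 45 degrees away from the front direction.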

Main function that runs the tests:

int main() {
    std::cout << " Press Enter to start the tests (you may set the process priority to \"Realtime\")> ";
    waitInputChar();
    std::cout << std::endl;
    // each call: test name, render function, number of runs
    test("canvasTest", canvasTest, 2000);
    test("renderDepth", renderDepth, 100);
    test("renderNormal", renderNormal, 200);
    test("rayTrace", rayTrace, 50);
    test("rayTraceRecursive", rayTraceRecursive, 50);
    std::cout << std::endl << " Tests finished. ";
    waitInputChar();
    return 0;
}
