How to Implement Marker-Based Localization with OpenCV


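Prepare a sample image like the one shown: it contains squares, polygons, irregular shapes, and circles in assorted colors. The goal below is to locate the two circles in it. You can draw such an image with the Paint program bundled with Windows; hold Shift while drawing an ellipse to get a perfect circle. Start by pulling in the OpenCV headers and libraries, and include vector, which is used later.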

#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <iostream>
#include <stdio.h>
#include <vector>
#include <math.h>

#pragma comment (lib,"opencv_core244d.lib")
#pragma comment (lib,"opencv_highgui244d.lib")
#pragma comment (lib,"opencv_imgproc244d.lib")

using namespace std;
using namespace cv;

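Load the image to be processed and scale it down so it is easier to display; downscaling is also a handy trick for speeding up the later steps. Then convert the image to grayscale, binarize it to discard the light, washed-out regions, and use the HoughCircles function to extract the shapes whose outline is a circle.

Be prepared to adjust the HoughCircles parameters: different values produce different detections, so tune them patiently against the actual image.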

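int main()
{
    // "shapes.png" and ratio = 2 are placeholders: the original listing
    // uses src and ratio without defining them.
    Mat src = imread("shapes.png");
    if (src.empty()) return -1;
    int ratio = 2;

    // Shrink the image for display and faster processing.
    Mat resized;
    resize(src, resized, Size(src.cols/ratio, src.rows/ratio));
    int w = resized.size().width;
    int h = resized.size().height;

    // Grayscale, smooth, binarize (inverted), then smooth again.
    Mat gray;
    cvtColor(resized, gray, CV_BGR2GRAY);
    blur(gray, gray, Size(3,3));
    threshold(gray, gray, 160, 255, THRESH_BINARY_INV);
    blur(gray, gray, Size(3,3));

    // Detect circles; each result is (center x, center y, radius).
    vector<Vec3f> circles;
    HoughCircles(gray, circles, CV_HOUGH_GRADIENT, 2, h/4, 25, 100, h/32, h/8);

    // Draw each center and outline in red. The loop body needs braces,
    // otherwise only the first statement repeats and ++it is never reached.
    vector<Vec3f>::const_iterator it = circles.begin();
    while (it != circles.end())
    {
        circle(resized, Point((*it)[0], (*it)[1]), 2, Scalar(0,0,255), 2);
        circle(resized, Point((*it)[0], (*it)[1]), (*it)[2], Scalar(0,0,255), 2);
        ++it;
    }

    namedWindow("src");
    imshow("src", resized);
    namedWindow("resized");
    imshow("resized", gray);
    waitKey(0);
    return 0;
}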

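Code walkthrough:

The first argument to HoughCircles must be an 8-bit single-channel (grayscale) image, and the remaining arguments are tuned to the scene. After the detection method come dp (the accumulator resolution, given as an inverse ratio to the image resolution), minDist (the minimum distance between two circle centers), param1 (the higher Canny threshold), param2 (the minimum number of votes a center needs), and minRadius and maxRadius (the smallest and largest radii to accept).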
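To make the roles of those arguments easier to see, here is a small sketch that derives the same values the listing passes to HoughCircles from the height of the resized image. The struct HoughCircleParams and the function paramsForHeight are hypothetical helpers invented for this illustration; they are not part of OpenCV, and the constants are simply the ones used in the call above.

```cpp
#include <cassert>

// Hypothetical helper (not an OpenCV type): the numeric arguments that
// follow the method flag in the article's HoughCircles call.
struct HoughCircleParams {
    double dp;       // inverse ratio of accumulator resolution to image resolution
    double minDist;  // minimum distance between detected centers, in pixels
    double param1;   // higher Canny threshold
    double param2;   // vote threshold a center must reach
    int minRadius;   // smallest radius to accept, in pixels
    int maxRadius;   // largest radius to accept, in pixels
};

// Reproduces the values from the listing for an image of height h.
// Integer division is intentional: it mirrors h/4, h/32 and h/8 there.
HoughCircleParams paramsForHeight(int h) {
    assert(h > 0);
    HoughCircleParams p;
    p.dp = 2.0;
    p.minDist = h / 4;
    p.param1 = 25.0;
    p.param2 = 100.0;
    p.minRadius = h / 32;
    p.maxRadius = h / 8;
    return p;
}
```

Tying the distances and radii to the image height keeps them meaningful when the resize ratio changes, which is presumably why the listing expresses them as fractions of h.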

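The detected circles are stored in the vector circles. Vec3f is a three-element vector: elements [0] and [1] hold the x and y coordinates of the center, and element [2] holds the radius.

To display the result, the circle function draws each center and each outline in red.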

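A camera photo is not like a computer drawing, though: lighting conditions and intensity affect a real shot and can throw the detection far off. As in the picture below, a real photo may contain cast shadows that still disturb detection after grayscale conversion. The code above found 7 circles here when only the 2 on the left and right are wanted, so the extras can be filtered out by their coordinates.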
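One way to filter by coordinates is to keep only the circles whose center falls inside a region where a marker is expected. The sketch below assumes such regions are known in advance; Circle, Region, and filterByRegions are hypothetical names for this illustration, and Circle merely mirrors the (x, y, radius) layout of Vec3f so the example compiles without OpenCV.

```cpp
#include <cassert>
#include <vector>

// Stand-in for cv::Vec3f: center x, center y, radius.
struct Circle { float x, y, r; };

// Axis-aligned rectangle where a marker is expected (an assumption of
// this sketch; the article does not say how the regions are chosen).
struct Region { float x0, y0, x1, y1; };

// True when the circle's center lies inside the region (borders included).
bool centerInside(const Circle& c, const Region& reg) {
    assert(reg.x0 <= reg.x1 && reg.y0 <= reg.y1);
    return c.x >= reg.x0 && c.x <= reg.x1 && c.y >= reg.y0 && c.y <= reg.y1;
}

// Keeps the circles whose center lies in at least one expected region,
// preserving their original order.
std::vector<Circle> filterByRegions(const std::vector<Circle>& circles,
                                    const std::vector<Region>& regions) {
    std::vector<Circle> kept;
    for (const Circle& c : circles) {
        for (const Region& reg : regions) {
            if (centerInside(c, reg)) {
                kept.push_back(c);
                break;  // one matching region is enough
            }
        }
    }
    return kept;
}
```

With one region on the left edge and one on the right edge of the image, spurious detections caused by shadows elsewhere are dropped before any further processing.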

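Marker-based coordinate localization can also be built on a pattern like the one below. Once decoded, it yields not only a position but also an orientation; in practice the pattern is converted to a Hamming code for analysis.

Reference answer A: Kalman filtering is mainly applied in the real world rather than in idealized settings. It tracks the value of some variable, and it relies on two sources of evidence. The first is a prediction from the system's equation of motion: if we know an object's velocity, for example, its position at the next instant can in principle be predicted, but the prediction carries error and can only serve as one input. The second is a measurement of the variable, which also carries error and is likewise only one input. The two inputs are given different weights, and the Kalman filter iterates over them repeatedly to track the target.

Reference answer B: With only a single sample image this is hard to do. Recognition roughly consists of extracting features, training on samples to obtain a model, and using the model to classify. If you want a shortcut, download existing code.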
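The predict-then-correct loop described in the Kalman answer above can be sketched for a single scalar, such as one coordinate of a marker. This is a minimal illustration under simplifying assumptions (a known velocity, scalar state, constant noise variances); the struct Kalman1D and its field names are invented for this example and are unrelated to OpenCV's own KalmanFilter class.

```cpp
#include <cassert>

// Minimal scalar Kalman filter (illustrative sketch, not a library API).
struct Kalman1D {
    double x;  // current position estimate
    double p;  // variance (uncertainty) of the estimate
    double q;  // process noise variance added at each prediction
    double r;  // measurement noise variance

    // Prediction from the motion model: move by velocity * dt and
    // grow the uncertainty, since the model is not exact.
    void predict(double velocity, double dt) {
        x += velocity * dt;
        p += q;
    }

    // Correction from a measurement z: the gain k weighs the prediction
    // against the measurement according to their variances.
    void update(double z) {
        assert(p + r > 0.0);
        double k = p / (p + r);
        x += k * (z - x);
        p *= (1.0 - k);
    }
};
```

When the prediction and the measurement are equally uncertain, the gain is 0.5 and the new estimate lands halfway between them; a noisier measurement (larger r) pulls the estimate toward the prediction instead.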

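QR Code Localization with opencv.js

Looking at the whitelist of OpenCV.js (official download: https://docs.opencv.org/_VERSION_/opencv.js), we can see that the official prebuilt version does not currently include QR code recognition.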

# Classes and methods whitelist
core = {'': ['absdiff', 'add', 'addWeighted', 'bitwise_and', 'bitwise_not', 'bitwise_or', 'bitwise_xor', 'cartToPolar',\
             'compare', 'convertScaleAbs', 'copyMakeBorder', 'countNonZero', 'determinant', 'dft', 'divide', 'eigen', \
             'exp', 'flip', 'getOptimalDFTSize','gemm', 'hconcat', 'inRange', 'invert', 'kmeans', 'log', 'magnitude', \
             'max', 'mean', 'meanStdDev', 'merge', 'min', 'minMaxLoc', 'mixChannels', 'multiply', 'norm', 'normalize', \
             'perspectiveTransform', 'polarToCart', 'pow', 'randn', 'randu', 'reduce', 'repeat', 'rotate', 'setIdentity', 'setRNGSeed', \
             'solve', 'solvePoly', 'split', 'sqrt', 'subtract', 'trace', 'transform', 'transpose', 'vconcat'],
        'Algorithm': []}

imgproc = {'': ['Canny', 'GaussianBlur', 'Laplacian', 'HoughLines', 'HoughLinesP', 'HoughCircles', 'Scharr','Sobel', \
                'adaptiveThreshold','approxPolyDP','arcLength','bilateralFilter','blur','boundingRect','boxFilter',\
                'calcBackProject','calcHist','circle','compareHist','connectedComponents','connectedComponentsWithStats', \
                'contourArea', 'convexHull', 'convexityDefects', 'cornerHarris','cornerMinEigenVal','createCLAHE', \
                'createLineSegmentDetector','cvtColor','demosaicing','dilate', 'distanceTransform','distanceTransformWithLabels', \
                'drawContours','ellipse','ellipse2Poly','equalizeHist','erode', 'filter2D', 'findContours','fitEllipse', \
                'fitLine', 'floodFill','getAffineTransform', 'getPerspectiveTransform', 'getRotationMatrix2D', 'getStructuringElement', \
                'goodFeaturesToTrack','grabCut','initUndistortRectifyMap', 'integral','integral2', 'isContourConvex', 'line', \
                'matchShapes', 'matchTemplate','medianBlur', 'minAreaRect', 'minEnclosingCircle', 'moments', 'morphologyEx', \
                'pointPolygonTest', 'putText','pyrDown','pyrUp','rectangle','remap', 'resize','sepFilter2D','threshold', \
                'undistort','warpAffine','warpPerspective','warpPolar','watershed', \
                'fillPoly', 'fillConvexPoly'],
           'CLAHE': ['apply', 'collectGarbage', 'getClipLimit', 'getTilesGridSize', 'setClipLimit', 'setTilesGridSize']}

objdetect = {'': ['groupRectangles'],
             'HOGDescriptor': ['load', 'HOGDescriptor', 'getDefaultPeopleDetector', 'getDaimlerPeopleDetector', 'setSVMDetector', 'detectMultiScale'],
             'CascadeClassifier': ['load', 'detectMultiScale2', 'CascadeClassifier', 'detectMultiScale3', 'empty', 'detectMultiScale']}

video = {'': ['CamShift', 'calcOpticalFlowFarneback', 'calcOpticalFlowPyrLK', 'createBackgroundSubtractorMOG2', \
              'findTransformECC', 'meanShift'],
         'BackgroundSubtractorMOG2': ['BackgroundSubtractorMOG2', 'apply'],
         'BackgroundSubtractor': ['apply', 'getBackgroundImage']}

dnn = {'dnn_Net': ['setInput', 'forward'],
       '': ['readNetFromCaffe', 'readNetFromTensorflow', 'readNetFromTorch', 'readNetFromDarknet',
            'readNetFromONNX', 'readNet', 'blobFromImage']}

features2d = {'Feature2D': ['detect', 'compute', 'detectAndCompute', 'descriptorSize', 'descriptorType', 'defaultNorm', 'empty', 'getDefaultName'],
              'BRISK': ['create', 'getDefaultName'],
              'ORB': ['create', 'setMaxFeatures', 'setScaleFactor', 'setNLevels', 'setEdgeThreshold', 'setFirstLevel', 'setWTA_K', 'setScoreType', 'setPatchSize', 'getFastThreshold', 'getDefaultName'],
              'MSER': ['create', 'detectRegions', 'setDelta', 'getDelta', 'setMinArea', 'getMinArea', 'setMaxArea', 'getMaxArea', 'setPass2Only', 'getPass2Only', 'getDefaultName'],
              'FastFeatureDetector': ['create', 'setThreshold', 'getThreshold', 'setNonmaxSuppression', 'getNonmaxSuppression', 'setType', 'getType', 'getDefaultName'],
              'AgastFeatureDetector': ['create', 'setThreshold', 'getThreshold', 'setNonmaxSuppression', 'getNonmaxSuppression', 'setType', 'getType', 'getDefaultName'],
              'GFTTDetector': ['create', 'setMaxFeatures', 'getMaxFeatures', 'setQualityLevel', 'getQualityLevel', 'setMinDistance', 'getMinDistance', 'setBlockSize', 'getBlockSize', 'setHarrisDetector', 'getHarrisDetector', 'setK', 'getK', 'getDefaultName'],
              # 'SimpleBlobDetector': ['create'],
              'KAZE': ['create', 'setExtended', 'getExtended', 'setUpright', 'getUpright', 'setThreshold', 'getThreshold', 'setNOctaves', 'getNOctaves', 'setNOctaveLayers', 'getNOctaveLayers', 'setDiffusivity', 'getDiffusivity', 'getDefaultName'],
              'AKAZE': ['create', 'setDescriptorType', 'getDescriptorType', 'setDescriptorSize', 'getDescriptorSize', 'setDescriptorChannels', 'getDescriptorChannels', 'setThreshold', 'getThreshold', 'setNOctaves', 'getNOctaves', 'setNOctaveLayers', 'getNOctaveLayers', 'setDiffusivity', 'getDiffusivity', 'getDefaultName'],
              'DescriptorMatcher': ['add', 'clear', 'empty', 'isMaskSupported', 'train', 'match', 'knnMatch', 'radiusMatch', 'clone', 'create'],
              'BFMatcher': ['isMaskSupported', 'create'],
              '': ['drawKeypoints', 'drawMatches', 'drawMatchesKnn']}

photo = {'': ['createAlignMTB', 'createCalibrateDebevec', 'createCalibrateRobertson', \
              'createMergeDebevec', 'createMergeMertens', 'createMergeRobertson', \
              'createTonemapDrago', 'createTonemapMantiuk', 'createTonemapReinhard', 'inpaint'],
         'CalibrateCRF': ['process'],
         'AlignMTB' : ['calculateShift', 'shiftMat', 'computeBitmaps', 'getMaxBits', 'setMaxBits', \
                       'getExcludeRange', 'setExcludeRange', 'getCut', 'setCut'],
         'CalibrateDebevec' : ['getLambda', 'setLambda', 'getSamples', 'setSamples', 'getRandom', 'setRandom'],
         'CalibrateRobertson' : ['getMaxIter', 'setMaxIter', 'getThreshold', 'setThreshold', 'getRadiance'],
         'MergeExposures' : ['process'],
         'MergeDebevec' : ['process'],
         'MergeMertens' : ['process', 'getContrastWeight', 'setContrastWeight', 'getSaturationWeight', \
                           'setSaturationWeight', 'getExposureWeight', 'setExposureWeight'],
         'MergeRobertson' : ['process'],
         'Tonemap' : ['process' , 'getGamma', 'setGamma'],
         'TonemapDrago' : ['getSaturation', 'setSaturation', 'getBias', 'setBias', \
                           'getSigmaColor', 'setSigmaColor', 'getSigmaSpace','setSigmaSpace'],
         'TonemapMantiuk' : ['getScale', 'setScale', 'getSaturation', 'setSaturation'],
         'TonemapReinhard' : ['getIntensity', 'setIntensity', 'getLightAdaptation', 'setLightAdaptation', \
                              'getColorAdaptation', 'setColorAdaptation']
         }

aruco = {'': ['detectMarkers', 'drawDetectedMarkers', 'drawAxis', 'estimatePoseSingleMarkers', 'estimatePoseBoard', 'estimatePoseCharucoBoard', 'interpolateCornersCharuco', 'drawDetectedCornersCharuco'],
         'aruco_Dictionary': ['get', 'drawMarker'],
         'aruco_Board': ['create'],
         'aruco_GridBoard': ['create', 'draw'],
         'aruco_CharucoBoard': ['create', 'draw'],
         }

calib3d = {'': ['findHomography', 'calibrateCameraExtended', 'drawFrameAxes', 'estimateAffine2D', 'getDefaultNewCameraMatrix', 'initUndistortRectifyMap', 'Rodrigues']}

white_list = makeWhiteList([core, imgproc, objdetect, video, dnn, features2d, photo, aruco, calib3d])

Even so, contour analysis is enough to implement QR code localization with opencv.js, and that is the subject of this post.

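I. Basic Principle

For the details, see "OpenCV使用FindContours进行二维码定位" (QR code localization with FindContours in OpenCV); here is a recap of the key points.

findContours is a function that finds connected regions directly. A typical application is the QR code: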

对于它的3个定位点 ,这种重复包含的特性,在图上只有不容易重复的三处,这是具有排它性的。

,这种重复包含的特性,在图上只有不容易重复的三处,这是具有排它性的。

,这种重复包含的特性,在图上只有不容易重复的三处,这是具有排它性的。

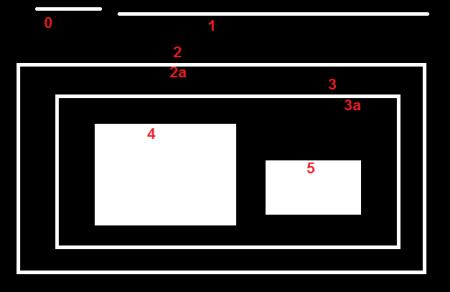

,这种重复包含的特性,在图上只有不容易重复的三处,这是具有排它性的。 那么轮廓识别的结果是如何展示的了?比如在这幅图中(白色区域为有数据的区域,黑色为无数据),0,1,2是第一层,然后里面是3,3的里面是4和5。(2a表示是2的内部),他们的关系应该是这样的:

So to decide whether a contour is "repeatedly contained", we only need to check whether it has a grandparent contour.
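The grandparent check can be sketched directly on the hierarchy array. The example below assumes the layout findContours reports, one [Next, Previous, First_Child, Parent] entry per contour with -1 meaning "none"; std::array<int, 4> stands in for cv::Vec4i so the sketch compiles without OpenCV, and the function names nestingDepth and hasGrandparent are made up for this illustration.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Stand-in for cv::Vec4i: [next, previous, first_child, parent], -1 when absent.
using HierarchyEntry = std::array<int, 4>;

// Counts how many ancestors contour i has by following parent links.
int nestingDepth(const std::vector<HierarchyEntry>& hierarchy, int i) {
    assert(i >= 0 && i < static_cast<int>(hierarchy.size()));
    int depth = 0;
    for (int parent = hierarchy[i][3]; parent != -1; parent = hierarchy[parent][3]) {
        ++depth;
    }
    return depth;
}

// A contour with a parent and a grandparent sits at depth 2 or deeper,
// which is the nesting a QR finder pattern produces.
bool hasGrandparent(const std::vector<HierarchyEntry>& hierarchy, int i) {
    return nestingDepth(hierarchy, i) >= 2;
}
```

In a real pipeline the vector would be the hierarchy output of findContours in tree retrieval mode, and the contours that pass the check are candidates for the innermost square of a finder pattern.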

A reference C++ implementation looks like this; its comments explain most of it.

#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include <iostream>
#include <vector>
#include <stdio.h>
#include <stdlib.h>
#include <math.h>

using namespace cv;
using namespace std;

// Find the approximate center of contour i.
// Sample four points on the contour, one every quarter of its length, and
// average their coordinates (roughly the center of the small square).
Point Center_cal(const vector<vector<Point> >& contours, int i)
{
    int n = (int)contours[i].size();
    int centerx = (contours[i][n/4].x + contours[i][n*2/4].x + contours[i][3*n/4].x + contours[i][n-1].x)/4;
    int centery = (contours[i][n/4].y + contours[i][n*2/4].y + contours[i][3*n/4].y + contours[i][n-1].y)/4;
    return Point(centerx, centery);
}

int main( int argc, char** argv )
{
    Mat src = imread( "e:/sandbox/qrcode.jpg", 1 );
    if (src.empty()) return -1;
    resize(src, src, Size(800,600)); // normalize the size
    Mat src_gray;
    Mat src_all = src.clone();
    Mat threshold_output;
    vector<vector<Point> > contours, contours2;
    vector<Vec4i> hierarchy;

    // Preprocessing
    cvtColor( src, src_gray, CV_BGR2GRAY );
    blur( src_gray, src_gray, Size(3,3) ); // blur to remove burrs
    threshold( src_gray, threshold_output, 100, 255, THRESH_OTSU );

    // Find contours
    // 1st argument: the binarized input image
    // 2nd argument: receives the detected contours
    // 3rd argument: the hierarchy, one [Next, Previous, First_Child, Parent] entry per contour
    // 4th argument: retrieval mode, here the full tree
    // 5th argument: approximation mode, here every point is kept
    findContours( threshold_output, contours, hierarchy, CV_RETR_TREE, CHAIN_APPROX_NONE, Point(0, 0) );

    // Contour filtering
    int ic = 0;
    int parentIdx = -1;
    for( int i = 0; i < (int)contours.size(); i++ )
    {
        // hierarchy[i][2] != -1 means contour i has a child contour
        if (hierarchy[i][2] != -1 && ic == 0)
        {
            parentIdx = i; // start of a nesting chain
            ic++;
        }
        else if (hierarchy[i][2] != -1)
        {
            ic++;
        }
        // no child: reset the chain
        else if (hierarchy[i][2] == -1)
        {
            ic = 0;
            parentIdx = -1;
        }
        // two nested levels found: this is a finder pattern
        if ( ic >= 2 )
        {
            contours2.push_back(contours[parentIdx]);
            ic = 0;
            parentIdx = -1;
        }
    }

    // Fill the finder patterns
    for( int i = 0; i < (int)contours2.size(); i++ )
        drawContours( src_all, contours2, i, CV_RGB(0,255,0), -1 );

    // Connect the finder patterns: first compute each center. Only three
    // slots exist, so the loop is capped to avoid writing past the array.
    // The source listing breaks off inside this loop.
    Point point[3];
    for( int i = 0; i < (int)contours2.size() && i < 3; i++ )
    {
        point[i] = Center_cal( contours2, i );
    }
    return 0;
}
